Components in an Agent Builder

Components, also referred to as nodes, are the building blocks in an Agent Builder flow.

This section explains when to use each node, what its inputs and outputs are, and how to configure it to build reliable flows.

Common data types across nodes include:

- Message: A string, typically human-readable

- JSON: A Python dict or list used for structured data

- DataFrame: A list[dict] suitable for table-like flows

Core Orchestration

LLM

The LLM node executes prompts using a Large Language Model configured in LLM Management. This node serves as the primary reasoning and completion engine for many flows.

When to use?

- To generate, summarize, or transform text

- To perform reasoning on inputs based on provided instructions

- As a foundational component for Agent nodes

How to add and configure?

- Drag the LLM node onto the canvas.

- Choose a configured model from LLM Management.

- Supply prompts or instructions via an upstream Prompt or Message node.

- Set the temperature to a low value for more predictable and deterministic responses from the LLM, or to a high value for more varied and creative output.

- Connect the output Message as input to Agent, Output, or Processing components per the flow logic.

Inputs:

- Message (prompt)

- Optional JSON or context as a Message

Outputs:

- Message: The model’s response text

- JSON: If the model response is valid JSON, it can be parsed downstream by the Type Convert node

Agent

An Agent node uses an LLM configured in LLM Management to carry out instructions, answer questions, and orchestrate complex multi-step tasks using hierarchical manager/worker orchestration and sub-agents.

When to use?

- For multi-step tasks that require tool calls, planning, or delegation

- In manager/worker scenarios where a coordinator Agent assigns tasks to sub-agents

- When tool-enabled reasoning is needed such as search, APIs, or debugging tools

How to add and configure?

- Drag the Agent node onto the canvas.

- Select a base LLM from LLM Management.

- Optionally, attach relevant Tools.

- Optionally, connect other Agent nodes to the Sub-agents connector to set up manager/worker orchestration.

- Provide instructions through a Prompt or Message input.

- Optionally, set the temperature to a low value for more predictable and deterministic responses from the LLM, or to a high value for more varied and creative output.

- Connect the output Message as input to Agent, Output, or Processing components per the flow logic.

Inputs:

- Message (instructions)

- Optional JSON or tool configurations as needed

Outputs:

- Message: Agent result or summary

- JSON: Tool results or any structured output produced by the agent

Tools

Bug Tools

The Bug Tools node is a specialized component for interacting with your organization’s bug or issue trackers.

When to use?

- To query bugs, create updates or comments, or retrieve triage information

- To automate bug lifecycle processes within an Agent flow

How to add and configure?

- Drag the Agent node (or another compatible node that supports tool calls) onto the canvas.

- Attach Bug Tools in the Agent’s Tools configuration.

- Provide the required credentials or configuration for your bug tracking system.

- Use prompts or instructions within your Agent to invoke Bug Tools.

Inputs: Tool parameters supplied by Agent prompts or upstream data

Outputs: Message summaries or structured JSON results returned by the tool

- Be explicit in your prompts by specifying which bug ID(s) to query or update.

- Use the Parser or Type Convert node to format tool JSON output for display.

MCP Server

The MCP Server node integrates an MCP server to expose external capabilities as tools to Agents. It is a specialized component for interfacing with AI models using the Model Context Protocol (MCP) with Server-Sent Events (SSE) transport.

When to use?

- When you need to access custom tools or endpoints provided by your MCP server

- To extend Agent capabilities with organization-specific functions

How to add and configure?

- Configure your MCP Server and build the server URL. See Build Your Own MCP Server.

- Drag and drop an MCP Server node from the Tools category.

- Set the URL of the MCP server to https://<host>:<port>/mcp or https://<host>:<port>/sse. The URL fully defines the endpoint path; there are no extra path fields.

- Choose one of the following authentication types for accessing the MCP server:

- Basic auth: Uses HTTP Basic authentication with a username and password.

- Bearer token: Uses your OAuth 2.0 access token. Leave the Basic user and Basic password fields empty.

- None: No authentication. Leave all authentication fields empty.

Note: For Basic auth, leave the Bearer token field in the node empty. For Bearer token authentication, leave the Basic user and Basic password fields empty. For the None authentication type, leave all authentication fields empty.

- Connect the MCP Server node’s Tools output to downstream Agent nodes that consume the discovered MCP tools. Trigger tool usage through Agent prompts or tool-calling logic.

- Test the MCP Server node using Playground. If the server requires authentication and the configured credentials are missing or invalid, an error appears in the chat: “The MCP server requires Authorisation, please check the Auth details on the MCP Server Node in the created workflow.” If authentication succeeds, the node discovers the tool list and the downstream node can call the selected tool.

Inputs: Agent instructions and any necessary tool arguments

Outputs: JSON responses from MCP tools and message summaries generated by the Agent

- Validate the schemas and output structure of your tools.

- Use deterministic tool outputs to make downstream parsing easier.

See FAQs and Troubleshooting for MCP server related questions.
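The three authentication types described above correspond to standard HTTP Authorization headers. The following is a minimal sketch of that mapping, not the node's actual implementation: the function name build_auth_headers and its parameter names are hypothetical and only mirror the field names mentioned in this section.

```python
import base64
from typing import Dict, Optional


def build_auth_headers(
    basic_user: Optional[str] = None,
    basic_password: Optional[str] = None,
    bearer_token: Optional[str] = None,
) -> Dict[str, str]:
    """Return the Authorization header implied by the filled-in fields.

    Hypothetical helper: how the MCP Server node builds its requests
    internally is an assumption, not documented behavior.
    """
    has_basic = bool(basic_user or basic_password)
    if has_basic and bearer_token:
        # Mirrors the note above: fill in one set of fields, not both.
        raise ValueError("Use either the Basic fields or the Bearer token, not both")
    if bearer_token:
        return {"Authorization": f"Bearer {bearer_token}"}
    if has_basic:
        raw = f"{basic_user or ''}:{basic_password or ''}".encode("utf-8")
        return {"Authorization": "Basic " + base64.b64encode(raw).decode("ascii")}
    # None: all authentication fields are empty, so no header is sent.
    return {}
```

Leaving every argument empty returns an empty dictionary, which matches the None authentication type.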

REST API Tools

The REST API node allows you to call REST APIs directly from your flows, often through an Agent.

When to use?

- To integrate with external services or internal microservices

- To retrieve or send data as part of your workflow

How to add and configure?

- Configure the REST API connection. See Add REST API Data Source.

- Drag the REST API node onto the canvas and connect it to an Agent or any other compatible tool-enabled node.

- Specify the endpoints, headers, authentication, and HTTP method (GET, POST, PUT, or DELETE).

- Use Agent prompts to determine which endpoint and parameters to use.

Inputs: URL/endpoint and parameters or body data, often passed from previous node outputs

Outputs: JSON responses from the API and message summaries created by the Agent

- Use Type Convert to ensure the JSON response is in the expected format.

- Combine JSON outputs to merge multiple API responses for downstream use.

Inputs

Chat Input

The Chat Input node captures conversational user input in a chat interface.

Note: A custom flow can contain a maximum of one Chat Input component.

When to use?

- For interactive flows where the user submits messages or questions

- To supply prompts or context to an LLM or Agent

How to add and configure?

- Drag the Chat Input node onto the canvas.

- Connect its Message output to downstream nodes such as Prompt, Agent, or LLM.

Inputs: None, as the user provides input at runtime

Outputs: Message containing the user’s text

- Use with the Agent node to pass instructions from the Chat Input node as a prompt.

- Use the Condition node to enable keyword-based branching in your flow.

Text Input

The Text Input node captures plain text input to pass to the next node.

When to use?

- For one-off values, such as IDs, categories, or thresholds

- To capture simple user parameters that influence the flow

How to add and configure?

- Drag the Text Input node onto the canvas.

- Optionally, set a label or placeholder for the input field.

- Connect its Message output to downstream nodes.

Inputs: None, as input is provided by the user

Outputs: Message containing the entered text

Prompt

The Prompt node creates and formats textual instructions for an LLM or Agent, often combining fixed instructions with dynamic values from upstream nodes.

When to use?

- To centralize system instructions and role-based prompts

- To create templates that incorporate values from upstream nodes

How to add and configure?

- Drag the Prompt node onto the canvas.

- Write your instructions, optionally using placeholders to reference outputs from upstream nodes.

- Connect the Prompt node to an LLM or Agent node.

Inputs: Message or JSON data for variable interpolation

Outputs: Message containing the final prompt text

Outputs

Chat Output

The Chat Output node renders or returns model/agent responses in a chat interface.

When to use?

- For chat interfaces intended for end users

- To display conversational responses in the user interface

How to add and configure?

- Drag the Chat Output node onto the canvas.

- Connect Message output from the LLM or Agent (or a formatted message from a Parser node) to the Chat Output node.

Inputs: Message

Outputs: None, as the response is rendered directly in the UI

Email Output

The Email Output node sends generated content by email. It accepts comma-separated email addresses, so the same content can be sent to multiple recipients.

When to use?

- To notify stakeholders by sending artifacts such as summaries, alerts, or reports

- To automate email communications within your workflows

How to add and configure?

- Make sure SMTP is configured. See SMTP Configuration.

- Drag the Email Output node onto the canvas.

- Provide a subject, specify recipients as comma-separated email addresses to send to multiple people, and connect the Message output from an upstream node to serve as the email body.

- Optionally, attach any supported files using upstream file or data nodes.

Inputs: Message (email body); optional attachments or JSON for subject templating

Outputs: None (the node sends the email)

Data

Read CSV File

The Read CSV node imports and parses CSV files for batch or tabular data processing.

When to use?

- To import tabular data for analysis or reporting.

- To feed a DataFrame into a Parser or LLM node for summarization.

How to add and configure?

- Drag the Read CSV node into your workflow.

- Select or upload a CSV file.

- Adjust delimiter and encoding settings if necessary.

- Connect the node’s outputs to subsequent steps in your workflow.

Inputs: File path or uploaded CSV file

Outputs:

- DataFrame: A list of dictionaries (list[dict]), each representing a row

- JSON: An array of objects, if this format is provided
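To make the list[dict] shape concrete, here is a minimal sketch using Python's standard csv module. It only illustrates the output structure described above; the helper name read_csv_rows and the sample data are hypothetical, and the node's own parsing may differ (for example, in how it types numeric columns).

```python
import csv
import io
from typing import Dict, List


def read_csv_rows(text: str, delimiter: str = ",") -> List[Dict[str, str]]:
    """Parse CSV text into a list of row dictionaries keyed by header name."""
    reader = csv.DictReader(io.StringIO(text), delimiter=delimiter)
    return [dict(row) for row in reader]


# Hypothetical sample with a non-default delimiter.
sample = "id;name\n1;Ada\n2;Grace\n"
rows = read_csv_rows(sample, delimiter=";")
# rows is [{"id": "1", "name": "Ada"}, {"id": "2", "name": "Grace"}]
```

In this sketch every value stays a string, because csv.DictReader does not infer types.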

File Upload

The File Upload node allows users to upload files for processing or analysis.

When to use?

- To import external documents or data into a workflow

- To use alongside parsing or data extraction steps

How to add and configure?

- Drag the File Upload component into your flow

- Select the file to upload

- Connect the node to parsers, LLMs, or data converters

Inputs: None

Outputs: File handles/paths and/or Message/JSON, depending on integration

SQL Query

The SQL Query node executes SQL statements against configured databases and returns the results for use in workflows.

Note: Only SELECT-like queries are supported for security and governance.

When to use?

- To retrieve data for enriching prompts

- To enable further processing or visualization downstream

How to add and configure?

- Ensure the database connection is set up. See Database Data Source.

- Drag the SQL Query node into your workflow.

- Enter your SQL query text. This can be static or parameterized using data from upstream nodes.

- Connect the output to nodes such as Parser, Type Convert, or Combine JSON.

Inputs: Message or JSON containing the query and its parameters

Outputs:

- DataFrame: Tabular data results

- JSON: Array of row objects
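The sketch below illustrates a parameterized, read-only query that returns rows as a list of dictionaries. It uses an in-memory SQLite database purely for illustration; the run_select helper, the products table, and the simple SELECT check are assumptions and do not describe how the node validates or executes queries.

```python
import sqlite3
from typing import Any, Dict, List, Sequence


def run_select(
    conn: sqlite3.Connection, query: str, params: Sequence[Any] = ()
) -> List[Dict[str, Any]]:
    """Run a read-only query and return each row as a dictionary."""
    # Naive guard for this sketch only; real governance checks are stricter.
    if not query.lstrip().lower().startswith("select"):
        raise ValueError("Only SELECT statements are allowed in this sketch")
    conn.row_factory = sqlite3.Row  # rows become addressable by column name
    cursor = conn.execute(query, params)
    return [dict(row) for row in cursor.fetchall()]


# Hypothetical table used only to demonstrate the output shape.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER, name TEXT, stock INTEGER)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [(1, "Widget", 5), (2, "Gadget", 0)],
)
rows = run_select(conn, "SELECT id, name FROM products WHERE stock > ?", (0,))
# rows is [{"id": 1, "name": "Widget"}]
```

Passing values through the params argument, rather than concatenating them into the query text, keeps upstream data from altering the statement itself.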

Processing

Condition

The Condition node enables simple if-else decision logic in agent flows. Use the Condition node to compare a Text Input against a Match Text using an operator. If the condition evaluates to true, the node outputs a configurable true message and follows the “true” branch. If it evaluates to false, the node outputs a false message and follows the “false” branch. You can use it for basic branching without complex orchestration.

When to use?

- Route flows based on keywords or user roles.

- Validate input values, such as numbers or specific formats.

- Gate actions before invoking tools or services.

How to add and configure?

- Drag the Condition node onto the canvas.

- Configure the following settings:

- Text Input: The value you want to check.

- Match Text: The value to compare against.

- Operator: Choose from equals, not equals, contains, not contains, greater than, less than, or regex match.

- True Message (optional): Message to return if the condition is met.

- False Message (optional): Message to return if the condition is not met.

- Connect the True and False outputs to their respective next steps.

- Test the Condition node using sample inputs.

- equals / not equals: Checks for an exact match (case and spacing matter).

- contains / not contains: Useful for keywords.

- greater than / less than: Only works with numeric values.

- regex match: Matches text patterns using regular expressions (best for simple patterns).
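The operator semantics listed above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the node's implementation: the function name evaluate_condition is hypothetical, regex match is assumed to search anywhere in the text, and non-numeric input to the numeric operators is assumed to evaluate to false.

```python
import re


def evaluate_condition(text_input: str, match_text: str, operator: str) -> bool:
    """Evaluate one comparison the way the operator notes above describe it."""
    if operator == "equals":
        return text_input == match_text  # exact: case and spacing matter
    if operator == "not equals":
        return text_input != match_text
    if operator == "contains":
        return match_text in text_input
    if operator == "not contains":
        return match_text not in text_input
    if operator in ("greater than", "less than"):
        try:
            left, right = float(text_input), float(match_text)
        except ValueError:
            return False  # assumption: non-numeric values never match
        return left > right if operator == "greater than" else left < right
    if operator == "regex match":
        # Assumption: the pattern may match anywhere in the text.
        return re.search(match_text, text_input) is not None
    raise ValueError(f"Unknown operator: {operator}")
```

Note that the numeric operators compare values as numbers, so "10" is greater than "9" even though it sorts earlier as text.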

Type Convert

The Type Convert node converts data among three types for downstream nodes that require a specific structure: Message (string), JSON (dictionary or list), and DataFrame (list of dictionaries). It outputs all three types simultaneously, allowing downstream nodes to use whichever type they require.

The Type Convert node supports robust JSON parsing from text:

- Detects and extracts the first valid JSON object or array found within the text (such as from code blocks or mixed content).

- Recursively parses nested JSON strings (for example, JSON fields that contain JSON data) to generate a structured output.
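One way to picture the two behaviors above is the following sketch built on Python's standard json module. The function names extract_first_json and parse_nested are hypothetical, and the node's real parsing rules may differ in edge cases.

```python
import json
from typing import Any


def extract_first_json(text: str) -> Any:
    """Return the first valid JSON object or array embedded in text."""
    decoder = json.JSONDecoder()
    for index, char in enumerate(text):
        if char in "{[":
            try:
                value, _end = decoder.raw_decode(text, index)
            except json.JSONDecodeError:
                continue  # not valid JSON from here; keep scanning
            return value
    raise ValueError("No JSON object or array found")


def parse_nested(value: Any) -> Any:
    """Recursively replace strings that contain JSON with parsed values."""
    if isinstance(value, dict):
        return {key: parse_nested(item) for key, item in value.items()}
    if isinstance(value, list):
        return [parse_nested(item) for item in value]
    if isinstance(value, str) and value.strip()[:1] in ("{", "["):
        try:
            return parse_nested(json.loads(value))
        except json.JSONDecodeError:
            return value  # looked like JSON but was not; keep the string
    return value


# Mixed content: prose around a JSON object whose "payload" is a JSON string.
reply = 'Result: {"id": 1, "payload": "{\\"ok\\": true}"} Thanks!'
structured = parse_nested(extract_first_json(reply))
# structured is {"id": 1, "payload": {"ok": True}}
```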

When to use?

- When downstream nodes require a specific data type:

- Parser: Accepts JSON or DataFrame

- Combine JSON: Accepts JSON only

- LLM/Prompt/Chat: Requires Message format

- To standardize mixed input formats received from APIs, tools, or earlier steps

How to add and configure?

- Drag and drop a Type Convert node from the Processing category.

- Connect any of Message, JSON, or DataFrame from the upstream node as input.

- Test the Type Convert node by connecting its outputs (string, dictionary, or list of dictionaries) to appropriate downstream nodes. Execute the flow by selecting Playground.

Conversion Examples:

- Input: "Hello"; Outputs: Message - "Hello", JSON - {"value":"Hello"}, DataFrame - [{"value":"Hello"}]

- Input: '{"id":1,"text":"Hi"}'; Outputs: Message - string, JSON - {"id":1,"text":"Hi"}, DataFrame - [{"id":1,"text":"Hi"}]

- Input: '[{"id":1},{"id":2}]'; Outputs: Message - string, JSON - [{"id":1},{"id":2}], DataFrame - [{"id":1},{"id":2}]
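The conversion examples above can be reproduced with a short sketch. It is an approximation for illustration: the function name type_convert is hypothetical, and wrapping non-dictionary list items as {"value": ...} is an assumption that the examples do not cover.

```python
import json
from typing import Any, Dict, List, Tuple, Union

JsonValue = Union[Dict[str, Any], List[Any]]


def type_convert(
    value: Union[str, Dict[str, Any], List[Any]]
) -> Tuple[str, JsonValue, List[Dict[str, Any]]]:
    """Return (message, json_value, dataframe) for a single input."""
    if isinstance(value, str):
        message = value
        try:
            parsed = json.loads(value)
        except json.JSONDecodeError:
            parsed = None
        # Plain text (or a bare scalar) is wrapped under a "value" key.
        structured = parsed if isinstance(parsed, (dict, list)) else {"value": value}
    else:
        message = json.dumps(value)
        structured = value

    if isinstance(structured, dict):
        dataframe = [structured]
    else:
        # Assumption: list items that are not dictionaries get wrapped.
        dataframe = [
            row if isinstance(row, dict) else {"value": row} for row in structured
        ]
    return message, structured, dataframe
```

Calling type_convert("Hello") yields the message "Hello", the JSON {"value": "Hello"}, and the DataFrame [{"value": "Hello"}], matching the first example.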

Inputs: Message, JSON, or DataFrame

Outputs (provided simultaneously):

- String for Message (a JSON string for JSON objects/arrays)

- dict or list (Union[Dict, List]), suitable for Parser or Combine JSON Data

- list[dict] for table-like flows

For example flows that use the Type Convert node together with the Parser and Combine JSON Data nodes, see Example Flows Using Combine JSON Data and Example Flow Using Parser.

- Use this node before Parser or Combine JSON to ensure the data is correctly formatted as JSON.

- If you expect arrays, check that the DataFrame output is a list of dictionaries (list[dict]).

- Keep the Message output concise if it will be used as input for prompts.

Combine JSON Data

The Combine JSON Data node merges multiple JSON payloads into a single object. It allows you to filter top-level keys and define how to resolve conflicts between keys. The node outputs both a JSON object and a Message containing the stringified JSON.

When to use?

- Combine results from multiple APIs or queries.

- Create a single JSON payload for downstream processing.

How to add and configure?

- Drag and drop a Combine JSON Data node from the Processing category.

- Connect one or more upstream JSON sources to the JSON inputs inlet.

- Optionally, connect the output of a previous Combine JSON Data node to the Initial accumulator field for multi-stage merging. Leave it empty for a filter-only operation.

- Enter the top-level keys to include from the input sources in the Keys to include field. Supported formats are a JSON array (["a","b"]), a comma-separated list (a, b), or a space-separated list (a b). Leave the field empty to include all top-level keys.

- Select the Merge mode: Deep or Replace. Use Deep merge to recursively extend lists, replace scalars, and combine keys from all JSONs. Use Replace mode to overwrite keys with values from the JSON of the node connected last to the JSON inputs inlet.

- Test the Combine JSON Data node by connecting two or more JSON inputs to the JSON inputs inlet and the stringified JSON or merged dictionary JSON to a Chat Output node. Execute the flow by selecting Playground, typing a space in the Chat Input, and pressing Enter.

Inputs: Multiple JSON or Message-with-JSON

Outputs:

- JSON: A merged object suitable for downstream nodes.

- Message: A stringified JSON for display or logging purposes.
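The key filtering and the two merge modes can be approximated with the sketch below. It is a rough model under stated assumptions rather than the node's implementation: the function names are hypothetical, Replace mode is assumed to overwrite whole top-level keys, and the Keys to include filter is assumed to apply to incoming JSONs but not to the Initial accumulator.

```python
import copy
import json
import re
from typing import Any, Dict, Iterable, List, Optional


def parse_keys(raw: str) -> Optional[List[str]]:
    """Accept a JSON array, a comma-separated list, or a space-separated list."""
    raw = raw.strip()
    if not raw:
        return None  # empty field: include all top-level keys
    if raw.startswith("["):
        return [str(key) for key in json.loads(raw)]
    return [key for key in re.split(r"[,\s]+", raw) if key]


def deep_merge(target: Dict[str, Any], incoming: Dict[str, Any]) -> Dict[str, Any]:
    """Merge dictionaries recursively, extend lists, and replace scalars."""
    for key, value in incoming.items():
        current = target.get(key)
        if isinstance(current, dict) and isinstance(value, dict):
            deep_merge(current, value)
        elif isinstance(current, list) and isinstance(value, list):
            current.extend(copy.deepcopy(value))
        else:
            target[key] = copy.deepcopy(value)
    return target


def combine_json(
    inputs: Iterable[Dict[str, Any]],
    keys_to_include: str = "",
    mode: str = "deep",
    accumulator: Optional[Dict[str, Any]] = None,
) -> Dict[str, Any]:
    """Fold the inputs, in connection order, into the accumulator."""
    result = copy.deepcopy(accumulator) if accumulator else {}
    keys = parse_keys(keys_to_include)
    for payload in inputs:
        selected = {k: v for k, v in payload.items() if keys is None or k in keys}
        if mode == "deep":
            deep_merge(result, selected)
        else:  # "replace": later inputs overwrite earlier top-level keys
            result.update(copy.deepcopy(selected))
    return result


# Hypothetical inputs, invented for illustration only.
first = {"report": {"topics": ["Physics", "Maths"], "Date": "23/11/25"},
         "other_key1": "Other Report", "ignored": 1}
second = {"report": {"topics": ["Chemistry", "Biology"], "Date": "24/11/25",
                     "other_details2": "Rainy weather"}}
merged = combine_json([first, second], "report, other_key1", "deep",
                      {"Title": "Final Report"})
replaced = combine_json([first, second], '["report","other_key1"]', "replace",
                        {"Title": "Final Report"})
```

With these invented inputs, merged["report"]["topics"] contains all four topics, while replaced["report"]["topics"] contains only the topics from the last input.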

Example Flows

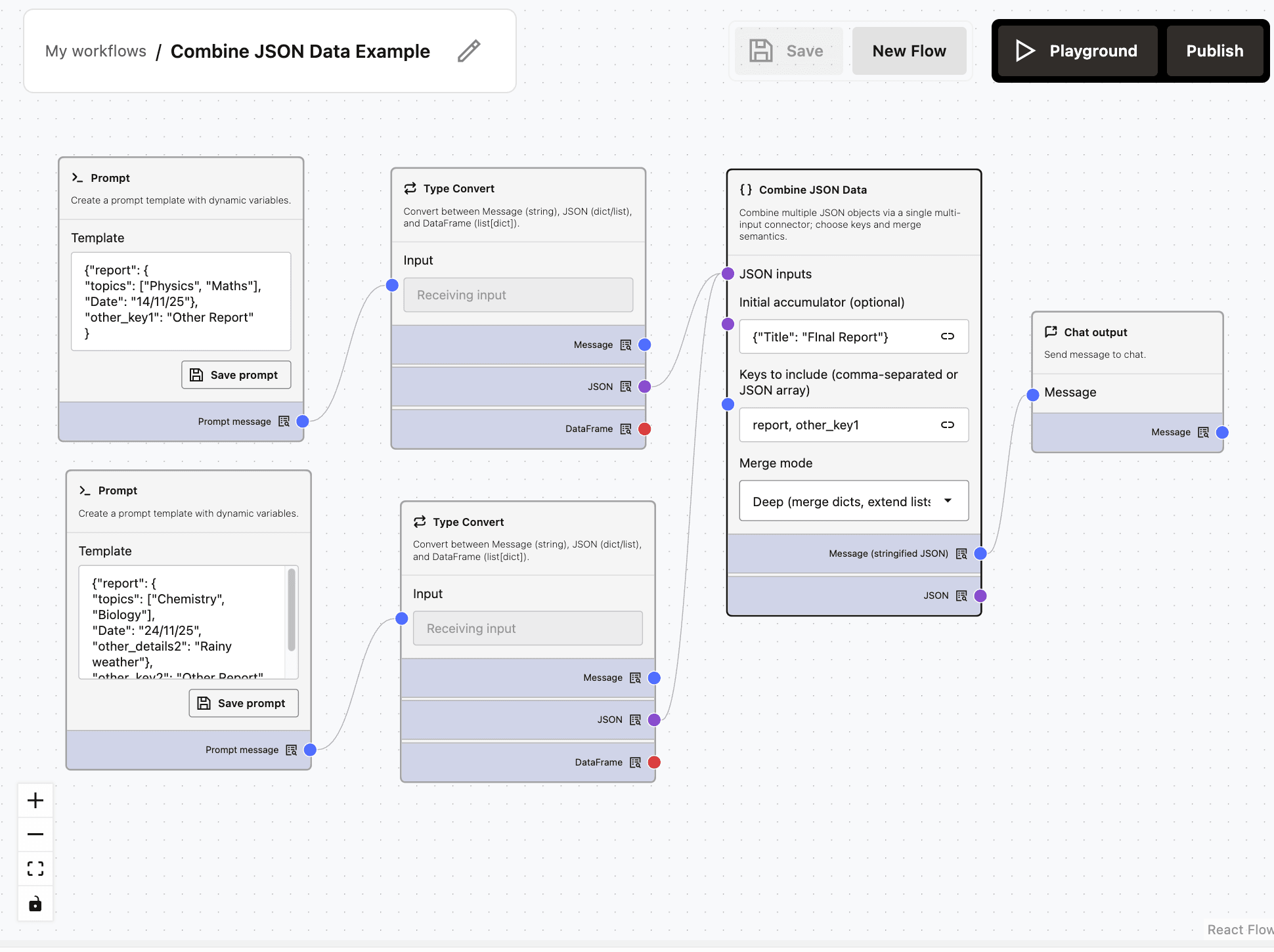

Example 1: Using the Combine JSON Data Component in Deep Merge Mode:

The output JSON {"Title": "Final Report", "report": {"topics": ["Physics", "Maths", "Chemistry", "Biology"], "Date": "24/11/25", "other_details2": "Rainy weather"}, "other_key1": "Other Report"} includes only the keys specified in the ‘Keys to include’ field (report and other_key1) of the Combine JSON Data node. In Deep Merge mode, list values are combined for matching keys (key=topics), whereas scalar values (key=Date) are replaced.

The Initial Accumulator can be any JSON, with or without keys that overlap with the incoming JSONs, and it appears in the final JSON output. Any matching key in the Initial Accumulator is replaced by the incoming value in the final JSON output. In this example, the Initial Accumulator is {"Title": "Final Report"}.

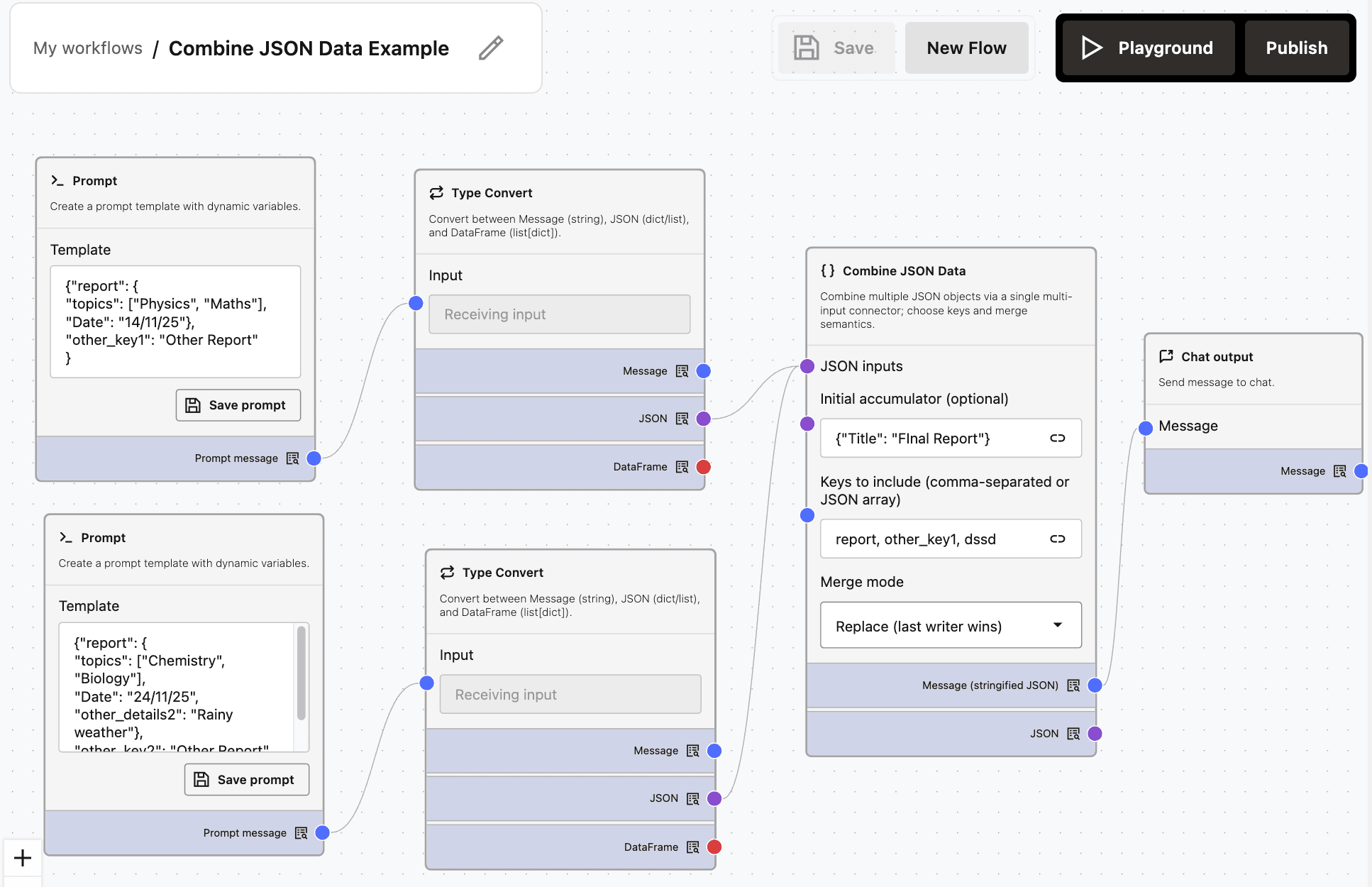

Example 2: Using the Combine JSON Data Component in Replace Merge Mode:

The output JSON {"Title": "Final Report", "report": {"topics": ["Chemistry", "Biology"], "Date": "24/11/25", "other_details2": "Rainy weather"}, "other_key1": "Other Report"} includes the keys that were entered in the ‘Keys to include’ field (report and other_key1) of the Combine JSON Data Node. In Replace Merge mode, all the values for the same keys (key=topics) are replaced. The key value from the last input replaces the previous key values, so nodes connected later to Combine JSON Data overwrite those connected earlier.

The Initial Accumulator can be any JSON, with or without keys that overlap with the incoming JSONs, and it appears in the final JSON output. Any matching key in the Initial Accumulator is replaced by the incoming value in the final JSON output. In this example, the Initial Accumulator is {"Title": "Final Report"}.

Parser

The Parser node extracts, formats, and combines text from structured inputs, such as JSON or DataFrame. It transforms these inputs into a clean, readable message output that can be displayed or passed to downstream steps.

When to use?

- Convert SQL or API results into easily readable messages.

- Create lists from arrays, such as orders, tickets, or products.

- Quickly convert JSON to a string format for logging or debugging.

How to add and configure?

- Drag and drop a Parser node from the Processing category.

- Connect JSON or Message outputs from upstream nodes to the JSON or DataFrame inlet. Alternatively, enter a JSON in the text box.

- Enter an optional dot-path in the Root path field to navigate into the input before applying the template. Examples: records, records.0, outer.inner.items.

- Select the Mode: Parser or Stringify. Parser mode applies the template to each selected item or object. Stringify mode ignores the template and converts the selection to a raw string.

- Enter a Template for Parser mode as a format string with placeholders referencing keys or columns from objects, or {text} for entire string inputs.

Examples:

- Name: {name}, Age: {age}

- {PRODUCT_ID}: {PRODUCT_NAME} — stock {STOCK_QUANTITY}

- Text: {text}

- Enter a Separator, which is the string used to join multiple rendered items when the input is a list. The default is \n.

- Test the Parser node by connecting a JSON input to its JSON inlet and routing the output message to a Chat Output node. Execute the flow by selecting Playground.

Inputs: JSON or DataFrame

Outputs: Message containing the final rendered text
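The Root path, Template, Separator, and Mode settings described above can be sketched as follows. The function names resolve_root and render are hypothetical, and using json.dumps for Stringify mode is an assumption; the node's exact string format is not documented in this section.

```python
import json
from typing import Any, List


def resolve_root(data: Any, root_path: str) -> Any:
    """Walk a dot-path such as 'records.0' or 'outer.inner.items'."""
    current = data
    for part in filter(None, root_path.split(".")):
        if isinstance(current, list):
            current = current[int(part)]  # numeric segment indexes a list
        else:
            current = current[part]
    return current


def render(
    data: Any,
    template: str = "{text}",
    root_path: str = "",
    separator: str = "\n",
    mode: str = "parser",
) -> str:
    """Render each selected item and join the results with the separator."""
    selection = resolve_root(data, root_path)
    items: List[Any] = selection if isinstance(selection, list) else [selection]
    rendered = []
    for item in items:
        if mode == "stringify":
            # Assumption: Stringify mode serializes non-string items as JSON.
            rendered.append(item if isinstance(item, str) else json.dumps(item))
        elif isinstance(item, dict):
            rendered.append(template.format_map(item))
        else:
            rendered.append(template.format(text=item))
    return separator.join(rendered)


# Hypothetical input used only to show the rendering.
data = {"records": [{"name": "Ada", "age": 36}, {"name": "Grace", "age": 45}]}
listing = render(data, "Name: {name}, Age: {age}", root_path="records")
# listing is "Name: Ada, Age: 36\nName: Grace, Age: 45"
```

Pointing the root path at records.0 instead selects a single object, so the template is applied once and no separator is used.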

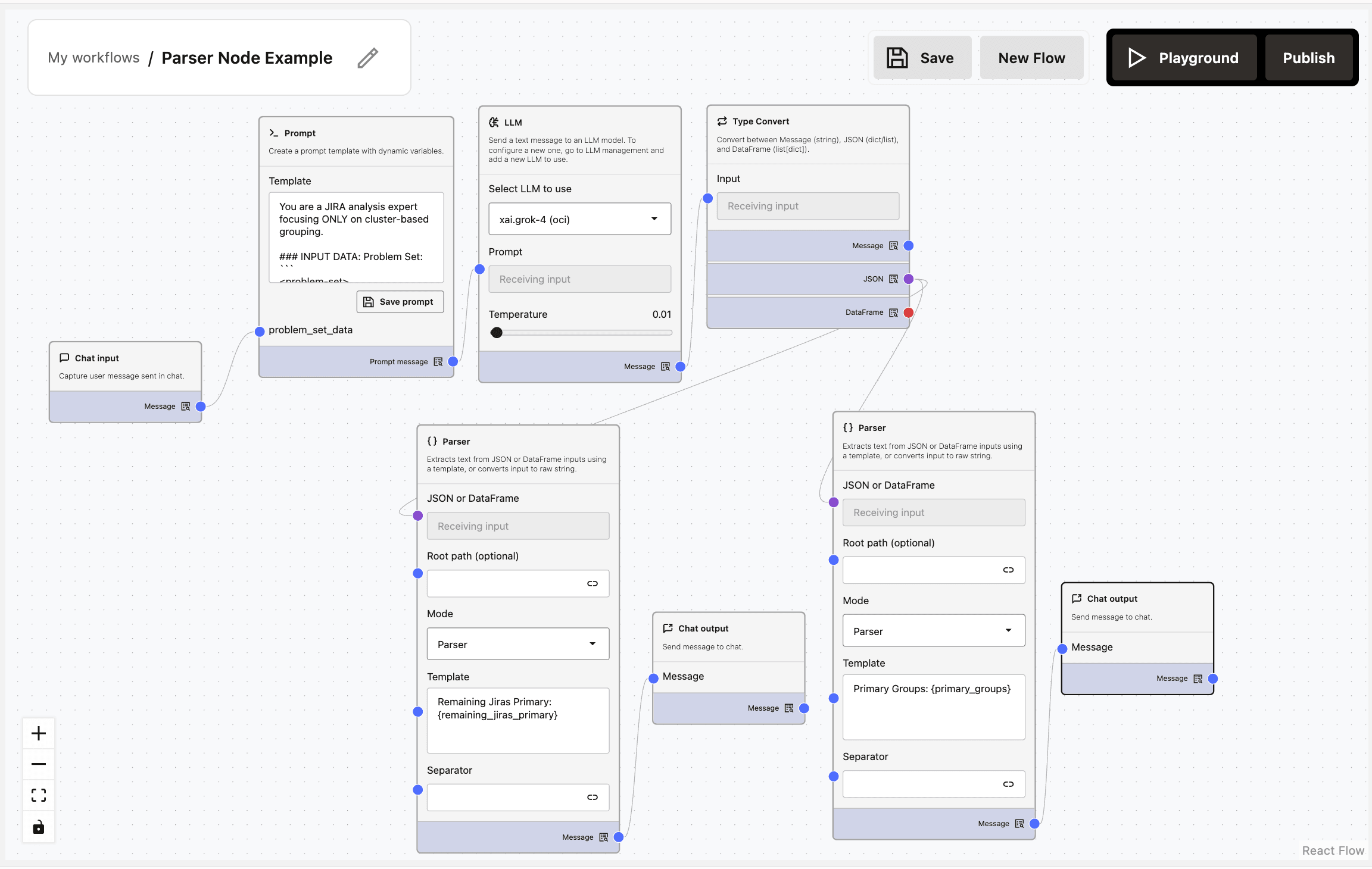

Example Flow

The following flow groups Jira issues into a ‘Primary Group’ and stores the remaining Jira issues as ‘Remaining Jiras Primary’.

Using the following prompt, the LLM generates a JSON with two keys: primary_groups and remaining_jiras_primary.

You are a JIRA analysis expert focusing ONLY on cluster-based grouping.

INPUT DATA: Problem Set:

<problem-set>

{{problem_set_data}}

</problem-set>

TASK: Group JIRAs based ONLY on cluster IDs.

STRICT RULES:

ONLY group by cluster ID matches

MANDATORY : EXACT cluster ID should match

MANDATORY : Each group MUST have minimum 2 JIRAs in the jira_list

If a group has fewer than 2 JIRAs, dissolve that group and ALL JIRAs from that group MUST be moved to remaining_jiras_primary

Order JIRAs chronologically within groups

DO NOT group by any other attributes

NO partial matches allowed

NO inferred relationships

VERIFY all groups meet the minimum 2 JIRAs requirement BEFORE outputting

DO NOT explain your reasoning or corrections

DO NOT output any intermediate results

MANDATORY: Use double quotes for ALL strings in JSON (not single quotes)

MANDATORY: Do NOT add “JIRA” prefix to any ID - use the exact ID format from the input data

OUTPUT FORMAT:

{{

"primary_groups": [

{{

"title": "Cluster - <Full Cluster ID>",

"summary": "Clear description of cluster impact",

"jira_list": ["EXACSOPS-1", "EXACSOPS2-2"]

}}

],

"remaining_jiras_primary": ["EXACSOPS-3", "EXACSOPS-4"]

}}

CRITICAL INSTRUCTION: Return ONLY the final VALID JSON with proper double quotes for all strings. Do not include ANY explanations, reasoning, comments, corrections, or text of any kind outside the JSON structure. The response must begin with {{ and end with }} without any other characters.

To send the data in primary_groups directly for review and route remaining_jiras_primary through another round of analysis, use Parser nodes to parse the JSON generated by the LLM and extract the data under each key. This requires two Parser nodes, one per key. Select Playground to execute the flow.

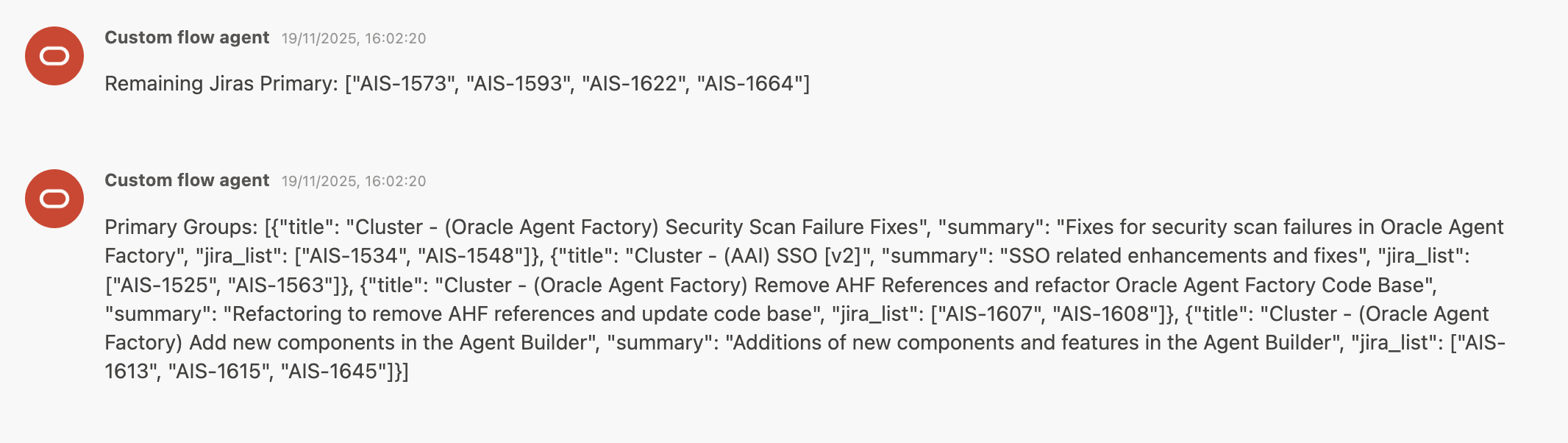

You can use the two outputs from the two Parser nodes for different purposes in a complex workflow.

Utilities

Sticky Notes

The Sticky Notes node keeps track of important information or instructions within your flow.

When to use?

- Explain complex logic

- Give context to collaborators

- Add annotations for future reference

How to add and configure?

- Drag the Sticky Notes node onto the canvas.

- Click the note to edit its text.

- Resize or move the note as needed.

- Choose your preferred color.

Inputs: Raw text

Outputs: Not applicable