OS Image

Describes the OS images used to create Kubernetes nodes using the byo provider.

You need the following to perform a BYO installation:

-

An Oracle Linux kernel to boot.

-

An initrd (initial ramdisk) that matches the boot kernel.

-

A root file system that can run Anaconda and Kickstart.

-

A method to perform an automated installation using Kickstart.

-

A method to serve the OCK OSTree archive.

-

A method to serve Ignition files.

An easy way to achieve the first four points is to download an Oracle Linux ISO file. For information about the available ISO files, see Oracle Linux ISO Images.

A Kickstart file defines an automated installation using a local OSTree archive server. For information on creating a Kickstart file, see Oracle Linux 9: Installing Oracle Linux or Oracle Linux 8: Installing Oracle Linux.
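The following shell function writes a minimal Kickstart file, to show roughly what one contains. It is a sketch under stated assumptions. The function name `write_kickstart` is hypothetical. So are the OSTree `--osname` and `--ref` values (`ock`), the partition layout, and the networking and Ignition kernel arguments. Check all of them against the Oracle Linux installation documentation and your OCK OSTree archive. `text`, `reqpart`, `ostreesetup`, `bootloader --append`, and `reboot` are standard Kickstart commands.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal Kickstart file that installs from an OSTree archive server.
# ASSUMPTION: osname/ref "ock", the partitioning, and the kernel arguments are
# illustrative placeholders, not values confirmed for OCK.
write_kickstart() {
  local out="$1" ostree_url="$2" ignition_url="$3"
  cat > "$out" <<EOF
# Non-interactive text-mode installation
text
network --bootproto=dhcp
zerombr
clearpart --all --initlabel
# Create the platform partitions (such as /boot/efi) plus /boot
reqpart --add-boot
part / --fstype=xfs --size=1 --grow
# Install the OS from the OSTree archive instead of from RPM packages
ostreesetup --osname=ock --url=${ostree_url} --ref=ock --nogpg
# Assumed kernel arguments so Ignition fetches its configuration on first boot
bootloader --append="ip=dhcp rd.neednet=1 ignition.platform.id=metal ignition.config.url=${ignition_url} ignition.firstboot=1"
reboot
EOF
}
```

For example, `write_kickstart ock.ks http://ostree.example.com/ostree http://ignition.example.com/node.ign` writes `ock.ks`. The host names are placeholders.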

The CLI can generate a container image that serves an OSTree archive over HTTP. You can run the container image with a container runtime such as Podman, or inside a Kubernetes cluster. You can also generate an OSTree archive manually and serve it over any HTTP server.
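An OSTree archive repository exposes a plain-text `config` file at its root, whichever server you use to serve it. Fetching that file is a quick smoke test that the server is reachable and is serving an archive-mode repository. The function name `is_ostree_archive` is hypothetical. The path of the repository inside the OCK container image is not stated here, so pass the full repository URL.

```shell
#!/usr/bin/env bash
# Sketch: check that a URL serves an OSTree archive repository by fetching
# the repository's top-level "config" file. Requires curl.
is_ostree_archive() {
  local repo_url="${1%/}"   # drop a trailing slash, if any
  local config
  config="$(curl -fsSL "${repo_url}/config")" || return 1
  # Archive repositories declare mode=archive (older repositories: archive-z2)
  grep -Eq '^mode[[:space:]]*=[[:space:]]*archive(-z2)?[[:space:]]*$' <<<"$config"
}
```

For example, `is_ostree_archive http://ostree.example.com/ostree && echo ok`. The URL is a placeholder.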

Ignition files can be served using any of the platforms listed in the upstream Ignition documentation. You can also embed the Ignition configuration file directly onto the root file system of the host, provided the installation is done reasonably soon after the Ignition configuration is generated.
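Before you boot nodes against an Ignition server, it can help to confirm that the server returns exactly the file the CLI generated. This sketch works with whichever platform you choose, provided the file is reachable by URL with `curl`. The function name `ignition_matches` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the file served at URL is byte-identical to the
# locally generated Ignition file. Requires curl and cmp.
ignition_matches() {
  local url="$1" local_file="$2" tmp rc
  tmp="$(mktemp)" || return 1
  if curl -fsSL -o "$tmp" "$url"; then
    cmp -s "$tmp" "$local_file"; rc=$?
  else
    rc=1   # server unreachable or file missing
  fi
  rm -f "$tmp"
  return "$rc"
}
```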

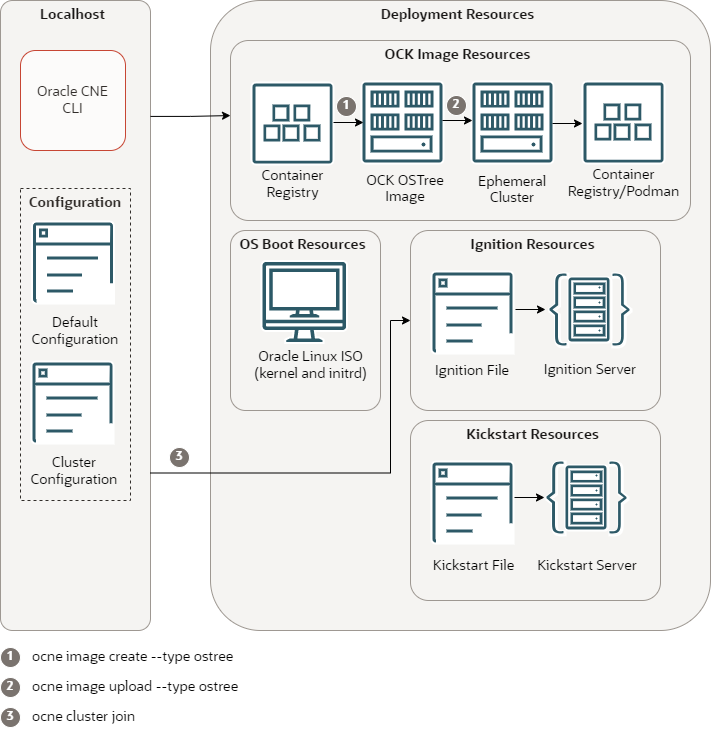

Figure 8-2 OCK Images in a Bring Your Own Cluster

-

CLI: The CLI used to create and manage Kubernetes clusters: the ocne command.

-

Default configuration: A YAML file that contains configuration for all ocne commands.

-

Cluster configuration: A YAML file that contains configuration for a specific Kubernetes cluster.

-

Container registry: A container registry used to pull the OCK OSTree images. The default is the Oracle Container Registry.

-

OCK OSTree Image: The OCK OSTree image pulled from the container registry.

-

Ephemeral cluster: A temporary Kubernetes cluster used to perform a CLI command.

-

Container registry/Podman: A container registry or container server, such as Podman, used to serve the OCK OSTree images.

-

Oracle Linux ISO: An ISO file that provides the kernel and initrd used for the OS on nodes.

-

Ignition file: An Ignition file, generated by the CLI, used to join nodes to a cluster.

-

Ignition server: The Ignition file, loaded into a method that serves Ignition files.

-

Kickstart file: A Kickstart file that provides the location of the OCK OSTree image, Ignition file, and the OS kernel and initrd.

-

Kickstart server: The Kickstart file, loaded into a method that serves Kickstart files.

Oracle Linux ISO Images

Describes the Oracle Linux ISO image used for OS images with the byo provider.

You can use any of the available Oracle Linux ISO images as the basis for the OS image. Only the kernel, initrd, and root file system from the media are used. All installation content comes from the OCK OSTree archive. The ISO images are available on the Oracle Linux yum server.

We recommend that you use a UEK boot ISO file, because these are smaller and include all the required OS components.
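To serve the kernel and initrd from the ISO over the network, you first copy them out of the image. This sketch assumes the ISO is already mounted. It also assumes the files are in `images/pxeboot/`, which is the usual layout of Oracle Linux installation media; verify this against the ISO you download. The function name `copy_boot_files` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: copy the boot kernel and matching initrd out of a mounted ISO.
# ASSUMPTION: the ISO keeps them in images/pxeboot/ as vmlinuz and initrd.img.
copy_boot_files() {
  local iso_root="$1" dest="$2"
  local src="${iso_root%/}/images/pxeboot"
  if [[ ! -f "$src/vmlinuz" || ! -f "$src/initrd.img" ]]; then
    echo "kernel or initrd not found under $src" >&2
    return 1
  fi
  mkdir -p "$dest"
  cp "$src/vmlinuz" "$src/initrd.img" "$dest/"
}
```

For example, after `sudo mount -o loop,ro path/to/boot-uek.iso /mnt/iso`, run `copy_boot_files /mnt/iso ./boot`. The ISO path is a placeholder.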

OSTree Archive Server

Describes the OSTree archive used for building an OS image with the byo provider.

A container image that contains the OSTree archive can be generated using the ocne image create command. For example:

ocne image create --type ostree

A Kubernetes cluster is used to download and generate the OSTree archive image. Any running cluster can be used. To specify which cluster to use, set the KUBECONFIG environment variable, or use the --kubeconfig option of ocne commands. If no cluster is available, a cluster is created automatically using the libvirt provider, with the default configuration.

The image might take some time to download and generate. When completed, the image is available in the $HOME/.ocne/images/ directory.
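The image build can be scripted. This sketch wraps `ocne image create` and computes the expected archive path. It rests on several assumptions. First, `ocne image create` accepts `--arch` in the same way as `ocne image upload`. Second, the archive name follows the `ock-<version>-<arch>-ostree.tar` pattern seen in the examples in this section. The helper names `run`, `create_ostree_image`, and `ock_archive_path` are hypothetical. Setting `DRY_RUN=1` prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Run a command, or only print it (shell-quoted) when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%q ' "$@"; printf '\n'
  else
    "$@"
  fi
}

# Build the OSTree image. With no kubeconfig argument, the CLI uses KUBECONFIG
# or creates a libvirt cluster itself. ASSUMPTION: create accepts --arch.
create_ostree_image() {
  local arch="$1" kubeconfig="${2:-}"
  local -a args=(ocne image create --type ostree --arch "$arch")
  if [[ -n "$kubeconfig" ]]; then
    args+=(--kubeconfig "$kubeconfig")
  fi
  run "${args[@]}"
}

# Expected archive location. ASSUMPTION: naming inferred from this section's examples.
ock_archive_path() {
  local version="$1" arch="$2"
  printf '%s/.ocne/images/ock-%s-%s-ostree.tar\n' "$HOME" "$version" "$arch"
}
```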

Use the ocne image upload command to upload the image to a location where it can be used. Container images can be uploaded using any transport provided by the containers/image library. See containers-transports(5) for the list of transports. A transport is always required.
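A transport is always required, so a script that calls `ocne image upload` can check the destination before starting a potentially long upload. This sketch recognizes only a subset of the transports listed in containers-transports(5). The function name `has_transport` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the destination starts with a known transport prefix
# followed by at least one character (subset of containers-transports(5)).
has_transport() {
  case "$1" in
    docker://?*|oci:?*|oci-archive:?*|docker-archive:?*|dir:?*|containers-storage:?*|docker-daemon:?*)
      return 0 ;;
    *)
      return 1 ;;
  esac
}
```

For example, `has_transport "$dest" || { echo "missing transport in $dest" >&2; exit 1; }` stops a script before it runs `ocne image upload --destination "$dest"`.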

Typically, the image is uploaded to a container registry. For example:

ocne image upload --type ostree --file $HOME/.ocne/images/ock-1.31-amd64-ostree.tar --destination docker://myregistry.example.com/ock-ostree:latest --arch amd64

To load the container image into the local image cache, use the Open Container Initiative image loading facility built into a container runtime, or some other tool that performs the same task. For example, to load the image into Podman:

podman load < $HOME/.ocne/images/ock-1.31-arm64-ostree.tar

Creating an OSTree Image for the Bring Your Own Provider

Create an OSTree image for the Bring Your Own (byo) provider. Then upload the image to a container registry so it can be used as the boot disk for Virtual Machines (VMs).

You can load the image into several destinations. This example shows you how to load the image into a container registry. For information on setting up a container registry, see Oracle Linux: Podman User's Guide.

You can load the image into any target available with the Open Container Initiative transports and formats. See containers-transports(5) for available options.