8 Bring Your Own Provider

Learn about the byo provider, used to create Kubernetes clusters on bare metal or other virtual instances not explicitly provided by Oracle CNE.

You can make custom installations of the Oracle Container Host for Kubernetes (OCK) image on arbitrary platforms. This means you can create a Kubernetes cluster using bare metal or other virtual instances not explicitly provided by Oracle CNE. These installations are known as Bring Your Own (BYO) installations. You use the byo provider to perform these installations.

You can install the OCK image into environments that require manual installation of individual hosts. A common case is a bare metal deployment. Another is an environment that requires a standardized golden OS image. This installation type is intended to cover all cases where deploying the standard OS boot image isn't possible.

This installation process is used to create new Kubernetes clusters or expand existing ones. This installation type leverages the Anaconda and Kickstart installation options of Oracle Linux to deploy OSTree content onto a host.
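Anaconda reads a Kickstart file to decide where to fetch the OSTree content and which kernel arguments to set. As a minimal sketch, assuming the OSTree archive and the Ignition file are both reachable over HTTP, the following Python renders the two Kickstart directives most relevant to a BYO install: ostreesetup, which deploys OSTree content, and bootloader --append, used here to pass the Ignition config location to the first boot. The URLs, the ock osname and ref values, and the exact Ignition kernel arguments are illustrative assumptions, not values documented by Oracle CNE, and the output is a fragment rather than a complete Kickstart file.

```python
"""Hypothetical sketch: render a partial Kickstart file for a BYO OCK install.

The osname/ref value "ock", the URLs, and the Ignition kernel arguments are
illustrative assumptions, not values documented by Oracle CNE.
"""

from string import Template

# ostreesetup and bootloader --append are standard Kickstart commands.
# ignition.firstboot, ignition.platform.id, and ignition.config.url are
# Ignition kernel arguments; whether OCK expects exactly these is assumed.
KICKSTART_TEMPLATE = Template(
    """\
# Partial, illustrative Kickstart fragment (storage, locale, users omitted).
bootloader --append="ignition.firstboot=1 ignition.platform.id=metal ignition.config.url=$ignition_url"
ostreesetup --nogpg --osname=$osname --url=$ostree_url --ref=$ref
"""
)


def render_kickstart(ostree_url: str, ignition_url: str,
                     osname: str = "ock", ref: str = "ock") -> str:
    """Return Kickstart text that points Anaconda at an OSTree archive and
    passes the Ignition config URL on the kernel command line."""
    for name, url in (("ostree_url", ostree_url), ("ignition_url", ignition_url)):
        if not url.startswith(("http://", "https://")):
            raise ValueError(f"{name} must be an http(s) URL, got {url!r}")
    return KICKSTART_TEMPLATE.substitute(
        ostree_url=ostree_url, ignition_url=ignition_url, osname=osname, ref=ref
    )


if __name__ == "__main__":
    print(render_kickstart(
        ostree_url="http://192.0.2.10:8080/ostree",     # hypothetical archive server
        ignition_url="http://192.0.2.10:8000/node.ign",  # hypothetical Ignition server
    ))
```

Check the Oracle CNE documentation for the Kickstart content your release expects before relying on any of these values.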

The BYO installation consists of several components, spread across the following Oracle CNE CLI commands:

- The ocne image create command downloads OSTree content from official Oracle CNE sources and converts it into a format that can be used for a custom installation. It also creates an OSTree archive server.
- The ocne image upload command copies the OSTree archive server to a container registry. If you don't want to use a container registry, you can instead use Podman to serve the OSTree archive locally. You can load the image into any target available with the Open Container Initiative transports and formats. See containers-transports(5) for available options.
- The ocne cluster start command generates Ignition content that's consumed by the newly installed host during boot. This Ignition content is used to start a new Kubernetes cluster. You specify what you want to include in the Ignition configuration.
- The ocne cluster join command generates Ignition content that's used to add nodes to an existing Kubernetes cluster.

For more information on OSTree, see the upstream OSTree documentation.

For more information on Ignition, see the upstream Ignition documentation.

BYO installations of the OCK image use an OSTree archive with Anaconda and Kickstart to create bootable media. When the base OS installation is complete, Ignition is used to complete the first-boot configuration and provision Kubernetes services on the host.
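Because a broken Ignition file is only discovered when a freshly installed host fails its first boot, it can help to sanity-check the file before publishing it. Ignition configs are JSON documents with a top-level ignition.version field. As a minimal sketch, the following Python checks only that field; the requirement for a 3.x spec version is an assumption, and this is not a substitute for full Ignition schema validation.

```python
import json
import sys


def ignition_version(text: str) -> str:
    """Return the spec version declared by an Ignition config.

    Raises ValueError if the text is not a JSON object with a string
    ignition.version. This is a sanity check only, not schema validation.
    """
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(config, dict):
        raise ValueError("top level must be a JSON object")
    ignition = config.get("ignition")
    if not isinstance(ignition, dict) or not isinstance(ignition.get("version"), str):
        raise ValueError("missing ignition.version")
    version = ignition["version"]
    # Assumption: the generated content uses an Ignition 3.x spec version.
    if not version.startswith("3."):
        raise ValueError(f"unexpected Ignition spec version {version!r}")
    return version


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_ignition.py node.ign   (hypothetical file name)
    with open(sys.argv[1], encoding="utf-8") as f:
        print(ignition_version(f.read()))
```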

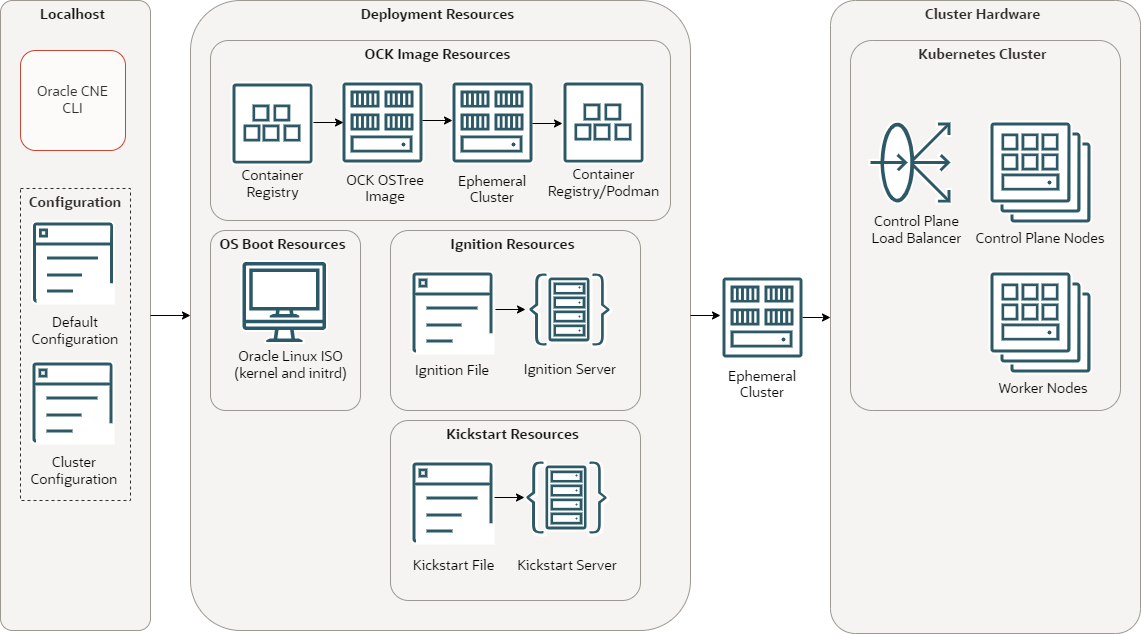

Figure 8-1 BYO Cluster

The BYO cluster architecture has the following components:

- CLI: The CLI used to create and manage Kubernetes clusters (the ocne command).
- Default configuration: A YAML file that contains configuration for all ocne commands.

- Cluster configuration: A YAML file that contains configuration for a specific Kubernetes cluster.
- Container registry: A container registry from which the OCK OSTree images are pulled. The default is the Oracle Container Registry.
- OCK OSTree image: The OCK OSTree image pulled from the container registry.
- Ephemeral cluster: A temporary Kubernetes cluster used to perform a CLI command.
- Container registry/Podman: A container registry or container server, such as Podman, used to serve the OCK OSTree images.
- Oracle Linux ISO: An ISO file that provides the kernel and initrd used for the OS on nodes.
- Ignition file: An Ignition file, generated by the CLI, used to join nodes to a cluster.
- Ignition server: The Ignition file, loaded into a server or other method that serves Ignition files to nodes.
- Kickstart file: A Kickstart file that provides the location of the OCK OSTree image, the Ignition file, and the OS kernel and initrd.
- Kickstart server: The Kickstart file, loaded into a server or other method that serves Kickstart files to nodes.

- Control plane load balancer: A load balancer used for High Availability (HA) of the control plane nodes. This might be the default internal load balancer or an external one.
- Control plane nodes: Control plane nodes in a Kubernetes cluster.
- Worker nodes: Worker nodes in a Kubernetes cluster.
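The Ignition server and Kickstart server can be any method that makes those files reachable by the nodes during installation and first boot. As a minimal sketch, assuming plain HTTP on a trusted network is acceptable for testing, Python's standard http.server module can serve a directory that holds the Kickstart and Ignition files. The directory name, port, and file names used here are hypothetical. Ignition files may contain cluster credentials, so a production setup should restrict access and use HTTPS.

```python
import functools
import sys
import threading
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer
from pathlib import Path


def start_file_server(directory: str, host: str = "0.0.0.0", port: int = 8000):
    """Serve files from `directory` over HTTP in a background thread.

    Returns the server object; call shutdown() and server_close() to stop it.
    Passing port=0 lets the OS choose a free port (see server.server_address).
    """
    root = Path(directory).resolve()
    if not root.is_dir():
        raise NotADirectoryError(str(root))
    # Bind the handler to the chosen directory instead of the current directory.
    handler = functools.partial(SimpleHTTPRequestHandler, directory=str(root))
    server = ThreadingHTTPServer((host, port), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python serve_byo.py ./byo-files   (hypothetical directory holding
    # a Kickstart file and an Ignition file)
    srv = start_file_server(sys.argv[1])
    print(f"Serving {sys.argv[1]} on port {srv.server_address[1]}")
    try:
        threading.Event().wait()  # block until interrupted with Ctrl+C
    except KeyboardInterrupt:
        srv.shutdown()
        srv.server_close()
```

Running the server in a background thread keeps the function usable from a larger script, for example one that writes the files and then waits for nodes to finish installing.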