9.1.10 MongoDB

The Oracle GoldenGate capture (Extract) for MongoDB is used to get changes from MongoDB databases.

This chapter describes how to use the Oracle GoldenGate Capture for MongoDB.

- Overview

- Prerequisites to Setting up MongoDB

- MongoDB Database Operations

- Using Extract Initial Load

- Using Change Data Capture Extract

- Positioning the Extract

- Security and Authentication

- MongoDB Bidirectional Replication

- Mongo DB Configuration Reference

- Columns in Trail File

- Update Operation Behavior

- Oplog Size Recommendations

- Troubleshooting

- MongoDB Capture Client Dependencies

What are the dependencies for the MongoDB Capture to connect to MongoDB databases?

Parent topic: Source

9.1.10.1 Overview

MongoDB is a document-oriented NoSQL database used for high volume data storage and which provides high performance and scalability along with data modelling and data management of huge sets of data in an enterprise application. MongoDB provides:

- High availability through built-in replication and failover

- Horizontal scalability with native sharding

- End-to-end security and many more

Parent topic: MongoDB

9.1.10.2 Prerequisites to Setting up MongoDB

- MongoDB cluster or a MongoDB node must have a replica set.

The minimum recommended configuration for a replica set is a three member

replica set with three data-bearing members: one primary and two secondary

members.

Create mongod instance with the replica set as follows:

bin/mongod --bind_ip localhost --port 27017 --replSet rs0 --dbpath ../data/d1/ bin/mongod --bind_ip localhost --port 27018 --replSet rs0 --dbpath ../data/d2/ bin/mongod --bind_ip localhost --port 27019 --replSet rs0 --dbpath ../data/d3/ bin/mongod --host localhost --port 27017Adding a replica set:

rs.initiate( { _id : "rs0", members: [ { _id: 0, host: "localhost:27017" }, { _id: 1, host: "localhost:27018" }, { _id: 2, host: "localhost:27019" } ] }) - Replica Set Oplog

MongoDB capture uses oplog to read the CDC records. The operations log (

oplog) is a capped collection that keeps a rolling record of all operations that modify the data stored in your databases.The MongoDB only removes an oplog entry in the following cases: the oplog has reached the maximum configured size, and the oplog entry is older than the configured number of hours based on the host system clock.

You can control the retention of oplog entries using:

oplogMinRetentionHoursandreplSetResizeOplog.For more information about oplog, see Oplog Size Recommendations.

- You must download and provide the third party libraries of MongoDB

clients having version 5.0.0 and forward. See Reactive Streams Java Driver 5.0.1.

Note:

MongoDB client version less than 5.0.0 is not supported.

Parent topic: MongoDB

9.1.10.2.1 Set up Credential Store Entry to Detect Source Type

userid. The generic format for userid is as follows:

<dbtype>://<db-user>@<comma separated list of server

addresses>:<port> . The userid

value for MongoDB is any valid MongoDB clientURI without the password.

MongoDB Capture

alter credentialstore add user "mongodb+srv://user@127.0.0.1:27017" password db-passwd alias mongo

Note:

Ensure that the userid value is in double quotes.MongoDB Atlas

Example:

alter credentialstore add user "mongodb+srv://user@127.0.0.1:27017" password db-passwd alias mongo

Parent topic: Prerequisites to Setting up MongoDB

9.1.10.3 MongoDB Database Operations

Supported Operations

- INSERT

- UPDATE

- DELETE

Unsupported Operations

- CREATE collection

- RENAME collection

- DROP collection

Note:

MongoDB Capture does not include operations related to the admin, config, and local databases.Parent topic: MongoDB

9.1.10.4 Using Extract Initial Load

MongoDB Extract supports the standard initial load capability to extract source table data to Oracle GoldenGate trail files.

Initial load for MongoDB can be performed to synchronize tables, either as a prerequisite step to replicating changes or as a standalone function.Configuring the Initial Load

Initial Load Parameter file:-- ggsci> alter credentialstore add user mongodb://db-user@localhost:27017/admin password db-passwd alias mongo

EXTRACT LOAD

JVMOPTIONS CLASSPATH ggjava/ggjava.jar:/path/to/mongo-capture/libs/*

SOURCEISTABLE

SOURCEDB USERIDALIAS mongo

TABLE database.collection;adminclient> ADD EXTRACT load, SOURCEISTABLE

adminclient> START EXTRACT load

Parent topic: MongoDB

9.1.10.5 Using Change Data Capture Extract

Review the example .prm files from Oracle GoldenGate for Distributed

Applications and Analytics (GG for DAA) installation directory here:

AdapterExamples/big-data/mongodbcapture.

When adding the MongoDB Extract trail, you need to use EXTTRAIL to

create a local trail file.

RMTTRAIL

option.adminclient> ADD EXTRACT groupname, TRANLOG

adminclient> ADD EXTTRAIL trailprefix, EXTRACT groupname

Example:

adminclient> ADD EXTRACT mongo, TRANLOG

adminclient> ADD EXTTRAIL ./dirdat/z1, EXTRACT mongo

Parent topic: MongoDB

9.1.10.6 Positioning the Extract

MongoDB extract process allows us to position from EARLIEST, TIMESTAMP, EOF and LSN.

EARLIEST: Positions to the start of the Oplog for a given collection.

Syntax:

ADD EXTRACT groupname, TRANLOG, EARLIESTTIMESTAMP: Positions to a given time stamp. Token BEGIN can use either NOW to start from present time or with a given timestamp.

BEGIN {NOW | yyyy-mm-dd[ hh:mi:[ss[.cccccc]]]}

Syntax

ADD EXTRACT groupname, TRANLOG, BEGIN NOW

ADD EXTRACT groupname, TRANLOG, BEGIN ‘yyyy-mm-dd hh:mm:ss’

EOF: Positions to end of oplog.

Syntax

ADD EXTRACT groupname, TRANLOG, EOFLSN: Positions to a given LSN.

In MongoDB Capture, the Log Sequence Number (LSN) corresponds to the Operation Time in theoplog,

which is unique for each entry. This Operation Time can be represented in two formats:

as a timestamp with an increment (in the t.i format)

or as a 20-digit numerical value. For example, if the oplog's Operation Time is

"ts": {"$timestamp": {"t": 1733328879, "i": 2}}, the corresponding

LSN can be expressed as 1733328879.2 or

07444590848517341186.

The syntax for adding an extract is as follows:

For the timestamp.increment

format:

ADD EXTRACT groupname, TRANLOG, LSN "1733328879.2"

For the 20-digit LSN format:

ADD EXTRACT groupname, TRANLOG, LSN "07444590848517341186"

Parent topic: MongoDB

9.1.10.7 Security and Authentication

- Credential Store Integration

- MongoDB URI-Based Authentication

MongoDB uses a URI-style connection string that supports various authentication methods and options as query parameters. - SSL Configuration Setup

Parent topic: MongoDB

9.1.10.7.1 Credential Store Integration

- Eliminates the need to hard-code usernames and passwords in parameter files.

- Stores connection URIs securely using an alias.

- References credentials using the alias in all configuration.

- adminclient> add credentialstore

- adminclient> alter credentialstore add user

"mongodb://myUserAdmin@localhost:27017/admin?replicaSet=rs0" password MySecurePassword alias mongoNote:

The MongoDB URI must be enclosed in double quotes.

- adminclient> dblogin useridalias mongo

- adminclient> list tables tcust*

Use alias in parameter file

SOURCEDB USERIDALIAS mongo

Parent topic: Security and Authentication

9.1.10.7.2 MongoDB URI-Based Authentication

MongoDB uses a URI-style connection string that supports various authentication methods and options as query parameters.

9.1.10.7.2.1 Authentication Methods

- SCRAM-SHA-256 : Recommended password-based authentication

mechanism.

Example:

mongodb://username@host:27017/?authSource=admin&authMechanism=SCRAM-SHA-256 - SCRAM-SHA-1 : For backward compatibility.

Example:

mongodb://username@host:27017/?authSource=admin&authMechanism=SCRAM-SHA-1 - PLAIN (LDAP): Requires TLS.

Example:

mongodb://ldapUser@host:27017/?authMechanism=PLAIN&authSource=$external&tls=true - x.509 Certificate Authentication:

Example:

mongodb://host:27017/?authMechanism=MONGODB-X509&tls=true&tlsCertificateKeyFile=client.pem&tlsCAFile=ca.pemNote:

For more information on X509 configuration steps, see MongoDB Extract and Replicat Configuration.

Parent topic: MongoDB URI-Based Authentication

9.1.10.7.2.2 TLS/SSL Configuration

Note:

The MongoDB extract utilizes both the C driver and the Java driver of MongoDB. Therefore, it is essential to respect the tlsCAFile and tlsCertificateKeyFile parameters in the clientURL when establishing a DBConnection, as well as to include thekeystore/truststore details in the JVM bootoptions during

the extract process. For more information, see SSL Configuration Setup to generate the certificates required for

tls/mtls.

Parent topic: MongoDB URI-Based Authentication

9.1.10.7.2.2.1 Disable TLS (Not Recommended)

Example: mongodb://host:27017/?tls=false

Parent topic: TLS/SSL Configuration

9.1.10.7.2.2.2 Enable TLS

-

If server uses the CA-trusted certificate:

Example:

mongodb://host:27017/?tls=true

-

If server uses the self-signed certificate:

Example:

mongodb://host:27017/?tls=true&tlsAllowInvalidCertificates=trueor

Example:

mongodb://host:27017/?tls=true&tlsCAFile=/path/to/ca.pemNote:

The truststore information should be provided in the JVM bootoptions when used tlsCAFile in clientURI.

Parent topic: TLS/SSL Configuration

9.1.10.7.2.2.3 Mutual TLS (mTLS)

Performs the client certificate validation along with the server certificate validation.

mongodb://host:27017/?tls=true&tlsCAFile=/path/to/ca.pem&tlsCertificateKeyFile=/path/to/client.pemNote:

The truststore and keystore information should be provided in the JVM bootoptions.-

-Djavax.net.ssl.trustStore=/path/to/truststore.jks -

-Djavax.net.ssl.trustStorePassword=changeit -

-Djavax.net.ssl.keyStore=/path/to/keystore.jks -

-Djavax.net.ssl.keyStorePassword=changeit

Parent topic: TLS/SSL Configuration

9.1.10.7.3 SSL Configuration Setup

To configure SSL between the MongoDB instance and Oracle GoldenGate for Distributed Applications and Analytics MongoDB Capture, do the following:

openssl req -passout pass:password -new -x509 -days 3650 -extensions v3_ca -keyout

ca_private.pem -out ca.pem -subj

"/CN=CA/OU=GOLDENGATE/O=ORACLE/L=BANGALORE/ST=KA/C=IN"Create key and certificate signing requests (CSR) for client and all server nodes

openssl req -newkey rsa:4096 -nodes -out client.csr -keyout client.key -subj

'/CN=certName/OU=OGGBDCLIENT/O=ORACLE/L=BANGALORE/ST=AP/C=IN'

openssl req -newkey rsa:4096 -nodes -out server.csr -keyout server.key -subj

'/CN=slc13auo.us.oracle.com/OU=GOLDENGATE/O=ORACLE/L=BANGALORE/ST=TN/C=IN'Sign the certificate signing requests with CA

openssl x509 -passin pass:password -sha256 -req -days 365 -in client.csr -CA ca.pem -CAkey

ca_private.pem -CAcreateserial -out client-signed.crtopenssl x509 -passin pass:password -sha256 -req -days 365 -in server.csr -CA ca.pem -CAkey

ca_private.pem -CAcreateserial -out server-signed.crt -extensions v3_req -extfile

<(cat << EOF[ v3_req ]subjectAltName = @alt_names

[ alt_names ]

DNS.1 = 127.0.0.1

DNS.2 = localhost

DNS.3 = hostname

EOF)cat client-signed.crt client.key > client.pem

cat server-signed.crt server.key > server.pemCreate trust store and keystore

openssl pkcs12 -export -out server.pkcs12 -in server.pem

openssl pkcs12 -export -out client.pkcs12 -in client.pem

bash-4.2$ ls

ca.pem ca_private.pem client.csr client.pem server-signed.crt server.key server.pkcs12

ca.srl client-signed.crt client.key client.pkcs12 server.csr server.pem

Start instances of mongod with the following options:

--tlsMode requireTLS --tlsCertificateKeyFile ../opensslKeys/server.pem --tlsCAFile

../opensslKeys/ca.pem credentialstore connectionString

alter credentialstore add user

mongodb://myUserAdmin@localhost:27017/admin?ssl=true&tlsCertificateKeyFile=../mcopensslkeys/client.pem&tlsCertificateKeyFilePassword=password&tlsCAFile=../mcopensslkeys/ca.pem

password root alias mongoNote:

The Length ofconnectionString should not exceed 256.

For CDC Extract, add the key store and trust store as part of the JVM options.

JVM options

-Xms512m -Xmx4024m -Xss32m -Djavax.net.ssl.trustStore=../mcopensslkeys /server.pkcs12

-Djavax.net.ssl.trustStorePassword=password

-Djavax.net.ssl.keyStore =../mcopensslkeys/client.pkcs12

-Djavax.net.ssl.keyStorePassword=passwordParent topic: Security and Authentication

9.1.10.8 MongoDB Bidirectional Replication

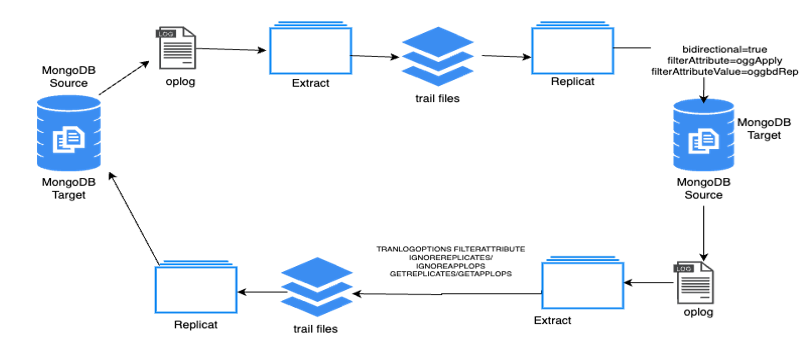

Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) has integration to capture changes from a MongoDB source database, and also apply the changes to a MongoDB target database. In bidirectional replication, Changes that are made to one source collection are replicated to target collection, and changes that are made to the second copy are replicated back to the first copy.

This topic explains the design to support bidirectional replication for

MongoDB.

Note:

MongoDB Version 6 or above is required to support bi-directional replication. With versions before 6.0, MongoDB bi-directional is not supported and it fails with the following error message: MONGODB-000XX MongoDB version should be 6 or greater to support bi-directional replication.

- Enabling Trandata

- Enabling MongoDB Bi-directional Replication

- Extracting from Target Replicat which is Bidirectionally Processed

- Troubleshooting

Parent topic: MongoDB

9.1.10.8.1 Enabling Trandata

Before starting the replicat process with bidirectional enabled, one should enable the trandata for the collection where the data is been replicated. By enabling the trandata on the collection before the start of the replicat process, will capture the before image of the operation with which an Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) extract process can identify if the document is processed by the GG for DAA or not.

Extract abends if trandata is not enabled on the collection that been used in the bidirectional enabled replicat process.

Command to Enable Trandata

Dblogin useridalias <aliasname>

“add trandata <schema>.<collectionname>”

Note:

The target collection should be available before the replicat process when executed with bidirectionally enabled.Parent topic: MongoDB Bidirectional Replication

9.1.10.8.2 Enabling MongoDB Bi-directional Replication

To enable MongoDB bi-directional replication, set

gg.handler.mongodb.bidirectional to true

(gg.handler.mongodb.bidirectional=true) in replicat properties.

When gg.handler.mongodb.bidirectional property is set to

true, replicat process adds filterAttribute and

filterAttributeValue key value pair to the document. filterAttribute

and filterAttributeValue is needed for loop-detection. Ensure that the

filterAttributeValue contain only ASCII characters [A-Za-z] and

numbers [0-9] with a Maximum length of 256 characters. If the document has the key-value

pair of filterAttribute and filterAttributeValue, then

it shows that the document is processed by Oracle GoldenGate for Distributed

Applications and Analytics (GG for DAA) replicat process.

When gg.handler.mongodb.bidirectional property is set to

true, replicat ingests the default value of

filterAttribute as oggApply and the default

filterAttributeValue as true if not specified

explicitly. You can enable MongoDB bi-directional replication with default settings.

For example: gg.handler.mongodb.bidirectional=true

{ "_id" : ObjectId("65544aa60b0a066d021ba508"), "CUST_CODE" : "test65", "name" : "hello

world", "cost" : 3000, "oggApply":"true"}

filterAttribute and

filterAttributeValue. For

example:gg.handler.mongodb.bidirectional=true

gg.handler.mongodb.filterAttribute=region

gg.handler.mongodb.filterAttributeValue=westcentral{ "_id" : ObjectId("65544aa60b0a066d021ba508"), "CUST_CODE" : "test65", "name" : "hello world", "cost" : 3000, "region":"westcentral"}Parent topic: MongoDB Bidirectional Replication

9.1.10.8.3 Extracting from Target Replicat which is Bidirectionally Processed

TRANLOGOPTIONS EXCLUDEFILTERATTRIBUTE can be used in extract parameters

to filter source MongoDB operations. TRANLOGOPTIONS

EXCLUDEFILTERATTRIBUTE is a value/ key pair. Default

EXCLUDEFILTERATTRIBUTE attribute name and value is oggApply and

true. Optionally, name and value can be set by user. User can mention multiple

TRANLOGOPTIONS EXCLUDEFILTERATTRIBUTE options with different key

value pairs.

This option may be used to avoid data looping in a bidirectional

configuration of MongoDB capture by specifying EXCLUDEFILTERATTRIBUTE

name with the value that was used by MongoDB Replicat.

Example 1

TRANLOGOPTIONS EXCLUDEFILTERATTRIBUTE filters attribute with

oggApply and value with true. If the source

document contains the specified EXCLUDEFILTERATTRIBUTE, the document

will be filtered and will not be extracted.

TRANLOGOPTIONS EXCLUDEFILTERATTRIBUTE

Filtered Sample Message:

{ "_id" : ObjectId("65544aa60b0a066d021ba508"), "CUST_CODE" : "test65", "name" : "hello world", "cost" : 3000, "oggApply":"true"} TRANLOGOPTIONS EXCLUDEFILTERATTRIBUTE parameter value should be in line

with source replicat's FILTERATTRIBUTE and

FILTERATTRIBUTEVALUE to defect the loop or decide to process/filter

the operations. If the source document contains the specified

FILTERATTRIBUTE, the document is identified as a replicated

operation.

Example 2

**

The following extract parameter filters the replicated operations marked with attribute region and value westcentral. And captures the application operations. Also, if there are other operations marked with a different attribute value, they will be extracted.

TRANLOGOPTIONS EXCLUDEFILTERATTRIBUTE region=westcentral

Filtered sample message:

{ "_id" : ObjectId("65544aa60b0a066d021ba508"), "CUST_CODE" : "test65", "name" :

"hello world", "cost" : 3000, "region":"westcentral"}

Extracted sample message:

{ "_id" : ObjectId("1881aa60bMKA66d021b1938"), "CUST_CODE" : "test38", "name" : "hello world", "cost" : 2000 } **

Note:

From version 23.4 onwards, the extract parameter FILTERATTRIBUTE is

renamed to EXCLUDEFILTERATTRIBUTE, the parameters

GETREPLICATES/IGNOREREPLICATE and

GETAPPLOPS/IGNOREAPPLOPS are deprecated. Usage of these

parameters results in abend of the extract process.

Parent topic: MongoDB Bidirectional Replication

9.1.10.8.4 Troubleshooting

- In bidirectional replication, If no before image is available for the delete

document then abend the process and error out.

Sample error

MONGODB-000XX No before image is available for collection [ <collection name> ] with the document [ <document> ]. - If MongoDB version used is less than 6, then

MONGODB-000XXMongoDB version should be 6 or greater to support bi-directional replication.

Parent topic: MongoDB Bidirectional Replication

9.1.10.9 Mongo DB Configuration Reference

The following properties are used with MongoDB change data capture.

| Properties | Required/Optional | Location | Default | Explanation |

|---|---|---|---|---|

OGGSOURCE <source> |

Required | GLOBALS file

Note: Starting from Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) release 23.4.0.0.0, this parameter will be deprecated. |

None |

The source database for CDC capture or database

queries. The valid value is |

|

|

Optional |

Extract Parameter file |

None | CLASSPATH: The classpath for the Java Virtual

Machine. You can include an asterisk (*) wildcard to match all JAR

files in any directory. Multiple paths should be delimited with a

colon (:) character. BOOTOPTIONS: The boot options

for the Java Virtual Machine. Multiple options are delimited by a

space character.

|

|

|

Optional |

GLOBALS file

Note: Starting from GG for DAA release 23.4.0.0.0, this parameter will be deprecated. |

None |

The boot options for the Java Virtual Machine. Multiple options are delimited by a space character. |

JVMCLASSPATH <classpath> |

Required |

GLOBALS file

Note: Starting from GG for DAA release 23..0,0.0.0, this parameter will be deprecated. |

None | The

classpath for the Java Virtual Machine. You can include an asterisk

(*) wildcard to match all JAR files in any directory. Multiple paths

should be delimited with a colon (:) character.

Example: |

SOURCEDB USERIDALIAS <alias

name> |

Required | Extract parameter (.prm) file | None | This parameter is used by the extract process for authentication in to the source MongoDB database. The alias name refers to the alias that should exist in Oracle Wallet. See Security and Authentication. |

ABEND_ON_DDL |

Optional | CDC Extract parameter (.prm) file | None | This is a default behaviour of MongoDB Capture

extract. On detection of CREATE collection,

RENAME collection, and DROP

collection, extract process will be abended.

|

NO_ABEND_ON_DDL |

Optional | CDC Extract parameter (.prm)

file

|

None |

On detection of |

ABEND_ON_DROP_DATABASE |

Optional | CDC Extract parameter (.prm)

file

|

None | This is a default behaviour of MongoDB Capture extract. On detection of Drop Database operation, extract process will be abended. |

NO_ABEND_ON_DROP_DATABASE |

Optional |

CDC Extract parameter (. |

None |

On detection of Drop Database operation, extract process will skip these operations and continue processing the next operation. |

BINARY_JSON_FORMAT |

Optional | prm | None |

When configured

When using When

|

TRANLOGOPTIONS

FETCHPARTIALJSON |

Optional | CDC Extract parameter (.prm)

file

|

None | On configuring tranlogoptions

FETCHPARTIALJSON, the extract process does a DB

lookup and fetches the full document for the given update operation.

See MongoDB Bidirectional Replication.

|

TRANLOGOPTIONS

READFROMCHANGESTREAM |

Optional | CDC Extract parameter (.prm)

file

|

None | On configuring TRANLOGOPTIONS

READFROMCHANGESTREAM, Capture monitor documents through

a Change Stream in MongoDB or

DocumentDB.

|

DBOPTIONS JSON_MODE

<CANONICAL/RELAXED> |

Optional | CDC Extract parameter (.prm)

file

|

CANONICAL | Specifies the JSON output format for MongoDB payload

data.

Valid values are:

|

Table Metadata

When BINARY_JSON_FORMAT is configured, the column metadata should

have data_type as 64, sub_data_type as 4, and JSON

as the Remarks.

Example:

2021/11/11 06:45:06.311.849 Metadata Len 143 RBA 1533 Table Name: MYTEST.TEST * 1)Name 2)Data Type 3)External Length 4)Fetch Offset 5)Scale 6)Level 7)Null 8)Bump if Odd 9)Internal Length 10)Binary Length 11)Table Length 12)Most Sig DT 13)Least Sig DT 14)High Precision 15)Low Precision 16)Elementary Item 17)Occurs 18)Key Column 19)Sub DataType 20)Native DataType 21)Character Set 22)Character Length 23)LOB Type 24)Partial Type 25)Remarks * TDR version: 11 Definition for table MYTEST.TEST Record Length: 16010 Columns: 2 id 64 8000 0 0 0 0 0 8000 8000 0 0 0 0 0 1 0 1 4 -4 -1 0 0 0 JSON payload 64 8000 8005 0 0 1 0 8000 8000 0 0 0 0 0 1 0 0 4 -4 -1 0 1 0 JSON End of definition s

When BINARY_JSON_FORMAT is not configured, the column metadata

should have data_type as 64, sub_data_type as 0,

and JSON as the Remarks.

Example

2021/11/11 06:45:06.311.849 Metadata Len 143 RBA 1533 Table Name: MYTEST.TEST * 1)Name 2)Data Type 3)External Length 4)Fetch Offset 5)Scale 6)Level 7)Null 8)Bump if Odd 9)Internal Length 10)Binary Length 11)Table Length 12)Most Sig DT 13)Least Sig DT 14)High Precision 15)Low Precision 16)Elementary Item 17)Occurs 18)Key Column 19)Sub DataType 20)Native DataType 21)Character Set 22)Character Length 23)LOB Type 24)Partial Type 25)Remarks * TDR version: 11 Definition for table MYTEST.TEST Record Length: 16010 Columns: 2 id 64 8000 0 0 0 0 0 8000 8000 0 0 0 0 0 1 0 1 0 -4 -1 0 0 0 JSON payload 64 8000 8005 0 0 1 0 8000 8000 0 0 0 0 0 1 0 0 0 -4 -1 0 1 0 JSON End of definition

Parent topic: MongoDB

9.1.10.10 Columns in Trail File

- Column 0 as ‘

_id’, which identifies a document in a collection. - Column 1 as ‘

payload’, which holds all the columns (fields of a collection).

Based on property BINARY_JSON_FORMAT, columns are presented as a BSON

format or Extended JSON format. When BINARY_JSON_FORMAT is configured,

the captured documents are represented in the BSON format as follows.

2021/10/26 06:21:33.000.000 Insert Len 329 RBA 1921 Name: MYTEST.TEST (TDR Index: 1) After Image: Partition x0c G s 0000 1a00 0000 1600 1600 0000 075f 6964 0061 7800 | ..............ax. ddc2 d894 d2f5 fca4 9e00 0100 2701 0000 2301 2301 | ............'...#.#. 0000 075f 6964 0061 7800 ddc2 d894 d2f5 fca4 9e02 | ..._id.ax........... 4355 5354 5f43 4f44 4500 0500 0000 7361 6162 0002 | CUST_CODE.....saab.. 6e61 6d65 0005 0000 006a 6f68 6e00 026c 6173 746e | name.....john..lastn 616d 6500 0500 0000 7769 6c6c 0003 6164 6472 6573 | ame.....will..addres 7365 7300 8300 0000 0373 7472 6565 7464 6574 6169 | ses......streetdetai Column 0 (0x0000), Length 26 (0x001a) id. 0000 1600 1600 0000 075f 6964 0061 7800 ddc2 d894 | ..........ax..... d2f5 fca4 9e00 | ...... Column 1 (0x0001), Length 295 (0x0127) payload. 0000 2301 2301 0000 075f 6964 0061 7800 ddc2 d894 | ..#.#.....ax..... d2f5 fca4 9e02 4355 5354 5f43 4f44 4500 0500 0000 | ......CUST_CODE..... 7361 6162 0002 6e61 6d65 0005 0000 006a 6f68 6e00 | saab..name.....john. 026c 6173 746e 616d 6500 0500 0000 7769 6c6c 0003 | .lastname.....will.. 6164 6472 6573 7365 7300 8300 0000 0373 7472 6565 | addresses......stree 7464 6574 6169 6c73 006f 0000 0003 6172 6561 0020 | tdetails.o....area. 0000 0003 5374 7265 6574 0013 0000 0001 6c61 6e65 | ....Street......lane 0000 0000 0000 005e 4000 0003 666c 6174 6465 7461 | .......^@...flatdeta 696c 7300 3700 0000 0166 6c61 746e 6f00 0000 0000 | ils.7....flatno..... 0040 6940 0270 6c6f 746e 6f00 0300 0000 3262 0002 | .@i@.plotno.....2b.. 6c61 6e65 0009 0000 0032 6e64 7068 6173 6500 0000 | lane.....2ndphase... 0003 7072 6f76 6973 696f 6e00 3000 0000 0373 7461 | ..provision.0....sta 7465 0024 0000 0003 6b61 001b 0000 0002 6b61 726e | te.$....ka......karn 6174 616b 6100 0700 0000 3537 3031 3032 0000 0000 | ataka.....570102.... 0263 6974 7900 0400 0000 626c 7200 00 | .city.....blr..

When BINARY_JSON_FORMAT is not configured, the captured documents are

represented in the JSON format as follows:

2021/10/01 01:09:35.000.000 Insert Len 366 RBA 1711

Name: MYTEST.testarr (TDR Index: 1)

After Image: Partition x0c G s

0000 2700 0000 2300 7b22 246f 6964 223a 2236 3135 | ..'...#.{"$oid":"615

3663 3233 6633 3466 3061 3965 3661 3735 3536 3930 | 6c23f34f0a9e6a755690

6422 7d01 003f 0100 003b 017b 225f 6964 223a 207b | d"}..?...;.{"_id": {

2224 6f69 6422 3a20 2236 3135 3663 3233 6633 3466 | "$oid": "6156c23f34f

3061 3965 3661 3735 3536 3930 6422 7d2c 2022 4355 | 0a9e6a755690d"}, "CU

5354 5f43 4f44 4522 3a20 2265 6d70 3122 2c20 226e | ST_CODE": "emp1", "n

616d 6522 3a20 226a 6f68 6e22 2c20 226c 6173 746e | ame": "john", "lastn

Column 0 (0x0000), Length 39 (0x0027).

0000 2300 7b22 246f 6964 223a 2236 3135 3663 3233 | ..#.{"$oid":"6156c23

6633 3466 3061 3965 3661 3735 3536 3930 6422 7d | f34f0a9e6a755690d"}

Column 1 (0x0001), Length 319 (0x013f).

0000 3b01 7b22 5f69 6422 3a20 7b22 246f 6964 223a | ..;.{"_id": {"$oid":

2022 3631 3536 6332 3366 3334 6630 6139 6536 6137 | "6156c23f34f0a9e6a7

3535 3639 3064 227d 2c20 2243 5553 545f 434f 4445 | 55690d"}, "CUST_CODE

223a 2022 656d 7031 222c 2022 6e61 6d65 223a 2022 | ": "emp1", "name": "

6a6f 686e 222c 2022 6c61 7374 6e61 6d65 223a 2022 | john", "lastname": "

7769 6c6c 222c 2022 6164 6472 6573 7365 7322 3a20 | will", "addresses":

7b22 7374 7265 6574 6465 7461 696c 7322 3a20 7b22 | {"streetdetails": {"

6172 6561 223a 207b 2253 7472 6565 7422 3a20 7b22 | area": {"Street": {"

6c61 6e65 223a 2031 3230 2e30 7d7d 2c20 2266 6c61 | lane": 120.0}}, "fla

7464 6574 6169 6c73 223a 207b 2266 6c61 746e 6f22 | tdetails": {"flatno"

3a20 3230 322e 302c 2022 706c 6f74 6e6f 223a 2022 | : 202.0, "plotno": "

3262 222c 2022 6c61 6e65 223a 2022 326e 6470 6861 | 2b", "lane": "2ndpha

7365 227d 7d7d 2c20 2270 726f 7669 7369 6f6e 223a | se"}}}, "provision":

207b 2273 7461 7465 223a 207b 226b 6122 3a20 7b22 | {"state": {"ka": {"

6b61 726e 6174 616b 6122 3a20 2235 3730 3130 3222 | karnataka": "570102"

7d7d 7d2c 2022 6369 7479 223a 2022 626c 7222 7d | }}}, "city": "blr"}

Parent topic: MongoDB

9.1.10.11 Update Operation Behavior

MongoDB Capture extract reads change records from the capped collection

oplog.rs. For Update operations, the collection contains

information on the modified fields only. Thus the MongoDB Capture extract will write

only the modified fields in trail on Update operation as MongoDB native

$set and $unset documents.

Example trail record:

2022/02/22 01:26:52.000.000 FieldComp Len 243 RBA 1711

Name: lobt.MNGUPSRT (TDR Index: 1)

Min. Replicat version: 21.5, Min. GENERIC version: 0.0, Incompatible Replicat: Abend

Column 0 (0x0000), Length 55 (0x0037) id.

0000 3300 7b20 225f 6964 2220 3a20 7b20 2224 6f69 | ..3.{ "_id" : { "$oi

6422 203a 2022 3632 3133 3633 3064 3931 3561 6631 | d" : "6213630d915af1

3633 3265 6264 6461 3766 2220 7d20 7d | 632ebdda7f" } }

Column 1 (0x0001), Length 180 (0x00b4) payload.

0000 b000 7b22 2476 223a 207b 2224 6e75 6d62 6572 | ....{"$v": {"$number

496e 7422 3a20 2231 227d 2c20 2224 7365 7422 3a20 | Int": "1"}, "$set":

7b22 6c61 7374 4d6f 6469 6669 6564 223a 207b 2224 | {"lastModified": {"$

6461 7465 223a 207b 2224 6e75 6d62 6572 4c6f 6e67 | date": {"$numberLong

223a 2022 3136 3435 3532 3230 3132 3238 3522 7d7d | ": "1645522012285"}}

2c20 2273 697a 652e 756f 6d22 3a20 2263 6d22 2c20 | , "size.uom": "cm",

2273 7461 7475 7322 3a20 2250 227d 2c20 225f 6964 | "status": "P"}, "_id

223a 207b 2224 6f69 6422 3a20 2236 3231 3336 3330 | ": {"$oid": "6213630

6439 3135 6166 3136 3332 6562 6464 6137 6622 7d7d | d915af1632ebdda7f"}}

GGS tokens:

TokenID x50 'P' COLPROPERTY Info x01 Length 6

Column: 1, Property: 0x02, Remarks: Partial

TokenID x74 't' ORATAG Info x01 Length 0

TokenID x4c 'L' LOGCSN Info x00 Length 20

3037 3036 3734 3633 3232 3633 3838 3131 3935 3533 | 07067463226388119553

TokenID x36 '6' TRANID Info x00 Length 19

3730 3637 3436 3332 3236 3338 3831 3139 3535 33 | 7067463226388119553

Here The GGS token x50 with Remarks as Partial indicates that this record is a partial record.

On configuring tranlogoptions FETCHPARTIALJSON, the extract process does

a database lookup and fetches the full document for the given update operation.

Example

2022/02/22 01:26:59.000.000 FieldComp Len 377 RBA 2564

Name: lobt.MNGUPSRT (TDR Index: 1)

Column 0 (0x0000), Length 55 (0x0037) id.

0000 3300 7b20 225f 6964 2220 3a20 7b20 2224 6f69 | ..3.{ "_id" : { "$oi

6422 203a 2022 3632 3133 3633 3064 3931 3561 6631 | d" : "6213630d915af1

3633 3265 6264 6461 3764 2220 7d20 7d | 632ebdda7d" } }

Column 1 (0x0001), Length 314 (0x013a) payload.

0000 3601 7b20 225f 6964 2220 3a20 7b20 2224 6f69 | ..6.{ "_id" : { "$oi

6422 203a 2022 3632 3133 3633 3064 3931 3561 6631 | d" : "6213630d915af1

3633 3265 6264 6461 3764 2220 7d2c 2022 6974 656d | 632ebdda7d" }, "item

2220 3a20 226d 6f75 7365 7061 6422 2c20 2271 7479 | " : "mousepad", "qty

2220 3a20 7b20 2224 6e75 6d62 6572 446f 7562 6c65 | " : { "$numberDouble

2220 3a20 2232 352e 3022 207d 2c20 2273 697a 6522 | " : "25.0" }, "size"

203a 207b 2022 6822 203a 207b 2022 246e 756d 6265 | : { "h" : { "$numbe

7244 6f75 626c 6522 203a 2022 3139 2e30 2220 7d2c | rDouble" : "19.0" },

2022 7722 203a 207b 2022 246e 756d 6265 7244 6f75 | "w" : { "$numberDou

626c 6522 203a 2022 3232 2e38 3530 3030 3030 3030 | ble" : "22.850000000

3030 3030 3031 3432 3122 207d 2c20 2275 6f6d 2220 | 000001421" }, "uom"

3a20 2269 6e22 207d 2c20 2273 7461 7475 7322 203a | : "in" }, "status" :

2022 5022 2c20 226c 6173 744d 6f64 6966 6965 6422 | "P", "lastModified"

203a 207b 2022 2464 6174 6522 203a 207b 2022 246e | : { "$date" : { "$n

756d 6265 724c 6f6e 6722 203a 2022 3136 3435 3532 | umberLong" : "164552

3230 3139 3936 3122 207d 207d 207d | 2019961" } } }

GGS tokens:

TokenID x46 'F' FETCHEDDATA Info x01 Length 1

6 | Current by key

TokenID x4c 'L' LOGCSN Info x00 Length 20

3037 3036 3734 3633 3235 3634 3532 3839 3036 3236 | 07067463256452890626

TokenID x36 '6' TRANID Info x00 Length 19

3730 3637 3436 3332 3536 3435 3238 3930 3632 36 | 7067463256452890626

Here The GGS token x46 FETCHEDDATA indicates that this record is full

image for the update operation.

Parent topic: MongoDB

9.1.10.12 Oplog Size Recommendations

By default, MongoDB uses 5% of disk space as oplog size.

Oplog should be long enough to hold all transactions for the longest downtime you expect on a secondary. At a minimum, an oplog should be able to hold minimum 72 hours of operations or even a week’s work of operations.

Before mongod creates an oplog, you can specify its size with the

--oplogSize option.

After you have started a replica set member for the first time, use the

replSetResizeOplog administrative command to change the oplog size.

replSetResizeOplog enables you to resize the oplog dynamically

without restarting the mongod process.

Workloads Requiring Larger Oplog Size

If you can predict your replica set's workload to resemble one of the following patterns, then you might want to create an oplog that is larger than the default. Conversely, if your application predominantly performs reads with a minimal amount of write operations, a smaller oplog may be sufficient.

The following workloads might require a larger oplog size.

Updates to Multiple Documents at Once

The oplog must translate multi-updates into individual operations in order to maintain idempotency. This can use a great deal of oplog space without a corresponding increase in data size or disk use.

Deletions Equal the Same Amount of Data as Inserts

If you delete roughly the same amount of data as you insert, then the database doesn't grow significantly in disk use, but the size of the operation log can be quite large.

Significant Number of In-Place Updates

If a significant portion of the workload is updates that do not increase the size of the documents, then the database records a large number of operations but does not change the quantity of data on disk.

Parent topic: MongoDB

9.1.10.13 Troubleshooting

- Error : com.mongodb.MongoQueryException: Query

failed with error code 11600 and error message

'interrupted at shutdown' on server

localhost:27018.

The MongoDB server is killed or closed. Restart the Mongod instances and MongoDB capture.

- Error: java.lang.IllegalStateException: state

should be: open.

The active session is closed due to the session's idle time-out value getting exceeded. Increase the mongod instance's

logicalSessionTimeoutMinutesparamater value and restart the Mongod instances and MongoDB capture. - Error:Exception in thread "main"

com.mongodb.MongoQueryException: Query failed with

error code 136 and error message 'CollectionScan

died due to position in capped collection being

deleted. Last seen record id:

RecordId(6850088381712443337)' on server

localhost:27018 at

com.mongodb.internal.operation.QueryHelper.translateCommandException(QueryHelper.java:29)

This Exception happens when we have Fast writes to mongod and insufficient oplog size. See Oplog Size Recommendations.

- Error: not authorized on DB to execute

command

This error occurs due to insufficient privileges for the user. The user must be authenticated to run the specified command.

- Error: com.mongodb.MongoClientException: Sessions

are not supported by the MongoDB cluster to which this client is connected.

Ensure that the Replica Set is available and accessible. In case of MongoDB instance migration from a different version, set the property

FeatureCompatibilityVersionas follows:db.adminCommand( { setFeatureCompatibilityVersion: "3.6" } ){_} - Error: bson_append_array(): Invalid array detected. First element of

array parameter is not "0".

To suppress the given message in the Amazon DocumentDB capture report file, append the query param "&authMechanism=SCRAM-SHA-1" to the connection URL.

Parent topic: MongoDB

9.1.10.14 MongoDB Capture Client Dependencies

What are the dependencies for the MongoDB Capture to connect to MongoDB databases?

Oracle GoldenGate requires that you use the 5.x MongoDB reactive streams or higher integration with MongoDB. You can download this driver from: https://search.maven.org/artifact/org.mongodb/mongodb-driver-reactivestream

Parent topic: MongoDB

9.1.10.14.1 Reactive Streams Java Driver 5.0.1

The required dependent client libraries are: bson.jar,

mongodb-driver-core.jar,

mongodb-driver-reactivestreams.jar, and

reactive-streams.jar and reactor-core.jar

You must include the path to the MongoDB reactivestreams Java driver in the

gg.classpath property. To automatically download the Java driver

from the Maven central repository, add the following Maven coordinates of these third

party libraries that are needed to run MongoDB Change Data Capture in the

pom.xml file:

<!-- https://search.maven.org/artifact/org.mongodb/mongodb-driver-reactivestreams --> <dependency> <groupId>org.mongodb</groupId> <artifactId>mongodb-driver-reactivestreams</artifactId> <version>5.0.1</version> </dependency> <dependency> <groupId>org.mongodb</groupId> <artifactId>bson</artifactId> <version>5.0.1</version> </dependency> <dependency> <groupId>org.mongodb</groupId> <artifactId>mongodb-driver-core</artifactId> <version>5.0.1</version> </dependency> <dependency> <groupId>org.reactivestreams</groupId> <artifactId>reactive-streams</artifactId> <version>1.0.4</version> </dependency> <dependency> <groupId>io.projectreactor</groupId> <artifactId>reactor-core</artifactId> <version>3.5.0</version> </dependency>

The jars are as follows:

- bson-5.1.0.jar

- bson-record-codec-5.1.0.jar

- mongodb-driver-core-5.1.0.jar

- mongodb-driver-reactivestreams-5.1.0.jar

- reactive-streams-1.0.4.jar

- reactor-core-3.5.0.jar

Download version 5.1.0 from Maven central at: https://mvnrepository.com/artifact/org.mongodb/mongodb-driver-reactivestreams.

Parent topic: MongoDB Capture Client Dependencies