9.2.28 MongoDB

Learn how to use the MongoDB Handler, which can replicate transactional data from Oracle GoldenGate to a target MongoDB and Autonomous JSON databases (AJD and ATP) .

- Overview

- MongoDB Wire Protocol

- Supported Target Types

- Detailed Functionality

- Setting Up and Running the MongoDB Handler

- Security and Authentication

- Reviewing Sample Configurations

- MongoDB to AJD/ATP Migration

- Configuring an Initial Synchronization of Extract for a MongoDB Source Database using Precise Instantiation

- Delivery to Oracle JSON Collection Table (JCT)

- MongoDB Handler Client Dependencies

What are the dependencies for the MongoDB Handler to connect to MongoDB databases?

Parent topic: Target

9.2.28.1 Overview

Mongodb Handler can used to replicate data from RDMS as well as document based databases like Mongodb or Cassandra to the following target databases using MongoDB wire protocol

Parent topic: MongoDB

9.2.28.2 MongoDB Wire Protocol

The MongoDB Wire Protocol is a simple socket-based, request-response style protocol. Clients communicate with the database server through a regular TCP/IP socket, see https://docs.mongodb.com/manual/reference/mongodb-wire-protocol/.

Parent topic: MongoDB

9.2.28.3 Supported Target Types

-

MongoDB is an open-source document database that provides high performance, high availability, and automatic scaling, see https://www.mongodb.com/.

-

Oracle Autonomous JSON Database (AJD) is a cloud document database service that makes it simple to develop JSON-centric applications, see Autonomous JSON Database | Oracle.

-

Autonomous Database for transaction processing and mixed workloads (ATP) is a fully automated database service optimized to run transactional, analytical, and batch workloads concurrently, see Autonomous Transaction Processing | Oracle.

- On-premises Oracle Database 21c with Database API for MongoDB is also a supported target. See Installing Database API for MongoDB for any Oracle Database.

Parent topic: MongoDB

9.2.28.4 Detailed Functionality

The MongoDB Handler takes operations from the source trail file and creates corresponding documents in the target MongoDB or Autonomous databases (AJD and ATP).

A record in MongoDB is a Binary JSON (BSON) document, which is a data structure composed of field and value pairs. A BSON data structure is a binary representation of JSON documents. MongoDB documents are similar to JSON objects. The values of fields may include other documents, arrays, and arrays of documents.

A collection is a grouping of MongoDB or AJD/ATP documents and is the equivalent of an RDBMS table. In MongoDB or AJD/ATP databases, a collection holds collection of documents. Collections do not enforce a schema. MongoDB or AJD/ATP documents within a collection can have different fields.

9.2.28.4.1 Document Key Column

MongoDB or AJD/ATP databases require every document (row) to have a

column named _id whose value should be unique in a collection

(table). This is similar to a primary key for RDBMS tables. If a document does not

contain a top-level _id column during an insert, the MongoDB driver

adds this column.

The MongoDB Handler builds custom _id field values for

every document based on the primary key column values in the trail record. This

custom _id is built using all the key column values concatenated

by a : (colon) separator. For example:

KeyColValue1:KeyColValue2:KeyColValue3The MongoDB Handler enforces uniqueness based on these custom

_id values. This means that every record in the trail must be

unique based on the primary key columns values. Existence of non-unique records for

the same table results in a MongoDB Handler failure and in Replicat abending with a

duplicate key error.

The behavior of the _id field is:

-

By default, MongoDB creates a unique index on the column during the creation of a collection.

-

It is always the first column in a document.

-

It may contain values of any BSON data type except an array.

Parent topic: Detailed Functionality

9.2.28.4.2 Primary Key Update Operation

_id column to be

modified. This means a primary key update operation record in the trail needs special

handling. The MongoDB Handler converts a primary key update operation into a combination

of a DELETE (with old key) and an INSERT (with new

key). To perform the INSERT, a complete before-image of the update

operation in trail is recommended. You can generate the trail to populate a complete

before image for update operations by enabling the Oracle GoldenGate

GETUPDATEBEFORES and NOCOMPRESSUPDATES

parameters.

Parent topic: Detailed Functionality

9.2.28.4.3 MongoDB Trail Data Types

The MongoDB Handler supports delivery to the BSON data types as follows:

-

32-bit integer

-

64-bit integer

-

Double

-

Date

-

String

-

Binary data

Parent topic: Detailed Functionality

9.2.28.5 Setting Up and Running the MongoDB Handler

The following topics provide instructions for configuring the MongoDB Handler components and running the handler.

- Classpath Configuration

- MongoDB Handler Configuration

- Using Bulk Write

- Using Write Concern

- Using Three-Part Table Names

- Using Undo Handling

Parent topic: MongoDB

9.2.28.5.1 Classpath Configuration

The MongoDB Java Driver is required for Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) to connect and stream data to MongoDB. If the GG for DAA version is 21.7.0.0.0 and below, then you need to use 3.x (MongoDB Java Driver 3.12.8). If the GG for DAA version is 21.8.0.0.0 and above, then you need to use MongoDB Java Driver 4.6.0. The MongoDB Java Driver is not included in the GG for DAA product. You must download the driver from: mongo java driver.

Select mongo-java-driver and the version to download the recommended driver JAR file.

You must configure the gg.classpath variable to load the MongoDB

Java Driver JAR at runtime. For example:

gg.classpath=/home/mongodb/mongo-java-driver-3.12.8.jar.

GG for DAA supports the MongoDB Decimal 128 data type that was added in MongoDB 3.4.

Use of a MongoDB Java Driver prior to 3.12.8 results in a

ClassNotFound exception.

Parent topic: Setting Up and Running the MongoDB Handler

9.2.28.5.2 MongoDB Handler Configuration

You configure the MongoDB Handler operation using the properties file. These properties are located in the Java Adapter properties file (not in the Replicat properties file).

To enable the selection of the MongoDB Handler, you must first configure the handler type by specifying gg.handler.name.type=mongodb and the other MongoDB properties as follows:

Table 9-34 MongoDB Handler Configuration Properties

| Properties | Required/ Optional | Legal Values | Default | Explanation |

|---|---|---|---|---|

|

|

Required |

|

None |

Selects the MongoDB Handler for use with Replicat. |

|

|

Optional |

|

|

Set to Set to |

|

|

Optional |

|

None |

Sets the required write concern for all the operations performed by the MongoDB Handler. The property value is in JSON format and can only accept keys as |

|

|

Optional |

Valid MongoDB client URI |

None |

Sets the MongoDB client URI. A client URI can also be used to set other MongoDB

connection properties, such as authentication and

|

|

|

Optional |

|

|

When set to If the size of the document exceeds the MongoDB limit, an exception occurs and Replicat abends. |

|

|

Optional |

|

|

Set to |

|

|

Optional |

|

|

MongoDB version 3.4 added support for a 128-bit decimal data type called Decimal128.

This data type was needed since Oracle GoldenGate for

Distributed Applications and Analytics (GG for DAA) supports

both integer and decimal data types that do not fit into a

64-bit Long or Double. Setting this property to

|

|

|

Optional |

|

|

Set to Note: MongoDB added support for transactions in MongoDB version 4.0. Additionally, the minimum version of the MongoDB client driver is 3.10.1. |

Parent topic: Setting Up and Running the MongoDB Handler

9.2.28.5.3 Using Bulk Write

Bulk write is enabled by default. For better throughput, Oracle recommends that you use bulk write.

You can also enable bulk write by using the BulkWrite handler

property. To enable or disable bulk write use the

gg.handler.handler.BulkWrite=true | false.

The MongoDB Handler does not use the

gg.handler.handler.mode=

property that is used by Oracle GoldenGate for Distributed Applications and

Analytics (GG for DAA).

op | tx

With bulk write, the MongoDB Handler uses the GROUPTRANSOPS parameter to retrieve the batch size. The handler converts a batch of trail records to MongoDB documents, which are then written to the database in one request.

Parent topic: Setting Up and Running the MongoDB Handler

9.2.28.5.4 Using Write Concern

Write concern describes the level of acknowledgement that is requested from MongoDB for write operations to a standalone MongoDB, replica sets, and sharded-clusters. With sharded-clusters, Mongo instances pass the write concern on to the shards, see https://docs.mongodb.com/manual/reference/write-concern/.

Use the following configuration:

w: value

wtimeout: numberParent topic: Setting Up and Running the MongoDB Handler

9.2.28.5.5 Using Three-Part Table Names

An Oracle GoldenGate trail may have data for sources that support three-part table names, such as Catalog.Schema.Table. MongoDB only supports two-part names, such as DBName.Collection. To support the mapping of source three-part names to MongoDB two-part names, the source Catalog and Schema is concatenated with an underscore delimiter to construct the Mongo DBName.

For example, Catalog.Schema.Table would become catalog1_schema1.table1.

Parent topic: Setting Up and Running the MongoDB Handler

9.2.28.5.6 Using Undo Handling

The MongoDB Handler can recover from bulk write errors using a lightweight undo engine. This engine works differently from typical RDBMS undo engines, rather the best effort to assist you in error recovery. Error recovery works well when there are primary violations or any other bulk write error where the MongoDB database provides information about the point of failure through BulkWriteException.

Table 9-35Table 1 lists the requirements to make the best use of this functionality.

Table 9-35 Undo Handling Requirements

| Operation to Undo | Require Full Before Image in the Trail? |

|---|---|

|

|

No |

|

|

Yes |

|

|

No (before image of fields in the |

If there are errors during undo operations, it may be not possible to get the MongoDB collections to a consistent state. In this case, you must manually reconcile the data.

Parent topic: Setting Up and Running the MongoDB Handler

9.2.28.6 Security and Authentication

- MongoDB URI-Based Authentication

- TLS/SSL Configuration

MongoDB connections can be secured using TLS or mutual TLS (mTLS). - SSL Configuration Setup

- MongoDB Extract and Replicat Configuration

For secure connection to MongoDB cluster with X509 authentication and authorization enabled, the connection URI must include parameters for the authentication type, client certificate, and CA certificate. Also, we need to pass the client and server trust-store/key-store files and their passwords in JVM boot options.

Parent topic: MongoDB

9.2.28.6.1 MongoDB URI-Based Authentication

MongoDB uses a URI-style connection string that supports various authentication methods and options as query parameters.

Parent topic: Security and Authentication

9.2.28.6.1.1 Authentication Methods

-

SCRAM-SHA-256 : Recommended password-based authentication mechanism.

Example:

mongodb://username@host:27017/?authSource=admin&authMechanism=SCRAM-SHA-256 - SCRAM-SHA-1 : For backward compatibility.

Example:

mongodb://username@host:27017/?authSource=admin&authMechanism=SCRAM-SHA-1 - PLAIN (LDAP): Requires TLS.

Example:

mongodb://ldapUser@host:27017/?authMechanism=PLAIN&authSource=$external&tls=true - x.509 Certificate Authentication:

Example:

mongodb://host:27017/?authMechanism=MONGODB-X509&tls=true&tlsCertificateKeyFile=client.pem&tlsCAFile=ca.pemNote:

For more information on X509 configuration steps, see MongoDB Extract and Replicat Configuration.

Parent topic: MongoDB URI-Based Authentication

9.2.28.6.2 TLS/SSL Configuration

MongoDB connections can be secured using TLS or mutual TLS (mTLS).

Note:

For more information, see SSL Configuration Setup to generate the certificates required fortls/mtls.

Parent topic: Security and Authentication

9.2.28.6.2.1 Disable TLS (Not Recommended)

Example: mongodb://host:27017/?tls=false

Parent topic: TLS/SSL Configuration

9.2.28.6.2.2 Enable TLS

- If server uses the CA-trusted certificate:

Example:

mongodb://host:27017/?tls=true - If server uses the self-signed certificate:

Example:

mongodb://host:27017/?tls=true&tlsAllowInvalidCertificates=trueor

Example:mongodb://host:27017/?tls=trueNote:

The truststore information should be provided in the JVM bootoptions of replicat properties as-Djavax.net.ssl.trustStore=/path/to/truststore.jks -Djavax.net.ssl.trustStorePassword=changeit

Parent topic: TLS/SSL Configuration

9.2.28.6.2.3 Mutual TLS (mTLS)

Performs the client certificate validation along with the server certificate validation.

mongodb://host:27017/?tls=trueNote:

The truststore and keystore information should be provided in the JVM bootoptions.JVM bootoptions for mTLS:

-Djavax.net.ssl.trustStore=/path/to/truststore.jks

-Djavax.net.ssl.trustStorePassword=changeit

-Djavax.net.ssl.keyStore=/path/to/keystore.jks

-Djavax.net.ssl.keyStorePassword=changeit

Parent topic: TLS/SSL Configuration

9.2.28.6.3 SSL Configuration Setup

To configure SSL between the MongoDB instance and Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) MongoDB Handler, do the following:

openssl req -passout pass:password -new -x509 -days 3650 -extensions v3_ca -keyout

ca_private.pem -out ca.pem -subj

"/CN=CA/OU=GOLDENGATE/O=ORACLE/L=BANGALORE/ST=KA/C=IN"Create key and certificate signing requests (CSR) for client and all server nodes

openssl req -newkey rsa:4096 -nodes -out client.csr -keyout client.key -subj

'/CN=certName/OU=OGGBDCLIENT/O=ORACLE/L=BANGALORE/ST=AP/C=IN'

openssl req -newkey rsa:4096 -nodes -out server.csr -keyout server.key -subj

'/CN=slc13auo.us.oracle.com/OU=GOLDENGATE/O=ORACLE/L=BANGALORE/ST=TN/C=IN'

Sign the certificate signing requests with CA

openssl x509 -passin pass:password -sha256 -req -days 365 -in client.csr -CA ca.pem -CAkey

ca_private.pem -CAcreateserial -out client-signed.crt

openssl x509 -passin pass:password -sha256 -req -days 365 -in server.csr -CA ca.pem -CAkey

ca_private.pem -CAcreateserial -out server-signed.crt -extensions v3_req -extfile

<(cat << EOF[ v3_req ]subjectAltName = @alt_names

[ alt_names ]

DNS.1 = 127.0.0.1

DNS.2 = localhost

DNS.3 = hostname

EOF)

cat client-signed.crt client.key > client.pem

cat server-signed.crt server.key > server.pemopenssl pkcs12 -export -out server.pkcs12 -in server.pem

openssl pkcs12 -export -out client.pkcs12 -in client.pemNote:

Provide the password for server and client trust-store when prompted.bash-4.2$ ls

ca.pem ca_private.pem client.csr client.pem server-signed.crt server.key server.pkcs12

ca.srl client-signed.crt client.key client.pkcs12 server.csr server.pem

openssl x509 -in <pathToClientPEM> -inform PEM -subject -nameopt RFC2253

Example Output:

subject= CN=myName,OU=myOrgUnit,O=myOrg,L=myLocality,ST=myState,C=myCountry

Start MongoDB instance without TLS/SSL to create a user

Note:

The user name should match with the client certificate subject received for MongoDB and the role as admin.mongosh --bind_ip <hostname> --port <port>

db.getSiblingDB("$external").runCommand({

createUser: " CN=myName,OU=myOrgUnit,O=myOrg,L=myLocality,ST=myState,C=myCountry ",

roles: [{ role: "root", db: "admin" }]

});

Restart MongoDB cluster with TLS/SSL enabled

--tlsMode requireTLS --tlsCertificateKeyFile ../opensslKeys/server.pem --tlsCAFile

../opensslKeys/ca.pem

OR

Create a config file for each node of MongoDB cluster with relevant node information.

net:

bindIp: <hostname of node>

port: <port number of node>

tls:

mode: requireTLS

certificateKeyFile: /certificates/server.pem

CAFile: /certificates/ca.pem

clusterFile: /certificates/server.pem

replication:

replSetName: "rs0"

storage:

dbPath: /data/d1

security:

authorization: enabled

clusterAuthMode: x509

mongod –config node1.conf

mongod –config node2.conf

mongod –config node3.conf

Initialize replica Set for MongoDB cluster

mongosh "mongodb://host-name:port/?authMechanism=MONGODB-X509&tls=true" --tlsCertificateKeyFile=certs/client.pem --tlsCAFile=certs/ca.pem mongosh “mongodb://hostname:port/?authMechanism=MONGODBX509&tls=true&tlsCertificateKeyFile=/path/to/certificates/client.pem&tlsCAFile=/path/to/certificates/ca.pem”rs.initiate({ _id: "rs1", members:

[

{ id: 0, host: "localhost:27017" },

{ id: 1, host: "localhost:27018" },

{ id: 2, host: "localhost:27019" }

] });

Parent topic: Security and Authentication

9.2.28.6.4 MongoDB Extract and Replicat Configuration

For secure connection to MongoDB cluster with X509 authentication and authorization enabled, the connection URI must include parameters for the authentication type, client certificate, and CA certificate. Also, we need to pass the client and server trust-store/key-store files and their passwords in JVM boot options.

Connection URI format

mongodb://hostname:port/?authMechanism=MONGODBX509&tls=true&tlsCertificateKeyFile=/path/to/certificates/client.pem&tlsCAFile=/path/to/certificates/ca.pem

Jvm.bootoptions= -Xms512m -Xmx4024m -Xss32m -Djavax.net.ssl.trustStore=../mcopensslkeys /server.pkcs12 -Djavax.net.ssl.trustStorePassword=<trust-store password> -Djavax.net.ssl.keyStore=../mcopensslkeys/client.pkcs12 -Djavax.net.ssl.keyStorePassword=<key-store password>

add credentialstore user mongodb://hostname:port/?authMechanism=MONGODBX509&tls=true&tlsCertificateKeyFile=/path/to/certificates/client.pem&tlsCAFile=/path/to/certificates/ca.pem password root alias mongo

alter credentialstrore add user mongodb://hostname:port/?authMechanism=MONGODBX509&tls=true&tlsCertificateKeyFile=/path/to/certificates/client.pem&tlsCAFile=/path/to/certificates/ca.pem password root alias mongo

Note:

The length of connectionString should not exceed 256.Extract configuration

EXTRACT emongo

EXTTRAIL mn

SOURCEDB USERIDALIAS mongo DOMAIN OracleGoldenGate

JVMOPTIONS BOOTOPTIONS -Xms512m -Xmx4024m -Xss32m -Djavax.net.ssl.trustStore=/certs/server.pkcs12 -Djavax.net.ssl.trustStorePassword=pass -Djavax.net.ssl.keyStore=/certs/client.pkcs12 -Djavax.net.ssl.keyStorePassword=pass CLASSPATH $OGG_HOME/ggjava/ggjava.jar:/DependencyDownloader/dependencies/mongodb_capture_5.0.1/*

no_abend_on_ddl

TABLE srcDB.*;

Replicat configuration

gg.handlerlist=mongodb

gg.handler.mongodb.type=mongodb

gg.handler.mongodb.clientURI=mongodb://hostname:port/?authMechanism=MONGODBX509&tls=true&tlsCertificateKeyFile=/path/to/certificates/client.pem&tlsCAFile=/path/to/certificates/ca.pem

gg.classpath=/DependencyDownloader/dependencies/mongodb_5.0.1/*

jvm.bootoptions=-Xms512m -Xmx4024m -Xss32m -Djavax.net.ssl.trustStore=/tmp/certs/server.pkcs12 -Djavax.net.ssl.trustStorePassword=pass -Djavax.net.ssl.keyStore=/tmp/certs/client.pkcs12 -Djavax.net.ssl.keyStorePassword=pass

Parent topic: Security and Authentication

9.2.28.7 Reviewing Sample Configurations

Basic Configuration

The following is a sample configuration for the MongoDB Handler from the Java adapter properties file:

gg.handlerlist=mongodb gg.handler.mongodb.type=mongodb #The following handler properties are optional. #Refer to the Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) documentation #for details about the configuration. #gg.handler.mongodb.clientURI=mongodb://localhost:27017/ #gg.handler.mongodb.WriteConcern={w:value, wtimeout: number } #gg.handler.mongodb.BulkWrite=false #gg.handler.mongodb.CheckMaxRowSizeLimit=true goldengate.userexit.timestamp=utc goldengate.userexit.writers=javawriter javawriter.stats.display=TRUE javawriter.stats.full=TRUE gg.log=log4j gg.log.level=INFO gg.report.time=30sec #Path to MongoDB Java driver. # maven co-ordinates # <dependency> # <groupId>org.mongodb</groupId> # <artifactId>mongo-java-driver</artifactId> # <version>3.10.1</version> # </dependency> gg.classpath=/path/to/mongodb/java/driver/mongo-java-driver-3.10.1.jar javawriter.bootoptions=-Xmx512m -Xms32m -Djava.class.path=.:ggjava/ggjava.jar:./dirprm

Oracle or MongDB Database Source to MongoDB, AJD, and ATP Target

You can map an Oracle or MongDB Database source table name in uppercase to a table in MongoDB that is in lowercase. This applies to both table names and schemas. There are two methods that you can use:

- Create a Data Pump

-

You can create a data pump before the Replicat, which translates names to lowercase. Then you configure a MongoDB Replicat to use the output from the pump:

extract pmp exttrail ./dirdat/le map RAMOWER.EKKN, target "ram"."ekkn"; - Convert When Replicating

-

You can convert table column names to lowercase when replicating to the MongoDB table by adding this parameter to your MongoDB properties file:

gg.schema.normalize=lowercase

Parent topic: MongoDB

9.2.28.8 MongoDB to AJD/ATP Migration

Parent topic: MongoDB

9.2.28.8.1 Overview

Oracle Autonomous JSON Database (AJD) and Autonomous Database for transaction processing also uses wire protocol to connect. Wire protocol has the same MongoDB CRUD APIs.

Parent topic: MongoDB to AJD/ATP Migration

9.2.28.8.2 Configuring MongoDB handler to Write to AJD/ATP

Basic configuration remains the same including optional properties mentioned in this chapter.

The handler uses same protocol (mongodb wire protocol) and same driver jar for Autonomous databases as that of mongodb for performing all operation in target agnostic manner for performing the replication. The properties can also be used for any of the supported targets.

gg.handlerlist=mongodb gg.handler.mongodb.type=mongodb #URL mentioned below should be an AJD instance URL gg.handler.mongodb.clientURI=mongodb://[username]:[password]@[url]?authSource=$external&authMechanism=PLAIN&ssl=true #Path to MongoDB Java driver. Maven co-ordinates # <dependency> # <groupId>org.mongodb</groupId> # <artifactId>mongo-java-driver</artifactId> # <version>3.10.1</version> # </dependency> gg.classpath=/path/to/mongodb/java/driver/mongo-java-driver-3.10.1.jar javawriter.bootoptions=-Xmx512m -Xms32m -Djava.class.path=.:ggjava/ggjava.jar:./dirprm

Parent topic: MongoDB to AJD/ATP Migration

9.2.28.8.3 Steps for Migration

To migrate from MongoDB to AJD, first it is required to run initial load. Initial load comprises inserts operations only. After running initial load, start CDC which keeps the source and target database synchronized.

- Start CDC extract and generate trails. Do not start replicat to consume these trail files.

- Start Initial load extract and wait for initial load to complete.

- Create a new replicat to consume the initial load trails generated in Step 2. Wait for completion and then stop replicat.

- Create a new replicate to consume the CDC trails. Configure this replicat to use

HANDLECOLLISIONSand then start replicat. - Wait for the CDC replicat (Step 4) to consume all the trails, check replicat lag, and replicat RBA to ensure that the CDC replicat has caught up. At this point, the source and target databases should be in sync.

- Stop the CDC replicat, remove

HANDLECOLLISIONSparameter, and then restart the CDC replicat.

Parent topic: MongoDB to AJD/ATP Migration

9.2.28.8.4 Best Practices

- Before running CDC, ensure to run initial load, which loads the initial data using insert operations.

- Use bulk mode for running mongoDB handler in order to achieve better throughput.

- Enable handle-collision while migration to allow replicat to handle any collision error automatically.

- In order to insert missing update, ensure to add the

INSERTMISSINGUPDATESproperty in the.prmfile.

Parent topic: MongoDB to AJD/ATP Migration

9.2.28.9 Configuring an Initial Synchronization of Extract for a MongoDB Source Database using Precise Instantiation

This article how to perform an initial synchronization of data from a MongoDB source database to a MongoDB/ORDS target using the Precise Instantiation method. This approach eliminates need for initial load enhancing performance and ensures that no collisions occur in the target Replicat, optimizing performance by eliminating the need for collision handling, which can degrade performance.

This precise instantiation approach involves taking a snapshoof the MongoDB source database using the MongoDB dump utility, and synchronizing the first operation of this initial dump with the starting position of Change Data Capture (CDC) on the Extract side. This process ensures no data loss or duplication between the dumped data and the subsequent CDC trail generated by the Extract.

Parent topic: MongoDB

9.2.28.9.1 Overview of the Synchronization Process

- MongoDB Dump Utility: The mongodump utility is used to create a

snapshot of the source MongoDB database. It can dump documents, metadata, and index

definitions to a binary archive. When the

--oplogoption is enabled, it also writes an entry in theoplog.bsonfile, capturing all operations (including any that occur during the dump) with their timestamps. - Analyzing the Oplog: The

oplog.bsonfile contains a series of operations and their timestamps. This file is analyzed to identify the first and last operation during the dump. If no operations occurred during the dump process, theoplog.bsoncontains only anoopentry. The timestamps for the first and last operations (or the firstnoopentry if no operations occurred) are critical for synchronizing the CDC Extract. - MongoDB Restore Utility: After the dump is completed, the mongorestore

utility is used to restore the data, metadata, and index definitions to the target

MongoDB instance. The

--nsIncludeoption allows for selective restoration of specific collections on target. - CDC Extract and Replicat: The CDC Extract is started from the first operation

timestamp noted in the

oplog.bsonfile. The Replicat is configured to apply changes from the trail file, starting from the last operation timestamp from theoplog.bson, configured in theoplogReplayLastLsnproperty ensuring no need for handlecollision.

These steps ensure that no operation is missed and avoid any duplication on target.

9.2.28.9.2 Prerequisites

Ensure the following:

- MongoDB source version is 5 or higher.

- Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) 23.7 or above is installed and configured for both the source MongoDB and target database instances.

- The MongoDB Database Tools (including mongodump and mongorestore) are installed, and the respective directory paths are added to the path environment variable.

9.2.28.9.3 Step-by-Step Instructions

- Run MongoDB Dump Utility. Run the

mongodumpcommand with the--oplogoption to create a snapshot of the source MongoDB database:$ ./bin/mongodump --uri="mongodb://localhost:27021" --oplog -v Output: 2025-02-28T07:24:53.260+0000 getting most recent oplog timestamp 2025-02-28T07:24:53.268+0000 writing admin.system.version to dump/admin/system.version.bson 2025-02-28T07:24:53.269+0000 done dumping admin.system.version (1 document) 2025-02-28T07:24:53.270+0000 dumping up to 4 collections in parallel 2025-02-28T07:24:53.271+0000 writing testDB.coll1 to dump/testDB/coll1.bson 2025-02-28T07:24:53.272+0000 writing testDB.coll2 to dump/testDB/coll2.bson 2025-02-28T07:24:53.272+0000 writing testDB.coll3 to dump/testDB/coll3.bson 2025-02-28T07:24:53.303+0000 done dumping testDB.coll3 (10000 documents) 2025-02-28T07:24:53.313+0000 done dumping testDB.coll1 (10000 documents) 2025-02-28T07:24:53.326+0000 done dumping testDB.coll2 (10000 documents) 2025-02-28T07:24:53.328+0000 writing captured oplog to 2025-02-28T07:24:53.328+0000 dumped 7 oplog entries

This generates a dump folder containing the binary archive data file in the following:dump/database-name/collection-name/collection-name.bsonfor all databases and collections and anoplog.bsondirectly under dump folder. To inspect the contents of theoplog.bsonfile (which is in binary format and not human readable), you can convert it to JSON using the bsondump utility:$ ./bin/bsondump --pretty --outFile /path/to/oplog.json dump/oplog.bson Output: 2025-02-28T07:25:03.346+0000 7 objects found

- Extract Timestamps Using

OplogLSN.sh.Run the

OplogLSN.shscript to extract the first and last operation timestamps from theoplog.bsonfile by providing the path tooplog.bsonfile as an argument as follows:$./oplogLSN.sh /path/to/dump/oplog.bsonThe output provides the timestamps of the first and last operation (or the first

Output:noopif no operation occurred during the dump process)2025-02-27T13:53:00.885+0000 1 objects found First LSN: 1740663867.1 Last LSN: 1740663946.211

- Inspect Oplog Entries.

If there were any incoming operations during the dump, then the

oplog.bsonfile contains entries for those operations, each with a timestamp. You can useOplogLSN.shto capture the first and last operation timestamps OR convert it to JSON file for manual inspection, as shown in the previous step. - Run MongoDB Restore Utility.

After you have the necessary timestamps, use the mongorestore utility to restore the selected collections from the dump to the target MongoDB instance:

$ ./mongorestore --uri="mongodb://localhost:27021" --nsInclude=testDB.coll1 --nsInclude=testDB.coll2 /path/to/dump -v Output: 2025-02-28T07:26:08.760+0000 using write concern: &{majority <nil> 0s} 2025-02-28T07:26:08.785+0000 using default 'dump' directory 2025-02-28T07:26:08.785+0000 preparing collections to restore from 2025-02-28T07:26:08.785+0000 found collection admin.system.version bson to restore to admin.system.version 2025-02-28T07:26:08.785+0000 found collection metadata from admin.system.version to restore to admin.system.version 2025-02-28T07:26:08.785+0000 don't know what to do with file "dump/oplog.json", skipping... 2025-02-28T07:26:08.785+0000 found collection testDB.coll1 bson to restore to testDB.coll1 2025-02-28T07:26:08.785+0000 found collection metadata from testDB.coll1 to restore to testDB.coll1 2025-02-28T07:26:08.785+0000 found collection testDB.coll2 bson to restore to testDB.coll2 2025-02-28T07:26:08.785+0000 found collection metadata from testDB.coll2 to restore to testDB.coll2 2025-02-28T07:26:08.785+0000 reading metadata for testDB.coll1 from dump/testDB/coll1.metadata.json 2025-02-28T07:26:08.785+0000 reading metadata for testDB.coll2 from dump/testDB/coll2.metadata.json 2025-02-28T07:26:08.786+0000 creating collection testDB.coll1 with no metadata 2025-02-28T07:26:08.791+0000 creating collection testDB.coll2 with no metadata 2025-02-28T07:26:08.827+0000 restoring testDB.coll1 from dump/testDB/coll1.bson 2025-02-28T07:26:08.843+0000 restoring testDB.coll2 from dump/testDB/coll2.bson 2025-02-28T07:26:09.144+0000 finished restoring testDB.coll1 (10000 documents, 0 failures) 2025-02-28T07:26:09.204+0000 finished restoring testDB.coll2 (10000 documents, 0 failures) 2025-02-28T07:26:09.204+0000 no indexes to restore for collection testDB.coll1 2025-02-28T07:26:09.204+0000 no indexes to restore for collection testDB.coll2 2025-02-28T07:26:09.204+0000 20000 document(s) restored successfully. 0 document(s) failed to restore.This command restores the data, metadata, and index definitions for the specified collection (for Example: coll1 and coll2 in database testDB as shown above) to the target MongoDB/ORDS instance.

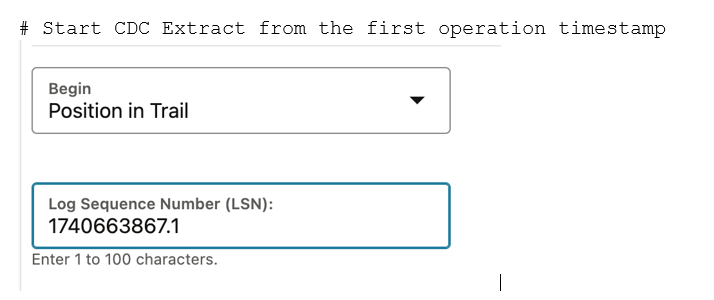

- Start MongoDB CDC Extract.

Start the MongoDB CDC Extract process from the first operation timestamp (LSN position) extracted from the

oplog.bsonfile. This will ensure the CDC Extract begins capturing operations that occur after the dump process started.Figure 9-1 Start MongoDB CDC Extract

- Configure MongoDB Replicat.

Configure the MongoDB Replicat with the generated CDC trail file and set the

oplogReplayLastLsnproperty to the last operation timestamp from theoplog.bsonfile. This ensures the Replicat runs in oplog-replay mode, avoiding any collisions. Once the last timestamp is processed, it continues in normal mode.# Set the oplogReplayLastLsn property in Replicat configuration. Either provide the last timestamp or just the path to oplog.bson file (and it figures out the last timestamp automatically). gg.handler.mongodb.oplogReplayLastLsn=1740663946.211

This configuration guarantees precise initiation, with no data loss or duplication, and eliminates the need for costly collision handling in the Replicat.

Note:

Clean-up before mongo-dump: The existing dump folder created inconsistent state of data at the time of mongo restore. Ensure that the exisiting dump folder is cleaned up before taking a new dump. It is very important to cleanup existing dump folder by deleting it and it's contents before taking a new dump.

MongoDB restore: Multiple collections of a database can be replicated using

multiple --nsInclude option in mongorestore command. However, the

ORDS multiple databases cannot be restored using mulitple

--nsIncludeMultiple restore commands are required, one for each

database need to be restored.

Recommended version of mongodb-database-tools is 100.10.0 or below. Latest version is not stable yet.

9.2.28.10 Delivery to Oracle JSON Collection Table (JCT)

This topic outlines the steps for installing Oracle Database 23c Free, configuring the environment, creating a Pluggable Database (PDB) for Oracle REST Data Services (ORDS) integration, installing and setting up ORDS and use that to connect to the Oracle Database using Oracle Database MongoDB API using Oracle GoldenGate for Distributed Applications and Analytics MongoDB Handler.

Note:

You can also capture from MongoDB source and deliver to Oracle JSON Collection Table (JCT) and Oracle JSON Duality View (JDV),This topic contains the following:

- Install Oracle Database 23c Free

- Connect to Oracle Database

- Create a New Pluggable Database (PDB)

- Create a User for ORDS

- Generate SSL Certificates

- Oracle REST Data Services (ORDS) Installation and Setup

- MongoDB Handler Configuration and Setup

- Error Handling

Parent topic: MongoDB

9.2.28.10.1 Install Oracle Database 23c Free

- Go to the official Oracle download page: Oracle Database Free 23c (https://www.oracle.com/in/database/free/get-started/).

- Download the RPM package

oracle-database-free-23c-1.0-1.el8.x86_64.rpmfor Oracle Linux 8 (OL8) machines. - Install the RPM Package:

- Run the following command to install Oracle Database Free

sudo dnf install -y oracle-database-free*Expected OutputPackage: oracle-database-free-23ai Version: 1.0-1 Architecture: x86_64 Repository: @commandline Size: 1.3 GB

- Run the following command to install Oracle Database Free

- Configure Oracle Database: After installation, configure the database with:

sudo /etc/init.d/oracle-free-23ai configure. You will be prompted to enter passwords forSYS,SYSTEM, andPDBADMINusers. Follow the on-screen instructions to complete configuration.

Parent topic: Delivery to Oracle JSON Collection Table (JCT)

9.2.28.10.2 Connect to Oracle Database

- Set Environment Variable:

export ORACLE_HOME=/opt/oracle/product/23ai/dbhomeFree/. - Verify Installation: Connect to the Oracle Database to ensure

installation is successful:

/opt/oracle/product/23ai/dbhomeFree/bin/sqlplus sys/Admin01@localhost:1521/FREE as sysdba.

Parent topic: Delivery to Oracle JSON Collection Table (JCT)

9.2.28.10.3 Create a New Pluggable Database (PDB)

- Log in to SQL*Plus as

SYSDBA:/opt/oracle/product/23ai/dbhomeFree/bin/sqlplus sys/Admin01@localhost:1521/FREE as sysdba. - Check Current Container and

PDBs:

show con_name; show pdbs; Expected Output: CON_NAME ------------------------------ CDB$ROOT CON_ID CON_NAME OPEN MODE RESTRICTED ------ ------------ ---------- ---------- 2 PDB$SEED READ ONLY NO 3 FREEPDB1 READ WRITE NO

- Create a New PDB for ORDS:

CREATE PLUGGABLE DATABASE pdbords ADMIN USER pdbordsadmin IDENTIFIED BY Admin01 FILE_NAME_CONVERT = ('/opt/oracle/oradata/FREE/pdbseed/', '/scratch/oracle/pdbseed_ords/');Note:

Precreate directory/scratch/oracle/pdbseed_ords/ - Open the New PDB and verify:

ALTER PLUGGABLE DATABASE pdbords OPEN;SHOW pdbs;Expected Output:CON_ID CON_NAME OPEN MODE RESTRICTED ------ ------------ ---------- ---------- 2 PDB$SEED READ ONLY NO 3 FREEPDB1 READ WRITE NO 5 PDBORDS READ WRITE NO

- Set Container to

PDBORDS:ALTER SESSION SET CONTAINER = pdbords; SHOW con_name;

Parent topic: Delivery to Oracle JSON Collection Table (JCT)

9.2.28.10.4 Create a User for ORDS

- Create ORDS

User:

CREATE USER ordsuser IDENTIFIED BY Admin01; GRANT CONNECT, RESOURCE, DBA TO ordsuser;

This user will be used for MongoDB integration through ORDS.

- Connect as the New

User:

/opt/oracle/product/23ai/dbhomeFree/bin/sqlplus ordsuser/Admin01@localhost:1521/PDBORDS

Parent topic: Delivery to Oracle JSON Collection Table (JCT)

9.2.28.10.5 Generate SSL Certificates

- Create a directory for certificates:

mkdir certs cd certs/

- Generate the CA's Private Key. The following command will generate

a private key for your Certificate Authority (CA). Remember the password used

here, as it will be needed to sign other

certificates.

openssl genrsa -aes256 -out ca-key.pem 4096

- Create the CA's Public Certificate

Generate a self-signed public certificate for your CA, which acts as the Certificate Authority.

openssl req -new -x509 -sha256 -days 365 -key ca-key.pem -out ca.pem

- View the CA Certificate’s Details. Verify the details of the CA

certificate with the following command:

openssl x509 -in ca.pem -text

- Generate the Client's Private KeyCreate a private key for the

client:

openssl genrsa -out client-key.pem 4096

- Create a Certificate Signing Request (CSR)Generate a CSR for the client

certificate. Make sure the Common Name (CN) matches the hostname where the

certificate will be

used.

openssl req -new -sha256 -subj "/CN=oraclelinux8" -key client-key.pem -out client.csr

- Generate the Client Certificate Using the CA. Add the IP addresses and DNS names

that match your current environment to extfile.cnf. Ensure they are consistent

with the hostname details in your hosts file.

Hosts File Example:

127.0.0.1 localhost 100.01.01.01 phoenix.dev3sub2phx.oraclevcn.com

Create extfile.cnf:

echo "subjectAltName=DNS:*.oraclevcn.com,DNS:phoenix.dev3sub2phx.oraclevcn.com,IP:100.01.01.01" >> extfile.cnf

- Sign the

Certificate:

openssl x509 -req -sha256 -days 365 -in client.csr -CA ca.pem -CAkey ca-key.pem -out client.pem -extfile extfile.cnf -CAcreateserial

- Verify the Client Certificate.

Use the following command to verify the client certificate:

openssl verify -CAfile ca.pem -verbose client.pem

- Combine Certificates for Full Chain.

Create a full chain certificate by combining the client certificate and the CA certificate:

cat client.pem > fullchain.pem cat ca.pem >> fullchain.pem

- Configure ORDS to Use Your SSL Certificate.

Some systems require certificates in DER format instead of PEM.

Convert the Client Key and Certificate to DER Format:openssl pkcs8 -topk8 -inform PEM -outform DER -in client-key.pem -out client-key.der -nocrypt openssl x509 -inform PEM -outform DER -in client.pem -out client.der

Parent topic: Delivery to Oracle JSON Collection Table (JCT)

9.2.28.10.6 Oracle REST Data Services (ORDS) Installation and Setup

- Download ORDS.

- Download the file ords-24.3.0.262.0924.zip from Oracle's website: ORDS Download

- Unzip the downloaded file.

mkdir certs cd certs/

- Setup ORDS:

- Set Environment

Variables.

export ORDS_HOME=/scratch/ords/ export ORDS_CONFIG=/scratch/ords/ordsconfig export PATH=${ORDS_HOME}/bin:${PATH} export _JAVA_OPTIONS="-Xms1126M -Xmx1126M"Note:

Requires JAVA11 or above. UnsetORACLE_HOMEif set any. - Install ORDS. Run the following command:

ords install

- Choose the following options during installation:

- Connection Type: Basic

- Database: localhost:1521/PDBORDS

- Protocol: HTTPS

- Port: 8443

- Certificate Type: Use Self-Signed Certificate with fully qualified hostname

Expected Output:

-bash-4.4$ ords install Enter a number to update the value or select option A to Accept and Continue [1] Connection Type: Basic [2] Basic Connection: HOST=localhost PORT=1521 SERVICE_NAME=PDBORDS Administrator User: SYS AS SYSDBA [3] Database password for ORDS runtime user (ORDS_PUBLIC_USER): <generate> [4] ORDS runtime user and schema tablespaces: Default: SYSAUX Temporary TEMP [5] Additional Feature: Database Actions [6] Configure and start ORDS in Standalone Mode: Yes [7] Protocol: HTTPS [8] HTTPS Port: 8443 [9] Certificate Type: Use Self-Signed Certificate [10] SSL Hostname: phoenix.dev3sub2phx.oraclevcn.com [A] Accept and Continue - Create configuration and Install ORDS in the database [Q] Quit - Do not proceed. No changes Choose [A]: A - Choose the following options during installation:

- Enable ORDS Schema. Connect to the database and grant necessary

permissions:

/opt/oracle/product/23ai/dbhomeFree/bin/sqlplus ordsuser/Admin01@localhost:1521/PDBORDS SQL> grant soda_app, create session, create table, create view, create sequence, create procedure, create job, unlimited tablespace to ordsuser; SQL> exec ords.enable_schema;

- Configure Certificates and

MongoDB.

ords config set standalone.https.cert /scratch/ords/certs/client.der ords config set standalone.https.cert.key /scratch/ords/certs/client-key.der ords config set mongo.enabled true ords config set mongo.port 27040

- Set Environment

Variables.

- Start ORDS

- Run the following command to start ORDS:

ords serve

Expected Output:

-bash-4.4$ ords serve Picked up _JAVA_OPTIONS: -Xms1126M -Xmx1126M 2024-11-04T10:53:10.119Z WARNING Your configuration folder /scratch/ords/ordsconfig is located in ORDS product folder. Oracle recommends to use a different configuration folder. Refer to Oracle REST Data Services Documentation on how to setup your configuration folder. ORDS: Release 24.3 Production on Mon Nov 04 10:53:10 2024 Copyright (c) 2010, 2024, Oracle. Configuration: /scratch/ords/ordsconfig 2024-11-04T10:53:10.642Z INFO HTTP and HTTP/2 cleartext listening on host: 0.0.0.0 port: 8080 2024-11-04T10:53:10.643Z INFO HTTPS and HTTPS/2 listening on host: 0.0.0.0 port: 8443 2024-11-04T10:53:10.679Z INFO Disabling document root because the specified folder does not exist: /scratch/ords/ordsconfig/global/doc_root 2024-11-04T10:53:10.680Z INFO Default forwarding from / to contextRoot configured. 2024-11-04T10:53:10.764Z SEVERE ORAMLVERSION null 2024-11-04T10:53:10.775Z INFO Oracle API for MongoDB listening on port: 27040 2024-11-04T10:53:10.775Z INFO The Oracle API for MongoDB connection string is: mongodb://[{user}:{password}@]localhost:27040/{user}?authMechanism=PLAIN&authSource=$external&ssl=true&retryWrites=false&loadBalanced=true - Run the following command to start ORDS:

Parent topic: Delivery to Oracle JSON Collection Table (JCT)

9.2.28.10.7 MongoDB Handler Configuration and Setup

- Add generated CA Certificate to Java Keystore.

- Add generated CA Certificate to Java KeystoreTo enable secure communication with MongoDB, the CA certificate (ca.pem) must be added to the Java keystore. Run the following command:

keytool -import -trustcacerts -keystore /scratch/oui_21.15/ogg_install_home/jdk/jre/lib/security/cacerts -storepass changeit -alias MiCA -file /scratch/ca.pem -noprompt

The certificate should now be added to the keystore successfully.Note:

Use cacerts fromOGGDAAinstallation directory. - MongoDB Connection URI: To connect to MongoDB securely, use

the following connection URI. Make sure that paths for

tlsCAFileandtlsCertificateKeyFileare correct and match your environment.Set Environment Variables.mongodb://ordsuser:Admin01@phoenix.dev3sub2phx.oraclevcn.com:27040/ordsuser? authMechanism=PLAIN&authSource=$external&ssl=true&retryWrites=false&loadBalanced=true&tlsCAFile=/scratch/ca.pem

Syntax:mongodb://[{user}:{password}@]localhost:27040/{user}?authMechanism=PLAIN&authSource=$external&ssl=true&retryWrites=false&loadBalanced=true - Create Table Space ORDS

- Connect to SQL Plus:

/opt/oracle/product/23ai/dbhomeFree/bin/sqlplus ordsuser/Admin01@localhost:1521/PDBORDS

- Create

Tablespace

CREATE TABLESPACE jcttest DATAFILE '/scratch/ords/tablespace/jct_datafile.dbf' SIZE 100M AUTOEXTEND ON NEXT 10M MAXSIZE UNLIMITED EXTENT MANAGEMENT LOCAL SEGMENT SPACE MANAGEMENT AUTO;

Note:

Precreate/scratch/ords/tablespacedirectory. - Set Default

Tablespace:

ALTER USER ordsuser DEFAULT TABLESPACE JCTTEST;

- Connect to SQL Plus:

- Configure Replication

- To configure the MongoDB handler, create a prm file for the replicat as:

REPLICAT JCT MAP *.*, TARGET ordsuser.*; // here target schema is the ords user that we created.

- Create a properties file for the Replicat

JCT.

gg.handlerlist=mongodb gg.handler.mongodb.type=mongodb #TODO: MongoDB Client URI (replace with the actual MongoDB URI) gg.handler.mongodb.clientURI=mongodb://ordsuser:Admin01@phoenix.dev3sub2phx.oraclevcn.com:27040/ordsuser?authMechanism=PLAIN&authSource=$external&ssl=true&retryWrites=false&loadBalanced=true&tlsCAFile=/scratch/ca.pem #TODO: Path to MongoDB Java driver gg.classpath=/scratch/oui_21.15/ogg_install_home/opt/DependencyDownloader/dependencies/mongodb_5.0.0/*

- To configure the MongoDB handler, create a prm file for the replicat as:

- Verify Data in ORDS SQL Developer

After setting up replication, you can verify the data in SQL Developer:

Login URL: SQL Developer URL (https://phoenix.dev3sub2phx.oraclevcn.com:8443/ords/sql-developer)

Credentials:ordsuser/Admin01SELECT DATA, RESID, ETAG FROM ORDSUSER.JSONCT;

The DATA column should hold the JCT records from MongoDB.

Note:

- If the JCT table is not present, the MongoDB handler will create it by default.

- You may also create the target JCT table manually and map it in the handler configuration.

CREATE JSON COLLECTION TABLE JSONCT;

Parent topic: Delivery to Oracle JSON Collection Table (JCT)

9.2.28.10.8 Error Handling

- If you come across the error:

JSON type cannot be used in non-automatic segment space management tablespace 'SYSTEM', run the following commands to assign a default tablespace to ordsuser.Connect to SQL Plus:

/opt/oracle/product/23ai/dbhomeFree/bin/sqlplus ordsuser/Admin01@localhost:1521/PDBORDS

Set Default Tablespace:ALTER USER ordsuser DEFAULT TABLESPACE JCTTEST;

This sets

JCTTESTas the default tablespace for the ordsuser schema.

Parent topic: Delivery to Oracle JSON Collection Table (JCT)

9.2.28.11 MongoDB Handler Client Dependencies

What are the dependencies for the MongoDB Handler to connect to MongoDB databases?

Note:

If the Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) version is 21.7.0.0.0 and below, the driver version is MongoDB Java Driver 3.12.8. For Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA) versions 21.8.0.0.0 and above, the driver version is MongoDB Java Driver 4.6.0.Parent topic: MongoDB

9.2.28.11.1 MongoDB Java Driver 4.6.0

The required dependent client libraries are:

bson-4.6.0.jarbson-record-codec-4.6.0.jarmongodb-driver-core-4.6.0.jarmongodb-driver-legacy-4.6.0.jarmongodb-driver-legacy-4.6.0.jarmongodb-driver-sync-4.6.0.jar

The Maven coordinates of these third-party libraries that are needed to run MongoDB replicat are:

<dependency>

<groupId>org.mongodb</groupId>

<artifactId>mongodb-driver-legacy</artifactId>

<version>4.6.0</version>

</dependency>

<dependency>

<groupId>org.mongodb</groupId>

<artifactId>mongodb-driver-sync</artifactId>

<version>4.6.0</version>

</dependency>

Example

Download the latest version from Maven central at: https://central.sonatype.com/artifact/org.mongodb/mongodb-driver-reactivestreams/4.6.0.

Parent topic: MongoDB Handler Client Dependencies

9.2.28.11.2 MongoDB Java Driver 3.12.8

pom.xml file, substituting

your correct

information:<!-- https://mvnrepository.com/artifact/org.mongodb/mongo-java-driver -->

<dependency>

<groupId>org.mongodb</groupId>

<artifactId>mongo-java-driver</artifactId>

<version>3.12.8</version>

</dependency>Parent topic: MongoDB Handler Client Dependencies