6 Configuring High Availability for Oracle Identity Governance Components

This chapter describes how to design and deploy a high availability environment for Oracle Identity Governance.

Oracle Identity Governance (OIG) is a user provisioning and administration solution that automates the process of adding, updating, and deleting user accounts from applications and directories. It also improves regulatory compliance by providing granular reports that attest to who has access to what. OIG is available as a stand-alone product or as part of Oracle Identity and Access Management Suite.

For details about OIG, see Product Overview for Oracle Identity Governance in Administering Oracle Identity Governance.

Note:

In this documentation, the product names Oracle Identity Governance and Oracle Identity Manager refer to the same product.

Oracle Identity Governance Architecture

Oracle Identity Governance architecture consists of its components, runtime processes, process lifecycle, configuration artifacts, external dependencies, and log files.

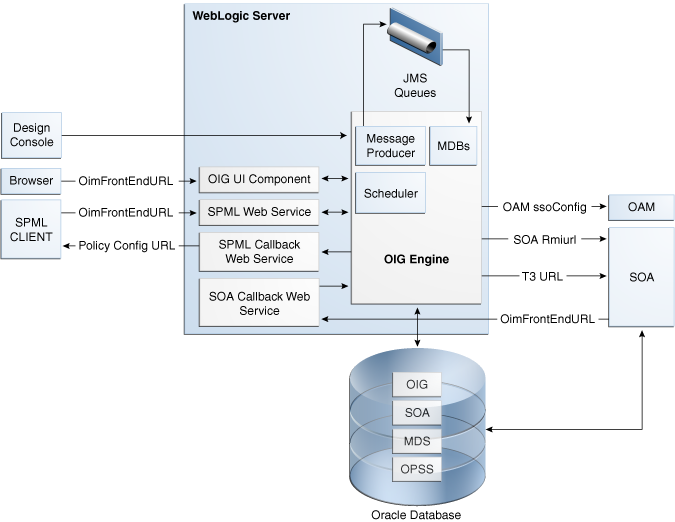

Figure 6-1 shows the Oracle Identity Governance architecture:

Figure 6-1 Oracle Identity Governance Component Architecture


Oracle Identity Governance Component Characteristics

Oracle Identity Governance Server is Oracle's self-contained, standalone identity management solution. It provides User Administration, Workflow and Policy, Password Management, Audit and Compliance Management, User Provisioning, and Organization and Role Management functionality.

Oracle Identity Governance (OIG) is a standard Java EE application that is deployed on WebLogic Server and uses a database to store runtime and configuration data. The MDS schema contains configuration information; the runtime and user information is stored in the OIG schema.

OIG connects to the SOA Managed Servers over RMI to invoke SOA EJBs.

OIG uses the human workflow module of Oracle SOA Suite to manage its request workflow. OIG connects to SOA using the T3 URL for the SOA server, which is the front end URL for SOA. Oracle recommends using the load balancer or web server URL for clustered SOA servers. When the workflow completes, SOA calls back OIM web services using OIGFrontEndURL. Oracle SOA is deployed along with OIG.

Several OIG modules use JMS queues. Each queue is processed by a separate Message Driven Bean (MDB), which is also part of the OIG application. Message producers are also part of the OIG application.

OIG uses a Quartz-based scheduler for scheduled activities. Various scheduled activities occur in the background, such as disabling users after their end date.

In this release, BI Publisher is not embedded with OIG. However, you can integrate BI Publisher with OIG by following the instructions in Configuring Reports in Developing and Customizing Applications for Oracle Identity Governance.

Runtime Processes

Oracle Identity Governance deploys on WebLogic Server as a no-stage application. The OIG server initializes when the WebLogic Server it is deployed on starts up. As part of application initialization, the Quartz-based scheduler also starts. Once initialization is done, the system is ready to receive requests from clients.

You must start the Design Console separately as a standalone utility.

Component and Process Lifecycle

Oracle Identity Manager deploys to a WebLogic Server as an externally managed application. By default, WebLogic Server starts, stops, monitors and manages other lifecycle events for the OIM application.

OIM starts after the application server components start. It uses an authenticator that is part of the OIM component mechanism; the authenticator starts before WebLogic JNDI initializes and before the application starts.

OIM uses a Quartz technology-based scheduler that starts the scheduler thread on all WebLogic Server instances. It uses the database as centralized storage for picking and running scheduled activities. If one scheduler instance picks up a job, other instances do not pick up that same job.
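The "only one instance picks up a job" behavior can be illustrated with a minimal sketch. It is not the Quartz or OIM implementation; it only shows the general idea of using a shared database as the arbiter. The `jobs` table, its columns, and the function names are hypothetical, and SQLite stands in for the shared database.

```python
import sqlite3


def create_job_table(conn):
    # Hypothetical table: one row per scheduled job, owner is NULL until claimed.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS jobs (name TEXT PRIMARY KEY, owner TEXT)"
    )
    conn.commit()


def add_job(conn, name):
    conn.execute("INSERT INTO jobs (name, owner) VALUES (?, NULL)", (name,))
    conn.commit()


def try_claim(conn, job_name, instance):
    """Atomically claim a job; returns True only for the first claimant."""
    cursor = conn.execute(
        "UPDATE jobs SET owner = ? WHERE name = ? AND owner IS NULL",
        (instance, job_name),
    )
    conn.commit()
    # rowcount is 1 if this instance won the claim, 0 if another owner exists.
    return cursor.rowcount == 1
```

Because the claim is a single conditional update in the shared store, a second scheduler instance that attempts the same claim sees no matching row and skips the job.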

You can configure Node Manager to monitor the server process and restart it in case of failure.

Use Oracle Enterprise Manager Fusion Middleware Control to monitor the application and to modify its configuration.

Starting and Stopping Oracle Identity Governance

You manage OIM lifecycle events with these command line tools and consoles:

- Oracle WebLogic Scripting Tool (WLST)

- WebLogic Server Remote Console

- Oracle Enterprise Manager Fusion Middleware Control

- Oracle WebLogic Node Manager

Configuration Artifacts

The OIM server configuration is stored in the MDS repository at /db/oim-config.xml. The oim-config.xml file is the main configuration file. Manage OIM configuration using the MBean browser through Oracle Enterprise Manager Fusion Middleware Control or command line MDS utilities. For more information about MDS utilities, see Migrating User Configurable Metadata Files in Developing and Customizing Applications for Oracle Identity Governance.

The installer configures JMS out of the box; all necessary JMS queues, connection pools, and data sources are configured on the WebLogic application servers. These queues are created when OIM deploys:

- oimAttestationQueue

- oimAuditQueue

- oimDefaultQueue

- oimKernelQueue

- oimProcessQueue

- oimReconQueue

- oimSODQueue

The xlconfig.xml file stores Design Console and Remote Manager configuration.

External Dependencies

Oracle Identity Manager uses the Worklist and Human workflow modules of the Oracle SOA Suite for request flow management. OIM interacts with external repositories to store configuration and runtime data, and the repositories must be available during initialization and runtime. The OIM repository stores all OIM credentials. External components that OIM requires are:

- WebLogic Server

  - Administration Server

  - Managed Server

- Data Repositories

  - Configuration Repository (MDS Schema)

  - Runtime Repository (OIM Schema)

  - User Repository (OIM Schema)

  - SOA Repository (SOA Schema)

- BI Publisher, which can be optionally integrated with OIM

The Design Console is a tool used by the administrator for development and customization. The Design Console communicates directly with the OIM engine, so it relies on the same components that the OIM server relies on.

Remote Manager is an optional independent standalone application, which calls the custom APIs on the local system. It needs JAR files for custom APIs in its classpath.

Oracle Identity Governance Log File Locations

Because OIG is a Java EE application deployed on WebLogic Server, all server log messages are written to the server log file. OIM-specific messages are written to the diagnostic log file of the WebLogic Server where the application is deployed.

WebLogic Server log files are in the directory:

DOMAIN_HOME/servers/serverName/logs

The three main log files are serverName.log, serverName.out, and serverName-diagnostic.log, where serverName is the name of the WebLogic Server. For example, if the WebLogic Server name is wls_OIM1, then the diagnostic log file name is wls_OIM1-diagnostic.log. Use Oracle Enterprise Manager Fusion Middleware Control to view log files.
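As a small illustration of the naming rule above, the following sketch builds the three log file paths from a domain home and a server name. The function name and the example domain home are assumptions made for illustration; only the directory layout and file name patterns come from the text.

```python
from pathlib import PurePosixPath


def server_log_files(domain_home, server_name):
    """Return the expected log file paths for one WebLogic Server."""
    logs_dir = PurePosixPath(domain_home) / "servers" / server_name / "logs"
    return {
        "server": str(logs_dir / f"{server_name}.log"),
        "stdout": str(logs_dir / f"{server_name}.out"),
        "diagnostic": str(logs_dir / f"{server_name}-diagnostic.log"),
    }
```

For example, calling `server_log_files("/u01/domains/oim", "wls_OIM1")` (with a hypothetical domain home) yields a diagnostic log path that ends in `wls_OIM1-diagnostic.log`.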

Oracle Identity Governance High Availability Concepts

The concepts related to Oracle Identity Governance high availability are the OIG high availability architecture, starting and stopping the OIG cluster, and cluster-wide configuration changes.

Note:

- You can deploy OIM on an Oracle RAC database, but Oracle RAC failover is not transparent for OIM in this release. If Oracle RAC failover occurs, end users may have to resubmit requests.

- OIM always requires the availability of at least one node in the SOA cluster. If the SOA cluster is not available, end user requests fail. OIM does not retry for a failed SOA call. Therefore, the end user must retry when a SOA call fails.

Oracle Identity Governance High Availability Architecture

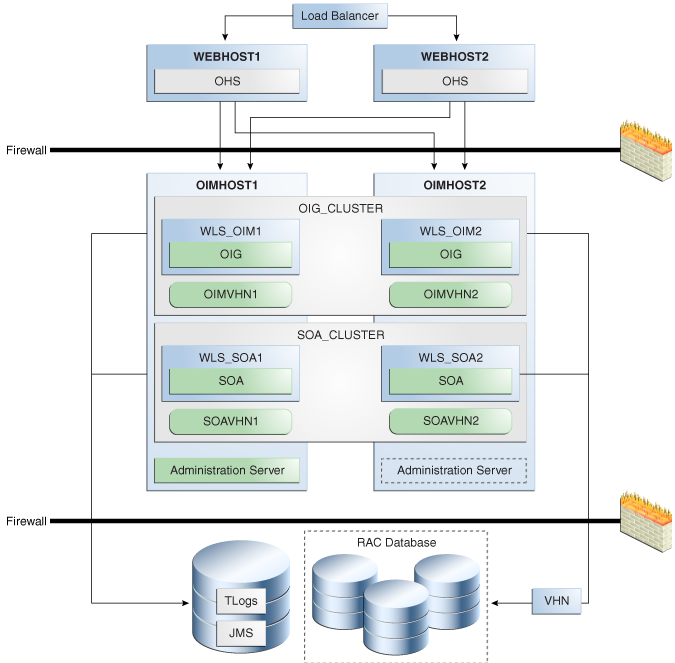

Figure 6-2 shows OIG deployed in a high availability architecture.

Figure 6-2 Oracle Identity Governance High Availability Architecture


On OIMHOST1, the following installations have been performed:

- An OIM instance is installed in the WLS_OIM1 Managed Server and a SOA instance is installed in the WLS_SOA1 Managed Server.

- The Oracle RAC database is configured in a GridLink data source to protect the instance from Oracle RAC node failure.

- A WebLogic Server Administration Server is installed. Under normal operations, this is the active Administration Server.

On OIMHOST2, the following installations have been performed:

- An OIM instance is installed in the WLS_OIM2 Managed Server and a SOA instance is installed in the WLS_SOA2 Managed Server.

- The Oracle RAC database is configured in a GridLink data source to protect the instance from Oracle RAC node failure.

- The instances in the WLS_OIM1 and WLS_OIM2 Managed Servers on OIMHOST1 and OIMHOST2 are configured as the OIM_Cluster cluster.

- The instances in the WLS_SOA1 and WLS_SOA2 Managed Servers on OIMHOST1 and OIMHOST2 are configured as the SOA_Cluster cluster.

- An Administration Server is installed. Under normal operations, this is the passive Administration Server. You make this Administration Server active if the Administration Server on OIMHOST1 becomes unavailable.

DB-SCAN refers to the database SCAN address for the Oracle Real Application Clusters (Oracle RAC) database hosts.

Starting and Stopping the OIG Cluster

By default, WebLogic Server starts, stops, monitors, and manages lifecycle events for the application. The OIM application leverages high availability features of clusters. In case of hardware or other failures, session state is available to other cluster nodes that can resume the work of the failed node.

Use these command line tools and consoles to manage OIM lifecycle events:

- WebLogic Server Remote Console

- Oracle Enterprise Manager Fusion Middleware Control

- Oracle WebLogic Scripting Tool (WLST)

High Availability Directory Structure Prerequisites

A high availability deployment requires product installations and files to reside in specific directories. A standard directory structure facilitates configuration across nodes and product integration.

Before you configure high availability, verify that your environment meets the requirements that High Availability Directory Structure Prerequisites describes.

Oracle Identity Governance High Availability Configuration Steps

Oracle Identity Governance high availability configuration involves setting the prerequisites, configuring the domain, post-installation steps, starting servers, SOA integration, validating managed server instances, and scaling up and scaling out Oracle Identity Governance.

This section provides high-level instructions for setting up a high availability deployment for OIM and includes these topics:

Prerequisites for Configuring Oracle Identity Governance

Before you configure OIM for high availability, you must:

- Install the Oracle Database. See About Database Requirements for an Oracle Fusion Middleware Installation.

- Install the JDK on OIMHOST1 and OIMHOST2. See Preparing for Installation in Installing and Configuring Oracle WebLogic Server and Coherence.

- Install WebLogic Server, Oracle SOA Suite, and Oracle Identity Management software on OIMHOST1 and OIMHOST2 by using the quickstart installer. See Installing Oracle Identity Governance Using Quickstart Installer.

- Run the Repository Creation Utility to create the OIM schemas in a database. See Running RCU to Create the OIM Schemas in a Database.

Running RCU to Create the OIM Schemas in a Database

The schemas you create depend on the products you want to install and configure. Use a Repository Creation Utility (RCU) that is version compatible with the product you install. See Creating the Database Schemas.

Configuring the Domain

Use the Configuration Wizard to create and configure a domain.

See Configuring the Domain and Performing Post-Configuration Tasks for information about creating the Identity Management domain.

Post-Installation Steps on OIMHOST1

This section describes post-installation steps for OIMHOST1.

Running the Offline Configuration Command

After you configure the Oracle Identity Governance domain, run the offlineConfigManager script to perform post configuration tasks.

To run the offlineConfigManager command, do the following:

Updating the System Properties for SSL Enabled Servers

For SSL enabled servers, you must set the required properties in the setDomainEnv file in the domain home.

Set the following properties in the DOMAIN_HOME/bin/setDomainEnv.sh (for UNIX) or DOMAIN_HOME\bin\setDomainEnv.cmd (for Windows) file before you start the servers:

- -Dweblogic.security.SSL.ignoreHostnameVerification=true

- -Dweblogic.security.TrustKeyStore=DemoTrust

Starting the Administration Server, oim_server1, and soa_server1

Integrating Oracle Identity Governance with Oracle SOA Suite

Propagating Oracle Identity Governance to OIMHOST2

After the configuration succeeds on OIMHOST1, you can propagate it to OIMHOST2 by packing the domain on OIMHOST1 and unpacking it on OIMHOST2.

Note:

Oracle recommends that you perform a clean shut down of all Managed Servers on OIMHOST1 before you propagate the configuration to OIMHOST2.

To pack the domain on OIMHOST1 and unpack it on OIMHOST2:

Post-Installation Steps on OIMHOST2

Start Node Manager on OIMHOST2

Start the Node Manager on OIMHOST2 using the startNodeManager.sh script located under the following directory:

DOMAIN_HOME/bin

Start WLS_SOA2 and WLS_OIM2 Managed Servers on OIMHOST2

To start Managed Servers on OIMHOST2:

- Start the WLS_SOA2 Managed Server using the Remote Console.

- Start the WLS_OIM2 Managed Server using the Remote Console. You must start the WLS_OIM2 Managed Server after the WLS_SOA2 Managed Server has started.

Validate Managed Server Instances on OIMHOST2

Validate the Oracle Identity Manager (OIM) instances on OIMHOST2.

Open the OIM Console with this URL:

http://identityvhn2.example.com:14000/identity

Log in using the xelsysadm password.
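Before logging in, you can check that the console URL responds at all. The following is a minimal sketch, not an Oracle-provided tool: the function names are hypothetical, the default port 14000 is taken from the example URL above, and the check only confirms an HTTP response, not a successful login.

```python
import urllib.error
import urllib.request


def identity_console_url(host, port=14000):
    """Build the OIM console URL in the form shown in this section."""
    return f"http://{host}:{port}/identity"


def console_responds(url, timeout=10, opener=urllib.request.urlopen):
    """Return True if the URL answers with a 2xx or 3xx status."""
    try:
        with opener(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

The `opener` parameter exists only so that the check can be exercised without a live server; in normal use, call `console_responds(identity_console_url("identityvhn2.example.com"))`.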

Configuring Server Migration for OIG and SOA Managed Servers

For this high availability topology, Oracle recommends that you configure server migration for the WLS_OIM1, WLS_SOA1, WLS_OIM2, and WLS_SOA2 Managed Servers. See Section 3.9, "Whole Server Migration" for information on the benefits of using Whole Server Migration and why Oracle recommends it.

- The WLS_OIM1 and WLS_SOA1 Managed Servers on OIMHOST1 are configured to restart automatically on OIMHOST2 if a failure occurs on OIMHOST1.

- The WLS_OIM2 and WLS_SOA2 Managed Servers on OIMHOST2 are configured to restart automatically on OIMHOST1 if a failure occurs on OIMHOST2.

In this configuration, the WLS_OIM1, WLS_SOA1, WLS_OIM2 and WLS_SOA2 servers listen on specific floating IPs that WebLogic Server Migration fails over.

The subsequent topics enable server migration for the WLS_OIM1, WLS_SOA1, WLS_OIM2, and WLS_SOA2 Managed Servers, which in turn enables a Managed Server to fail over to another node if a server or process failure occurs.
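The migration pairing described in this section can be written down as a simple lookup. This is only an illustration of the topology; the dictionaries and the function are hypothetical and do not correspond to any WebLogic data structure or API.

```python
# Home host of each Managed Server, as described in this section.
HOME_HOST = {
    "WLS_OIM1": "OIMHOST1",
    "WLS_SOA1": "OIMHOST1",
    "WLS_OIM2": "OIMHOST2",
    "WLS_SOA2": "OIMHOST2",
}

# Each host fails over to the other host in this two-node topology.
PEER_HOST = {"OIMHOST1": "OIMHOST2", "OIMHOST2": "OIMHOST1"}


def servers_to_migrate(failed_host):
    """Return {server: target_host} for servers whose home host failed."""
    target = PEER_HOST[failed_host]
    return {
        server: target
        for server, home in HOME_HOST.items()
        if home == failed_host
    }
```

A table like this is also a convenient way to check that the target host has enough resources to run both its own and the migrated Managed Servers at the same time.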

Editing Node Manager's Properties File

You must edit the nodemanager.properties file to add the following properties for each node where you configure server migration:

Interface=eth0

eth0=*,NetMask=255.255.248.0

UseMACBroadcast=true

- Interface: Specifies the interface name for the floating IP (such as eth0).

  Note:

  Do not specify the sub interface, such as eth0:1 or eth0:2. This interface is to be used without the :0 or :1 suffix. The Node Manager's scripts traverse the different :X enabled IPs to determine which to add or remove. For example, valid values in Linux environments are eth0, eth1, eth2, eth3, or ethn, depending on the number of interfaces configured.

- NetMask: Net mask for the interface for the floating IP. The net mask should be the same as the net mask on the interface; 255.255.255.0 is an example. The actual value depends on your network.

- UseMACBroadcast: Specifies whether or not to use a node's MAC address when sending ARP packets, that is, whether or not to use the -b flag in the arping command.

Restart Node Manager, then verify in its output (the shell where Node Manager starts) that these properties are in use; otherwise, problems may arise during migration. You should see an entry similar to the following in Node Manager's output:

...

StateCheckInterval=500

Interface=eth0

NetMask=255.255.255.0

...
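The rules above (no sub-interface in Interface, a well-formed net mask, a boolean UseMACBroadcast) can be checked before restarting Node Manager. The following is a minimal sketch under stated assumptions: the function names are hypothetical, and it assumes that NetMask appears as its own key, as in the sample output above. It is not part of Node Manager.

```python
import ipaddress


def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def is_valid_netmask(mask):
    """True if mask is a dotted IPv4 mask made of contiguous leading ones."""
    try:
        value = int(ipaddress.IPv4Address(mask))
    except ValueError:
        return False
    inverted = value ^ 0xFFFFFFFF
    return value != 0 and (inverted & (inverted + 1)) == 0


def check_migration_properties(props):
    """Return a list of problems found; an empty list means no problems."""
    problems = []
    interface = props.get("Interface", "")
    if not interface:
        problems.append("Interface is not set")
    elif ":" in interface:
        problems.append("Interface must not be a sub interface such as eth0:1")
    if not is_valid_netmask(props.get("NetMask", "")):
        problems.append("NetMask is missing or not a valid IPv4 net mask")
    if props.get("UseMACBroadcast", "").lower() not in ("true", "false"):
        problems.append("UseMACBroadcast should be true or false")
    return problems
```

Running `check_migration_properties(parse_properties(text))` on the file contents returns a list of findings that you can review on each node where you configure server migration.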

Install Oracle HTTP Server on WEBHOST1 and WEBHOST2

Install Oracle HTTP Server on WEBHOST1 and WEBHOST2.

Configuring Oracle Identity Governance to Work with the Web Tier

You can co-locate Oracle HTTP Server and Oracle Identity Governance in a high availability setup in the following ways:

Note:

Oracle recommends that you do not co-locate Oracle HTTP Server and Oracle Identity Governance in the same domain.

- Create an OIG domain and extend the same domain with Oracle HTTP Server. In this case, you can re-use the same schema for both Oracle HTTP Server and OIG.

- Create separate domains for Oracle HTTP Server and OIG. In this case, you cannot re-use the same schema for both Oracle HTTP Server and OIG. You need two separate schemas.

- Create separate domains for Oracle HTTP Server and OIG using silent mode or WLST. In this case, you can re-use the same schema for both Oracle HTTP Server and OIG.

The following topics describe how to configure OIG to work with the Oracle Web Tier.

Prerequisites to Configure OIG to Work with the Web Tier

Verify that the following tasks have been performed:

- Oracle Web Tier has been installed on WEBHOST1 and WEBHOST2.

- OIG is installed and configured on OIGHOST1 and OIGHOST2.

- The load balancer has been configured with a virtual hostname (oig.example.com) pointing to the web servers on WEBHOST1 and WEBHOST2. oig.example.com is customer facing and the main point of entry; it is typically SSL terminated.

- The load balancer has been configured with a virtual hostname (oiginternal.example.com) pointing to the web servers on WEBHOST1 and WEBHOST2. oiginternal.example.com is for internal callbacks and is not customer facing.

Configuring SSL Certificates for Load Balancer

For an SSL enabled server with a load balancer, you must configure SSL certificates when using Oracle Web Tier for OIGExternalFrontendURL.

Validate the Oracle HTTP Server Configuration

To validate that Oracle HTTP Server is configured properly, follow these steps:

Oracle Identity Governance Failover and Expected Behavior

In a high availability environment, you configure Node Manager to monitor Oracle WebLogic Servers. In case of failure, Node Manager restarts the WebLogic Server.

A hardware load balancer load balances requests between multiple OIM instances. If one OIM Managed Server fails, the load balancer detects the failure and routes requests to surviving instances.

In a high availability environment, state and configuration information is stored in a database that all cluster members share. Surviving OIM instances continue to seamlessly process any unfinished transactions started on the failed instance because state information is in the shared database, available to all cluster members.

When an OIM instance fails, its database and LDAP connections are released. Surviving instances in the active-active deployment make their own connections to continue processing unfinished transactions on the failed instance.
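The routing behavior described above can be sketched as a round-robin selection that skips failed instances. This is a conceptual illustration only; a real hardware load balancer uses its own health monitors, and the class and method names here are hypothetical.

```python
from itertools import cycle


class LoadBalancer:
    """Round-robin router that skips instances marked as failed."""

    def __init__(self, servers):
        self.healthy = {name: True for name in servers}
        self._order = cycle(list(servers))
        self._count = len(self.healthy)

    def mark_down(self, name):
        self.healthy[name] = False

    def mark_up(self, name):
        self.healthy[name] = True

    def route(self):
        # Try each instance at most once per request.
        for _ in range(self._count):
            candidate = next(self._order)
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no surviving OIM instance")
```

Because state is kept in the shared database rather than in the failed instance, any surviving instance chosen by `route()` can continue the work.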

When you deploy OIM in a high availability configuration:

- You can deploy OIM on an Oracle RAC database, but Oracle RAC failover is not transparent for OIM in this release. If Oracle RAC failover occurs, end users may have to resubmit their requests.

- Oracle Identity Manager always requires the availability of at least one node in the SOA cluster. If the SOA cluster is not available, end user requests fail. OIM does not retry for a failed SOA call. Therefore, the end user must retry when a SOA call fails.

Scaling Up Oracle Identity Governance

You can scale out or scale up the OIG high availability topology. When you scale up the topology, you add new Managed Servers to nodes that are already running one or more Managed Servers. When you scale out the topology, you add new Managed Servers to new nodes. See Scaling Out Oracle Identity Governance to scale out.

In this case, you have a node that runs a Managed Server configured with SOA. The node contains:

- An Oracle Home

- A domain directory for existing Managed Servers

You can use the existing installations (Oracle home and domain directories) to create new WLS_OIG and WLS_SOA Managed Servers. You do not need to install OIG and SOA binaries in a new location or run pack and unpack.

This procedure describes how to clone OIG and SOA Managed Servers. You may clone one or two of these component types, as long as one of them is OIG.

Note the following:

- This procedure refers to WLS_OIG and WLS_SOA. However, you may not be scaling up both the components. For each step, choose the component(s) that you are scaling up in your environment. Also, some steps do not apply to all components.

- The persistent store's shared storage directory for JMS Servers must exist before you start the Managed Server or the start operation fails.

- Each time you specify the persistent store's path, it must be a directory on shared storage.

To scale up the topology:

- In the Administration Console, clone WLS_OIG1/WLS_SOA1. The Managed Server that you clone should be one that already exists on the node where you want to run the new Managed Server.

  - Select Environment -> Servers from the Administration Console.

  - Select the Managed Server(s) that you want to clone.

  - Select Clone.

  - Name the new Managed Server WLS_OIGn/WLS_SOAn, where n is a number to identify the new Managed Server.

  The rest of the steps assume that you are adding a new Managed Server to OIGHOST1, which is already running WLS_OIG1 and WLS_SOA1.

- For the listen address, assign the hostname or IP for the new Managed Server(s).

- Create JMS Servers for OIM/SOA, BPM, UMS, JRFWSAsync, and SOAJMSServer on the new Managed Server.

  - In the Administration Console, create a new persistent store for the OIM JMS Server(s) and name it. Specify the store's path, a directory on shared storage.

    ORACLE_BASE/admin/domain_name/cluster_name/jms

  - Create a new JMS Server for OIM. Use JMSFileStore_n for JMSServer. Target JMSServer_n to the new Managed Server(s).

  - Create a persistence store for the new UMSJMSServer(s), for example, UMSJMSFileStore_n. Specify the store's path, a directory on shared storage.

    ORACLE_BASE/admin/domain_name/cluster_name/jms/UMSJMSFileStore_n

  - Create a new JMS Server for UMS, for example, UMSJMSServer_n. Target it to the new Managed Server (WLS_SOAn).

  - Create a persistence store for the new BPMJMSServer(s), for example, BPMJMSFileStore_n. Specify the store's path, a directory on shared storage.

    ORACLE_BASE/admin/domain_name/cluster_name/jms/BPMJMSFileStore_n

  - Create a new JMS Server for BPM, for example, BPMJMSServer_n. Target it to the new Managed Server (WLS_SOAn).

  - Create a new persistence store for the new JRFWSAsyncJMSServer, for example, JRFWSAsyncJMSFileStore_n. Specify the store's path, a directory on shared storage.

    ORACLE_BASE/admin/domain_name/cluster_name/jms/JRFWSAsyncJMSFileStore_n

  - Create a JMS Server for JRFWSAsync, for example, JRFWSAsyncJMSServer_n. Use JRFWSAsyncJMSFileStore_n for this JMSServer. Target JRFWSAsyncJMSServer_n to the new Managed Server (WLS_OIMn).

    Note:

    You can also assign SOAJMSFileStore_n as store for the new JRFWSAsync JMS Servers. For clarity and isolation, individual persistent stores are used in the following steps.

  - Create a persistence store for the new SOAJMSServer, for example, SOAJMSFileStore_auto_n. Specify the store's path, a directory on shared storage.

    ORACLE_BASE/admin/domain_name/cluster_name/jms/SOAJMSFileStore_auto_n

  - Create a JMS Server for SOA, for example, SOAJMSServer_auto_n. Use SOAJMSFileStore_auto_n for this JMSServer. Target SOAJMSServer_auto_n to the new Managed Server (WLS_SOAn).

    Note:

    You can also assign SOAJMSFileStore_n as store for the new PS6 JMS Servers. For the purpose of clarity and isolation, individual persistent stores are used in the following steps.

  - Update SubDeployment targets for SOA JMS Module to include the new SOA JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click SOAJMSModule (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. In the subdeployment module, click the SOAJMSServerXXXXXX subdeployment and add SOAJMSServer_n to it. Click Save.

    Note:

    A subdeployment module name is a random name in the form COMPONENTJMSServerXXXXXX. It comes from the Configuration Wizard JMS configuration for the first two Managed Servers (WLS_COMPONENT1 and WLS_COMPONENT2).

  - Update SubDeployment targets for UMSJMSSystemResource to include the new UMS JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click UMSJMSSystemResource (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. In the subdeployment module, click the UMSJMSServerXXXXXX subdeployment and add UMSJMSServer_n to it. Click Save.

  - Update SubDeployment targets for OIMJMSModule to include the new OIM JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click OIMJMSModule (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. In the subdeployment module, click the OIMJMSServerXXXXXX subdeployment and add OIMJMSServer_n to it. Click Save.

  - Update SubDeployment targets for the JRFWSAsyncJmsModule to include the new JRFWSAsync JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click JRFWSAsyncJmsModule (hyperlink in the Names column of the table). In the Settings page, click the SubDeployments tab. Click the JRFWSAsyncJMSServerXXXXXX subdeployment and add JRFWSAsyncJMSServer_n to this subdeployment. Click Save.

  - Update SubDeployment targets for BPM JMS Module to include the new BPM JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click BPMJMSModule (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. In the subdeployment module, click the BPMJMSServerXXXXXX subdeployment and add BPMJMSServer_n to it. Click Save.

- Configure the transaction persistent store for the new server in a shared storage location visible from other nodes.

  From the Administration Console, select Server_name > Services tab. Under Default Store, in Directory, enter the path to the default persistent store.

- Disable hostname verification for the new Managed Server (required before starting/verifying a WLS_SOAn Managed Server). You can re-enable it after you configure server certificates for Administration Server / Node Manager communication in SOAHOSTn. If the source server (from which you cloned the new Managed Server) had disabled hostname verification, these steps are not required; hostname verification settings propagate to a cloned server.

  To disable hostname verification:

  - In the Administration Console, expand the Environment node in the Domain Structure window.

  - Click Servers. Select WLS_SOAn in the Names column of the table.

  - Click the SSL tab. Click Advanced.

  - Set Hostname Verification to None. Click Save.

- Start and test the new Managed Server from the Administration Console.

  - Shut down the existing Managed Servers in the cluster.

  - Ensure that the newly created Managed Server is up.

  - Access the application on the newly created Managed Server to verify that it works. A login page opens for OIM. For SOA, an HTTP basic authorization prompt opens.

    Table 6-2 Managed Server Test URLs

    | Component | Managed Server Test URL |
    | --- | --- |
    | SOA | http://vip:port/soa-infra |
    | OIM | http://vip:port/identity |

- In the Administration Console, select Services then Foreign JNDI provider. Confirm that ForeignJNDIProvider-SOA targets cluster:t3://soa_cluster, not a Managed Server(s). You target the cluster so that new Managed Servers don't require configuration. If ForeignJNDIProvider-SOA does not target the cluster, target it to the cluster.

- Configure Server Migration for the new Managed Server.

  Note:

  For scale up, the node must have a Node Manager, an environment configured for server migration, and the floating IP for the new Managed Server(s).

  To configure server migration:

  - Log into the Administration Console.

  - In the left pane, expand Environment and select Servers.

  - Select the server (hyperlink) that you want to configure migration for.

  - Click the Migration tab.

  - In the Available field, in the Migration Configuration section, select machines to enable migration for and click the right arrow. Select the same migration targets as for the servers that already exist on the node.

    For example:

    For new Managed Servers on SOAHOST1, which is already running WLS_SOA1, select SOAHOST2.

    For new Managed Servers on SOAHOST2, which is already running WLS_SOA2, select SOAHOST1.

    Verify that the appropriate resources are available to run Managed Servers concurrently during migration.

  - Select the Automatic Server Migration Enabled option to enable Node Manager to start a failed server on the target node automatically.

  - Click Save.

  - Restart the Administration Server, Managed Servers, and Node Manager.

  - Repeat these steps to configure server migration for the newly created WLS_OIMn Managed Server.

- To test server migration for this new server, follow these steps from the node where you added the new server:

  - Stop the Managed Server.

    Run kill -9 pid on the PID of the Managed Server. To identify the PID of the Managed Server, enter, for example, ps -ef | grep WLS_SOAn.

  - Watch Node Manager Console for a message indicating that the Managed Server floating IP is disabled.

  - Wait for Node Manager to try a second restart of the Managed Server. Node Manager waits for 30 seconds before trying this restart.

  - After Node Manager restarts the server, stop it again. Node Manager logs a message indicating that the server will not restart again locally.

- Edit the OHS configuration file to add the new managed server(s). See Configuring Oracle HTTP Server to Recognize New Managed Servers.
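The JMS naming pattern used in the scale-up procedure above can be sketched as a small planning helper. It only generates the example names and shared-storage paths from the procedure for a given index n; the function name, the returned layout, and the example arguments are assumptions made for illustration, and nothing here calls WebLogic.

```python
from pathlib import PurePosixPath

# (JMS server name pattern, file store name pattern), following the examples
# given in the procedure. {n} is the index of the new Managed Server.
JMS_PATTERNS = (
    ("UMSJMSServer_{n}", "UMSJMSFileStore_{n}"),
    ("BPMJMSServer_{n}", "BPMJMSFileStore_{n}"),
    ("JRFWSAsyncJMSServer_{n}", "JRFWSAsyncJMSFileStore_{n}"),
    ("SOAJMSServer_auto_{n}", "SOAJMSFileStore_auto_{n}"),
)


def jms_store_plan(oracle_base, domain_name, cluster_name, n):
    """Return {jms_server: {"store": name, "path": shared_storage_path}}."""
    jms_dir = (
        PurePosixPath(oracle_base) / "admin" / domain_name / cluster_name / "jms"
    )
    plan = {}
    for server_pattern, store_pattern in JMS_PATTERNS:
        store = store_pattern.format(n=n)
        plan[server_pattern.format(n=n)] = {
            "store": store,
            "path": str(jms_dir / store),
        }
    return plan
```

Generating the plan first makes it easier to confirm that every store path is on shared storage and that its directory exists before you start the new Managed Server.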

Scaling Out Oracle Identity Governance

When you scale out the topology, you add new Managed Servers configured with software to new nodes.

Note:

Steps in this procedure refer to WLS_OIG and WLS_SOA. However, you may not be scaling out both components. For each step, choose the component(s) that you are scaling out in your environment. Some steps do not apply to all components.

Before you scale out, check that you meet these requirements:

- The topology has existing nodes running Managed Servers configured with OIG and SOA.

- The new node can access existing home directories for WebLogic Server, SOA, and OIG. (Use existing installations in shared storage to create new Managed Servers. You do not need to install WebLogic Server or component binaries in a new location, but you must run pack and unpack to bootstrap the domain configuration in the new node.)

Note:

If there is no existing installation in shared storage, you must install WebLogic Server, SOA, and OIG in the new nodes.

Note:

When multiple servers in different nodes share ORACLE_HOME or WL_HOME, Oracle recommends keeping the Oracle Inventory and Middleware home list in those nodes updated for consistency in the installations and application of patches. To update the oraInventory in a node and "attach" an installation in a shared storage to it, use ORACLE_HOME/oui/bin/attachHome.sh.

To scale out the topology:

-

On the new node, mount the existing Oracle Home, which contains the SOA and OIG installations, and ensure that the new node has access to this directory, just like the rest of the nodes in the domain.

-

Attach ORACLE_HOME in shared storage to the local Oracle Inventory. For example:

cd /u01/oracle/products/fmw
./attachHome.sh -jreLoc /u01/JRE-JDK_version

-

Log in to the Administration Console.

-

Create a new machine for the new node. Add the machine to the domain.

-

Update the machine's Node Manager address to map to the IP of the node that is being used for scale out.

-

Clone WLS_OIG1/WLS_SOA1.

To clone OIG and SOA:

-

Select Environment -> Servers from the Administration Console.

-

Select the Managed Server(s) that you want to clone.

-

Select Clone.

-

Name the new Managed Server WLS_OIGn/WLS_SOAn, where n is a number that identifies the new Managed Server.

Note:

These steps assume that you are adding a new server to node n, where no Managed Server was running previously.

-

Set the listen address of the new Managed Server to the hostname or IP that the server will use. In addition, update the value of the Machine parameter with the new machine created in step 4.

If you plan to use server migration for this server (which Oracle recommends), this should be the server VIP (floating IP). This VIP should be different from the one used for the existing Managed Server.

-

Create JMS servers for OIG (if applicable), UMS, BPM, JRFWSAsync, and SOA on the new Managed Server.

-

In the Administration Console, create a new persistent store for the OIG JMS Server and rename it. Specify the store's path, a directory on shared storage.

ORACLE_BASE/admin/domain_name/cluster_name/jms

-

Create a new JMS Server for OIG. Use JMSFileStore_n for JMSServer. Target JMSServer_n to the new Managed Server(s).

-

Create a persistent store for the new UMSJMSServer(s), for example, UMSJMSFileStore_n. Specify the store's path, a directory on shared storage.

ORACLE_BASE/admin/domain_name/cluster_name/jms/UMSJMSFileStore_n

-

Create a new JMS Server for UMS, for example, UMSJMSServer_n. Target it to the new Managed Server, which is WLS_SOAn (migratable).

-

Create a persistent store for the new BPMJMSServer(s), for example, BPMJMSFileStore_n. Specify the store's path, a directory on shared storage.

ORACLE_BASE/admin/domain_name/cluster_name/jms/BPMJMSFileStore_n

-

Create a new JMS Server for BPM, for example, BPMJMSServer_n. Target it to the new Managed Server, which is WLS_SOAn (migratable).

-

Create a persistent store for the new JRFWSAsyncJMSServer, for example, JRFWSAsyncJMSFileStore_n. Specify the store's path, a directory on shared storage.

ORACLE_BASE/admin/domain_name/cluster_name/jms/JRFWSAsyncJMSFileStore_n

-

Create a JMS Server for JRFWSAsync, for example, JRFWSAsyncJMSServer_n. Use JRFWSAsyncJMSFileStore_n for this JMSServer. Target JRFWSAsyncJMSServer_n to the new Managed Server, which is WLS_OIGn (migratable).

Note:

You can also assign SOAJMSFileStore_n as the store for the new JRFWSAsync JMS Servers. For clarity and isolation, the following steps use individual persistent stores.

-

Create a persistent store for the new SOAJMSServer, for example, SOAJMSFileStore_auto_n. Specify the store's path, a directory on shared storage.

ORACLE_BASE/admin/domain_name/cluster_name/jms/SOAJMSFileStore_auto_n

-

Create a JMS Server for SOA, for example, SOAJMSServer_auto_n. Use SOAJMSFileStore_auto_n for this JMSServer. Target SOAJMSServer_auto_n to the new Managed Server, which is WLS_SOAn (migratable).

Note:

You can also assign SOAJMSFileStore_n as the store for the new PS6 JMS Servers. For clarity and isolation, the following steps use individual persistent stores.

-

Update SubDeployment targets for SOA JMS Module to include the new SOA JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click SOAJMSModule (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. In the subdeployment module, click the SOAJMSServerXXXXXX subdeployment and add SOAJMSServer_n to it. Click Save.

Note:

A subdeployment module name is a random name in the form COMPONENTJMSServerXXXXXX. It comes from the Configuration Wizard JMS configuration for the first two Managed Servers (WLS_COMPONENT1 and WLS_COMPONENT2).

-

Update SubDeployment targets for UMSJMSSystemResource to include the new UMS JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click UMSJMSSystemResource (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. In the subdeployment module, click the UMSJMSServerXXXXXX subdeployment and add UMSJMSServer_n to it. Click Save.

-

Update SubDeployment targets for OIGJMSModule to include the new OIG JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click OIGJMSModule (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. In the subdeployment module, click the OIGJMSServerXXXXXX subdeployment and add OIGJMSServer_n to it. Click Save.

-

Update SubDeployment targets for the JRFWSAsyncJmsModule to include the new JRFWSAsync JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click JRFWSAsyncJmsModule (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. Click the JRFWSAsyncJMSServerXXXXXX subdeployment and add JRFWSAsyncJMSServer_n to this subdeployment. Click Save.

-

Update SubDeployment targets for BPM JMS Module to include the new BPM JMS Server. Expand the Services node, then expand the Messaging node. Choose JMS Modules from the Domain Structure window. Click BPMJMSModule (hyperlink in the Names column). In the Settings page, click the SubDeployments tab. In the subdeployment module, click the BPMJMSServerXXXXXX subdeployment and add BPMJMSServer_n to it. Click Save.
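The persistent store paths named in the JMS steps above all follow the pattern ORACLE_BASE/admin/domain_name/cluster_name/jms/store_name_n. As a rough sketch (not part of the documented procedure), a small shell function can print the four paths that are spelled out above for a given server number, so that the directories can be checked or created on shared storage before the stores are defined in the Administration Console. The function name jms_store_paths and the sample values in the usage comment are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: print the file store directories named in the steps above.
# The path pattern ORACLE_BASE/admin/domain_name/cluster_name/jms/<store>_n
# comes from the procedure; the argument values are supplied by the caller.

# jms_store_paths ORACLE_BASE DOMAIN_NAME CLUSTER_NAME N
jms_store_paths() {
  for prefix in UMSJMSFileStore BPMJMSFileStore JRFWSAsyncJMSFileStore SOAJMSFileStore_auto; do
    printf '%s/admin/%s/%s/jms/%s_%s\n' "$1" "$2" "$3" "$prefix" "$4"
  done
}

# Example usage with hypothetical values (not executed here):
#   jms_store_paths /u01/oracle governance soa_cluster 3 | xargs mkdir -p
```

The OIG store is left out because the steps above give only its parent directory, not a full path for it.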

-

Run the pack command on OIGHOST1 to create a template pack. For example:

cd ORACLE_HOME/oracle_common/common/bin
./pack.sh -managed=true \
  -domain=/u01/oracle/config/domains/governance \
  -template=oigdomaintemplateScale.jar \
  -template_name='oigdomain_templateScale'

Run the following command on OIGHOST1 to copy the template file created to OIGHOSTn:

scp oigdomaintemplateScale.jar oracle@OIGHOSTn:templates/

Run the unpack command on OIGHOSTn to unpack the template in the Managed Server domain directory. For example, for OIG:

ORACLE_HOME/oracle_common/common/bin/unpack.sh \
  -domain=/u01/oracle/config/domains/governance \
  -template=templates/oigdomaintemplateScale.jar

-

Disable hostname verification for the new Managed Server; you must do this before starting/verifying the Managed Server. You can re-enable it after you configure server certificates for the communication between the Administration Server and Node Manager. If the source Managed Server (server you cloned the new one from) had already disabled hostname verification, these steps are not required. Hostname verification settings propagate to cloned servers.

To disable hostname verification:

-

Open the Administration Console.

-

Expand the Environment node in the Domain Structure window.

-

Click Servers.

-

Select WLS_SOAn in the Names column of the table. The Settings page for the server appears.

-

Click the SSL tab.

-

Click Advanced.

-

Set Hostname Verification to None.

-

Click Save.

-

Start Node Manager on the new node, as shown:

/u01/oracle/config/domains/governance/bin/startNodeManager.sh

-

Start and test the new Managed Server from the Administration Console.

-

Shut down the existing Managed Server in the cluster.

-

Ensure that the newly created Managed Server is up.

-

Access the application on the newly created Managed Server to verify that it works. A login page appears for OIG. For SOA, an HTTP basic authentication prompt opens.

Table 6-3 Managed Server Test URLs

| Component | Managed Server Test URL |
|---|---|
| SOA | http://vip:port/soa-infra |
| OIG | http://vip:port/identity |

-

Edit the OHS configuration file to add the new managed server(s). See Configuring Oracle HTTP Server to Recognize New Managed Servers.
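The Managed Server test URLs in Table 6-3 can also be assembled from a shell before opening them in a browser. This is only a sketch: the function name ms_test_url, the host names, and the port numbers in the usage comment are hypothetical, and only the two context paths (soa-infra and identity) come from the table.

```shell
#!/usr/bin/env bash
# Sketch only: build the Table 6-3 test URL for a component.
# Only the context paths are taken from the table; VIP and port are inputs.

# ms_test_url COMPONENT VIP PORT
ms_test_url() {
  case "$1" in
    SOA) path=soa-infra ;;
    OIG) path=identity ;;
    *) echo "unknown component: $1" >&2; return 1 ;;
  esac
  printf 'http://%s:%s/%s\n' "$2" "$3" "$path"
}

# Example usage with hypothetical values (not executed here):
#   curl -s -o /dev/null -w '%{http_code}\n' "$(ms_test_url OIG oigvipn.example.com 14000)"
```

The curl call in the comment prints only the HTTP status code. Which code indicates success depends on the deployment, so compare it with what a browser shows for the same URL.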

Configuring Oracle HTTP Server to Recognize New Managed Servers

To complete scale up/scale out, you must edit the oim.conf file to add the new Managed Servers, then restart the Oracle HTTP Servers.
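This section does not show the contents of oim.conf. As a hedged illustration, assuming that oim.conf routes requests with the WebLogic plug-in parameter WebLogicCluster (a comma-separated list of host:port members), the updated member list could be generated as follows. The function name weblogic_cluster_line and the host names and port in the usage comment are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: build a WebLogicCluster parameter line from host:port members.
# Assumption: oim.conf uses the plug-in's WebLogicCluster parameter; the
# actual layout of oim.conf is not shown in this section.

# weblogic_cluster_line HOST:PORT [HOST:PORT ...]
weblogic_cluster_line() {
  list=""
  for member in "$@"; do
    # Append with a comma separator, except before the first member.
    list="${list:+$list,}$member"
  done
  printf 'WebLogicCluster %s\n' "$list"
}

# Example usage with hypothetical hosts (not executed here):
#   weblogic_cluster_line oighost1.example.com:14000 oighost2.example.com:14000 oighostn.example.com:14000
```

The generated line would replace the existing member list in the relevant block of oim.conf; the Oracle HTTP Servers still need a restart afterward, as described above.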

Note:

If you are not using a shared storage system (which Oracle recommends), copy oim.conf to the other OHS servers.

Note:

See the General Parameters for WebLogic Server Plug-Ins in Oracle Fusion Middleware Using Web Server 1.1 Plug-Ins with Oracle WebLogic Server for additional parameters that can facilitate deployments.

Preparing for Shared Storage

Oracle Fusion Middleware allows you to configure multiple WebLogic Server domains from a single Oracle home. This allows you to install the Oracle home in a single location on a shared volume and reuse the Oracle home for multiple host installations.

If you plan to use shared storage in your environment, see Using Shared Storage in High Availability Guide for more information.

For configuration requirements specific to Managed File Transfer, see High Availability Properties in Using Oracle Managed File Transfer.

Deploying Oracle Identity and Access Management Cluster with Unicast Configuration

If multicast IP is disabled in your deployment environment, you can deploy the Oracle Identity and Access Management cluster with unicast configuration.

If multicast IP is disabled in your deployment environment for security reasons, or if you are deploying on cloud infrastructure, you cannot deploy Oracle Identity and Access Management with the default (multicast) configuration. This is because Oracle Identity and Access Management uses EH cache, which depends on the JGroups (JavaGroups) library and uses multicast by default for broadcasting messages.

To perform unicast configuration for EH cache, complete the following steps: