5 Deploying the Unified Topology for Inventory and Automation Service

This chapter describes how to deploy and manage the UTIA service.

Overview of UTIA

Oracle Communications Unified Topology for Inventory and Automation (UTIA) represents the spatial relationships among your inventory entities for the inventory and network topology.

UTIA provides a graphical representation of topology where you can see your inventory and its relationships at the level of detail that meets your needs.

See UTIA Help for more information about the topology visualization.

Use UTIA to view and analyze the network and service data in the form of topology diagrams. UTIA collects this data from UIM.

You use UTIA for the following:

- Viewing the networks and services, along with the corresponding resources, in the form of topological diagrams and graphical maps.

- Planning the network capacity.

- Tracking networks.

- Viewing alarm information.

UTIA Architecture

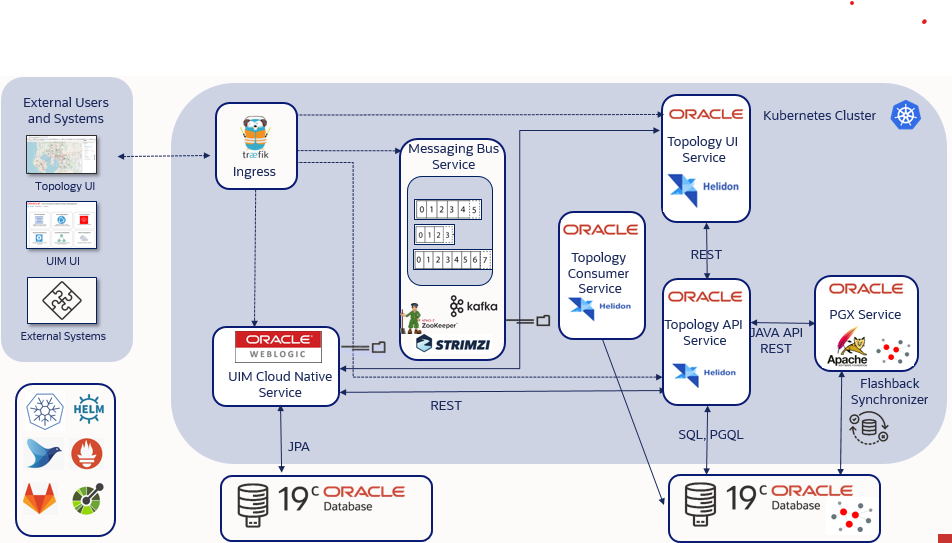

Figure 5-1 shows a high-level architecture of the UTIA service.

UIM as the Producer

UIM communicates with the Topology Service using REST APIs and Kafka Message Bus. UIM is the Producer for Create, Update and Delete operations from UIM that impact Topology. UIM uses REST APIs to communicate directly with the UTIA Service while building the messages and can also continue processing when the Topology Service is unavailable.

Topology as the Consumer

The UTIA service is a consumer for inventory system and assurance system messages. UTIA processes multiple message events including TopologyNodeCreate, TopologyNodeUpdate, TopologyNodeDelete, TopologyEdgeCreate, TopologyEdgeUpdate, TopologyEdgeDelete, TopologyFaultEventCreate, TopologyFaultEventUpdate, TopologyPerformanceEventCreate, TopologyPerformanceEventUpdate.

The service information is updated using the TopologyProfileCreate, TopologyProfileUpdate, and TopologyProfileDelete events.

Topology Graph Database

The UTIA Service communicates with the Oracle Database using the Oracle Property Graph feature with PGQL and standard SQL. It can communicate directly with the database or with the In-Memory Graph for high-performance operations. This converged database feature of Oracle Database makes it possible to use the optimal processing method with a single database. The Graph Database is a separate Pluggable Database (PDB), isolated from the UIM Database, but runs on the same 19c version for simplified licensing.

Creating UTIA Images

You must install the prerequisite software and tools for creating UTIA images.

Prerequisites for Creating UTIA Images

You require the following prerequisites for creating UTIA images:

- Podman on the build machine if the Linux version is 8 or later.

- Docker on the build machine if the Linux version is earlier than 8.

- Unified Topology Builder Toolkit (ref about the deliverables)

- Maven, installed with the PATH variable updated to include the Maven home. Set the PATH variable:

    export PATH=$PATH:$MAVEN_HOME/bin

- Java, installed with JAVA_HOME set in the environment. Set the PATH variable:

    export PATH=$PATH:$JAVA_HOME/bin

- Bash, to enable the `<tab>` command completion feature.

See UIM Compatibility Matrix for details about the required and supported versions of these prerequisite software.
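Before building, it can help to confirm that the prerequisite tools are on the PATH. The following is a minimal sketch, not part of the toolkit: the function name `check_tools` is hypothetical, and the command names in the example call (`podman`, `mvn`, `java`, `bash`) are assumptions about how the tools are installed on your build machine.

```shell
# Report which of the named commands are missing from PATH.
# Prints one "missing: <name>" line per absent tool and
# returns non-zero if at least one tool is absent.
check_tools() {
  status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      status=1
    fi
  done
  return "$status"
}

# Example call (use docker instead of podman on Linux versions earlier than 8):
# check_tools podman mvn java bash
```

This checks presence only. It does not verify versions, which are listed in the UIM Compatibility Matrix.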

Configuring Unified Topology Images

The dependency manifest file describes the input that goes into the Unified Topology images. It is consumed by the image build process. The default configuration in the latest manifest file provides the necessary components for creating the Unified Topology images easily. See "About the Manifest File" for more information.

Creating Unified Topology Service Images

To create the Unified Topology service images:

Note:

See UIM Compatibility Matrix for the latest versions of software.

- Go to WORKSPACEDIR.

- Download the graph server WAR file from Oracle E-Delivery (https://www.oracle.com/database/technologies/spatialandgraph/property-graph-features/graph-server-and-client/graph-server-and-client-downloads.html → Oracle Graph Server <version> → Oracle Graph Webapps <version> for (Linux x86-64)) and copy it to the directory $WORKSPACEDIR/unified-topology-builder/staging/downloads/graph. Ensure that only one copy of PGX.war exists in the …/downloads/graph path.

Note:

The log level is set to debug by default in the graph server WAR file. If required, update the log level to error/info in graph-server-webapp-23.3.0.war/WEB-INF/classes/logback.xml before building images.

- Download tomcat-9.0.62.tar.gz and copy it to $WORKSPACEDIR/unified-topology-builder/staging/downloads/tomcat.

- Download jdk-17.0.7_linux-x64_bin.tar.gz and copy to $WORKSPACEDIR/unified-topology-builder/staging/downloads/java.

- Export the proxies in environment variables and fill in the proxy settings:

    export ip_addr=`ip -f inet addr show eth0|egrep inet|awk '{print $2}'|awk -F/ '{print $1}'`
    export http_proxy=
    export https_proxy=$http_proxy
    export no_proxy=localhost,$ip_addr
    export HTTP_PROXY=
    export HTTPS_PROXY=$HTTP_PROXY
    export NO_PROXY=localhost,$ip_addr

- Update $WORKSPACEDIR/unified-topology-builder/bin/gradle.properties with the required proxies.

    systemProp.http.proxyHost=
    systemProp.http.proxyPort=
    systemProp.https.proxyHost=
    systemProp.https.proxyPort=
    systemProp.http.nonProxyHosts=localhost|127.0.0.1
    systemProp.https.nonProxyHosts=localhost|127.0.0.1

- Uncomment the proxy block in $WORKSPACEDIR/unified-topology-builder/bin/m2/settings.xml and provide the required proxies.

    <proxies>
      <proxy>
        <id>oracle-http-proxy</id>
        <host>xxxxx</host>
        <protocol>http</protocol>
        <nonProxyHosts>localhost|127.0.0.1|xxxxx</nonProxyHosts>
        <port>xxxxx</port>
        <active>true</active>
      </proxy>
    </proxies>

- Copy the UI custom icons to the folder $WORKSPACEDIR/unified-topology-builder/staging/downloads/unified-topology-ui/images if you have any customizations for the service topology icon. For making customizations, see "Customizing the Images".

- Update the image tag in $WORKSPACEDIR/unified-topology-builder/bin/unified_topology_manifest.yaml

- Run the build-all-images script to create the unified topology service images:

    $WORKSPACEDIR/unified-topology-builder/bin/build-all-images.sh

Note:

You can include the above procedure into your CI pipeline as long as the required components are already downloaded to the staging area.
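In a CI pipeline, a quick pre-build check of the staging area can catch the duplicate-WAR problem mentioned in the procedure. The following is a minimal sketch under the assumption that the graph server archive is the only file ending in `.war` in the graph download directory; the function name `has_single_war` is hypothetical and not part of the builder toolkit.

```shell
# Succeed only if the given directory holds exactly one *.war file.
has_single_war() {
  dir=$1
  count=0
  for f in "$dir"/*.war; do
    # When nothing matches, the glob stays literal, so test for existence.
    [ -e "$f" ] && count=$((count + 1))
  done
  [ "$count" -eq 1 ]
}

# Example call before running build-all-images.sh:
# has_single_war "$WORKSPACEDIR/unified-topology-builder/staging/downloads/graph" \
#   || echo "expected exactly one graph server WAR in the staging area"
```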

Post-build Image Management

The Unified Topology image builder creates images with names and tags based on the settings in the manifest file. By default, this results in the following images:

- uim-7.5.1.2.0-unified-topology-base-1.0.0.2.0:latest

- uim-7.5.1.2.0-unified-topology-api-1.0.0.2.0:latest

- uim-7.5.1.2.0-unified-pgx-1.0.0.2.0:latest

- uim-7.5.1.2.0-unified-topology-ui-1.0.0.2.0:latest

- uim-7.5.1.2.0-unified-topology-dbinstaller-1.0.0.2.0:latest

- uim-7.5.1.2.0-unified-topology-consumer-1.0.0.2.0:latest
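The default names above appear to follow the pattern `uim-<UIM version>-<component>-<UTIA version>:<tag>`. That pattern is inferred from the list, not taken from the manifest file, so treat the following helper as an illustrative sketch. The function name `image_ref` is hypothetical.

```shell
# Compose an image reference from its parts.
# $1: UIM version, $2: component name, $3: UTIA version, $4: tag (defaults to "latest")
image_ref() {
  uim_version=$1
  component=$2
  utia_version=$3
  tag=${4:-latest}
  printf 'uim-%s-%s-%s:%s\n' "$uim_version" "$component" "$utia_version" "$tag"
}

# Prints the default API image name from the list above.
image_ref 7.5.1.2.0 unified-topology-api 1.0.0.2.0
```

A helper like this can be useful when tagging and pushing the built images to your own repository with a consistent naming scheme.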

Customizing the Images

Service topology can be customized using a JSON configuration file. See Customizing UTIA Service Topology Configurations from UIM in UIM System Administrator's Guide for more information. As a part of customization, if custom icons are to be used to represent nodes in service topology, they must be placed in the $WORKSPACEDIR/unified-topology-builder/staging/downloads/unified-topology-ui/images/ folder and unified-topology-ui image must be rebuilt.

Creating a Unified Topology Instance

This section describes how to create a Unified Topology service instance in your cloud native environment using the operational scripts and the configuration provided in the common cloud native toolkit.

Before you can create a Unified Topology instance, you must validate your cloud native environment. See "Planning and Validating Your Cloud Environment" for details on prerequisites.

The examples in this section create a basic instance with the project name sr and the instance name quick.

Note:

Project and Instance names cannot contain any special characters.
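The exact set of allowed characters is not spelled out here. The following sketch assumes the strictest reading, lowercase letters and digits only, which the sample names sr and quick satisfy. The function name `is_valid_name` and the character set are assumptions, so adjust them to what your environment actually accepts.

```shell
# Return success if the name is non-empty and contains only
# lowercase letters and digits (an assumed, conservative rule).
is_valid_name() {
  case $1 in
    '' | *[!a-z0-9]*) return 1 ;;
    *) return 0 ;;
  esac
}

# Example: reject a name before passing it to the toolkit scripts.
is_valid_name "my_project" || echo "my_project contains a special character"
```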

Installing Unified Topology Cloud Native Artifacts and Toolkit

Build container images for the following using the Unified Topology cloud native Image Builder:

- Unified Topology Core application

- Unified PGX application

- Unified Topology User Interface application

- Unified Topology database installer

See "Deployment Toolkits" to download the Common cloud native toolkit archive file. Set the variable for the installation directory by running the following command, where $WORKSPACEDIR is the installation directory of the COMMON cloud native toolkit:

    export COMMON_CNTK=$WORKSPACEDIR/common-cntk

Setting up Environment Variables

Unified Topology Service relies on access to certain environment variables to run seamlessly. Ensure the following variables are set in your environment:

- Path to your common cloud native toolkit

- Traefik namespace

To set the environment variables:

- Set the COMMON_CNTK variable to the path of the directory where the common cloud native toolkit is extracted, as follows:

    $ export COMMON_CNTK=$WORKSPACEDIR/common-cntk

- Set the TRAEFIK_NS variable for the Traefik namespace as follows:

    $ export TRAEFIK_NS=<Traefik namespace>

- Set the TRAEFIK_CHART_VERSION variable for the Traefik Helm chart version. See the UIM Compatibility Matrix for the appropriate version. The following is a sample for Traefik chart version 15.1.0:

    $ export TRAEFIK_CHART_VERSION=15.1.0

- Set the SPEC_PATH variable to the location where the application and database YAML files are copied, as follows:

    $ export SPEC_PATH=$WORKSPACEDIR/utia_spec_dir
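A short guard at the top of your own wrapper scripts can confirm that these variables are set before any toolkit script runs. The following is a minimal sketch; the function name `require_vars` is hypothetical and not provided by the toolkit.

```shell
# Print "unset: <NAME>" for every named variable that is empty or unset,
# and return non-zero if any is missing.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup of the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "unset: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Example call covering the variables described above:
# require_vars COMMON_CNTK TRAEFIK_NS TRAEFIK_CHART_VERSION SPEC_PATH || exit 1
```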

Registering the Namespace

After you set the environment variables, register the namespace.

To register the namespace, run the following command:

$COMMON_CNTK/scripts/register-namespace.sh -p sr -t targets

# For example, $COMMON_CNTK/scripts/register-namespace.sh -p sr -t traefik

# Where the targets are separated by a comma without extra spaces

Note:

traefik is the name of the target for registration of the namespace sr. The script uses TRAEFIK_NS to find these targets. Do not provide the Traefik target if you are not using Traefik.

Creating Secrets

You must store sensitive data and credential information in the form of Kubernetes Secrets that the scripts and Helm charts in the toolkit consume. Managing secrets is out of the scope of the toolkit and must be implemented while adhering to your organization's corporate policies. Additionally, Unified Topology service does not establish password policies.

Note:

The passwords and other input data that you provide must adhere to the policies specified by the appropriate component.

As a prerequisite to use the toolkit for either installing the Unified Topology database or creating a Unified Topology instance, you must create secrets to access the following:

- UTIA Database

- UIM Instance Credentials

- Secret for UTIA API

- Secret for UTIA UI

- OAM Authentication server details

- Truststore secret for OAM server

The toolkit provides sample scripts to create these secrets. Use these scripts for quick, manual creation of an instance. They do not support any automated process for creating instances. The scripts also illustrate both the naming of the secret and the layout of the data within the secret that Unified Topology requires. You must create the secrets before running the install-database.sh or create-applications.sh scripts.

Creating Secrets for Unified Topology Database Credentials

The database secret specifies the connectivity details and the credentials for connecting to the Unified Topology PDB (Unified Topology schema). This is consumed by the Unified Topology DB installer and Unified Topology runtime.

- Run the following script to create the required secrets:

    $COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology create database

- Enter the corresponding values as prompted:

- TOPOLOGY DB Admin(sys) Username: Provide Topology Database admin username

- TOPOLOGY DB Admin(sys) Password: Provide Topology Database admin password

- TOPOLOGY Schema Username: Provide username for Unified Topology schema to be created

- TOPOLOGY Schema Password: Provide Unified Topology schema password

- TOPOLOGY DB Host: Provide Unified Topology Database Hostname

- TOPOLOGY DB Port: Provide Unified Topology Database Port

- TOPOLOGY DB Service Name: Provide Unified Topology Service Name

- PGX Client Username: Provide username for PGX Client User to be created

- PGX Client Password: Provide PGX Client Password

- Verify that the following secret is created:

    sr-quick-unified-topology-db-credentials

Creating Secrets for UIM Credentials

The UIM secret specifies the credentials for connecting to the UIM application. This is consumed by Unified Topology runtime.

- Run the following script to create the UIM secret:

    $COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology create uim

- Enter the credentials and the corresponding values as prompted. The credentials should be as shown in the following example:

    Provide UIM credentials ...(Format should be http://<host>:<port>)
    UIM URL: Provide UIM Application URL, sample https://quick.sr.uim.org:30443
    UIM Username: Provide UIM username
    UIM Password: Provide UIM password
    Is provided UIM a Cloud Native Environment ? (Select number from menu)
    1) Yes
    2) No
    #? 1
    Provide UIM Cluster Service name (Format <project>-<instance>-cluster-uimcluster.<project>.svc.cluster.local)
    UIM Cluster Service name: sr-quick-cluster-uimcluster.sr.svc.cluster.local #Provide UIM Cluster Service name.

Note:

- If OAUTH is enabled on the UIM instance, enter the UIM URL in the format https://<instance>.<project>.ohs.<oam-host-suffix>:<loadbalancerport>

- If OAUTH is not enabled on the UIM instance, enter the UIM URL in the format https://<uim-instance>.<uim-project>.uim.org:<loadbalancerport>

- Verify that the following secret is created:

    sr-quick-unified-topology-uim-credentials

Creating Secrets for Authentication on Unified Topology API

The appUsers secret specifies authentication configuration for Unified Topology API.

- Update $COMMON_CNTK/samples/credentials/topology-user-credentials.yaml with the authentication configuration:

    security:
      enabled: true #set enabled flag to true to enable authentication
      providers:
        - oidc:
            identity-uri: "https://<oam-instance>.<oam-project>.ohs.<oam-host-suffix>:<port>" #Provide OHS service URL
            base-scopes: "UnifiedRserver.Info openid" #Provide scope of the created resource
            client-id: topologyClient #Provide name of the client-id created
            client-secret: xxxx #Provide Client Secret
            token-endpoint-auth: CLIENT_SECRET_POST
            cookie-name: "OIDC_SESSION"
            cookie-same-site: "Lax"
            header-use: true
            audience: "UnifiedRserver" #Provide audience details
            redirect: true
            redirect-uri: "/topology"

- Run the following script to create the appUsers secret:

    $COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology create appUsers

- Enter the appropriate values at the prompts:

    - Provide App User Credentials for sr-quick.

    - Enter the app credentials file: $COMMON_CNTK/samples/credentials/topology-user-credentials.yaml.

- Verify that the following secret is created:

    sr-quick-unified-topology-user-credentials

Creating Secrets for Authentication on Unified Topology UI

The appUIUsers secret specifies authentication configuration for Unified Topology UI application.

- Update $COMMON_CNTK/samples/credentials/topology-ui-user-credentials.yaml with the authentication configuration.

Note:

tokenExpiry is set while creating an identity domain during OAM setup. If it was set to a value other than the default, uncomment the sessiontimeout property and set it to the same value as tokenExpiry. See "Deploying the Common Authentication Service" for more information.

topology-ui-user-credentials.yaml

    security:
      enabled: true #set enabled flag to true to enable authentication
      providers:
        - oidc:
            identity-uri: "https://<oam-instance>.<oam-project>.ohs.<oam-host-suffix>:<port>" #Provide OHS service URL
            base-scopes: "UnifiedRserver.Info openid" #Provide scope of the created resource
            client-id: topologyClient #Provide name of the client-id created
            client-secret: xxxx #Provide Client Secret
            token-endpoint-auth: CLIENT_SECRET_POST
            cookie-name: "OIDC_SESSION"
            cookie-same-site: "Lax"
            header-use: true
            audience: "UnifiedRserver" #Provide audience details
            redirect: true
            redirect-uri: "/redirect/unified-topology-ui"
            logout-enabled: true
            #The following values are needed when logout is enabled for OIDC
            logout-uri: "/oidc/logout"
            post-logout-uri: apps/unified-topology-ui
            #provide server logout else it is going to userlogout and displaying error page of OAM
            logout-endpoint-uri: "https://<oam-instance>.<oam-project>.ohs.<oam-host-suffix>:<port>/oam/server/logout" #Provide oidc logout, update <loadbalancerport> value and ohshostname.
            cookie-encryption-password: "lpmaster"
            #uncomment and set the value of the property sessiontimeout same as the value of tokenExpiry, if tokenExpiry is set with different value than default while creating an identity domain during OAM setup.
            #sessiontimeout: 3600

- Run the following script to create the appUIUsers secret:

    $COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology create appUIUsers

- Enter the Topology UI User Credentials for 'sr-quick'.

- Enter the app credentials file: $COMMON_CNTK/samples/credentials/topology-ui-user-credentials.yaml #Provide path to the topology-ui-user-credentials.yaml.

- Verify that the following secret is created:

    sr-quick-unified-topology-ui-user-credentials
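The four secret names verified so far share the form `<project>-<instance>-unified-topology-<kind>-credentials`. The following sketch generalizes that pattern and prints one `kubectl get secret` command per secret so you can run them yourself. The function name `secret_name` is hypothetical, and using the project name sr as the Kubernetes namespace is an assumption based on the other commands in this chapter.

```shell
# Build a secret name from project, instance, and kind.
# Kinds seen in this chapter: db, uim, user, ui-user.
secret_name() {
  printf '%s-%s-unified-topology-%s-credentials\n' "$1" "$2" "$3"
}

# Print (do not run) a verification command for each secret.
for kind in db uim user ui-user; do
  echo "kubectl get secret $(secret_name sr quick "$kind") -n sr"
done
```

Running a printed command returns the secret's metadata if it exists, or a "not found" error if the corresponding script has not been run yet.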

Creating Secrets for Authentication Server Details

The OAuth secret specifies details of the authentication server. It is used by Unified Topology to connect to Message Bus Bootstrap service. See "Adding Common OAuth Secret and ConfigMap" for more information.

Creating Secrets for SSL enabled on traditional UIM truststore

The inventorySSL secret stores the truststore file of the SSL-enabled traditional UIM. It is required only if authentication is not enabled on topology and you want to integrate topology with a traditional UIM instance.

- Create the truststore file using UIM certificates, and enable SSL on UIM. See UIM System Administrator's Guide for more information.

- Once you have the certificate of the traditional UIM, run the following command to create the truststore:

    keytool -importcert -v -alias uimonprem -file ./cert.pem -keystore ./uimtruststore.jks -storepass *******

- After creating uimtruststore.jks, run the following command to create the inventorySSL secret, passing the truststore created above:

    $COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology create inventorySSL

The system prompts for the truststore file location and the passphrase for the truststore. Provide appropriate values.

Installing Unified Topology Service Schema

To install the Unified Topology schema:

- Update the values under unified-topology-dbinstaller in the $SPEC_PATH/sr/quick/database.yaml file with the values required for unified topology schema creation.

Note:

- The YAML formatting is case-sensitive. Use a YAML editor to ensure that you do not make any syntax errors while editing. Follow the indentation guidelines for YAML.

- Before changing the default values provided in the specification file, verify that they align with the values used during PDB creation. For example, the default tablespace name should match the value used when PDB is created.

- Edit the database.yaml file and update the DB installer image to point to the location of your image as follows:

    unified-topology-dbinstaller:
      dbinstaller:
        image: DB_installer_image_in_your_repo
        tag: DB_installer image tag in your repo

- If your environment requires a password to download the container images from your repository, create a Kubernetes secret with the Docker pull credentials. See "Kubernetes documentation" for details. Reference the secret name in database.yaml. Provide the image pull secret and image pull policy details.

    unified-topology-dbinstaller:
      imagePullPolicy: Never
      # The image pull access credentials for the "docker login" into Docker repository, as a Kubernetes secret.
      # Uncomment and set if required.
      # imagePullSecret: ""

- Run the following script to start the Unified Topology DB installer, which instantiates a Kubernetes pod resource. The pod resource lives until the DB installation operation completes.

    $COMMON_CNTK/scripts/install-database.sh -p sr -i quick -f $SPEC_PATH/sr/quick/database.yaml -a unified-topology -c 1

- You can run the script with -h to see the available options.

- Check the console to see if the DB installer is installed successfully.

- If the installation has failed, run the following command to review the error message in the log:

    kubectl logs -n sr sr-quick-unified-topology-dbinstaller

- Clear the failed pod by running the following command:

    helm uninstall sr-quick-unified-topology-dbinstaller -n sr

- Run the install-database script again to install the Unified Topology DB installer.
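The recovery sequence after a failed DB installer run (review the log, clear the failed release, rerun) can be captured for other project and instance names. The following sketch only prints the commands and runs nothing. It assumes the release name always follows `<project>-<instance>-unified-topology-dbinstaller`, as in the sr/quick example, and the function name `dbinstaller_recovery_cmds` is hypothetical.

```shell
# Print the three recovery commands for a failed DB installer run.
# $1: project (also used as the namespace), $2: instance
dbinstaller_recovery_cmds() {
  project=$1
  instance=$2
  release="$project-$instance-unified-topology-dbinstaller"
  printf '%s\n' "kubectl logs -n $project $release"
  printf '%s\n' "helm uninstall $release -n $project"
  # COMMON_CNTK and SPEC_PATH are left unexpanded on purpose.
  printf '%s\n' "\$COMMON_CNTK/scripts/install-database.sh -p $project -i $instance -f \$SPEC_PATH/$project/$instance/database.yaml -a unified-topology -c 1"
}

dbinstaller_recovery_cmds sr quick
```

Printing instead of executing keeps the review of the log a deliberate manual step before the release is removed.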

Configuring the applications.yaml File

The applications.yaml file is a Helm override values file to override default values of unified topology chart. Update values under chart unified-topology in $SPEC_PATH/<PROJECT>/<INSTANCE>/applications.yaml to override the default values.

The applications.yaml file provides a section for values that are common to all microservices. Values that you provide under that common section apply to all services.

Note:

Common values for the microservices are specified in applications.yaml and database.yaml. To override a common value for one microservice, specify the value under the chart name of that microservice. If the value under the chart is empty, the common value is used.

To configure the project specification:

- Edit the applications.yaml to provide the image in your repository (name and tag) by running the following command:

    vi $SPEC_PATH/<PROJECT>/<INSTANCE>/applications.yaml

    ** edit the topologyApiName, pgxName, uiName to reflect the Unified Topology image names and location in your docker repository
    ** edit the topologyApiTag, pgxTag, uiTag to reflect the Unified Topology image tags in your docker repository

    unified-topology:
      image:
        topologyApiName: uim-7.5.1.2.0-unified-topology-api-1.0.0.2.0
        pgxName: uim-7.5.1.2.0-unified-pgx-1.0.0.2.0
        uiName: uim-7.5.1.2.0-unified-topology-ui-1.0.0.2.0
        topologyConsumerName: uim-7.5.1.2.0-unified-topology-consumer-1.0.0.2.0
        topologyApiTag: latest
        pgxTag: latest
        uiTag: latest
        topologyConsumerTag: latest

- If your environment requires a password to download the container images from your repository, create a Kubernetes secret with the Docker pull credentials. See the "Kubernetes documentation" for details. Reference the secret name in the applications.yaml.

    # The image pull access credentials for the "docker login" into Docker repository, as a Kubernetes secret.
    # uncomment and set if required.
    unified-topology:
    # imagePullSecret:
    #   imagePullSecrets:
    #     - name: regcred

- Set the pull policy for the unified topology images in applications.yaml. Set pullPolicy to Always if the image is updated.

    unified-topology:
      image:
        pullPolicy: Never

- Update loadbalancerhost and loadbalancerport in applications.yaml. If no external load balancer is configured for the instance, change the value of loadbalancerport to the default Traefik NodePort and loadbalancerhost to the worker node IP. If TLS is enabled on Unified Topology, the Traefik NodePort is 30443; if TLS is disabled, it is 30305. If you use Oracle Cloud Infrastructure LBaaS, or any other external load balancer, set loadbalancerport to 443 if TLS is enabled and to 80 otherwise, and update the value of loadbalancerhost appropriately. loadbalancerhost and loadbalancerport are common values for all services.

    #provide loadbalancer host and port
    loadbalancerhost: 100.76.135.13
    loadbalancerport: 30305

- To enable authentication, set the authentication.enabled flag to true. Provide the OHS server hostname if authentication is enabled. The authentication.enabled flag and ohsHostname are common values for all services.

    # The enabled flag is to enable or disable authentication
    authentication:
      enabled: true

    #Provide ohs server hostname
    ohsHostname: <instance>.<project>.ohs.<oam-host-suffix>

- If authentication is not enabled on UTIA and you want to integrate UTIA with a traditional SSL-enabled UIM, create the inventorySSL secret and enable the isInventorySSL flag in applications.yaml as shown below:

    # make it true if using on prem inventory with ssl port enabled and authentication is not enabled on topology
    # always false for Cloud Native inventory
    # not required in production environment
    isInventorySSL: true
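The loadbalancerport rule from the steps above reduces to a two-by-two decision on the load balancer type and the TLS setting. The following sketch encodes the four port numbers stated in this section. The function name `loadbalancer_port` and its argument conventions are hypothetical.

```shell
# Choose the loadbalancerport value.
# $1: "external" for OCI LBaaS or another external load balancer,
#     anything else for the default Traefik NodePort
# $2: "true" if TLS is enabled on Unified Topology
loadbalancer_port() {
  if [ "$1" = external ]; then
    if [ "$2" = true ]; then echo 443; else echo 80; fi
  else
    if [ "$2" = true ]; then echo 30443; else echo 30305; fi
  fi
}

# Example: Traefik NodePort without TLS, as in the sample above.
loadbalancer_port nodeport false
```

If your Traefik installation uses non-default NodePorts, the two NodePort values need to be adjusted accordingly.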

Configuring Unified Topology Application Properties

Sample configuration files topology-static-config.yaml.sample, topology-dynamic-config.yaml.sample are provided as follows:

- The sample files for Topology API service are added in $COMMON_CNTK/charts/unified-topology-app/charts/unified-topology/config/topology-api.

- The sample files for Topology Consumer service are added in $COMMON_CNTK/charts/unified-topology-app/charts/unified-topology/config/topology-consumer.

To override configuration properties, copy the sample static property file to topology-static-config.yaml and the sample dynamic property file to topology-dynamic-config.yaml. Provide a key and value to override the default out-of-the-box value of any system configuration property. The properties defined in the property files are fed into the container using Kubernetes configuration maps. Any changes to these properties require the instance to be upgraded. Pods are restarted after configuration changes to topology-static-config.yaml.

Max Rows

Modify the following setting to limit the number of records returned in LIMIT queries:

    topology:
      query:
        maxrows: 5000

Date Format

Any modifications to the date format used by all dates must be consistently applied to all consumers of the APIs.

    topology:
      api:
        dateformat: yyyy-MM-dd'T'HH:mm:ss.SSS'Z'

Alarm Types

The out of the box alarm types utilize industry standard values. If you want to display a different value, modify the value accordingly:

For example: To modify the COMMUNICATIONS_ALARM change the value to COMMUNICATIONS_ALARM: Communications

    alarm-types:
      COMMUNICATIONS_ALARM: COMMUNICATIONS_ALARM
      PROCESSING_ERROR_ALARM: PROCESSING_ERROR_ALARM
      ENVIRONMENTAL_ALARM: ENVIRONMENTAL_ALARM
      QUALITY_OF_SERVICE_ALARM: QUALITY_OF_SERVICE_ALARM
      EQUIPMENT_ALARM: EQUIPMENT_ALARM
      INTEGRITY_VIOLATION: INTEGRITY_VIOLATION
      OPERATIONAL_VIOLATION: OPERATIONAL_VIOLATION
      PHYSICAL_VIOLATION: PHYSICAL_VIOLATION
      SECURITY_SERVICE: SECURITY_SERVICE
      MECHANISM_VIOLATION: MECHANISM_VIOLATION
      TIME_DOMAIN_VIOLATION: TIME_DOMAIN_VIOLATION

Event Status

UTIA supports 3 types of events: 'Raised' for new events, 'Updated' for existing events with updated information and 'Cleared' for events that have been Closed.

To modify the 'CLEARED' event change the value to CLEARED: closed

    event-status:
      CLEARED: CLEARED
      RAISED: RAISED
      UPDATED: UPDATED

Event Severity

UTIA supports various types of event severity on a Device. The severity from most severe to least severe is CRITICAL(1), MAJOR(5), WARNING(10), INDETERMINATE(15), MINOR(20), CLEARED(25) and None(999).

Internally, a numeric value is used to identify the severity hierarchy. The top three most severe events are tracked in UTIA.

To modify the 'INDETERMINATE' severity, change the value to INDETERMINATE: moderate

    severity:
      CLEARED: CLEARED
      INDETERMINATE: INDETERMINATE
      CRITICAL: CRITICAL
      MAJOR: MAJOR
      MINOR: MINOR
      WARNING: WARNING
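To illustrate how a numeric rank orders severities, the following sketch maps each severity key to the number given in this section and picks the three most severe from a list. It uses INDETERMINATE, the key that appears in the severity mapping, for rank 15. How UTIA selects the top three internally is not described here, so this is only an approximation of the idea, and the function names `severity_rank` and `top_three` are hypothetical.

```shell
# Map a severity key to its numeric rank (lower means more severe).
severity_rank() {
  case $1 in
    CRITICAL) echo 1 ;;
    MAJOR) echo 5 ;;
    WARNING) echo 10 ;;
    INDETERMINATE) echo 15 ;;
    MINOR) echo 20 ;;
    CLEARED) echo 25 ;;
    *) echo 999 ;;  # None or unknown
  esac
}

# Print the three most severe of the given severities, most severe first.
top_three() {
  for s in "$@"; do
    printf '%s %s\n' "$(severity_rank "$s")" "$s"
  done | sort -n | head -n 3 | cut -d' ' -f2
}

top_three MINOR CRITICAL CLEARED WARNING
```

Note that the ranks place WARNING (10) ahead of MINOR (20), so a WARNING event outranks a MINOR one in this ordering.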

Path Analysis Cost Values

UTIA supports 3 different types of numeric cost values for each edge/connectivity maintained in topology. The cost type label is configured based on your business requirements and data available.

You select the cost parameter to evaluate while using path analysis. The cost values are maintained externally using the REST APIs.

To modify 'costValue3' from Distance to Packet Loss change the value to costValue3: PacketLoss after updating the data values.

    pathAnalysis:
      costType:
        costValue1: Jitter
        costValue2: Latency
        costValue3: Distance

Path Analysis Alarms

Alarms can be used by path analysis to exclude devices in the returned paths. The default setting is to exclude devices with any alarm.

To allow Minor and Greater alarms modify the setting to:

excludeAlarmTypes: Critical and Greater, Major and Greater

All Paths Limit

To improve the response time, modify the max number of paths returned when using 'All' Paths.

Topology Consumer

Reduce the Poll size for Retry and dlt Topic

Uncomment or add the configuration values in topology-config.yaml and upgrade the Topology Consumer service.

Maximum Poll Interval and Records

Edit max.poll.interval.ms to increase or decrease the delay between invocations of poll() when using consumer group management and max.poll.records to increase or decrease the maximum number of records returned in a single call to poll().

    mp.messaging:
      incoming:
        toInventoryChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 500
        toFaultChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 500
        toRetryChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 200
        toDltChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 100

Partition assignment strategy

The PartitionAssignor is the class that decides which partitions are assigned to which consumer. While creating a new Kafka consumer, you can configure the strategy that can be used to assign the partitions amongst the consumers. You can set it using the configuration partition.assignment.strategy. The partition re-balance (moving partition ownership from one consumer to another) happens, in case of:

- Addition of new Consumer to the Consumer group.

- Removal of Consumer from the Consumer group.

- Addition of New partition to the existing topic.

To change the partition assignment strategy, update the topology-config.yaml for topology consumer and redeploy the POD. The below example configuration shows the CooperativeStickyAssignor strategy. For list of supported partition assignment strategies, see partition.assignment.strategy in Apache Kafka documentation.

    mp.messaging:
      connector:
        helidon-kafka:
          partition.assignment.strategy: org.apache.kafka.clients.consumer.CooperativeStickyAssignor

Integrate Unified Topology Service with Message Bus Service

To integrate Unified Topology API service with Message Bus service:

- In the file $SPEC_PATH/sr/quick/applications.yaml, uncomment the section messagingBusConfig.

- Provide namespace and instance name on which the Messaging Bus service is deployed.

- Security protocol is SASL_PLAINTEXT if authentication is enabled on Message bus service. If authentication is not enabled on the Message Bus service, the security protocol is PLAINTEXT.

A sample configuration when authentication is enabled and Messaging Bus is deployed on instance 'quick' and namespace 'sr' is as follows:

applications.yaml

    authentication:
      enabled: true
    messagingBusConfig:
      namespace: sr
      instance: quick

Creating a Unified Topology Instance

To create a Unified Topology instance in your environment using the scripts that are provided with the toolkit:

- Run the following command to create a UTIA instance:

    $COMMON_CNTK/scripts/create-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology

The create-applications script uses the helm chart located in $COMMON_CNTK/charts/unified-topology-app to create and deploy a unified-topology service.

- If the scripts fail, see the Troubleshooting Issues section at the end of this topic before you make additional attempts.

Accessing Unified Topology

Proxy Settings

To set the proxy settings:

- In the browser's network no-proxy settings, include *<hostSuffix>. For example, *uim.org.

- In /etc/hosts, include the following entry:

<k8s cluster ip or loadbalancerIP> <instance>.<project>.topology.<hostSuffix>

For example:

<k8s cluster ip or external loadbalancer ip> quick.sr.topology.uim.org

Exercise Unified Topology service endpoints

If TLS is enabled on Unified Topology, exercise endpoints using Hostname <topology-instance>.<topology-project>.topology.uim.org.

Unified Topology UI endpoint format: https://<topology-instance>.<topology-project>.topology.<hostSuffix>:<port>/apps/unified-topology-ui

Unified Topology API endpoint format: https://<topology-instance>.<topology-project>.topology.<hostSuffix>:<port>/topology/v2/vertex

- Unified Topology UI endpoint: https://quick.sr.topology.uim.org:30443/apps/unified-topology-ui

- Unified Topology API endpoint: https://quick.sr.topology.uim.org:30443/topology/v2/vertex

If TLS is not enabled on Unified Topology, exercise endpoints:

Unified Topology UI endpoint format: http://<topology-instance>.<topology-project>.topology.<hostSuffix>:<port>/apps/unified-topology-ui

Unified Topology API endpoint format: http://<topology-instance>.<topology-project>.topology.<hostSuffix>:<port>/topology/v2/vertex
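The endpoint formats above can be composed mechanically from the instance name, project name, host suffix, and port. The following is a minimal Python sketch of that composition, not part of the toolkit; the function name topology_endpoints and the non-TLS port 30080 used in the usage lines are hypothetical.

```python
def topology_endpoints(instance, project, host_suffix, port, tls_enabled=True):
    """Build the Unified Topology UI and API URLs from the documented format.

    The scheme is https when TLS is enabled on Unified Topology, http otherwise.
    """
    scheme = "https" if tls_enabled else "http"
    host = f"{instance}.{project}.topology.{host_suffix}"
    base = f"{scheme}://{host}:{port}"
    return {
        "host": host,
        "ui": f"{base}/apps/unified-topology-ui",
        "api": f"{base}/topology/v2/vertex",
    }


if __name__ == "__main__":
    # Values from this chapter's examples: instance 'quick', project 'sr'.
    for name, value in topology_endpoints("quick", "sr", "uim.org", 30443).items():
        print(f"{name}: {value}")
    # Hypothetical non-TLS port, for illustration only.
    print(topology_endpoints("quick", "sr", "uim.org", 30080, tls_enabled=False)["api"])
```

The returned host value is the name that must resolve through /etc/hosts or DNS for the URLs to be reachable.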

Validating the Unified Topology Instance

To validate the UTIA instance:

- Run the following command to check the status of the deployed unified-topology instance:

$COMMON_CNTK/scripts/application-status.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology

The application-status script returns the status of the unified topology service deployments and pods.

- Use the following endpoint to monitor the health of unified-topology:

https://<loadbalancerhost>:<loadbalancerport>/unified-topology/health

- To exercise the Unified Topology service endpoints, add the following entry to /etc/hosts:

<k8s cluster ip or external loadbalancer ip> quick.sr.topology.uim.org

- Unified Topology UI endpoint: https://quick.sr.topology.uim.org:30443/apps/unified-topology-ui

- Unified Topology API endpoint: https://quick.sr.topology.uim.org:30443/topology/v2/vertex

Deploying the Graph Server Instance

A Graph Server (PGX Server) instance is needed for Path Analysis. By default, the replicaCount of the pgx (graph) server pods is set to '0'. For path analysis to function, set the replicaCount of the pgx pods to '2' and upgrade the instance. See "Upgrading the Unified Topology Instance" for more information.

A cron job must be scheduled to periodically reload the active unified-topology-pgx pod.

pgx:
  pgxName: "unified-pgx"
  replicaCount: 2
  java:
    user_mem_args: "-Xms8000m -Xmx8000m -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/logMount/$(APP_PREFIX)/unified-topology/unified-pgx/"
    gc_mem_args: "-XX:+UseG1GC"
    options:
  resources:
    limits:
      cpu: "4"
      memory: 16Gi
    requests:
      cpu: 3500m
      memory: 16Gi

Scheduling the Graph Server Restart CronJob

After the instance is created successfully, schedule a cron job that restarts the unified-topology-pgx pods. At each scheduled run, one of the unified-topology-pgx pods is restarted and all incoming requests are routed seamlessly to the other unified-topology-pgx pod.

Update the script $COMMON_CNTK/samples/cronjob-scripts/pgx-restart.sh to include the required environment variables: KUBECONFIG, pgx_ns, and pgx_instance. For a basic instance, pgx_ns is sr and pgx_instance is quick.

export KUBECONFIG=<kube config path>

export pgx_ns=<unified-topology project name>

export pgx_instance=<unified-topology instance name>

pgx_pods=`kubectl get pods -n $pgx_ns --sort-by=.status.startTime -o name | awk -F "/" '{print $2}' | grep $pgx_instance-unified-pgx`

pgx_pod_arr=( $pgx_pods )

echo "Deleting pod - ${pgx_pod_arr[0]}"

kubectl delete pod ${pgx_pod_arr[0]} -n $pgx_ns --grace-period=0
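The selection step in the script above (sort pods by start time, keep the names that contain <instance>-unified-pgx, take the first one) can be sketched in Python as follows. This is an illustration of the selection logic only, not a replacement for the script; the function name and the pod names in the usage lines are hypothetical.

```python
from datetime import datetime


def oldest_pgx_pod(pods, instance):
    """Return the name of the oldest pod whose name contains '<instance>-unified-pgx'.

    pods is an iterable of (pod_name, start_time) tuples. Returns None when no
    pod matches, mirroring an empty result from the kubectl/grep pipeline.
    """
    marker = f"{instance}-unified-pgx"
    matching = [pod for pod in pods if marker in pod[0]]
    if not matching:
        return None
    # The earliest start time corresponds to the first entry of
    # 'kubectl get pods --sort-by=.status.startTime'.
    return min(matching, key=lambda pod: pod[1])[0]


if __name__ == "__main__":
    sample = [
        ("sr-quick-unified-pgx-b", datetime(2024, 1, 2)),
        ("sr-quick-unified-pgx-a", datetime(2024, 1, 1)),
        ("sr-quick-unified-topology-api-x", datetime(2023, 12, 1)),
    ]
    print("Pod selected for restart:", oldest_pgx_pod(sample, "quick"))
```

Because only the oldest matching pod is deleted on each run, the other PGX pod keeps serving requests while the deleted one is recreated.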

The following crontab entry runs the script every day at midnight. The schedule may vary depending on the volume of data.

The variable $COMMON_CNTK must be set in the environment where the cron job runs. Otherwise, replace $COMMON_CNTK with the complete path.

crontab -e

0 0 * * * $COMMON_CNTK/samples/cronjob-scripts/pgx-restart.sh > $COMMON_CNTK/samples/cronjob-scripts/pgx-restart.log

Affinity on Graph Server

If multiple PGX pods are scheduled on the same worker node, the memory consumption by these PGX pods becomes very high. To address this, include the following affinity rule in applications.yaml, under the unified-topology chart to avoid scheduling of multiple PGX pods on the same worker node.

The following podAntiAffinity rule uses the app=<topology-project>-<topology-instance>-unified-pgx label. Update the label with the corresponding project and instance names for the UTIA service. For example: sr-quick-unified-pgx.

unified-topology:
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - <topology-project>-<topology-instance>-unified-pgx
        topologyKey: "kubernetes.io/hostname"

Upgrading the Unified Topology Instance

Upgrading Unified Topology is required when you update the applications.yaml, topology-static-config.yaml, or topology-dynamic-config.yaml configuration files.

Run the following command to upgrade the unified topology service:

$COMMON_CNTK/scripts/upgrade-applications.sh -p sr -i quick -f $COMMON_CNTK/samples/applications.yaml -a unified-topology

After the script completes, validate the unified topology service by running the application-status script.

Restarting the Unified Topology Instance

To restart the Unified Topology instance:

- Run the following command to restart the unified topology service:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology -r all

- After running the script, validate the unified topology service by running the application-status script.

- To restart only unified-topology-api, unified-topology-ui, unified-pgx, or unified-topology-consumer, run the same command and pass -r with the service name as follows:

- To restart Unified Topology API:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology -r unified-topology-api

- To restart Unified Topology PGX:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology -r unified-pgx

- To restart Unified Topology UI:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology -r unified-topology-ui

- To restart Unified Topology Consumer:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology -r unified-topology-consumer

Alternate Configuration Options for UTIA

You can configure UTIA using the following alternate options.

Setting up Secure Communication using TLS

When the Unified Topology service is involved in secure communication with other systems, either as the server or as the client, you should additionally configure SSL/TLS. The procedures for setting up TLS use self-signed certificates for demonstration purposes. Adapt the steps as necessary to use signed certificates.

To set up secure communication using TLS:

- Generate the keystore by passing as inputs the commoncert.pem and commonkey.pem files that were generated during OAM setup. Provide -name "param".

openssl pkcs12 -export -in $COMMON_CNTK/certs/commoncert.pem -inkey $COMMON_CNTK/certs/commonkey.pem -out $COMMON_CNTK/certs/keyStore.p12 -name "topology"

- Edit $SPEC_PATH/sr/quick/applications.yaml and set tls enabled to true. Set the TLS strategy to either TERMINATE or REENCRYPT. The TLS strategy should be REENCRYPT if authentication is enabled using an OHS service that is enabled with SSL.

tls:
  # The enabled flag is to enable or disable the TLS support for the unified topology m-s end points
  enabled: true
  # valid values are TERMINATE, REENCRYPT
  strategy: "REENCRYPT"

Note:

The TLS terminate strategy requires the ingressTLS secret to be created. The TLS reencrypt strategy requires both the ingressTLS and appKeystore secrets to be created.

- Create the ingressTLS secret to pass the generated certificate and key PEM files.

$COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology create ingressTLS

- The script prompts for the following details:

- Ingress TLS Certificate Path (PEM file): <path_to_cert.pem>

- Ingress TLS Key file Path (PEM file): <path_to_key.pem>

- Create the appKeystore secret to pass the generated keystore file.

$COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology create appKeystore

- The script prompts for the following details:

- App TLS Keystore Passphrase: <export password value passed while creating keyStore.p12>

- App TLS Keystore Key Alias: <-name "param" passed while creating keyStore.p12>

- App TLS Keystore PrivateKey Path: <path to keyStore.p12>

- Verify that the following secrets are created successfully:

sr-quick-unified-topology-ingress-tls-cert-secret

sr-quick-unified-topology-keystore

- Create the Unified Topology instance as usual. Access the Topology endpoints using the host name <topology-instance>.<topology-project>.topology.uim.org.

- Add the following entry in /etc/hosts:

<k8s cluster ip or external loadbalancer ip> quick.sr.topology.uim.org

- Unified Topology UI endpoint: https://quick.sr.topology.uim.org:30443/apps/unified-topology-ui

- Unified Topology API endpoint: https://quick.sr.topology.uim.org:30443/topology/v2/vertex
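As a quick reference for the TLS strategies described in this section, the following Python sketch lists which secrets must exist for each strategy. It is an illustration under stated assumptions, not toolkit code: the function name is hypothetical, and the secret name pattern <project>-<instance>-unified-topology-... is generalized from the sr-quick example names shown in the procedure.

```python
def required_tls_secrets(project, instance, strategy):
    """Return the secret names expected for the given TLS strategy.

    TERMINATE needs only the ingress TLS secret; REENCRYPT needs both the
    ingress TLS secret and the application keystore secret.
    """
    prefix = f"{project}-{instance}-unified-topology"
    ingress_secret = f"{prefix}-ingress-tls-cert-secret"
    keystore_secret = f"{prefix}-keystore"

    normalized = strategy.upper()
    if normalized == "TERMINATE":
        return [ingress_secret]
    if normalized == "REENCRYPT":
        return [ingress_secret, keystore_secret]
    raise ValueError(f"Unsupported TLS strategy: {strategy!r}")


if __name__ == "__main__":
    for name in required_tls_secrets("sr", "quick", "REENCRYPT"):
        print(name)
```

Comparing this list against the secrets present in the namespace before creating the instance can catch a missing keystore secret early.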

Setting up Secure Outgoing Communication using TLS

As part of the secret created in the section "Creating Secrets for Authentication Server details", a truststore is created by adding the OAM server certificate. This enables secure communication between OAM and UTIA applications.

Similarly, to enable secure outgoing communication between another server and UTIA, perform the following steps, which reuse the procedure in the section "Creating Secrets for Authentication Server details":

- Add the server certificates to the truststore.

- Recreate the secret using "Creating Secrets for Authentication Server details".

- Upgrade the UTIA instance to pick up the latest truststore from the secret. To upgrade UTIA, see the "Upgrading the Unified Topology Instance" section.

For example, to enable SSL outgoing communication from UTIA to a UIM on-premises application, add the UIM certificates to the truststore, recreate the secret, and upgrade the UTIA application.

Note:

Follow the standard procedure for certificate creation. If UIM Inventory is accessed using the IP address or host name of the machine, the UIM certificate should contain that IP address or host name as a subject alternative name. The following sample command creates a certificate with subject alternative names (both the common name (CN) value and the subject alternative names contain the host name entry):

openssl req -x509 -newkey rsa:2048 -days 365 -keyout key.pem -out cert.pem -nodes -subj "/CN=<hostname>/ST=TL/L=HYD/O=ORACLE/OU=CAGBU" -extensions san -config <(echo '[req]'; echo 'distinguished_name=req'; echo '[san]';echo 'subjectAltName=@alt_names';echo '[alt_names]';echo 'DNS.1=<hostname>';echo 'DNS.2=localhost';echo 'DNS.3=svc.cluster.local';)

Choosing Worker Nodes for Unified Topology Service

By default, Unified Topology has its pods scheduled on all worker nodes in the Kubernetes cluster in which it is installed. However, in some situations, you may want to choose a subset of nodes where pods are scheduled.

For example:

You may need to limit the deployment of Unified Topology to specific worker nodes for each team, for reasons such as capacity management, chargeback, or budget.

To choose a subset of nodes where pods are scheduled, you can use the configuration in the applications.yaml file.

Sample node affinity configuration (requiredDuringSchedulingIgnoredDuringExecution) for the unified topology service:

applications.yaml

unified-topology:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: name
            operator: In
            values:
            - south_zone

The Kubernetes pod is scheduled on a node that has the label name with the value south_zone. If no node with the label name: south_zone is available, the pod is not scheduled.

Sample node affinity configuration (preferredDuringSchedulingIgnoredDuringExecution) for the unified topology service:

applications.yaml

unified-topology:
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        preference:
          matchExpressions:
          - key: name
            operator: In
            values:
            - south_zone

The Kubernetes pod is scheduled preferentially on a node that has the label name with the value south_zone. If no node with the label name: south_zone is available, the pod is still scheduled on another node.
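The difference between the required and preferred node affinity rules can be sketched with a small Python model. This is a deliberate simplification and not the Kubernetes scheduler: real scheduling also weighs resources, taints, and scores, whereas this sketch simply takes the first candidate in name order. The function name and node names are hypothetical.

```python
def pick_node(nodes, key, value, required):
    """Pick a node for a pod under a single node affinity expression.

    nodes maps node name -> dict of labels. With required=True the pod can
    only land on a matching node; with required=False a matching node is
    preferred, but any node is acceptable as a fallback.
    """
    matching = sorted(name for name, labels in nodes.items()
                      if labels.get(key) == value)
    if matching:
        return matching[0]
    if required:
        return None  # No eligible node: the pod stays unscheduled (Pending).
    fallback = sorted(nodes)
    return fallback[0] if fallback else None


if __name__ == "__main__":
    cluster = {"worker-1": {"name": "north_zone"}}
    print("required :", pick_node(cluster, "name", "south_zone", required=True))
    print("preferred:", pick_node(cluster, "name", "south_zone", required=False))
```

With only a north_zone node available, the required rule leaves the pod unscheduled while the preferred rule falls back to worker-1.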

Setting up Persistent Storage

Follow the instructions mentioned in UIM Cloud Native Deployment guide for configuring Kubernetes persistent volumes.

To create persistent storage:

- Update applications.yaml to enable the storage volume for the unified topology service and provide the persistent volume name.

storageVolume:
  enabled: true
  pvc: sr-nfs-pvc #Specify the storage-volume name

- Update database.yaml to enable the storage volume for the unified topology dbinstaller and provide the persistent volume name.

storageVolume:
  enabled: true
  type: pvc
  pvc: sr-nfs-pvc #Specify the storage-volume name

After the instance is created, you should see the directories unified-topology and unified-topology-dbinstaller in your PV mount point, if you have enabled logs.

Managing Unified Topology Logs

To customize and enable logging, update the logging configuration files for the application.

- Customize unified-topology-api service logs:

- For service-level logs, update the file $COMMON_CNTK/charts/unified-topology-app/charts/unified-topology/config/topology-api/logging-config.xml

- For Helidon-specific logs, update the file $COMMON_CNTK/charts/unified-topology-app/charts/unified-topology/config/topology-api/logging.properties.

By default, the console handler is used. To also use a file handler, uncomment the following lines and provide the <project> and <instance> names for the location where the logs are saved:

handlers=io.helidon.common.HelidonConsoleHandler,java.util.logging.FileHandler

java.util.logging.FileHandler.formatter=java.util.logging.SimpleFormatter

java.util.logging.FileHandler.pattern=/logMount/sr-quick/unified-topology/unified-topology-api/logs/TopologyJULMS-%g-%u.log

- Customize unified-topology-pgx service logs:

Update file $COMMON_CNTK/charts/unified-topology-app/charts/unified-topology/config/pgx/logging-config.xml

- Customize unified-topology-ui service logs:

Update file $COMMON_CNTK/charts/unified-topology-app/charts/unified-topology/config/topology-ui/logging.properties

- Update the logging configuration files and upgrade the unified-topology m-s application:

$COMMON_CNTK/scripts/upgrade-applications.sh -p sr -i quick -f $SPEC_PATH/applications.yaml -a unified-topology

Viewing Logs using Elastic Stack

You can view and analyze the Unified Topology service logs using Elastic Stack.

The logs are generated as follows:

- Fluentd collects the text logs that are generated during Unified Topology deployment and sends them to Elasticsearch.

- Elasticsearch collects all types of logs and converts them into a common format so that Kibana can read and display the data.

- Kibana reads the data and presents it in a simplified view.

See "Setting Up Elastic Stack" for more information.

Setting Up Elastic Stack

To set up Elastic Stack:

- Install Elasticsearch and Kibana using the following commands:

#Install elasticsearch and kibana. It might take time to download images from docker hub.

kubectl apply -f $COMMON_CNTK/samples/charts/elasticsearch-and-kibana/elasticsearch_and_kibana.yaml

#Check if services are running. Append the namespace if the deployment is not in the default namespace, for example: kubectl get services --all-namespaces

kubectl get services

To access the Kibana dashboard, run kubectl get svc. This returns all the services; append the namespace if the deployment is not in the default namespace, for example: kubectl get services --all-namespaces. For example:

elasticsearch ClusterIP 10.96.190.99 <none> 9200/TCP,9300/TCP 113d

kibana NodePort 10.100.198.88 <none> 5601:31794/TCP 113d

In this example, the Kibana service NodePort is created at port 31794. Access the Kibana dashboard using the URL http://<IP address of VM>:<nodeport>/

- Run the following commands to create a namespace, ensuring that it does not already exist:

kubectl get namespaces

export FLUENTD_NS=fluentd

kubectl create namespace $FLUENTD_NS

- Update $COMMON_CNTK/samples/charts/fluentd/values.yaml with the Elasticsearch host and port:

elasticSearch:
  host: "elasticSearchHost"
  port: "elasticSearchPort"

For example:

elasticSearch:
  host: "elasticsearch.default.svc.cluster.local"
  port: "9200"

- Modify the Fluentd image resources if required:

image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
resources:
  limits:
    memory: 200Mi
  requests:
    cpu: 100m
    memory: 200Mi

- Run the following command to install fluentd-logging using the $COMMON_CNTK/samples/charts/fluentd/values.yaml file in the samples:

helm install fluentd-logging $COMMON_CNTK/samples/charts/fluentd -n $FLUENTD_NS --values $COMMON_CNTK/samples/charts/fluentd/values.yaml \
--set namespace=$FLUENTD_NS \
--atomic --timeout 800s

- Run the following command to upgrade fluentd-logging:

helm upgrade fluentd-logging $COMMON_CNTK/samples/charts/fluentd -n $FLUENTD_NS --values $COMMON_CNTK/samples/charts/fluentd/values.yaml \
--set namespace=$FLUENTD_NS \
--atomic --timeout 800s

- Run the following command to uninstall fluentd-logging:

helm delete fluentd-logging -n $FLUENTD_NS

- Use the 'fluentd_looging-YYYY.MM.DD' index pattern (the default index configuration) in Kibana to check the logs.

Visualize logs in Kibana

To visualize logs in Kibana:

- Navigate to Kibana dashboard (http://<IP address of VM>:<nodeport>/).

- Create Index pattern (fluentd_looging-YYYY.MM.DD).

- Click on Discover.

Viewing Logs using OpenSearch

You can view and analyze the Application logs using OpenSearch.

The logs are generated as follows:

- Fluentd collects the application logs that are generated during cloud native deployments and sends them to OpenSearch.

- OpenSearch collects all types of logs and converts them into a common format so that OpenSearch Dashboard can read and display the data.

- OpenSearch Dashboard reads the data and presents it in a simplified view.

See "Setting Up OpenSearch" for more information.

Managing Unified Topology Metrics

Use the following endpoint to monitor the metrics of unified topology:

https://<loadbalancerhost>:<loadbalancerport>/sr/quick/unified-topology/metrics

Prometheus and Grafana setup

See "Setting Up Prometheus and Grafana" for more information.

Adding scrape Job in Prometheus

Add the following Scrape job in Prometheus Server. This can be added by editing the config map used by the Prometheus server:

- job_name: 'topologyApiSecuredMetrics'
  oauth2:
    client_id: <client-id>
    client_secret: <client-secret>
    scopes:
    - <Scope>
    token_url: <OAUTH-TOKEN-URL>
    tls_config:
      insecure_skip_verify: true
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_label_app]
    action: keep
    regex: (<project>-<instance>-unified-topology-api)
  - source_labels: [__meta_kubernetes_pod_container_port_number]
    action: keep
    regex: (8080)
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name

Note:

If authentication is not enabled on Unified Topology, remove the oauth2 section from the job above.
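The __address__ relabel rule in the scrape job is the least obvious part of the configuration. The following Python sketch reproduces its behavior so the rewrite can be checked by hand: Prometheus joins the source label values with ";", matches the regex against the whole joined string, and leaves the target label unchanged when there is no match. The function name and the pod IP addresses are hypothetical.

```python
import re

# Same pattern as the relabel rule: host, optional ":port", then ";" and the
# port taken from the prometheus.io/port pod annotation.
ADDRESS_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def relabel_address(address, port_annotation):
    """Return the scrape address after applying the replace rule.

    Mirrors 'replacement: $1:$2': keep the host part of __address__ and swap
    in the annotated port. If the regex does not match, nothing is replaced.
    """
    joined = f"{address};{port_annotation}"
    match = ADDRESS_RULE.fullmatch(joined)
    if match is None:
        return address
    return f"{match.group(1)}:{match.group(2)}"


if __name__ == "__main__":
    print(relabel_address("10.244.1.7:8080", "9090"))  # annotated port replaces 8080
    print(relabel_address("10.244.1.7", "8080"))       # port is appended
    print(relabel_address("10.244.1.7:8080", ""))      # no annotation: unchanged
```

The sketch assumes the default ";" separator, which is what the job uses because it does not set a separator explicitly.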

Allocating Resources for Unified Topology Service Pods

To tune the performance of the service, applications.yaml provides settings for the JVM memory and the pod resources of the Unified Topology service.

Separate configurations are provided for the topology-api, topology-consumer, pgx, and topology-ui services. Provide the required values under the corresponding service name in the unified-topology application.

unified-topology:
  topologyApi:
    apiName: "unified-topology-api"
    replicaCount: 3
    java:
      user_mem_args: "-Xms2000m -Xmx2000m -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/logMount/$(APP_PREFIX)/unified-topology/unified-topology-api/"
      gc_mem_args: "-XX:+UseG1GC"
      options:
    resources:
      limits:
        cpu: "2"
        memory: 3Gi
      requests:
        cpu: 2000m
        memory: 3Gi
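When you change these values, the JVM maximum heap (-Xmx) must stay below the pod memory limit, because the JVM also needs memory outside the heap. The following Python sketch computes the headroom between the two settings. It is an illustrative check, not part of the toolkit; the function names are hypothetical, and it handles only the unit suffixes that appear in this chapter's samples.

```python
import re

_JVM_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
_K8S_UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def max_heap_bytes(user_mem_args):
    """Extract the -Xmx value from a user_mem_args string, in bytes."""
    match = re.search(r"-Xmx(\d+)([kKmMgG]?)", user_mem_args)
    if match is None:
        raise ValueError("no -Xmx setting found")
    return int(match.group(1)) * _JVM_UNITS[match.group(2).lower()]


def k8s_memory_bytes(quantity):
    """Convert a Kubernetes memory quantity such as '3Gi' to bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi)", quantity)
    if match is None:
        raise ValueError(f"unsupported memory quantity: {quantity!r}")
    return int(match.group(1)) * _K8S_UNITS[match.group(2)]


def heap_headroom_bytes(user_mem_args, memory_limit):
    """Return the memory limit minus the maximum heap; negative means too small."""
    return k8s_memory_bytes(memory_limit) - max_heap_bytes(user_mem_args)


if __name__ == "__main__":
    headroom = heap_headroom_bytes("-Xms2000m -Xmx2000m", "3Gi")
    print(f"topology-api headroom: {headroom / 1024 ** 2:.0f} MiB")
```

A small or negative headroom suggests that the pod is at risk of being killed for exceeding its memory limit before the JVM itself runs out of heap.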

Scaling Up or Scaling Down the Unified Topology Service

Provide replica count in applications.yaml to scale up or scale down the unified topology pods. Replica count can be configured for topology-api, topology-consumer, pgx and topology-ui pods individually by updating applications.yaml.

Update applications.yaml to increase replica count to 3 for topology-api deployment.

unified-topology:
  topologyApi:
    replicaCount: 3

Apply the change in replica count to the running Helm release by running the upgrade-applications script.

$COMMON_CNTK/scripts/upgrade-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a unified-topology

Enabling GC Logs for UTIA

By default, GC logs are disabled. You can enable them and view the logs in the corresponding folders under /logMount/sr-quick/unified-topology.

To enable GC logs, update the $SPEC_PATH/sr/quick/applications.yaml file as follows:

- Under gcLogs, set enabled to true. You can uncomment the gcLogs options under unified-topology to override the common values.

- To configure the maximum size of each file and the limit on the number of files, set fileSize and noOfFiles inside gcLogs as follows:

gcLogs:
  enabled: true
  fileSize: 10M
  noOfFiles: 10
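The fileSize and noOfFiles settings together bound the disk space that rotated GC logs can occupy. The following Python sketch works out that bound for sizing the log volume. It assumes that the M suffix means mebibytes and that the bound applies per set of rotated files; both are assumptions, and the function name is hypothetical.

```python
import re

_SIZE_UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def gc_log_max_bytes(file_size, no_of_files):
    """Upper bound on disk used by one set of rotated GC log files."""
    match = re.fullmatch(r"(\d+)([KMG])", file_size)
    if match is None:
        raise ValueError(f"unsupported fileSize value: {file_size!r}")
    per_file = int(match.group(1)) * _SIZE_UNITS[match.group(2)]
    return per_file * no_of_files


if __name__ == "__main__":
    total = gc_log_max_bytes("10M", 10)
    print(f"GC logs can use up to {total / 1024 ** 2:.0f} MiB per set of files")
```

With the sample values (10M and 10 files), the bound is 100 MiB for each set of rotated files.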

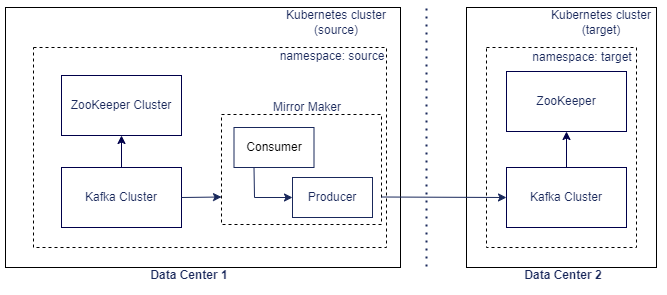

Geo Redundancy Support

Geo redundancy of Message Bus (which uses Kafka) is achieved with the Mirror Maker tool. Apache Kafka Mirror Maker replicates data across two Kafka clusters, within or across data centers. See https://strimzi.io/blog/2020/03/30/introducing-mirrormaker2/ for more details.

The following diagram shows an example of how mirror maker replicates the topics from source Kafka cluster to target Kafka cluster.

The prerequisites are as follows:

- The Strimzi operator should be up and running

- The source Message Bus service should be up and running

- The target Message Bus service should be up and running

Strimzi Operator

Validate that the Strimzi operator is installed by running the following command:

$kubectl get pod -n <STRIMZI_NAMESPACE>

NAME READY STATUS RESTARTS AGE

strimzi-cluster-operator-566948f58c-sfj7c 1/1 Running 0 6m55s

Validate installed helm release for Strimzi operator by running the following command:

$helm list -n <STRIMZI_NAMESPACE>

NAME NAMESPACE REVISION STATUS CHART APP VERSION

strimzi-operator STRIMZI_NAMESPACE 1 deployed strimzi-kafka-operator-0.X.0 0.X.0

Source Message Bus

The source Message Bus should be up and running (the Kafka cluster from which the topics should be replicated).

Validate the Kafka cluster is installed by running the following command:

$kubectl get pod -n sr1

NAME READY STATUS RESTARTS AGE

sr1-quick1-messaging-entity-operator-5f9c688c7-2jcjg 3/3 Running 0 27h

sr1-quick1-messaging-kafka-0 1/1 Running 0 27h

sr1-quick1-messaging-zookeeper-0 1/1 Running 0 27h

Validate the persistent volume claims created for the Kafka cluster by running the following command:

$kubectl get pvc -n sr1

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

data-sr1-quick1-messaging-kafka-0 Bound <volume> 1Gi RWO sc 27h

data-sr1-quick1-messaging-zookeeper-0 Bound <volume> 1Gi RWO sc

Target Message Bus

The target Message Bus should be up and running (the Kafka cluster to which the topics should be replicated).

Validate the Kafka cluster is installed by running the following command:

$kubectl get pod -n sr2

NAME READY STATUS RESTARTS AGE

sr2-quick2-messaging-entity-operator-5f9c688c7-2jcjg 3/3 Running 0 27h

sr2-quick2-messaging-kafka-0 1/1 Running 0 27h

sr2-quick2-messaging-zookeeper-0 1/1 Running 0 27h

Validate the persistent volume claims created for the Kafka cluster by running the following command:

$kubectl get pvc -n <kafka target namespace>

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

data-sr2-quick2-messaging-kafka-0 Bound <volume> 1Gi RWO sc 27h

data-sr2-quick2-messaging-zookeeper-0 Bound <volume> 1Gi RWO sc 27h

Installing and configuring Mirror Maker 2.0

A sample is provided at $COMMON_CNTK/samples/messaging/kafka-mirror-maker.

Disaster Recovery Support

A minimum of two pods is required for a service to be highly available. The pods should run on different worker nodes (Kubernetes can schedule the pods on different nodes using pod anti-affinity). If one node goes down, it takes out the corresponding pod, leaving the other pods to handle the requests until the downed pod is rescheduled. When a worker node goes down, the pods running on that worker node are rescheduled on other available worker nodes.

For database high availability, you can use Oracle Real Application Clusters (RAC) to run a single Oracle Database across multiple servers in order to maximize availability and enable horizontal scalability.

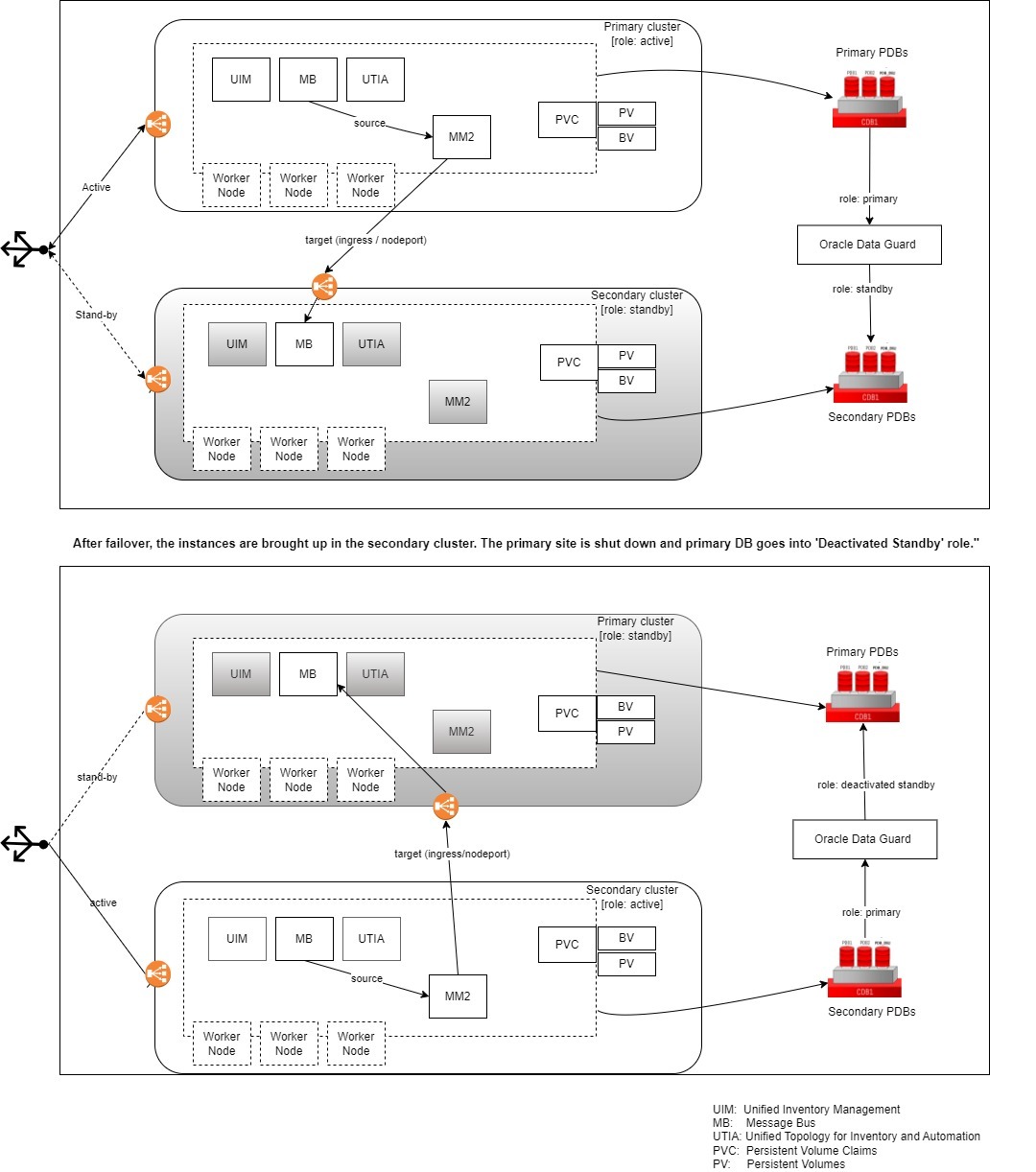

Disaster Recovery across Data Centers

Disaster recovery for the case where a data center goes down completely is provided by maintaining a second, passive data center.

Figure 5-2 documents the disaster recovery plan for the data center. A parallel passive data center is maintained, and the runtime data is periodically replicated from the active data center to the passive data center. In the event of a catastrophic failure in the primary (or active) data center, the load must be switched to the secondary (or passive) data center. Before switching the load to the secondary data center, you should shut down all the services in the primary data center and start all the services in the secondary data center.

Figure 5-2 Disaster Recovery Plan for Data Center

About Switchover and Failover

The purpose of a geographically redundant deployment is to provide resiliency in the event of a complete loss of service in the primary site, due to a natural disaster or other unrecoverable failure in the primary UIM site. This resiliency is achieved by creating one or more passive standby sites that can take the load when the primary site becomes unavailable. The role reversal from the standby site to the primary site can be accomplished in any of the following ways:

- Switchover, in which the operator performs a controlled shutdown of the primary site before activating the standby site. This is primarily intended for planned service interruptions in the primary UIM site. Following a switchover, the former primary site becomes the standby site. The site roles of primary site and standby site can be restored by performing a second switchover operation, which is switchback.

- Failover, in which the primary site becomes unavailable due to unanticipated reasons and cannot be recovered. The operator then transitions the standby site to the primary role. The primary site that is down cannot act as a standby site and will require reconstruction of the database as a standby database before restoring the site roles.
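The role changes described for switchover and failover can be summarized with a small Python model. This is a conceptual sketch only and does not drive Data Guard or any toolkit script; the Site class, the role names, and the site names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    role: str  # "primary", "standby", or "needs-rebuild"


def switchover(primary, standby):
    """Planned role swap: the primary is shut down cleanly and becomes standby."""
    return Site(primary.name, "standby"), Site(standby.name, "primary")


def failover(primary, standby):
    """Unplanned loss of the primary: the standby takes over, and the failed
    site must be rebuilt as a standby before roles can be restored."""
    return Site(primary.name, "needs-rebuild"), Site(standby.name, "primary")


if __name__ == "__main__":
    site_a, site_b = Site("site-a", "primary"), Site("site-b", "standby")

    # Switchover followed by switchback restores the original roles.
    site_a, site_b = switchover(site_a, site_b)
    site_b, site_a = switchover(site_b, site_a)
    print(site_a, site_b)

    # After a failover, the old primary cannot act as standby until rebuilt.
    print(failover(site_a, site_b))
```

The asymmetry is the point of the model: a switchover is reversible with a second switchover, while a failover leaves the old primary unusable until its database is reconstructed as a standby.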

About Kafka Mirror Maker

Kafka's Mirror Maker functionality makes it possible to maintain a replica of an existing Kafka cluster (which is used in Message Bus service). This mirrors a source Kafka cluster into a target (mirror) Kafka cluster. To use this mirror, it is a requirement that the source and target Kafka clusters (that is, Message Bus service) are up and running. If the target Kafka cluster is down or offline, we cannot mirror into the target cluster.

Oracle Data Guard

Oracle Data Guard is responsible for replicating transactions from the Active DB to the Standby DB. It is included as a part of every Oracle DB Enterprise Edition installation.

Note:

When using multi-tenant databases involving CDBs and PDBs with Data Guard, the replication happens at the CDB level. This means all the PDBs from the active CDB will be replicated over to the standby CDB and also, the commands to enable Data Guard must be run at the CDB level.

Installation and Configuration

If UTIA is disabled in UIM Cloud Native then it is not required to deploy Message Bus, UTIA and Mirror Maker Services in the clusters. These commands are intended to be used as samples. For detailed documentation on deploying UIM, see UIM Cloud Native Deployment Guide.

Setting up the Primary (active) Instance

To set up the primary (active) instance:

- Provision two databases, one for the primary site and another for the secondary site.

- Set up Data Guard between the primary site and the secondary site. The primary site should be in the ACTIVE role and the secondary site should be in the STANDBY role. Refer to the Oracle 19c documentation.

- Deploy UIM Cloud Native.

- Create image pull secrets (if required).

- Create UIM secrets for WLS admin, OPSS, WLS RTE, RCU DB and UIM DB.

Note:

uimprimary here refers to the Kubernetes namespace where the primary instance will be deployed. Replace this with the desired namespace.

$UIM_CNTK/scripts/manage-instance-credentials.sh -p uimprimary -i dr create wlsadmin,opssWP,wlsRTE,rcudb,uimdb

- Create the WebLogic encrypted password.

$UIM_CNTK/scripts/install-uimdb.sh -p uimprimary -i dr -s $SPEC_PATH -c 8

- Create UIM users secrets.

$UIM_CNTK/samples/credentials/manage-uim-credentials.sh -p uimprimary -i dr -c create -f "/home/spec_dir/users.txt"

- Create DB schemas.

$UIM_CNTK/scripts/install-uimdb.sh -p uimprimary -i dr -s $SPEC_PATH -c 1

$UIM_CNTK/scripts/install-uimdb.sh -p uimprimary -i dr -s $SPEC_PATH -c 2

- Create the UIM instance.

$UIM_CNTK/scripts/create-ingress.sh -p uimprimary -i dr -s $SPEC_PATH

$UIM_CNTK/scripts/create-instance.sh -p uimprimary -i dr -s $SPEC_PATH

- Add UIM user roles.

$UIM_CNTK/samples/credentials/assign-role.sh -p uimprimary -i dr -f uim-users-roles.txt

- Deploy Message Bus.

$COMMON_CNTK/scripts/create-applications.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a messaging-bus

- Deploy UTIA:

- Create Topology DB secrets:

$COMMON_CNTK/scripts/manage-app-credentials.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology create database

- Create Topology UIM secrets:

$COMMON_CNTK/scripts/manage-app-credentials.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology create uim

- Create Topology users:

$COMMON_CNTK/scripts/manage-app-credentials.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology create appUsers

- Create Topology UI users:

$COMMON_CNTK/scripts/manage-app-credentials.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology create appUIUsers

- Create DB schemas:

$COMMON_CNTK/scripts/install-database.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/database.yaml -a unified-topology -c 1

- Deploy Topology:

$COMMON_CNTK/scripts/create-applications.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology

See "Deploying Unified Operations Message Bus" for deploying Message Bus, "Deploying the Unified Topology for Inventory and Automation Service" for deploying UTIA.

See UIM Cloud Native Deployment Guide for deploying UIM.
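Because the primary-site steps above must run in a fixed order, it can help to wrap them so that a failure stops the sequence. The following is a minimal sketch, not part of the toolkit: `run_step`, `deploy_primary_uim`, and the `DRY_RUN`, `PROJECT`, and `INSTANCE` variables are hypothetical names introduced here, and only the UIM schema, ingress, and instance commands from this section are included. With `DRY_RUN=1` the commands are printed rather than run.

```shell
#!/bin/sh
# Sketch only: run the primary-site UIM steps in order and stop at the first failure.
# DRY_RUN=1 (the default here) prints each command instead of running it.
DRY_RUN="${DRY_RUN:-1}"
PROJECT="${PROJECT:-uimprimary}"   # project (namespace) used in this section
INSTANCE="${INSTANCE:-dr}"         # instance name used in this section

run_step() {
    # $1 = label for the step; remaining arguments = the command to run
    rs_label="$1"; shift
    echo "==> $rs_label"
    if [ "$DRY_RUN" = "1" ]; then
        echo "    $*"
    else
        "$@" || { echo "FAILED: $rs_label" >&2; return 1; }
    fi
}

deploy_primary_uim() {
    # The && chain stops at the first step that returns a non-zero status
    run_step "Create DB schemas (-c 1)" \
        "$UIM_CNTK/scripts/install-uimdb.sh" -p "$PROJECT" -i "$INSTANCE" -s "$SPEC_PATH" -c 1 &&
    run_step "Create DB schemas (-c 2)" \
        "$UIM_CNTK/scripts/install-uimdb.sh" -p "$PROJECT" -i "$INSTANCE" -s "$SPEC_PATH" -c 2 &&
    run_step "Create ingress" \
        "$UIM_CNTK/scripts/create-ingress.sh" -p "$PROJECT" -i "$INSTANCE" -s "$SPEC_PATH" &&
    run_step "Create UIM instance" \
        "$UIM_CNTK/scripts/create-instance.sh" -p "$PROJECT" -i "$INSTANCE" -s "$SPEC_PATH"
}
```

Review the printed commands first by calling `deploy_primary_uim` with the default settings, then set `DRY_RUN=0` to execute them. The credential steps are left out on the assumption that they may prompt for input.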

Setting up the Secondary (standby) Instance

To set up the secondary (standby) instance:

- Perform a switchover operation on the active (primary site) DB. The secondary site DB should now be in the ACTIVE role and the primary site DB in the PASSIVE role. Refer to the Oracle 19c documentation.

- Deploy UIM Cloud Native:

- Export OPSS wallet file secret from primary instance and recreate in secondary instance.

kubectl -n uimprimary get configmap uimprimary-dr-weblogic-domain-introspect-cm -o jsonpath='{.data.ewallet\.p12}' > ./primary_ewallet.p12
$UIM_CNTK/scripts/manage-instance-credentials.sh -p uimsecondary -i dr create opssWF

Note:

Here, uimsecondary refers to the Kubernetes namespace where the secondary instance will be deployed. Replace this with the desired namespace.

- (Optional) Create image pull secrets.

- Create UIM secrets for WLS admin, OPSS, WLS RTE, RCU DB and UIM DB:

$UIM_CNTK/scripts/manage-instance-credentials.sh -p uimsecondary -i quick create wlsadmin,opssWP,wlsRTE,rcudb,uimdb

- Create WebLogic encrypted password:

$UIM_CNTK/scripts/install-uimdb.sh -p uimsecondary -i dr -s $SPEC_PATH -c 8

- Create UIM users secrets:

$UIM_CNTK/samples/credentials/manage-uim-credentials.sh -p uimsecondary -i dr -c create -f "/home/spec_dir/users.txt"

- Create UIM instance:

$UIM_CNTK/scripts/create-ingress.sh -p uimsecondary -i dr -s $SPEC_PATH
$UIM_CNTK/scripts/create-instance.sh -p uimsecondary -i dr -s $SPEC_PATH

- Add UIM user roles:

$UIM_CNTK/samples/credentials/assign-role.sh -p uimsecondary -i dr -f uim-users-roles.txt

- Deploy message bus:

$COMMON_CNTK/scripts/create-applications.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a messaging-bus

- Deploy UTIA:

- Create Topology DB secrets:

$COMMON_CNTK/scripts/manage-app-credentials.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology create database

- Create Topology UIM secrets:

$COMMON_CNTK/scripts/manage-app-credentials.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology create uim

- Create Topology users:

$COMMON_CNTK/scripts/manage-app-credentials.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology create appUsers

- Create Topology UI users:

$COMMON_CNTK/scripts/manage-app-credentials.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology create appUIUsers

- Deploy Topology:

$COMMON_CNTK/scripts/create-applications.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology

- Deploy Mirror Maker. See "Installing and Configuring Mirror Maker 2.0" for more information.

- After the secondary instance has been set up, switch back over to the primary (active) site.
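The wallet export step above redirects the output of `kubectl` to a file. If the ConfigMap key is missing, that file can end up empty without any obvious error. A minimal sketch of a guard follows. `check_wallet` is a hypothetical helper introduced here, and it only checks that the file exists and is non-empty, not that its contents are a valid wallet.

```shell
# Sketch: refuse to continue if the exported OPSS wallet file is missing or empty.
check_wallet() {
    # $1 = path to the exported wallet file
    if [ ! -s "$1" ]; then
        echo "wallet file '$1' is missing or empty; re-run the export" >&2
        return 1
    fi
    echo "wallet file '$1' is present ($(wc -c < "$1" | tr -d ' ') bytes)"
}
```

One way to use it is to run `check_wallet ./primary_ewallet.p12` between the `kubectl` export and the `manage-instance-credentials.sh ... create opssWF` command, and to continue only if it succeeds.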

Switchover Sequence

To perform a switchover between site A (active) and site B (standby):

- Bring down instances in site A. These include UIM and UTIA. Message Bus must remain enabled so that Mirror Maker can perform the replication.

#Disable topology
$COMMON_CNTK/scripts/delete-applications.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology
#Disable UIM
$UIM_CNTK/scripts/delete-instance.sh -p uimprimary -i dr -s $SPEC_PATH

- Perform switchover on DB. Site B DB will now become Primary. Site A DB will assume Standby role. Refer to Oracle 19c Documentation.

- Bring up instances in site B. This includes UIM and UTIA. Message Bus should already be active:

#Enable UIM
$UIM_CNTK/scripts/create-instance.sh -p uimsecondary -i dr -s $SPEC_PATH
#Enable topology
$COMMON_CNTK/scripts/create-applications.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology

- Perform DNS switching to route all traffic to site B.
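The switchover steps above assume that the site A instances are actually down before the DB roles are switched. A minimal sketch of a polling helper that could sit between those steps follows. `wait_until` and `pods_gone` are hypothetical helpers introduced here, and the pod-name substring passed to `pods_gone` is an assumption that must be matched to the pod names in your namespace.

```shell
# Sketch: poll a command until it succeeds or the attempts run out.
wait_until() {
    # $1 = maximum attempts, $2 = seconds to sleep after a failed attempt,
    # remaining arguments = the command to test
    wu_max="$1"; wu_sleep="$2"; shift 2
    wu_try=1
    while [ "$wu_try" -le "$wu_max" ]; do
        if "$@"; then
            return 0
        fi
        wu_try=$((wu_try + 1))
        sleep "$wu_sleep"
    done
    return 1
}

# Succeeds when no pod whose name contains the given substring is listed.
pods_gone() {
    # $1 = namespace, $2 = substring of the pod names to look for (assumption)
    ! kubectl -n "$1" get pods --no-headers 2>/dev/null | grep -q "$2"
}
```

For example, `wait_until 30 10 pods_gone uimprimary unified-topology` would check every 10 seconds, up to 30 times, before giving up with a non-zero status. Message Bus pods are deliberately not waited on, because they stay up during a switchover.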

Failover Sequence

In case of any irrecoverable failure in the primary site, perform a failover operation on the standby site. To do so:

- Perform failover on DB. Standby (secondary) DB will now become Primary. Primary site DB will assume Deactivated Standby role. Refer to Oracle 19c Documentation.

- Bring up instances in standby. This includes UIM and Topology. Message Bus should already be active:

#Enable UIM
$UIM_CNTK/scripts/create-instance.sh -p uimsecondary -i dr -s $SPEC_PATH
#Enable topology
$COMMON_CNTK/scripts/create-applications.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology

- Perform DNS switching to route all traffic to secondary instances.

Once the primary site is restored, establish synchronization between the secondary and primary sites. To do so:

- Bring up Message Bus and DB in primary site:

#Enable message bus
$COMMON_CNTK/scripts/create-applications.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a messaging-bus

- Set up Kafka Mirror Maker with secondary Message Bus as source and primary Message Bus as target. See "About Kafka Mirror Maker" for more information.

- Switch primary DB role from Deactivated Standby → Standby. See Deploying Unified Operations Message Bus for more information.

- Bring up UIM in primary site:

$UIM_CNTK/scripts/create-instance.sh -p uimprimary -i dr -s $SPEC_PATH

- Bring up Topology in primary site:

$COMMON_CNTK/scripts/create-applications.sh -p uimprimary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology

- Perform DNS switching to route all traffic to primary instances.

- Bring down instances in secondary site. This includes UIM and Topology. Message Bus should remain active for Kafka Mirror Maker synchronization:

#Disable topology
$COMMON_CNTK/scripts/delete-applications.sh -p uimsecondary -i dr -f $SPEC_PATH/<project>/<instance>/applications.yaml -a unified-topology
#Disable UIM
$UIM_CNTK/scripts/delete-instance.sh -p uimsecondary -i dr -s $SPEC_PATH

Debugging and Troubleshooting

Common Problems and Solutions

- Unified Topology DBInstaller pod is not able to pull the dbinstaller image.

NAME READY STATUS RESTARTS AGE
project-instance-unifed-topology-dbinstaller 0/1 ErrImagePull 0 5s
### OR
NAME READY STATUS RESTARTS AGE
project-instance-unifed-topology-dbinstaller 0/1 ImagePullBackOff 0 45s

To resolve this issue:

- Verify that the image name and tag are provided in database.yaml for unified-topology-dbinstaller and that the image is accessible from the repository by the pod.

- Verify that the image is copied to all worker nodes.

- If pulling image from a repository, verify the image pull policy and image pull secret in database.yaml for unified-topology-dbinstaller.

- Unified Topology API, PGX, and UI pods are not able to pull the images.

To resolve this issue:

- Verify that the image names and tags are provided in applications.yaml for unified-topology and that the images are accessible from the repository by the pods.

- Verify that the images are copied to all worker nodes.

- If pulling images from a repository, verify the image pull policy and image pull secret in applications.yaml for the UTIA service.

- Unified Topology pods are in CrashLoopBackOff state.

To resolve this issue, describe the Kubernetes pod and find the cause of the issue. One possible cause is missing secrets.

- Unified Topology API pod did not come up.

NAME READY STATUS RESTARTS AGE
project-instance-unifed-topology-api 0/1 Running 0 5s

To resolve this issue, verify that the Message Bus bootstrap server provided in topology-static-config.yaml is a valid one.
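The problems above can all be spotted from the STATUS and READY columns of the pod listing. A minimal sketch that maps each row to the matching check from this section follows. `triage_pods` is a hypothetical helper introduced here. It assumes the five-column layout shown in the samples above, and its hint for pods that are running but not ready applies to the API pod only.

```shell
# Sketch: map rows of `kubectl get pods` output to the checks listed in this section.
triage_pods() {
    # Reads pod listing text on stdin with columns: NAME READY STATUS RESTARTS AGE
    awk '
        $1 == "NAME" { next }    # skip header rows
        NF < 3       { next }    # skip blank or short lines
        $3 == "ErrImagePull" || $3 == "ImagePullBackOff" {
            print $1 ": check image name and tag, pull policy, and pull secret"
            next
        }
        $3 == "CrashLoopBackOff" {
            print $1 ": describe the pod and look for missing secrets"
            next
        }
        $3 == "Running" {
            # READY looks like 0/1; report the pod when the two numbers differ
            split($2, r, "/")
            if (r[1] != r[2])
                print $1 ": running but not ready; for the API pod, check the Message Bus bootstrap server"
        }
    '
}
```

A typical call is `kubectl -n <namespace> get pods | triage_pods`. Pods that are running and fully ready produce no output.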

Test Connection to PGX server

To troubleshoot the PGX service, connect to it with the graph client, providing the PGX client user credentials. The PGX service endpoint is http://<LoadbalancerIP>:<LoadbalancerPort>/<topology-project>/<topology-instance>/pgx. For example:

C:\TopologyService\oracle-graph-client-22.1.0\oracle-graph-client-22.1.0\bin>opg4j -b http://<hostIP>:30305/sr/quick/pgx -u <PGX_CLIENT_USER>

password:<PGX_CLIENT_PASSWORD>

For an introduction type: /help intro

Oracle Graph Server Shell 22.1.0

Variables instance, session, and analyst ready to use.

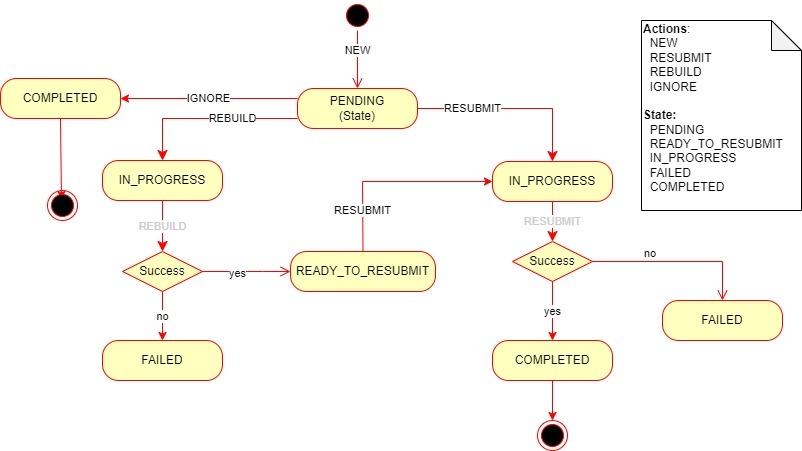
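In the sample session above, the endpoint path `sr/quick` corresponds to the `<topology-project>/<topology-instance>` part of the endpoint pattern. A minimal sketch that assembles the endpoint from its parts follows. `pgx_url` is a hypothetical helper introduced here, and the `http` scheme is taken from the sample rather than from any configuration.

```shell
# Sketch: build the PGX endpoint URL from its four parts.
pgx_url() {
    # $1 = load balancer host or IP, $2 = port, $3 = topology project, $4 = topology instance
    printf 'http://%s:%s/%s/%s/pgx\n' "$1" "$2" "$3" "$4"
}
```

The result can be passed to the graph client in the same way as in the sample session, for example `opg4j -b "$(pgx_url <hostIP> 30305 sr quick)" -u <PGX_CLIENT_USER>`.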

Fallout Events Resolution

The TOPOLOGY_FALLOUT_EVENTS table in the UTIA schema persists the failed events from the Dead-Letter-Topic (that is, ora-dlt-topology) for further analysis and re-processing. The data between UIM and UTIA can go out of sync in the following cases: when the UIM application fails to send topology events to the Message Bus but the UIM transaction is committed, when topology is temporarily disabled in UIM and then re-enabled, or when UTIA consumes events at a much slower rate than UIM produces them. In each case, UTIA data falls out of sync with UIM, which eventually results in failed events.

These failed events in the TOPOLOGY_FALLOUT_EVENTS table can be rebuilt and resubmitted. When a fallout event enters the table, it is in the “PENDING” state. These events can be rebuilt or resubmitted as follows:

- REBUILD: This action processes the Fallout Event and gets any out of sync data from UIM into UTIA via the Database Link.

- RESUBMIT: This action takes the events from the TOPOLOGY_FALLOUT_EVENTS table in “PENDING” or “READY_TO_RESUBMIT” states and moves them back into the “ora_uim_topology” topic to be re-processed.

The following figure illustrates the fallout events resolution process flow.

Figure 5-3 Process Flow of Fallout Events Resolution

Prerequisites for REBUILD

- Before Rebuild is performed, the UTIA Schema user should have the following privileges:

- CREATE JOB

- ALTER SYSTEM

- CREATE DATABASE LINK

- Ensure a Database Link exists from UTIA schema to UIM schema with the name “REM_SCHEMA” (that is, UTIA schema user should be able to access objects from UIM schema). For more information, see https://docs.oracle.com/en/database/oracle/oracle-database/19/sqlrf/CREATE-DATABASE-LINK.html#GUID-D966642A-B19E-449D-9968-1121AF06D793

Performing REBUILD Action

You can perform the Rebuild action in the following ways:

- DBMS Job Scheduling: In this approach, the REBUILD action on the Fallout Events in “PENDING” state is scheduled to run every 6 hours. The frequency at which the job runs automatically can be configured by changing the repeat_interval.

BEGIN
  DBMS_SCHEDULER.create_job (
    job_name        => 'FALLOUT_DATA_REBUILD',
    job_type        => 'PLSQL_BLOCK',
    job_action      => 'BEGIN PKG_FALLOUT_CORRECTION.SCHEDULE_FALLOUT_JOBS(commitSize => 1000, cpusJobs => 4, waitTime => 2); END;',
    start_date      => SYSTIMESTAMP,
    repeat_interval => 'FREQ=HOURLY; INTERVAL=6',
    enabled         => TRUE
  );
END;
/

- On-Demand REST API Call: In this approach the REBUILD action on the Fallout Events in “PENDING” state is invoked via the REST API. Before invoking the Rebuild API.

- POST - fallout/events/rebuild – To rebuild the Fallout Events on demand, as and when required.

- DELETE - fallout/events/scheduledJobs – To drop any running or previously scheduled jobs.
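For the scheduled approach described above, the repeat_interval of an existing job can be changed without recreating the job. The following is a minimal sketch, assuming the job was created with the name FALLOUT_DATA_REBUILD as shown and using the standard `DBMS_SCHEDULER.SET_ATTRIBUTE` procedure. `set_rebuild_interval_sql` is a hypothetical helper introduced here that only prints the PL/SQL block.

```shell
# Sketch: print a PL/SQL block that changes how often the rebuild job runs.
set_rebuild_interval_sql() {
    # $1 = hours between runs (positive integer, no leading zeros)
    case "$1" in
        ''|*[!0-9]*|0*)
            echo "interval must be a positive integer" >&2
            return 1
            ;;
    esac
    cat <<SQL
BEGIN
  DBMS_SCHEDULER.SET_ATTRIBUTE(
    name      => 'FALLOUT_DATA_REBUILD',
    attribute => 'repeat_interval',
    value     => 'FREQ=HOURLY; INTERVAL=$1');
END;
/
SQL
}
```

For example, `set_rebuild_interval_sql 2` prints a block that sets the job to run every 2 hours. Run the block as the UTIA schema user that owns the job.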

Performing RESUBMIT Action

The Resubmit action is performed through a REST call. It takes the fallout events in “READY_TO_RESUBMIT” (post Rebuild) and “PENDING” states, based on the query parameters, and pushes them into the “ora_uim_topology” topic:

POST - fallout/events/resubmit – To resubmit the Fallout Events on demand.

For more information on APIs available, see UTIA REST API Guide.
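The three fallout endpoints named in this section can be called with any HTTP client. The following is a minimal sketch that prints the corresponding `curl` commands for review rather than running them. Only the HTTP methods and the relative paths come from this section. `fallout_call`, the base URL, and the bearer-token header are assumptions introduced for illustration, and the query parameters accepted by the resubmit call are not shown.

```shell
# Sketch: print curl commands for the fallout endpoints named in this section.
# The base URL below is a placeholder, not a real UTIA address.
UTIA_BASE_URL="${UTIA_BASE_URL:-https://utia.example.com/base-path}"

fallout_call() {
    # $1 = action: rebuild | resubmit | drop-jobs
    case "$1" in
        rebuild)   fc_method=POST;   fc_path=fallout/events/rebuild ;;
        resubmit)  fc_method=POST;   fc_path=fallout/events/resubmit ;;
        drop-jobs) fc_method=DELETE; fc_path=fallout/events/scheduledJobs ;;
        *) echo "unknown action: $1" >&2; return 2 ;;
    esac
    # $TOKEN is printed literally; the authorization scheme is an assumption
    printf 'curl -X %s -H "Authorization: Bearer $TOKEN" "%s/%s"\n' \
        "$fc_method" "$UTIA_BASE_URL" "$fc_path"
}
```

For example, `fallout_call rebuild` prints the POST request for an on-demand rebuild. Confirm the base path, authentication, and parameters against the UTIA REST API Guide before running any printed command.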