3.1 Classification Use Case

A retail store has information about its customers' behavior and the purchases they make. Now with the available data, they want you to analyze and identify the type of customers most likely to be positive responders to an Affinity Card loyalty program. High Affinity Card responders are defined as those customers who, when given a loyalty or affinity card, hyper-respond, that is, increase purchases more than the Affinity Card program's offered discount. In our data set, a responder is designated with value 1, and a non-responder with value 0. In this use case, you will demonstrate how to identify such customers using the Support Vector Machine model.

Related Contents

| Topic | Link |

|---|---|

| OML4R GitHub Example | Classification Support Vector Machines (SVMs) |

| About Support Vector Machines (SVMs) | Classification Support Vector Machines (SVMs) |

| Shared Settings | Shared Settings |

Before you start your OML4R use case journey, ensure that you have the following:

- Data Set

The data set used for this use case is from the SH schema. The SH schema can be readily accessed in Oracle Autonomous AI Database. For on-premises databases, the schema is installed during the installation or can be manually installed by downloading the scripts.

- Database

Select or create database out of the following options:

- Get your FREE cloud account. Go to https://cloud.oracle.com/database and select Oracle AI Database Cloud Service (DBCS), or Oracle Autonomous AI Database. Create an account and create an instance. See Autonomous Database Quick Start Workshop.

- Download the latest version of Oracle AI Database (on premises).

- Machine Learning Tools

Depending on your database selection,

- Use OML Notebooks for Oracle Autonomous AI Database.

- Install and use Oracle SQL Developer connected to an on-premises database or DBCS. See Installing and Getting Started with SQL Developer.

- Other Requirements

Data Mining Privileges (this is automatically set for ADW). See System Privileges for Oracle Machine Learning for SQL.

- Load Data

You will be using the SUPPLEMENTARY_DEMOGRAPHICS data set available in the SH schema. Use theore.syncfunction to create anore.frameproxy object in R that represents a database table, view, or query. - Explore Data

Explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values. - Build Model

This model is designed to classify data into predefined categories by learning from training data. - Evaluate

Before you make predictions using your model on new data, you should first evaluate model accuracy. You can evaluate the model using different methods. - Deploy the Model

The machine learning model,SVM_CLASSIFICATION_MODEL, has been successfully trained and exists in your schema as a first-class database object. While you can use this model directly from R, for database applications, you can also run it directly from SQL queries.

Related Topics

Parent topic: Use Cases

3.1.1 Load Data

You will be using the SUPPLEMENTARY_DEMOGRAPHICS data set available in the SH schema. Use the ore.sync function to create an ore.frame proxy object in R that represents a database table, view, or query.

Examine Data

| Attribute Name | Information |

|---|---|

| CUST_ID | The ID of the customer |

| EDUCATION | Education level attained |

| OCCUPATION | Occupation of the customer |

| HOUSEHOLD_SIZE | Number of people living at residence |

| YRS_RESIDENCE | Number of years customer lived at current residence |

| AFFINITY_CARD |

Indicates whether the customer holds an affinity card. 1 means Yes. 0 means No. |

| BULK_PACK_DISKETTES |

Product. Indicates whether the customer purchased the bulk pack diskettes. 1 means Yes. 0 means No. |

| FLAT_PANEL_MONITOR |

Product. Indicates whether the customer purchased flat panel monitor. 1 means Yes. 0 means No |

| HOME_THEATER_PACKAGE |

Product. Indicates whether the customer purchased home theatre package. 1 means Yes. 0 means No |

| BOOKKEEPING_APPLICATION |

Product. Indicates whether the customer purchased bookkeeping application. 1 means Yes. 0 means No |

| PRINTER_SUPPLIES |

Product. Indicates whether the customer purchased printer supplies. 1 means Yes. 0 means No |

| Y_BOX_GAMES |

Product. Indicates whether the customer purchased YBox Games. 1 means Yes. 0 means No |

| OS_DOC_SET_KANJI |

Product. Indicates whether the customer purchased the Kanji character set for the operating system documentation. 1 means Yes. 0 means No |

| COMMENTS | Comments from customers |

Parent topic: Classification Use Case

3.1.2 Explore Data

Explore the data to understand and assess the quality of the data. At this stage assess the data to identify data types and noise in the data. Look for missing values and numeric outlier values.

Identify Target Variable

Data Understanding and Preparation

For this use case, the task is to train a Support Vector Machine model that predicts which customers most likely to be positive responders to an Affinity Card loyalty program. Therefore, the target variable is the attribute AFFINITY_CARD.

To access database data from R using OML4R, you must first create an ore.frame proxy object in R that represents a database table, view, or query. In this example, the proxy object is created using a query. Create proxy objects for SUPPLEMENTARY_DEMOGRAPHICS and then assess the data to identify data types and noise in the data. Look for missing values, outlier numeric values, or inconsistently labeled categorical values.

For data preparation and understanding run the following steps:

- Run the following command in an R interpreter paragraph (using %r) to import the Oracle Machine Learning for R libraries and to suppress warning regarding row ordering:

library(ORE) options(ore.warn.order=FALSE) - Use the

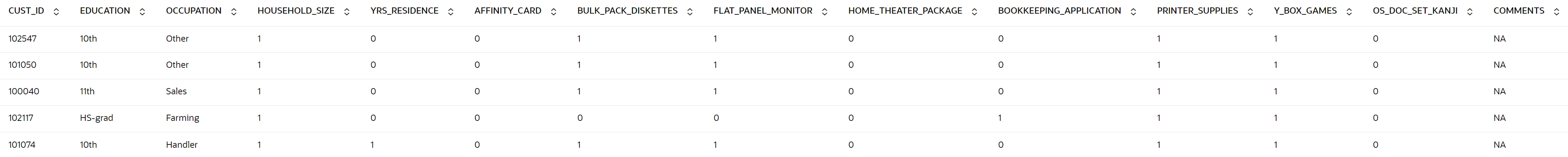

ore.syncfunction to create theore.frameobject that is a proxy for the SUPPLEMENTARY DEMOGRAPHICS table in the SH schema database table.ore.sync(query = c("SUP_DEM" = "select * from SH.SUPPLEMENTARY_DEMOGRAPHICS")) ore.attach() - Run the following command to display few rows from SUPPLEMENTARY_DEMOGRAPHICS table

z.show(head(SUP_DEM))

- To display the number of rows and columns in the ore.frame object

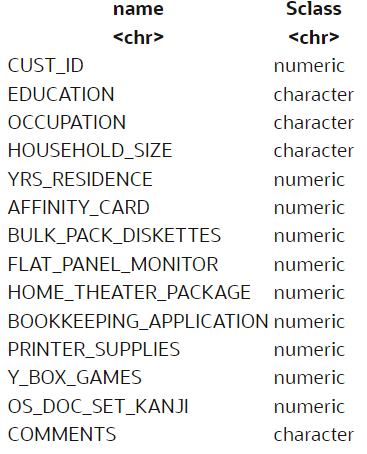

SUPPLEMENTARY_DEMOGRAPHICS, usez.show(dim(SUP_DEM))z.show(dim(SUP_DEM))(4500, 14) - View the data type of the columns in CUST_DF with the @desc operator.

SUP_DEM@desc

- Run the following command to check if there are any missing values in the data. The following code gives you the total number of missing values in the

CUST_DFproxy object.sum(is.na(SUP_DEM))205The value 205 indicates that there are missing values in the SUP_DEM proxy object.

OML supports Automatic Data Preparation (ADP). ADP is enabled through the model settings. When ADP is enabled, the transformations required by the algorithm are performed automatically and embedded in the model. You can enable ADP during the Build Model stage. The commonly used methods of data preparation are binning, normalization, and missing value treatment.

See How ADP Transforms the Data to understand how ADP prepares the data for some algorithms.

This completes the data understanding and data preparation stage.

Parent topic: Classification Use Case

3.1.3 Build Model

This model is designed to classify data into predefined categories by learning from training data.

Algorithm Selection

You can choose one of the following in-database algorithms to solve a classification problem:

- Decision Tree

- Generalized Linear Model

- Naive Bayes

- Neural Network

- Random Forest

- Support Vector Machine

Here you will be using the Support Vector Machine algorithms because the SVM classification is one of the algorithms that supports binary classification.

- Split the data into train and test data sets. The train set is used to train the model so that it learns the hidden patterns and the test set is used to evaluate the trained model. Split the

DEMO_DFdata with 60 percent of the records for the train data set and 40 percent for the test data set.sampleSize <- .4 * nrow(DEMO_DF) index <- sample(1:nrow(DEMO_DF),sampleSize) group <- as.integer(1:nrow(DEMO_DF) %in% index) rownames(DEMO_DF) <- DEMO_DF$CUST_ID DEMO_DF.train <- DEMO_DF[group==FALSE,] class(DEMO_DF.train) DEMO_DF.test <- DEMO_DF[group==TRUE,] class(DEMO_DF.test) 'ore.frame' 'ore.frame' - After splitting the data, let's see the count of rows in train and test to see if any rows are left out in either of the datasets.

cat("\nTraining data: ") dim(DEMO_DF.train) cat("\nTest data: ") dim(DEMO_DF.test) Training data: 2700 13 Test data: 1800 13 - Build your model using the

ore.odmSVMfunction, which creates a Support Vector Machine model using the training data. Theore.odmSVMfunction is the R interface to the in-database SVM algorithm. Then we will make the prediction using this model for our test data.ore.exec( "BEGIN DBMS_DATA_MINING.DROP_MODEL(model_name => 'SVM_CLASSIFICATION_MODEL'); EXCEPTION WHEN OTHERS THEN NULL; END;" ) MOD <- ore.odmSVM( formula = AFFINITY_CARD ~ ., data = DEMO_DF.train, type = "classification", kernel.function = "system.determined", odm.settings = list(model_name = "SVM_CLASSIFICATION_MODEL") ) RES <- predict( object = MOD, data = DEMO_DF.test, type = c("raw", "class"), norm.votes = TRUE, cache.model = TRUE, supplemental.cols = c( "CUST_ID", "AFFINITY_CARD", "EDUCATION", "HOUSEHOLD_SIZE", "OCCUPATION", "YRS_RESIDENCE" ) )

Parent topic: Classification Use Case

3.1.4 Evaluate

Before you make predictions using your model on new data, you should first evaluate model accuracy. You can evaluate the model using different methods.

Show Model Accuracy

To check the accuracy of our model, we use a confusion matrix. The confusion matrix is a table that shows the correct model predictions and incorrect predictions for each class. After creating the confusion matrix, the code calculates the accuracy of the model by dividing the number of correct predictions by the total number of predictions.

CMATRIX <- with(RES, table(AFFINITY_CARD, PREDICTION))

CMATRIX

PREDICTION

AFFINITY_CARD 0 1

0 1206 145

1 180 269To show the model accuracy, run the following statements:

ACCURACY <- CMATRIX / sum(CMATRIX)

round(sum(diag(ACCURACY)),3)*100

83.6The result of the confusion matrix shows that the accuracy on the test set is 83.6%

Show Prediction Results

Here you will display the prediction results.

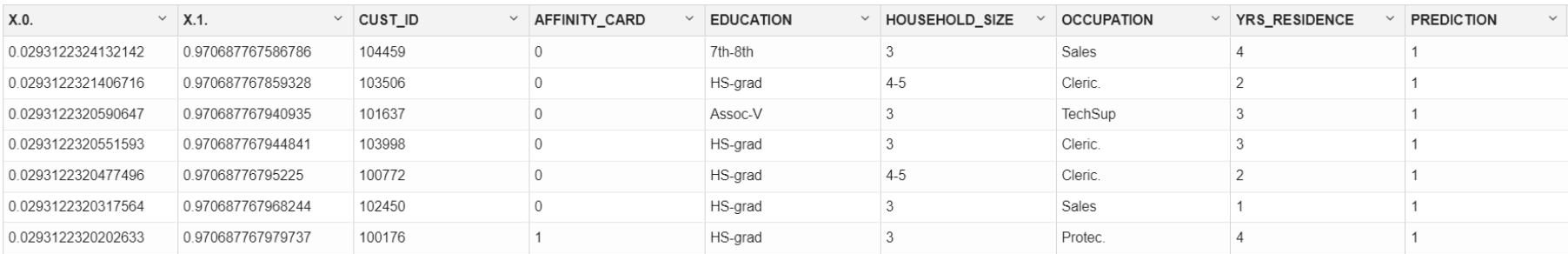

- To display the prediction results, run the following code:

z.show(ore.sort(RES[(RES$"'1'" > 0.5),], by = c("'1'")))

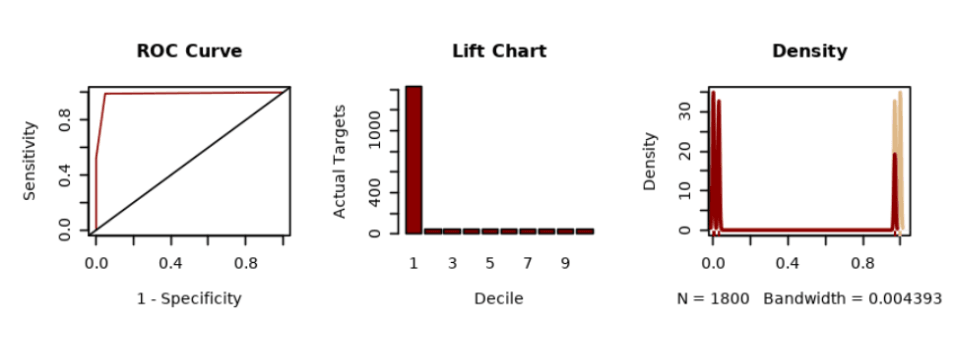

- To display the prediction result using ROC Curve, Lift Chart, and Distribution Chart, run the following code:

# BAR PLOT res <- ore.pull(RES) sensitivity <- res[order(res$"'1'",decreasing = TRUE), ] sens <- sum(sensitivity$"'0'")/sum(sensitivity$"'0'") - cumsum(sensitivity$"'0'")/sum(sensitivity$"'0'") spec <- cumsum(sensitivity$"'1'")/sum(sensitivity$"'1'") # LIFT CHART decile2 <- quantile(sensitivity$"'1'", probs = seq(.1, .9, by = .1)) df_sens <- as.data.frame(sensitivity$"'1'", col.names = c("sens")) df_sens$decile = as.numeric(cut(1-cumsum(df_sens$sens), breaks=10)) # DISTRIBUTION CHART dx <- density(res$"'0'") dx2 <- density(res$"'1'") # PLOTS 3x1 par(mfrow=c(3,3)) plot(1 - spec, sens, type = "l", col = "darkred", ylab = "Sensitivity", xlab = "1 - Specificity", main = 'ROC Curve') abline(c(0,0),c(1,1)) paste("AUC: ", round(sum(spec*diff(c(0, 1 - sens))),3)) barplot(table(df_sens$decile), xlab = 'Decile', ylab = 'Actual Targets', main = 'Lift Chart', col = "darkred") plot(dx, lwd = 2, col = "burlywood", main = "Density") lines(dx2, lwd = 2, col = "darkred") # Add the data-poins with noise in the X-axis rug(jitter(res$"'0'"),col='burlywood') rug(jitter(res$"'1'"),col='darkred')

Parent topic: Classification Use Case

3.1.5 Deploy the Model

The machine learning model, SVM_CLASSIFICATION_MODEL, has been successfully trained and exists in your schema as a first-class database object. While you can use this model directly from R, for database applications, you can also run it directly from SQL queries.

Using the SVM Model in SQL

To facilitate this, you will create a SQL table, SVM_TEST_TABLE, mirroring the structure of your R data frame, DEMO_DF. This will allow you to seamlessly integrate the model's predictions into your database work flows. To use the trained SVM model into your SQL environment, follow the steps below:

- Create a table that mirrors the structure of your R data frame, enabling seamless prediction workflows within the database.

Note:

The data provided to the model through SQL queries must be prepared in the same manner as the data used to build the model in R.ore.drop(table = "SVM_TEST_TABLE") ore.create(DEMO_DF, table ="SVM_TEST_TABLE") - Use the SQL Interface to score data and display the prediction results.

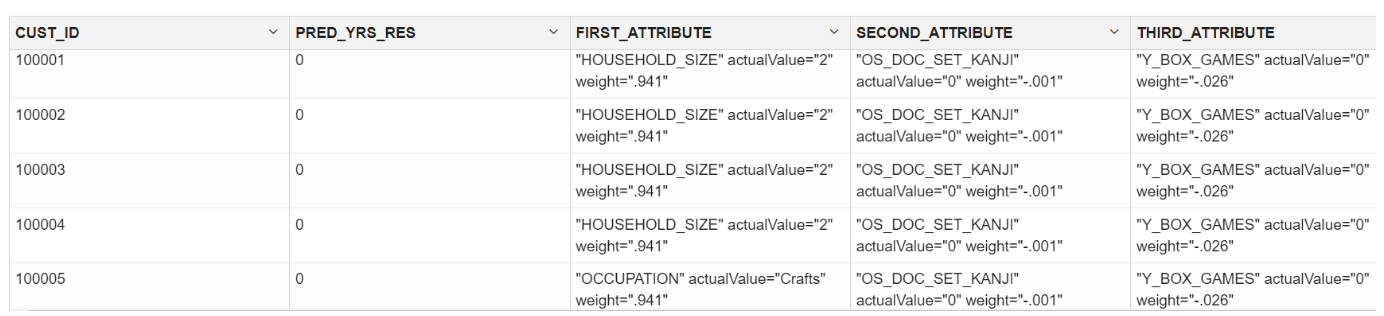

SELECT CUST_ID, round(PREDICTION_YRS_RES,3) PRED_YRS_RES, RTRIM(TRIM(SUBSTR(OUTPRED."Attribute1",17,100)),'rank="1"/>') FIRST_ATTRIBUTE, RTRIM(TRIM(SUBSTR(OUTPRED."Attribute2",17,100)),'rank="2"/>') SECOND_ATTRIBUTE, RTRIM(TRIM(SUBSTR(OUTPRED."Attribute3",17,100)),'rank="3"/>') THIRD_ATTRIBUTE FROM (SELECT CUST_ID, PREDICTION(SVM_CLASSIFICATION_MODEL USING *) PREDICTION_YRS_RES, PREDICTION_DETAILS(SVM_CLASSIFICATION_MODEL USING *) PD FROM SVM_TEST_TABLE WHERE CUST_ID < 100015 ORDER BY CUST_ID) OUT, XMLTABLE('/Details' PASSING OUT.PD COLUMNS "Attribute1" XMLType PATH 'Attribute[1]', "Attribute2" XMLType PATH 'Attribute[2]', "Attribute3" XMLType PATH 'Attribute[3]') OUTPRED

The SQL code demonstrates how to deploy and use a trained SVM classification model (

SVM_CLASSIFICATION_MODEL) within a database environment. It showcases the process of scoring new data, extracting predicted values (PREDICTION_YRS_RES), and retrieving relevant attributes (FIRST_ATTRIBUTE, SECOND_ATTRIBUTE, THIRD_ATTRIBUTE) using thePREDICTION_DETAILSfunction.

Using the SVM Model in R

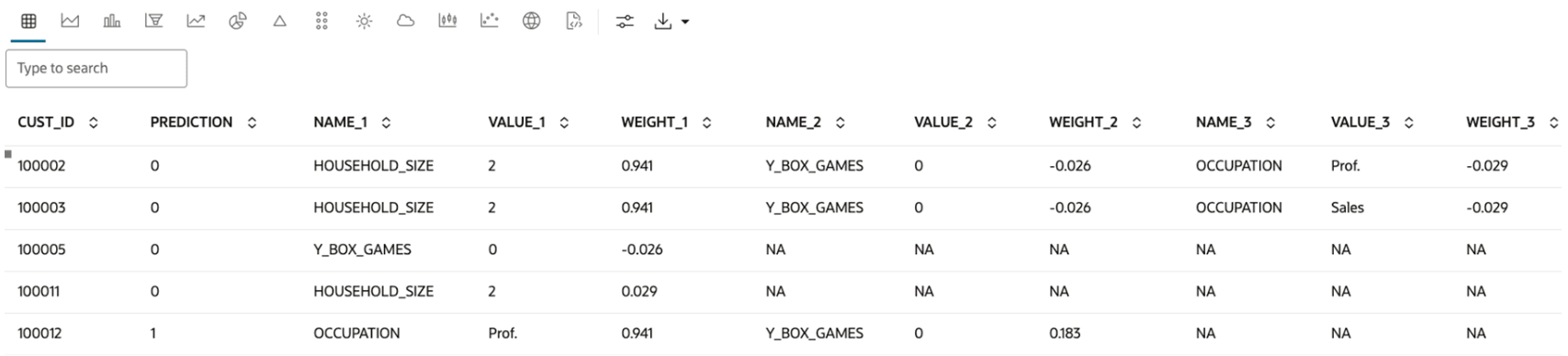

You can also make predictions and obtain prediction details directly from R using the following code:

z.show(predict(

object = MOD,

newdata = DEMO_DF.test,

supplemental.cols = c("CUST_ID"),

topN.attrs = 3

)

)The output appears as follows:

Deploying the Model to Other Databases or OML Services

To deploy the model to other databases or OML Services, follow these steps:

- Export the Model:

- Use the

DBMS_DATA_MINING.EXPORT_SERMODELprocedure to export the model to a BLOB object. - Save the BLOB object to a file or another storage location.

- Use the

- Import the Model into Another Database:

- In the target database, use

DBMS_DATA_MINING.IMPORT_SERMODELto import the model from the BLOB object.

- In the target database, use

- Deploy the Model to OML Services:

- Use the OML REST API to upload the model and create a REST endpoint for scoring. Refer to the OML Services documentation for specific instructions.

For more information, see DBMS_DATA_MINING Package and OML Services Documentation.

This use case identified customers most likely to be positive responders to an Affinity Card loyalty program using a Support Vector Machine (SVM) classification model. Thus, the model can be used to predict which customers are likely to become high-value customers with the Affinity Card program, allowing the store to focus their marketing resources more effectively.

Parent topic: Classification Use Case