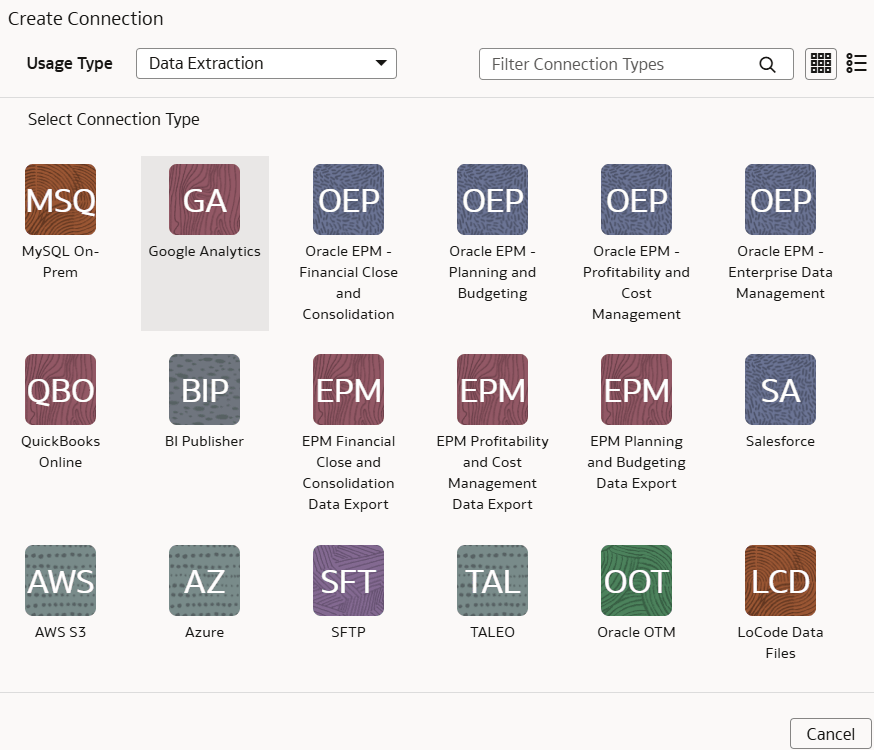

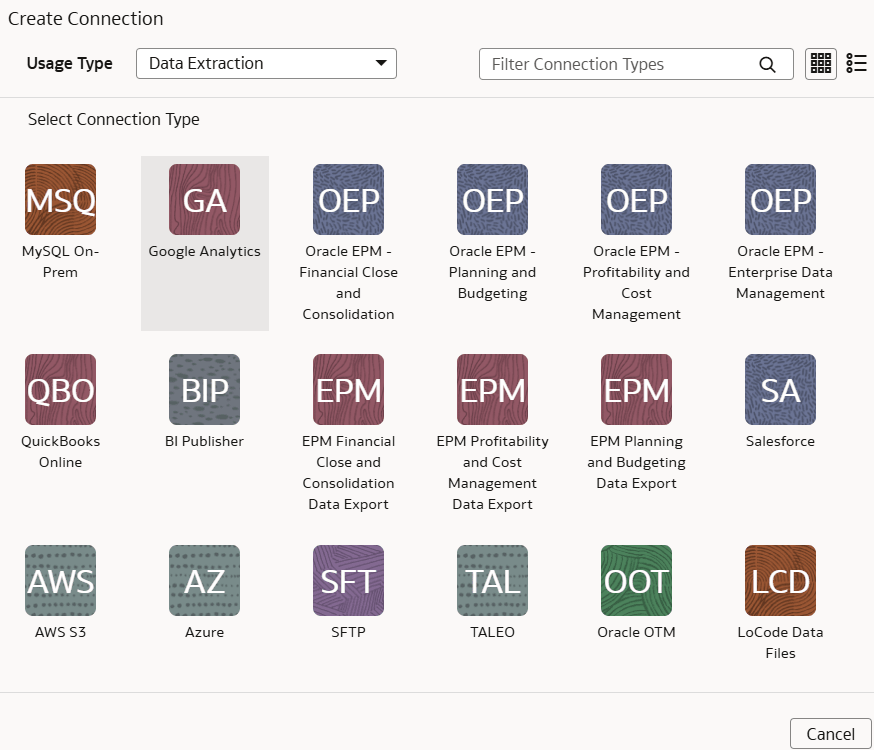

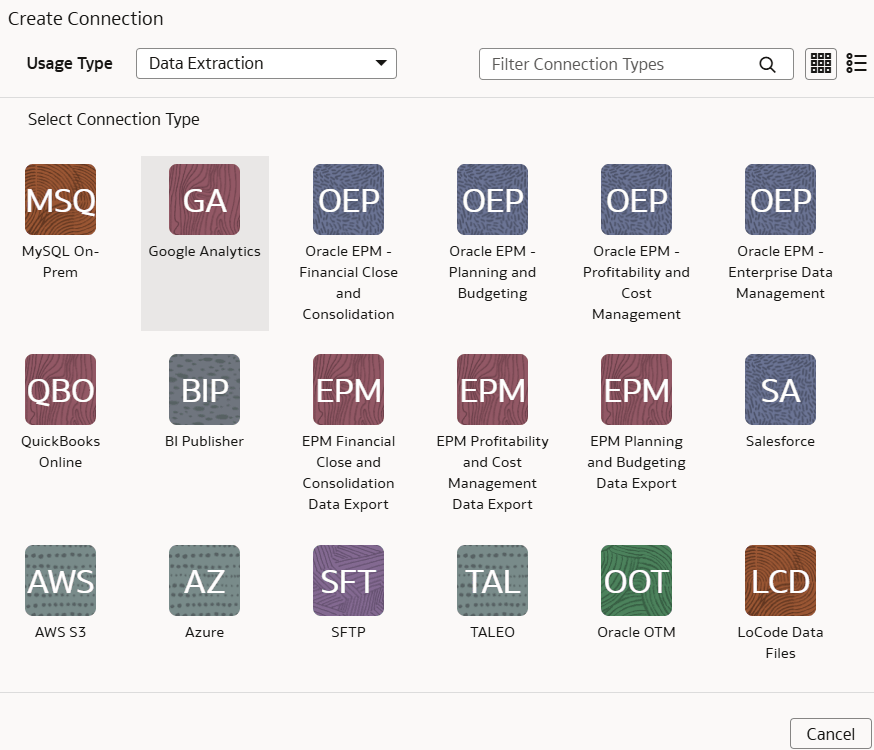

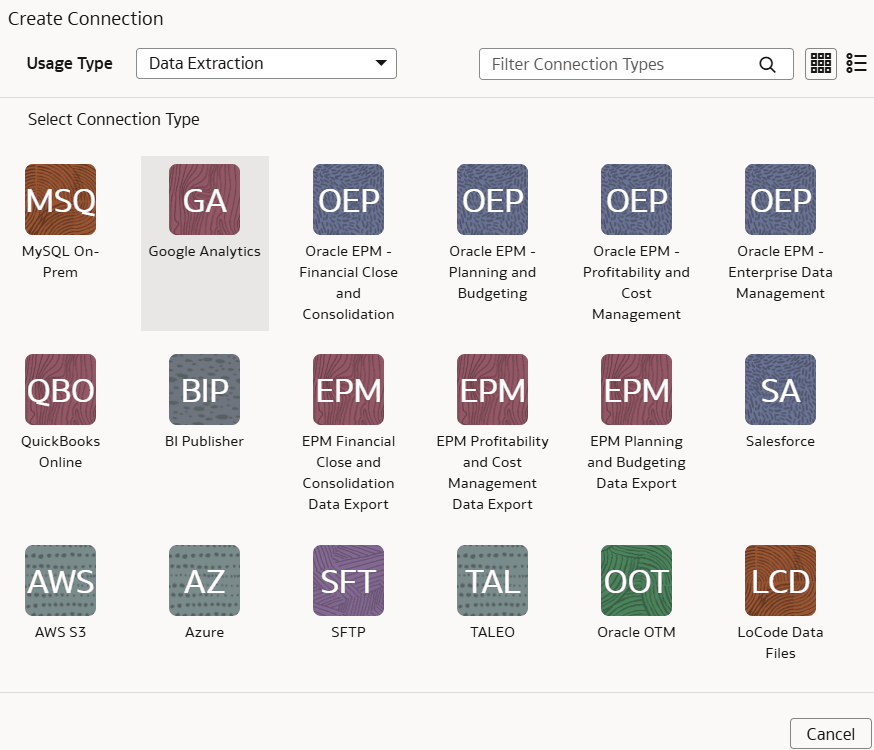

Manage Data Connections

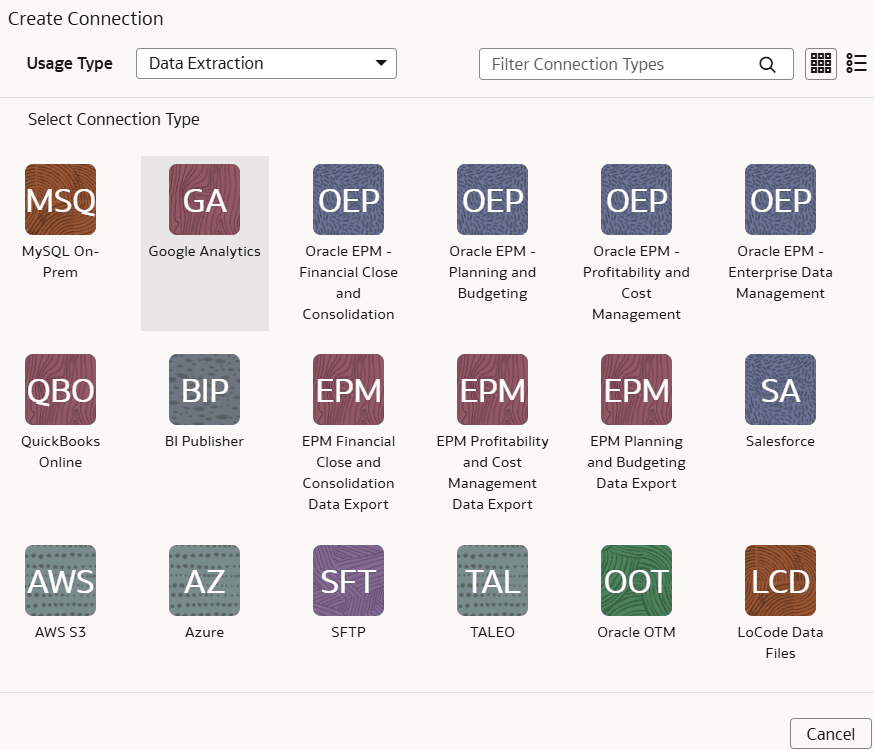

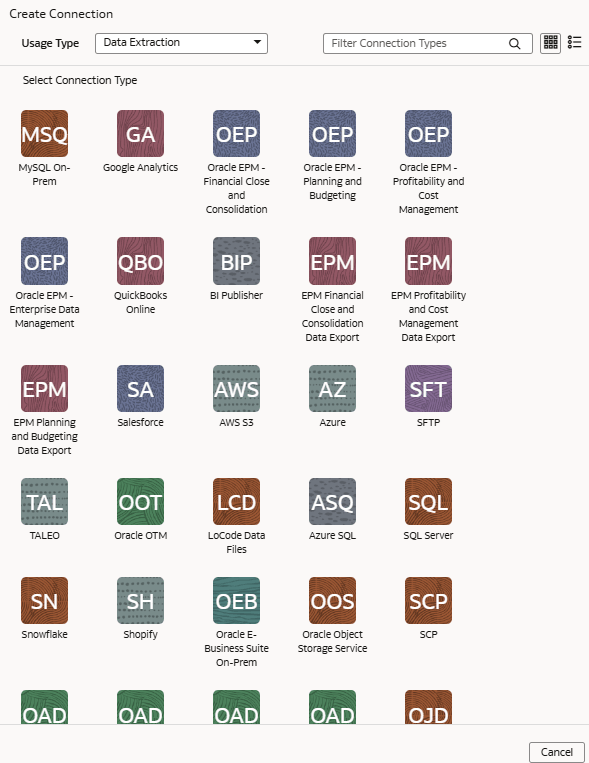

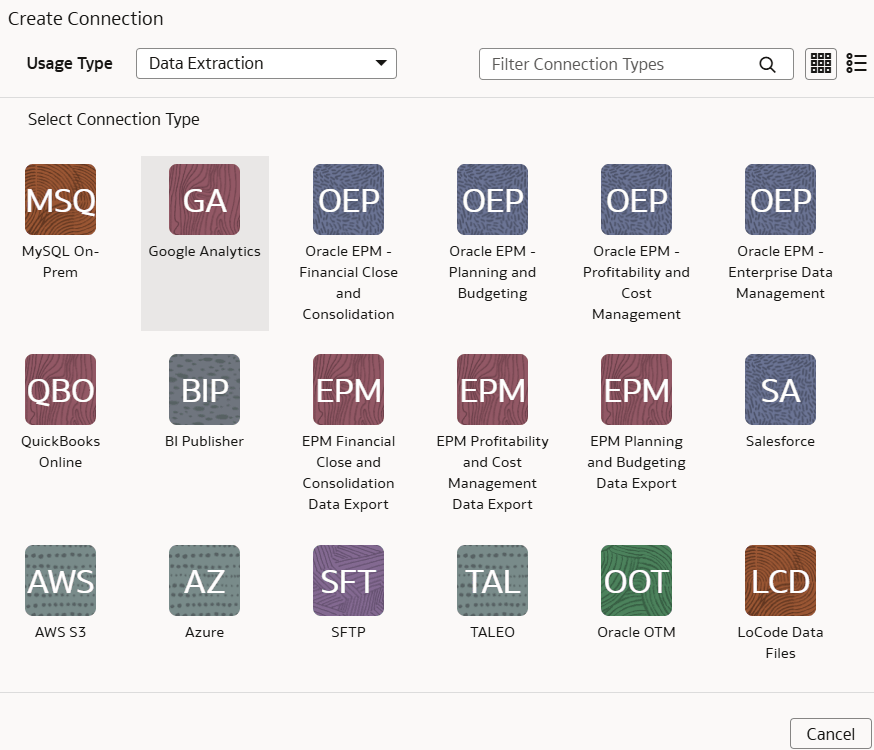

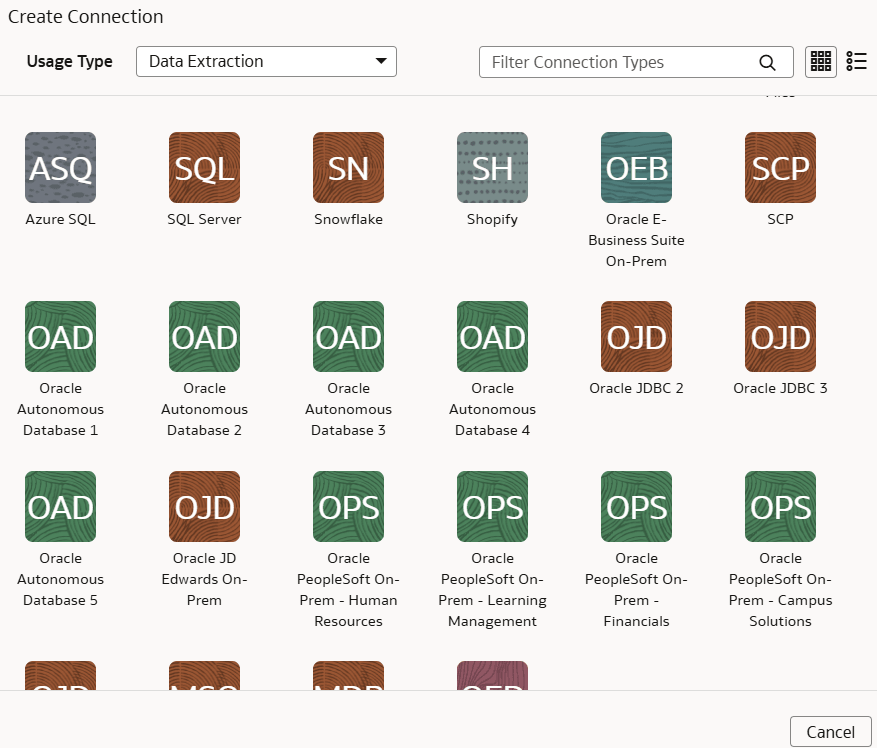

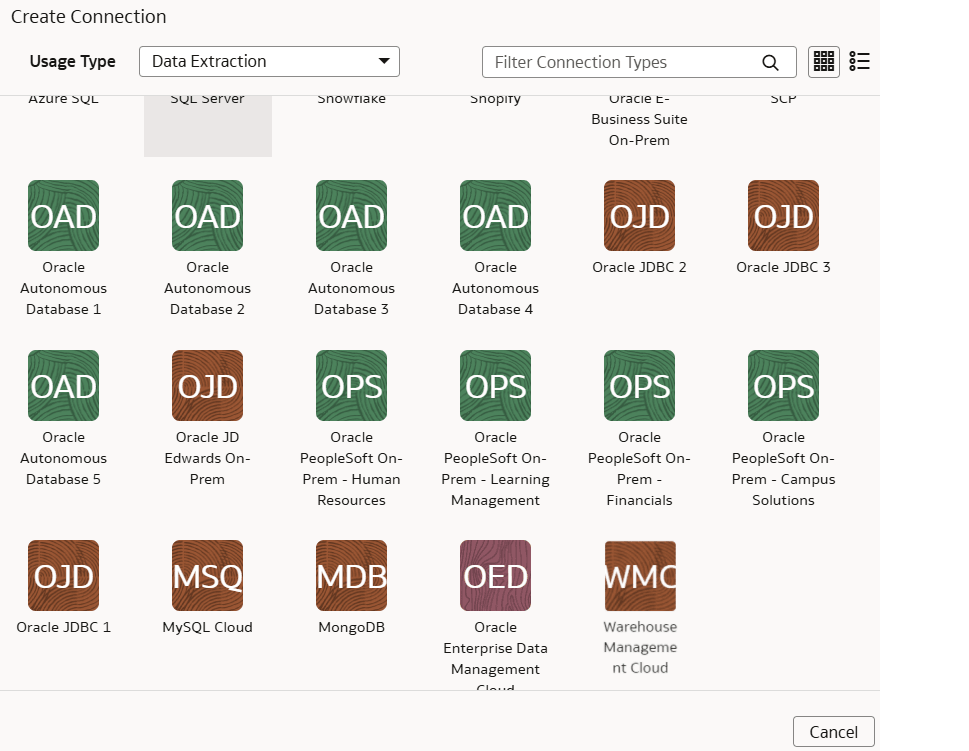

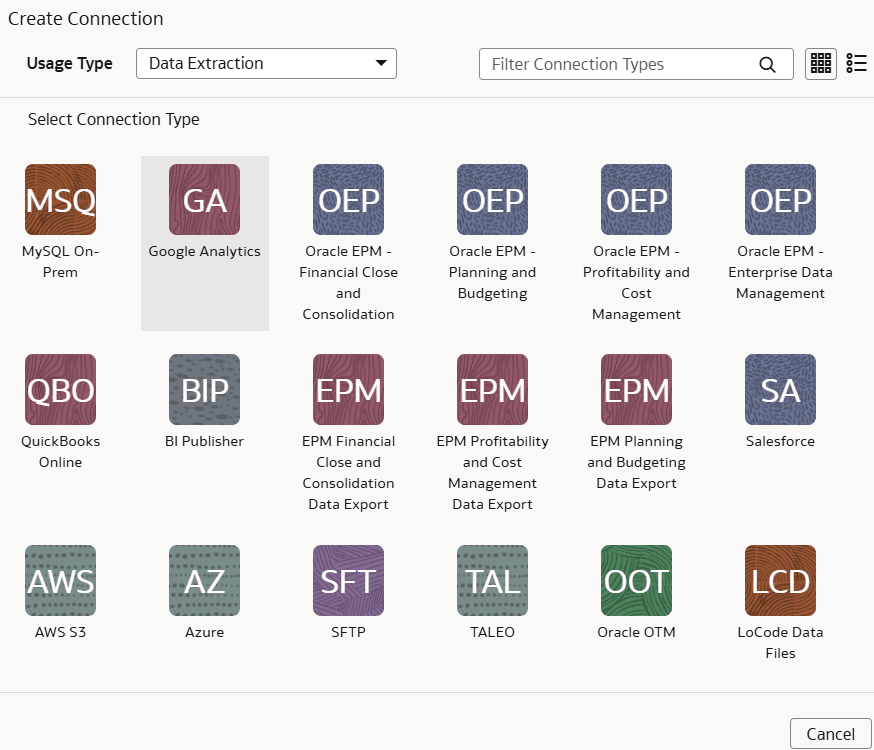

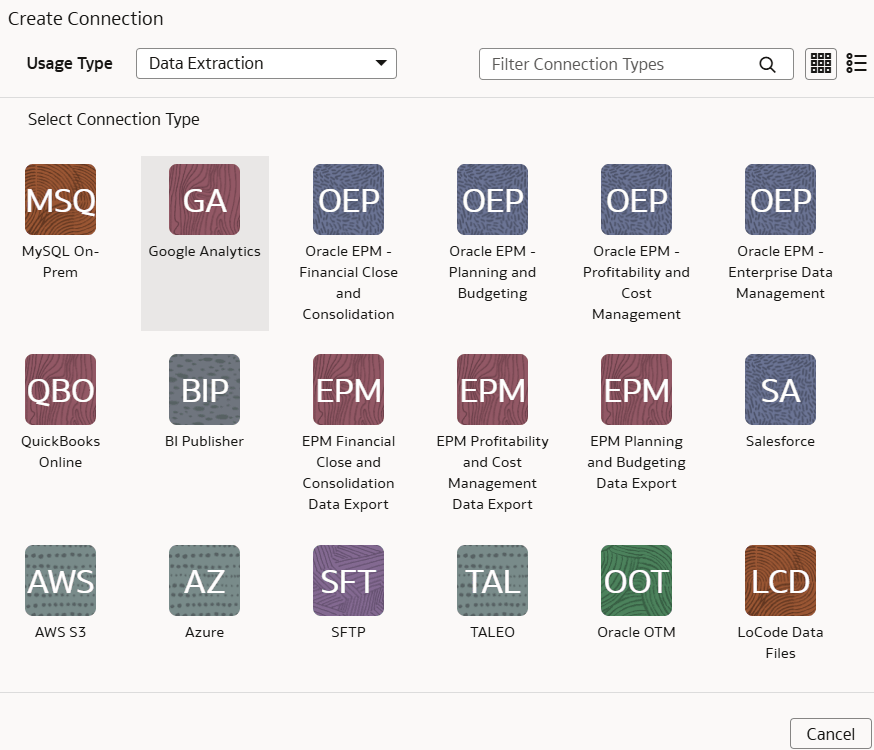

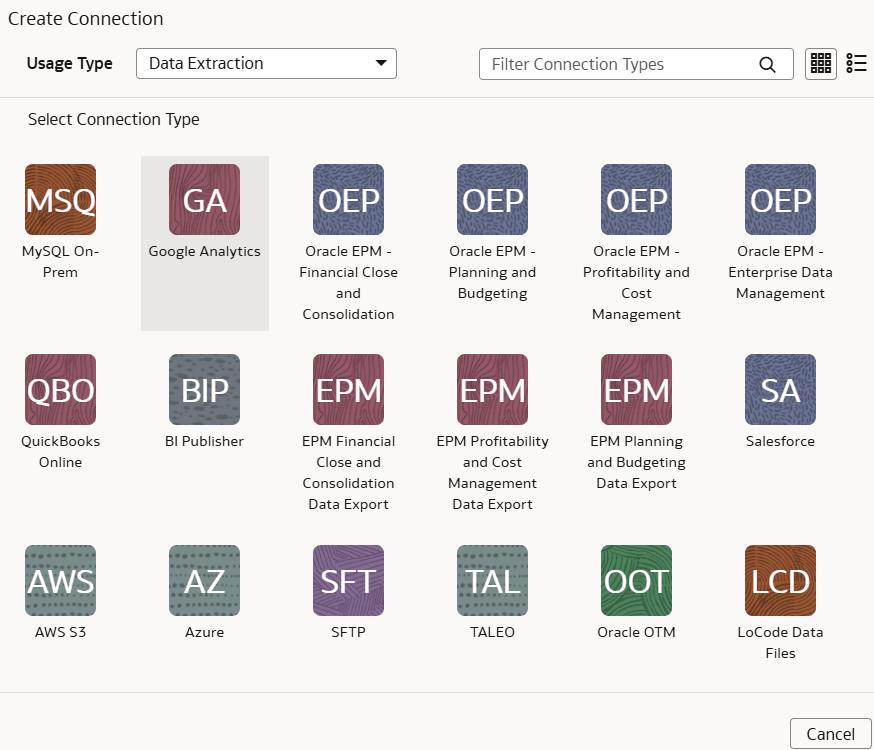

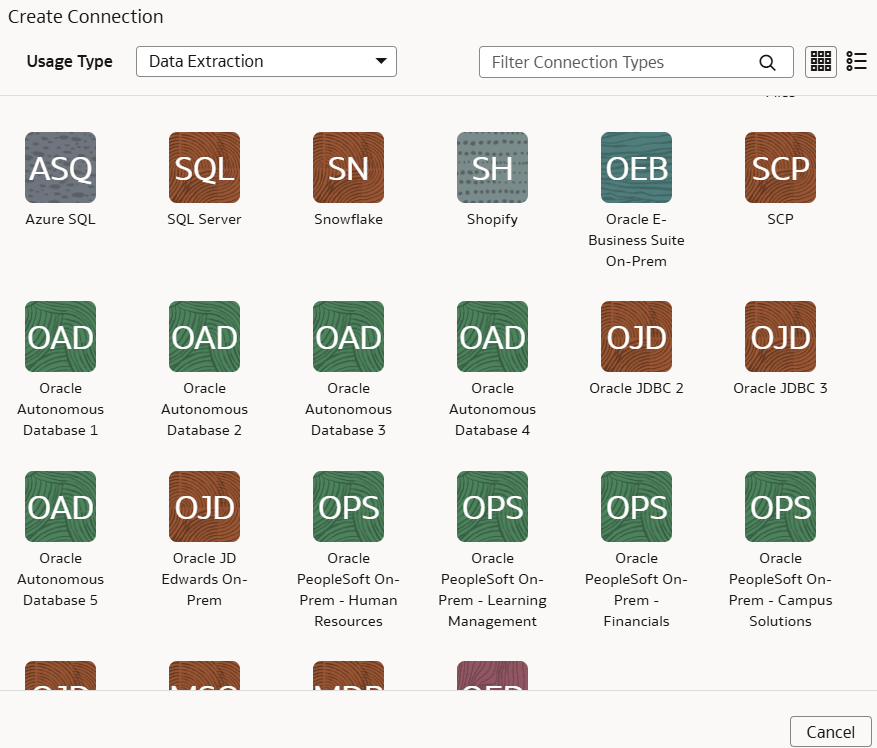

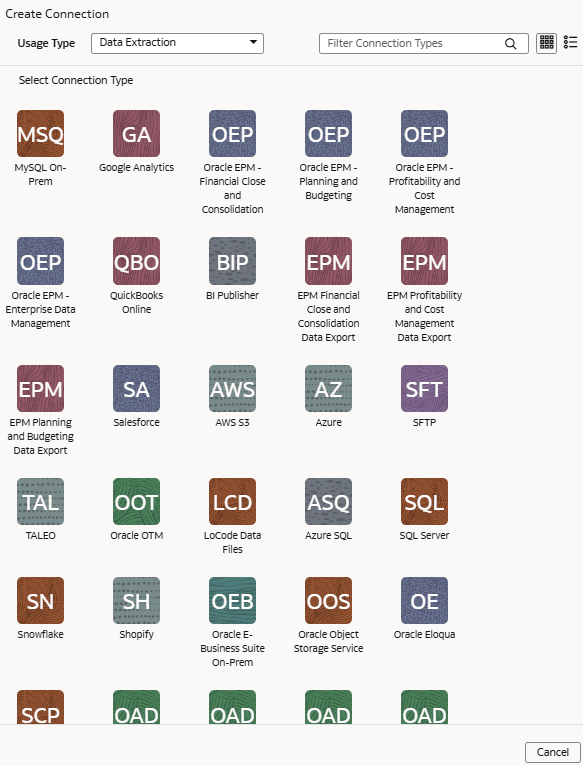

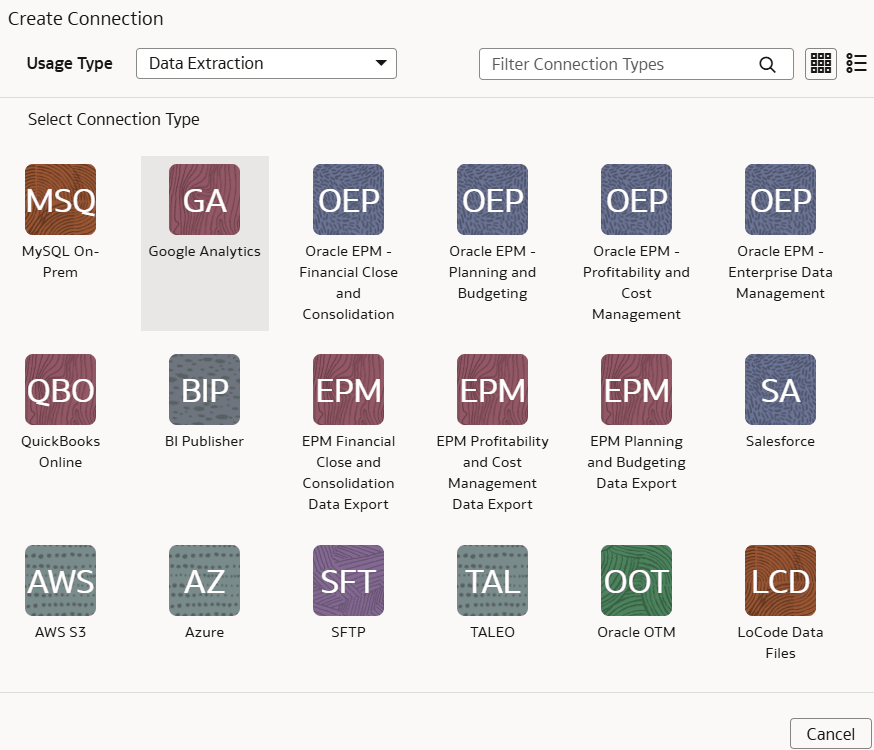

You can connect to a variety of data sources and remote applications to provide the background information for reports. You can blend the additional data from the various data sources with the prebuilt datasets to enhance business analysis.

Oracle NetSuite Analytics Warehouse can connect to other pre-validated data sources such as Oracle Object Storage, cloud applications such as Google Analytics, and on-premises applications such as Oracle E-Business Suite.

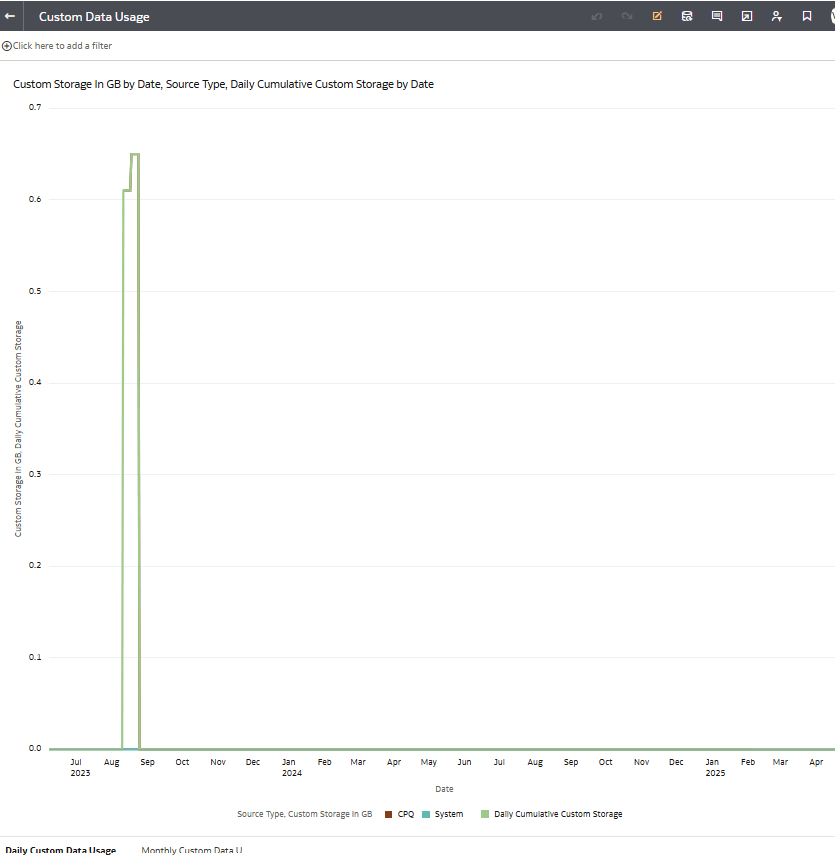

You can view the usage of capacity for custom data that's loaded into Oracle NetSuite Analytics Warehouse through the connectors in the Custom Data Usage dashboard available in the Common folder. The dashboard shows data loaded daily and monthly from each of the activated external data sources.

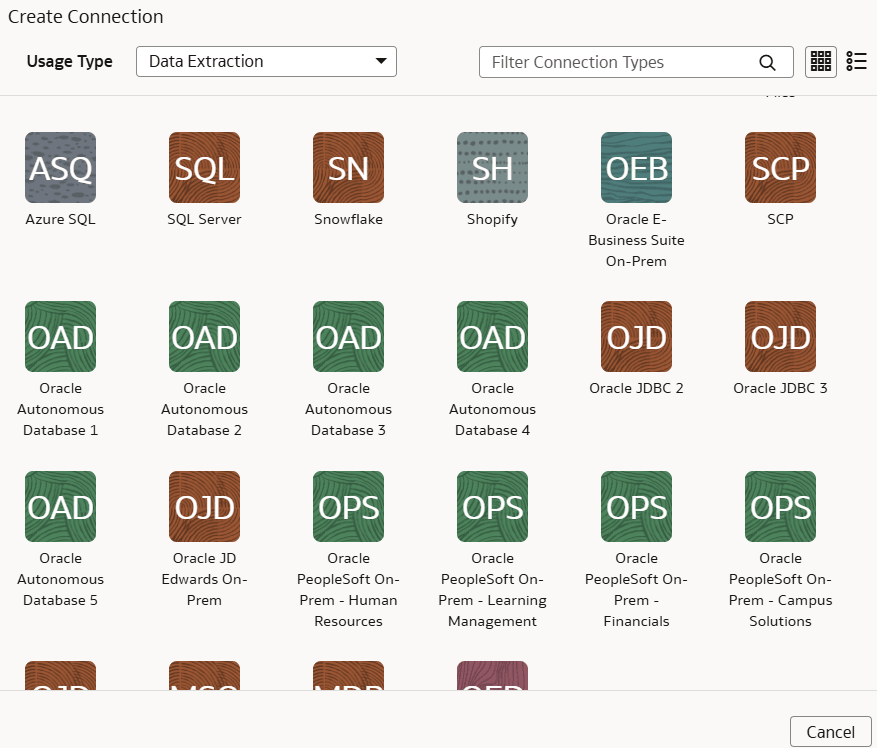

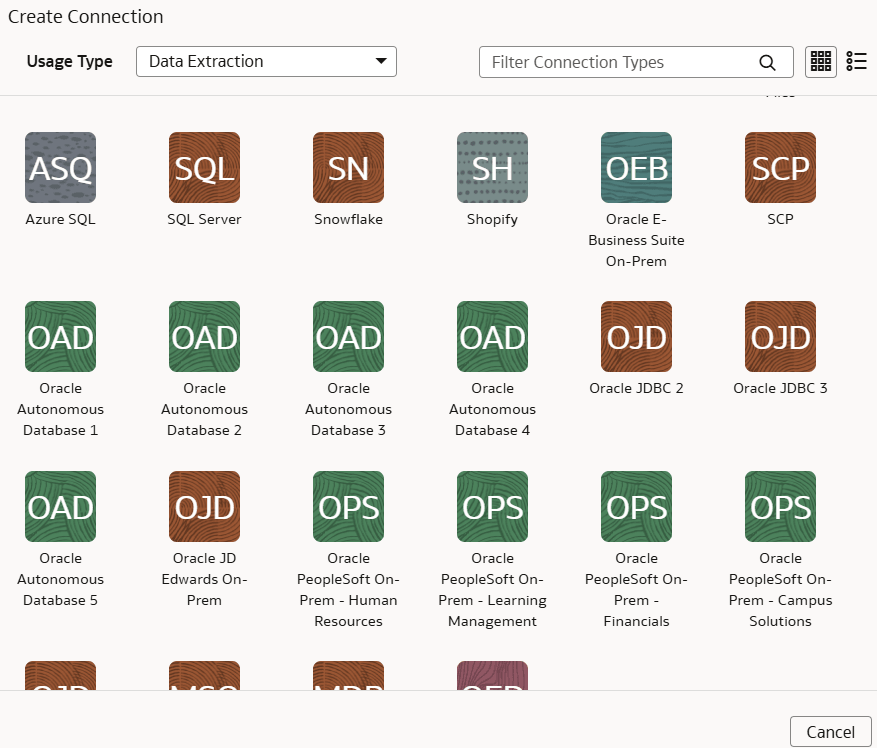

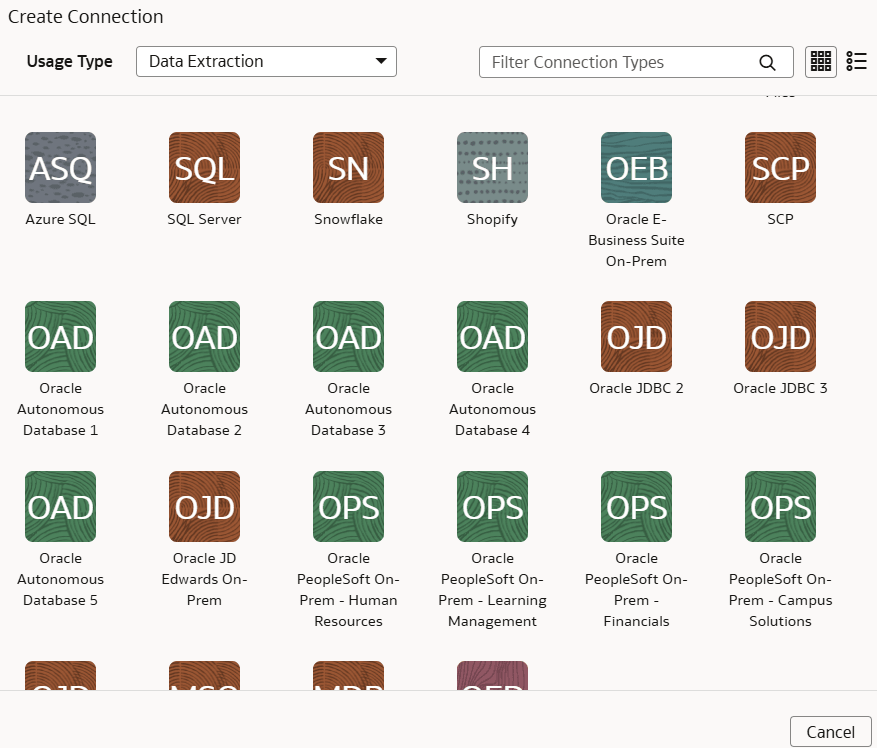

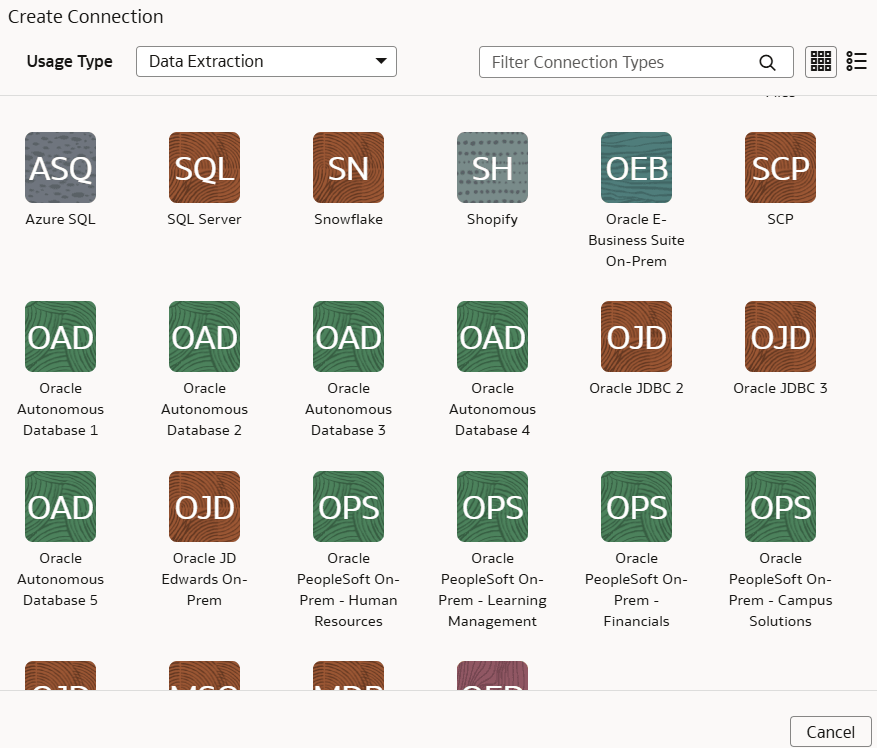

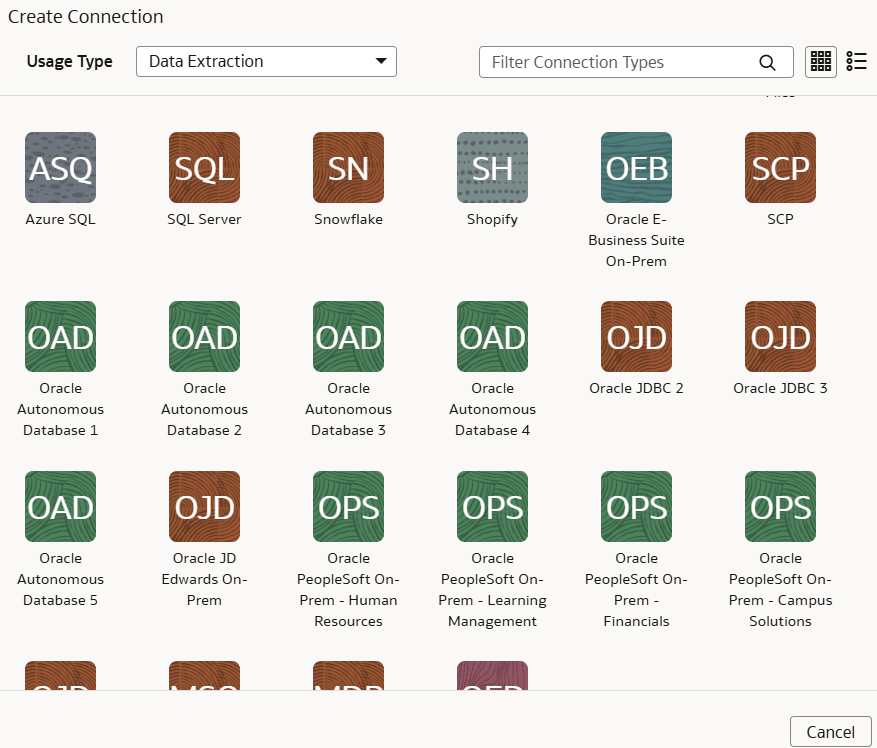

Connect With On-premises Sources

Connect with your on-premises applications to provide the background information for reports.

You can blend the additional data from these data sources with the prebuilt datasets to enhance business analysis.

Topics

- Set up the Remote Agent to Load Data into NetSuite Analytics Warehouse (Preview)

- Load Data from On-premises E-Business Suite into NetSuite Analytics Warehouse (Preview)

- Load Data from On-premises JD Edwards into NetSuite Analytics Warehouse (Preview)

- Load Data from On-premises MySQL Database into NetSuite Analytics Warehouse (Preview)

- Load Data from On-premises PeopleSoft into NetSuite Analytics Warehouse (Preview)

- Load Data from SQL Server into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from Oracle Database Using JDBC into NetSuite Analytics Warehouse (Preview)

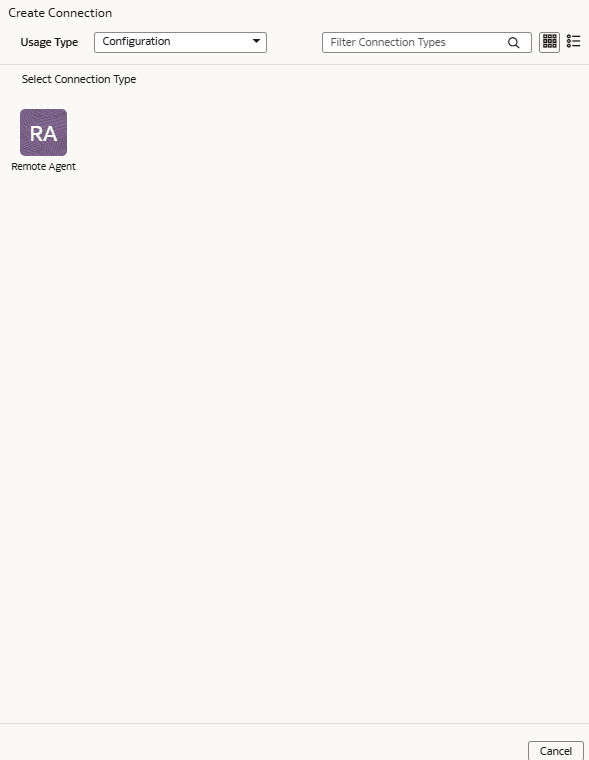

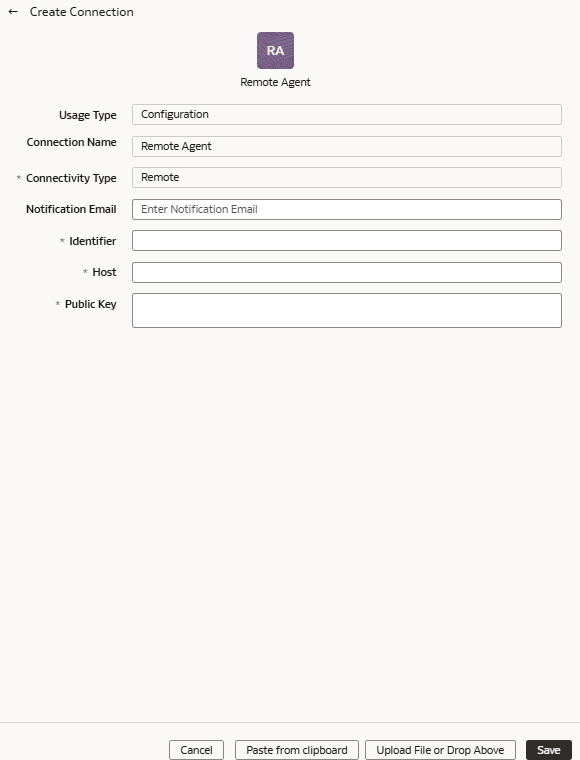

Set up the Remote Agent to Load Data into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use an extract service remote agent to connect to your on-premises systems such as E-Business Suite, PeopleSoft, and JD Edwards, load data from these on-premises systems into Oracle NetSuite Analytics Warehouse, and then use the on-premises data to create data augmentations.

After connecting to your on-premises system, the remote agent extracts the data and loads it into the autonomous data warehouse associated with your Oracle NetSuite Analytics Warehouse instance. You can extract and load the on-premises data into Oracle NetSuite Analytics Warehouse only once in 24 hours.

Configuring an extract service remote agent to connect to your on-premises systems and load data from these on-premises systems into Oracle NetSuite Analytics Warehouse involves the following steps:

- Perform Remote Agent Connection Prerequisites.

- Configure TLS for Remote Agent Configuration (Optional).

- Run the Remote Agent docker container and use the Remote Agent command line interface tool.

- For Windows users, see Run the Remote Agent Docker on Windows and Configure the Remote Agent Using the Command Line Interface Tool on Windows.

- For Linux users, see Run the Remote Agent Docker on Linux and Configure the Remote Agent Using the Command Line Interface Tool on Linux.

- Create the Remote Agent Connection.

Perform Remote Agent Connection Prerequisites

As a service administrator, you must perform setup steps before configuring an extract service remote agent to connect to your on-premises systems and load data from these on-premises systems into Oracle NetSuite Analytics Warehouse.

Configure TLS for Remote Agent Configuration (Optional)

You can configure your remote agent to use your TLS certificate instead of the default.

Run the Remote Agent Docker on Windows

Run the Remote Agent docker container as part of the remote agent connection configuration for Oracle NetSuite Analytics Warehouse.

The Docker image name is container-registry.oracle.com/fdi/remoteagent:23.8.0. Use this name in any commands that call for <docker_image>.

Configure the Remote Agent Using the Command Line Interface Tool on Windows

Use the Remote Agent command line interface (CLI) tool to configure the remote agent.

- Ensure that Windows Subsystem for Linux (WSL) is installed on your system.

- Administrator access is required.

Run the Remote Agent Docker on Linux

Run the Remote Agent docker container as part of the remote agent connection configuration for Oracle NetSuite Analytics Warehouse.

The Docker image name is container-registry.oracle.com/fdi/remoteagent:23.8.0. Use this name in any commands that call for <docker_image>.

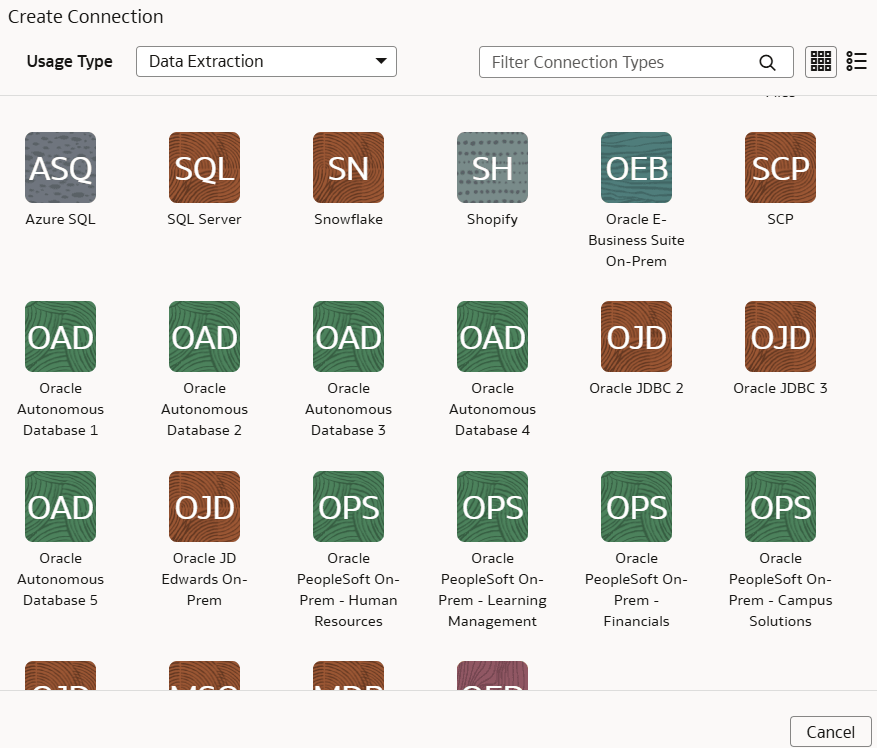

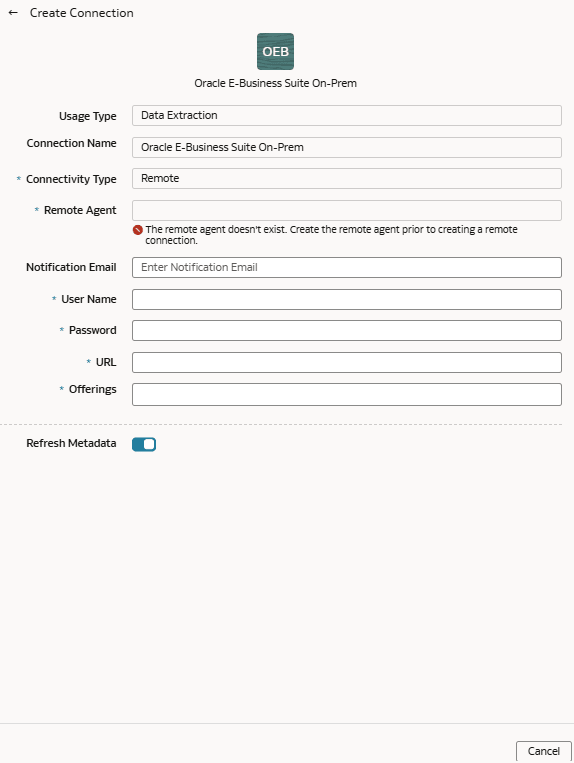

Load Data from On-premises E-Business Suite into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use an extract service remote agent to connect to your on-premises Oracle E-Business Suite system.

After connecting to your on-premises system, the remote agent extracts the data and loads it into the autonomous data warehouse associated with your Oracle NetSuite Analytics Warehouse instance. The remote agent pulls the metadata through the public extract service REST API and pushes data into object storage using the object storage REST API. You can extract and load the on-premises data into Oracle NetSuite Analytics Warehouse only once a day. Ensure that the user credentials you provide have access to the specific tables they need to extract data from within the EBS schema, whose URL you provide while creating the connection.

Ensure that Oracle E-Business Suite On-Prem is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

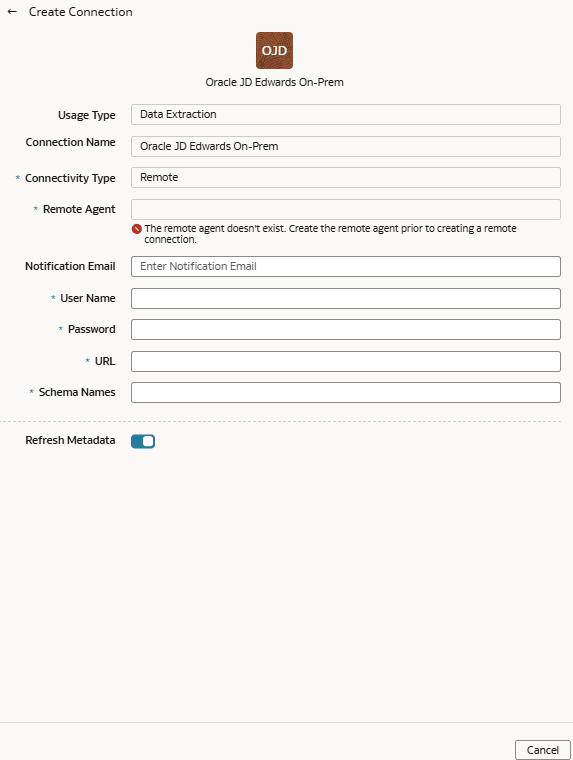

Load Data from On-premises JD Edwards into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use an extract service remote agent to connect to your on-premises JD Edwards system and use the JD Edwards data to create data augmentations.

Ensure that Remote Agent and Oracle JD Edwards On-Prem are enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

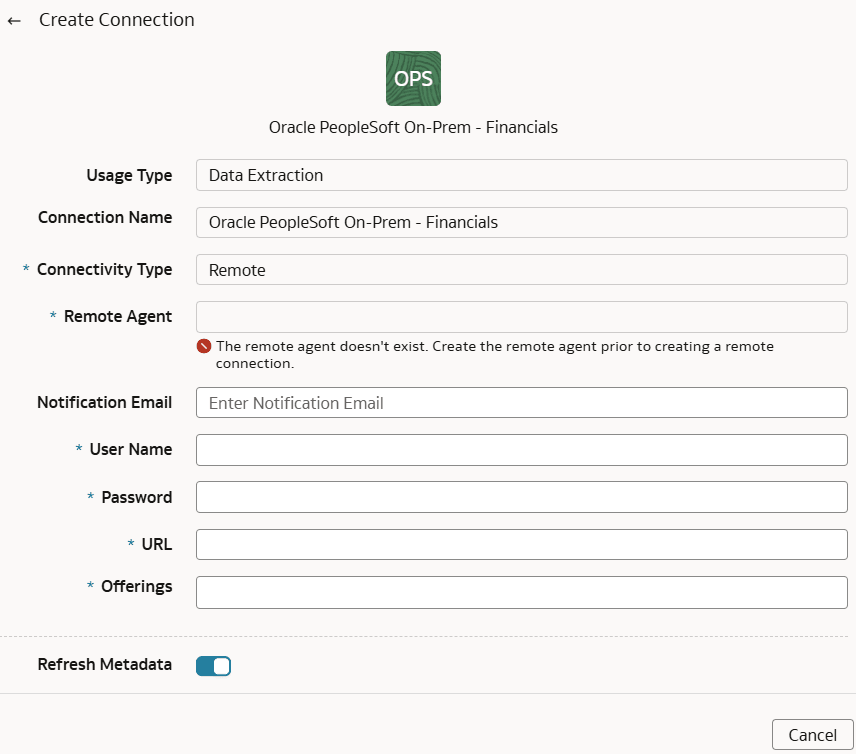

Load Data from On-premises PeopleSoft into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use an extract service remote agent to connect to your on-premises Oracle PeopleSoft system.

- Oracle PeopleSoft On-Prem - Campus Solutions

- Oracle PeopleSoft On-Prem - Financials

- Oracle PeopleSoft On-Prem - Human Resources

- Oracle PeopleSoft On-Prem - Learning Management

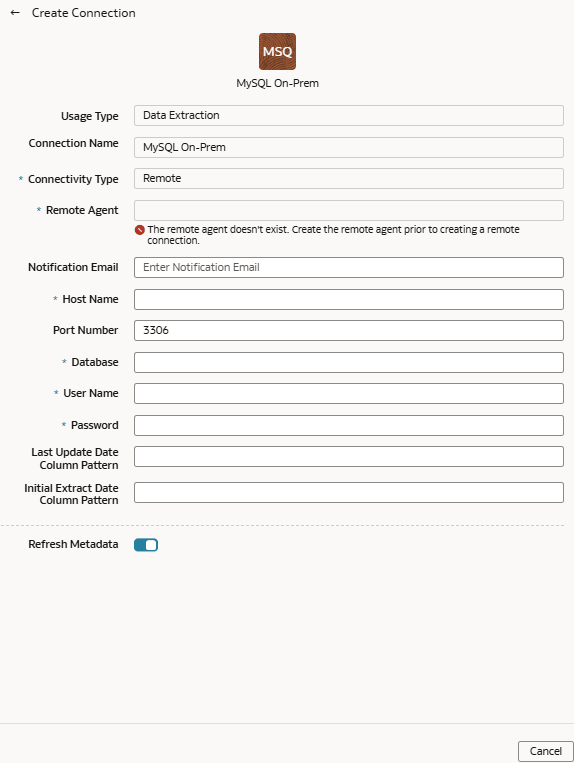

Load Data from On-premises MySQL Database into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use an extract service remote agent to connect to your on-premises MySQL database.

Ensure that MySQL On-Prem is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

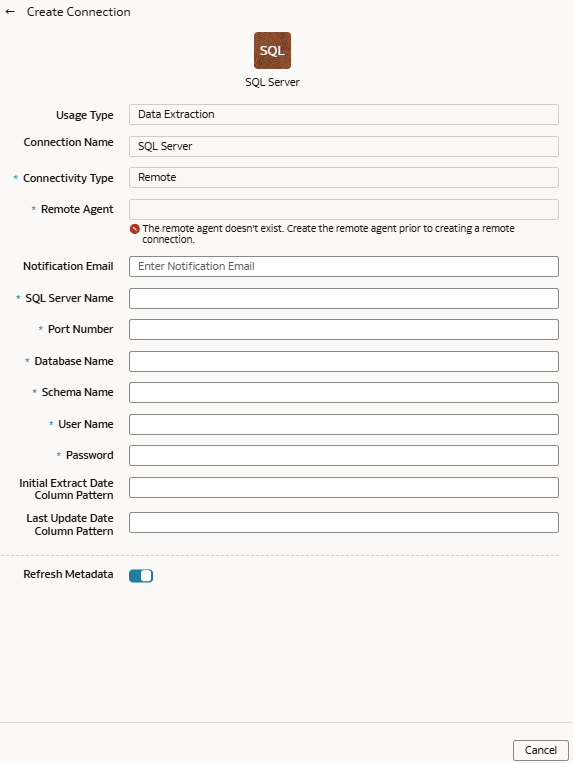

Load Data from SQL Server into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from SQL Server and use it to create data augmentations.

Ensure that SQL Server is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

Connect with Cloud File Storage Sources

Connect with your file storage-based cloud sources to provide the background information for reports.

You can blend the additional data from these data sources with the prebuilt datasets to enhance business analysis. The file-based connectors support only UTF-8 encoding for data files that you upload.
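Because the file-based connectors accept only UTF-8 data files, it can help to check a file's encoding before you upload it. The following is a minimal Python sketch, not part of the product; the function name `is_utf8` is hypothetical.

```python
from pathlib import Path


def is_utf8(path: str) -> bool:
    """Return True if the whole file decodes cleanly as UTF-8."""
    try:
        # Strict decoding raises UnicodeDecodeError on any invalid byte sequence.
        Path(path).read_bytes().decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True
```

A file saved in another encoding, such as Latin-1 with accented characters, typically fails this check; re-save such a file as UTF-8 before uploading it.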

Topics

- About OpenCSV Standards

- About Date and Timestamp Formatting for CSV File-based Extractors

- Load Data from Amazon Simple Storage Service into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from Azure Storage into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from Oracle Object Storage Service into NetSuite Analytics Warehouse

- Load Data from an SFTP Source into NetSuite Analytics Warehouse (Preview)

About OpenCSV Standards

The CSV parser in the extract service for file extractors uses Opencsv. The CSV files that are processed by the extract service must be compliant with the Opencsv standards.

See Opencsv File Standards. In addition to the Opencsv parser, the extract service supports files that are compliant with the RFC 4180 specification. The RFC 4180 CSV parser enables you to ingest single-line and multi-line data within your .csv files. The RFC 4180 parser supports ingesting data records with up to 99 line breaks. For more information on the RFC 4180 specification, see Common Format and MIME Type for Comma-Separated Values (CSV) Files.
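To find multi-line records that might exceed the 99 line break limit before you upload a file, you could scan it locally. The following Python sketch uses the standard `csv` module, which reads quoted multi-line fields in the RFC 4180 style. The function name `records_over_limit` is hypothetical, and counting newline characters inside the fields of a record is an assumption about how the limit is measured, not a documented rule.

```python
import csv
import io

MAX_LINE_BREAKS = 99  # limit stated for the RFC 4180 parser


def records_over_limit(text: str, limit: int = MAX_LINE_BREAKS) -> list[int]:
    """Return the 1-based record numbers whose fields contain more than `limit` line breaks."""
    # newline="" keeps embedded line breaks inside quoted fields untouched.
    reader = csv.reader(io.StringIO(text, newline=""))
    flagged = []
    for number, record in enumerate(reader, start=1):
        # Assumption: one "\n" inside any field counts as one line break.
        line_breaks = sum(field.count("\n") for field in record)
        if line_breaks > limit:
            flagged.append(number)
    return flagged
```

The header row counts as record 1, so a returned value of 2 refers to the first data record.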

- While using special characters:

  - For strings without special characters, quotes are optional.

  - For strings with special characters, quotes are mandatory. For example, if a string has a comma, then you must use quotes for the string such as "Abc, 123".

  - Escapes (backslash character) are optional.

  - Backslash characters must always be escaped. For example, if there is a backslash in your data, use the following format: "Double backslash ( \\ ) abc".

  - To manage quotes inside a quoted string, use a backslash inside the quotes: "Asd \" asd".

- The Opencsv parser allows you to select one of these available characters as a delimiter:

  - Comma (,)

  - Semi-colon ( ; )

  - Pipe (|)

  - Tab ( )
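The quoting and escaping rules above can be sketched as a small formatter. This is an illustrative Python example written for this guide, not the extract service's own code; the names `format_field` and `format_row` are hypothetical, and treating line breaks as special characters is an assumption.

```python
ALLOWED_DELIMITERS = {",", ";", "|", "\t"}


def format_field(value: str, delimiter: str = ",") -> str:
    """Quote and escape one field following the rules listed above."""
    special = (delimiter, '"', "\\", "\n", "\r")
    if not any(ch in value for ch in special):
        # No special characters: quotes are optional, so leave the value bare.
        return value
    # Escape backslashes first so the backslashes added for quotes stay single.
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def format_row(fields: list[str], delimiter: str = ",") -> str:
    """Join formatted fields with one of the supported delimiters."""
    if delimiter not in ALLOWED_DELIMITERS:
        raise ValueError(f"Unsupported delimiter: {delimiter!r}")
    return delimiter.join(format_field(f, delimiter) for f in fields)
```

For example, `format_row(["1", "Abc, 123"])` produces `1,"Abc, 123"`, and a field containing a double quote is written with a backslash before the quote.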

About Date and Timestamp Formatting for CSV File-based Extractors

Extractors such as Secure FTP (SFTP), Amazon Simple Storage Service (AWS S3), and Oracle Object Storage Service use CSV data files that have date and timestamp fields.

Note:

Ensure that the date and timestamp formats for the data files match the date and timestamp formats in your source; for example, if you've used MM/dd/yyyy and MM/dd/yyyy hh:mm:ss in your source, then you must specify the same formats while creating the applicable data connections.

| Example | Pattern |
|---|---|
| 1/23/1998 | MM/dd/yyyy |
| 1/23/1998 12:00:20 | MM/dd/yyyy hh:mm:ss |
| 12:08 PM | h:mm a |
| 01-Jan-1998 | dd-MMM-yyyy |
| 2001-07-04T12:08:56.235-0700 | yyyy-MM-dd'T'HH:mm:ss.SSSZ |

| Letter | Meaning |
|---|---|
| M | Month |
| d | Day |
| y | Year |
| h | Hour (0-12) |
| H | Hour (0-23) |
| m | Minute |
| s | Second |
| S | Millisecond |
| a | AM/PM |
| Z | Timezone |
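To check that sample values from a data file match the pattern you plan to enter for a connection, you could translate the pattern into a Python `strptime` format and try to parse the values. The sketch below is an approximation for local checking only: it covers just the pattern tokens shown in the tables above, the names `to_strptime` and `matches` are hypothetical, and Python's parsing (including locale-dependent month names and AM/PM markers) may differ in edge cases from the parser the extract service uses.

```python
import re
from datetime import datetime

# Pattern tokens from the tables above mapped to Python strptime directives.
_DIRECTIVES = {
    "yyyy": "%Y", "MMM": "%b", "MM": "%m", "dd": "%d",
    "HH": "%H", "hh": "%I", "h": "%I", "mm": "%M",
    "ss": "%S", "SSS": "%f", "a": "%p", "Z": "%z",
}
# A quoted literal such as 'T', a run of one repeated letter such as yyyy,
# or any other single character such as "/" or ":".
_TOKEN = re.compile(r"'([^']*)'|(([A-Za-z])\3*)|(.)", re.DOTALL)


def to_strptime(pattern: str) -> str:
    """Translate a pattern such as MM/dd/yyyy into a strptime format string."""
    parts = []
    for match in _TOKEN.finditer(pattern):
        literal, letters, _, other = match.groups()
        if letters is not None:
            if letters not in _DIRECTIVES:
                raise ValueError(f"Unsupported pattern token: {letters}")
            parts.append(_DIRECTIVES[letters])
        else:
            text = literal if literal is not None else other
            parts.append(text.replace("%", "%%"))  # keep literal % signs literal
    return "".join(parts)


def matches(value: str, pattern: str) -> bool:
    """Return True if the value parses with the translated pattern."""
    try:
        datetime.strptime(value, to_strptime(pattern))
    except ValueError:
        return False
    return True
```

For example, `matches("1/23/1998", "MM/dd/yyyy")` succeeds, while `matches("23/01/1998", "MM/dd/yyyy")` fails because 23 isn't a valid month.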

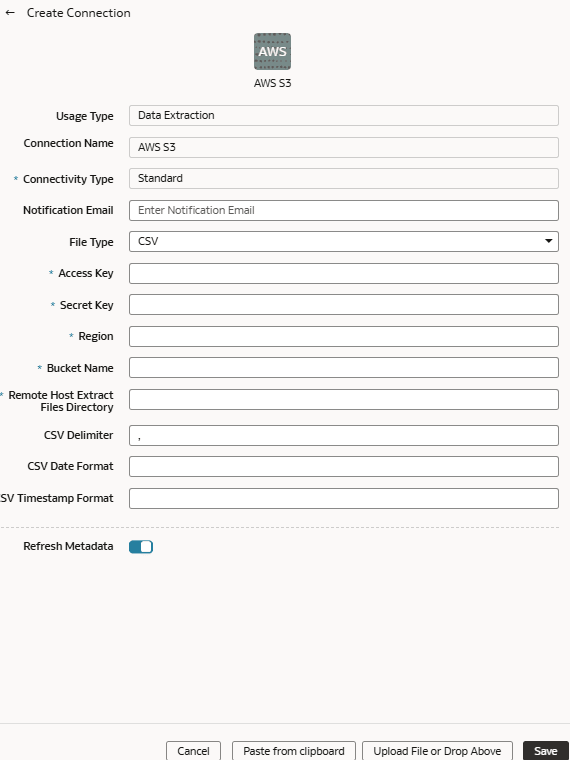

Load Data from Amazon Simple Storage Service into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from Amazon Simple Storage Service (AWS S3) and use it to create data augmentations.

Ensure that AWS S3 is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

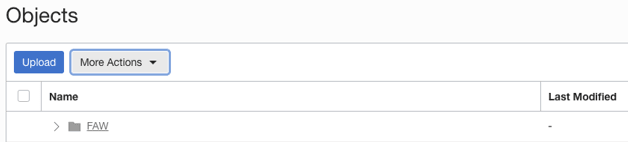

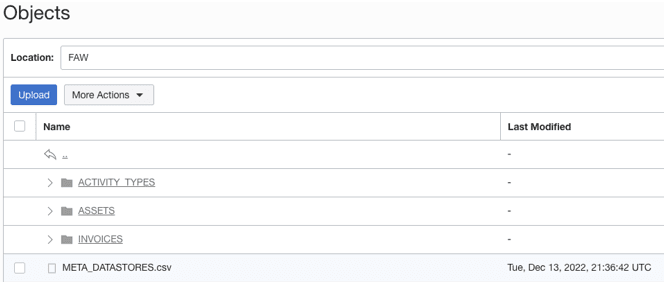

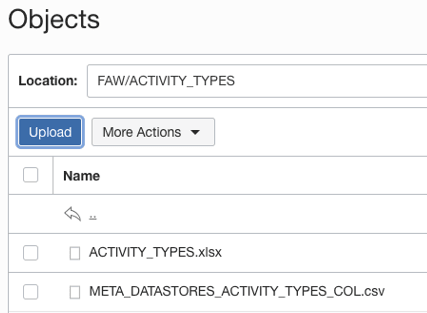

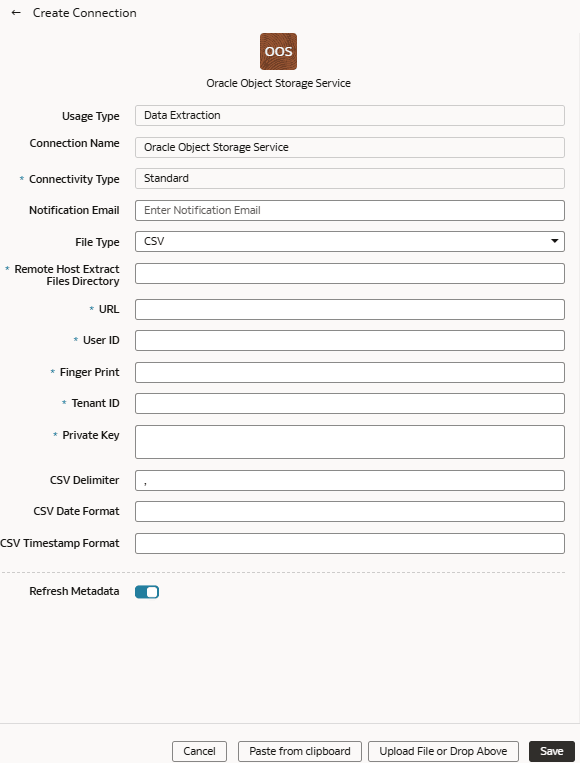

Load Data from Oracle Object Storage Service into NetSuite Analytics Warehouse

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from Oracle Object Storage Service and use it to create data augmentations.

The recommended approach is to create one augmentation from one source table after acquiring data from Oracle Object Storage Service. After the augmentation completes, NetSuite Analytics Warehouse renames the source table. If you create more than one augmentation from the same source, the other augmentations may fail with a message that the source file wasn't found.

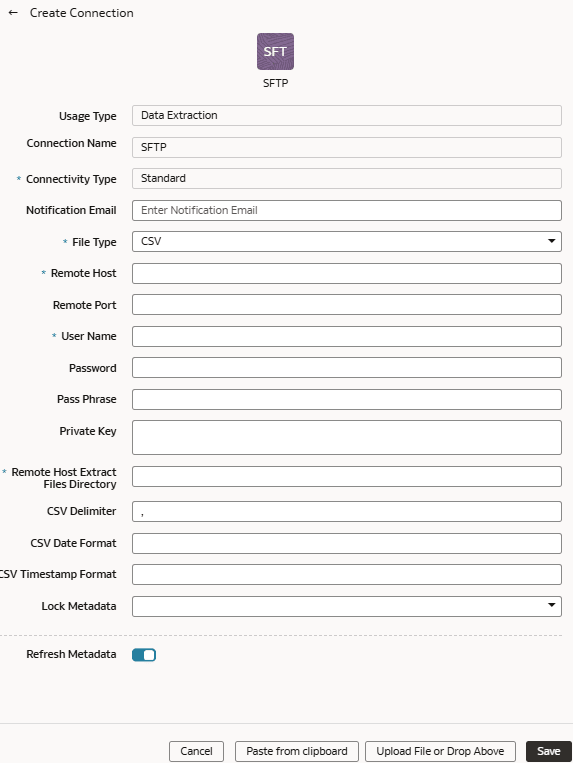

Load Data from an SFTP Source into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from a secure FTP source (SFTP) and use it to create data augmentations.

Ensure that SFTP is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

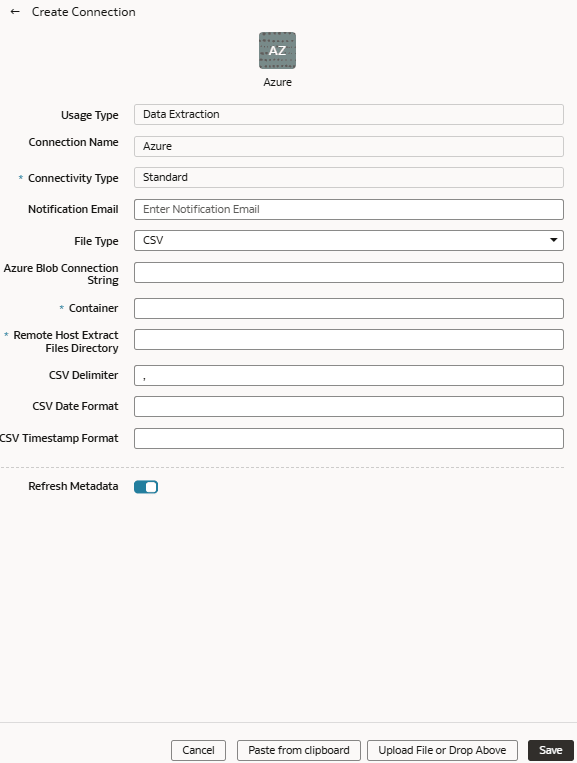

Load Data from Azure Storage into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from Azure Storage and use it to create data augmentations.

Ensure that Azure Storage is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

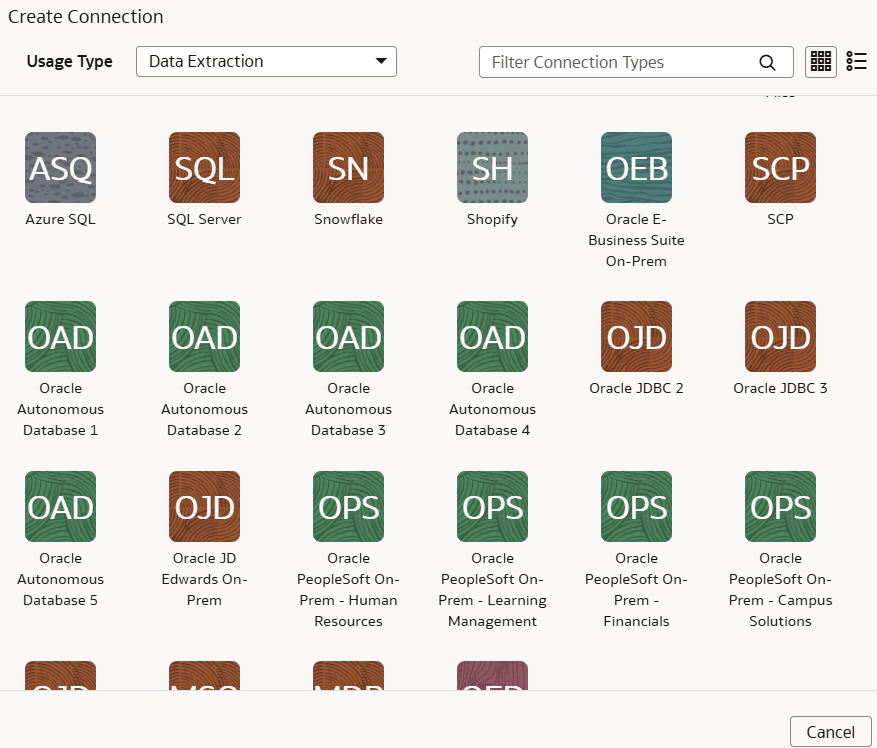

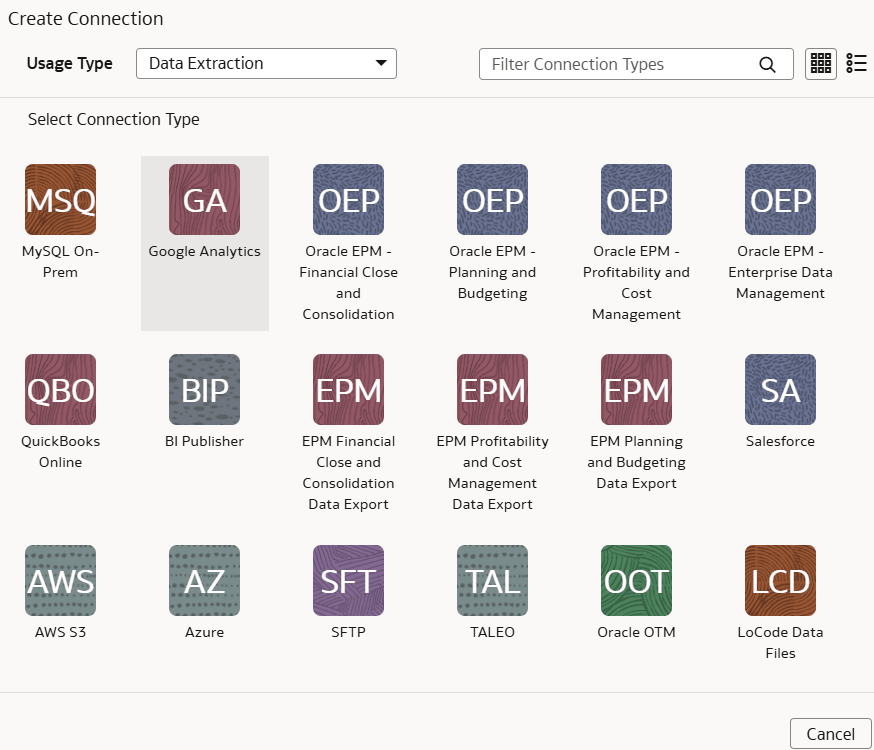

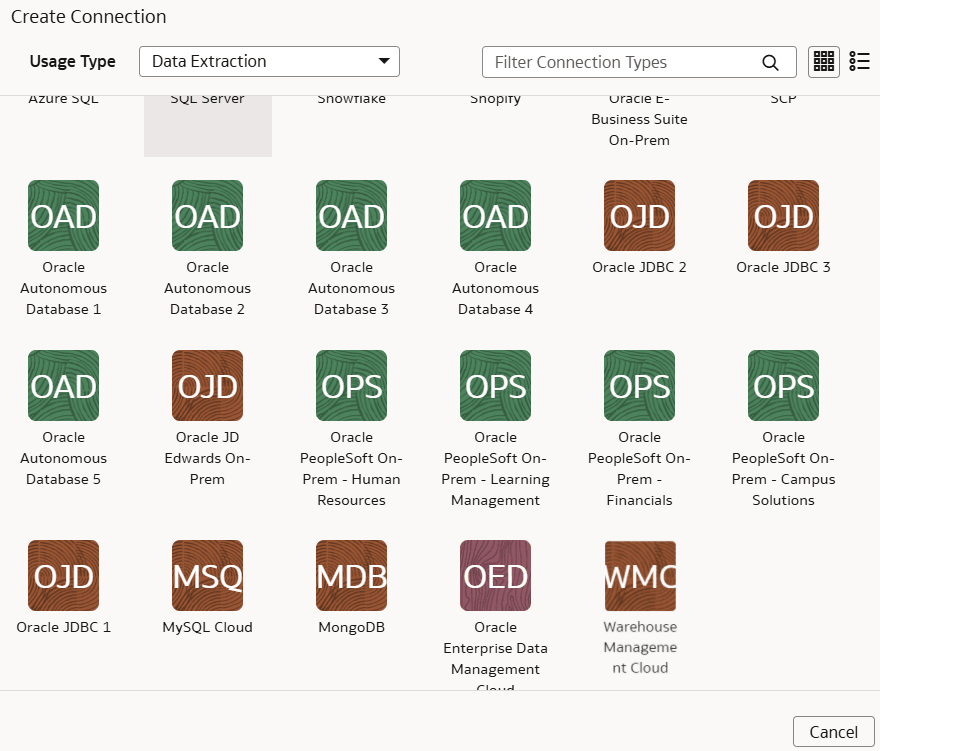

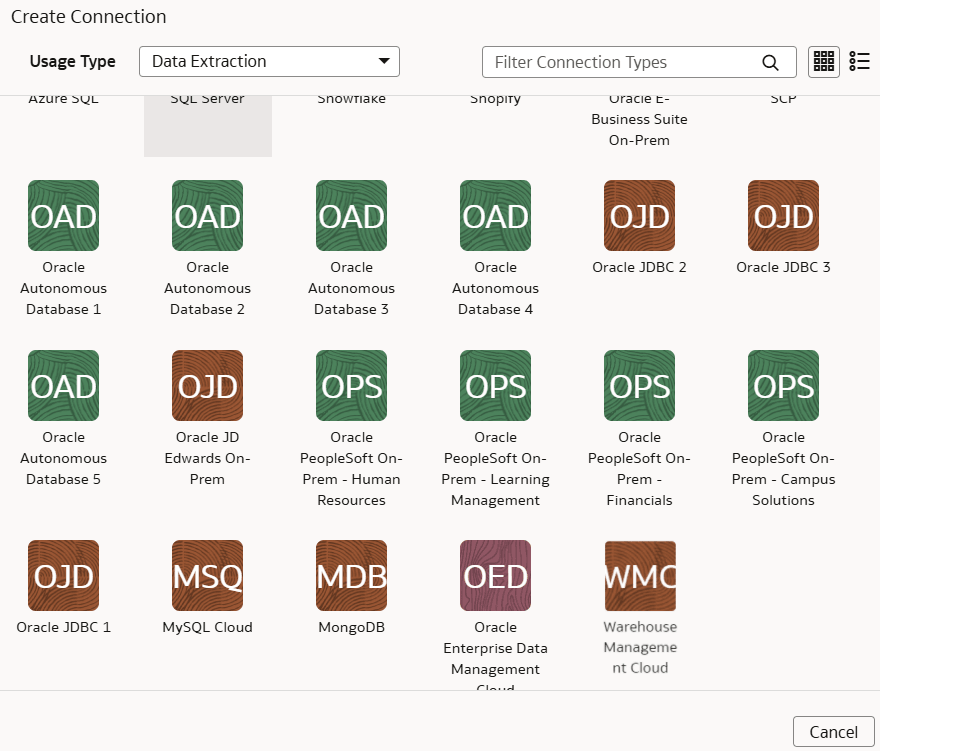

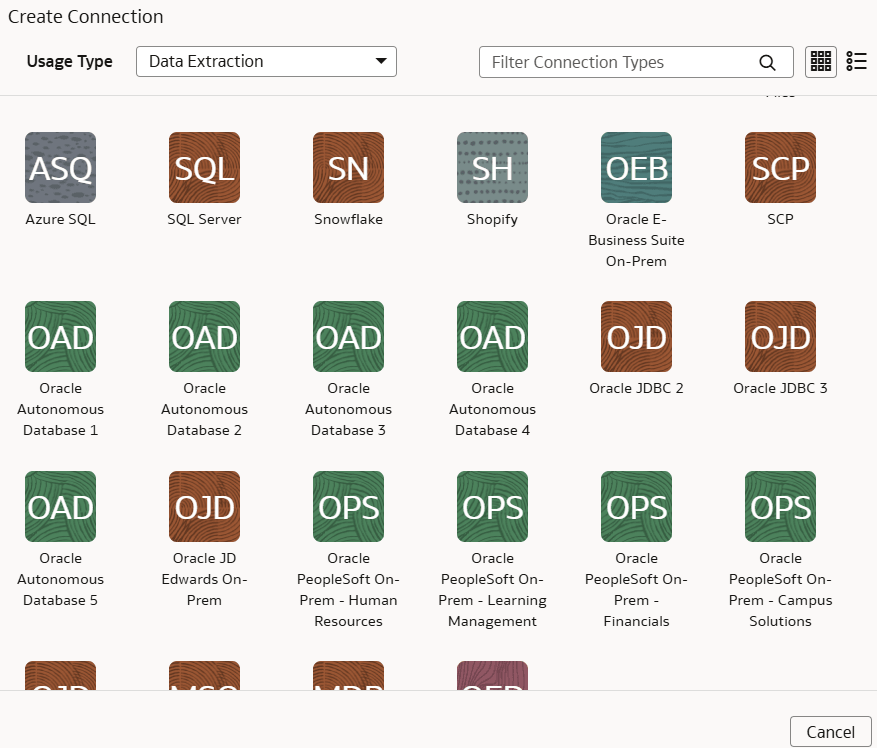

Connect With Cloud Sources

Connect with your cloud applications to provide the background information for reports.

You can blend the additional data from these data sources with the prebuilt datasets to enhance business analysis. To learn about the date and timestamp formatting for the CSV file-based extractors, see About Date and Timestamp Formatting for CSV File-based Extractors.

Topics

- Load Data from Azure SQL into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from Oracle Autonomous Database into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from Oracle Enterprise Performance Management into NetSuite Analytics Warehouse (Preview)

- Load Data from EPM Export Data Instance into NetSuite Analytics Warehouse (Preview)

- Load Data from Google Analytics into NetSuite Analytics Warehouse

- Connect with Your Oracle Eloqua Data Source (Preview)

- Load Data from Salesforce into NetSuite Analytics Warehouse

- Load Data from Oracle Analytics Publisher into NetSuite Analytics Warehouse

- Load Data from Shopify into NetSuite Analytics Warehouse

- Load Data from Snowflake into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from Taleo into NetSuite Analytics Warehouse (Preview)

- Load Data from Oracle Transportation Management Cloud Service into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from Fusion Supply Chain Planning into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from QuickBooks Online into Oracle NetSuite Analytics Warehouse (Preview)

- Load Data from MongoDB into NetSuite Analytics Warehouse (Preview)

- Load Data from MySQL Cloud Database into NetSuite Analytics Warehouse (Preview)

- Load Data from Enterprise Data Management Cloud into NetSuite Analytics Warehouse (Preview)

Create Additional NetSuite Connections

You can create additional connections to the NetSuite source based on your customer tier. These additional connections enable you to bring in data from multiple NetSuite accounts.

- One NetSuite primary account with live data and other NetSuite accounts having static data.

- More than one NetSuite account with live data but no data mash-up required.

- More than one NetSuite account with live data requiring data mash-up.

As a premium license tier Oracle NetSuite Analytics Warehouse customer, you can connect to two additional NetSuite accounts. If you're an enterprise license tier customer, then you can connect to ten additional NetSuite accounts. Based on your license tier, Oracle displays the additional NetSuite connections on the Create Connection dialog.

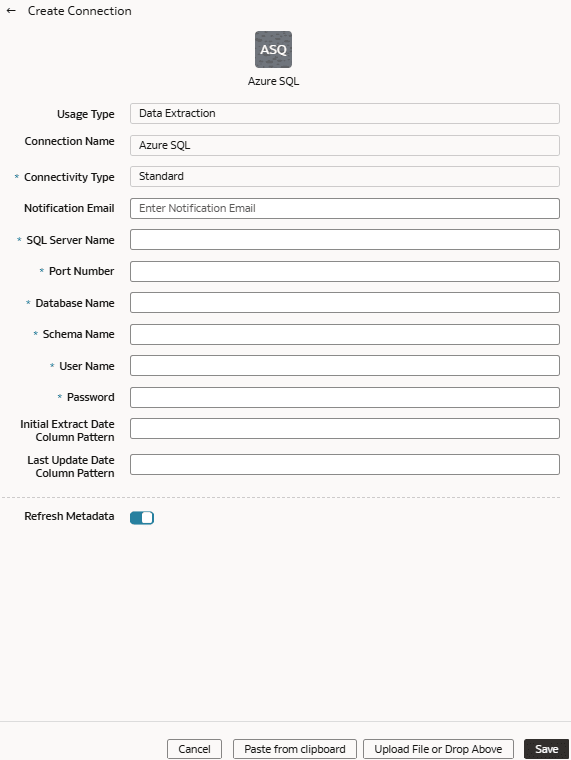

Load Data from Azure SQL into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from Azure SQL and use it to create data augmentations.

Ensure that Azure SQL is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

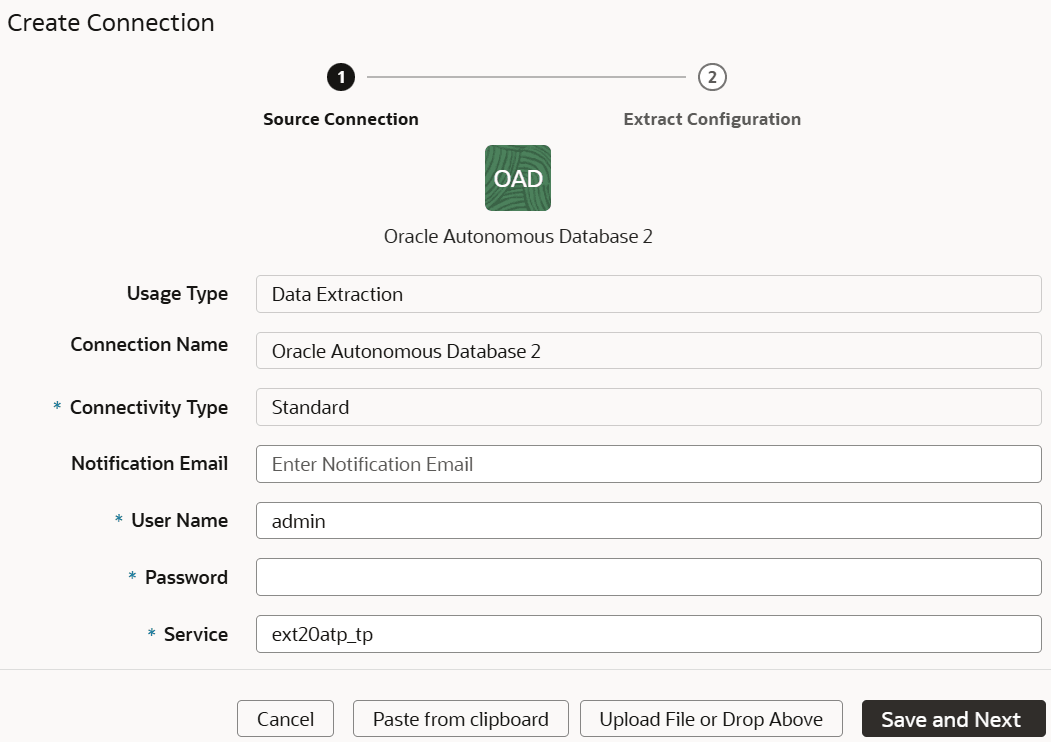

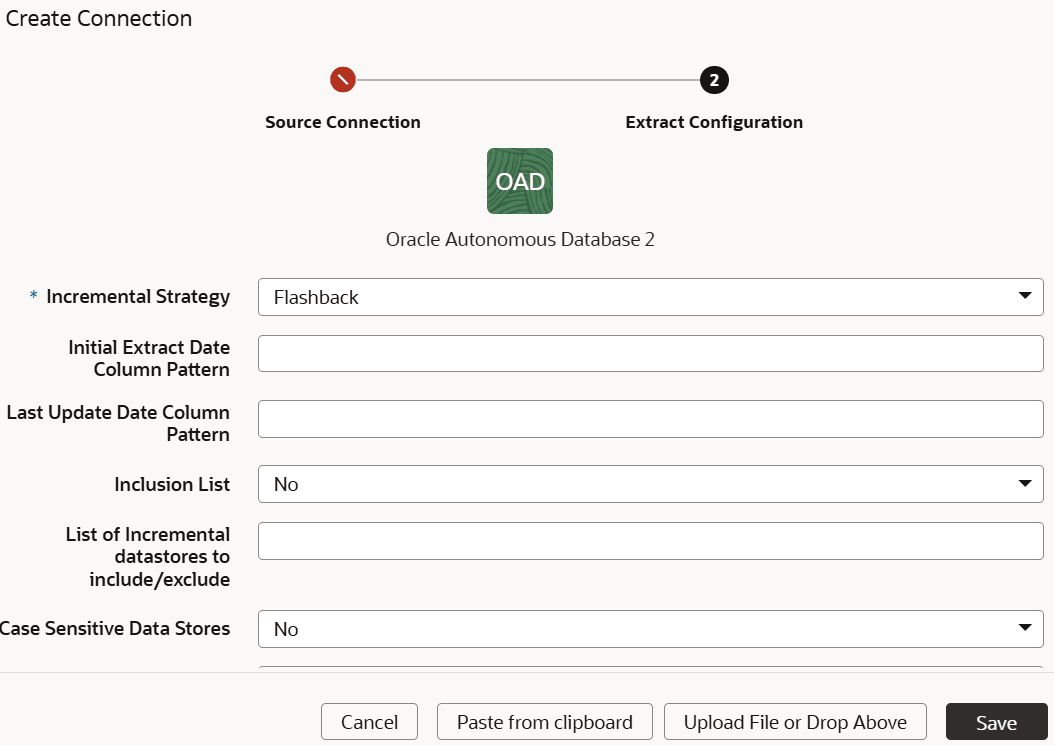

Load Data from Oracle Autonomous Database into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from Oracle Autonomous Database and use it to create data augmentations.

Note:

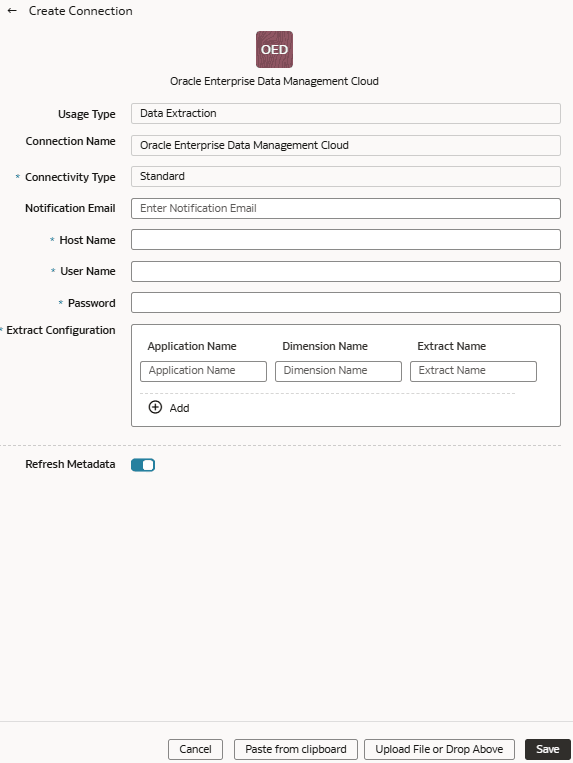

Currently, you can't connect to a private autonomous transaction processing database (ATP database).

Load Data from Enterprise Data Management Cloud into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the Enterprise Data Management Cloud instance and use it to create data augmentations.

The extracts created in the Enterprise Data Management Cloud service must be public, so you must promote your private extracts to public. If a metadata refresh fails for a private extract, review the documentation and the error messages. This connector supports only the CSV data format.

Ensure that Oracle Enterprise Data Management Cloud is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

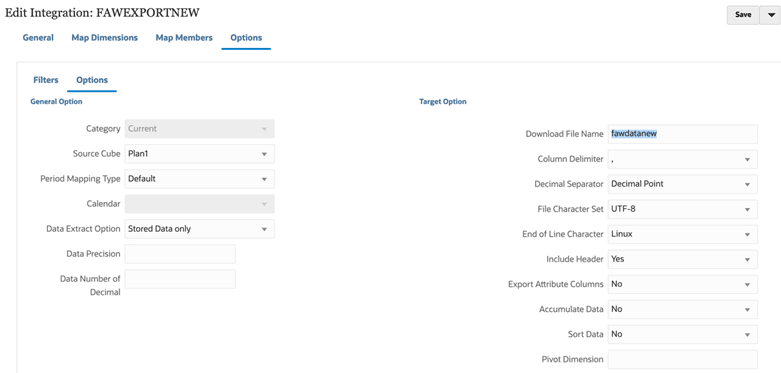

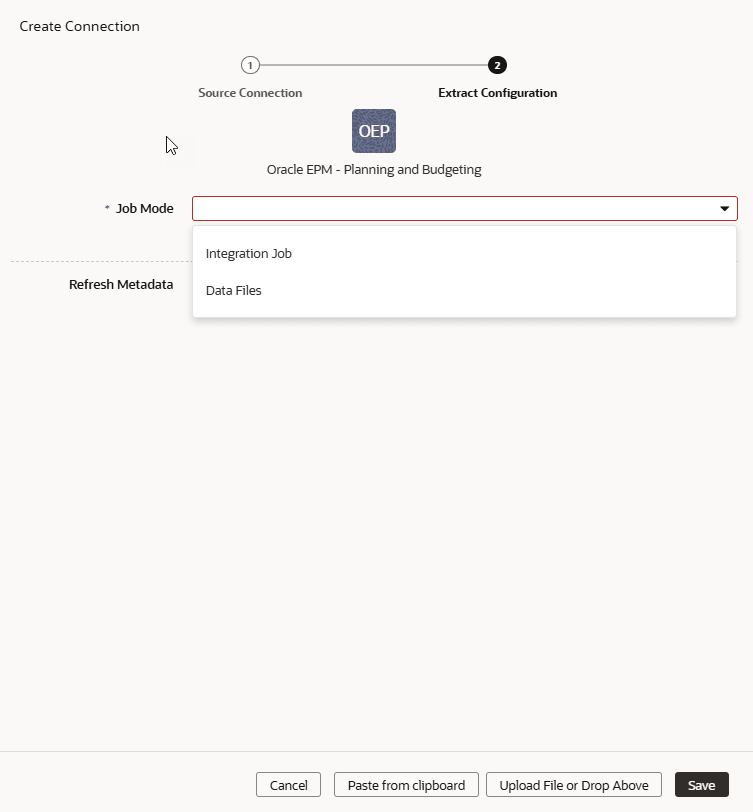

Load Data from Oracle Enterprise Performance Management into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the Enterprise Performance Management (EPM) SaaS instance and use it to create data augmentations for various Enterprise Resource Planning and Supply Chain Management use cases.

- Financial Close and Consolidation (FCCS)

- Planning and Budgeting (PBCS)

- Profitability and Cost Management (PCMCS)

Note:

The EPM connectors display the default datatype and size; you must edit these values as applicable while creating data augmentations.

- Oracle EPM - Financial Close and Consolidation

- Oracle EPM - Planning and Budgeting

- Oracle EPM - Profitability and Cost Management

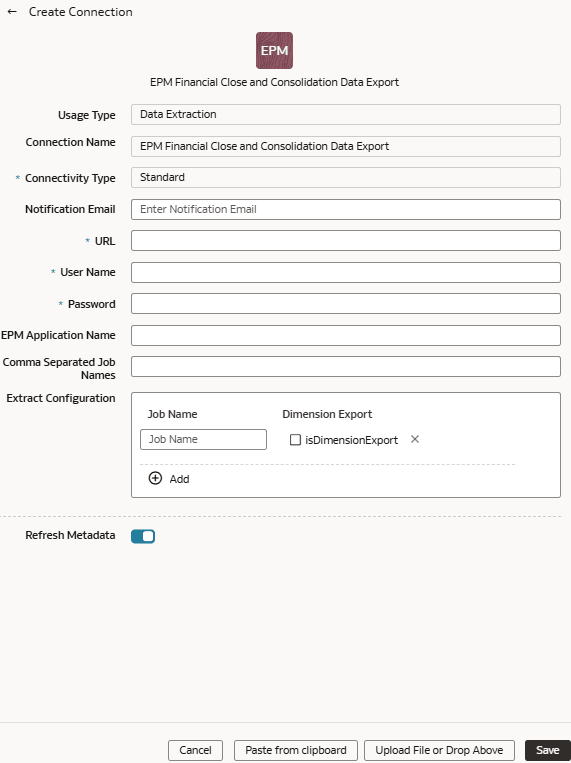

Load Data from EPM Export Data Instance into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from EPM Export Data instance and use it to create data augmentations.

- Financial Close and Consolidation (FCCS)

- Planning and Budgeting (PBCS)

- Profitability and Cost Management (PCMCS)

Note:

The EPM connectors display the default datatype and size; you must edit these values as applicable while creating data augmentations.

- EPM Financial Close and Consolidation Data Export

- EPM Planning and Budgeting Data Export

- EPM Profitability and Cost Management Data Export

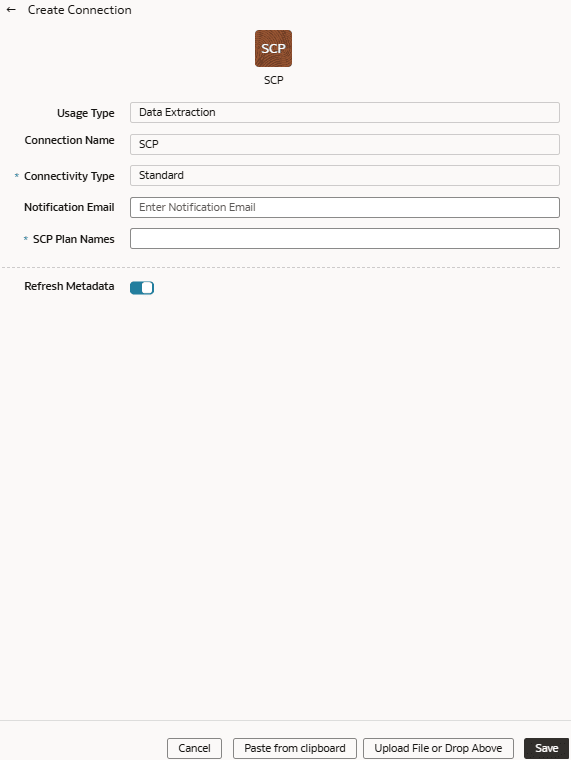

Load Data from Fusion Supply Chain Planning into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from a Fusion Supply Chain Planning instance.

You can later use this data to create data augmentations for various Enterprise Resource Planning and Supply Chain Management use cases. Establish the connection from NetSuite Analytics Warehouse to your Fusion Supply Chain Planning instance to start data acquisition followed by augmentation.

Note:

Oracle Fusion SCM Analytics is a prerequisite to use the Fusion Supply Chain Planning connector.

Load Data from Google Analytics into NetSuite Analytics Warehouse

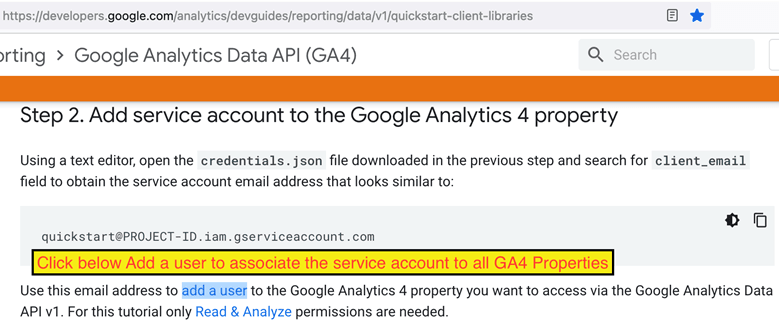

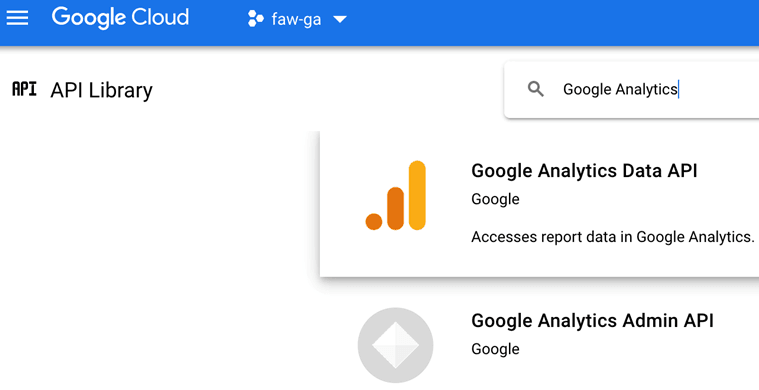

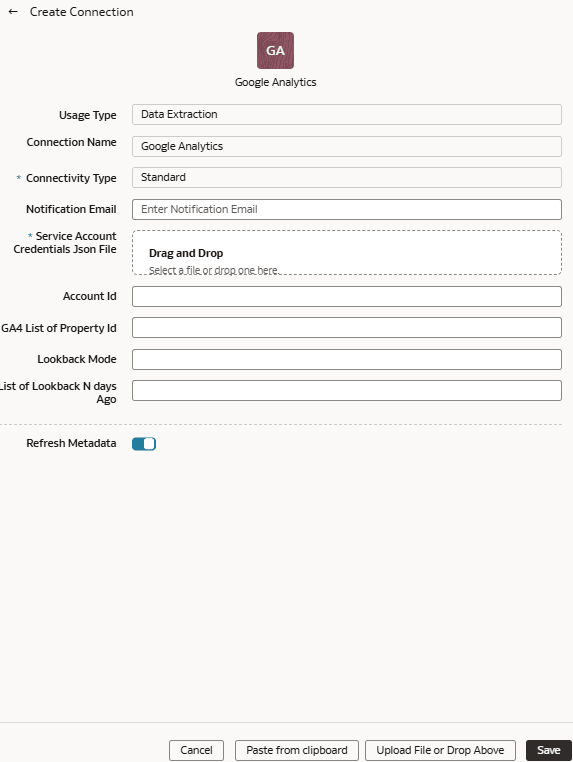

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the Google Analytics SaaS instance and use it to create data augmentations for various Enterprise Resource Planning and Supply Chain Management use cases.

- NetSuite Analytics Warehouse supports the Google Analytics extractor for GA4 properties and doesn’t support the previous version, Google Universal Analytics (UA) properties.

- DataStores are the list of GA4 properties.

- DataStore columns are the list of Dimensions and Metrics for a GA4 property.

- DataExtract runs the report based on user selection for a GA4 property as DataStore and Dimensions and Metrics as DataStore columns.

- MetaExtract fetches metadata for all the available GA4 properties (DataStores) and their Dimensions and Metrics (DataStoreColumns).

- This connector supports a limited number of Google Analytics metrics. See Dimensions Metrics Explorer to know what is available.
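The relationship between these terms can be pictured with a small data model. The Python sketch below only illustrates the terminology: a DataStore stands for one GA4 property, and its columns are that property's Dimensions and Metrics. The class, function, and field names, as well as the sample property ID, are hypothetical and don't reflect how the connector is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class DataStore:
    """One GA4 property, with its Dimensions and Metrics as columns."""
    property_id: str
    dimensions: list[str] = field(default_factory=list)
    metrics: list[str] = field(default_factory=list)

    @property
    def columns(self) -> list[str]:
        # DataStore columns = Dimensions followed by Metrics.
        return [*self.dimensions, *self.metrics]


def build_extract_request(store: DataStore, selected: list[str]) -> dict:
    """Describe a report for the selected columns of one DataStore (illustrative only)."""
    unknown = [name for name in selected if name not in store.columns]
    if unknown:
        raise ValueError(f"Not available for {store.property_id}: {unknown}")
    return {
        "property": store.property_id,
        "dimensions": [name for name in selected if name in store.dimensions],
        "metrics": [name for name in selected if name in store.metrics],
    }
```

In this picture, a metadata extract would populate the `DataStore` objects, and a data extract would run one report per DataStore for the columns you selected.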

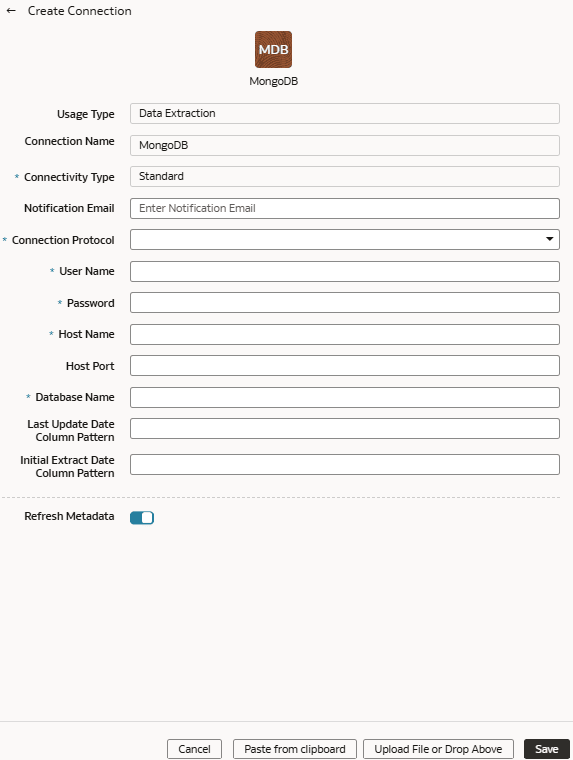

Load Data from MongoDB into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the Mongo database and use it to create data augmentations.

Ensure that MongoDB is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

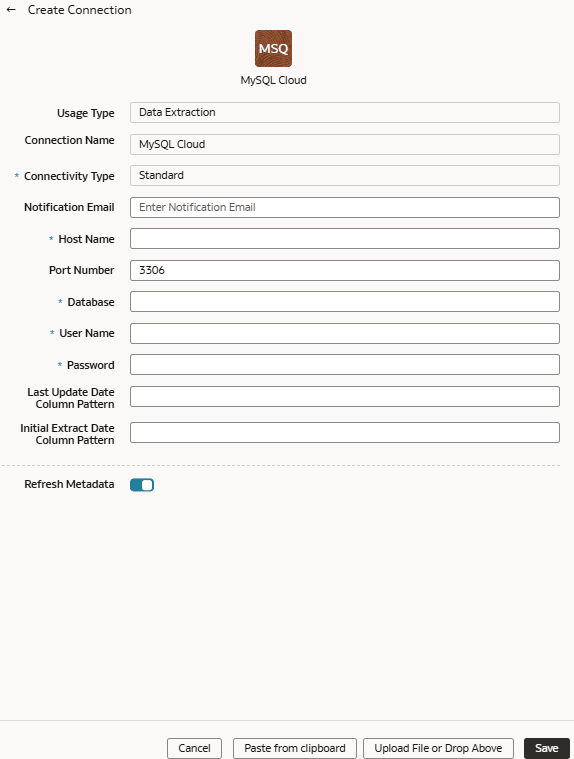

Load Data from MySQL Cloud Database into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the MySQL Cloud database and use it to create data augmentations.

Ensure that MySQL Cloud is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

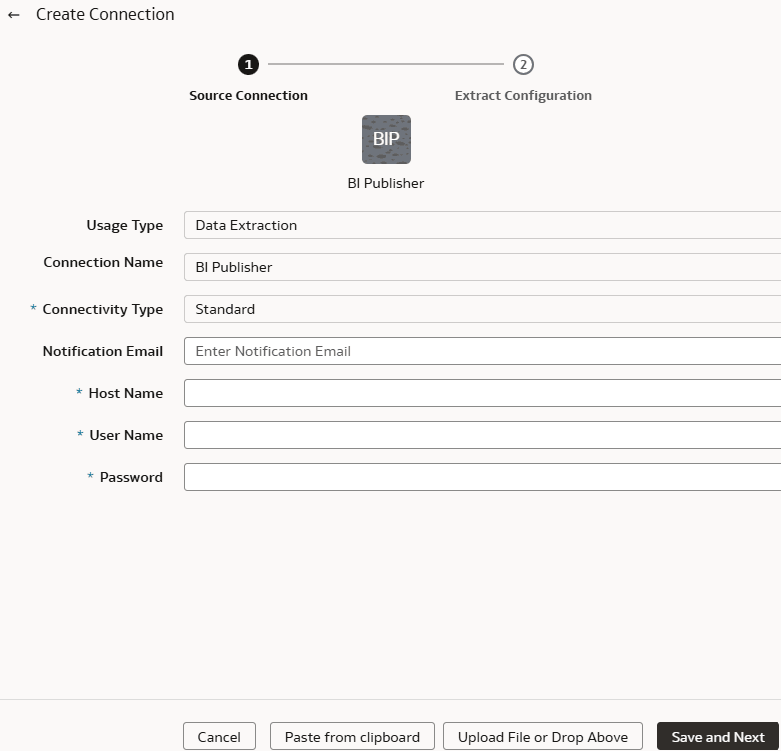

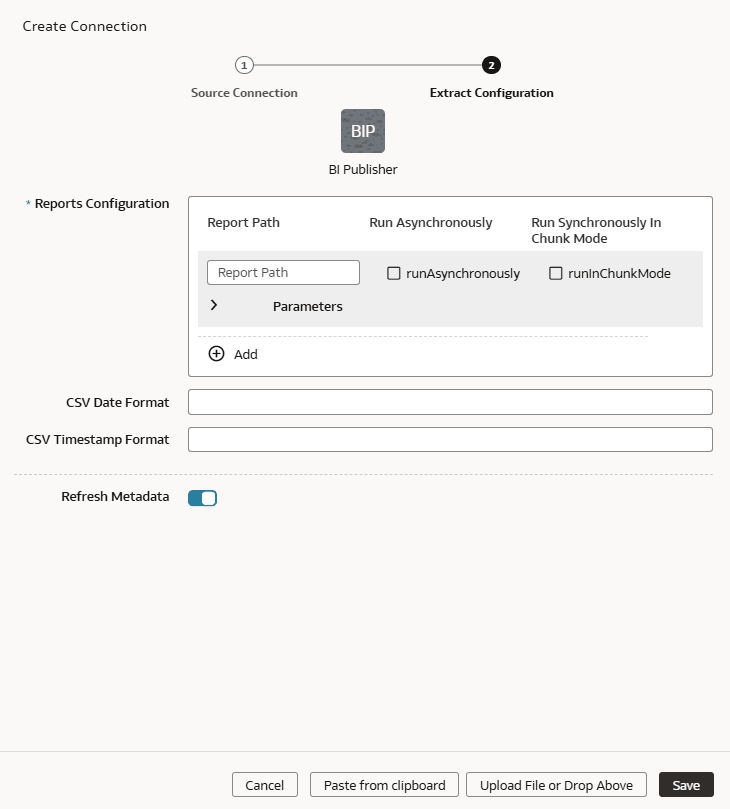

Load Data from Oracle Analytics Publisher into NetSuite Analytics Warehouse

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the Analytics Publisher reports and use it to create data augmentations for various use cases.

- The Analytics Publisher in Oracle Fusion Cloud Applications for data augmentation.

- Only those reports that complete within the Analytics Publisher report execution timeout limit, which is typically 300 seconds.

- The connector only supports CSV file formats with a comma-separated delimiter. Other delimiters are not currently supported.

The Oracle BI Publisher connector workflow must observe the security rules of Oracle Fusion Cloud Applications. You must ensure that the password rotation and update are done on time before executing the Oracle BI Publisher connector pipeline. Otherwise, those pipeline refreshes hang and eventually get deleted, and the data source is disabled until you update the password and resubmit the refresh request.

Ensure that Oracle BI Publisher is enabled on the Enable Features page prior to creating this connection. See Enable Generally Available Features.

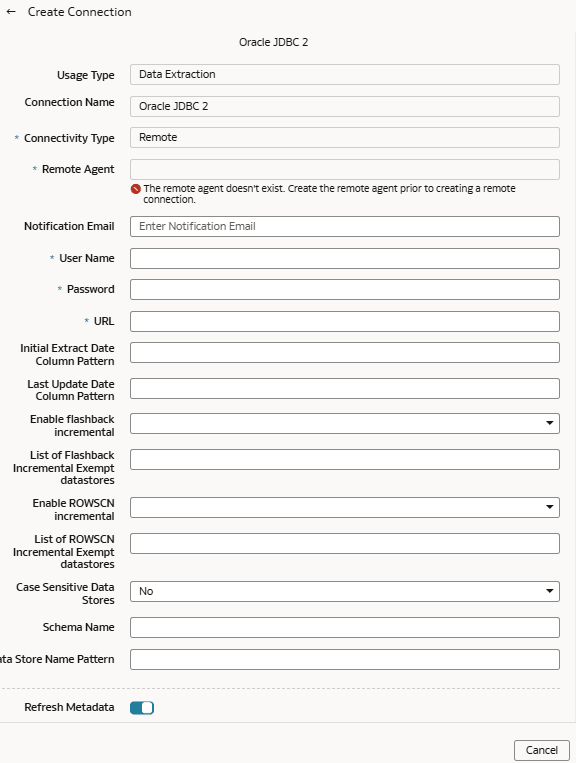

Load Data from Oracle Database Using JDBC into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use an extract service remote agent to connect to an Oracle database using JDBC and use the data to create data augmentations.

Ensure that Remote Agent and Oracle JDBC are enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

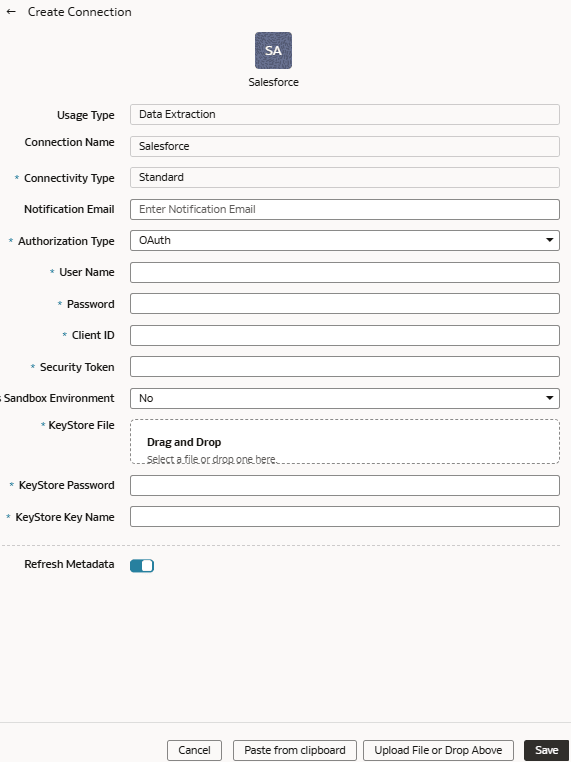

Load Data from Salesforce into NetSuite Analytics Warehouse

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the Salesforce SaaS instance and use it to create data augmentations.

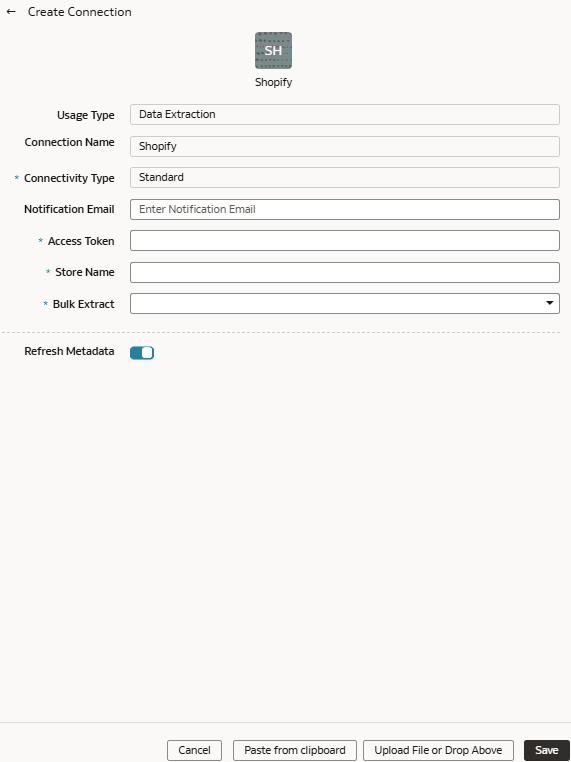

Load Data from Shopify into NetSuite Analytics Warehouse

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the Shopify SaaS instance and use it to create data augmentations for various Enterprise Resource Planning and Supply Chain Management use cases.

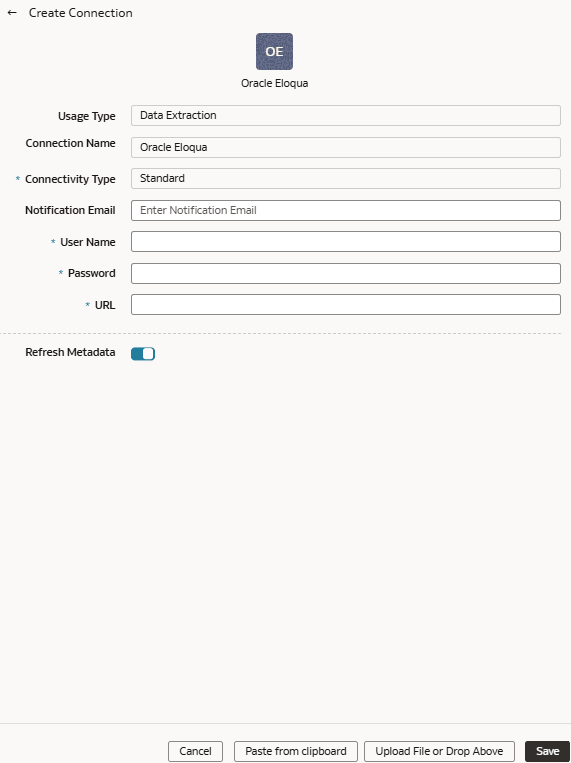

Connect with Your Oracle Eloqua Data Source (Preview)

If you’ve subscribed to Oracle Fusion CX Analytics and want to load data from your Oracle Eloqua source into NetSuite Analytics Warehouse, then create a connection using the Eloqua connection type.

The Oracle Eloqua data that you load into NetSuite Analytics Warehouse enables you to augment the data in your warehouse and create varied customer experience-related analytics. Ensure that Oracle Eloqua is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

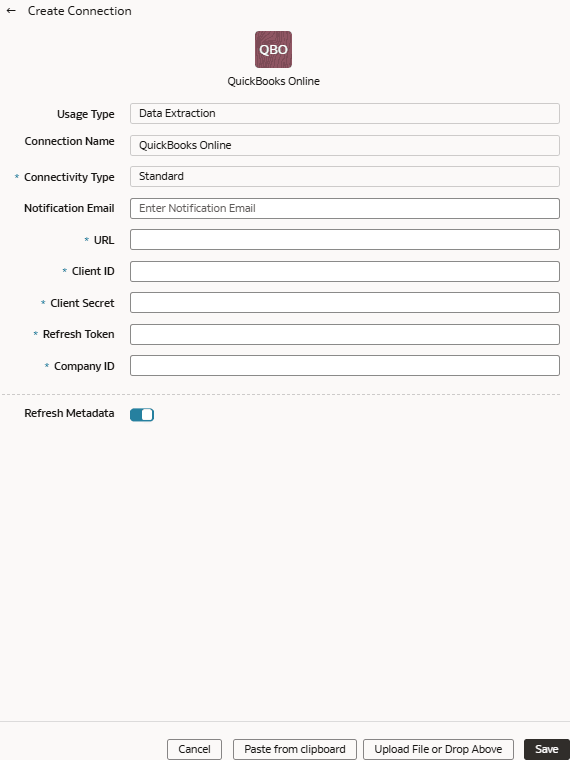

Load Data from QuickBooks Online into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from QuickBooks Online and use it to create data augmentations.

Ensure that QuickBooks Online is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

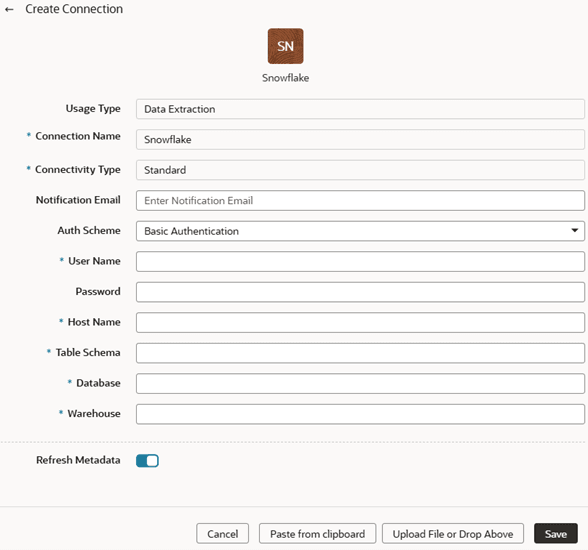

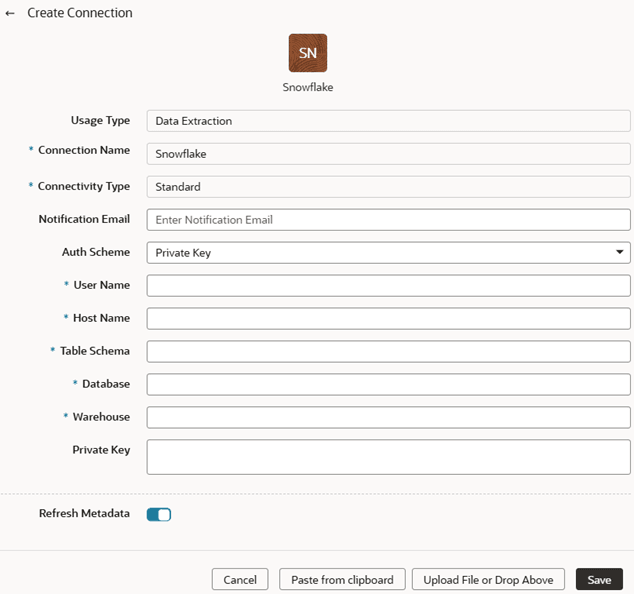

Load Data from Snowflake into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from a Snowflake instance.

Note:

Snowflake sometimes requires API calls to originate from a known IP address. If you're experiencing connection issues due to an unauthorized IP, then submit an Oracle Support ticket to obtain the necessary Oracle IP address for your Snowflake allowlist.

Ensure that Snowflake is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

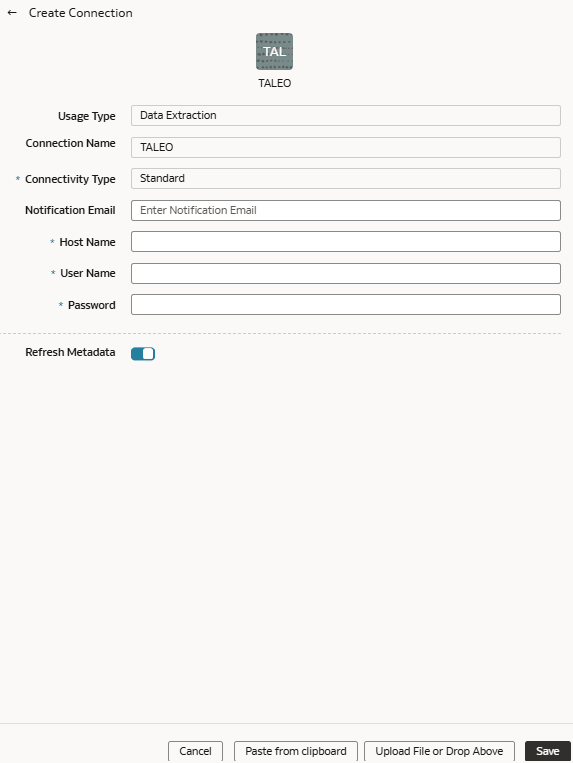

Load Data from Taleo into NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the NetSuite Analytics Warehouse extract service to acquire data from the Taleo instance and use it to create data augmentations for various Enterprise Resource Planning and Supply Chain Management use cases.

Ensure that Taleo is enabled on the Enable Features page prior to creating this connection. See Make Preview Features Available.

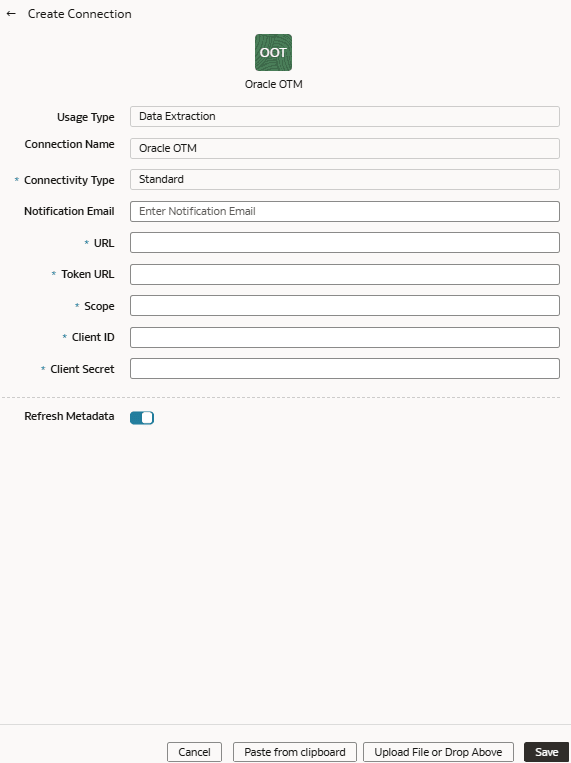

Load Data from Oracle Transportation Management Cloud Service into Oracle NetSuite Analytics Warehouse (Preview)

As a service administrator, you can use the Oracle NetSuite Analytics Warehouse extract service to acquire data from an Oracle Transportation Management Cloud Service SaaS instance.

You can later use this data to create data augmentations for various Enterprise Resource Planning and Supply Chain Management use cases. Establish the connection from NetSuite Analytics Warehouse to your Oracle Transportation Management Cloud Service instance to start data acquisition followed by augmentation.

Note:

Oracle Fusion SCM Analytics is a prerequisite to use the "Oracle Transportation Management" connector.