How Do I Use YAML to Create or Configure a Pipeline?

You can use YAML to create a new pipeline or configure an existing one.

What Is the Format for a YAML Pipeline Configuration?

Here's a pipeline's configuration with the default values in YAML format:

    pipeline:
      name: ""                      # pipeline name - if omitted, name is constructed from repository and file name
      description: ""               # pipeline description
      auto-start: true              # automatically start pipeline if any job in pipeline is run
                                    # if false, pipeline will start only if manually started
                                    # or a trigger action item is activated
      auto-start:                   # implied true
        triggers-only: false        # if true, autostart only for jobs that have no preceding jobs
      allow-external-builds: true   # jobs in pipeline can run independently while pipeline is running
      disabled: false               # if true, pipeline will not run
      periodic-trigger: ""          # cron pattern with 5 elements (minute, hour, day, month, year)
                                    # the pipeline is started (beginning with the Start item) periodically
      triggers:                     # define trigger action items of periodic, poll, or commit types
                                    # there may be one or more of each type
        - periodic:                 # define trigger action item of periodic type; build pipeline every so often
            name: ""                # required, trigger name - must be unique trigger name; may not be "Start"
            cron-pattern: ""        # required, cron pattern specifying Minute Hour Day Month Year, e.g., "? 0 * * *"
        - poll:                     # define trigger action item of poll type; poll repository every so often
            name: ""                # required, trigger name - must be unique trigger name; may not be "Start"
            cron-pattern: ""        # required, cron pattern as above
            url: ""                 # required, git repository URL
            branch: ""              # required, git repository branch - trigger activated if changes detected in branch
            exclude-users: ""       # user identifier of committer to ignore
                                    # if more than one user, use multi-line text, one user per line
            trigger-when: INCLUDE   # activate only if change to files in file-pattern; alternative EXCLUDE
                                    # if EXCLUDE, activate only for change to files not in file-pattern
            file-pattern: ""        # file(s) to INCLUDE/EXCLUDE; may be ant or wildcard-style file/folder pattern
                                    # if more than one pattern, use multi-line text, one pattern per line
                                    # for example...
            exceptions: ""          # exceptions to file-pattern above
                                    # if more than one exception file pattern, use multi-line text, one pattern per line
            #
            # Example of multi-line pattern - note that these lines can't have comments, as they would be part of text
            file-pattern: |
              README*
              *.sql
        - commit:                   # define trigger action item of commit type; automatically run pipeline on commit
            name: ""                # required, trigger name - must be unique trigger name; may not be "Start"
            url: ""                 # required, git repository URL for local project repository
            branch: ""              # git repository branch name
                                    # required: must specify branch or include/exclude/except branch patterns
            include: ""             # branch patterns to include; branch name, wildcard or regex
                                    # if more than one pattern, use multi-line text, one pattern per line
            exclude: ""             # branch patterns to ignore; specify either include or exclude, not both
                                    # if more than one pattern, use multi-line text, one pattern per line
            except: ""              # branch pattern exceptions to include or exclude above
                                    # if more than one pattern, use multi-line text, one pattern per line
            exclude-users: ""       # user identifier of committer to ignore
                                    # if more than one user, use multi-line text, one user per line
            trigger-when: INCLUDE   # activate only if change to files in file-pattern; alternative EXCLUDE
                                    # if EXCLUDE, activate only for change to files not in file-pattern
            file-pattern: ""        # file(s) to INCLUDE/EXCLUDE; may be ant or wildcard-style file/folder pattern
                                    # if more than one pattern, use multi-line text, one pattern per line
            exceptions: ""          # exceptions to file-pattern above
                                    # if more than one exception file pattern, use multi-line text, one pattern per line
      start:                        # required; begins an array of job names, or parallel, sequential, or on groups
        - JobName                   # this job runs first, and so on (start is a sequential group)
        # Groups:
        - parallel:                 # items in group run in parallel
        - sequential:               # items in group run sequentially
        - on succeed,fail,test-fail:  # items in group run sequentially if preceding job result matches condition
                                    # can specify one or more of conditions:
                                    # succeed (success), fail (failure), or test-fail (post-fail)
      # Examples:
        - parallel:                 # jobs A, B and C run in parallel, job D runs after they all finish
            - A
            - B
            - C
        - D
        - on succeed:               # if job D succeeds, E builds, otherwise F builds
            - E
        - on fail, test-fail:
            - F
      #
      start:                        # Jobs that trigger pipelines can be specified.
        - trigger:                  # A trigger section appears before the job(s) it triggers
            - JobA                  # trigger is a "parallel" section - JobA and JobB are independent
            - JobB
        - JobName                   # This job runs first. It can be started when the pipeline is run, or if
                                    # either of the trigger jobs JobA or JobB is built successfully.
                                    # Triggers assume that auto-start is true.
      start:
        - A
        - parallel:
            - B
            - sequential:           # A trigger cannot appear in a parallel section
                - trigger:          # The jobs triggered are the next in sequence
                    - Trigger1
                - C
        - trigger:                  # But can appear anywhere in a sequential section
            - Trigger2
        - D
        - trigger:                  # A trigger at the end of the start section is an independent graph
            - sequential:           # not connected to anything that precedes it.
                - X
                - Y
                - Z
      #
      # on sections can "join" - like an if/then/else followed by something else
      start:
        - A
        - on fail:
            - F                     # If A fails, build F
        - on test-fail:
            - T                     # If the tests for A fail, build T
        - on succeed:
            - <continue>            # If A succeeds, fall through to whatever follows the on conditions for A
        - B                         # B is built if A, F, or T succeed
      #
      # A job run in parallel (or conditionally) can end the chain
      start:
        - A
        - parallel:                 # Run B, C, and D in parallel
            - B
            - C
            - end:
                - D                 # There is no arrow from D
        - E                         # E is run if B and C succeed
      #
      start:
        - A
        - on fail:
            - end:
                - F                 # If A fails, build F and end the pipeline
        - on test-fail:
            - T                     # If the tests for A fail, build T
        - on succeed:
            - <continue>            # If A succeeds, fall through to whatever follows the on conditions for A
        - B                         # B is built if A or T succeed
      # -------------------------------------------------------------------------------
      # Not all pipelines you can draw can be represented in hierarchical form as above.
      # To allow a YAML definition of any pipeline graph, you can use a graph notation
      # similar to the digraph representation supported by Dot/GraphViz.
      # For example, the pipeline with triggers above can be written as a graph.
      graph:                        # (graph: and start: cannot both be used in the same pipeline.)
        - JobA -> JobName           # There is a link from JobA to JobName
        - JobB -> JobName           # There is a link from JobB to JobName
        - <Start> -> JobName        # There is a link from Start to JobName
        # The representation <Start> distinguishes the special "Start" node that
        # appears in every pipeline from a job named Start.
      #
      # Conditional links can be represented using ? and a list of one or more conditions.
      # For example, the partial pipeline above beginning with 'parallel' can be represented as:
      graph:
        - <Start> -> A
        - <Start> -> B
        - <Start> -> C
        - A -> D
        - B -> D
        - C -> D
        - D -> E ? succeed          # If D succeeds, E is built
        - D -> F ? fail, test-fail  # If D fails or tests fail, F is built
        # "succeed" is the default when no ? is specified.
      #
      # Any combination of succeed (success), fail (failure), or test-fail (post-fail)
      # can be written in a comma-separated list after the question mark.
      #
      # Not every graph that can be specified in this way is a valid pipeline.
      # For example, graphs with cycles are not allowed.
      #
      # "Joins" like the A, B, C converging on D above only work (D gets built)
      # if all of A, B, and C succeed. If, for example, B fails, D will not be built.
      # However, joins on nodes that are directly downstream from <Start> are a
      # special case. If any job triggers these nodes, they will be run.
      # This special case allows the triggers: section to work as expected.
      # (This is not new behavior in 22.01.0.)
      graph:
        - <Start> -> A
        - <Start> -> B
        - A -> C                    # Links from A to C and B to C are to the same node (and job) C
        - B -> C
      # on the other hand...
      graph:
        - <Start> -> A
        - <Start> -> B
        - A -> C                    # Links from A to C and B to C$2 are to the same job C
        - B -> C$2                  # but to different nodes
        # In other words, the job C appears in two different places in the pipeline

YAML Pipeline Configuration Examples

Here are some examples of different YAML pipeline configurations:

| YAML Definition | Pipeline Configuration |

|---|---|

pipeline:

name: My Pipeline

description: YAML pipeline configuration

auto-start: true

allow-external-builds: true

start:

- Job 1

- Job 2

- Job 3

|

Job 2 runs after Job 1 completes successfully. Then Job 3 runs after Job 2 completes successfully. The pipeline automatically

starts if any job in the pipeline is run. The default settings are used even if

they all aren't listed in the YAML file:

|

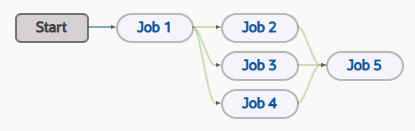

pipeline:

name: My Pipeline

auto-start: true

start:

- Job 1

- parallel:

- Job 2

- Job 3

- Job 4

- Job 5 |

Jobs 2, 3, and 4 run in parallel after Job 1 completes successfully. Job 5 runs after the three parallel jobs complete successfully. The pipeline will start if any job in the pipeline runs. |

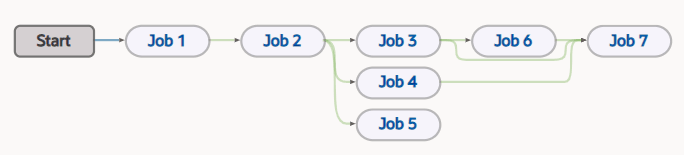

pipeline:

name: My Pipeline

start:

- Job 1

- Job 2

- parallel:

- sequential

- Job 3

- Job 6

- Job 4

- Job 5

- Job 7 |

Job 2 runs after Job 1 completes successfully. Jobs 3, 4, and 5 run in parallel after Job 2 completes successfully. Job 6 runs after Job 3 completes successfully. Job 7 runs after Jobs 6, 3, and 4 complete successfully. |

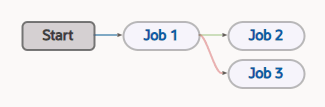

pipeline:

name: My Pipeline

start:

- Job 1

- on succeed:

- Job 2

- on fail:

- Job 3

|

If Job 1 runs successfully, Job 2 runs. If Job 1 runs and fails, Job 3 runs. |

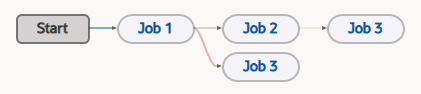

pipeline:

name: My Pipeline

start:

- Job 1

- on succeed:

- Job 2

- on test-fail:

- Job 3

- on fail:

- Job 3 |

If Job 1 runs successfully, Job 2 is run. If Job 2 runs successfully but fails tests or any post build action, or if Job 1 fails, Job 3 is run. Job 3 won't run if Job1 completes successfully. |
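For comparison, the last example above might also be written in graph notation. This is a sketch, not a tested configuration. It assumes that job names containing spaces (such as Job 1) can appear unquoted in graph links the same way they do in `start:`. Because `graph:` and `start:` can't both be used in the same pipeline, the graph replaces the `start:` section rather than adding to it.

```yaml
pipeline:
  name: My Pipeline
  graph:
    - <Start> -> Job 1
    - Job 1 -> Job 2 ? succeed            # Job 2 runs only if Job 1 succeeds
    - Job 1 -> Job 3 ? fail, test-fail    # one Job 3 node, reached if Job 1 fails or its tests fail
```

Here the two `on` groups that both lead to Job 3 collapse into a single conditional link with a comma-separated condition list.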

Set Dependency Conditions in Pipelines Using YAML

When you create a pipeline that includes a dependency between a parent and a child job, by default, the build of the child job will run after the parent job’s build completes successfully. You can configure the dependency to run a build of the child job after the parent job’s build fails too, either by using the pipeline designer or by setting an "on condition" in YAML to configure the result condition.

The pipeline designer supports Successful, Failed, or Test Failed conditions (see Configure the Dependency Condition). YAML also accepts alternative keywords for these conditions. Here are the YAML keywords and the build results they map to:

- "succeed" and "success" map to a "SUCCESSFUL" build result

- "fail" and "failure" map to a "FAILED" build result

- "test-fail" and "post-fail" map to a "POSTFAILED" build result

None of these conditions match when a job is aborted, canceled, or restarted, so the pipeline never proceeds beyond that job.

See YAML Pipeline Configuration Examples to learn more about using and setting some of these dependency conditions in YAML. The fourth example shows how to use the "on succeed" and "on fail" settings. The fifth example shows how to use the "on succeed", "on test-fail", and "on fail" settings.
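As a sketch of the alternative keywords in use, the following hypothetical pipeline writes the conditions from the fourth and fifth examples with `success`, `failure`, and `post-fail`. The pipeline name and the job names Build, Deploy, and Notify are placeholders. As the format description indicates, several conditions can be combined in a single `on` group.

```yaml
pipeline:
  name: ConditionSynonyms            # hypothetical pipeline name
  start:
    - Build                          # hypothetical job
    - on success:                    # same as "on succeed": matches a SUCCESSFUL result
        - Deploy                     # hypothetical job
    - on failure, post-fail:         # same as "on fail, test-fail": matches FAILED or POSTFAILED
        - Notify                     # hypothetical job
    # An aborted or canceled Build matches neither group, so the pipeline stops at Build.
```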

After a pipeline has run, you can use the public API to view the pipeline instance log and see what happened with the builds in the pipeline. Use this request format to get the log:

GET pipelines/{pipelineName}/instances/{instanceId}/log

Define and Use Triggers in YAML

Triggers are pipeline nodes that aren't jobs. They belong to a category of nodes called action items. You can use one or more jobs as triggers, but doing so carries an overhead cost. Instead of using trigger jobs, you can define trigger action items in the YAML pipeline configuration. Trigger action items can start on their own and then trigger the rest of the pipeline. This is a YAML-only feature. To understand triggers, it helps to explore action items first.

What Are Action Items?

Action items, a category of special-purpose executable pipeline items, are used to automate actions when jobs and tasks are too heavyweight. An action is a short-running activity that's performed locally in the build system. The action item itself is a long-lived executable entity that appears as a node in a pipeline. It can be started by a user action, by an automated action, or by entry from an upstream item.

Actions are single-purpose: each action does one thing. When started, an action performs its task and completes with a result condition. An action is configurable but not programmable, and it should never contain user-written code in any form. An action has a category, like “trigger”, and a subcategory, like “periodic” or “polling”, that defines the item type. Each action has a name that is unique within a pipeline. If the name isn't configured, a default name is supplied based on the item type, for example, “PERIODIC-1”. The name “Start” is reserved. The name is required and represents a configuration of the action.

What Is a Trigger?

A trigger is an action item that, in response to a user or automated event, starts executing a pipeline at a specific point, beginning with the nodes directly downstream of the trigger. If a trigger has upstream connections and is invoked from one of them, it acts as a pass-through. It completes immediately and, if it has any downstream connections, initiates the downstream items.
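The pass-through behavior can be sketched with a hypothetical pipeline in which a periodic trigger sits in the middle of the start sequence. The names PassThroughSketch, Nightly, Build, and Test are placeholders. The trigger's placement follows the rule from the format description that a trigger can appear anywhere in a sequential section and triggers the jobs that come next.

```yaml
pipeline:
  name: PassThroughSketch            # hypothetical pipeline name
  triggers:
    - periodic:
        name: Nightly                # hypothetical trigger name
        cron-pattern: "0 0 * * *"    # once a day, at minute 0 of hour 0
  start:
    - Build                          # runs first when the pipeline is started from Start
    - trigger:
        - <Nightly>                  # reached from Build: completes immediately (pass-through)
    - Test                           # runs after Build, or directly when <Nightly> fires
```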

There are several subcategories of triggers:

- Periodic – The trigger is started periodically, based on a cron schedule.

- Polling – The trigger is started if SCM polling detects that commits have been pushed to a specified repository and branch since the last poll. Polling is based on a cron schedule. The repository URL and branch name are set as downstream parameter values with user-configurable names.

- Commit – The trigger is started if a commit is pushed to a specified local project repository and a specified set of branches. The repository URL and branch name are set as downstream parameter values with user-configurable names.

- Manual – The trigger is started manually. Start, the default manual trigger, is present in every pipeline. If Start is the only manual trigger, the pipeline starts there and executes the next downstream job(s). If a pipeline includes a manual trigger job, it can be started in the UI and execute its next downstream job, bypassing Start. If the pipeline has multiple trigger jobs, the user needs to choose which of them to initiate the pipeline run with.

Periodic Triggers

Here's an example that shows how to use a periodic trigger:

    pipeline:
      name: PeriodicPipeline
      description: "Trigger defined in periodic, used in start"
      auto-start:
        triggers-only: true
      allow-external-builds: false
      triggers:
        - periodic:
            name: MidnightUTC
            cron-pattern: "0 0 * * *"
      start:
        - trigger:
            - <MidnightUTC>
        - JobA3

Notice that the periodic trigger is defined with a name and a cron pattern. The reference to the trigger action item is enclosed in angle brackets, <MidnightUTC> in this case, to differentiate it from a job, such as JobA3.

-

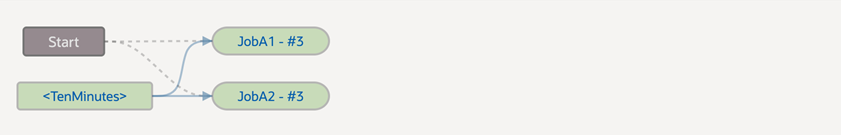

In the Pipelines tab on the Builds page, trigger action items are represented as squared off blocks, like the Start item or the <TenMinutes> item. The pipeline in this diagram was started by the action item with the periodic trigger <TenMinutes>. This trigger runs the pipeline every ten minutes.

Description of the illustration pipeline-trigger-start.pngNotice that a solid line goes from it to JobA1 and JobA2, but a dotted line goes from Start through its trigger to its downstream jobs. This is so, because the pipeline wasn't initiated from Start. The trigger item and the executed jobs are shaded, indicating the pipeline's execution path.

-

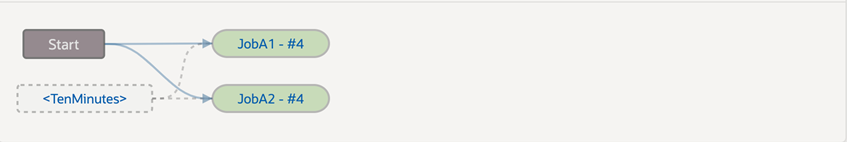

You could manually start the pipeline too, as this diagram shows.

Description of the illustration pipeline-manual-start.pngIn this case, the execution passes from Start, the default trigger, to JobA1 and then to JobA2. The graphic representation shows the execution path with solid lines. The <TenMinutes> periodic trigger job, outlined with dotted lines, isn't shaded because it wasn't executed. The dotted lines from it to its downstream jobs further indicate an execution path not taken.
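A pipeline like the one in these diagrams might be defined roughly as follows. This is a reconstruction from the diagrams, not the exact YAML behind them. The pipeline name is hypothetical, and the cron pattern spells out every tenth minute as an explicit list. That assumes the comma-separated list syntax of standard cron is accepted.

```yaml
pipeline:
  name: TenMinutePipeline                         # hypothetical pipeline name
  triggers:
    - periodic:
        name: TenMinutes
        cron-pattern: "0,10,20,30,40,50 * * * *"  # minutes 0, 10, 20, 30, 40, and 50 of every hour
  start:
    - trigger:
        - <TenMinutes>
    - JobA1
    - JobA2
```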

Polling Triggers

This pipeline polls the repository once a day at midnight UTC, as specified by the cron pattern, and starts only if the poll detects new commits on the branch. Additional parameters can be used too. See What Is the Format for a YAML Pipeline Configuration?

    pipeline:
      name: PollingPipeline
      auto-start: false
      triggers:
        - poll:
            name: Poller
            cron-pattern: "0 0 * * *"
            url: <git-repo-url>
            branch: main
      start:
        - <Poller>
        - A

When the pipeline is started manually, the execution flow goes through the trigger action item to job A. In the Pipeline Designer, the trigger has no hue and has a dotted line border. There are dotted lines from Start to the trigger item to job A. When the polling mechanism detects a change, the pipeline is started by the trigger. This is shown with a dotted line from Start to the trigger item, the trigger item has a dark hue, and there is a solid line from the trigger item to job A.
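The optional poll settings from the format description can narrow what activates the trigger. In this sketch, the pipeline name, the trigger name, the committer ID `build-bot`, and the file patterns are hypothetical. The trigger polls hourly and ignores commits by `build-bot`. Because `trigger-when` is EXCLUDE, it activates only when a change touches files that don't match the listed patterns. The lines inside the multi-line `file-pattern` text carry no comments, because comments there would become part of the text.

```yaml
pipeline:
  name: PollingSkipDocs              # hypothetical pipeline name
  auto-start: false
  triggers:
    - poll:
        name: HourlyPoller           # hypothetical trigger name
        cron-pattern: "0 * * * *"    # at minute 0 of every hour
        url: <git-repo-url>
        branch: main
        exclude-users: build-bot     # hypothetical committer ID to ignore
        trigger-when: EXCLUDE        # activate only for changes to files NOT matching file-pattern
        file-pattern: |
          README*
          docs/**
  start:
    - <HourlyPoller>
    - A
```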

Commit Triggers

A commit trigger runs automatically when a commit is pushed to the specified repository and branch.

    pipeline:
      name: CommitPipeline
      auto-start: false
      triggers:
        - commit:
            name: OnCommit
            url: <git-repo-url>
            branch: main
      start:
        - <OnCommit>
        - A

Additional parameters can be used too. See What Is the Format for a YAML Pipeline Configuration?
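Instead of a single branch, a commit trigger can watch a set of branches. This sketch uses the `include` and `except` settings from the format description. The pipeline name, the trigger name, and the branch patterns `release/*` and `release/experimental` are hypothetical.

```yaml
pipeline:
  name: CommitReleaseBranches        # hypothetical pipeline name
  auto-start: false
  triggers:
    - commit:
        name: OnReleaseCommit        # hypothetical trigger name
        url: <git-repo-url>
        include: |
          main
          release/*
        except: release/experimental # matches release/* but shouldn't activate the trigger
  start:
    - <OnReleaseCommit>
    - A
```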

Control How a Pipeline Is Automatically Started

The auto-start option automatically starts a pipeline if any job in the pipeline is run. The default setting is "true". If the option is set to "false", the pipeline starts only if it's manually started or a trigger action item is activated. Starting a pipeline in the middle can be problematic, since preceding or parallel steps in the pipeline could set up conditions that follow-on steps depend on. You can control this behavior with the auto-start option.

The first job that follows Start is treated as a trigger only if it is the only job triggered by Start. Depending on the auto-start settings, either the entire pipeline or only the parts of the pipeline that have defined trigger jobs start automatically.
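As a sketch of the middle ground between `auto-start: true` and `auto-start: false`, the following reuses the trigger-job example from the format description with `triggers-only` enabled. The pipeline name is hypothetical. According to the format description's comment, `triggers-only` limits auto-start to jobs that have no preceding jobs. Building JobA or JobB on its own should therefore start the pipeline, while building JobName directly should not.

```yaml
pipeline:
  name: TriggerJobsOnly              # hypothetical pipeline name
  auto-start:
    triggers-only: true              # auto-start only for jobs that have no preceding jobs
  start:
    - trigger:
        - JobA                       # trigger job: no preceding jobs, so running it starts the pipeline
        - JobB
    - JobName                        # has preceding jobs, so running it directly doesn't
```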

View Logs for Pipelines Started by a Trigger Job

A triggered pipeline starts when the trigger job begins executing. For a pipeline that contains two jobs, Job A and Job B, where Job A triggers the pipeline, the pipeline starts when Job A starts. The pipeline log reflects this:

    [2023-01-22 15:21:15] Started by job Job A build #1 (BUILDING) which was initiated by maxyz
    [2023-01-22 15:21:15] Job [Job A] finished build #1 (SUCCESSFUL)
    [2023-01-22 15:21:15] Job [Job B] started build
    [2023-01-22 15:21:40] Job [Job B] finished build #1 (SUCCESSFUL)
    [2023-01-22 15:22:05] Pipeline end. Run time 53 sec

Examine Pipeline Logs with Commit or SCM Polling Trigger Action Items

Pipeline logs are very similar in format to job logs. By examining them, you can determine how a pipeline was triggered and see exactly what was executed during the run. SCM polling and commit logs show action items, but not embedded triggers.

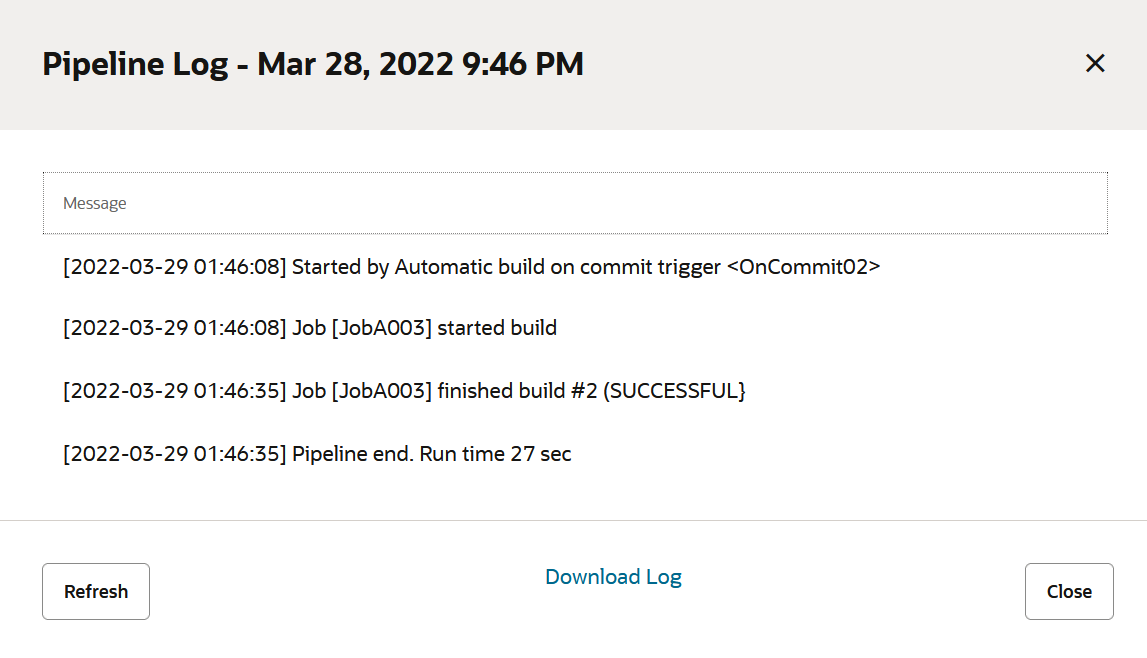

Commit Pipeline Log

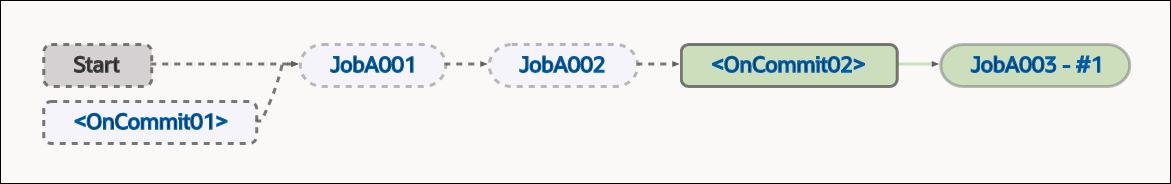

Here's a pipeline diagram with two triggers, <OnCommit01> and <OnCommit02>.


Notice that the first trigger (<OnCommit01>) and its downstream jobs are shown in gray. That's because they weren't executed.

The first line in the log reveals how the pipeline was started: automatically, by the commit trigger <OnCommit02>.

A commit automatically triggered the pipeline, which started build #2 and executed JobA003. The pipeline run was successful and took 27 seconds. The two jobs upstream from the second trigger (JobA001 and JobA002) weren't executed because their trigger (<OnCommit01>) didn't initiate the pipeline run. Had it done so, the second trigger would have been a pass-through and the build would have executed all three jobs in succession.
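A pipeline shaped like this diagram could be defined along the following lines. This is a reconstruction, not the exact YAML behind the diagram. The pipeline name, the repository URL placeholder, and the branches each trigger watches are assumptions.

```yaml
pipeline:
  name: CommitType01                 # hypothetical pipeline name
  auto-start: false
  triggers:
    - commit:
        name: OnCommit01
        url: <git-repo-url>
        branch: main                 # assumption: branch watched by the first trigger
    - commit:
        name: OnCommit02
        url: <git-repo-url>
        branch: develop              # assumption: branch watched by the second trigger
  start:
    - trigger:
        - <OnCommit01>
    - JobA001
    - JobA002
    - trigger:
        - <OnCommit02>               # pass-through when reached from JobA002
    - JobA003
```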

SCM Poll Pipeline Log

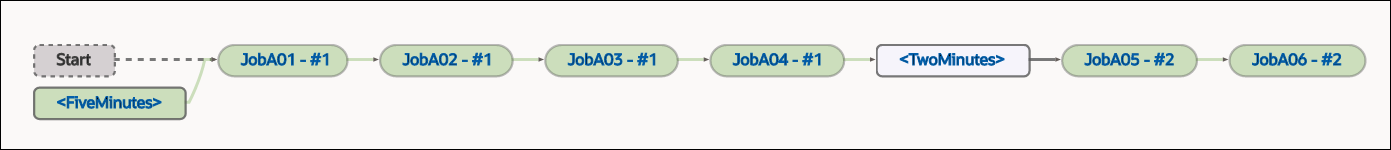

Here's a pipeline diagram that has two triggers, <FiveMinutes> and <TwoMinutes>.


Notice that the pipeline's second trigger (<TwoMinutes>) is shaded with a lighter color. It didn't initiate the run, so it had no effect on it, even though it was in the execution path. The execution flow simply passed through it and continued with the downstream jobs.

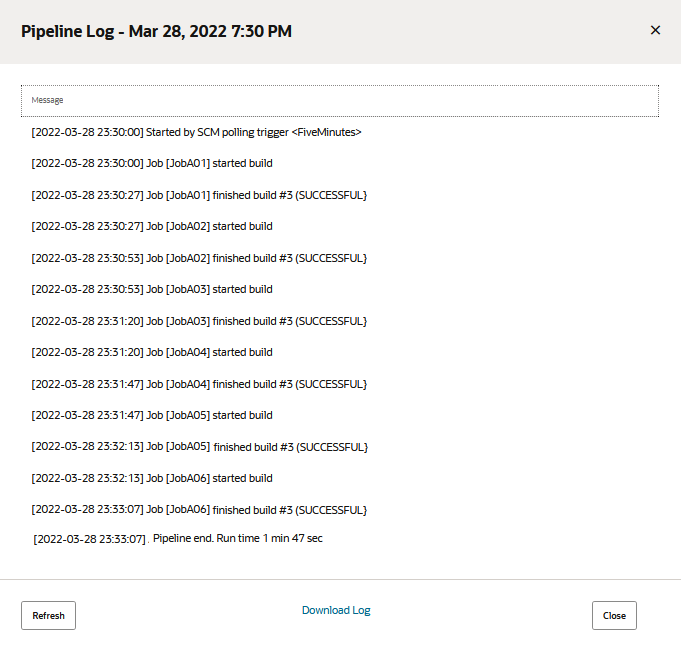

The first line in the log reveals how the pipeline was started: by the SCM polling trigger <FiveMinutes>.

We can see that the trigger started build #3 with JobA01 and then, upon its successful completion, started JobA02. Each of the successive jobs, JobA03 through JobA06, ran after the previous job completed successfully. After the last job in build #3 (JobA06) finished, the pipeline ended. We can also see how long the pipeline took to run and whether all the jobs in the build were successful. In this case, they were.

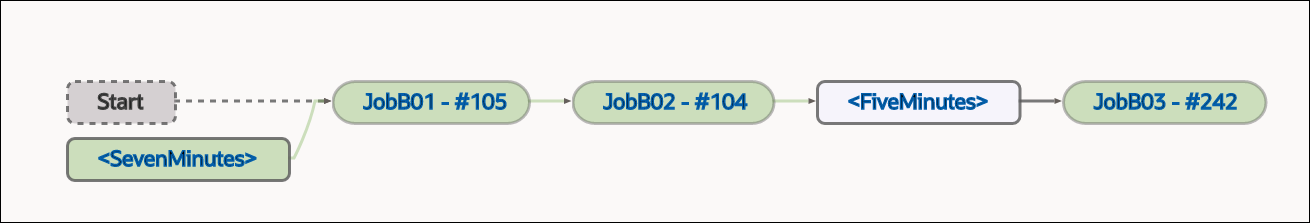

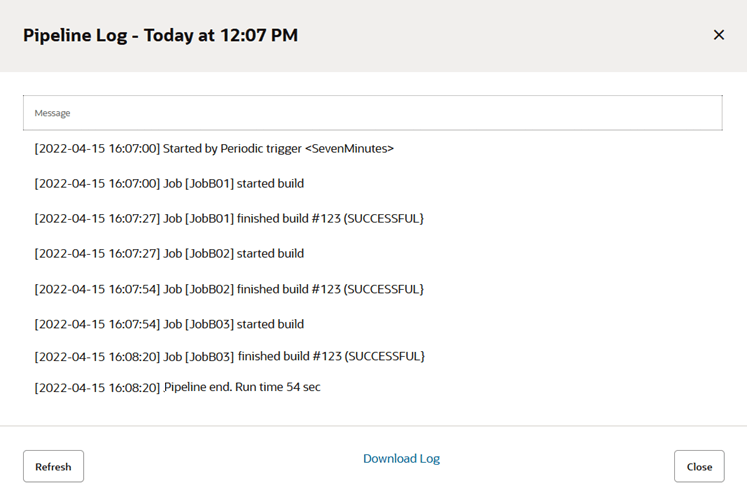

Periodic Pipeline Log

As a bonus, here's a pipeline diagram that shows a build (build #123) that was started by the first of two periodic triggers (<SevenMinutes>), not by the second trigger (<FiveMinutes>).


As in the previous pipeline diagram, the second trigger, which wasn't executed, is shown in a lighter shade. Start, the default trigger, is shown in gray since it wasn't executed either. Had the pipeline been manually started, the first trigger would have been ignored but the job execution would've been the same as it was in the current run.

Here's the log:

We can see that all three jobs were successfully executed and the pipeline run lasted less than a minute. There was no mention of the pass-through trigger (<FiveMinutes>) that wasn't executed.