Using systemd to Manage cgroups v2

The preferred method of managing resource allocation with cgroups v2 is to use the control group functionality provided by systemd.

Note:

For information on enabling cgroups v2 functionality on the system, see Oracle Linux 10: Managing Kernels and System Boot.

By default, systemd creates a cgroup folder for each systemd service set up on the host. systemd names these folders using the format servicename.service, where servicename is the name of the service associated with the folder.

To see a list of the cgroup folders systemd creates for the services, run the ls command on the system.slice branch of the cgroup file system, as shown in the following sample code block:

ls /sys/fs/cgroup/system.slice/

... ... ...

app_service1.service cgroup.subtree_control httpd.service

app_service2.service chronyd.service ...

... crond.service ...

cgroup.controllers dbus-broker.service ...

cgroup.events dtprobed.service ...

cgroup.freeze firewalld.service ...

... gssproxy.service ...

... ... ...

The folders app_service1.service and app_service2.service represent custom application services that might run on the system.
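The listing mixes the cgroup.* interface files of system.slice with the per-service child cgroups. As a minimal sketch, the following shell function prints only the child directories whose names end in .service. The function name list_service_cgroups and its optional root argument (which lets you point it at a test directory) are hypothetical additions for illustration.

```shell
# Hypothetical helper: print the per-service child cgroups of system.slice.
# The optional argument is the cgroup v2 mount point (default /sys/fs/cgroup).
list_service_cgroups() {
  local root="${1:-/sys/fs/cgroup}" entry
  # The *.service pattern leaves out the cgroup.* interface files.
  for entry in "$root"/system.slice/*.service; do
    # Child cgroups are directories; this test also skips an unmatched pattern.
    if [ -d "$entry" ]; then
      basename "$entry"
    fi
  done
}
```

On a live host, running list_service_cgroups with no argument would print names such as chronyd.service and httpd.service, one per line.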

In addition to service control groups, systemd also creates a cgroup folder for each user on the host. To see the cgroups created for each user, run the ls command on the user.slice branch of the cgroup file system, as shown in the following sample code block:

ls /sys/fs/cgroup/user.slice/

cgroup.controllers cgroup.subtree_control user-1001.slice

cgroup.events cgroup.threads user-982.slice

cgroup.freeze cgroup.type ...

... ... ...

... ... ...

... ... ...

In the preceding code block:

- Each user cgroup folder is named using the format user-UID.slice. For example, control group user-1001.slice is for a user whose UID is 1001.
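The user-UID.slice folder sits under user.slice because systemd encodes a slice's position in the hierarchy in its name: each dash-separated prefix names a parent slice, and -.slice is the root slice. The following sketch maps a slice name to its cgroup directory on that basis. The function name slice_cgroup_path is hypothetical, and the sketch assumes slice names without escaped characters (systemd escapes a literal dash inside a name as \x2d).

```shell
# Hypothetical helper: map a slice unit name to its cgroup v2 directory.
# Each dash-separated prefix is a parent slice, so user-1001.slice lives
# under user.slice. Assumes names contain no escaped characters such as \x2d.
slice_cgroup_path() {
  local name="${1%.slice}" root="${2:-/sys/fs/cgroup}"
  local path="$root" prefix="" part
  # The root slice "-.slice" maps to the mount point itself.
  if [ "$name" = "-" ]; then
    printf '%s\n' "$root"
    return 0
  fi
  # Split on dashes and append one directory per growing prefix.
  local IFS='-'
  for part in $name; do
    prefix="${prefix:+$prefix-}$part"
    path="$path/$prefix.slice"
  done
  printf '%s\n' "$path"
}
```

For example, slice_cgroup_path "user-$(id -u).slice" prints the cgroup directory of the current user's slice.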

systemd provides high-level access to the cgroups and kernel resource controller features, so you don't have to access the file system directly. For example, to set the CPU weight of a service called app_service1.service, run the systemctl set-property command as follows:

sudo systemctl set-property app_service1.service CPUWeight=150

In this way, systemd enables you to manage resource distribution at the application level, rather than at the process (PID) level that's required when configuring cgroups without systemd.
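To confirm that the property reached the kernel, you can read the cpu.weight interface file in the service's cgroup folder. The following sketch does that. It assumes the service runs in system.slice and that the cpu controller is enabled for it, and the function name service_cpu_weight is hypothetical.

```shell
# Hypothetical helper: print the cpu.weight value of a service's cgroup.
# Assumes the service runs in system.slice and that the cpu controller is
# enabled for it; the optional second argument is the cgroup v2 mount point.
service_cpu_weight() {
  local service="$1" root="${2:-/sys/fs/cgroup}"
  local file="$root/system.slice/$service/cpu.weight"
  if [ -r "$file" ]; then
    cat "$file"
  else
    # The file is absent if the service isn't running or cpu isn't enabled.
    echo "cpu.weight not found for $service" >&2
    return 1
  fi
}
```

On a live system, service_cpu_weight app_service1.service would be expected to print 150 after the preceding set-property command, matching the value reported by systemctl show -p CPUWeight app_service1.service.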

About Slices and Resource Allocation in systemd

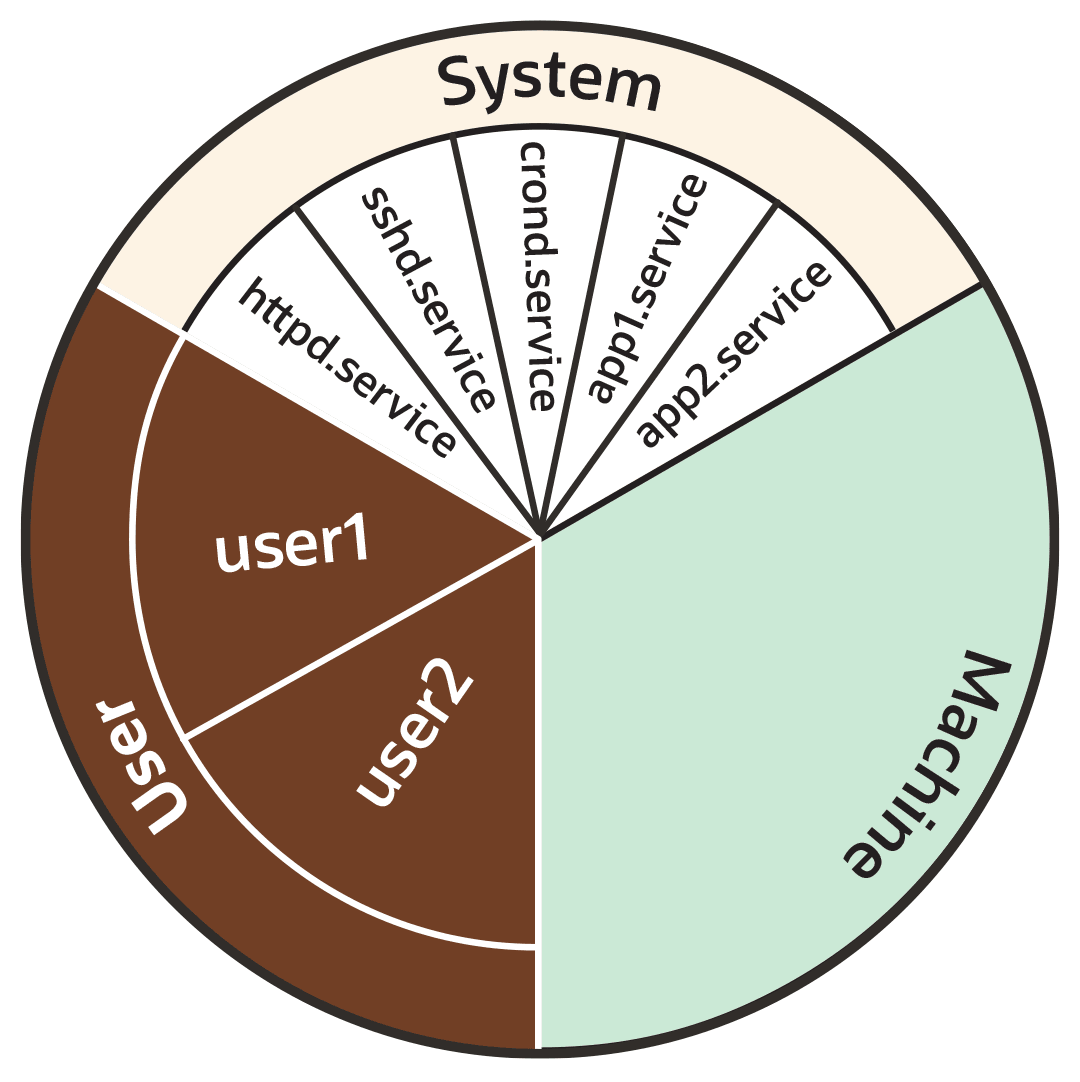

This section looks at the way systemd initially divides each of the default kernel controllers, for example CPU, memory and blkio, into portions called "slices" as illustrated by the following example pie chart:

Note:

You can also create custom slices for resource distribution, as shown in section Setting Resource Controller Options and Creating Custom Slices.Figure 8-1 Pie chart illustrating distribution in a resource controller, such as CPU or Memory

As the preceding pie chart shows, by default each resource controller is divided equally among the following three slices:

- System (system.slice)
- User (user.slice)
- Machine (machine.slice)

The following list looks at each slice more closely. For the purposes of discussion, the examples in the list focus on the CPU controller.

- System (system.slice): This resource slice is used for managing resource allocation among daemons and service units.

  As shown in the preceding example pie chart, the system slice is divided into further sub-slices. For example, in the case of CPU resources, the system slice might contain sub-slice allocations such as the following:

  - httpd.service (CPUWeight=100)
  - sshd.service (CPUWeight=100)
  - crond.service (CPUWeight=100)
  - app1.service (CPUWeight=100)
  - app2.service (CPUWeight=100)

  The services app1.service and app2.service represent custom application services that might run on the system.

- User (user.slice): This resource slice is used for managing resource allocation among user sessions. A single slice is created for each UID, irrespective of how many logins the associated user has active on the server. Continuing with the pie chart example, the sub-slices might be as follows:

  - user1 (CPUWeight=100, UID=982)
  - user2 (CPUWeight=100, UID=1001)

- Machine (machine.slice): This slice of the resource is used for managing resource allocation among hosted virtual machines, such as KVM guests, and Linux Containers. The machine slice is present on a server only if the server is hosting virtual machines or Linux Containers.
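Because CPUWeight values are relative, a cgroup's portion of its parent's CPU time is roughly its own weight divided by the sum of the weights of all siblings competing for CPU at that moment. The following sketch computes that ratio. The function name cpu_share is hypothetical, and the calculation is a simplification that ignores quotas and models idle siblings only by leaving them out of the argument list.

```shell
# Hypothetical helper: estimate the percentage of its parent's CPU time that
# one cgroup receives while it and the listed siblings are all busy.
# Arguments: the cgroup's own CPUWeight, then the CPUWeight of each busy
# sibling. Leave idle siblings out: their unused share is redistributed.
cpu_share() {
  local own="$1"
  shift
  # Sum all weights (own plus siblings) and print own/total as a percentage.
  printf '%s\n' "$own" "$@" |
    awk -v own="$own" '{ total += $1 } END { printf "%.1f%%\n", 100 * own / total }'
}
```

With the five system.slice services listed above, cpu_share 100 100 100 100 100 prints 20.0%, so each service gets 20% of system.slice. If system.slice competes with user.slice and machine.slice at equal weight (cpu_share 100 100 100 prints 33.3%), each of those services ends up with roughly 6.7% of the total CPU time. Raising one service to CPUWeight=150 gives cpu_share 150 100 100 100 100, which prints 27.3%.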

Note:

Share allocations don't set a maximum limit for a resource.

In the preceding examples, the slice user.slice has two users: user1 and user2. Each user is allocated an equal share of the CPU resource available to the parent user.slice. However, if the processes associated with user1 are idle and don't require any CPU resource, then its CPU share is available for allocation to user2 if needed. In such a situation, user2 might even be allocated the entire CPU resource apportioned to the parent user.slice, provided no other users require it.

To cap CPU usage, set the CPUQuota property to the required percentage.
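CPUQuota is expressed as a percentage of the CPU time available on one CPU, so values above 100% allow more than one CPU. On cgroups v2, systemd writes the resulting limit to the cpu.max file of the unit's cgroup as a quota and a period, both in microseconds. The following sketch shows that conversion. It assumes systemd's default quota period of 100 ms (configurable through CPUQuotaPeriodSec) and integer percentages, and the function name cpu_quota_to_max is hypothetical; it illustrates the arithmetic rather than reproducing systemd's code.

```shell
# Hypothetical helper: convert a CPUQuota percentage into the
# "quota period" pair stored in cpu.max, in microseconds.
# Assumes an integer percentage and a default period of 100 ms.
cpu_quota_to_max() {
  local pct="${1%\%}" period_us="${2:-100000}"
  # quota = percentage of one CPU's time within each period
  printf '%d %d\n' "$(( pct * period_us / 100 ))" "$period_us"
}
```

For example, after sudo systemctl set-property app_service1.service CPUQuota=20%, the cpu.max file in /sys/fs/cgroup/system.slice/app_service1.service/ would be expected to read 20000 100000, which is what cpu_quota_to_max 20% prints.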

Slices, Services, and Scopes in the cgroup Hierarchy

The pie chart analogy used in the preceding sections is a helpful way to conceptualize the division of resources into slices. However, in terms of structural organization, the control groups are arranged in a hierarchy. You can view the systemd control group hierarchy on the system by running the systemd-cgls command as follows:

Tip:

To see the entire cgroup hierarchy, starting from the root slice -.slice, as in the following example, ensure you run systemd-cgls from outside of the control group mount point /sys/fs/cgroup/. Otherwise, if you run the command from within /sys/fs/cgroup/, the output starts from the cgroup location from which the command was run. See systemd-cgls(1) for more information.

systemd-cgls

Control group /:

-.slice

...

├─user.slice (#1429)

│ → user.invocation_id: 604cf5ef07fa4bb4bb86993bb5ec15e0

│ ├─user-982.slice (#4131)

│ │ → user.invocation_id: 9d0d94d7b8a54bcea2498048911136c8

│ │ ├─session-c1.scope (#4437)

│ │ │ ├─2416 /usr/bin/sudo -u ocarun /usr/libexec/oracle-cloud-agent/plugins/runcommand/runcommand

│ │ │ └─2494 /usr/libexec/oracle-cloud-agent/plugins/runcommand/runcommand

│ │ └─user@982.service … (#4199)

│ │ → user.delegate: 1

│ │ → user.invocation_id: 37c7aed7aa6e4874980b79616acf0c82

│ │ └─init.scope (#4233)

│ │ ├─2437 /usr/lib/systemd/systemd --user

│ │ └─2445 (sd-pam)

│ └─user-1001.slice (#7225)

│ → user.invocation_id: ce93ad5f5299407e9477964494df63b7

│ ├─session-2.scope (#7463)

│ │ ├─20304 sshd: oracle [priv]

│ │ ├─20404 sshd: oracle@pts/0

│ │ ├─20405 -bash

│ │ ├─20441 systemd-cgls

│ │ └─20442 less

│ └─user@1001.service … (#7293)

│ → user.delegate: 1

│ → user.invocation_id: 70284db060c1476db5f3633e5fda7fba

│ └─init.scope (#7327)

│ ├─20395 /usr/lib/systemd/systemd --user

│ └─20397 (sd-pam)

├─init.scope (#19)

│ └─1 /usr/lib/systemd/systemd --switched-root --system --deserialize 28

└─system.slice (#53)

...

├─dbus-broker.service (#2737)

│ → user.invocation_id: 2bbe054a2c4d49809b16cb9c6552d5a6

│ ├─1450 /usr/bin/dbus-broker-launch --scope system --audit

│ └─1457 dbus-broker --log 4 --controller 9 --machine-id 852951209c274cfea35a953ad2964622 --max-bytes 536870912 --max-fds 4096 --max-matches 131072 --audit

...

├─chronyd.service (#2805)

│ → user.invocation_id: e264f67ad6114ad5afbe7929142faa4b

│ └─1482 /usr/sbin/chronyd -F 2

├─auditd.service (#2601)

│ → user.invocation_id: f7a8286921734949b73849b4642e3277

│ ├─1421 /sbin/auditd

│ └─1423 /usr/sbin/sedispatch

├─tuned.service (#3349)

│ → user.invocation_id: fec7f73678754ed687e3910017886c5e

│ └─1564 /usr/bin/python3 -Es /usr/sbin/tuned -l -P

├─systemd-journald.service (#1837)

│ → user.invocation_id: bf7fb22ba12f44afab3054aab661aedb

│ └─1068 /usr/lib/systemd/systemd-journald

├─atd.service (#3961)

│ → user.invocation_id: 1c59679265ab492482bfdc9c02f5eec5

│ └─2146 /usr/sbin/atd -f

├─sshd.service (#3757)

│ → user.invocation_id: 57e195491341431298db233e998fb180

│ └─2097 sshd: /usr/sbin/sshd -D [listener] 0 of 10-100 startups

├─crond.service (#3995)

│ → user.invocation_id: 4f5b380a53db4de5adcf23f35d638ff5

│ └─2150 /usr/sbin/crond -n

...

The preceding sample output shows how all "*.slice" control groups reside under the root slice -.slice. Beneath the root slice, you can see the user.slice and system.slice control groups, each with its own child control groups.

Examining the systemd-cgls command output, you can see that, except for the root -.slice, all processes are on leaf nodes. This arrangement is enforced by cgroups v2 in a rule called the "no internal processes" rule. See cgroups(7) for more information about this rule.
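cgroups(7) states the rule in terms of the cgroup.subtree_control file: a non-root cgroup can't both have member processes and distribute resources to its children through a non-empty cgroup.subtree_control file. The following simplified sketch walks a cgroup tree and reports any non-root cgroup where both cgroup.procs and cgroup.subtree_control are non-empty. It ignores the rule's threaded-mode exception, and the function name find_internal_procs is hypothetical.

```shell
# Hypothetical, simplified "no internal processes" check: print non-root
# cgroups that hold processes and also enable controllers for children.
# Ignores threaded-mode cgroups. The optional argument is the tree root.
find_internal_procs() {
  local root="${1:-/sys/fs/cgroup}"
  # -mindepth 1 skips the root cgroup, which is exempt from the rule.
  find "$root" -mindepth 1 -type d | while IFS= read -r dir; do
    # cgroupfs files report size 0, so test their content, not their size.
    if [ -n "$(cat "$dir/cgroup.subtree_control" 2>/dev/null)" ] &&
       [ -n "$(cat "$dir/cgroup.procs" 2>/dev/null)" ]; then
      # Print the path relative to the root, as systemd-cgls does.
      printf '%s\n' "${dir#"$root"}"
    fi
  done
}
```

Because the kernel enforces the rule, running find_internal_procs against /sys/fs/cgroup on a live host is expected to print nothing in typical configurations.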

The output in the preceding systemd-cgls command example also shows how slices can have descendant control groups that are systemd scopes. systemd scopes are reviewed in the following section.

systemd Scopes

A systemd scope is a systemd unit type that groups together processes that have been launched independently of systemd, such as system service worker processes. Scope units are transient cgroups that systemd doesn't configure from unit files; instead, they're created programmatically using the bus interfaces of systemd.
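Every process records its cgroup v2 membership in /proc/PID/cgroup on a line of the form 0::/path, so you can check which scope or service a process belongs to. The following sketch extracts that path. The function name cgroup_v2_path is hypothetical, and it defaults to the calling shell's own entry.

```shell
# Hypothetical helper: print the cgroup v2 path recorded in a
# /proc/PID/cgroup file, whose unified-hierarchy line has the form
# "0::/path". Defaults to the calling shell's own entry.
cgroup_v2_path() {
  local file="${1:-/proc/$$/cgroup}"
  # Print only the unified-hierarchy line, without its "0::" prefix.
  sed -n 's/^0:://p' "$file"
}
```

For example, cgroup_v2_path /proc/1/cgroup would be expected to print /init.scope, matching the init.scope entry for PID 1 in the preceding systemd-cgls output, while running cgroup_v2_path from an SSH login shell prints a path ending in a session scope folder.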

For example, in the following sample output, the user with UID 1001 has run the sudo systemd-cgls command, and the output shows that session-2.scope has been created for processes the user has spawned independently of systemd (including the process for the command itself, 21380 sudo systemd-cgls):

Note:

In the following example, the command has been run from within the control group mount point /sys/fs/cgroup/. Hence, instead of the root slice, the output starts from the cgroup location from which the command was run.

sudo systemd-cgls

Working directory /sys/fs/cgroup:

...

├─user.slice (#1429)

│ → user.invocation_id: 604cf5ef07fa4bb4bb86993bb5ec15e0

│ → trusted.invocation_id: 604cf5ef07fa4bb4bb86993bb5ec15e0

...

│ └─user-1001.slice (#7225)

│ → user.invocation_id: ce93ad5f5299407e9477964494df63b7

│ → trusted.invocation_id: ce93ad5f5299407e9477964494df63b7

│ ├─session-2.scope (#7463)

│ │ ├─20304 sshd: oracle [priv]

│ │ ├─20404 sshd: oracle@pts/0

│ │ ├─20405 -bash

│ │ ├─21380 sudo systemd-cgls

│ │ ├─21382 systemd-cgls

│ │ └─21383 less

│ └─user@1001.service … (#7293)

│ → user.delegate: 1

│ → trusted.delegate: 1

│ → user.invocation_id: 70284db060c1476db5f3633e5fda7fba

│ → trusted.invocation_id: 70284db060c1476db5f3633e5fda7fba

│ └─init.scope (#7327)

│ ├─20395 /usr/lib/systemd/systemd --user

│ └─20397 (sd-pam)

Setting Resource Controller Options and Creating Custom Slices

systemd provides the following methods for setting resource controller options, such as CPUWeight, CPUQuota, and so on, to customize resource allocation on the system:

- Using service unit files.
- Using drop-in files.
- Using the systemctl set-property command.

The following sections provide example procedures for using each of these methods to configure resources and slices in the system.
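As an illustration of the drop-in method, the following sketch writes a drop-in file that sets CPUWeight for a service or a slice. The section header must match the unit type ([Service] for services, [Slice] for slices). The function name write_cpu_dropin, the file name 50-cpu-weight.conf, and the example slice name my_apps.slice are hypothetical, and writing to the default directory /etc/systemd/system requires root privileges.

```shell
# Hypothetical helper: write a drop-in that sets CPUWeight= for a unit.
# The section header must match the unit type, so only services and slices
# are handled here. The drop-in file name 50-cpu-weight.conf is arbitrary.
# The optional third argument is the configuration directory.
write_cpu_dropin() {
  local unit="$1" weight="$2" dir="${3:-/etc/systemd/system}" section
  case "$unit" in
    *.service) section=Service ;;
    *.slice) section=Slice ;;
    *)
      echo "unsupported unit type: $unit" >&2
      return 1
      ;;
  esac
  # Drop-ins live in a directory named after the unit, with a .d suffix.
  mkdir -p "$dir/$unit.d"
  printf '[%s]\nCPUWeight=%s\n' "$section" "$weight" >"$dir/$unit.d/50-cpu-weight.conf"
}
```

After writing a drop-in under /etc/systemd/system, run sudo systemctl daemon-reload and restart the affected service so that systemd picks up the change. A service can be placed in a custom slice by setting Slice= in its [Service] section.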

Using systemctl set-property

The systemctl set-property command places the configuration files under the following location:

/etc/systemd/system.control

Caution:

You must not manually edit the files that the systemctl set-property command creates.

Note:

The systemctl set-property command doesn't recognize every resource-control property used in the system-unit and drop-in files covered earlier in this topic.
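Because those files must not be edited by hand, it can be useful to list them read-only. The following sketch prints the drop-in files found for a unit under /etc/systemd/system.control (persistent settings) and /run/systemd/system.control (settings made with systemctl set-property --runtime). In current systemd releases these files are typically named after the property, for example 50-CPUWeight.conf, but treat that naming as an observation rather than a stable interface. The function name list_set_property_dropins is hypothetical.

```shell
# Hypothetical helper: list (never modify) the drop-ins that
# "systemctl set-property" created for a unit. Extra arguments override
# the default persistent and runtime system.control directories.
list_set_property_dropins() {
  local unit="$1"
  shift
  [ "$#" -gt 0 ] || set -- /etc/systemd/system.control /run/systemd/system.control
  local base f
  for base in "$@"; do
    for f in "$base/$unit.d"/*.conf; do
      # Skip the literal pattern when a directory has no drop-ins.
      if [ -f "$f" ]; then
        printf '%s\n' "$f"
      fi
    done
  done
}
```

To view a unit together with all of its drop-ins, including these, run systemctl cat app_service1.service.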

The following procedure shows how you can use the systemctl set-property command to configure resource allocation: