36 Using the Site Capture Application


36.1 Site Capture Model

You can initiate a crawl session manually from the Site Capture interface, or you can trigger one by completing a WebCenter Sites RealTime publishing session. In both cases, the crawler downloads the website to disk in static or archive mode, depending on how you choose to run it.


36.1.1 Capture Modes

When you download a site in either static or archive mode, the same files (HTML, CSS, and so on) are stored to disk, but the two modes differ in several ways. For example, statically downloaded sites are available only in the file system, whereas archived sites are available in both the file system and the Site Capture interface. Capture mode, then, determines how crawlers download sites and how you manage the results.

Table 36-1 Static Capture Mode and Archive Mode

| Static Mode | Archive Mode |
|---|---|
| Static mode supports rapid-deployment and high-availability scenarios. | Archive mode is used to maintain copies of websites on a regular basis for compliance purposes or similar reasons. |
| In static mode, a crawled site is stored as files ready to be served. Only the latest capture is kept (the previously stored files are overwritten). | In archive mode, all crawled sites are kept and stored as zip files (archives) in time-stamped folders. Pointers to the zip files are created in the Site Capture database. |
| You can initiate static crawl sessions manually from the application interface or after a publishing session. However, you can manage downloaded sites from the Site Capture file system only. | As with static sessions, you can manually initiate archive crawl sessions from the Site Capture interface or after a publishing session. However, because the zip files are referenced by pointers in the Site Capture database, you can manage them from the Site Capture interface: you can download the files, preview the archived sites, and set capture schedules. |

For either capture mode, logs are generated after the crawl session to provide information such as crawled URLs, HTTP status, and network conditions. In static capture, you must obtain the logs from the file system. In archive capture, you can download them from the Site Capture interface. In both modes, you can also configure crawlers to email reports as soon as they are generated.

36.1.2 Crawlers

Starting any type of site capture process requires you to define a crawler in the Site Capture interface. To help you get started quickly, Site Capture comes with two sample crawlers, Sample and FirstSiteII. This guide assumes the crawlers were installed during the Site Capture installation process and uses the Sample crawler primarily.

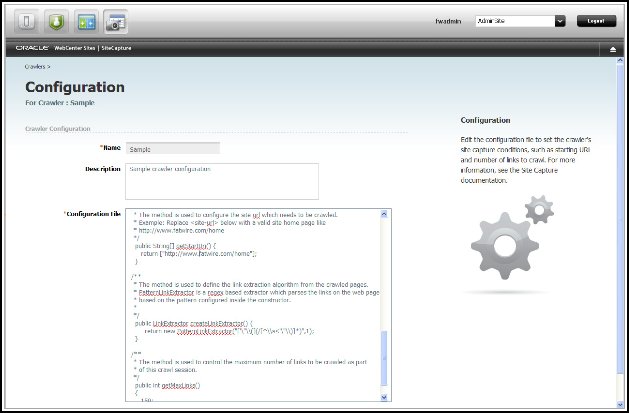

To create your own crawler, you must name the crawler (typically after the target site) and upload a text file named CrawlerConfigurator.groovy, which controls the site capture process. You must code the groovy file with methods of the BaseConfigurator class that specify at least the starting URIs and the link extraction logic for the crawler. Although the groovy file controls the site capture process, the capture mode is set outside the file.

To use a crawler for publishing-triggered site capture, you must take an additional step: name the crawler and specify its capture mode on the publishing destination definition on the source system that is integrated with Site Capture, as described in Configuring Site Capture with the Configurator in Installing and Configuring Oracle WebCenter Sites. (On every publishing destination definition, you can specify one or more crawlers, but only a single capture mode.) Information about the successful start of crawler sessions is stored in the Site Capture file system and in the log files (futuretense.txt, by default) of the source and target systems.

The exercises in this chapter cover both types of crawler scenarios: manual and publishing-triggered.

36.2 Logging in to the Site Capture Application

You access the Site Capture application by logging in to WebCenter Sites.

36.3 Using the Default Crawlers

The default crawlers, Sample and FirstSiteII, should have been installed with the Site Capture application and should be displayed in its interface. To define your own crawlers, see Defining a Crawler.


36.3.1 Sample Crawler

You can use the Sample crawler to download any site. Its purpose is to help you download a site quickly and to provide you with the required configuration code, which you can reuse when creating your own crawlers. The Sample crawler is minimally configured with the required methods and an optional method that shortens the crawl by limiting the number of links to crawl.

- The required methods are getStartUri and createLinkExtractor (which defines the logic for extracting links from crawled pages).

- The optional method is getMaxLinks, which specifies the number of links to crawl.

For more information about these methods, see Crawler Customization Methods in Developing with Oracle WebCenter Sites.
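The following Python sketch is a conceptual model of how the three methods work together; it is not the Site Capture crawler or its Groovy API. A list of start URIs plays the role of getStartUri, a regular-expression link extractor plays the role of createLinkExtractor, and a link limit plays the role of getMaxLinks. The in-memory PAGES dictionary and the href pattern are hypothetical stand-ins for fetching and parsing real pages.

```python
import re
from collections import deque
from urllib.parse import urljoin

# Hypothetical in-memory "site" standing in for real HTTP fetches.
PAGES = {
    "http://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "http://example.com/a": '<a href="/c">C</a> <a href="/">Home</a>',
    "http://example.com/b": '<a href="/c">C</a>',
    "http://example.com/c": "",
}

# Role of createLinkExtractor: pull href values out of a page (assumed pattern).
HREF = re.compile(r'href="([^"]+)"')


def extract_links(base_url, html):
    """Return absolute URLs for every href found in html."""
    return [urljoin(base_url, href) for href in HREF.findall(html)]


def crawl(start_uris, max_links):
    """Breadth-first crawl from start_uris (role of getStartUri),
    stopping after max_links URLs have been visited (role of getMaxLinks)."""
    queue = deque(start_uris)
    seen = set(start_uris)
    crawled = []
    while queue and len(crawled) < max_links:
        url = queue.popleft()
        crawled.append(url)
        for link in extract_links(url, PAGES.get(url, "")):
            if link not in seen:  # queue each URL only once
                seen.add(link)
                queue.append(link)
    return crawled


if __name__ == "__main__":
    print(crawl(["http://example.com/"], max_links=3))
```

Lowering max_links shortens the crawl in the way getMaxLinks is described as doing: with max_links=3, only the start page and the first two discovered pages are visited.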

36.3.2 FirstSiteII Crawler

The FirstSiteII crawler is used to download the dynamic FirstSiteII sample website as a static site. The purpose of the crawler is to provide you with advanced configuration code that shows how to create a custom link extractor and resource rewriter, using the LinkExtractor and ResourceRewriter interfaces. See Interfaces in Developing with Oracle WebCenter Sites.

36.4 Setting Up a Site Capture Operation

In this section, you will step through the process of creating and running your own crawler to understand how the Site Capture interface and file system are organized.


36.4.1 Creating a Starter Crawler Configuration File

Before you can create a crawler, you must have a configuration file that controls the site capture process for the crawler. The fastest way to create a useful file is to copy the sample code and modify it as necessary.

- Copy the Sample configuration file to your local computer in either of these ways:

  - Log in to the Site Capture application. If the Crawlers page lists the Sample crawler, do the following (otherwise, skip to the next item):

    - Point to Sample and select Edit Configuration.

    - Go to the Configuration File field, copy its code to a text file on your local computer, and save the file as CrawlerConfigurator.groovy.

  - Go to the Site Capture host computer and copy the CrawlerConfigurator.groovy file from <SC_INSTALL_DIR>/fw-site-capture/crawler/Sample/app/ to your local computer.

  Note:

  Every crawler is controlled by its own CrawlerConfigurator.groovy file, which is stored in a custom folder structure. When you define a crawler, Site Capture creates a folder bearing the name of the crawler (<crawlerName>, or Sample in our scenario) and places that folder in the following path: <SC_INSTALL_DIR>/fw-site-capture/crawler/. Within the <crawlerName> folder, Site Capture creates an /app subfolder to which it uploads the groovy file from your local computer.

  When the crawler is used for the first time in a given mode, Site Capture creates additional subfolders (in /<crawlerName>/) to store sites captured in that mode. See Managing Statically Captured Sites.

- Your sample groovy file specifies a sample starting URI, which you will reset when you create the crawler in the next step. (In addition to the starting URI, you can set crawl depth and similar parameters, call post-crawl commands, and implement interfaces to define logic specific to your target sites.)

  At this point, you can either customize the downloaded groovy file now, or first create the crawler and then customize its groovy file (which is editable in the Site Capture interface). To do the latter, continue to Defining a Crawler.

36.4.2 Defining a Crawler

To define a crawler:

- Go to the Crawlers page and click Add Crawler.

- On the Add Crawlers page:

  - Name the crawler after the site to be crawled.

    Note:

    - After you save a crawler, it cannot be renamed.

    - This guide assumes that every custom crawler is named after the target site and is not used to capture any other site.

  - Enter a description (optional). For example: "This crawler is reserved for publishing-triggered site capture" or "This crawler is reserved for scheduled captures."

  - In the Configuration File field, browse to the groovy file that you created in Creating a Starter Crawler Configuration File.

  - Save the new crawler.

    Your CrawlerConfigurator.groovy file is uploaded to the <SC_INSTALL_DIR>/fw-site-capture/crawler/<crawlerName>/app folder on the Site Capture host computer. You can edit the file directly in the Site Capture interface.

- Continue to Editing the Crawler Configuration File.

36.4.3 Editing the Crawler Configuration File

From the Site Capture interface, you can recode the entire crawler configuration file. In this example, we simply set the starting URI.

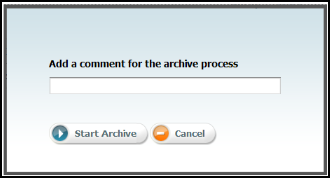

36.4.4 Starting a Crawl

You can start a crawl in several ways. If you used a crawler in one mode, you can rerun it in a different mode:

36.4.4.3 Schedule the Crawler for Archive Capture

Only archive captures can be scheduled. For a given crawler, you can create multiple schedules: for example, one for periodic captures and another for a single capture at a specific date and time.

Note:

If you set multiple schedules, ensure that they do not overlap.

- Go to the Crawlers page, point to the crawler that you created, and select Schedule Archive.

- Click Add Schedule and make selections on all calendars: Days, Dates, Months, Hours, and Minutes.

- Click Save and add another schedule if necessary.
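Whether two schedules overlap depends on how long a crawl runs, which the calendars alone do not show. The following Python sketch is one way to check a pair of schedules before saving them. It assumes, hypothetically, that a schedule fires at every combination of the selected Hours and Minutes on any day that matches all of the selected Days, Dates, and Months, and it flags start times closer together than an assumed crawl duration (gap). Site Capture's own scheduler semantics may differ, so treat this as an illustration only.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Schedule:
    """Hypothetical model of one schedule's five calendars.
    days uses datetime.weekday() numbering (0 = Monday, 6 = Sunday)."""
    days: frozenset
    dates: frozenset
    months: frozenset
    hours: frozenset
    minutes: frozenset

    def firings(self, start, end):
        """Yield start times in [start, end), in ascending order, on days
        that match all of days, dates, and months (an assumed AND rule)."""
        day = datetime(start.year, start.month, start.day)
        while day < end:
            if (day.month in self.months and day.day in self.dates
                    and day.weekday() in self.days):
                for hour in sorted(self.hours):
                    for minute in sorted(self.minutes):
                        t = day.replace(hour=hour, minute=minute)
                        if start <= t < end:
                            yield t
            day += timedelta(days=1)


def conflicts(a, b, start, end, gap=timedelta(hours=1)):
    """Return (a_time, b_time) pairs whose start times are less than gap apart.
    gap is an assumed upper bound on how long one crawl runs."""
    b_times = list(b.firings(start, end))  # already in ascending order
    pairs = []
    for ta in a.firings(start, end):
        i = bisect_right(b_times, ta - gap)  # first b_time after ta - gap
        while i < len(b_times) and b_times[i] < ta + gap:
            pairs.append((ta, b_times[i]))
            i += 1
    return pairs


EVERY_DAY = frozenset(range(7))
EVERY_DATE = frozenset(range(1, 32))
EVERY_MONTH = frozenset(range(1, 13))

if __name__ == "__main__":
    nightly = Schedule(EVERY_DAY, EVERY_DATE, EVERY_MONTH, frozenset({2}), frozenset({0}))
    june_15 = Schedule(EVERY_DAY, frozenset({15}), frozenset({6}), frozenset({2}), frozenset({30}))
    print(conflicts(nightly, june_15, datetime(2025, 1, 1), datetime(2026, 1, 1)))
```

Here a nightly 02:00 schedule and a June 15 02:30 schedule start 30 minutes apart on June 15, so that pair is reported with the default one-hour gap; with gap=timedelta(minutes=15), no conflict is reported.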

36.4.4.4 About Publishing a Site in RealTime Mode

If you configure your WebCenter Sites publishing systems to communicate with the Site Capture application, you can set up a RealTime publishing process to call one or more crawlers to capture the newly published site. For instructions, see Enabling Publishing-Triggered Site Capture.

36.4.5 About Managing Captured Data

For more information about accessing the data associated with static and archive captures, see Managing Statically Captured Sites and About Managing Archived Sites.

For a collection of points to bear in mind when managing crawlers and captured data, see Notes and Tips for Managing Crawlers and Captured Data.

36.5 Enabling Publishing-Triggered Site Capture

An administrative user can configure as many publishing destination definitions for Site Capture as necessary and can call as many crawlers as necessary. The following are the main steps for enabling publishing-triggered site capture:

36.5.1 About Integrating the Site Capture Application with Oracle WebCenter Sites

You can enable site capture after a RealTime publishing session only if the Site Capture application is first integrated with the source and target systems used in the publishing process. If Site Capture is not integrated, see Integrating Site Capture with the WebCenter Sites Publishing Process in Installing and Configuring Oracle WebCenter Sites for integration instructions, then continue with the steps below.

36.5.2 Configuring a RealTime Publishing Destination Definition for Site Capture

When configuring a publishing destination definition, you name the crawlers that are called after the publishing session. You also specify the capture mode.

- Go to the WebCenter Sites source system that is integrated with the Site Capture application.

- Create a RealTime publishing destination definition pointing to the WebCenter Sites target system that is integrated with Site Capture. See Adding a New RealTime Destination Definition.

- In the More Arguments section of the publishing destination definition, name the crawlers to be called after the publishing session, and set the capture mode by using the following parameters:

  - CRAWLERCONFIG: Specify the name of each crawler. If you use multiple crawlers, separate their names with a semicolon (;).

    Examples:

    For a single crawler:

    CRAWLERCONFIG=crawler1

    For multiple crawlers:

    CRAWLERCONFIG=crawler1;crawler2;crawler3

    Note:

    The crawlers that you specify here must also be configured and identically named in the Site Capture interface. Crawler names are case-sensitive.

  - CRAWLERMODE: To run an archive capture, set this parameter to dynamic. By default, static capture is enabled.

    Example:

    CRAWLERMODE=dynamic

    Note:

    - If CRAWLERMODE is omitted or set to a value other than dynamic, static capture starts when the publishing session ends.

    - You can set both crawler parameters in a single statement as follows:

      CRAWLERCONFIG=crawler1;crawler2&CRAWLERMODE=dynamic

    - While you can specify multiple crawlers, you can set only one mode. All crawlers run in that mode. To run some crawlers in a different mode, configure another publishing destination definition.
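The rules for these two parameters (semicolon-separated crawler names, & between parameters, and archive capture only when CRAWLERMODE is dynamic) can be checked with a small parser before you save a destination definition. This Python sketch is a hypothetical helper, not part of WebCenter Sites; it assumes the value dynamic is compared exactly and that unknown keys in More Arguments can be ignored.

```python
def parse_more_arguments(args):
    """Return (crawler_names, capture_mode) from a More Arguments string,
    for example 'CRAWLERCONFIG=crawler1;crawler2&CRAWLERMODE=dynamic'."""
    params = {}
    for pair in args.split("&"):
        key, sep, value = pair.partition("=")
        if sep:  # ignore fragments without "="
            params[key] = value
    # Names are kept exactly as written, because crawler names are case-sensitive.
    crawlers = [name for name in params.get("CRAWLERCONFIG", "").split(";") if name]
    # Archive capture only when CRAWLERMODE is "dynamic"; omitted or any other
    # value means static capture. An exact, case-sensitive comparison is assumed.
    mode = "archive" if params.get("CRAWLERMODE") == "dynamic" else "static"
    return crawlers, mode


if __name__ == "__main__":
    print(parse_more_arguments("CRAWLERCONFIG=crawler1;crawler2&CRAWLERMODE=dynamic"))
```

Note that a value such as CRAWLERMODE=archive still results in a static capture under these rules, which is easy to overlook.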

Continue to the next section.

36.5.3 Matching Crawlers

Crawlers named in the publishing destination definition must exist in the Site Capture interface. Do the following:

- Verify that crawler names in the destination definition and Site Capture interface are identical. The names are case-sensitive.

- Ensure that a valid starting URI for the target site is set in each crawler configuration file. For information about navigating to the crawler configuration file, see Editing the Crawler Configuration File. For more information about writing configuration code, see Coding the Crawler Configuration File in Developing with Oracle WebCenter Sites.
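Because Site Capture creates a <crawlerName> folder under <SC_INSTALL_DIR>/fw-site-capture/crawler/ for every crawler, you can roughly cross-check the names in CRAWLERCONFIG against that folder. The Python sketch below is a hypothetical helper: it treats folder existence as a stand-in for the crawler existing in the interface (the Crawlers page remains authoritative), skips folders beginning with an underscore because those are not treated as crawlers, and reports near matches that differ only in letter case.

```python
import sys
from pathlib import Path


def check_crawler_names(crawler_root, configured_names):
    """Compare crawler names from CRAWLERCONFIG with crawler folders.

    crawler_root is assumed to be <SC_INSTALL_DIR>/fw-site-capture/crawler.
    Returns {missing name: folder differing only in case, or None}."""
    folders = {
        p.name for p in Path(crawler_root).iterdir()
        # Folders whose names begin with "_" are not treated as crawlers.
        if p.is_dir() and not p.name.startswith("_")
    }
    problems = {}
    for name in configured_names:
        if name in folders:  # exact, case-sensitive match
            continue
        near = [f for f in folders if f.lower() == name.lower()]
        problems[name] = near[0] if near else None
    return problems


if __name__ == "__main__":
    # Usage: python check_crawlers.py <crawler folder> crawler1 crawler2 ...
    for name, near in check_crawler_names(sys.argv[1], sys.argv[2:]).items():
        hint = f" (found {near!r}; names are case-sensitive)" if near else ""
        print(f"no crawler folder named {name!r}{hint}")
```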

36.5.4 Managing Site Capture

To manage site capture:

- When you have enabled publishing-triggered site capture, you are ready to publish the target site. When publishing ends, site capture begins. The crawlers capture pages in either static or archive mode, depending on how you set the CRAWLERMODE parameter in the More Arguments section of the publishing destination definition (see Configuring a RealTime Publishing Destination Definition for Site Capture).

- To monitor the site capture process:

  - For static capture, the Site Capture interface does not display any information about the session, nor does it make the captured site available to you.

    - To determine that the crawlers were called, open the futuretense.txt file on the source or target WebCenter Sites system.

      Note:

      The futuretense.txt file on the WebCenter Sites source and target systems contains crawler startup status for both types of crawl: static and archive.

    - To monitor the capture process, go to the Site Capture file system and review the files that are listed in step 2 in Run the Crawler Manually in Static Mode.

  - For archive (dynamic) capture, you can view the status of the crawl from the Site Capture interface.

    - Go to the Crawlers page, point to the crawler, and select Jobs from the pop-up menu.

    - On the Job Details page, click Refresh next to the Job State until you see "Finished." (Possible values for Job State are Scheduled, Running, Finished, Stopped, or Failed.) For more information about the Job Details page, see steps 3 and 4 in Run the Crawler Manually in Archive Mode.

- Manage the captured data.

  When the crawl session ends, you can manage the captured site and associated data as follows:

  - For a statically captured site, go to the Site Capture file system. For more information, see Managing Statically Captured Sites.

  - For an archived site, use the Site Capture interface to preview the site and download the zip file and logs. For more information, see About Managing Archived Sites.
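The Refresh step described above for archive capture (repeat until the Job State leaves Scheduled or Running) amounts to a polling loop. This Python sketch models that loop; get_state is a hypothetical callable that you would have to supply yourself, since this chapter describes no programmatic API for the Job Details page. Finished, Stopped, and Failed are treated as final states.

```python
import time

# Job State values listed for the Job Details page.
TERMINAL_STATES = {"Finished", "Stopped", "Failed"}
ACTIVE_STATES = {"Scheduled", "Running"}


def wait_for_job(get_state, interval=5.0, timeout=3600.0, sleep=time.sleep):
    """Poll get_state() until the job reaches a final state.

    get_state is a hypothetical callable returning the current Job State.
    Returns the final state; raises TimeoutError if the job is still
    active after timeout seconds, or ValueError for an unknown state."""
    waited = 0.0
    while True:
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        if state not in ACTIVE_STATES:
            raise ValueError(f"unexpected Job State: {state!r}")
        if waited >= timeout:
            raise TimeoutError(f"job still {state} after {timeout} s")
        sleep(interval)  # injectable so the loop can be tested without waiting
        waited += interval
```

Passing sleep as a parameter keeps the routine testable without real delays.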

36.6 Managing Statically Captured Sites

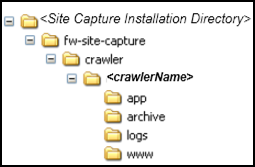

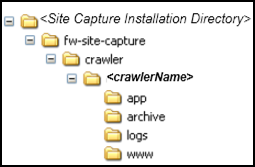

For every crawler that a user creates in the Site Capture interface, Site Capture creates an identically named folder in its file system. This custom folder, <crawlerName>, is used to organize the configuration file, captures, and logs for the crawler as shown in Figure 36-4, while Table 36-2 describes the <crawlerName> folder and its contents.

Note:

To access static captures and logs, you must use the file system. Archive captures and logs are managed from the Site Capture interface (their location in the file system is included in this section).

Figure 36-4 Site Capture Custom Folders: <crawlerName>


Table 36-2 <crawlerName> Folder and its Contents

| Folder | Description |
|---|---|
| <crawlerName> | Represents a crawler. For every crawler that a user defines in the Site Capture interface, Site Capture creates an identically named folder. Note: In addition to the subfolders (described below), the <crawlerName> folder contains the inventory.db file, which lists statically crawled URLs. |
| /app | Contains the CrawlerConfigurator.groovy file for the crawler. |
| /archive | Stores archive captures as zip files in time-stamped folders (archive/yyyy/mm/dd). Note: Archive captures are accessible from the Site Capture interface. Each zip file contains a URL log named __inventory.db. |
|  | Contains the latest statically captured site only (when the same crawler is rerun in static mode, it overwrites the previous capture). The site is stored as files ready to be served. Note: Static captures are accessible from the Site Capture file system only. |
| /logs | Contains log files with information about crawled URLs. Log files are stored in time-stamped folders (logs/yyyy/mm/dd). |

The folders under logs/yyyy/mm/ contain the following logs:

- <yyyy-mm-dd-hh-mm-ss>-audit.log

- <yyyy-mm-dd-hh-mm-ss>-links.txt

- <yyyy-mm-dd-hh-mm-ss>-report.txt
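Because each log name listed above starts with a <yyyy-mm-dd-hh-mm-ss> timestamp, the logs of the most recent crawl session can be located without opening the folders one by one. This Python sketch assumes the files are named exactly as listed; the function name is hypothetical, and it searches every subfolder of the logs folder you pass in.

```python
import re
from pathlib import Path

# Log names start with a <yyyy-mm-dd-hh-mm-ss> timestamp.
LOG_NAME = re.compile(
    r"^(\d{4}-\d{2}-\d{2}-\d{2}-\d{2}-\d{2})-(audit\.log|links\.txt|report\.txt)$")


def latest_session_logs(logs_dir):
    """Return {log kind: Path} for the most recent crawl session found
    anywhere under logs_dir (for example, a crawler's logs folder)."""
    sessions = {}
    for path in Path(logs_dir).rglob("*"):
        match = LOG_NAME.match(path.name)
        if match and path.is_file():
            stamp, kind = match.groups()
            sessions.setdefault(stamp, {})[kind] = path
    if not sessions:
        return {}
    # The fixed-width timestamp sorts chronologically as a plain string.
    return sessions[max(sessions)]
```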

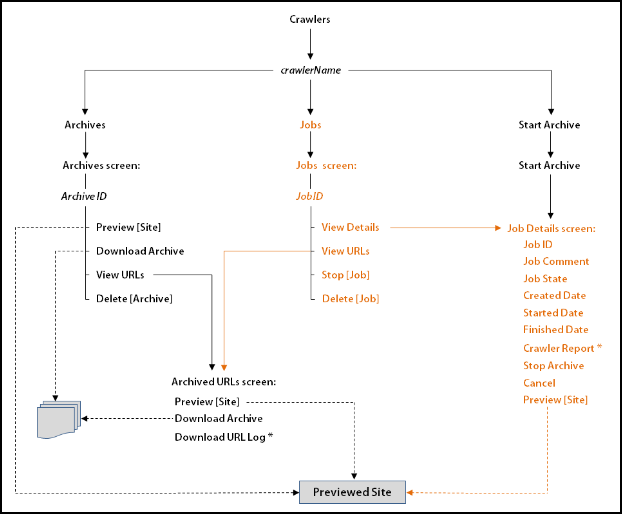

36.7 About Managing Archived Sites

You can manage archived sites from different forms of the Site Capture interface. The following figure shows some pathways to various information (archives, jobs, site preview, crawler report, and URL log):

- For example, to preview a site, start at the Crawlers form, point to a crawler (crawlerName), select Archives from the pop-up menu (which opens the Archives form), point to an Archive ID, and select Preview from the pop-up menu.

- Dashed lines represent multiple paths to the same option. For example, to preview a site, you can follow the Archives path, Jobs path, or Start Archive path for the crawler. To download an archive, you can follow the Archives path or the Jobs path.

- The crawler report and URL log are marked by an asterisk (*).

36.8 Notes and Tips for Managing Crawlers and Captured Data

The following topics summarize notes and tips for managing crawlers and captured data:

36.8.1 Tips for Creating and Editing Crawlers

When creating crawlers and editing their configuration code, consider the following information:

- Crawler names are case-sensitive.

- Every crawler configuration file is named CrawlerConfigurator.groovy. You must not change this name.

- You can configure a crawler to start at one or more seed URIs on a given site and to crawl one or more paths. You can use additional Java methods to set parameters such as crawl depth, call post-crawl commands, specify session timeout, and more. You can implement interfaces to define logic for extracting links, rewriting URLs, and sending email after a crawl session. See Coding the Crawler Configuration File in Developing with Oracle WebCenter Sites.

- When a crawler is created and saved, its CrawlerConfigurator.groovy file is uploaded to the Site Capture file system and made editable in the Site Capture interface.

- While a crawler is running a static site capture process, you cannot use it to run a second static capture process.

- While a crawler is running an archive capture process, you can use it to run a second archive capture process. The second process is marked as Scheduled and starts after the first process ends.

36.8.2 Notes for Deleting a Crawler

If you must delete a crawler (which includes all of its captured information), do so from the Site Capture interface, not the file system. Deleting a crawler from the interface prevents broken links. For example, if a crawler ran in archive mode, deleting it from the interface removes two sets of information: the archives and logs, and the database references to those archives and logs. Deleting the crawler from the file system leaves the database references in place, pointing to archives and logs that no longer exist, and thus creates broken links in the Site Capture interface.

36.8.3 Notes for Scheduling a Crawler

Only archive crawls can be scheduled.

- When setting a crawler schedule, consider the publishing schedule for the site and avoid overlapping the two.

- You can create multiple schedules for a single crawler: for example, one schedule to call the crawler periodically, and another to call it once at a specific date and time.

- When creating multiple schedules, ensure that they do not overlap.

36.8.4 About Monitoring a Static Crawl

To determine whether a static crawler session is in progress or completed, look for the crawler lock file in the <SC_INSTALL_DIR>/fw-site-capture/crawler/<crawlerName>/logs folder. The lock file is transient: it is created at the start of the static capture process to prevent the crawler from being called for an additional static capture, and it is deleted when the crawler session ends.
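Since the lock file exists only while a static capture is running, a script can check for it before starting another static capture or before reading the captured files. This Python sketch takes the crawler's logs folder as a parameter. The lock file's exact name is not given in this chapter, so the *.lock pattern is an assumption to verify and adjust on your system.

```python
import sys
from pathlib import Path


def static_crawl_in_progress(logs_dir, lock_glob="*.lock"):
    """Return True if a lock file is present in the crawler's logs folder.

    lock_glob is an assumed pattern: check the actual lock file name
    on your Site Capture host and adjust it."""
    return any(p.is_file() for p in Path(logs_dir).glob(lock_glob))


if __name__ == "__main__":
    # Usage: python check_lock.py <path to the crawler's logs folder>
    busy = static_crawl_in_progress(sys.argv[1])
    print("static capture in progress" if busy else "no static capture running")
```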

36.8.5 About Stopping a Crawl

Before running a crawler, consider the number of links to be crawled and the crawl depth, both of which determine the duration of the crawler session.

- If you must terminate an archive crawl, use the Site Capture interface (select Stop Archive on the Job Details form).

- If you must terminate a static crawl, you must stop the application server.

36.8.6 About Downloading Archives

Avoid downloading large archive files (exceeding 250 MB) from the Site Capture interface. Instead, use the getPostExecutionCommand method to copy the files from the Site Capture file system to your preferred location.

You can obtain the archive size from the crawler report on the Job Details form. Paths to the Job Details form are shown in the figure in About Managing Archived Sites. See getPostExecutionCommand in Developing with Oracle WebCenter Sites.
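As an illustration of the kind of copy step a post-execution command could run, the following Python script copies archive zip files out of a crawler's archive folder (organized as yyyy/mm/dd) into another location, keeping the folder structure and skipping files already copied. The script name, its arguments, and the destination are hypothetical; how a command is actually wired into CrawlerConfigurator.groovy is described under getPostExecutionCommand in Developing with Oracle WebCenter Sites.

```python
import shutil
import sys
from pathlib import Path

# 250 MB threshold from the guidance above, taken here as 250 * 1024 * 1024 bytes.
LARGE = 250 * 1024 * 1024


def copy_archives(archive_dir, dest_dir):
    """Copy every zip under archive_dir to dest_dir, keeping the relative
    yyyy/mm/dd folders. Files already present with the same size are
    skipped. Returns the list of newly copied target paths."""
    archive_dir, dest_dir = Path(archive_dir), Path(dest_dir)
    copied = []
    for src in sorted(archive_dir.rglob("*.zip")):
        target = dest_dir / src.relative_to(archive_dir)
        if target.exists() and target.stat().st_size == src.stat().st_size:
            continue  # already copied on an earlier run
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, target)  # copy2 also preserves timestamps
        copied.append(target)
    return copied


if __name__ == "__main__":
    # Usage: python copy_archives.py <crawler archive folder> <destination>
    for path in copy_archives(sys.argv[1], sys.argv[2]):
        size = path.stat().st_size
        flag = " (over 250 MB)" if size > LARGE else ""
        print(f"copied {path} [{size} bytes]{flag}")
```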

36.8.7 Notes About Previewing Sites

If your archived site contains links to external domains, its preview is likely to include those links, especially when the crawl depth and number of links to crawl are set to large values (in the groovy file). Although the external domains can be browsed, they are not archived.
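To anticipate which links in a preview lead outside the archive, you can sort a page's links by host. This Python sketch is a hypothetical helper that treats any link on a host other than the starting URI's host as external; it assumes subdomains count as different sites, which you may want to relax for your own target site.

```python
from urllib.parse import urlsplit


def split_internal_external(start_uri, links):
    """Partition links into those on the start URI's host (internal) and
    those on other hosts (external). External pages may appear in a
    preview, but they are not part of the archive."""
    home = urlsplit(start_uri).hostname
    internal, external = [], []
    for link in links:
        host = urlsplit(link).hostname  # lowercased; None for relative links
        # Relative links have no host and belong to the same site.
        (internal if host in (None, home) else external).append(link)
    return internal, external
```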

36.8.8 Tips for Configuring Publishing Destination Definitions

- If you are running publishing-triggered site capture, you can set crawler parameters in a single statement on the publishing destination definition:

  CRAWLERCONFIG=crawler1;crawler2&CRAWLERMODE=dynamic

- While you can specify multiple crawlers on a publishing destination definition, you can set one capture mode only. All crawlers run in that mode. To run some crawlers in a different mode, configure another publishing destination definition.

36.8.9 About Accessing Log Files

- For statically captured sites, log files are available in the Site Capture file system only:

  - The inventory.db file, which lists statically crawled URLs, is located in the /fw-site-capture/crawler/<crawlerName> folder.

    Note:

    The inventory.db file is used by the Site Capture system. It must not be deleted or modified.

  - The crawler.log file is located in the <SC_INSTALL_DIR>/fw-site-capture/logs/ folder. (The crawler.log file uses the term "VirtualHost" to mean "crawler.")

- For statically captured and archived sites, a common set of log files exists in the Site Capture file system:

  - audit.log, which lists the crawled URLs, timestamps, crawl depth, HTTP status, and download time.

  - links.txt, which lists the crawled URLs.

  - report.txt, which is the crawler report.

  The files named above are located in the following folder:

  /fw-site-capture/crawler/<crawlerName>/logs/yyyy/mm/dd

  Note:

  For archived sites, report.txt is also available in the Site Capture interface, on the Job Details form, where it is called the Crawler Report. (Paths to the Job Details form are shown in the figure in About Managing Archived Sites.)

- The archive process also generates a URL log for every crawl. The log is available in two places:

  - In the Site Capture file system, where it is called __inventory.db. This file is located within the zip file in the following folder:

    /fw-site-capture/crawler/<crawlerName>/archive/yyyy/mm/dd

    Note:

    The __inventory.db file is used by the Site Capture system. It must not be deleted or modified.

  - In the Site Capture interface, in the Archived URLs form (whose path is shown in the figure in About Managing Archived Sites).

36.9 General Directory Structure

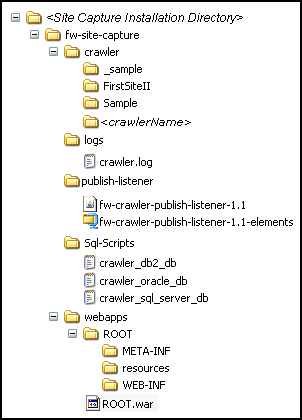

The Site Capture file system provides the framework in which Site Capture organizes custom crawlers and their captured content. The file system is created during the Site Capture installation process to store installation-related files, property files, sample crawlers, and sample code used by the FirstSiteII crawler to control its site capture process.

The following figure shows the folders most frequently accessed in Site Capture to help administrators find commonly used Site Capture information. All folders, except for <crawlerName>, are created during the Site Capture installation process. For information about <crawlerName> folders, see the following table and Custom Folders.

Table 36-3 Site Capture Frequently Accessed Folders

| Folder | Description |
|---|---|
|  | The parent folder. |
| /crawler | Contains all Site Capture crawlers, each stored in its own crawler-specific folder. |
|  | Contains the source code for the FirstSiteII sample crawler. Note: Folder names beginning with the underscore character ("_") are not treated as crawlers. They are not displayed in the Site Capture interface. |
| /Sample | Represents a crawler named "Sample." This folder is created only if the "Sample" crawler is installed during the Site Capture installation process. When the Sample crawler is called in static or archive mode, subfolders are created within the Sample folder to store the captures. |
|  | Contains the |
|  | Contains the following files required for installing Site Capture for publishing-triggered crawls: |
|  | Contains the following scripts, which create database tables that are required by Site Capture to store its data: |
|  | Contains the |
|  | Contains the |
|  | Contains the following files: |

36.10 Custom Folders

A custom folder is created for every crawler that a user creates in the Site Capture interface. The custom folder, <crawlerName>, is used to organize the configuration file, captures, and logs for the crawler, as summarized in the following figure.

Figure 36-8 Site Capture Custom Folders: <crawlerName>
