15 Managing Database Growth

This chapter includes the following sections:

-

Deleting Large Numbers of Flow Instances, Adapter Reports, and Fault Alerts

-

Removing Records from the Runtime Tables Without Dropping the Tables

Note:

Table partitioning is an advanced database task and must only be performed by an experienced database administrator (DBA).

For additional information about troubleshooting database growth issues, see Parallel Purging and Table Partitioning Issues, Extending Tablespaces to Avoid Problems at Runtime, and Resolving Database Growth Issues Caused by a High Volume of Transactions.

For information about database growth management strategies, see Developing a Database Growth Management Strategy.

Introduction to Managing Database Growth

When the amount of data in the Oracle SOA Suite database grows very large, maintaining the database can become difficult. To address this challenge, several methods for managing database growth are provided, as described in Table 15-1.

Table 15-1 Database Growth Strategies

| Environment | Use | See Section |

|---|---|---|

|

Small development installations with more than 100K rows in the database |

Auto Purge page of Oracle Enterprise Manager Fusion Middleware Control or the looped purge script |

Deleting Large Numbers of Instances with Oracle Enterprise Manager Fusion Middleware Control or |

|

Medium installations that generate less than 10 GB of data per day and retain less than 500 GB of data |

Auto Purge page of Oracle Enterprise Manager Fusion Middleware Control or a scheduled parallel purge with optimal thread count |

Deleting Large Numbers of Instances with Oracle Enterprise Manager Fusion Middleware Control or |

|

Large installations that generate more than 10 GB of data per day or retain more than 500 GB of data |

|

Deleting Large Numbers of Flow Instances, Adapter Reports, and Fault Alerts |

|

Truncate scripts |

Removing Records from the Runtime Tables Without Dropping the Tables |

Developing a Purging and Partitioning Methodology

This section summarizes the main points into an action plan that you can follow to purge and partition the dehydration store. Purging is an essential part of any plan and should be performed when data is consuming too much space or you have some other reason for removing the data.

There are three main strategies for reducing the size of the schemas:

-

Purge script, which can be executed through either of the following methods:

-

Automatically from the Auto Purge page in Oracle Enterprise Manager Fusion Middleware Control

-

Manually in SQL*Plus

-

-

Purge script + partitioning (or, more correctly, dropping table partitions)

-

Partitioning all tables

The purge script uses standard SQL DELETE statements to remove rows from the BPEL tables. For most sites, this is sufficient. However, some sites accumulate so much data that the purge script takes too long to run. In this case, partitioning becomes the better solution. The trade off is that partitioning involves significantly more database maintenance. Moreover, partitioning is an advanced technique and requires a knowledgeable and skilled DBA. By contrast, running the purge script is straightforward and does not require significant DBA knowledge.

Try to profile the input messages, database growth rate, and how much data is purged in the purge process. If the input rate and purge rate match, then regular purging is sufficient. Otherwise, consider partitioning.

If you use partitioning, Oracle recommends that you add disk space and eventually drop the partition. However, this creates additional requirements for managing disk capacity, deciding on the correct partition size, and so on. Do not use partitioning and then rely on the purge script to reclaim disk space.

Note:

Partitioning functionality is available only if you purchase the Oracle Partitioning option in Oracle Database.

Deleting Large Numbers of Flow Instances, Adapter Reports, and Fault Alerts

You can delete flow instances, adapter reports, and fault alerts with the purge scripts (invoked either automatically from the Auto Purge page in Oracle Enterprise Manager Fusion Middleware Control or manually in SQL*Plus).

Note the following details:

-

The purge scripts delete instances that have completed or are in error (have faulted). For more information, see Purge States.

-

The purge scripts do not delete instances that are in-flight or can be recovered (are in a recovery required state).

-

The purge scripts delete all Oracle SOA Suite-related tables except for Oracle B2B. If you have an installation in which Oracle SOA Suite and Oracle B2B are co-located, ensure that you also invoke the Oracle B2B purge scripts. If you have separate Oracle SOA Suite and Oracle B2B installations, you must run only the appropriate purge scripts on the respective product schemas. For information about purging Oracle B2B, see Purging Data and B2B Command-Line Tools in Using Oracle B2B.

-

Beginning with 12c (12.1.3), Oracle Enterprise Manager Fusion Middleware Control and the purge scripts delete the

MEDIATOR_RESEQUENCER_MESSAGEandMEDIATOR_GROUP_STATUStables. -

Installers must configure the database scheduler timezone properly or the purge job may run at unexpected times.

-

You can delete the following tables by running the purge scripts from SQL*Plus or from the Auto Purge page in Oracle Enterprise Manager Fusion Middleware Control:

-

MEDIATOR_GROUP_STATUS -

EIS_CONNECTION_DOWN_TIMEandMESSAGE_STATISTICS(JCA adapter reports). -

FAULT_ALERT

-

-

Group information for resequencing groups is not deleted. Groups contain the necessary information about the next sequence ID for that group. Purging this information is the same as starting the group from the initial sequence ID, which may not be your intent.

The following sections describe how to invoke the purge scripts from the Auto Purge page in Oracle Enterprise Manager Fusion Middleware Control or from SQL*Plus to delete flow instances, adapter reports, and fault alerts:

Note:

There is no purge script support on the IBM DB2 database.

Purge States

Instances in the following states are purged with the Oracle Enterprise Manager Fusion Middleware Control or the purge scripts:

-

Completed successfully

-

Faulted

-

Terminated by user

-

Aborted

-

Unknown (instance tracking is disabled)

Purging of the following instance states is not supported:

-

Instances pending recovery at the BPEL process service engine level or SOA composite application level

-

Running instances

To purge these instances, you must first move them to one of the instance states supported by the purge scripts.

Deleting Large Numbers of Instances with Oracle Enterprise Manager Fusion Middleware Control

Use the Auto Purge page in Oracle Enterprise Manager Fusion Middleware Control to schedule and execute jobs that automatically remove older flow instances, adapter reports, and fault alerts data from the database.

Note:

-

Oracle recommends that you enable automatic purging on the Auto Purge page to optimize runtime environment performance. The status of automatic purging is displayed in the Key Configuration section of the Dashboard page at the SOA Infrastructure and individual partition levels. Automatic purging is automatically enabled for new 12c installations, but not for upgraded environments.

-

Ensure that you back up important data before enabling or changing purge configurations.

-

The Auto Purge page is not available if you are using the Java database included with the SOA Developer Install option. Use the

truncate_soa_javadb.sqlscript to purge the database.

-

Access this page through one of the following options:

From the SOA Infrastructure Menu... From the SOA Folder in the Navigator... From the Key Configuration Section of the Dashboard Page... From the Key Configuration Section of the Dashboard Page... -

Select SOA Administration > Auto Purge.

-

Right-click soa-infra.

-

Select SOA Administration > Auto Purge.

-

Click the Related Links icon.

-

Select Auto Purge.

-

Click the icon to the right of Auto Purge Status.

-

In the message that is displayed, click Set Up Auto Purge.

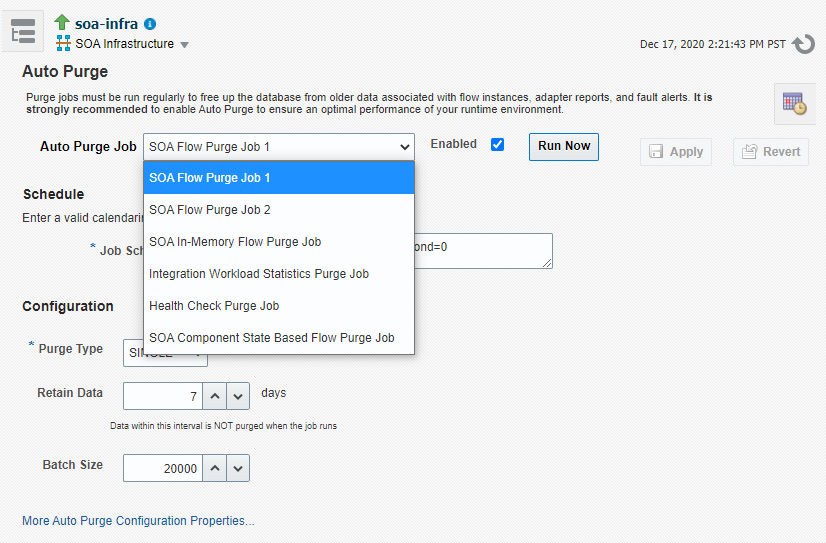

The Auto Purge page is displayed.

-

-

Select values appropriate to your environment, and click Apply.

Field Description Auto Purge Job

Select a predefined database purge job to run:

-

SOA Flow Purge Job 1 to purge on a schedule appropriate for weekdays (Monday through Friday at midnight). This job is automatically enabled.

-

SOA Flow Purge Job 2 to purge on a weekend schedule (Saturday and Sunday) that may be more aggressive.

-

SOA In-Memory Flow Purge Job to purge in-memory flow-related records. This purges only the in-memory instances that are persisted to the database. This job is enabled when you set

inMemoryEnvironmenttotruein the SOA common properties in Oracle Enterprise Manager Fusion Middleware Control. -

Integration Workload Statistics Purge Job to purge the Integration Workload Statistics (IWS) snapshot data from the SOA database. By default, the job is scheduled to run daily at midnight. For information about IWS reports, see Monitoring and Troubleshooting SOA-Wide Issues Using IWS Reports.

-

Health Check Purge Job to purge SOA Health Check reports. For information about SOA Health Check, see Using SOA Health Check.

-

SOA Component State Based Flow Purge Job to purge flows and related tables based on the states of the components (other purge jobs are based on the flow instance state). This handles cases where the flow instance state is not in sync or turned off. This job is enabled when you set

CaptureFlowInstanceStatetofalsein the SOA common properties in Oracle Enterprise Manager Fusion Middleware Control.

Note: You cannot add jobs.

Warning: When you enable or disable an auto purge job, you must save or revert your changes before selecting a different job or navigating away from this page. Otherwise, any unsaved changes made to the currently selected job are lost.

Enabled

Select to enable automatic database purging with the database purge job selected from the Auto Purge Job list.

The purge interval begins when it is enabled. For example, If you specify seven days in the Retain Data field, data is retained from the day you re-enabled this checkbox. Newer data is retained for seven days from when it was created.

Calendaring Expression icon

Click to view examples of job scheduling syntax. Copy and paste the syntax appropriate to your environment into the Job Schedule field and modify as necessary. Click More Info to access documentation about how to configure job frequency by setting the

repeat_intervalattribute.Job Schedule

Specify a job execution schedule to purge instances. The default schedule is to perform a daily purge at midnight. This is a required field. To specify the schedule, use valid calendaring expressions. Click the information icon or the Calendaring Expression icon to view examples of commonly used expressions. The scheduling syntax is not case sensitive.

Purge Type

Select the type of purge script to run. This is a required field.

-

Single: Single, loop purged script that performs a batched purge.

-

Parallel: Functionality is the same as the single, looped purge script. However, this option enables the

dbms_schedulerpackage to spawn multiple purge jobs, with each job working on subset data.Note: If you have a multiple CPU host, use of the parallel script can be beneficial. However, Oracle recommends that you enable the parallel script only during off hours. In addition, when purging data during off hours, Oracle recommends that you drop indexes before purging large amounts of data and then add the indexes back in. This speeds up the purge process, and also keeps indexes from becoming unbalanced.

For more information about the single (looped) and purge parallel scripts, see Looped Purge Script and Looped Purge in Parallel Script with dbms_scheduler.

Retain Data

Specify the time interval in days for which to retain data. Data within this interval is not purged when the job runs. The default value is seven days. For example, if you specify a data retention interval of seven days, data is protected from purging for the seven days since it was created. Older data already in the system is retained seven days from when automatic purging was enabled.You can ignore the retain data filter by setting this property to

-1.Maximum Flows to Purge

Select the maximum number of instance flows to purge in a single job run.

Batch Size

Select the maximum number of business flows to delete at a time. The default value is

20000.This field is displayed if Parallel is selected from the Purge Type list.

Degree of Parallel

Select the number of job executions to run in parallel. The default value is

4.This field is displayed if Parallel is selected from the Purge Type list.

-

-

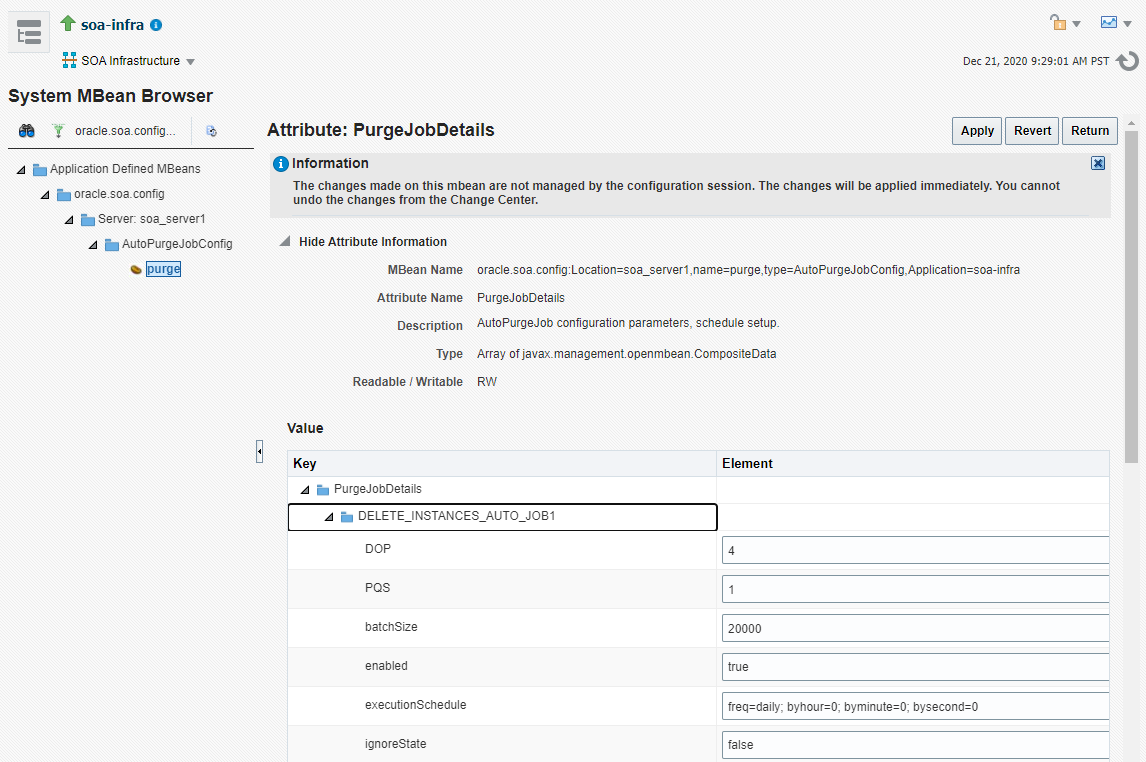

To view and configure advanced configuration properties in the System MBean Browser, click More Auto Purge Configuration Properties.

-

Click PurgeJobDetails.

-

Expand a job to display all properties for single and parallel purges. When either the single or parallel purge type is executed, the appropriate property values for the selected type are executed.

Note:

If you need to edit the advanced purge properties, do so with extreme care. For example, do not change the job names.

-

View or change values, then click Apply.

Field Description DOP

Defines the number of job executions to run in parallel. The default value is

4.PQS

Displays the number of parallel query slaves. You can add additional slaves to improve performance of expensive SQL commands.

batchSize

Displays the maximum number of business flows to delete at a time. The default value is

20000.Enabled

Indicates if the database purge job is enabled.

executionSchedule

Displays the job scheduling syntax.

ignoreState

When set to

true, purges all open and closed instances within the specified date range. The default value isfalse.Note: Use this parameter cautiously because purging open instances may leave your system in an inconsistent state.

maxCount

Displays the maximum number of flows to purge.

maxCreationPeriodDays

Displays the maximum creation period in days. This property is used with minCreationPeriodDays for selecting the flows created between a certain period.

maxRuntime

Expiration at which the purge script exits the loop. The default value is

60. This value is specified in minutes.minCreationPeriodDays

Displays the minimum creation period in days. This property is used with maxCreationPeriodDays for selecting the flows created between a certain period.

purgePartitionedComponent

Indicates if partitioned tables should be purged. If set to

true, the same purge job is invoked to delete partitioned data. The default value isfalse.Note: If tables are partitioned, you do not want to purge them because they are maintained by a

DROPstatement.purgeType

Displays either single or parallel.

retentionPeriodDays

Specifies the time interval in days for which to retain data. Data within this interval is not purged when the purge job runs.

sqlTrace

If set to

true, indicates that SQL Trace is set.For information about SQL Trace, see the Oracle Database SQL Tuning Guide.

Note:

Retention period does not operate at the granularity of hours. So, if the retention period is set to 1 day, it does not mean that a job will be purged within 24 hours of its completion. Instead, it can take up to 48 hours (that is, with a retention period of 1 day, if a flow completes at 12:01am on the 20th, it will not be purged until 12:00am on the 22nd).

Deleting Large Numbers of Instances with SQL*Plus

Note:

When upgrading Oracle SOA Suite Release 11g to 12c, do not start the Upgrade Assistant while the purge scripts are running. Wait until the purge is complete before starting the upgrade process. The upgrade fails if the purge scripts are running while using the Upgrade Assistant to upgrade your schemas. For more information about upgrading, see Upgrading Oracle SOA Suite and Business Process Management.

You can run the purge scripts in SQL*Plus to automatically remove older flow instances, adapter reports, and fault alerts data from the database. There are two types of purge scripts:

-

Looped purge script

-

Looped purge in parallel script with

dbms_scheduler

Looped Purge Script

The master purge script includes a looping construct that allows for a batched purge. You can also provide this script with a max_runtime parameter that stops looping after the value for this parameter is exceeded.

The master script drives the purge of SOA database tables. You can use the delete_instances procedure to purge SOA database tables.

Note:

Set max_runtime to a higher value if there are many instances to purge. In this case, you should expect to wait for a longer time before the script exits. Alternatively, use a smaller batch size if you want the purge script to exit sooner.

delete_instances Procedure

Use the delete_instances procedure to delete instances. The following example shows the syntax:

procedure delete_instances (

min_creation_date in timestamp,

max_creation_date in timestamp,

batch_size in integer,

max_runtime in integer,

retention_period in timestamp,

purge_partitioned_component in boolean,

ignore_state in boolean,

composite_name in varchar2,

composite_revision in varchar2,

soa_partition_name in varchar2,

sql_trace in boolean,

PQS integer,

keep_workflow_inst in boolean,

compbased in boolean

);

Table 15-2 describes the script parameters.

Table 15-2 delete_instances Procedure Parameter Descriptions

| Parameter | Description |

|---|---|

|

|

Minimum creation period in days for the business flow instances. |

|

|

Maximum creation period in days for the business flow instances. |

|

|

Maximum number of flows selected for deletion and committed in one execution of the single loop purge. The default value is |

|

|

Expiration at which the purge script exits the loop. The default value is |

|

|

Specify the time interval in days for which to retain data. Data within this interval is not purged when the job runs. The default value is seven days. The retention period is based on the entire flow. The period is compared to This parameter checks for and deletes records in the Specify a retention period if you want to retain the business flow instances based on the In this example, the min_creation_date = 1st June 2011

max_creation_date = 30 June 2011

retention_period = 1st July 2011This deletes all business flow instances in which the |

|

|

Indicates if partitioned tables should be purged. If set to Note: If tables are partitioned, you do not want to purge them because they are maintained by a |

|

|

When set to Note: Use this parameter cautiously because purging open instances may leave your system in an inconsistent state. |

|

|

The name of the SOA composite application. This parameter, along with the |

|

|

The revision number of the SOA composite application. |

|

|

The partition in which the SOA composite application is included. |

|

|

When set to GRANT ALTER SESSION TO SOA_INFRA Note: Only use this parameter for debugging because it impacts performance. |

|

|

Displays the number of parallel query slaves. You can add additional slaves to improve performance of expensive SQL commands. |

keep_workflow_inst |

Default: false.

When set to true, workflow-related tables

are not purged. Only SOA tables unrelated to workflow are

purged.

|

compbased |

Default: false.

When set to true, purge is based on

component states, without regard to the flow instance

state. This is useful if

captureFlowInstanceState is set to

false, for runtime performance

improvement of parallel flows. Or, if the flow instance

state is not properly updated or in the incorrect state

even when all components are in completed state, purge can

still proceed. Note that setting this parameter to

true adds load on the purge process

as component states must be checked.

|

Note:

-

If you do not provide a value for

retention_period, the value for this property defaults to the value ofmax_creation_date(this is, ifretention_periodequalsnull, thenretention_period=max_creation_date). This consequence also applies to the script parameters described in Looped Purge in Parallel Script with dbms_scheduler. -

If you are not upgrading from Release 11g to 12c, the

max_creation_dateandmin_creation_dateparameters are optional. The purge can be run entirely byretention_period, which is also optional. -

The purge scripts are restricted to purging only the database and existing rows in a table. There is no way for the purge script to look at runtime execution. Therefore, assume you attempt an automatic recovery immediately after an active row has been deleted with the purge scripts (with the

ignore_stateparameter set totrue). Because of this, a row is created after the purge has been executed. This row remains dangling because theSCA_FLOW_INSTANCEtable row has already been deleted.

Looped Purge in Parallel Script with dbms_scheduler

This script is functionally the same as the looped purge script described in Looped Purge Script. However, this script uses the dbms_scheduler package to spawn multiple purge jobs, with each job working on subset data.

Note:

If you have a multiple CPU host, use of the parallel script can be beneficial. However, Oracle recommends that you enable the parallel script only during off hours. In addition, when purging data during off hours, Oracle recommends that you drop indexes before purging large amounts of data and then add the indexes back in. This speeds up the purge process, and also keeps indexes from becoming unbalanced.

delete_instances_in_parallel Procedure

Use the delete_instances_in_parallel procedure to delete instances

in parallel. The following example shows the

syntax.

PROCEDURE delete_instances_in_parallel (

min_creation_date in timestamp,

max_creation_date in timestamp,

batch_size in integer,

max_runtime in integer,

retention_period in integer,

DOP in integer,

max_count integer,

purge_partitioned_component in boolean,

ignore_state in boolean,

composite_name in varchar2,

composite_revision in varchar2,

soa_partition_name in varchar2,

sql_trace in boolean,

keep_workflow_inst in boolean,

compbased in boolean

);Table 15-3 describes the script parameters.

Table 15-3 delete_instances_in_parallel Procedure Parameter Descriptions

| Parameter | Description |

|---|---|

|

|

Minimum creation period in days for the business flow instances. |

|

|

Maximum creation period in days for the business flow instances. |

|

|

Maximum number of flows selected for deletion. The default value is |

|

|

Expiration time at which the purge script exits the loop. The default value is |

|

|

Specify the time interval in days for which to retain data. Data within this interval is not purged when the job runs. The default value is seven days. The retention period is based on the entire flow. The period is compared to |

|

|

Defines the number of job executions to run in parallel. The default value is |

|

|

Defines the number of rows processed (not the number of rows deleted). A big |

|

|

You can invoke the same purge to delete partitioned data. The default value is Note: If tables are partitioned, you do not want to purge them because they are maintained by a |

|

|

When set to Note: Use this parameter cautiously because purging open instances may leave your system in an inconsistent state. |

|

|

The name of the SOA composite application. This parameter, along with the |

|

|

The revision number of the SOA composite application. |

|

|

The partition in which the SOA composite application is included. |

|

|

When set to GRANT ALTER SESSION TO SOA_INFRA Note: Only use this parameter for debugging because it impacts performance. |

keep_workflow_inst |

Default:

false. When set to

true, workflow-related tables are

not purged. Only SOA tables unrelated to workflow

are purged.

|

compbased |

Default:

false. When set to

true, purge is based on component

states, without regard to the flow instance state.

This is useful if

captureFlowInstanceState is set

to false, for runtime performance

improvement of parallel flows. Or, if the flow

instance state is not properly updated or in the

incorrect state even when all components are in

completed state, purge can still proceed. Note

that setting this parameter to

true adds load on the purge

process as component states must be

checked.

|

Running the Purge Scripts

As an alternative to the steps here, you can also run these scripts by following the steps in Deleting Large Numbers of Instances with Oracle Enterprise Manager Fusion Middleware Control.

To run the purge scripts:

-

In SQL*Plus, connect to the database

ASSYSDBA:CONNECT SYS AS SYSDBA

-

Run the following SQL commands:

GRANT EXECUTE ON DBMS_LOCK TO USER; GRANT CREATE ANY JOB TO USER;

where

USERis thesoainfraaccount to run the scripts. These privileges are required to run the scripts. -

Load the purge scripts by running the main purge script at

MW_HOME/soa/common/sql/soainfra/sql/oracle/14120/soa_purge14/soa_purge_scripts.sql.For a parallel purge, the debug logs from the jobs spawned by a parallel purge are logged into files created in the directory named

SOA_PURGE_DIR. This directory must be accessible to the Oracle database. -

Create

SOA_PURGE_DIRand grant write permissions to thesoainfrauser.mkdir -p /tmp/purgelog CREATE OR REPLACE DIRECTORY SOA_PURGE_DIR AS 'SERVER_DIRECTORY'where

SERVER_DIRECTORYis the name of the directory to create (for example,'/tmp/purgelog/'). Note the required single quotes around the directory path. -

If you want to run the scripts in debug mode, run

common/debug_on.sqland setserverouttoonin SQL*Plus. This step is optional.SET SERVEROUT ON

The logs from the spawned jobs are logged into the directory created in Step 4 (separate files per job). The rest of the logs are displayed on

stdout(or thespoolfile, if configured).There are two options for purging:

-

Looped purge

-

Parallel purge

-

-

Run the purge scripts as shown below. Examples are provided for both options.

-

For looped purge:

DECLARE MAX_CREATION_DATE timestamp; MIN_CREATION_DATE timestamp; batch_size integer; max_runtime integer; retention_period timestamp; composite_name varchar2(500); composite_revision varchar2(50); soa_partition_name varchar2(200); PQS integer; ignore_state boolean; BEGIN MIN_CREATION_DATE := to_timestamp('2019-10-01','YYYY-MM-DD'); MAX_CREATION_DATE := to_timestamp('2019-10-31','YYYY-MM-DD'); max_runtime := 60; retention_period := to_timestamp('2019-10-31','YYYY-MM-DD'); batch_size := 10000; composite_name := 'example_composite'; composite_revision := '1.0'; soa_partition_name := 'default'; ignore_state := false; PQS := 5; soa.delete_instances( min_creation_date => MIN_CREATION_DATE, max_creation_date => MAX_CREATION_DATE, batch_size => batch_size, max_runtime => max_runtime, retention_period => retention_period, purge_partitioned_component => false, ignore_state => ignore_state, sql_trace => true); END; -

For parallel purge:

DECLARE max_creation_date timestamp; min_creation_date timestamp; batch_size integer; max_runtime integer; retention_period timestamp; composite_name varchar2(500); composite_revision varchar2(50); soa_partition_name varchar2(200); PQS integer; DOP integer; max_count integer; ignore_state boolean; BEGIN min_creation_date := to_timestamp('2019-10-01','YYYY-MM-DD'); max_creation_date := to_timestamp('2019-10-31','YYYY-MM-DD'); batch_size integer; max_runtime integer; retention_period := to_timestamp('2019-10-31','YYYY-MM-DD'); composite_name := 'michael_composite'; composite_revision := '1.0'; soa_partition_name := 'default'; ignore_state := true; PQS := 5; DOP := 2; max_count := 100000; soa.delete_instances_in_parallel( min_creation_date => min_creation_date, max_creation_date => max_creation_date, batch_size => batch_size, max_runtime => max_runtime, retention_period => retention_period, DOP => DOP, max_count => max_count, purge_partitioned_component => false, ignore_state => ignore_state, sql_trace => true); END;

-

Resolving Dead Locks After Running the Looped Purge in Parallel Script

You may observe a dead lock in the thread logs for one thread after running the looped purge in parallel script. The following example shows the error found in the thread logs:

SOA_PURGE_LOG_THREAD1 (total of 4 threads) 17-JUL-2012 03:03:48 : Purge AUDIT_DETAILS. Error Code = -60, Error Message = ORA-00060: deadlock detected while waiting for resource 17-JUL-2012 03:03:48 : ERROR(delete_inst_in_parallel_job. Error Code = -60, Error Message = ORA-00060: deadlock detected while waiting for resource

To resolve the dead lock issue, rebuild the AUDIT_DETAILS table and increase the values for either of the following:

-

Increase

PCTFREE(to allow for more interested transaction list (ITL) allocation). -

Increase

INITRANS(initial ITLs). This option is described below.

To recreate the AUDIT_DETAILS table and increase the INITRANS value:

Purging the Instances of a Specific SOA Composite Application

You can purge the instances of a specific SOA composite application and leave the instances of other composites unpurged. This action enables you to purge certain flows more frequently than others due to high volume or retention period characteristics.

The purge scripts include an option for purging based on COMPOSITE_DN. Purging based on COMPOSITE_DN is supported with the parameters composite_name and composite_revision.

The purge logic is based on flows IDs, and not COMPOSITE_IDs. Therefore, apart from the intended COMPOSITE_DNs, other composites sharing the same flow ID may get deleted. The following scenarios may occur:

-

A business flow instance is closed, but the flow is still open:

In a scenario in which composite A calls composite B, the purge intends to delete instances of composite A. However, there may be a case in which an instance of composite A is closed, but the corresponding composite B instance is still open. Therefore, because the overall flow is still in an open state, the composite A instance (even though closed) is not purged.

-

The business flow instance is closed and the flow is also closed:

Composite A again calls composite B. The purge intends to delete instances of composite A. Therefore, in a case in which composite A is closed and composite B is also closed, because the overall flow is closed, both business flow instances A and B are purged.

These scenarios maintain the consistency of the flow.

For information about the composite_name and composite_revision parameters, see Looped Purge Script and Looped Purge in Parallel Script with dbms_scheduler.

Resequenced Message Purge States for Oracle Mediator

The purge scripts include purge commands to purge the information persisted in the Oracle Mediator resequencer tables (MEDIATOR_GROUP_STATUS and MEDIATOR_RESEQUENCER_MESSAGE). The following information is purged from the resequencer tables when you run the purge scripts:

-

Completed and aborted messages for all resequencer types

-

Timed out messages for standard resequencers

-

Groups in a ready state for best effort and FIFO (first in/first out) resequencers (these are the only groups that can be purged)

To allow fault recovery and message processing to be completed, the purge scripts do not purge all resequenced message information. In addition, standard resequencer groups store information that should not be purged. The following are not purged when you run the purge scripts:

-

Faulted messages for all resequencer types

-

Running messages for all resequencer types

-

Group information for standard resequencers

-

Groups in a state other than ready for best effort and FIFO resequencers

Note:

The purge scripts for the Oracle Mediator resequencer purge messages first and then move on to groups. If there are messages for a group in the MEDIATOR_RESEQUENCER_MESSAGE table, the group cannot be deleted.

The above describes processing for both looped and parallel processing of the purge scripts, and regardless of whether instance tracking is enabled or disabled. Before any sequence groups are purged, a check is performed to verify that all messages associated with the group are processed.

Below is a list of group state codes used in the resequencer tables:

-

0: Ready

-

1: Locked

-

2: Error

-

4: Timed out

-

6: Group error

Below is a list of message state codes used in the resequencer tables:

-

0: Ready

-

1: Locked

-

2: Completed

-

3: Error

-

4: Timed out (this is ignored)

-

5: Aborted

Monitoring the Status of Purging

You can monitor purge jobs executed from Oracle Enterprise Manager Fusion Middleware Control with the SQL commands described in Table 15-4.

Table 15-4 SQL*Plus Commands for Monitoring the Status of Purging

| To... | Execute This Command... |

|---|---|

|

Show the job history and status |

SQL> select log_date, status from user_scheduler_job_ log where job_name = 'DELETE_INSTANCES_AUTO_JOB1' order by log_date;

For information about selecting the job on the Auto Purge page, see Deleting Large Numbers of Instances with Oracle Enterprise Manager Fusion Middleware Control. |

|

Show a running job |

SQL> select session_id, running_instance, elapsed_time, cpu_used from user_scheduler_running_jobs where job_name = 'DELETE_INSTANCES_AUTO_JOB1'; |

|

Show job details |

SQL> select log_date, status, req_start_date, actual_start_date, run_duration from user_scheduler_job_run_details where job_name = 'DELETE_INSTANCES_AUTO_JOB1' order by log_date; |

|

Find the job schedule |

SQL> select SCHEDULE_TYPE,START_DATE,REPEAT_INTERVAL from user_scheduler_schedules where schedule_name = 'DELETE_INSTANCES_AUTO_SCH1';

|

|

Change the default job schedule |

BEGIN

DBMS_SCHEDULER.set_attribute (

name => 'DELETE_INSTANCES_AUTO_SCH1',

attribute => 'repeat_interval',

value => 'freq=daily; byhour=0; byminute=0; bysecond=0');

END; |

|

Change the job schedule (for this example, to hourly at the thirtieth minute) |

BEGIN

DBMS_SCHEDULER.set_attribute (

name => 'DELETE_INSTANCES_AUTO_SCH1',

attribute => 'repeat_interval',

value => 'freq=hourly; byminute=30');

END; |

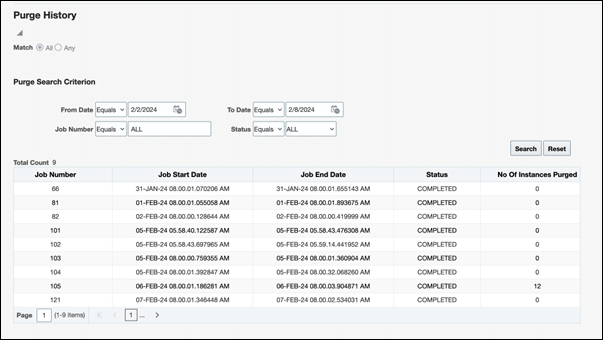

View Purge History

- Date: Specify the From Date and To Date to filter the auto purge jobs.

By default, the To Date is set to the current date, and the From Date is set to one week before the current date.

- Job Number: Specify the auto purge job number.

Note:

When you filter jobs by job number, only the job number parameter is considered. Other filter values are excluded from the search. - Status: Specify the status of the auto purge job you wish to filter. It can be either

ALL,RUNNING, orCOMPLETED.

Generating a Database SQL Trace

The purge scripts include a parameter to generate a SQL trace:

-

If running the purge scripts directly from SQL*Plus, the parameter is named a

sql_traceparameter. -

If running the purge scripts from the Auto Purge page in Oracle Enterprise Manager Fusion Middleware Control, the parameter is named sqlTrace (located beneath the More Auto Purge Configuration Properties link).

Setting this parameter to true generates a database SQL trace that is placed in the database user dump directory. For the parallel purge script, the SQL trace is also generated for each subordinate parallel purge jobs (J000, J001, and so on).

If parallel query slaves have been enabled in the purge scripts, then trace files are created. The trace files are generated when sql_trace is set. With the parallel purge script, multiple trace files are produced named J*.

For information about SQL Trace, see the Oracle Database SQL Tuning Guide.

Partitioning Component Tables

The runtime and schema code for the following components has been modified to store the flow creation date column with their transactional tables.

-

Oracle BPEL Process Manager

-

Oracle Mediator

-

Human workflow

-

Oracle B2B

-

SOA Infrastructure

-

Oracle BPM Suite

The CPST_CREATED_DATE column contains the flow creation date time populated by the instance tracking code. This is available as the normalized message property oracle.integration.platform.instance.CommonConstants.SCA_FLOW_INSTANCE_CREATED_TIME.

All SOA components are partitioned on the same partition key. These partitioned components use the same time range and partition ID.

Note:

Before performing complete or partial partitioning, run the purge scripts.

Partitioning the Database with the Repository Creation Utility

You can select a database profile when running the Repository Creation Utility. The profile determines the size of the SOA database and enables you to use existing performance features of the Oracle database for Oracle SOA Suite-related storage.

-

Large: Provides a large partitioned schema. This selection is for databases of 200 GB or more.

-

Small: Provides a small schema with no partitions.

For more information, see Section "Custom Variables" of Creating Schemas with the Repository Creation Utility.

Partitioning the Component Database Tables

Oracle SOA Suite has been instrumented with partition keys that enable DBAs to take advantage of Oracle RDBMS partitioning features and capabilities. This action enables the schema tables to be range-partitioned on time intervals. This is useful when you must reduce the database maintenance window of large tables. (Though not discussed in this chapter, this also provides for the possibility of archiving partitioned data.)

The task of partitioning the Oracle SOA Suite tables must be performed by an experienced DBA. Since partitioning tables is considered a core DBA skill, this chapter does not provide detailed, step-by-step instructions on how to partition tables. Rather, it provides the DBA with the knowledge and understanding of Oracle SOA Suite schemas and their associated scripts. With this knowledge, the DBA can customize any partitioning strategy for their environment, and incorporate any tuning parameters in response to the performance of their database. Tuning is never a one-size-fits-all proposition or a one-off configuration change. Rather, it is an iterative process of monitoring and tuning.

The following components are associated with their own database schemas:

-

Oracle BPEL Process Manager

-

Oracle Mediator

-

Human workflow

-

Oracle B2B

-

SOA Infrastructure

-

Oracle BPM Suite

For more information about table partitioning, see the Oracle database administration documentation library located at the following URL:

http://www.oracle.com/technetwork/indexes/documentation/index.htmlNote:

-

A hash subpartition is an option the DBA may want to explore, especially for tables with large object (LOB) segments. This can assist with high water (HW) enqueue contention.

-

A global hash index on primary keys that are monotonically increasing (like

CIKEY) may relieve block contention.

Referential Integrity and Equipartioning

For performance reasons, the Oracle BPEL Process Manager, Oracle Mediator, human workflow, Oracle B2B, SOA Infrastructure, and Oracle BPM Suite schemas have no foreign key constraints to enforce integrity. This fact discounts the use of the RDBMS feature known as referential partitioning. This feature provides significant benefits because it equipartitions master and detail tables across foreign key constraints. Equipartioning means that the associated dependent table rows are in a database partition with the same partition key interval as their master table rows.

One benefit of this feature is that the state (for example, completed, faulted, and so on) of each detail row in the equipartition can be inferred from its associated master table row.

Although the RDBMS referential partitioning feature cannot be used, similar behavior can be mimicked to achieve some of the same benefits. The Oracle BPEL Process Manager, Oracle Mediator, human workflow, Oracle B2B, SOA Infrastructure, and Oracle BPM Suite components ensure that the partition key of every detail table row is the same as the partition key of its master table row (that is, the date (timestamp) that is the partition key is pushed down). To then complete the setup, the DBA must ensure that the master and detail tables are range-partitioned on the same intervals. Some examples are provided in subsequent sections of this chapter.

Note:

You may decide that referential integrity of aged partitions is not a concern for your site. For example, the site may have ample disk space, allowing data to significantly age, or there may be no apparent, adverse impact of allowing unreferenced data to be stored in the dependent tables.

Range Interval Partitioning

Range interval partitioning is a Release 12c extension of the Release 11g range partitioning feature. With range partitioning, you had to manually allocate each partition. With range interval partitioning, you do not need to manually allocate partitions. Partitions of a specified interval are automatically created when the interval value of the partition key that you assign exceeds all of the existing range partitions. The verification scripts support range interval partitioning.

For more information about range interval partitioning, see Oracle Database VLDB and Partitioning Guide.

Equipartitioning and Range Interval Partitioning

A goal of equipartitioning is to ensure that all dependent table partitions contain the complete set of associated rows for their master table partitions. This is accomplished as follows:

-

A partition key value is propagated down from the master key to all dependents so that they have the same range.

-

The master key contains the state of each flow (open, closed). When the master is checked and everything is closed, dependent tables with the same partition range can be dropped.

The range interval partitioning verification scripts check the following for each dependent table partition against the master partition:

-

The same interval definition (each table requires the same interval definition for monthly, weekly, number of days, and so on).

-

The upper and lower bound high values are automatically maintained by the database.

-

-

Partitions that must be dropped require the same upper bound high value.

Range Interval Partitioning Example

The following example describes how range interval partitioning works. For this example, the SCA_FLOW_INSTANCE master table is used.

CREATE TABLE SCA_FLOW_INSTANCE

(FLOW_ID INTEGER NOT NULL;

. . .

. . .

CREATED_TIME TIMESTAMP NOT NULL)

PARTITION BY RANGE (CREATED_TIME)

INTERVAL(NUMTOYMINTERVAL(1, 'MONTH'))

PARTITION p0 VALUES LESS THAN (TO_DATE('1-2-2007', 'DD-MM-YYYY')));

Table 15-5 describes the syntax shown in the preceding example.

Table 15-5 Range Interval Partitioning Example

| Syntax | Description |

|---|---|

PARTITION BY RANGE (CREATED_TIME) |

The partition key (for example, a date of July 1, 2013, which is specified as |

INTERVAL(NUMTOYMINTERVAL(1, 'MONTH')) |

The interval (for this example, one month is specified). Therefore, if a partition key is specified for July 1 (as Note: All Oracle SOA Suite tables must be created with this same interval value so that the upper and lower bounds are created with respect to equipartitioning. |

PARTITION p0 VALUES LESS THAN (TO_DATE

('1-2-2007', 'DD-MM-YYYY'))); |

The first partition must be a range partition. This is the transition point after which all partitions are automatically allocated. It is recommended that the first range partition have a The date for this partition makes it virtual metadata. This date makes it easier to drop the partition, if necessary. |

The SQL command shown in the following example identifies the first range partition created. A value of No in the last column indicates that this is a range partition, and not an interval partition.

SQL> select partition position, partition name, high value, interval

from user_tab_partitions

where table_name = 'SCA_FLOW_INSTANCE';

--------------------------------------------------------------

1 P0 TIMESTAMP' 2007-02-01 00:00:00' No

The SQL command shown in the following example identifies the system name, the upper bound high value, and whether this is an interval partition. A value of Yes in the last column indicates that this is an interval partition. The second row in the output is dropped. The first row is simply metadata and is not dropped. The partitions are automatically allocated as you insert the date. System names are automatically generated and partitions are allocated as necessary based on the date.

SQL> INSERT INTO SCA_FLOW_INSTANCE VALUES(..TO_TIMESTAMP('1-5-2013', 'DD-MM-YYYY')...};

--------------------------------------------------------------

1 P0 TIMESTAMP' 2007-02-01 00:00:00' No

2 SYS_P532 TIMESTAMP' 2013-06-01 00:00:00' Yes

The SQL command shown in the following example identifies the second and third rows as the partitions to drop. Because this is performed over an earlier month (March 2013 instead of May 2013), another partition is inserted. The positions of the partitions are changed.

SQL> INSERT INTO SCA_FLOW_INSTANCE VALUES(..TO_TIMESTAMP('1-3-2013', 'DD-MM-YYYY')...};

--------------------------------------------------------------

1 P0 TIMESTAMP' 2007-02-01 00:00:00' No

2 SYS_P578 TIMESTAMP' 2013-04-01 00:00:00' Yes

3 SYS_P532 TIMESTAMP' 2013-06-01 00:00:00' Yes

Introduction to Partition Key Selection

The following factors were considered when selecting the schema partition keys:

-

Convey or imply state (for example, completed) for referential integrity

-

Allow range partitioning on time intervals for maintenance operations

-

Be static to avoid row movement that may lead to unreferenced data

-

Be static to avoid row movement when table maintenance operations are performed

-

Provide performance benefits for console queries through partition pruning

Configuring Partitions

Partitioning is not configured by default; it is a postinstallation step that must be performed manually. Once you decide to implement partitioning of the database, you must perform some initial configuration tasks only once:

-

Using the information in this chapter, decide which groups you want to partition.

-

For each of those groups, decide which tables you want to partition, remembering that there are some mandatory tables in each group that must be partitioned.

-

For each group, decide on the partition interval.

-

Create the partition scripts to partition the Oracle SOA Suite schemas. No scripts are supplied; each DBA is responsible for creating the partition scripts appropriate for their environment.

Introduction to the Verification Script

A verification script is provided for a DBA to identify when to drop a partition and its equipartitioned dependent table. The verification script also identifies if there are active, long running instances. You can then move these instances to a different partition, and then drop the original partition. The verification scripts support range interval partitioning.

Note:

The verification script does not drop any partitions; it just ensures that partitions are eligible to be dropped. It is important to enable partitioning for all tables for the scripts to get correct data

Component Tables

This section describes partitioning constraints and lists the component tables, the groups to which they belong, and their partition key.

Partitioning Constraints

Note the following table partitioning constraints:

-

You have the choice of the following approach to partitioning:

-

Complete partitioning: All tables of a service component/service engine are partitioned.

-

No partitioning: No tables of a service component/service engine are partitioned.

-

Partial partitioning: Restrict partitioning to specific tables with a high growth rate.

You can partition any of the tables by following these constraints:

-

If you want to partition a dependent table, you must also partition its master table.

-

All tables should be equipartitioned along the same date ranges and the same name.

-

Always partition the

SCA_FLOW_INSTANCEtable. This constraint is essential when the Audit Level property is set to Development or Production for any of the composites. The verification script checks for active flows based on the active business flow instances within that partition. Therefore, if theSCA_FLOW_INSTANCEtable is not partitioned, the entire verification script logic based on the equipartitioning of all the tables fails.

-

-

-

Regardless of the group and component, all tables that are partitioned use the same time range and the partition ID.

Component Tables, Range Partition Keys, and Groups

Table 15-6 through Table 15-11 are divided into three groups.

-

Group 1: This includes tables that are directly related to the end-to-end flow trace of a composite. A majority of the tables fall into this group.

-

Group 1A: This includes a small set of tables that are not directly related to the flow trace.

-

Group 2: This includes a small set of tables that have a dependency on multiple tables from Group 1 and 1A tables. You must first execute the group 1 verification script and drop the group 1 partitions before running the group 2 verification script.

Note:

Groups 1 and 1A are combined in the verification script. Running the verification script does not require you to have knowledge of this classification.

Table 15-6 Component: SOA Infrastructure

| Table | Range Partition Key | Group |

|---|---|---|

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

Table 15-7 Component: Oracle BPEL Process Manager

| Table | Range Partition Key | Group |

|---|---|---|

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1A |

|

|

|

1A |

|

|

|

1A |

|

|

|

2 |

Table 15-8 Component: Oracle Mediator

| Table Name | Range Partition Key | Group |

|---|---|---|

|

|

|

1 |

|

|

|

2 |

Table 15-9 Component: Human Workflow

| Table | Range Partition Key | Group |

|---|---|---|

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

Table 15-10 Component: Oracle B2B

| Table | Range Partition Key | Group |

|---|---|---|

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

Table 15-11 Component: Oracle BPM Suite

| Table | Range Partition Key | Group |

|---|---|---|

|

|

|

1 |

|

|

|

1 |

|

|

|

1 |

Equipartitioning and Interval Partitioning Verification Script Checks

The verification script uses following two tables when performing checking:

-

USER_PART_TABLES -

USER_TAB_PARTITIONS

To check the interval definition that you defined for your table:

SQL> select INTERVAL from USER_PART_TABLES

where table_name = 'table_name';

To check the upper bound high value for a partition:

SQL> select high_value from USER_TAB_PARTITIONS

where table_name = 'table_name'

and partition_name = 'partition_name';Running the Verification Script

MW_HOME/SOA_ORACLE_HOME/rcu/integration/soainfra/sql/verify. There are two versions of the verification script, depending on whether your schema is Interval partitioned or Range partitioned:

-

soa_exec_interval_verify.sql -

soa_exec_verify.sql

The verification script helps determine if a partition can be dropped. When you execute the verification script, a log file and a result file is generated for each partition. The log file should be examined by the database administrator to assess if a partition can be dropped by running the result file.

On examining the log file, the database administrator might find that there are too many active instances, and decide to let the partition age further. If the database administrator finds that only a small number of instances are open, say 5%, the administrator may decide to execute row movement to move these instances to another partition. Once the active instances in a partition have been moved to another partition, the partition can be dropped.

To execute the verification script:

Note:

A verification script is not provided for business rules.

Moving Active, Long Running Instances to a Different Partition

Moving rows is expensive, as the row movement procedure needs to move the rows in all the equi-partitioned tables. When planning a partitioning strategy, ensure that the partitions are allowed to age sufficiently, so that the percentage of open instances is less than 5% of the total number of rows in the partition.

Routines to Assist with Partition Maintenance

Oracle SOA Suite includes several routines to assist with the database side of partition maintenance. Routines include scripts to enable and disable foreign keys, alter intervals for interval-partitioned tables, and restore rows after row movement.

The row movement and other partition maintenance routines were included in 12.1.3 through patch 21181834. The routines have been included in 12.2.1 through patch 21520523. The following routines are described below:

Row Restore (verify_soa.exec_row_restore_1)

This routine is used to restore the Group 1 table partition keys back to their original values. You can run the row restore routine once the flows have been row-migrated, and the appropriate partitions have been dropped. Once the routine completes successfully, you can execute the Row Restore Truncate routine.

BEGIN

verify_soa.exec_row_restore_1;

END;Row Restore Truncate (verify_soa.trunc_verify1_rst_temp_tables)

This routine truncates the table verify_r_group1. Run this only after the Row Restore routine has been successfully run. The Row Restore routine should be repeated until successful. Once you run Row Restore Truncate, the Row Restore routine cannot be repeated. (NOTE: Consider backing up table verify_r_group1 prior to the truncation).

Note:

Consider backing up the table verify_r_group1 before running the truncate routine.

BEGIN

verify_soa.trunc_verify1_rst_temp_tables;

END;Alter Interval (verify_soa.alter_interval)

Use this routine to alter the interval for Interval partitioned tables. The routine generates a SQL script in the PART_DIR directory. The SQL script can then be executed by the SOAINFRA user.

Step 1: Generates the SQL Script

set echo on;

set serverout on;

/*

NUMTOYMINTERVAL(1, ''MONTH'')

NUMTODSINTERVAL(1, ''DAY'')

NUMTODSINTERVAL(7, ''DAY'')

*/

begin

verify_soa.alter_interval('NUMTODSINTERVAL(1, ''DAY'')');

end;

Step 2: Runs the Generated SQL Script

SQL> @SOA_ALTER_INTERVAL_GROUP1.SQLAlter Foreign Keys (verify_soa.alter_FK)

Use to disable the foreign keys before dropping a partition, and then to re-enable the foreign keys after the partition drop command. The routine generates two SQL scipts in the PART_DIR directory. The SQL scripts can be customized to suite your performance requirements. The SQL scripts must be run as the SOAINFRA user.

Step 1: Generates the SQL Scripts:

set echo on;

set serverout on;

begin

verify_soa.alter_fk;

end;Step2: Runs the enable and disable commands, as required:

SQL> @SOA_DISABLE_FK.SQL

SQL> @SOA_ENABLE_FK.SQLUpdate Global Indices

The partition maintenance scripts execute the drop partition statements with the update global index clause. This clause avoids the need for the indices to be rebuilt, but causes contention. To avoid the contention, perform partition maintenance during off-peak hours or maintenance window.

Partial Partitioning of Components

If you have an environment in which some components are partitioned, while other components are not partitioned, the nonpartitioned data set must be purged using the purge scripts described in Deleting Large Numbers of Flow Instances_ Adapter Reports_ and Fault Alerts.

For example, assume human workflow is not partitioned, while other components are partitioned. The verification script reports that all SOA partitions can be dropped using the command for dropping partitions. However, the human workflow tables continue to hold workflow data until the data is purged using the loop/parallel purge scripts.

Removing Records from the Runtime Tables Without Dropping the Tables

The truncate scripts (truncate_soa_oracle.sql and, for the Java database provided with the Oracle SOA Suite Quick Start installation, truncate_soa_javadb.sql) enable you to remove all records from all Oracle SOA Suite runtime tables without dropping the tables. You cannot reclaim database space with the truncate scripts.

The truncate scripts are useful for the following scenarios:

-

To create a production or test environment clone (test-to-production or production-to-test) in which you want to keep the schemas from the production environment so that the production customizations and new job definitions are kept, but all instance data in the SOA Infrastructure (that is, in the cloned database) must be truncated, regardless of state.

-

For testing purposes in which test scenarios must be recreated and rerun.

The truncate scripts provide this option by including truncate statements covering all the runtime tables of the following components:

-

Oracle BPEL Process Manager

-

Oracle Mediator

-

Business rules

-

Oracle B2B

-

SOA Infrastructure

-

Oracle BPM Suite

To remove records from the runtime tables without dropping the tables: