Downstream Extract for Oracle GoldenGate Deployment

A downstream Oracle GoldenGate deployment allows you to offload the processing of the redo logs to an alternative database referred to as a mining database. The mining database does not need to contain any data from the source database, but must be the same database version or higher. This means Oracle version 12c as a source database can use mining database version 19c or higher allowing Oracle GoldenGate to capture changes from an Oracle 12c database without actually running on the Oracle 12 database.

Note:

The source and downstream database servers must have the same endianness and processor types. For example, downstream Extract will work with source Linux (x86) to a downstream Windows (x86) server, but it does not work if the source server is HP-UX but the downstream server is AIX, or the source server is HP PA-RISC but the downstream server is HP-Itanium.-

Real-Time Mining mode: In Real-time mining mode, the redo records are processed from the online or standby redo logs immediately, so, there is no delay in processing the database changes.

-

Archive Mining mode: In archive mining mode, the redo records are not processed until they are archived into a redo log file.

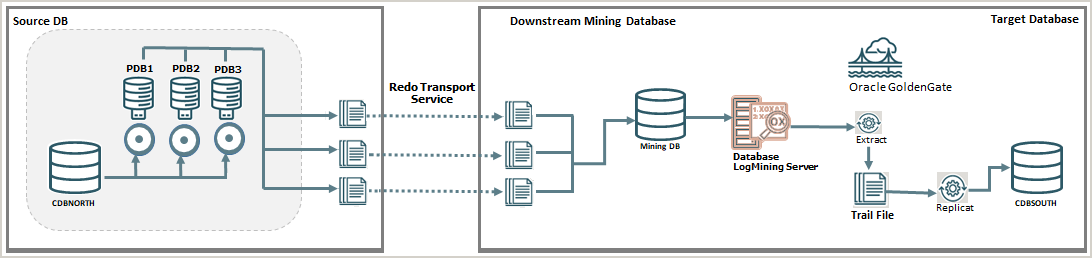

In a typical downstream deployment, the source database, where changes are made to the data, will ship it's redo logs or archive logs to a mining database where the Extract process will mine the redo logs and write out the trail files. Downstream Extract can also be used in situations where Oracle GoldenGate cannot establish a connection with the source database. In the following diagram, CDBNORTH is the source database and CDBSOUTH is the target database. There is a mining database between the source and target with the database logmining server that transfers redo logs from the downstream Extract to the target.

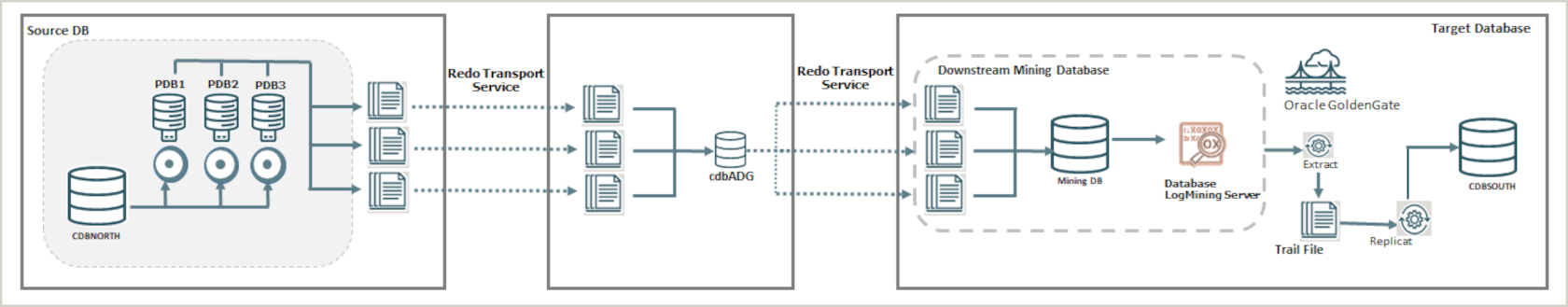

An alternative option is to use a cascading approach. If there is an Active Data Guard standby, you can have the standby ship the redo logs to the mining database instead of shipping the logs from the primary. This option should be used if there is limited network bandwidth and the standby database is in the same region or data center as the mining database.

Note:

The Extract process for Oracle cannot connect to a Data Guard standby or Active Data Guard standby to capture data.

For configuration details about setting up a cascaded downstream environment, see Enable Downstream Extract to Work with ADG.

Configure the Source and Downstream Mining Databases

In this section, you will learn about the parameters and user management configurations required on database side.

Oracle GoldenGate User in Source and Downstream Mining Databases

The Oracle GoldenGate Administrator user needs to exist on each database. In the container databases, a common user is required as the Extract will be used within the Root container.

To set up users for Oracle Database 23ai and higher, the

OGG_CAPTURE and OGG_APPLY user role needs to

be assigned to the new user. Refer to Grant User Privileges for Oracle Database 23ai and Higher for details.

CREATE USER c##ggadmin IDENTIFIED BY password CONTAINER = all;

ALTER USER c##ggadmin SET CONTAINER_DATA = all CONTAINER = current;

GRANT ALTER SESSION TO c##ggadmin CONTAINER = all;

GRANT connect, resource TO c##ggadmin CONTAINER = all;

BEGIN

DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE

( grantee => 'C##GGADMIN'

, privilege_type => 'CAPTURE'

, grant_select_privileges => TRUE

, container => 'ALL'

);

END;

/Note:

The common user (c##ggadmin) is required for the downstream database only.Configure Database Connections

Database Net Service Requirements

The source and downstream databases can be accessed using the Database Net Services in both directions.

In Oracle GoldenGate, connections for the source database

(CDBNORTH_root) and the downstream database (cdbDSC_root) can be

set up. The source database connection is only needed to store dictionary data,

during Extract registeration. You can use the FETCHUSERIDALIAS

parameter to connect to the downstream mining database (cdbDSC_root) for

fetches at runtime. With ADG redirection, the registering of Extract can be

redirected to the source from an ADG. For setting up connections, make sure that you

have the database credentials for these users, to create credentials from the Oracle

GoldenGate deployment.

You can test the Net Service with SQL*Plus, which is part of the Oracle GoldenGate shiphome.

Source Database Prerequisites

-

All databases must include:

ALTER SYSTEM SET ENABLE_GOLDENGATE_REPLICATION = TRUE; -

The source databases must be in archive log mode. To enable archiving on the database, issue the following command that tells the database to create an archive log when switching redo logs.

ALTER DATABASE ARCHIVE LOG; -

(Recommended) All databases must be in Force Logging mode.

ALTER DATABASE FORCE LOGGING; -

Source database must have minimal supplemental logging enabled that includes the

TRANDATA/SCHEMATRANDATAduring the offloading of the Extract process to the Mining database because this is where the transactions are occurring. -

The password files (

orapwSID) of the source and downstream database are identical. So, theremote_login_passwordfilemust be set toSHAREDorEXCLUSIVE.

Downstream Mining Database Prerequisites

-

All databases must include:

ALTER SYSTEM SET ENABLE_GOLDENGATE_REPLICATION = TRUE; -

STREAMS_POOL_SIZEmust be set in Oracle 21c and lower. For exampleALTER SYSTEM SET STREAMS_POOL_SIZE = 1G; -

The mining databases must be in archive log mode. To enable archiving on the database, issue the following command that tells the database to create an archive log when switching redo logs.

ALTER DATABASE ARCHIVE LOG; -

The downstream database must have different settings for the following:

-

DBID -

DBNAME -

DB_UNIQUE_NAME -

GLOBAL_NAME

In a downstream environment, the unique database name matches the database name.

-

-

The password files (

orapwSID) of the source and downstream database are identical. So, theremote_login_passwordfilemust be set toSHAREDorEXCLUSIVE.

Configure Parameters for Downstream Database

Parameter Settings for Downstream Extract in Real-Time Mining Mode

For downstream Extract in Real-Time Mining mode, the primary prerequisite is that the downstream database must have standby redo log files configured correctly.

TRANLOGOPTIONS INTEGRATEDPARAMS (REAL_TIME_MINE Y)

parameter was not already set, you need to set it to enable downstream Extract for

real-time mining

mode:TRANLOGOPTIONS INTEGRATEDPARAMS (DOWNSTREAM_REAL_TIME_MINE Y)Note:

There can be only one real-time Extract on the downstream database for a given source database. Other Extracts have to be non-realtime. This is a restriction from redo transport.ALTER SYSTEM

SET log_archive_config='DG_CONFIG=(CDBNORTH, cdbDSC)';

ALTER SYSTEM set FAL_SERVER = 'CDBNORTH_root';Standby Redo Log Files must exist on the downstream database. If there are n online redo log files at the source, then there should be n+1 standby redo log files at the target of the same size.

Create the Standby Redo Log Files on the Downstream Mining database

The following steps outline the procedure for adding standby redo log files to the downstream mining database. The following summarizes the rules for creating the standby redo logs:

-

Each standby redo log file must be at least as large as the largest redo log file of the redo source database. For administrative ease, Oracle recommends that all redo log files at source database and the standby redo log files at the downstream mining database be of the same size.

-

The standby redo log must have at least one more redo log group than the redo log at the source database, for each redo thread at the source database.

The specific steps and SQL statements that are required to add standby redo log files depend on your environment. See Creating a Physical Standby Database for detailed instructions about adding standby redo log files to a database.

Note:

If there will be multiple source databases sending redo to a single downstream mining database, only one of those sources can send redo to the standby redo logs of the mining database. An Extract process that mines the redo from this source database can run in real-time mode. All other source databases must send only their archived logs to the downstream mining database, and the Extracts that read this data must be configured to run in archived-log-only mode.

To Create the Standby Redo Log Files

Configure the Downstream Mining Database to Archive Standby Redo Log Files Locally

This procedure configures the downstream mining database to archive the standby redo logs that receive redo data from the online redo logs of the source database. Keep in mind that foreign archived logs should not be archived in the recovery area of the downstream mining database.

To Archive Standby Redo Logs Locally:

- At the downstream mining database, set the second archive log destination in the

LOG_ARCHIVE_DEST_ninitialization parameter as shown in the following example.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='LOCATION=/home/arc_dest/srl_dbms1 VALID_FOR=(STANDBY_LOGFILE,PRIMARY_ROLE)'Oracle recommends that foreign archived logs (logs from remote source databases) be kept separate from local mining database log files, and from each other. You must not use the recovery area of the downstream mining database to stage foreign archived logs.

- Enable the

LOG_ARCHIVE_DEST_2parameter you set in the previous step as shown in the following example.ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE

Parameter Settings for Downstream Extract in Archive Mining Mode

For setting up a downstream Extract in either Archive Mining mode or the Real-Time Mining mode, you must set up the redo transport. Include the following parameters when setting up the downstream Extract in Archive-Log-Only mode:

-

LOG_ARCHIVE_CONFIG -

LOG ARCHIVE DESTINATION (LAD)-

LOG_ARCHIVE_DEST_1for local backup of all databases -

LOG_ARCHIVE_DEST_2service for redo transport on the source databaseNote:

LOG_ARCHIVE_DEST_2is independent from the use of Real Time Mining. -

Local database backup destination is set using the Flash Recovery Area

-

-

FAL_SERVER -

All the required system parameters can be set dynamically with

scope=both

The Archive Log Destination (LAD) parameter settings are required for the local backup destination and redo transport services on the source databases.

ALTER SYSTEM SET db_recovery_file_dest = '/u01/app/oracle/fast_recovery_area';

ALTER SYSTEM SET db_recovery_file_dest_size = 100G;

ALTER SYSTEM SET log_archive_dest_1 = 'USE_DB_RECOVERY_FILE_DEST';ALTER SYSTEM SET log_archive_config = 'DG_CONFIG = (CDBNORTH, cdbDSC)';

ALTER SYSTEM

SET log_archive_dest_2='SERVICE=cdbDSC_root

ASYNC

NOREGISTER

VALID_FOR=(ONLINE_LOGFILES, PRIMARY_ROLE)

DB_UNIQUE_NAME=cdbDSC'; To learn more about the LOG_ARCHIVE_DEST parameter and

its attributes, see LOG_ARCHIVE_DEST_n in the Database

Reference guide.

Configure Oracle GoldenGate Extract for Downstream Deployment

In this section, you will learn about the parameters required by Oracle GoldenGate to set up connections with source and downstream databases and configure Extract for downstream database.

Configure Oracle GoldenGate for Downstream Database

-

TRANLOGOPTIONS MININGUSERALIAS: Sets the connection to the downstream mining database for internal metadata queries. -

FETCHUSERIDALIAS: Connects to the source database for fetching data that cannot be natively extracted out of the transaction log. -

TRANLOGOPTIONS INTEGRATEDPARAMS (DOWNSTREAM_REAL_TIME_MINE Y): If set to Y, then Extract captures changes from the standby redo logs files at the downstream database. If not set, then Extract captures from the archive log files.

EXTRACT EXTDSC

USERIDALIAS cgg_CDBNORTH

TRANLOGOPTIONS MININGUSERALIAS cgg_cdbDSC

TRANLOGOPTIONS INTEGRATEDPARAMS (DOWNSTREAM_REAL_TIME_MINE Y)

FETCHUSERIDALIAS cgg_CDBNORTH DOMAIN OracleGoldenGate

EXTTRAIL Downstream/ea

SOURCECATALOG CDBNORTH_PDB1

DDL INCLUDE MAPPED

TABLE HR.*;

-

Add credentials for the source database:

ALTER CREDENTIALSTORE ADD USER c##ggadmin@CDBNORTH_root ALIAS cgg_CDBNORTH DOMAIN OracleGoldenGate PASSWORD password -

Add credentials for the downstream database:

ALTER CREDENTIALSTORE ADD USER c##ggadmin@cdbDSC_root ALIAS cgg_cdbDSC DOMAIN OracleGoldenGate PASSWORD password -

Use

DBLOGINto connect to the source database and downstream database from Oracle GoldenGate:DBLOGIN USERIDALIAS cgg_CDBNORTHMININGDBLOGIN USERIDALIAS cgg_cdbDSCIn this example,

cgg_CDBNORTHis the credential alias for the source database CDBNORTH andcgg_cdbDSCis the credential alias for the mining database. -

Add and register an Extract, edit the parameter file to include the Extract parameters provided previously, add the Extract trail name and location, start the Extract:

ADD EXTRACT extdsc, INTEGRATED TRANLOG, BEGIN NOW REGISTER EXTRACT extdsc, DATABASE CONTAINER (CDBNORTH_PDB01) ADD EXTTRAIL Downstream/ea, EXTRACT extdsc START extdsc

Configure Cascaded Downstream Extract Using Active Data Guard

If a connection to the source database is not possible, GoldenGate can be configured to use an Active Data Guard (ADG) standby database. The standby database is available for read-only connections to perform metadata queries and fetching. In this section, you will learn how to configure a Downstream Extract using ADG and without establishing a connection with the source database.

Enable Downstream Extract to Work with ADG

In a cascaded downstream capture environment, the downstream database does not connect directly to the source database. It uses the Active Data Guard (ADG) for fetching and metadata queries.

Extract must be started using the sourceless option so that it does not

connect to the source database and instead connects to ADG using

FETCHUSERID or FETCHUSERIDALIAS when it needs

to fetch any non-native datatypes. For example, FETCH operations are processed on

the ADG database as this instance is open in read-only mode. Other operations that

cannot be processed on the ADG instance, such as creating the dictionary build, are

redirected from the ADG to the source database.

-

SCHEMATRANDATA -

TRANDATA -

FLUSH SEQUENCE -

TRACETABLE -

HEARTBEATTABLE -

REGISTER EXTRACT

Note:

SCHEMATRANDATA and TRANDATA, even though

the command is executed on the standby redo log, the actual log groups are

created and maintained on the primary database where the actual DML operations

take place.

EXTRACT EXTDSC

NOUSERID

TRANLOGOPTIONS MININGUSERALIAS cgg_cdbDSC_src DOMAIN OracleGoldenGate

TRANLOGOPTIONS INTEGRATEDPARAMS (DOWNSTREAM_REAL_TIME_MINE Y) FETCHUSERIDALIAS cgg_cdbADG_src DOMAIN OracleGoldenGate

EXTTRAIL cascade/ea

SOURCECATALOG CDBNORTH_PDB01

DDL INCLUDE MAPPED

TABLE HR.*;Here are the steps to enable downstream Extract to work with ADG Standby:

-

Add an additional

LOG_ARCHIVE_DESTINATION_N (LAD)on the ADG standby, as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_DEST_3='service=mining_db_service_name ASYNC NOREGISTER VALID_FOR(STANDBY_LOGFILES,STANDBY_ROLES) DB_UNIQUE_NAME=3rd_db_unique_name' scope=bothThis step transports and generates the

standby_logfilesfor an ADG standby. -

Set the

LOG_ARCHIVE_CONFIGon the ADG standby to ship the logs to the mining database, as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG = 'DG_CONFIG' = (CDBNORTH, cdbADG_src, cdbDSC)';DB_CONFIGis the database unique name of the first, second, and third databases. -

On the mining database, set up the location to store the incoming standby_logfiles on the mining database as shown in the following example:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='location= /home/arc_dest/dstrm VALID_FOR=(STANDBY_LOGFILE,ALL_ROLES)' scope=bothwhere

/home/arc_dest/dstrmis the location for foreign archive logs. Do not use FRA for storing foreign archive logs. -

Run

LOG_ARCHIVE_CONFIGon the mining database, so that the Extract process is able to read them on the mining database, as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG ='DG_CONFIG' scope=bothHere,

DB_CONFIGis the database unique name of the first, second, and third databases. -

For a downstream Extract, you need to ensure that the database connections are appropriately configured. When registering the Extract, make sure that

DBLOGINconnection is made to the ADG Standby, that is open for read-only activity. -

To add the Extract and register it, use the following command:

DBLOGIN USERID ggadmin@cdbADG_src, PASSWORD ggadmin MININGDBLOGIN USERID ggadmin@cgg_cdbDSC, password ggadmincdbADG_srcis the ADG not primary.cgg_cdbDSCis the mining database. - Now, register an Extract that uses the

NOUSERIDparameter:ADD EXTRACT exte, INTEGRATED TRANLOG, BEGIN NOW REGISTER EXTRACT exte DATABASE - After the Extract is registered, you can use this Extract to mine data and start the Extract normally.