Monitor

Learn about monitoring Oracle GoldenGate processes for performance and error handling.

Commands Used for Monitoring

You can view information about Extract and Replicat groups from the Oracle GoldenGate MA web interface at various levels. Another alternative is to use the command line interface to monitor various processes.

See Monitor Processes from the Performance Metrics Service.

To learn about command syntax, usage, and examples, see the Command Line Interface Reference for Oracle GoldenGate.

| Command | What it Shows |

|---|---|

INFO {EXTRACT |

REPLICAT} group [DETAIL] |

Run status, checkpoints, approximate lag, and environmental information. |

|

Displays the |

|

Displays statistics on processing volume, such as number of operations performed. |

|

Displays the run status (starting, running, stopped, abended) for Extract and Replicat processes. |

|

Displays the latency between last record processed and timestamp in the data source. |

|

Displays the name of associated process, position of last data processed, maximum file size. |

|

Depending on the process and selected options, returns information about memory pool, lag, TCP statistics, long-running transactions, process status, recovery progress, and more. |

|

Shows contents of the discard file or process report. |

|

Shows contents of the Oracle GoldenGate error log. |

|

Information dependent on the

|

|

Queries for and displays static information. |

|

Displays currently-running parameter values. |

|

Returns information about distribution paths. Before you run this command, ensure that the Distribution Service is running for that deployment. |

|

Retrieves configuration information for a local trail. It shows the name of the trail, the Extract that writes to it, the position of the last data processed, and the assigned maximum file size. |

|

Retrieves configuration information for a remote trail. It shows the name of the trail, the Extract that writes to it, the position of the last data processed, and the assigned maximum file size. |

|

Retrieves information on multiple Extract and Replicat groups as a unit. |

|

Confirms the existence of a checkpoint table and view the date and time that it was created. |

|

Retrieves a list of credentials. |

|

Returns information about the encryption profiles available with the Service Manager. |

|

Displays information about the heartbeat tables configured in the database. |

|

Lists all the authorization profiles in a deployment or information on a specific authorization profile for a specific deployment. |

|

Displays the contents of a currently open master-key wallet. If a wallet store does not exist, a new wallet store file is created. This wallet store file is then used to host different encrypted keys as they are created. |

|

Returns information about managed process profiles. |

|

Returns information about a target-initiated distribution path in the Receiver Service. Before you run this command, ensure that the Receiver Service is running. |

|

Valid for Oracle database only. Determine whether Oracle schema-level

supplemental logging is enabled for the specified schema or if any instantiation

information is available. Use the |

|

Verifies the existence of the specified trace table in the local instance of the database. |

|

Displays different outputs depending on the database. |

|

Get the statistics for the distribution path (DISTPATH) or receiver path (RECVPATH). |

|

Retrieve statistics on multiple Extract and Replicat groups as a unit. Use it with wildcards to affect every Extract and Replicat group that satisfies the wildcard. |

|

Checks the status of multiple Extract and Replicat groups as a unit. |

|

View the status of the specified deployment. |

|

Status of Performance Service. |

|

Displays the status of specified Oracle GoldenGate service. |

Monitor Processes from the Performance Metrics Service

The Performance Metrics Service uses the metrics service to collect and store instance deployment performance results. When you arrive at the Performance Metrics Service OVerview page, you see all the Oracle GoldenGate processes in their current state. You can click a process to view its performance metrics. You can also access service messages and status change details from this page.

Here’s a general overview of the tasks that you can perform from this page.

| Task | Description |

|---|---|

|

Review Messages |

Review Messages from the Messages Overview tab. |

|

Review Status Changes |

Click the Review Status Changes tab to review changes in status of a service. |

Review Messages from Messages Tab

Messages from the Services are displayed in Performance Metrics Service Overview page.

To review the messages sent or received, do the following:Review Status Changes

Real-time status changes to microservices can be monitored from the Performance Metrics Service Status Changes Overview tab.

Status change messages show the date, process name, and its status, which could be running, starting, stopped, or killed.

To view status changes, click Performance Metrics Service from the Service Manager home page, and then click the Status Changes Overview tab. A list of status change messages from the service appears.

If you are searching for specific messages, you can use the search but make sure you click Refresh before you search to ensure that you get the updated status for services.

Note that the search messages appear in different colors to differentiate critical and informational messages.

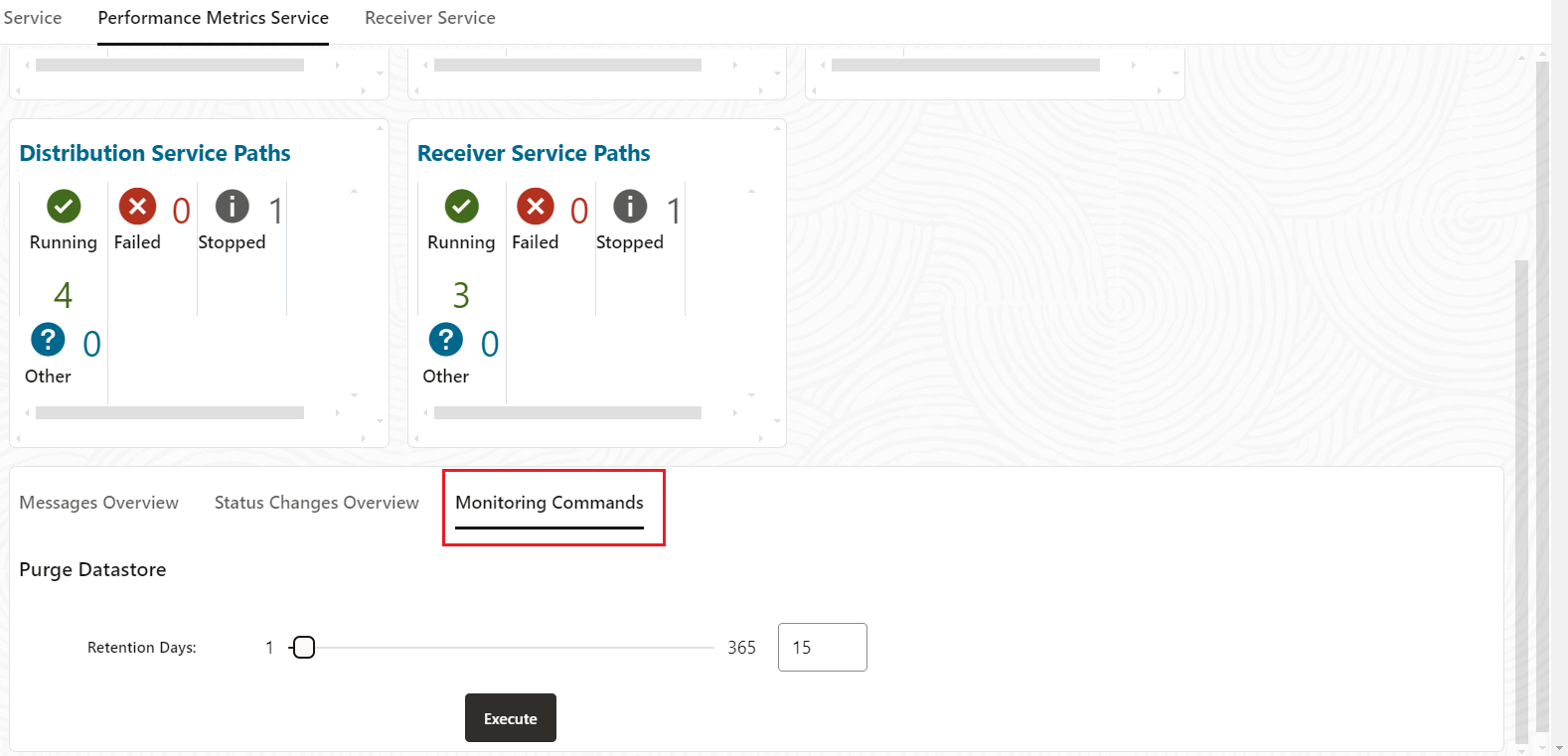

Purge Datastore

You can change the datastore retention and purge it from the Performance Metrics Service Monitoring Commands tab, as shown in the following image:

To view status changes, click Performance Metrics Service from the Service Manager home page, and then click the Monitoring Commands tab.

The current process retention (in days) is displayed.

You can enter the number of retention days or use the sliding icon to set the new period from 1 to 365 days, then Execute to activate the purge. The details of the purge are also displayed.

Protocols for Performance Monitoring for Different Operating Systems

Oracle GoldenGate uses Unix Domain Sockets (UDS) for UNIX-based and Named Pipes (for Windows) techniques to send monitoring points from Extract, Replicat, and other processes to the Performance Monitoring Service of the deployment.

For each deployment, the Performance Metrics Service is local to the host. This makes it more secure to use the Unix Domain Sockets (UDS) protocol or Named Pipes technique in Windows for Inter-process Communication (IPC) with the service and improve overall performance. Named Pipes utilizes a unique file system called NPFS (Named Pipe filesystem) that allows managing the security as any file subject using security checks for file access.

-

UDS is available with Oracle and non-Oracle databases. The UDS file is located in the

$OGG_HOME/var/tempdirectory of the deployment. -

The standard location for named pipes in Windows is

\\ServerName\pipe\PipeName (\\ServerName\pipe\).

Monitor an Extract Recovery

If Extract abends when a long-running transaction is open, it can seem to take a long time to recover when it is started again. To recover its processing state, Extract must search back through the online and archived logs (if necessary) to find the first log record for that long-running transaction. The farther back in time that the transaction started, the longer the recovery takes, in general, and Extract can appear to be stalled.

SEND EXTRACT

command with the STATUS option. One of the following status notations

appears, and you can follow the progress as Extract changes its log read position over

the course of the recovery.

-

In recovery[1] -

Extract is recovering to its checkpoint in the transaction log. This implies that it is reading from either the BR checkpoint files and then archived/online logs, or reading from Recovery Checkpoint in archived/online log.

Monitor Lag

Lag statistics show you how well the Oracle GoldenGate processes are keeping pace with the amount of data that is being generated by the business applications. With this information, you can diagnose suspected problems and tune the performance of the Oracle GoldenGate processes to minimize the latency between the source and target databases.

About Lag

For Extract, lag is the difference, in seconds, between the time that a record was processed by Extract (based on the system clock) and the timestamp of that record in the data source.

For Replicat, lag is the difference, in seconds, between the time that the last record was processed by Replicat (based on the system clock) and the timestamp of the record in the trail.

To view lag statistics, use either the LAG or SEND ER, SEND EXTRACT, SEND REPLICAT commands.

Note:

The INFO command also returns a lag statistic, but this statistic is taken from the last record that was checkpointed, not the current record that is being processed. It is less accurate than LAG or INFO.

Monitor Lag Using Automatic Heartbeat Tables

You can use the default automatic heartbeat table functionality to monitor end-to-end replication lag. Automatic heartbeats are sent from each source database into the replication streams, by updating the records in a heartbeat seed table and a heartbeat table, and constructing a heartbeat history table. Each of the replication processes in the replication path process these heartbeat records and update the information in them. These heartbeat records are inserted or updated into the heartbeat table at the target databases.

The heartbeat tables contain the following information:

-

Source database

-

Destination database

-

Information about the outgoing replication streams:

-

Names of the Extract, Distribution Service, and or Replicat processes in the path

-

Timestamps when heartbeat records were processed by the replication processes.

-

-

Information about the incoming replication streams:

-

Names of the Extract, Distribution Service, and or Replicat processes in the path

-

Timestamps when heartbeat records were processed by the replication processes.

-

Using the information in the heartbeat table and the heartbeat history table, the current and historical lags in each of the replication can be computed.

Replicat can track the current restart position of Extract with

automatic heartbeat tables (LOGBSN). This allows

regenerating the trail files from the source database, if required and

minimizes the redo log retention period of the source database. Also, by

tracking the most recent Extract restart position, the tombstone tables for

automatic Conflict Detection and Resolution (ACDR) tables can be purged more

frequently.

In a bidirectional configuration, the heartbeat table has as many entries as the number of replication paths to neighbors that the database has and in a unidirectional setup, the table at the source is empty. The outgoing columns have the timestamps and the outgoing path, the local Extract and the downstream processes. The incoming columns have the timestamps and path of the upstream processes and local Replicat.

In a unidirectional configuration, the target database will populate only the incoming columns in the heartbeat table.

Note:

The Automatic Heartbeat functionality is not supported on MySQL version 5.5.

Monitor an Extract Recovery

If Extract abends when a long-running transaction is open, it can seem to take a long time to recover when it is started again. To recover its processing state, Extract must search back through the online and archived logs (if necessary) to find the first log record for that long-running transaction. The farther back in time that the transaction started, the longer the recovery takes, in general, and Extract can appear to be stalled.

SEND EXTRACT

command with the STATUS option. One of the following status notations

appears, and you can follow the progress as Extract changes its log read position over

the course of the recovery.

-

In recovery[1] -

Extract is recovering to its checkpoint in the transaction log. This implies that it is reading from either the BR checkpoint files and then archived/online logs, or reading from Recovery Checkpoint in archived/online log.

Heartbeat Table End-To-End Replication Flow

The end-to-end replication process for heartbeat tables relies on using the Oracle GoldenGate trail format. The process is as follows:

-

Add a heartbeat table to each of your databases with the

ADD HEARTBEATTABLEcommand. Add the heartbeat table to all source and target instances and then restart existing Oracle GoldenGate processes to enable heartbeat functionality. Depending on the database, you may or may not be required to create or enable a job to populate the heartbeat table data.See the following sample:DBLOGIN USERIDALIAS alias [DOMAIN domain]|[SYSDBA | SQLID sqlid][SESSIONCHARSET character_set]}ADD HEARTBEATTABLE -

(Optional) For Oracle Databases, you must ensure that the Oracle

DBMS_SCHEDULERis operating correctly as the heartbeat update relies on it. You can query theDBMS_SCHEDULERby issuing:SELECT START_DATE, LAST_START_DATE, NEXT_RUN_DATE FROM DBA_SCHEDULER_JOBSWhere

job_name='GG_UPDATE_HEARTBEATS';Then look for valid entries for

NEXT_RUN_DATE, which is the next time the scheduler will run. If this is a timestamp in the past, then no job will run and you must correct it.A common reason for the scheduler not working is when the parameter

job_queue_processesis set too low (typically zero). Increase the number ofjob_queue_processesconfigured in the database with theALTER SYSTEM SET JOB_QUEUE_PROCESSES = ##;command where##is the number of job queue processes. -

Run an Extract, which on receiving the logical change records (LCR) checks the value in the

OUTGOING_EXTRACTcolumn.-

If the Extract name matches this value, the

OUTGOING_EXTRACT_TScolumn is updated and the record is entered in the trail. -

If the Extract name does not match then the LCR is discarded.

-

If the

OUTGOING_EXTRACTvalue isNULL, it is populated along withOUTGOING_EXTRACT_TSand the record is entered in the trail.

-

-

The Distribution Service on reading the record, checks the value in the

OUTGOING_ROUTING_PATHcolumn. This column has a list of distribution paths.If the value is

NULL, then the column is updated with the current group name (and path if this is a Distribution Service),"*", update theOUTGOING_ROUTING_TScolumn, and the record is written into its target trail file.If the value has a

"*"in the list, then replace it withgroup name[:pathname],"*"', update theOUTGOING_ROUTING_TScolumn, and the record is written into its target trail file. When the value does not have a asterisk (*) in the list and the distribution path name is in the list, then the record is sent to the path specified in the relevantgroup name[:pathname],"*"'pair in the list. If the distribution path name is not in the list, then the record is discarded.Run a Replicat, which on receiving the record checks the value in the

OUTGOING_REPLICATcolumn.-

If the Replicat name matches the value, the row in the heartbeat table is updated and the record is inserted into the history table.

-

If the Replicat name does not match, the record is discarded.

-

If the value is

NULL, the row in the heartbeat and heartbeat history tables are updated with an implicit invocation of the Replicat column mapping.Automatic Replicat Column Mapping:

REMOTE_DATABASE = LOCAL_DATABASE INCOMING_EXTRACT = OUTGOING_EXTRACT INCOMING_ROUTING_PATH = OUTGOING_ROUTING_PATH with "*" removed INCOMING_REPLICAT = @GETENV ("GGENVIRONMENT", "GROUPNAME") INCOMING_HEARTBEAT_TS = HEARTBEAT_TIMESTAMP INCOMING_EXTRACT_TS = OUTGOING_EXTRACT_TS INCOMING_ROUTING_TS = OUTGOING_ROUTING_TS INCOMING_REPLICAT_TS = @DATE ('UYYYY-MM-DD HH:MI:SS.FFFFFF','JTSLCT',@GETENV ('JULIANTIMESTAMP')) LOCAL_DATABASE = REMOTE_DATABASE OUTGOING_EXTRACT = INCOMING_EXTRACT OUTGOING_ROUTING_PATH = INCOMING_ROUTING_PATH OUTGOING_HEARTBEAT_TS = INCOMING_HEARTBEAT_TS OUTGOING_REPLICAT = INCOMING_REPLICAT OUTGOING_HEARTBEAT_TS = INCOMING_HEARTBEAT_TS

-

Additional Considerations:

Computing lags as the heartbeat flows through the system relies on the clocks of the source and target systems to be set up correctly. It is possible that the lag can be negative if the target system is ahead of the source system. The lag is shown as a negative number so that you are aware of their clock discrepancy and can take actions to fix it.

The timestamp that flows through the system is in UTC. There is no time zone associated with the timestamp so when viewing the heartbeat tables, the lag can be viewed quickly even if different components are in different time zones. You can write any view you want on top of the underlying tables; UTC is recommended.

All the heartbeat entries are written to the trail in UTF-8.

The outgoing and incoming paths together uniquely determine a row. Meaning that if you have two rows with same outgoing path and a different incoming path, then it is considered two unique entries.

Heartbeat Table Details

The GG_HEARTBEAT table displays timestamp information of the end-to-end replication time and the timing information at the different components primary and secondary Extract and Replicat.

In a unidirectional environment, only the target database contains information about the replication lag. That is the time when a record is generated at the source database and becomes visible to clients at the target database.

Note:

The automatic heartbeat tables don’t populate theOUTGOING_% columns with data, when both the source and remote

databases have the same name. To change the database name, use the utility

DBNEWID. For details, see the DBNEWID Utility.

| Column | Data Type | Description |

|---|---|---|

|

|

|

Local database where the replication time from the remote database is measured. |

|

|

|

The point in time when a timestamp is generated at the remote database. |

|

|

|

Remote database where the timestamp is generated |

|

|

|

Name of the primary Extract (capture) at the remote database |

|

|

|

Name of the secondary Extract (pump) at the remote database |

|

|

|

Name of the Replicat on the local database. |

|

|

|

Final timestamp when the information is inserted into the |

|

|

|

Timestamp of the generated timestamp is processed by the primary Extract at the remote database. |

|

|

|

Timestamp of the generated timestamp is processed by the secondary Extract at the remote database. |

|

|

|

Timestamp of the generated timestamp is processed by Replicat at the local database. |

|

|

|

Bidirectional/N-way replication: Name of the primary Extract on the local database. |

|

|

|

Bidirectional/N-way replication: Name of the secondary Extract on the local database. |

|

|

|

Bidirectional/N-way replication: Name of the Replicat on the remote database. |

|

|

|

Bidirectional/N-way replication: Final timestamp when the information is inserted into the table at the remote database. |

|

|

|

Bidirectional/N-way replication: Timestamp of the generated timestamp is processed by the primary Extract on the local database. |

|

|

|

Bidirectional/N-way replication: Timestamp of the generated timestamp is processed by the secondary Extract on the local database. |

|

|

|

Bidirectional/N-way replication: Timestamp of the generated timestamp is processed by Replicat on the remote database. |

|

|

|

- |

|

|

|

- |

|

|

|

- |

|

|

|

- |

The GG_HEARTBEAT_HISTORY table displays historical timestamp information of the end-to-end replication time and the timing information at the different components primary and secondary Extract and Replicat.

In a unidirectional environment, only the destination database contains information about the replication lag.

Timestamps are managed in UTC time zone. That is the time when a record is generated at the source database and becomes visible to clients at the target database.

| Column | Data Type | Description |

|---|---|---|

|

|

|

Local database where the end-to-end lag is measured. |

|

|

|

Point in time when a timestamp from the remote database receives at the local database. |

|

|

|

Remote database where the timestamp is generated. |

|

|

|

Name of the primary Extract on the remote database. |

|

|

|

Name of the secondary Extract of the remote database. |

|

|

|

Name of the Replicat on the local database. |

|

|

|

Final timestamp when the information is inserted into the |

|

|

|

Timestamp when the generated timestamp is processed by the primary Extract on the remote database. |

|

|

|

Timestamp when the generated timestamp is processed by the secondary Extract on the remote database. |

|

|

|

Timestamp when the generated timestamp is processed by Replicat on the local database. |

|

|

|

Bidirectional/N-way replication: Name of the primary Extract from the local database. |

|

|

|

Bidirectional/N-way replication: Name of the secondary Extract from the local database. |

|

|

|

Bidirectional/N-way replication: Name of the Replicat on the remote database. |

|

|

|

Bidirectional/N-way replication: Final timestamp when the information is persistently inserted into the table of the remote database. |

|

|

|

Bidirectional/N-way replication: Timestamp when the generated timestamp is processed by the primary Extract on the local database. |

|

|

|

Bidirectional/N-way replication: Timestamp when the generated timestamp is processed by the secondary Extract on the local database. |

|

|

|

Bidirectional/N-way replication: Timestamp when the generated timestamp is processed by Replicat on the remote database. |

|

|

|

This column is populated by Replicat when it processes this heartbeat record. It populates this column with its current low watermark (LWM) when it processes this record. This allows us to choose a LOGBSN from a heartbeat record which is as of the Replicat LWM. |

|

|

|

This column is populated by Extract and contains the source commit SCN for the heartbeat transaction in the source database. The heartbeat job on the source database cannot populate this value as it will not know the commit SCN apriori. |

|

|

|

This column will be populated by Extract and will contain the current LOGBSN when Extract processes this particular heartbeat record. The heartbeat job on the source database will not populate this value. |

|

|

|

This column will be populated by Extract and will contain the redo timestamp in UTC that corresponds to the current LOGBSN when Extract processes this particular heartbeat record. The heartbeat job on the source database will not populate this value. |

The GG_LAG view displays information about the replication lag between the local and remote databases.

In a unidirectional environment, only the destination database contains information about the replication lag. The lag is measured in seconds.

| Column | Data Type | Description |

|---|---|---|

|

|

|

Local database where the end-to-end replication lag from the remote database is measured. |

|

|

|

Current timestamp of the local database. |

|

|

|

Remote database where the timestamp is generated. |

|

|

|

The age of the most recent heartbeat received from the remote database. |

|

|

|

Replication path from the remote database to the local database with Extract and Replicat components. |

|

|

|

Replication lag from the remote database to the local database. This is the time where the heartbeat where generated at the remote database minus the time where the information was persistently inserted into the table at the local database. |

|

|

|

The age of the most recent heartbeat from the local database to the remote database. |

|

|

|

Replication Path from Local database to the remote database with Extract and Replicat components |

|

|

|

Replication Lag from the local database to the remote database. This is the time where the heartbeat where generated at the local database minus the time where the information was persistently inserted into the table at the remote database. |

|

|

|

Source Extract restart position. |

|

|

|

Remote database unique name is displayed. If no unique

name exists, then the |

|

|

Timestamp |

Timestamp associated with source Extract redo position. |

|

|

Timestamp |

Age of the oldest open transaction at the source database that

Extract is currently processing. This column can be calculated as

|

|

|

String |

Low watermark CSN of the local Replicat when it processed the heartbeat. |

The GG_LAG_HISTORY view displays the history information about the replication lag history between the local and remote databases.

In a unidirectional environment, only the destination database contains information about the replication lag.

The unit of the lag units is in seconds.

| Column | Data Type | Description |

|---|---|---|

|

|

|

Local database where the end-to-end replication lag from the remote database is measured. |

|

|

|

Point in time when a timestamp from the remote database receives on the local database. |

|

|

|

Remote database where the timestamp is generated. |

|

|

String | Remote database name. |

DB_UNIQUE_NAME |

String | Remote database unique

name. If the database unique name doesn't exist, then the

DB_NAME and DB_UNIQUE_NAME will be

same.

In a switchover to standby scenario, the

|

|

|

|

The age of the heartbeat table. |

|

|

|

Replication path from the remote database to local database with Extract and Replicat components. |

|

|

|

Replication lag from the remote database to the local database. This is the time where the heartbeat was generated at the remote database minus the time where the information was persistently inserted into the table on the local database. |

|

|

|

|

|

|

|

Replication path from local database to the remote database with Extract and Replicat components. |

|

|

|

Replication lag from the local database to the remote database. This is the time where the heartbeat was generated at the local database minus the time where the information was persistently inserted into the table on the remote database. |

|

|

|

Source Extract restart position. |

|

|

|

Timestamp associated with source Extract redo position. |

|

|

|

Age of the oldest open transaction at the source database that

Extract is currently processing. This column can be calculated as:

|

|

|

|

Low watermark CSN of the local Replicat when it processed the heartbeat. |

|

|

- |

- |

|

|

- | - |

|

|

- | - |

|

|

- | - |

|

|

- | - |

|

|

- | - |

|

|

- | - |

|

|

- | - |

Update Heartbeat Tables

The HEARTBEAT_TIMESTAMP column in the heartbeat seed table must be

updated periodically by a database job. The default heartbeat interval is 1 minute and

this interval can be specified or overridden using from the command line or the

Administration Service web interface.

For Oracle Database, the database job is created automatically.

For all other supported databases, you must create background jobs to update the heartbeat timestamp using the database specific scheduler functionality.

See ADD HEARTBEATTABLE, ALTER HEARTBEATTABLE for details on updating

the heartbeat table.

Purge the Heartbeat History Tables

The heartbeat history table is purged periodically using a job. The default interval is 30 days and this interval can be specified or overridden using a command line inteface such as Admin Client or the Administration Service web interface.

For Oracle Database, the database job is created automatically.

For all other supported databases, you must create background jobs to purge the heartbeat history table using the database specific scheduler functionality.

Best Practice

Oracle recommends that you:

-

Use the same heartbeat frequency on all the databases to makes diagnosis easier.

-

Adjust the retention period if space is an issue.

-

Retain the default heartbeat table frequency; the frequency set to be 30 to 60 seconds gives the best results for most workloads.

-

Use lag history statistics to collect lag and age information.

Using the Automatic Heartbeat Commands

You can use the heartbeat table commands to control the Oracle GoldenGate automatic heartbeat functionality as follows.

| Command | Description |

|---|---|

|

|

Creates the heartbeat tables required for automatic heartbeat functionality including

the |

|

|

Alters existing heartbeat objects. |

|

|

Alters the heartbeat tables to add the |

|

|

Deletes existing heartbeat objects. |

|

|

Deletes entries in the heartbeat table. |

|

|

Displays heartbeat table information. |

Db2 z/OS: Interpret Statistics for Update Operations

The actual number of DML operations that are executed on the Db2 database might not match the number of extracted DML operations that are reported by Oracle GoldenGate. Db2 does not log update statements if they do not physically change a row, so Oracle GoldenGate cannot detect them or include them in statistics.

Monitor Processing Volume

The STATS commands show you the amount of data that is being

processed by an Oracle GoldenGate process, and how fast it is being moved through the

Oracle GoldenGate system. With this information, you can diagnose suspected problems and

tune the performance of the Oracle GoldenGate processes. These commands provide a

variety of options to select and filter the output.

The STATS commands are: STATS EXTRACT, STATS REPLICAT, or STATS ER command.

You can send interim statistics to the report file at any time with the SEND EXTRACT or SEND REPLICAT command with the REPORT option.

Use the Error Log

Use the Oracle GoldenGate error log to view:

-

a history of commands

-

Oracle GoldenGate processes that started and stopped

-

processing that was performed

-

errors that occurred

-

informational and warning messages

Because the error log shows events as they occurred in sequence, it is a good tool for detecting the cause (or causes) of an error. For example, you might discover that:

-

someone stopped a process

-

a process failed to make a TCP/IP or database connection

-

a process could not open a file

To view the error log, use any of the following:

-

Standard shell command to view the

ggserr.logfile within the root Oracle GoldenGate directory -

VIEW GGSEVTcommand.

You can control the ggserr.log file behavior to:

-

Roll over the file when it reaches a maximum size, which is the default to avoid disk space issues.

-

All messages are appended to the file by all processes without regard to disk space.

-

Disable the file.

-

Route messages to another destination, such as the system log.

This behavior is controlled and described in the

ogg-ggserr.xml file in one of the following locations:

Use the Process Report

Use the process report to view (depending on the process):

-

parameters in use

-

table and column mapping

-

database information

-

runtime messages and errors

-

runtime statistics for the number of operations processed

Every Extract, Replicat process generates a report file. The report can help you diagnose problems that occurred during the run, such as invalid mapping syntax, SQL errors, and connection errors.

To view a process report, use any of the following:

-

standard shell command for viewing a text file

-

Performance Metrics Service

-

VIEW REPORTcommand. -

To view information if a process abends without generating a report, use the following command to run the process from the command shell of the operating system (not Oracle GoldenGate command line) to send the information to the terminal.

process paramfile path.prmWhere:

-

The value for

processis eitherextractorreplicat. -

The value for

path.prmis the fully qualified name of the parameter file, for example:REPLICA PARAMFILE /ogg/dirdat/repora.prm

-

By default, reports have a file extension of .rpt, for example EXTORA.rpt. The default location is the dirrpt sub-directory of the Oracle GoldenGate directory. However, these properties can be changed when the group is created. Once created, a report file must remain in its original location for Oracle GoldenGate to operate properly after processing has started.

To determine the name and location of a process report, use the INFO

EXTRACT, or INFO REPLICAT commands.

Scheduling Runtime Statistics in the Process Report

By default, runtime statistics are written to the report once, at the end of each run. For long or continuous runs, you can use optional parameters to view these statistics on a regular basis, without waiting for the end of the run.

To set a schedule for reporting runtime statistics, use the REPORT

parameter in the Extract or Replicat parameter file to specify a day and time to

generate runtime statistics in the report. See REPORT.

To send runtime statistics to the report on demand, use the SEND EXTRACT or SEND REPLICAT command with the REPORT option to view current runtime statistics when needed.

Viewing Record Counts in the Process Report

Use the REPORTCOUNT parameter to report a

count of transaction records that Extract or Replicat processed since startup. Each

transaction record represents a logical database operation that was performed within a

transaction that was captured by Oracle GoldenGate. The record count is printed to the

report file and to the screen.

Prevent SQL Errors from Filling the Replicat Report File

Use the WARNRATE parameter to set a

threshold for the number of SQL errors that can be tolerated on any target table before

being reported to the process report and to the error log. The errors are reported as a

warning. If your environment can tolerate a large number of these errors, increasing

WARNRATE helps to minimize the size of those files.

Use the Discard File

By default, a discard file is generated whenever a process is started with the

START command. The discard file captures information about Oracle

GoldenGate operations that failed. This information can help you resolve data errors,

such as those that involve invalid column mapping.

The discard file reports such information as:

-

The database error message

-

The sequence number of the data source or trail file

-

The relative byte address of the record in the data source or trail file

-

The details of the discarded operation, such as column values of a DML statement or the text of a DDL statement.

To view the discard file, use a text editor or use the VIEW REPORT command in Admin Client.

The default discard file has the following properties:

-

The file is named after the process that creates it, with a default extension of

.dsc. Example:finance.dsc. -

The file is created in the

dirrptsub-directory of the Oracle GoldenGate installation directory. -

The maximum file size is 50 megabytes.

-

At startup, if a discard file exists, it is purged before new data is written.

You can change these properties by using the DISCARDFILE parameter.

You can disable the use of a discard file by using the NODISCARDFILE

parameter.

If a process is started from the command line of the operating system, it does not

generate a discard file by default. You can use the DISCARDFILE

parameter to specify the use of a discard file and its properties.

Once created, a discard file must remain in its original location for Oracle GoldenGate to operate properly after processing has started.

Maintain Discard and Report Files

By default, discard files and report files are aged the same way. A new discard or report file is created at the start of a new process run. Old files are aged by appending a sequence number from 0 (the most recent) to 9 (the oldest) to their names.

If the active report or discard file reaches its maximum file size before the end of a run (or over a continuous run), the process abends unless there is an aging schedule in effect. Use the DISCARDROLLOVER and REPORTROLLOVER parameters to set aging schedules for the discard and report files respectively. These parameters set instructions for rolling over the files at regular intervals, in addition to when the process starts. Not only does this control the size of the files and prevent process outages, but it also provides a predictable set of archives that can be included in your archiving routine. For more information, see the following documentation:

No process ever has more than ten aged reports or discard files and one active report or discard file. After the tenth aged file, the oldest is deleted when a new report is created. It is recommended that you establish an archiving schedule for aged reports and discard files in case they are needed to resolve a service request.

Table 8-1 Current Extract and Aged Reports

| Permissions | X | Date | Report |

|---|---|---|---|

-rw-rw-rw- |

1 ggs ggs |

4384 Oct 5 14:02 |

TCUST.rpt |

-rw-rw-rw- |

1 ggs ggs |

1011 Sep 27 14:10 |

TCUST0.rpt |

-rw-rw-rw- |

1 ggs ggs |

3184 Sep 27 14:10 |

TCUST1.rpt |

-rw-rw-rw- |

1 ggs ggs |

2655 Sep 27 14:06 |

TCUST2.rpt |

-rw-rw-rw- |

1 ggs ggs |

2655 Sep 27 14:04 |

TCUST3.rpt |

-rw-rw-rw- |

1 ggs ggs |

2744 Sep 27 13:56 |

TCUST4.rpt |

-rw-rw-rw- |

1 ggs ggs |

3571 Aug 29 14:27 |

TCUST5.rpt |

Parameters Used to Interpret Synchronization Lag

The time differences between source and target systems is known as the

synchronization lage. To account for this lag, use the TCPSOURCETIMER |

NOTCPSOURCETIMER parameter in the Extract

parameter file. This parameter adjusts the timestamps of replicated records

for reporting purposes, making it easier to interpret synchronization

lag.