Downstream Extract for Downstream Database Mining

Learn about configuring downstream database mining with Oracle GoldenGate Extract and cascaded downstream database mining using Active Data Guard (ADG).

Configure Extract for a Downstream Deployment

Note:

The source and downstream database servers must have the same endianness and processor types. For example, downstream Extract will work with source Linux (x86) to a downstream Windows (x86) server, but it does not work if the source server is HP-UX but the downstream server is AIX, or the source server is HP PA-RISC but the downstream server is HP-Itanium.-

Real-Time Mining mode: In Real-time mining mode, the redo records are processed from the online or standby redo logs immediately, so, there is no delay in processing the database changes.

-

Archive-Log-Only mode: In archive log mining mode, the redo records are not processed until they are archived into a redo log file.

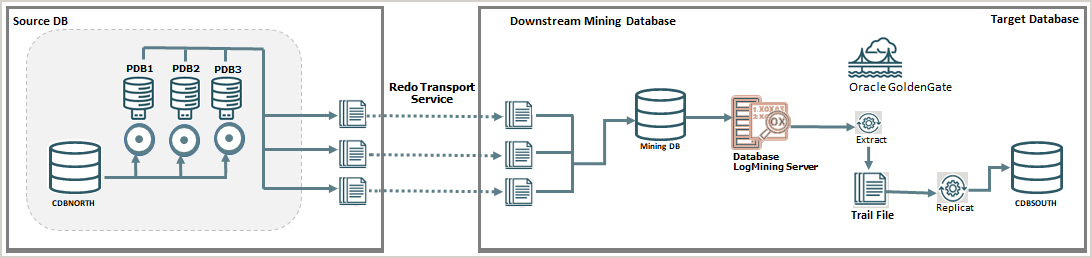

In a typical downstream deployment, the source database, where changes are made to the data, will ship it's redo logs or archive logs to a mining database where the Extract process will mine the redo logs and write out the trail files. In the following diagram, CDBNORTH is the source database and CDBSOUTH is the target database. There is a mining database between the source and target with the database logmining server that transfers redo logs from the downstream Extract to the target.

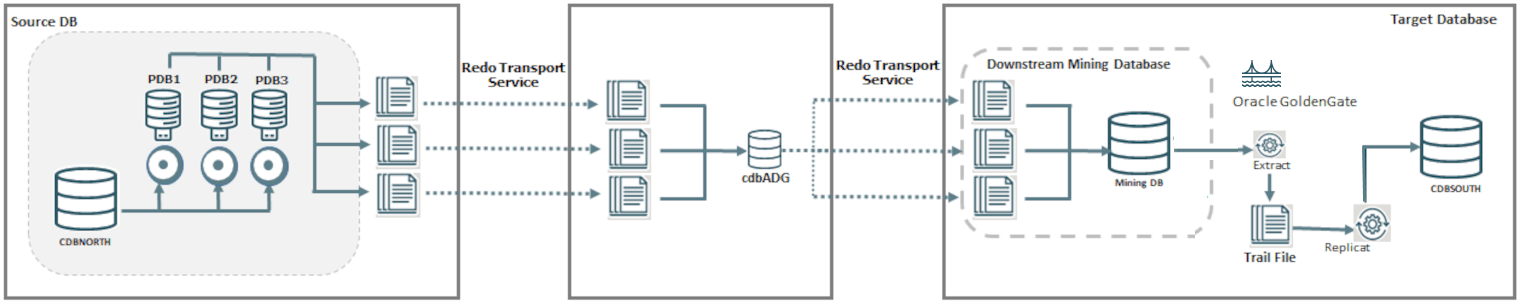

An alternative option is to use a cascading approach. If there is an Active Data Guard standby, you can have the standby ship the redo logs to the mining database instead of shipping the logs from the primary. This option should be used if there is limited network bandwidth and the standby database is in the same region or data center as the mining database.

Note:

The Extract process for Oracle cannot connect to a Data Guard standby or Active Data Guard standby to capture data.

For configuration details about setting up a cascaded downstream environment, see Enable Downstream Extract to Work with ADG.

Evaluate Extract Options for a Downstream Deployment

To configure an Extract on the downstream mining database, consider the following guidelines:

-

Multiple source databases can send their redo data to a single downstream database; however the downstream mining database can accept online redo logs from only one of those source databases. The rest of the source databases must ship archived logs.

-

When online logs are shipped to the downstream database, real-time capture by Extract is possible. Changes are captured as though Extract is reading from the source logs. In order to accept online redo logs from a source database, the downstream mining database must have standby redo logs configured.

-

When using a downstream mining configuration, the source database and mining database must be the same endian and same bit size, which is 64 bits. For example, if the source database was on Linux 64-bit, you can have the mining database run on Windows 64-bit, because they have the same endian and bit size.

-

The initialization parameter

db_block_sizemust be same between source database and mining database.

Prepare the Source Database for the Downstream Deployment

There must be an Extract user on the source database. Extract uses the credentials of this user to do metadata queries and to fetch column values as needed from the source database.

Add the credentials for connecting Extract to the source database from the Microservices Architecture web interface.

Topics:

Add Database Credentials to Connect to the Source Database

To create and run Extract and Replicat processes, you need to set up database credentials to connect Extract/Replicat users to the respective source or target databases.

- Launch the Administration Service interface and log in.

- Click Configuration from the Application Navigation pane.

- Click the plus sign (+) sign next to Credentials.

- Enter the following details in the displayed fields:

Database Credential Options Description Credential Domain Specify a domain name to which the database credential is associated. For example, "OracleGoldenGate" is the default domain name, incase you don't specify a domain name. Credential Alias This is the alias for your database credential. User ID This is the username of the database user.

For Oracle database, if you use the EZconnect syntax to connect to the database, then you can specify the value in this field in the following manner:

dbusername@hostname:port/service_namedbusernameis the database user name.hostnameor IP address of the server where the database is running.portis the port number for connecting to the database server. Usually, this value is 1521.service_nameis the name of the service provided in the tnsnames.ora file for the database connection.Password Password used by database user to log in to the database. - Click Submit.

- Click the Connect to database icon to test that the connection is working correctly. If the connection is successful, the Connect to database icon turns blue.

When you successfully log into your database, you can add and manage checkpoint tables, transaction information (TRANDATA), and heartbeat tables. All of the tables can be searched using the various search fields. As you type, the table is filtered and you can use the search button with the search text.

Configure Redo Transport from Source Database to Downstream Mining Database

To set up the transfer of redo log files from a source database to the downstream mining database, and to prepare the downstream mining database to accept these redo log files, perform the steps given in this topic.

The following summarizes the rules for supporting multiple sources sending redo to a single downstream mining database:

- Only one source database can be configured to send online redo to the standby

redo logs at the downstream mining database. The

log_archive_dest_nsetting for this source database should not have aTEMPLATEclause. - Source databases that are not sending online redo to the standby redo logs of

the downstream mining database must have a

TEMPLATEclause specified in thelog_archive_dest_nparameter. - Each of the source databases that sends redo to the downstream mining database

must have a unique

DBID. You can select theDBIDcolumn from thev$databaseview of these source databases to ensure that the DBIDs are unique. - The

FAL_SERVERvalue must be set to the downstream mining database.FAL_SERVERspecifies theFAL(fetch archive log) server for a standby database. The value is a list of Oracle Net service names, which are assumed to be configured properly on the standby database system to point to the desired FAL servers. The list contains the net service name of any database that can potentially ship redo to the downstream database. - When using redo transport, there could be a delay in processing redo due to network latency. For Extract, this latency is monitored by measuring the delay between LCRs received from source database and reporting it. If the latency exceeds a threshold, a warning message appears in the report file and a subsequent information message appears when the lag drops to normal values. The default value for the threshold is 10 seconds.

Note:

The archived logs shipped from the source databases are called foreign archived logs. You must not use the recovery area at the downstream mining database to store foreign archived logs. Such a configuration is not supported by Extract. Foreign archived logs stored in the Flash Recovery Area (FRA) are not automatically deleted by RMAN jobs. These archived logs must be manually purged.These instructions take into account the requirements to ship redo from multiple sources, if required. You must configure an Extract process for each of those sources.

To configure redo transport:

- Configure database connection to connect the source database with the mining database.

- Configure authentication at each source database and at the downstream mining database to support the transfer of redo data. Redo transport sessions are authenticated using either the Secure Sockets Layer (SSL) protocol or a remote login password file. If a source database has a remote login password file, copy it to the appropriate directory of the mining database system. The password file must be the same at all source databases, and at the mining database.

- At each source database, configure one

LOG_ARCHIVE_DEST_ninitialization parameter to transmit redo data to the downstream mining database. Set the attributes of this parameter as shown in one of the following examples, depending on whether real-time or archived-log-only capture mode is to be used.- Example for real-time capture at the downstream

logmining server, where the source database sends its online redo

logs to the downstream

database:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DBMSCAP.EXAMPLE.COM ASYNC NOREGISTER VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)DB_UNIQUE_NAME=dbmscap'

- Example for archived-log-only capture at the downstream

logmining

server:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DMBSCAP.EXAMPLE.COM ASYNC NOREGISTER VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE) TEMPLATE=/usr/oracle/log_for_dbms1/dbms1_arch_%t_%s_%r.log DB_UNIQUE_NAME=dbmscap'

Note:

When using an archived-log-only downstream mining database, you must specify a value for theTEMPLATEattribute. Oracle also recommends that you use theTEMPLATEclause in the source databases so that the log files from all remote source databases are kept separated from the local database log files, and from each other. - Example for real-time capture at the downstream

logmining server, where the source database sends its online redo

logs to the downstream

database:

- At the source database, set a value of

ENABLEfor theLOG_ARCHIVE_DEST_STATE_ninitialization parameter that corresponds with theLOG_ARCHIVE_DEST_nparameter that corresponds to the destination for the downstream mining database, as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE - At the source database, and at the downstream mining database, set the

DG_CONFIGattribute of theLOG_ARCHIVE_CONFIGinitialization parameter to include theDB_UNIQUE_NAMEof the source database and the downstream database, as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms1,dbmscap)'

Prepare the Downstream Mining Database to Receive Online Redo Logs

A downstream mining database can accept both archived logs and online redo logs from a source database.

Topics:

Creating the Downstream Mining User Account

When using a downstream mining configuration, there must be an Extract mining user on the downstream database. The mining Extract process uses the credentials of this user to interact with the downstream logmining server.

The downstream mining user is specified by the

TRANLOGOPTIONS parameter with the

MININGUSERALIAS option.

See Add Database Credentials to Connect to the Source Database to assign the correct credentials for the version of your database.

Configure the Mining Database to Archive Local Redo Log Files

This procedure configures the downstream mining database to archive redo data in its online redo logs. These are redo logs that are generated at the downstream mining database.

Archiving must be enabled at the downstream mining database if you want to run Extract in real-time integrated capture mode, but it is also recommended for archive-log-only capture. Extract in integrated capture mode writes state information in the database. Archiving and regular backups will enable you to recover this state information in case there are disk failures or corruption at the downstream mining database.

To Archive Local Redo Log Files:

- Alter the downstream mining database to be in archive log mode. You can do this

by issuing the

following

DDL.STARTUP MOUNT; ALTER DATABASE ARCHIVELOG; ALTER DATABASE OPEN;

- At the downstream mining database, set the first archive log destination in the

LOG_ARCHIVE_DEST_ninitialization parameter as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION=/home/arc_dest/local VALID_FOR=(ONLINE_LOGFILE,PRIMARY_ROLE)'

Alternatively, you can use a command like this example:ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION='USE_DB_RECOVERY_FILE_DEST' valid_for=(ONLINE_LOGFILE,PRIMARY_ROLE)'

Note:

The online redo logs generated by the downstream mining database can be archived to a recovery area. However, you must not use the recovery area of the downstream mining database to stage foreign archived logs or to archive standby redo logs. For information about configuring a fast recovery area, see the Oracle Database Backup and Recovery User’s Guide.

- Enable the local archive destination.

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_1=ENABLE

Configure the Wallet for the Downstream Mining Database

When TDE is enabled on source database and downstream database, then the source wallet or keys should be the same on the downstream mining database and the source database.

Follow these steps to copy the wallet directory from the source database to the downstream mining database:

-

Shutdown the downstream database using the shutdown immediate command.

-

Remove the wallet directory in the downstream database:

rm $OGG_DS_HOME/wallet/* -

Copy the

$OGG_DS_HOME/wallet/*from the source database view to the downstream database view. -

Restart the downstream database.

-

Run checksum on the source database view and downstream database view to ensure that it matches:

cksum $OGG_DS_HOME/wallet/*

Prepare a Downstream Mining Database for Real-time Capture

Topics:

Create the Standby Redo Log Files

The following steps outline the procedure for adding standby redo log files to the downstream mining database. The following summarizes the rules for creating the standby redo logs:

-

Each standby redo log file must be at least as large as the largest redo log file of the redo source database. For administrative ease, Oracle recommends that all redo log files at source database and the standby redo log files at the downstream mining database be of the same size.

-

The standby redo log must have at least one more redo log group than the redo log at the source database, for each redo thread at the source database.

The specific steps and SQL statements that are required to add standby redo log files depend on your environment. See Oracle Data Guard Concepts and Administration Guide for detailed instructions about adding standby redo log files to a database.

Note:

If there are multiple source databases sending redo to a single downstream mining database, only one of those sources can send redo to the standby redo logs of the mining database. An Extract process that mines the redo from this source database can run in real-time mode. All other source databases must send only their archived logs to the downstream mining database, and the Extracts that read this data must be configured to run in archived-log-only mode.To create the standby redo log files:

-

In SQL*Plus, connect to the source database as an administrative user.

-

Determine the size of the source log file. Make note of the results.

SELECT BYTES FROM V$LOG; -

Determine the number of online log file groups that are configured on the source database. Make note of the results.

SELECT COUNT(GROUP#) FROM V$LOG; -

Connect to the downstream mining database as an administrative user.

-

Add the standby log file groups to the mining database. The standby log file size must be at least the size of the source log file size. The number of standby log file groups must be at least one more than the number of source online log file groups. This applies to each instance (thread) in a RAC installation. So if you have "n" threads at the source database, each having "m" redo log groups, you should configure n*(m+1) redo log groups at the downstream mining database.

The following example shows three standby log groups.

ALTER DATABASE ADD STANDBY LOGFILE GROUP 3 ('/oracle/dbs/slog3a.rdo', '/oracle/dbs/slog3b.rdo') SIZE 500M; ALTER DATABASE ADD STANDBY LOGFILE GROUP 4 ('/oracle/dbs/slog4.rdo', '/oracle/dbs/slog4b.rdo') SIZE 500M; ALTER DATABASE ADD STANDBY LOGFILE GROUP 5 ('/oracle/dbs/slog5.rdo', '/oracle/dbs/slog5b.rdo') SIZE 500M; -

Confirm that the standby log file groups were added successfully.

SELECT GROUP#, THREAD#, SEQUENCE#, ARCHIVED, STATUS FROM V$STANDBY_LOG;

The output should be similar to the following:

GROUP# THREAD# SEQUENCE# ARC STATUS ---------- ---------- ---------- --- ---------- 3 0 0 YES UNASSIGNED 4 0 0 YES UNASSIGNED 5 0 0 YES UNASSIGNED

-

Ensure that log files from the source database are appearing in the location that is specified in the

attribute of the localLOCATION

that you set. You might need to switch the log file at the source database to see files in the directory.LOG_ARCHIVE_DEST_n

Configure the Database to Archive Standby Redo Log Files Locally

This procedure configures the downstream mining database to archive the standby redo logs that receive redo data from the online redo logs of the source database. Keep in mind that foreign archived logs should not be archived in the recovery area of the downstream mining database.

To Archive Standby Redo Logs Locally:

- At the downstream mining database, set the second archive log destination in the

LOG_ARCHIVE_DEST_ninitialization parameter as shown in the following example.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='LOCATION=/home/arc_dest/srl_dbms1 VALID_FOR=(STANDBY_LOGFILE,PRIMARY_ROLE)'Oracle recommends that foreign archived logs (logs from remote source databases) be kept separate from local mining database log files, and from each other. You must not use the recovery area of the downstream mining database to stage foreign archived logs.

- Enable the

LOG_ARCHIVE_DEST_2parameter you set in the previous step as shown in the following example.ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE

Enable Downstream Extract to Work with ADG

In a cascaded downstream capture environment, the downstream database does not connect directly to the source database. It uses the Active Data Guard (ADG) as a reference.

Extract must be started using the sourceless option so that it does not

connect to the source database and instead connects to ADG using

FETCHUSERID or FETCHUSERIDALIAS when it needs

to fetch any non-native datatypes. For example, FETCH operations are processed on

the ADG database as this instance is open in read-only mode. Other operations that

cannot be processed on the ADG instance, such as creating the dictionary build, are

redirected from the ADG to the source database.

-

SCHEMATRANDATA -

TRANDATA -

FLUSH SEQUENCE -

TRACETABLE -

HEARTBEATTABLE -

REGISTER EXTRACT

Note:

SCHEMATRANDATA and TRANDATA, even though

the command is executed on the standby redo log, the actual log groups are

created and maintained on the primary database where the actual DML operations

take place.

EXTRACT EXTDSC

NOUSERID

TRANLOGOPTIONS MININGUSERALIAS cgg_cdbDSC_src DOMAIN OracleGoldenGate

TRANLOGOPTIONS INTEGRATEDPARAMS (DOWNSTREAM_REAL_TIME_MINE Y) FETCHUSERIDALIAS cgg_cdbADG_src DOMAIN OracleGoldenGate

EXTTRAIL cascade/ea

SOURCECATALOG CDBNORTH_PDB01

DDL INCLUDE MAPPED

TABLE HR.*;Here are the steps to enable downstream Extract to work with ADG Standby:

-

Add an additional

LOG_ARCHIVE_DESTINATION_N (LAD)on the ADG standby, as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_DEST_3='service=mining_db_service_name ASYNC NOREGISTER VALID_FOR(STANDBY_LOGFILES,STANDBY_ROLES) DB_UNIQUE_NAME=3rd_db_unique_name' scope=bothThis step transports and generates the

standby_logfilesfor an ADG standby. -

Set the

LOG_ARCHIVE_CONFIGon the ADG standby to ship the logs to the mining database, as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='dg_config' scope=both;db_configis the database unique name of the first, second, and third databases. -

On the mining database, set up the location to store the incoming

standby_logfileson the mining database:ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='location= DB_RECOVERY_FILE_DEST VALID_FOR=(STANDBY_LOGFILE,ALL_ROLES)' scope=bothThe location is the database recovery file destination.

-

Run

LOG_ARCHIVE_CONFIGon the mining database, so that the Extract process is able to read them on the mining database, as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='dg_config' scope=bothHere, db_config is the database unique name of the first, second, and third databases.

-

For a downstream Extract, you need to ensure that the database connections are appropriately configured. When registering the Extract, make sure that

DBLOGINconnection is made to the ADG Standby, that is open for read-only activity. -

To add the Extract and register it, use the following command:

DBLOGIN USERID ggadmin@cdbADG_src, PASSWORD ggadmin MININGDBLOGIN USERID ggadmin@cgg_cdbDSC, password ggadmincdbADG_srcis the ADG not primary.cgg_cdbDSCis the mining database. - Now, register an Extract that uses the

NOUSERIDparameter:ADD EXTRACT exte, INTEGRATED TRANLOG, BEGIN NOW REGISTER EXTRACT exte DATABASE - After the Extract is registered, you can use this Extract to mine data and start the Extract normally.

Use Cases for Downstream Mining Configuration

Read about the different downstream mining configuration use cases.

Topics:

Case 1: Capture from One Source Database in Real-time Mode

This example captures changes from source database DBMS1 by deploying an Extract at a

downstream mining database DBMSCAP.

The example assumes that you created the necessary standby redo log files as shown in Configure Extract for a Downstream Deployment.

This assumes that the following users exist:

- User

GGADM1in DBMS1 whose credentials Extract will use to fetch data and metadata from DBMS1. This user has the alias of ggadm1 in the Oracle GoldenGate credential store and logs in asggadm1@dbms1. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user at the source database. - User

GGADMCAPinDBMSCAPwhose credentials Extract will use to retrieve logical change records from the logmining server at the downstream mining databaseDBMSCAP. This user has the alias of ggadmcap in the Oracle GoldenGate credential store and logs in asggadmcap@dbmscap. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user at the mining database.

Topics:

Prepare the Mining Database to Archive its Local Redo

To prepare the mining database to archive its local redo:

- The downstream mining database must be in archive log mode. You can

do this by issuing the following

DDL:

STARTUP MOUNT; ALTER DATABASE ARCHIVELOG; ALTER DATABASE OPEN; - At the downstream mining database, set

log_archive_dest_1to archive local redo:ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION=/home/arc_dest/local VALID_FOR=(ONLINE_LOGFILE, PRIMARY_ROLE)' - Enable

log_archive_dest_1.ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_1=ENABLE

Prepare the Mining Database to Archive Redo Received in Standby Redo Logs from the Source Database

To prepare the mining database to archive the redo received in standby redo logs from the source database:

- At the downstream mining database, set log_archive_dest_2 as shown in the

following

example:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='LOCATION=/home/arc_dest/srl_dbms1 VALID_FOR=(STANDBY_LOGFILE,PRIMARY_ROLE)' - Enable log_archive_dest_2 as shown in the following

example:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE - Set

DG_CONFIGat the downstream mining database:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms1,dbmscap)'

Prepare the Source Database to Send Redo to the Mining Database

To prepare the source database to send redo to the mining database::

- Make sure that the source database is running with the required

compatibility:

The minimum compatibility setting required from integrated capture is 11.1.0.0.0.select name, value from v$parameter where name = 'compatible'; - Set

DG_CONFIGat the source database:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms1,dbmscap)'; - Set up redo transport at the source

database..

ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DBMSCAP.EXAMPLE.COM ASYNC OPTIONAL NOREGISTER VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)DB_UNIQUE_NAME=dbmscap'; - Enable the downstream

destination.

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

Set up Extract (ext1) on DBMSCAP

To set up Extract (ext1) on DBMSCAP:

- Register Extract with the downstream mining database. In the

credential store, the alias name of

ggadm1is linked to a user connect string ofggadm1@dbms1. The alias name ofggadmcapis linked to a user connect string ofggadmcap@dbmscap.DBLOGIN USERIDALIAS ggadm1MININGDBLOGIN USERIDALIAS ggadmcapREGISTER EXTRACT ext1 DATABASE - Create Extract at the downstream mining

database:

ADD EXTRACT ext1 INTEGRATED TRANLOG BEGIN NOW - Edit Extract parameter file

ext1.prm. The following lines must be present to take advantage of real-time capture. In the credential store, the alias name ofggadm1is linked to a user connect string ofggadm1@dbms1. The alias name ofggadmcapis linked to a user connect string ofggadmcap@dbmscap.USERIDALIAS ggadm1 TRANLOGOPTIONS MININGUSERALIAS ggadmcap TRANLOGOPTIONS INTEGRATEDPARAMS (downstream_real_time_mine Y) - Start

Extract.

START EXTRACT ext1

Note:

You can create multiple Extracts running in real-time Extract mode in the downstream mining database, as long as they all are capturing data from the same source database, such as capturing changes for database DBMS1 in the preceding example.Case 2: Capture from Multiple Sources in Archive-log-only Mode

The following example captures changes from database DBMS1 and

DBMS2 by deploying an Extract at a downstream mining database

DBMSCAP.

It assumes the following users:

-

User

GGADM1inDBMS1whose credentials Extract will use to fetch data and metadata from DBMS1. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user atDBMS1. -

User

GGADM2inDBMS2whose credentials Extract will use to fetch data and metadata fromDBMS2. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user at DBMS2. -

User

GGADMCAPinDBMSCAPwhose credentials Extract will use to retrieve logical change records from the logmining server at the downstream mining database. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user at the downstream mining databaseDBMSCAP.

This procedure also assumes that the downstream mining database is configured in archive log mode.

Topics:

Prepare the Mining Database to Archive its Local Redo

To prepare the mining database to archive its local redo:

- The downstream mining database must be in archive log mode. You can

do this by issuing the following

DDL.

STARTUP MOUNT; ALTER DATABASE ARCHIVELOG; ALTER DATABASE OPEN; - At the downstream mining database, set

log_archive_dest_1to archive local redo.ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION=/home/arc_dest/local VALID_FOR=(ONLINE_LOGFILE, PRIMARY_ROLE)' - Enable

log_archive_dest_1.ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_1=ENABLE - Start

Extract.

START EXTRACT ext1

Note:

You can create multiple Extracts running in real-time Extract mode in the downstream mining database, as long as they all are capturing data from the same source database, such as capturing changes for database DBMS1 in the preceding example.Prepare the Mining Database to Archive Redo from the Source Database

Set DG_CONFIG

ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms1,dbms2, dbmscap)'Topics:

Prepare the First Source Database to Send Redo to the Mining Database

To prepare the first source database to send redo to the mining database:

- Make certain that DBMS1 source database is running with the required

compatibility.

The minimum compatibility setting required from capture is 11.1.0.0.0.select name, value from v$parameter where name = 'compatible'; NAME VALUE --------- --------------------- compatible 11.1.0.0.0 - Set

DG_CONFIGat DBMS1 source database.ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms1, dbmscap)'; - Set up redo transport at DBMS1 source database. The

TEMPLATEclause is mandatory if you want to send redo data directly to foreign archived logs at the downstream mining database.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DBMSCAP.EXAMPLE.COM ASYNC OPTIONAL NOREGISTER TEMPLATE='/usr/orcl/arc_dest/dbms1/dbms1_arch_%t_%s_%r.log VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)DB_UNIQUE_NAME=dbmscap'; - Enable the downstream

destination.

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

Note:

You can create multiple Extracts running in real-time Extract mode in the downstream mining database, as long as they all are capturing data from the same source database, such as capturing changes for database DBMS1 in the preceding example.Prepare the Second Source Database to Send Redo to the Mining Database

To prepare the second source database to send redo to the mining database:

- Make sure that DBMS2 source database is running with the required

compatibility.

The minimum compatibility setting required from capture is 11.1.0.0.0.select name, value from v$parameter where name = 'compatible'; NAME VALUE --------- --------------------- compatible 11.1.0.0.0 - Set

DG_CONFIGat DBMS2 source database.ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms2, dbmscap)'; - Set up redo transport at DBMS2 source database. The

TEMPLATEclause is mandatory if you want to send redo data directly to foreign archived logs at the downstream mining database.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DBMSCAP.EXAMPLE.COM ASYNC OPTIONAL NOREGISTER TEMPLATE='/usr/orcl/arc_dest/dbms2/dbms2_arch_%t_%s_%r.log VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)DB_UNIQUE_NAME=dbmscap'; - Enable the downstream

destination.

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

Note:

You can create multiple Extracts running in real-time Extract mode in the downstream mining database, as long as they all are capturing data from the same source database, such as capturing changes for database DBMS1 in the preceding example.Case 3: Capturing from Multiple Sources with Mixed Real-time and Archive-log-only Mode

The following example captures changes from database DBMS1, DBMS2 and

DBMS3 by deploying an Extract at a downstream mining database

DBMSCAP.

Note:

This example assumes that you created the necessary standby redo log files as shown in Prepare the Downstream Mining Database to Receive Online Redo Logs.It assumes the following users:

-

User

GGADM1inDBMS1whose credentials Extract will use to fetch data and metadata from DBMS1. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user atDBMS1. -

User

GGADM2inDBMS2whose credentials Extract will use to fetch data and metadata fromDBMS2. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user at DBMS2. - User

GGADM3inDBMS3whose credentials Extract will use to fetch data and metadata fromDBMS3. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user atDBMS3. -

User

GGADMCAPinDBMSCAPwhose credentials Extract will use to retrieve logical change records from the logmining server at the downstream mining database. It is assumed that theDBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE()procedure was called to grant appropriate privileges to this user at the downstream mining databaseDBMSCAP.

This procedure also assumes that the downstream mining database is configured in archive log mode.

In this example, the redo sent by DBMS3 will be mined

in real time mode, whereas the redo data sent from DBMS1 and

DBMS2 will be mined in archive-log-only mode.

Topics:

Prepare the Mining Database to Archive its Local Redo

To prepare the mining database to archive its local redo:

- The downstream mining database must be in archive log mode. You can

do this by issuing the following

DDL:

STARTUP MOUNT; ALTER DATABASE ARCHIVELOG; ALTER DATABASE OPEN; - At the downstream mining database, set

log_archive_dest_1to archive local redo.:ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION=/home/arc_dest/local VALID_FOR=(ONLINE_LOGFILE, PRIMARY_ROLE)' - Enable

log_archive_dest_1.ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_1=ENABLE

Prepare the Mining Database to Accept Redo from the Source Databases

Because redo data is being accepted in the standby redo logs of the downstream mining database, the appropriate number of correctly sized standby redo logs must exist. If you did not configure the standby logs, see Create the Standby Redo Log Files.

- At the downstream mining database, set the second archive log

destination in the

LOG_ARCHIVE_DEST_ninitialization parameter as shown in the following example. This is needed to handle archive standby redo logs.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='LOCATION=/home/arc_dest/srl_dbms3 VALID_FOR=(STANDBY_LOGFILE,PRIMARY_ROLE)' - Enable the

LOG_ARCHIVE_DEST_STATE_2initialization parameter that corresponds with theLOG_ARCHIVE_DEST_2parameter as shown in the following example:ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE - Set

DG_CONFIGat the downstream mining database to accept redo data from all of the source databases.ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms1, dbms2, dbms3, dbmscap)'

Prepare the First Source Database to Send Redo to the Mining Database

To prepare the first source database to send redo to the mining database:

- Make certain that DBMS1 source database is running with the required

compatibility.

select name, value from v$parameter where name = 'compatible'; - Set

DG_CONFIG at DBMS1source database:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms1, dbmscap)'; - Set up redo transport at

DBMS1source database. TheTEMPLATEclause is mandatory if you want to send redo data directly to foreign archived logs at the downstream mining database.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DBMSCAP.EXAMPLE.COM ASYNC OPTIONAL NOREGISTER TEMPLATE='/usr/orcl/arc_dest/dbms1/dbms1_arch_%t_%s_%r.log VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)DB_UNIQUE_NAME=dbmscap'; - Enable the downstream

destination:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

Prepare the Second Source Database to Send Redo to the Mining Database

To prepare the second source database to send redo to the mining database::

- Make sure that DBMS2 source database is running with the required

compatibility.

select name, value from v$parameter where name = 'compatible'; - Set

DG_CONFIGat DBMS2 source database:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms2, dbmscap)'; - Set up redo transport at

DBMS2source database. TheTEMPLATEclause is mandatory if you want to send redo data directly to foreign archived logs at the downstream mining database.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DBMSCAP.EXAMPLE.COM ASYNC OPTIONAL NOREGISTER TEMPLATE='/usr/orcl/arc_dest/dbms2/dbms2_arch_%t_%s_%r.log VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)DB_UNIQUE_NAME=dbmscap'; - Enable the downstream

destination:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

Prepare the Second Source Database to Send Redo to the Mining Database

To prepare the second source database to send redo to the mining database::

- Make sure that DBMS2 source database is running with the required

compatibility.

select name, value from v$parameter where name = 'compatible'; - Set

DG_CONFIGat DBMS2 source database:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms2, dbmscap)'; - Set up redo transport at

DBMS2source database. TheTEMPLATEclause is mandatory if you want to send redo data directly to foreign archived logs at the downstream mining database.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DBMSCAP.EXAMPLE.COM ASYNC OPTIONAL NOREGISTER TEMPLATE='/usr/orcl/arc_dest/dbms2/dbms2_arch_%t_%s_%r.log VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)DB_UNIQUE_NAME=dbmscap'; - Enable the downstream

destination:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

Prepare the Third Source Database to Send Redo to the Mining Database

To prepare the third source database to send redo to the mining database:

- Make sure that DBMS3 source database is running with the required

compatibility.

select name, value from v$parameter where name = 'compatible'; NAME VALUE --------- --------------------- compatible 11.1.0.0.0The minimum compatibility setting required from capture is 11.1.0.0.0.

- Set

DG_CONFIGat DBMS3 source database:ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(dbms3, dbmscap)'; - Set up redo transport at DBMS3 source database. Because DBMS3 is

the source that will send its online redo logs to the standby redo logs at the

downstream mining database, do not specify a

TEMPLATEclause.ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE=DBMSCAP.EXAMPLE.COM ASYNC OPTIONAL NOREGISTER VALID_FOR=(ONLINE_LOGFILES,PRIMARY_ROLE)DB_UNIQUE_NAME=dbmscap'; - Enable the downstream

destination:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;

Topics:

Set up Extracts at Downstream Mining Database

These steps set up Extract at the downstream database to capture from the archived logs sent by DBMS1 and DBMS2.

Topics:

Set up Extract (ext1) to Capture Changes from Archived Logs Sent by DBMS1

Perform the following steps on the DBMSCAP downstream mining database:

- Register Extract with

DBMSCAPfor the DBMS1 source database. In the credential store, the alias name ofggadm1is linked to a user connect string ofggadm1@dbms1.The alias name ofggadmcapis linked to a user connect string ofggadmcap@dbmscap.DBLOGIN USERIDALIAS ggadm1 MININGDBLOGIN USERIDALIAS ggadmcap REGISTER EXTRACT ext1 DATABASE - Add Extract at the mining database

DBMSCAP:ADD EXTRACT ext1 INTEGRATED TRANLOG BEGIN NOW - Edit the Extract parameter file

ext1.prm. In the credential store, the alias name of ggadm1 is linked to a user connect string ofggadm1@dbms1. The alias name ofggadmcapis linked to a user connect string ofggadmcap@dbmscap:USERIDALIAS ggadm1 TRANLOGOPTIONS MININGUSERALIAS ggadmcap TRANLOGOPTIONS INTEGRATEDPARAMS (downstream_real_time_mine N) - Start Extract:

START EXTRACT ext1

Set up Extract (ext2) to Capture Changes from Archived Logs Sent by DBMS2

Perform the following steps on the DBMSCAP downstream mining database:

- Register Extract with the mining database for source database DBMS2.

In the credential store, the alias name of

ggadm2is linked to a user connect string ofggadm2@dbms2.The alias name of ggadmcap is linked to a user connect string ofggadmcap@dbmscap.DBLOGIN USERIDALIAS ggadm2 MININGDBLOGIN USERIDALIAS ggadmcap REGISTER EXTRACT ext2 DATABASE - Create Extract at the mining

database:

ADD EXTRACT ext2 INTEGRATED TRANLOG, BEGIN NOW - Edit the Extract parameter file

ext2.prm. In the credential store, the alias name of ggadm2 is linked to a user connect string ofggadm2@dbms2. The alias name ofggadmcapis linked to a user connect string ofggadmcap@dbmscap:USERIDALIAS ggadm2 TRANLOGOPTIONS MININGUSERALIAS ggadmcap TRANLOGOPTIONS INTEGRATEDPARAMS (downstream_real_time_mine N) - Start Extract:

START EXTRACT ext2

Set up Extract (ext3) to Capture Changes in Real-time Mode from Online Logs Sent by DBMS3

Perform the following steps on the DBMSCAP downstream mining database:

- Register Extract with the mining database for source database DBMS3.

In the credential store, the alias name of

ggadm3is linked to a user connect string ofggadm3@dbms3.The alias name of ggadmcap is linked to a user connect string ofggadmcap@dbmscap.DBLOGIN USERID ggadm3 MININGDBLOGIN USERID ggadmcap REGISTER EXTRACT ext3 DATABASE

- Create Extract at the mining

database:

ADD EXTRACT ext3 INTEGRATED TRANLOG, BEGIN NOW - Edit the Extract parameter file

ext3.prm. In the credential store, the alias name of ggadm3 is linked to a user connect string ofggadm3@dbms3. The alias name ofggadmcapis linked to a user connect string ofggadmcap@dbmscap:USERIDALIAS ggadm3 TRANLOGOPTIONS MININGUSERALIAS ggadmcap TRANLOGOPTIONS INTEGRATEDPARAMS (downstream_real_time_mine N) - Start Extract:

START EXTRACT ext3

Note:

You can create multiple Extracts running in real-time integrated capture mode in the downstream mining database, as long as they all are capturing data from the same source database, such as all capturing for database DBMS3 in the preceding example.