7.5 Realtime Parquet Ingestion into AWS S3 Buckets with Oracle GoldenGate for Distributed Applications and Analytics

This topic walks through the steps to ingest Parquet files into AWS S3 buckets in real time with Oracle GoldenGate for Distributed Applications and Analytics (GG for DAA).


7.5.1 Install Dependency Files

Oracle GoldenGate for Big Data uses client libraries in the replication process. Before setting up the replication process, download these libraries by using the Dependency Downloader utility available in Oracle GoldenGate for Big Data. Dependency Downloader is a set of shell scripts that download dependency JAR files from Maven and other repositories.

Oracle GoldenGate for Big Data uses a three-step process to ingest Parquet into S3 buckets:

- Generating local files from trail files (these local files are not accessible in OCI GoldenGate)

- Converting local files to Parquet format

- Loading files into AWS S3 buckets

Oracle GoldenGate for Big Data uses three different sets of client libraries to create Parquet files and load them into AWS S3.

To install the required dependency files:

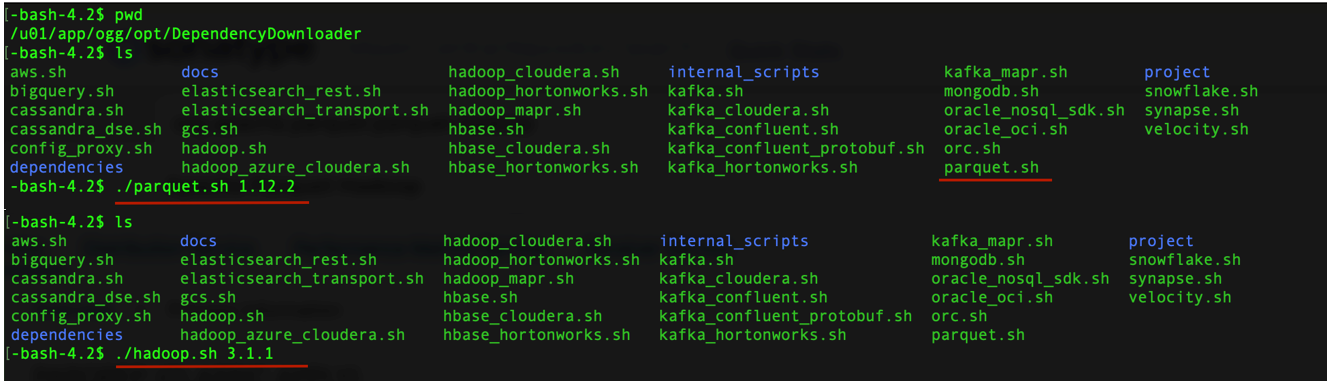

- Go to installation location of Dependency Downloader:

GG_HOME/opt/DependencyDownloader/. - Execute

parquet.sh(see Parquet),hadoop.sh(see HDFS Event Handler, andaws.shwith the required versions.A directory is created inGG_HOME/opt/DependencyDownloader/dependencies. For example,/u01/app/ogg/opt/DependencyDownloader/dependencies/aws_sdk_1.12.30.The following directories are created inFigure 7-26 Executing parquet.sh, hadoop.sh, and aws.sha with the required versions.

GG_HOME/opt/DependencyDownloader/dependencies:/u01/app/ogg/opt/DependencyDownloader/dependencies/aws_sdk_1.12.309/u01/app/ogg/opt/DependencyDownloader/dependencies/hadoop_3.3.0/u01/app/ogg/opt/DependencyDownloader/dependencies/parquet_1.12.3

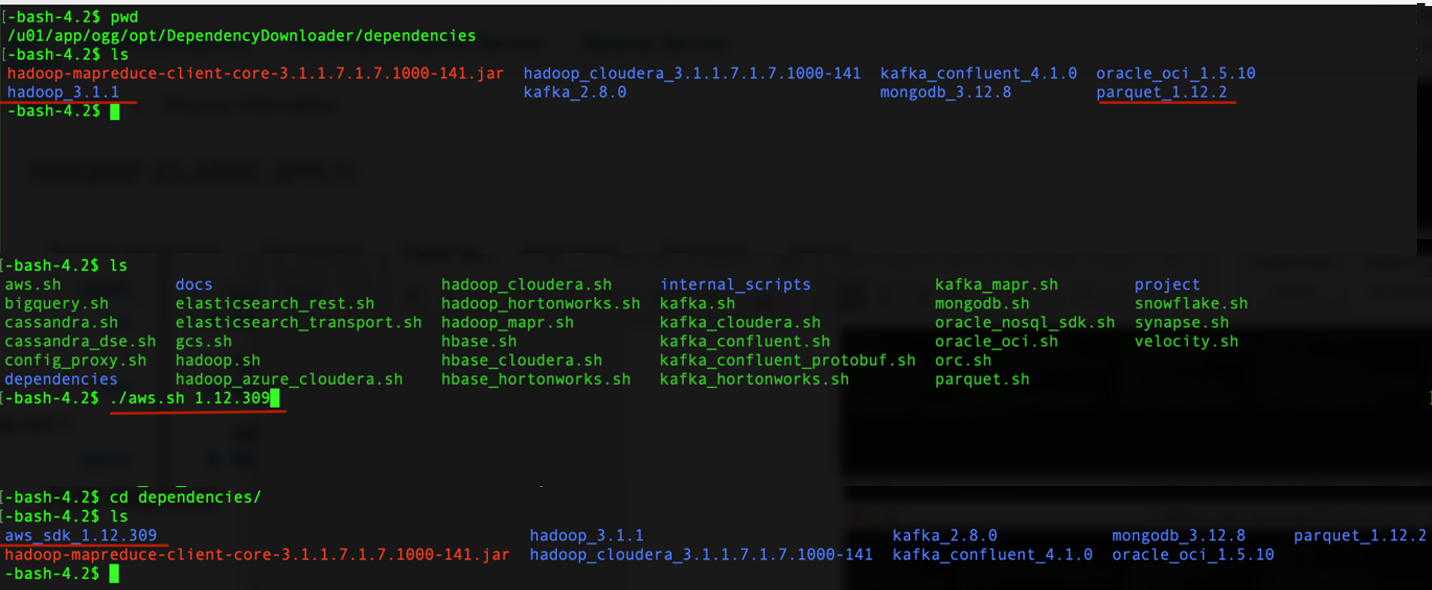

Figure 7-27 S3 Directories
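The dependency directories are needed later for the gg.classpath property of the Replicat. As a minimal sketch, assuming the example installation location /u01/app/ogg and the directory names shown in this section, the following Python helper joins the three directories into a colon-separated classpath with a `/*` wildcard for each directory. The helper name `build_classpath` is hypothetical and is not part of Oracle GoldenGate; adjust the paths and versions to your own installation.

```python
import posixpath


def build_classpath(gg_home, dependency_dirs):
    """Join dependency directories into a Linux-style gg.classpath value."""
    base = posixpath.join(gg_home, "opt/DependencyDownloader/dependencies")
    # One wildcard entry per dependency directory, separated by colons.
    entries = [posixpath.join(base, name, "*") for name in dependency_dirs]
    return ":".join(entries)


# Assumed example values taken from the directories listed in this section.
classpath = build_classpath(
    "/u01/app/ogg",
    ["hadoop_3.3.0", "parquet_1.12.3", "aws_sdk_1.12.309"],
)
print("gg.classpath=" + classpath)
```

Note the printed line so that you can paste it into the Replicat properties file when you reach the gg.classpath property.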

7.5.2 Create a Replicat in Oracle GoldenGate for Big Data

To create a replicat in Oracle GoldenGate for Big Data:

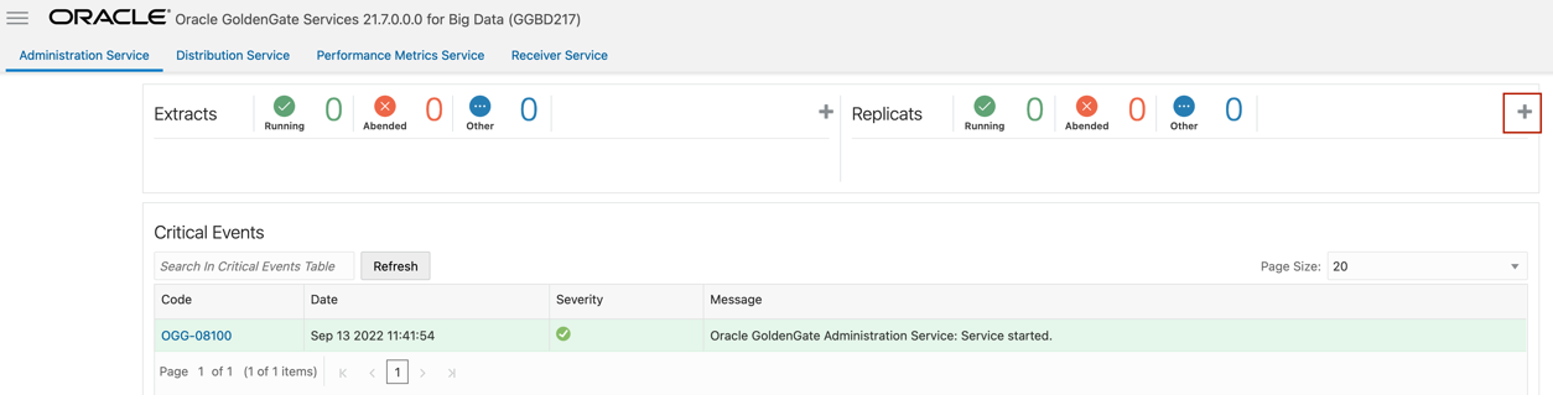

- In the Oracle GoldenGate for Big Data UI, in the Administration Service tab, click the + sign to add a Replicat.

Figure 7-28 Click + in the Administration Service tab.

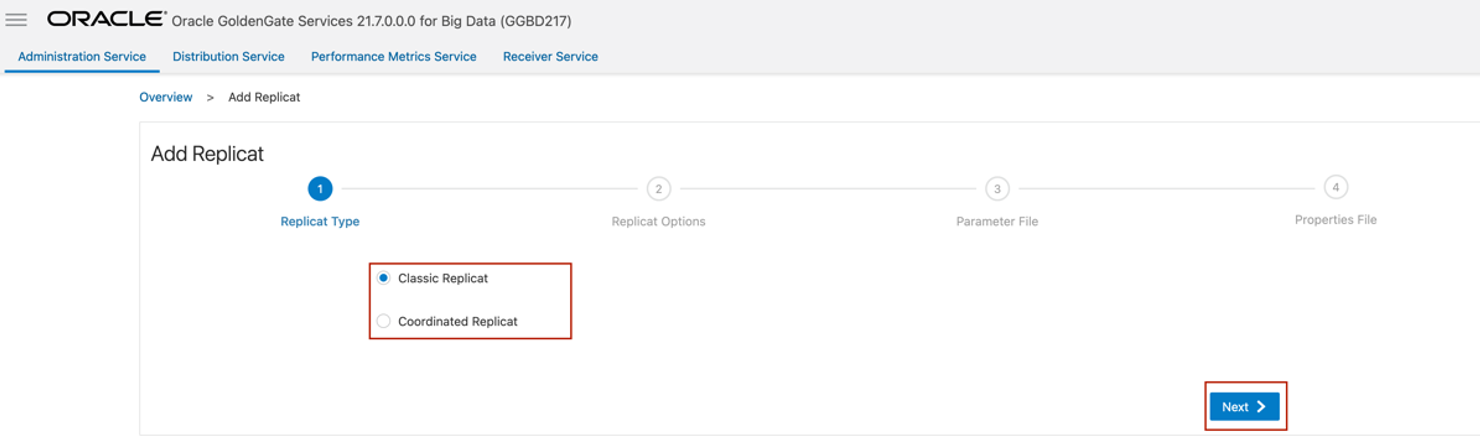

- Select the Replicat Type and click Next.

There are two Replicat types here: Classic and Coordinated. Classic Replicat is a single-threaded process, whereas Coordinated Replicat is a multithreaded one that applies transactions in parallel.

Figure 7-29 Select the Replicat Type and click Next.

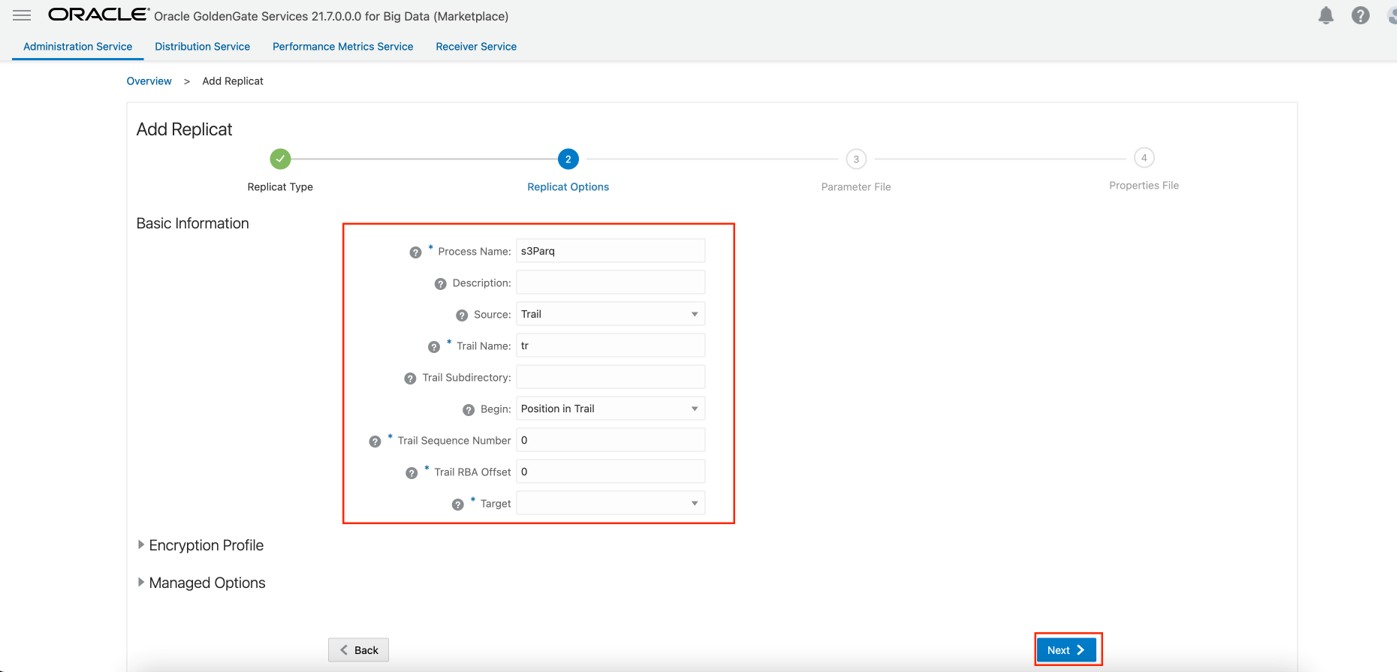

- Enter the basic information, and click Next:

- Process Name: Name of the Replicat

- Trail Name: Name of the required trail file

- Target: Do not fill this field.

Figure 7-30 Process Name, Trail Name, and Target Names

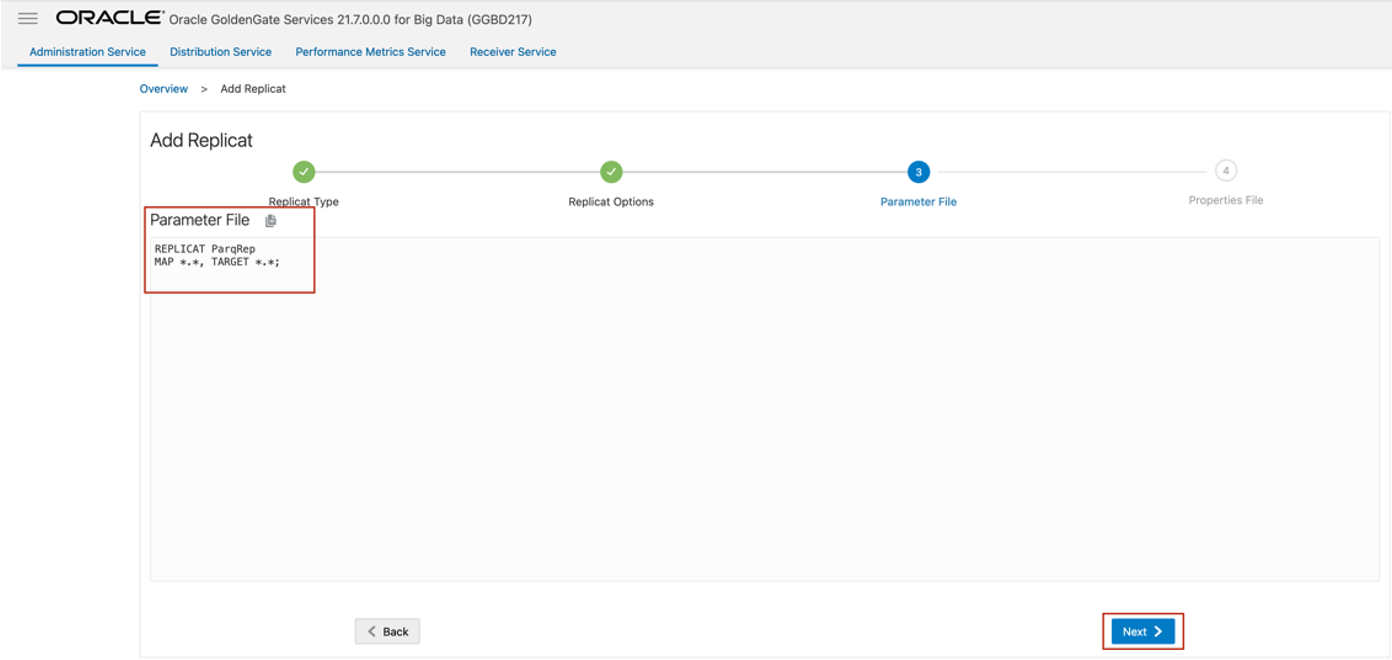

- Enter Parameter File details and click Next. In the Parameter File, you can either specify source to target mapping or leave it as is with a wildcard selection. If you select Coordinated Replicat as the Replicat Type, then provide the following additional parameter:

    TARGETDB LIBFILE libggjava.so SET property=<ggbd-deployment_home>/etc/conf/ogg/your_replicat_name.properties

Figure 7-31 Provide Parameter File details and click Next.

- Copy and paste the following property list into the properties file, update as needed, and click Create and Run.

    #The File Writer Handler – no need to change
    gg.handlerlist=filewriter
    gg.handler.filewriter.type=filewriter
    gg.handler.filewriter.mode=op
    gg.handler.filewriter.pathMappingTemplate=./dirout
    gg.handler.filewriter.stateFileDirectory=./dirsta
    gg.handler.filewriter.fileRollInterval=7m
    gg.handler.filewriter.inactivityRollInterval=30s
    gg.handler.filewriter.fileWriteActiveSuffix=.tmp
    gg.handler.filewriter.finalizeAction=delete
    ### Avro OCF – no need to change
    gg.handler.filewriter.format=avro_row_ocf
    gg.handler.filewriter.fileNameMappingTemplate=${groupName}_${fullyQualifiedTableName}_${currentTimestamp}.avro
    gg.handler.filewriter.format.pkUpdateHandling=delete-insert
    gg.handler.filewriter.format.metaColumnsTemplate=${optype},${position}
    gg.handler.filewriter.format.iso8601Format=false
    gg.handler.filewriter.partitionByTable=true
    gg.handler.filewriter.rollOnShutdown=true
    #The Parquet Event Handler – no need to change
    gg.handler.filewriter.eventHandler=parquet
    gg.eventhandler.parquet.type=parquet
    gg.eventhandler.parquet.pathMappingTemplate=./dirparquet
    gg.eventhandler.parquet.fileNameMappingTemplate=${groupName}_${fullyQualifiedTableName}_${currentTimestamp}.parquet
    gg.eventhandler.parquet.writeToHDFS=false
    gg.eventhandler.parquet.finalizeAction=delete
    #TODO Select S3 Event Handler – no need to change
    gg.eventhandler.parquet.eventHandler=s3
    #TODO Set S3 Event Handler - please update as needed
    gg.eventhandler.s3.type=s3
    gg.eventhandler.s3.region=your-aws-region
    gg.eventhandler.s3.bucketMappingTemplate=target s3 bucketname
    gg.eventhandler.s3.pathMappingTemplate=ogg/data/${fullyQualifiedTableName}
    gg.eventhandler.s3.accessKeyId=XXXXXXXXX
    gg.eventhandler.s3.secretKey=XXXXXXXX
    #TODO Set the classpath to the Parquet client libraries and the Hadoop client with the path you noted in step 1
    gg.classpath=
    jvm.bootoptions=-Xmx512m -Xms32m

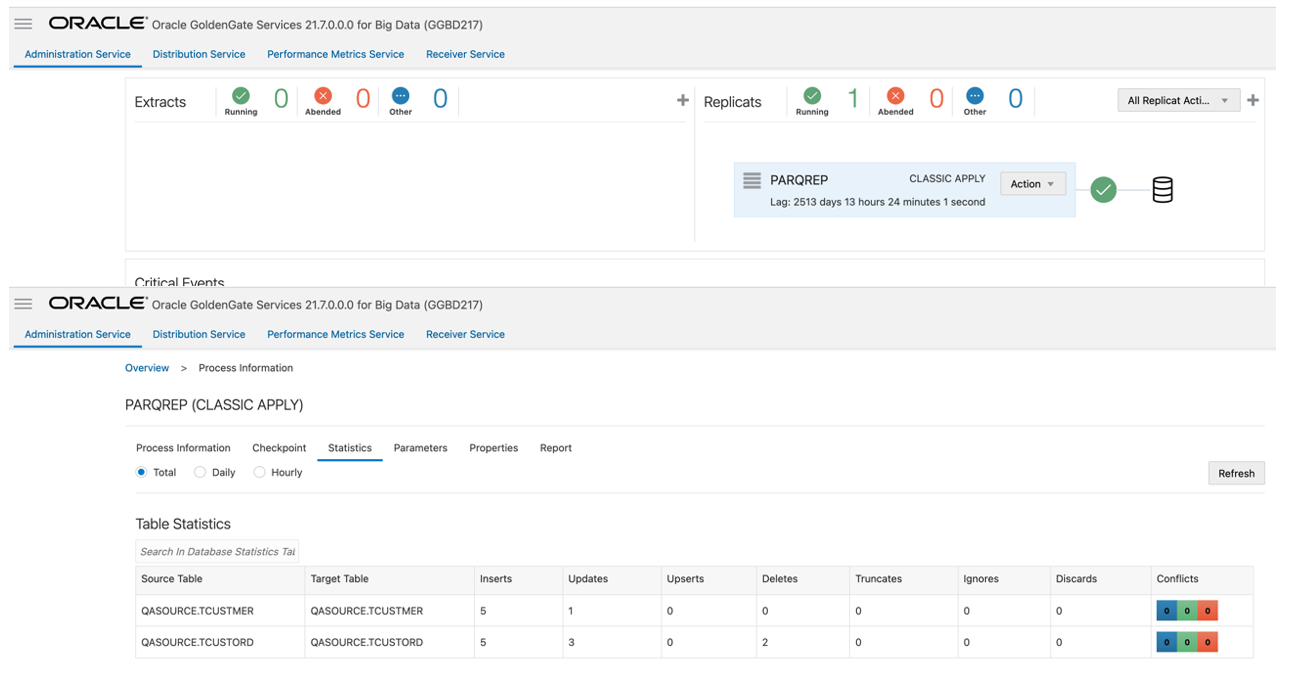

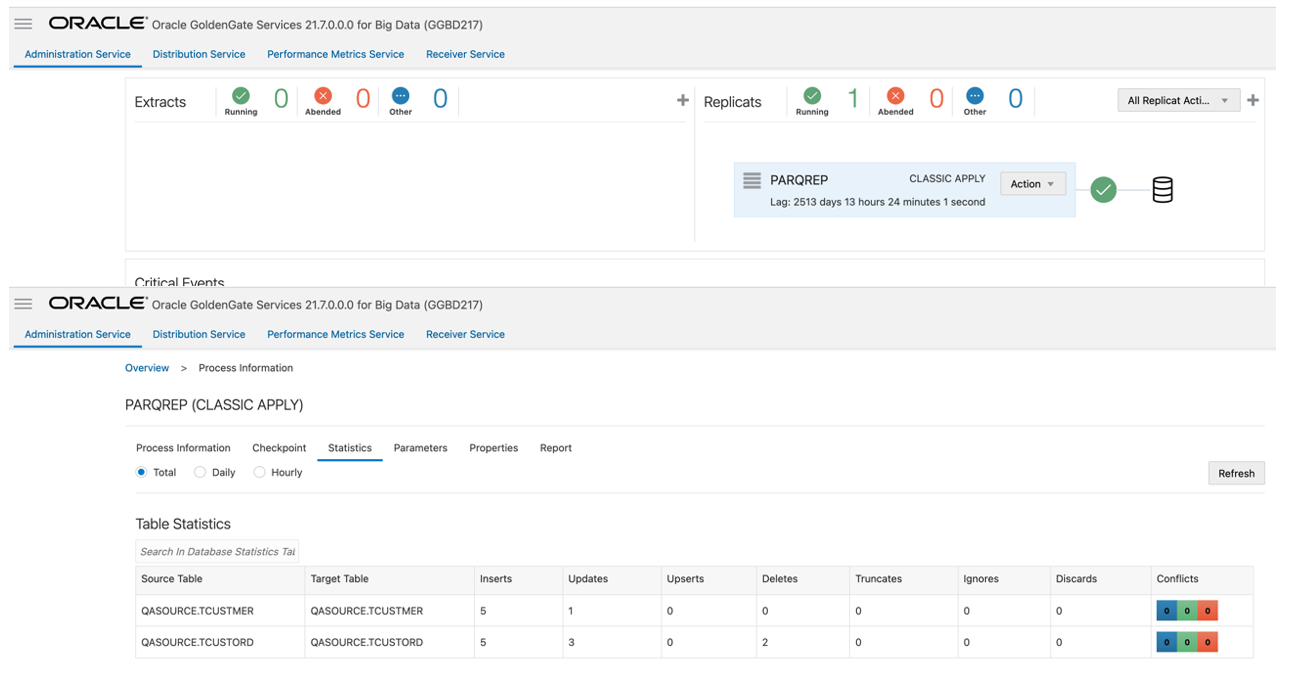

- If the Replicat starts successfully, it is in the Running state. Go to Action/Details/Statistics to see the replication statistics.

Figure 7-32 Replication Statistics

- Go to the AWS S3 console and check the bucket.

Figure 7-33 S3 Bucket
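If the Replicat does not start, one common cause is a properties file that still contains the placeholder values from the property list in this procedure. As a rough sketch only, the following Python check parses a properties text and reports the keys that still hold those placeholders (an empty gg.classpath, the region your-aws-region, a bucket name containing spaces, or keys made up only of X characters). The function names are hypothetical and are not part of Oracle GoldenGate, and the check covers only the placeholders shown in this topic.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first '=' only, so values may contain '='.
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def find_unset_placeholders(props):
    """Return the keys that still hold the template's placeholder values."""
    problems = []
    if not props.get("gg.classpath"):
        problems.append("gg.classpath")
    if props.get("gg.eventhandler.s3.region") == "your-aws-region":
        problems.append("gg.eventhandler.s3.region")
    if " " in props.get("gg.eventhandler.s3.bucketMappingTemplate", ""):
        problems.append("gg.eventhandler.s3.bucketMappingTemplate")
    for key in ("gg.eventhandler.s3.accessKeyId", "gg.eventhandler.s3.secretKey"):
        value = props.get(key, "")
        # Flag only values that are still the all-X placeholder.
        if value and set(value) == {"X"}:
            problems.append(key)
    return problems


# Excerpt of the template with its placeholders left unchanged.
sample = """
gg.eventhandler.s3.region=your-aws-region
gg.eventhandler.s3.bucketMappingTemplate=target s3 bucketname
gg.eventhandler.s3.accessKeyId=XXXXXXXXX
gg.eventhandler.s3.secretKey=XXXXXXXX
gg.classpath=
jvm.bootoptions=-Xmx512m -Xms32m
"""
problems = find_unset_placeholders(parse_properties(sample))
for key in problems:
    print("still a placeholder:", key)
```

To check a real file, read its contents into a string and pass that string to `parse_properties` in the same way.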

Note:

- If the target S3 bucket does not exist, Oracle GoldenGate for Big Data creates it automatically. Use Template Keywords to dynamically assign S3 bucket names.

- The S3 Event Handler can be configured to use a proxy server. For more information, see Amazon S3.

- You can use different properties to control the behavior of file writing. You can set file sizes, inactivity periods, and more. For more information, see the blog: Oracle GoldenGate for Big Data File Writer Handler Behavior.

- For Kafka connection issues, see Oracle Support.
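To get a feel for where the files land in the bucket, it can help to expand the mapping templates by hand. The following Python sketch only imitates the `${keyword}` substitution with the standard library `string.Template` class. It is not how Oracle GoldenGate resolves template keywords internally, and the Replicat name, table name, and timestamp value are made-up placeholders (the actual timestamp format may differ).

```python
from string import Template


def resolve_template(template, keywords):
    """Substitute ${keyword} markers with the supplied example values."""
    return Template(template).substitute(keywords)


# Hypothetical example values, not taken from a real deployment.
keywords = {
    "groupName": "RS3",
    "fullyQualifiedTableName": "QASOURCE.TCUSTMER",
    "currentTimestamp": "EXAMPLE_TIMESTAMP",
}

s3_path = resolve_template("ogg/data/${fullyQualifiedTableName}", keywords)
file_name = resolve_template(
    "${groupName}_${fullyQualifiedTableName}_${currentTimestamp}.parquet",
    keywords,
)
print(s3_path + "/" + file_name)
```

With these example values, the object would be expected under the ogg/data/QASOURCE.TCUSTMER prefix of the target bucket, which is where to look in the AWS S3 console.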