7 SAP ABAP BW


7.1 Introduction

The SAP BW Knowledge Modules let Oracle Data Integrator connect to SAP BW systems using the SAP Java Connector (SAP JCo) libraries. These adapters allow mass data extraction from SAP BW systems.

If you are using the SAP BW adapter for the first time, it is recommended that you review Getting Started with SAP ABAP BW Adapter for Oracle Data Integrator. That guide contains the complete list of prerequisites and step-by-step instructions, including SAP connection testing.

7.1.1 Concepts

The SAP BW Knowledge Modules for Oracle Data Integrator use mature integration methods for SAP BW systems in order to:

- Reverse-engineer SAP BW metadata
- Extract and load data from an SAP BW system (source) to an Oracle or non-Oracle staging area

The reverse-engineering process returns the following SAP BW objects inside an ODI model:

- Each ODS/DSO object is represented as an ODI datastore.
- Each InfoObject is represented in ODI as a submodel containing up to three datastores:
  - InfoObjects having master data have a master data datastore containing all InfoObject attributes.
  - InfoObjects having attached text data have a text datastore containing all text-related attributes.
  - InfoObjects having hierarchies defined have a hierarchy datastore containing all hierarchy-related attributes.
- Each InfoCube is represented as a single ODI datastore. This datastore includes attributes for all characteristics of all dimensions as well as for all key figures.
- Each OpenHubDestination is represented as an ODI datastore.
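The mapping between SAP BW objects and ODI datastores described above can be summarized in a few lines of code. The following Python sketch is illustrative only. The `BwObject` class, its `kind` values, and the datastore naming scheme (the `_MASTER`, `_TEXT`, and `_HIER` suffixes) are hypothetical. They are not the names that the RKM SAP BW generates.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BwObject:
    """Hypothetical description of an SAP BW object found during reverse-engineering."""
    name: str
    kind: str  # "DSO", "INFOOBJECT", "INFOCUBE" or "OPENHUB" (illustrative values)
    has_master_data: bool = False
    has_text: bool = False
    has_hierarchy: bool = False


def odi_datastores(obj: BwObject) -> List[str]:
    """Return illustrative ODI datastore names produced for one BW object."""
    if obj.kind in ("DSO", "INFOCUBE", "OPENHUB"):
        # ODS/DSO objects, InfoCubes and OpenHubDestinations map to one datastore each.
        return [obj.name]
    if obj.kind == "INFOOBJECT":
        # An InfoObject becomes a submodel with up to three datastores.
        stores = []
        if obj.has_master_data:
            stores.append(f"{obj.name}_MASTER")
        if obj.has_text:
            stores.append(f"{obj.name}_TEXT")
        if obj.has_hierarchy:
            stores.append(f"{obj.name}_HIER")
        return stores
    raise ValueError(f"unsupported BW object kind: {obj.kind}")


print(odi_datastores(BwObject("0MATERIAL", "INFOOBJECT", has_master_data=True, has_text=True)))
# ['0MATERIAL_MASTER', '0MATERIAL_TEXT']
```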

7.1.2 Knowledge Modules

Oracle Data Integrator provides the Knowledge Modules listed in Table 7-1 for handling SAP BW data.

The Oracle Data Integrator SAP BW Knowledge Modules provide integration from SAP BW systems using SAP JCo libraries. This set of KMs has the following features:

- Reads data from the SAP BW system.
- Loads this data into an Oracle or non-Oracle staging area.
- Reverse-engineers SAP metadata and offers a tree browser for selecting only the required metadata.
- Uses flexfields to map the SAP BW data target types (InfoCube, InfoObject, ODS/DSO, OpenHub, and Text Table) and their attributes.

Table 7-1 SAP BW Knowledge Modules

| Knowledge Module | Description |
|---|---|
| LKM SAP BW to Oracle (SQLLDR) | Extracts data from the SAP BW system into a flat file and then loads it into an Oracle staging area using the SQL*Loader command-line utility. |
| RKM SAP ERP Connection Test | This RKM is used for testing the SAP connection from Oracle Data Integrator. See Additional Information for SAP ABAP ERP Adapter for more information. |
| RKM SAP BW | Reverse-engineering Knowledge Module to retrieve SAP-specific metadata for InfoCubes, InfoObjects (including Texts and Hierarchies), ODS/DSO and OpenHubDestinations. |
| LKM SAP BW to SQL | Extracts data from SAP BW into a flat file and then loads it into a staging area using a JDBC connection. |

7.1.3 Overview of the SAP BW Integration Process

The RKM SAP BW enables Oracle Data Integrator (ODI) to connect to an SAP BW system using SAP JCo libraries and to perform a customized reverse-engineering of SAP BW metadata.

The LKM SAP BW to Oracle (SQLLDR) and LKM SAP BW to SQL are in charge of extracting and loading data from an SAP BW system (source) to an Oracle or non-Oracle staging area.

Note:

Access to SAP BW is made through ABAP. As a consequence, the technology used for connecting is SAP ABAP, and both the topology elements and the model are based on the SAP ABAP technology. There is no SAP BW technology in ODI. Instead, ODI provides SAP BW-specific KMs based on the SAP ABAP technology.

7.1.3.1 Reverse-Engineering Process

Reverse-engineering uses the RKM SAP BW.

This knowledge module extracts the list of SAP BW data objects and optionally displays this list in a Metadata Browser graphical interface. The user then selects from this list the SAP BW objects to reverse-engineer.

In the reverse-engineering process, data targets, primary keys, foreign keys, and indexes are reverse-engineered into an Oracle Data Integrator model.

7.1.3.2 Integration Process

Data integration from SAP is managed by the LKM SAP BW to Oracle (SQLLDR) and the LKM SAP BW to SQL.

The LKM SAP BW to Oracle (SQLLDR) is used for mappings that source data from SAP via ABAP and have a staging area located in an Oracle database. The LKM SAP BW to SQL is used for non-Oracle staging areas.

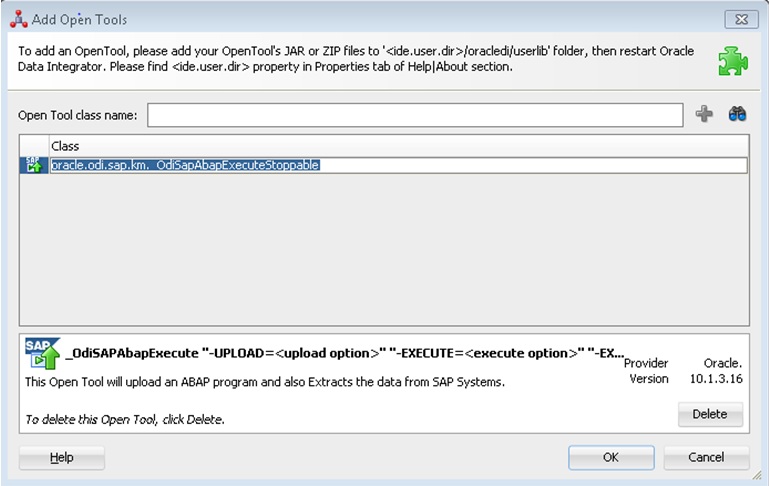

The KM first generates optimized ABAP code corresponding to the extraction process required for a given mapping. This code includes filters and joins that can be processed directly in the source SAP BW server. This ABAP program is automatically uploaded and is executed using the OdiSAPAbapExecute tool to generate an extraction file in SAP.

The KM then transfers this extraction file either to a preconfigured FTP server or to a shared directory. The file is then handled in one of two ways: it is downloaded from this server using FTP, SFTP, or SCP, or it is copied to the machine where the ODI agent is located. Finally, it is loaded into the staging area using either SQL*Loader or a JDBC connection. The agent can also directly read the extraction file on the FTP server's disk. See File Transfer Configurations for more information.

The rest of the integration process (data integrity check and integration) is managed with other Oracle Data Integrator KMs.
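The choice of LKM depends only on the technology of the staging area, and that choice also determines how the extraction file is loaded. The helper below is a hypothetical illustration of this rule. `select_lkm` and the technology strings it accepts are assumptions, not part of ODI.

```python
from typing import Tuple


def select_lkm(staging_technology: str) -> Tuple[str, str]:
    """Return (LKM name, loading mechanism) for the technology of the staging area."""
    if staging_technology.strip().lower() == "oracle":
        # Oracle staging area: the extraction file is loaded with SQL*Loader.
        return "LKM SAP BW to Oracle (SQLLDR)", "SQL*Loader"
    # Any other staging area: the file is read by the ODI File Driver and
    # inserted over a JDBC connection.
    return "LKM SAP BW to SQL", "JDBC"


print(select_lkm("Oracle"))  # ('LKM SAP BW to Oracle (SQLLDR)', 'SQL*Loader')
```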

7.2 Installation and Configuration

Make sure you have read the information in this section before you start working with the SAP BW data.

7.2.1 System Requirements and Certifications

Before performing any installation, read the system requirements and certification documentation to ensure that your environment meets the minimum installation requirements for the products you are installing.

The list of supported platforms and versions is available on the Oracle Technology Network (OTN):

http://www.oracle.com/technetwork/middleware/data-integrator/overview/index.html

7.2.2 Technology Specific Requirements

Some of the Knowledge Modules for SAP BW use specific features of the SAP BW system and the Oracle database. This section lists the requirements related to these features.

- A JCo version compatible with the adapter must be used. The list of supported JCo versions is available from the Oracle Technology Network (OTN). See System Requirements and Certifications for more information.

- A JVM version compatible with both Oracle Data Integrator and JCo must be used.

- The adapter supports two modes for transferring data from the SAP system to the ODI agent: data transfer using a shared directory and data transfer through FTP. For details and restrictions, see File Transfer Configurations.

  Depending on the chosen file transfer mode, the following requirements must be met:

  - Data transfer through a shared directory (recommended transfer method)

    The LKM SAP BW to Oracle (SQLLDR) requires a folder that is shared between the SAP system and the ODI agent. The SAP application server transfers the data by writing it into a folder that is accessible from both the SAP system and the ODI agent machine. This is typically done by sharing a folder of the ODI agent machine with the SAP system. The shared folder does not have to be located on the ODI agent machine. A shared folder on a third machine is also possible, as long as it is accessible to both the ODI agent machine and the SAP system.

    Note:

    For security reasons, folders located on the SAP server should not be shared. Instead, share a folder located on the ODI agent machine with the SAP system, or use a third machine as the shared file server.

    The shared folder must be accessible to the SAP system and not just to the underlying operating system. This means that the folder must be declared in SAP transaction AL11 and must open successfully in AL11.

  - Data transfer through FTP

    The LKM SAP BW to Oracle (SQLLDR) requires an FTP server to upload data from the SAP BW system. This data is read in one of two ways. It is read locally by the agent executing the mapping when this agent runs on the FTP server machine. It is read remotely when this agent is located on a different machine than the FTP server. This FTP server must be accessible over the network from both the SAP BW machine and the agent machine.

- For LKM SAP BW to Oracle (SQLLDR) only: SQL*Loader is required on the machine running the agent when executing mappings that use this LKM. SQL*Loader is used for loading data extracted from SAP into the Oracle staging area.
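Before running mappings with LKM SAP BW to Oracle (SQLLDR), it can help to confirm that SQL*Loader is reachable on the agent machine. The following Python sketch makes two assumptions: the SQL*Loader executable is named `sqlldr`, and it is found through the `PATH` of the operating system user that starts the agent. `find_sqlldr` is a hypothetical helper, not an ODI tool.

```python
import shutil
from typing import Optional


def find_sqlldr(search_path: Optional[str] = None) -> Optional[str]:
    """Return the full path of the SQL*Loader executable, or None if it is not found.

    search_path defaults to the PATH of the current process, so run this check as
    the operating system user that starts the ODI agent.
    """
    # On Windows, shutil.which also applies PATHEXT, so "sqlldr" matches sqlldr.exe.
    return shutil.which("sqlldr", path=search_path)


if __name__ == "__main__":
    location = find_sqlldr()
    print(location or "SQL*Loader (sqlldr) was not found on PATH")
```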

7.2.3 Connectivity Requirements

Oracle Data Integrator connects to the SAP BW system hosting the SAP BW data using JCo. It also uses an FTP server or a shared directory to host the data extracted from the SAP system.

This section describes the required connection information.

7.2.3.1 Installing and Configuring JCo

The SAP adapter uses JCo to connect to the SAP system. JCo must be configured before proceeding with the project.

To install and configure JCo:

Note:

For SAP Secure Network Connections, ensure the following:

- The environment variable SECUDIR points to the directory containing any certificate files.
- The environment variable SNC_LIB points to the directory containing sapcrypto.dll, or libsapcrypto.so on Linux.

Contact your SAP administrator for more details on certificates and crypto libraries.
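A quick way to check the SNC environment before starting the agent is sketched below. It follows the note literally: `SECUDIR` must name a directory, and `SNC_LIB` must name a directory containing `sapcrypto.dll` or `libsapcrypto.so`. The function name is hypothetical, and the check does not validate the certificates themselves. Confirm the expected layout with your SAP administrator.

```python
import os
from typing import List, Mapping, Optional

# Library file names taken from the note above.
CRYPTO_LIBS = ("sapcrypto.dll", "libsapcrypto.so")


def snc_environment_problems(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """List problems with SECUDIR and SNC_LIB; an empty list means both look usable."""
    env = os.environ if env is None else env
    problems = []

    secudir = env.get("SECUDIR")
    if not secudir:
        problems.append("SECUDIR is not set")
    elif not os.path.isdir(secudir):
        problems.append(f"SECUDIR is not a directory: {secudir}")

    snc_lib = env.get("SNC_LIB")
    if not snc_lib:
        problems.append("SNC_LIB is not set")
    elif not any(os.path.isfile(os.path.join(snc_lib, lib)) for lib in CRYPTO_LIBS):
        problems.append(f"no SAP crypto library found in: {snc_lib}")

    return problems
```

Calling `snc_environment_problems()` without arguments checks the environment of the current process, which should be the same environment in which the ODI agent is started.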

7.2.3.2 Installing ODI SAP Components into SAP System

The ODI SAP adapter communicates with the SAP system using a few ODI SAP RFCs. These RFCs are installed by your SAP Basis team using SAP transport requests. Contact your SAP administrators to install the ODI SAP Components and to assign the required SAP user authorizations, following the instructions given in Installing ODI SAP Components.

7.2.3.3 Requesting FTP Server Access

This section applies only if you plan to transfer data using FTP. You can skip this section in either of two cases: you use a shared directory for the data transfer, or your SAP system is older than the following releases:

- SAP ECC6 EHP6
- SAP BW/BI 7.4

The following steps must be completed before any subsequent steps in this guide. These steps are typically performed by your SAP Basis team. Failure to perform these setup instructions will lead to FTP failure when running any ODI SAP extraction jobs.

To request FTP server access:

- Log in to the SAP server.
- Execute transaction SE16.
- Enter SAPFTP_SERVERS in the Table Name field and click the icon to create entries.
- Enter the IP address of the FTP server in the FTP_SERVER_NAME field.
- Enter the port of the FTP server in the FTP_SERVER_PORT field.
- Click the icon to save.

7.2.3.4 Validating the SAP Environment Setup

Validating the ODI SAP Setup contains instructions for some basic validation of the SAP environment for use with the ODI SAP Adapter. Ask your SAP Basis team to run all validations and to provide the validation results, such as screenshots and confirmations.

7.2.3.5 Gathering SAP Connection Information

In order to connect to the SAP BW system, you must request the following information from your SAP administrators:

- For SAP group logons:
  - SAP System ID: The three-character, unique identifier of an SAP system in a landscape.
  - Message Server: IP address or host name of the SAP Message Server.
  - Message Server Port: Port number or service name of the SAP Message Server.
  - Group/Server: Name of the SAP Logon Group.
- For SAP server logons:
  - SAP BW System IP Address or Hostname: IP address or host name of the host on which SAP is running.
  - SAP System Number: The two-digit number assigned to an SAP instance, which is also called Web Application Server or WAS.
  - SAP System ID: The three-character, unique identifier of an SAP system in a landscape.
- SAP User: The unique user name given to a user for logging on to the SAP system.
- SAP Password: Case-sensitive password used by the user to log in.
- SAP Language: Code of the language used when logging in, for example EN for English or DE for German.
- SAP Client Number: The three-digit number assigned to the self-contained unit called Client in SAP. A Client can be a training, development, testing, or production client, or it can represent different divisions in a large company.
- SAP SNC Connection Properties (optional) and SAP Router String (optional): SAP enhances security through SNC and SAP router. These values are used only when these security features are implemented.
- SAP Transport Layer Name: This string uniquely identifies a transport layer in an SAP landscape. It allows ODI to create transport requests for later deployment in SAP. Even though there is a default value here, this transport layer name must be provided by your SAP Basis team. Not doing so may result in significant delays during installation.
- SAP Temporary Directory (SAP_TMP_DIR): This parameter defines the custom work directory on the SAP system. It applies only to the FTP transfer mode. This path is used for temporary files during extraction. By default (flexfield value left empty), the DIR_HOME path defined in the SAP profile is used. Specify a directory on the SAP application server to be used for temporary files. The path must end with a slash or backslash, depending on the OS the SAP application server runs on. The ODI SAP user must have read and write privileges on this directory.

  Caution:

  Avoid the default value (empty flexfield value) for any critical SAP system. An excessively large temporary file created during extraction could fill up the respective file system and cause serious issues on the SAP system. Even for SAP development systems, it is strongly recommended to override the default value.

- SAP BW Version: 3.5 or 7.0
- SAP Character Set: The character set is required only if your SAP system is not a UNICODE system. For a complete list of character sets, see Locale Data in the Oracle Database Globalization Support Guide. For example, use EE8ISO8859P2 for Croatian data. For UNICODE systems, use UTF8.

Note:

All the connection data listed above (except SAP SNC Connection Properties and SAP Router String) are mandatory and should be requested from the SAP administrators. Consider asking your SAP administrators for support during connection setup.
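The format rules above (three-digit client, two-digit system number, three-character system ID) can be checked before the values are entered in Topology. The following Python sketch covers only an SAP server logon. The `SapServerLogon` class and its field names are hypothetical and do not correspond to ODI data server fields. The optional SNC and router settings are omitted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SapServerLogon:
    """Hypothetical container for SAP server-logon values gathered from SAP administrators."""
    host: str            # SAP BW system IP address or host name
    client: str          # three-digit client number, for example "800"
    system_number: str   # two-digit system (instance) number, for example "00"
    system_id: str       # three-character system ID, for example "BWP"
    user: str
    password: str
    language: str        # logon language code, for example "EN"

    def problems(self) -> List[str]:
        """Return format problems; an empty list means the values look well-formed."""
        found = []
        if not (len(self.client) == 3 and self.client.isdigit()):
            found.append("client number must have exactly three digits")
        if not (len(self.system_number) == 2 and self.system_number.isdigit()):
            found.append("system number must have exactly two digits")
        if len(self.system_id) != 3:
            found.append("system ID must have exactly three characters")
        for name in ("host", "user", "password", "language"):
            if not getattr(self, name):
                found.append(f"{name} is mandatory")
        return found
```

For example, `SapServerLogon("bwhost", "8", "0", "BW", "ODI_USER", "secret", "EN").problems()` reports three format problems: one for the client number, one for the system number, and one for the system ID.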

7.2.3.6 Gathering FTP Connection Information

The SAP BW system will push data to a server using the FTP protocol. Collect the following information from your system administrator:

- FTP server name or IP address
- FTP login ID
- FTP login password
- Directory path for storing temporary data files

Validate that the FTP server is accessible from both the SAP system and the ODI agent machine.
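The following Python sketch tests, from the ODI agent machine, whether the FTP server accepts TCP connections. It assumes the standard FTP control port 21 and does not log in. It also cannot verify access from the SAP side, which must be checked on the SAP system (for example, by your SAP Basis team). `tcp_port_reachable` is a hypothetical helper.

```python
import socket


def tcp_port_reachable(host: str, port: int = 21, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, DNS failures and timeouts.
        return False
```

For example, `tcp_port_reachable("ftpserver.example.com")` returns `False` when a firewall blocks port 21 from the agent machine. The host name in this example is a placeholder.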

7.2.3.7 Gathering Shared Directory Information

This section applies only if you plan to transfer data through a shared directory. The SAP system will push data to a shared folder. For later setup, gather the following information from your system administrator:

- (UNC) path name of the shared folder

Validate that the shared folder is accessible from both the SAP system and the ODI agent machine and does not require any interactive authentication to be accessed.

Note that the shared folder must be accessible from the SAP system using the <sid>adm user, and from the operating system user that starts the ODI agent.
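The agent side of this validation can be scripted. The Python sketch below creates, reads, and deletes a probe file in the shared folder. Run it as the operating system user that starts the ODI agent. The probe-file naming is an assumption, and the SAP side (the <sid>adm user and transaction AL11) still has to be checked within SAP.

```python
import os
import uuid


def shared_folder_writable(path: str) -> bool:
    """Check that the current OS user can create, read and delete a file in path."""
    if not os.path.isdir(path):
        return False
    # Unique, hypothetical probe-file name so that concurrent checks do not collide.
    probe = os.path.join(path, f"odi_probe_{uuid.uuid4().hex}.tmp")
    try:
        with open(probe, "w", encoding="ascii") as f:
            f.write("probe")
        with open(probe, encoding="ascii") as f:
            content = f.read()
        os.remove(probe)
        return content == "probe"
    except OSError:
        return False
```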

7.3 Defining the Topology

You must define the two data servers used for SAP integration: the SAP ABAP data server and the File data server for the FTP server or shared folder.

7.3.1 Create the File Data Server

This data server corresponds to the FTP server or file server into which the extraction file will be pushed from SAP and from which it will be picked up by SQL*Loader or the JDBC driver.

7.3.1.1 Create a File Data Server

Create a File data server as described in Creating a File Data Server in Connectivity and Knowledge Modules Guide for Oracle Data Integrator. This section describes the parameters specific to SAP BW.

Depending on the chosen data transfer mode, this data server must point either to:

- An existing FTP server into which the extraction file will be pushed from SAP and picked up for loading, or
- The shared folder into which the SAP system will write the extraction file and from which SQL*Loader or the ODI Flat File Driver will pick it up.

Note that the parameters for the data server depend on the data transfer mode.

- When transferring data through FTP, set the parameters as follows:
  - Host (Data Server): FTP server host name or IP address
  - User: User name to log into the FTP server
  - Password: Password for the user
- When transferring data through a shared directory, set the parameters as follows:
  - Host (Data Server): n/a
  - User: n/a
  - Password: n/a
- For use with LKM SAP BW to SQL, these additional parameters must be configured:
  - JDBC driver class: com.sunopsis.jdbc.driver.file.FileDriver
  - JDBC URL: jdbc:snps:dbfile?ENCODING=UTF8

    The above URL is for SAP UNICODE systems. For non-UNICODE systems, see the details on the ENCODING parameter in Creating a File Data Server in Connectivity and Knowledge Modules Guide for Oracle Data Integrator. The encoding chosen on this URL must match the code page used by the SAP application server.

See File Transfer Configurations for more information.
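The JDBC URL differs only in its ENCODING value. The helper below is a hypothetical sketch that builds the URL shown above. It uses UTF8 for UNICODE systems and requires an explicit encoding otherwise. The encoding name used in the test values (`Cp1250`, a Java charset name) is only an illustration. Choose the value as described in Creating a File Data Server so that it matches the code page of the SAP application server.

```python
def file_driver_jdbc_url(sap_is_unicode: bool, encoding: str = "") -> str:
    """Build the ODI File Driver JDBC URL used with LKM SAP BW to SQL."""
    if sap_is_unicode:
        # UNICODE SAP systems use the UTF8 encoding.
        encoding = "UTF8"
    elif not encoding:
        raise ValueError("non-UNICODE SAP systems need an explicit ENCODING value")
    return f"jdbc:snps:dbfile?ENCODING={encoding}"


print(file_driver_jdbc_url(True))  # jdbc:snps:dbfile?ENCODING=UTF8
```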

7.3.1.2 Create the File Schema

In this File data server, create a Physical Schema as described in Creating a File Physical Schema in Connectivity and Knowledge Modules Guide for Oracle Data Integrator.

This schema represents the folder into which the extraction file will be pushed. The folder is located either on the FTP host or on the file server. Depending on the data transfer mode, specify the Data and Work Schemas as follows:

- For the FTP file transfer:

  - Directory (Schema): Path on the FTP server to upload or download extraction files from the remote location. This path is used when uploading extraction files from the SAP BW system into the FTP server. It is also used by a remote agent to download the extraction files. Note that this path must use slashes and must end with a slash character.

  - Directory (Work Schema): Local path on the FTP server's machine. This path is used by an agent installed on this machine to access the extraction files without passing through FTP. This access method is used if the FTP_TRANSFER_METHOD parameter of the LKM SAP BW to Oracle (SQLLDR) or LKM SAP BW to SQL is set to NONE. The Work Schema is a local directory location. As a consequence, slashes or backslashes should be used according to the operating system. This path must end with a slash or backslash.

    Path names given in the Data and Work Schemas are not necessarily the same. For example, the FTP server may provide access to an FTP directory named /sapfiles/ (the value for Directory (Schema)) while the files are accessed locally in c:\inetpub\ftproot\sapfiles\ (the value for Directory (Work Schema)).

- For the Shared Directory transfer:

  - Directory (Schema): Path (UNC) of the shared folder to write and read extraction files. The SAP system writes the extraction files into this folder. It is also used by a remote agent to copy the extraction files to the ODI agent machine. Note that this path must use slashes or backslashes according to the operating system of the SAP application server and must end with a slash or backslash character.

  - Directory (Work Schema): Local path on the machine hosting the shared folder. This path is used by an agent installed on this machine to access the extraction files without passing through the shared folder. This access method is used if the FTP_TRANSFER_METHOD parameter of the LKM SAP BW to Oracle (SQLLDR) or LKM SAP BW to SQL is set to FSMOUNT_DIRECT. The Work Schema is a local directory location. As a consequence, slashes or backslashes should be used according to the operating system. This path must end with a slash or backslash.

See File Transfer Configurations for more information.

Create a File Logical Schema called File Server for SAP ABAP, and map it to the Physical Schema. The name of this Logical Schema is predefined and must be File Server for SAP ABAP.
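The separator rules for the Directory (Schema) and Directory (Work Schema) values are easy to get wrong. The following Python sketch checks a value against the expected separator. `check_directory_value` is hypothetical. Rejecting mixed separators is a stricter assumption than the rules above state.

```python
from typing import List


def check_directory_value(path: str, separator: str) -> List[str]:
    """Return problems with a Directory (Schema) or Directory (Work Schema) value.

    separator is "/" where slashes are required, or "\\" for a Windows-style path.
    """
    if separator not in ("/", "\\"):
        raise ValueError("separator must be a slash or a backslash")
    other = "\\" if separator == "/" else "/"
    problems = []
    if other in path:
        # Assumption: a value should use one separator style consistently.
        problems.append(f"path contains {other!r}; expected only {separator!r}")
    if not path.endswith(separator):
        problems.append(f"path must end with {separator!r}")
    return problems


print(check_directory_value("/sapfiles", "/"))  # ["path must end with '/'"]
```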

7.3.2 Create the SAP ABAP Data Server

This SAP ABAP data server corresponds to the SAP server from which data will be extracted.

7.3.2.1 Create the SAP ABAP Data Server

To configure a SAP ABAP data server:

Except for Data Server Name, all the parameters that you provide while defining the SAP Data Server should be provided by the SAP Administrators. See Gathering SAP Connection Information for more information about these parameters.

7.3.2.2 Create the SAP ABAP Schema

To configure a SAP ABAP schema:

- Create a Physical Schema under the SAP ABAP data server as described in Creating a Physical Schema in Administering Oracle Data Integrator. This schema does not require any specific configuration. Only one physical schema is required under a SAP ABAP data server.

- Create a Logical Schema for this Physical Schema as described in Creating a Logical Schema in Administering Oracle Data Integrator in the appropriate context.

7.4 Setting up the Project

Setting up a project using SAP BW features follows the standard procedure. See Creating an Integration Project in Developing Integration Projects with Oracle Data Integrator.

Import the following KMs into your Oracle Data Integrator project:

- RKM SAP BW
- LKM SAP BW to Oracle (SQLLDR)
- LKM SAP BW to SQL

In addition to these specific SAP BW KMs, import the standard Oracle LKMs, IKMs, and CKMs to perform data extraction and data quality checks with an Oracle database. See Oracle Database in the Connectivity and Knowledge Modules Guide for Oracle Data Integrator for a list of available KMs.

7.5 Creating and Reverse-Engineering a Model


7.5.1 Creating a SAP BW Model

Create an SAP BW Model based on the SAP ABAP technology and on the SAP ABAP logical schema using the standard procedure, as described in Creating a Model in Developing Integration Projects with Oracle Data Integrator.

7.5.2 Reverse-Engineering a SAP BW Model

To perform a customized reverse-engineering with the RKM SAP BW, use the usual procedure, as described in Reverse-engineering a Model in Developing Integration Projects with Oracle Data Integrator. This section details only the fields specific to SAP BW:

- In the Reverse Engineer tab of the SAP BW Model, select the RKM SAP BW.
- For the RKM SAP BW, set the USE_GUI KM option to true.
- Save the model.
- Click Reverse-Engineer in the Model Editor toolbar.
- The Tree Metadata Browser appears after the session is started. Select the data store object(s) to reverse-engineer.
- Click Reverse-Engineer in the Tree Metadata Browser window.

The reverse-engineering process returns the selected data store objects as datastores.

Note:

If the reverse-engineering is executed on a run-time agent, set the USE_GUI option to false. Set it to true only when the customized reverse-engineering is started using the agent built into the Studio.

7.5.3 Reverse-Engineering a PSA Table in SAP BW Model

PSA (Persistent Staging Area) tables store the data of a DataSource in the BW system. When a load (extraction) of a DataSource runs in the BW system, the BW system retrieves all active metadata from the partner ERP system and replicates it in the BW system. For example, if a DataSource has 10 fields in the ERP system and 2 more fields are added, these changes are replicated into the BW system.

The extracted data is stored in the BW system, where the PSA table holds the data extracted from the ERP system. PSA tables are stored as temporary transparent tables in the BW system. They are created when a mapping of a DataSource between BW and ERP is created. The PSA is configurable.

PSA table names start with /BI0/ (standard) or /BIC/ (custom).

Prerequisites:

- Download the SAP BW ADDON transport request from the Bristlecone site, as described in section "C.2.1.1 Downloading the Transport Request files".

  Note: Use the following link instead of the one mentioned in that section:

  http://www.bristleconelabs.com/edel/showdownload.html?product=odi_sap_bw_addon

- Import the downloaded transport request into the source SAP BW system, as described in section "C.2.1.2 Installing the Transport Request Files".

Starting the PSA Reverse-Engineering Process

To start the reverse-engineering process of the SAP BW PSA datastores:

- In the Models tree view, open the SAP BW SourceModel.
- In the Reverse Engineer tab:
  - Select the Global context.
  - Select the Customized option.
  - Select the RKM SAP ERP you have imported in the SAP BW Demo project.
  - Enter the following parameters in the SAP ERP KM options:

    USE_GUI: No

    SAP_TABLES_NAME: /BIC/B0000133000

  - Set other parameters according to your SAP configuration.
- From the File menu, click Save to save your changes.
- Click Reverse Engineer to start the reverse-engineering process.
- Click OK. The Sessions Started dialog is displayed.
- Click OK.
- Validate in Operator that the session is now in status running.

  If the session has failed, validate the settings. Do not move on until all installation steps have been completed successfully.

- Check the model for the reverse-engineered PSA table.

Note:

Currently, reverse-engineering of PSA tables is supported only in non-GUI mode.

7.6 Designing a Mapping

To create a mapping loading SAP BW data into an Oracle staging area:

- Create a mapping with source datastores from the SAP BW Model. This mapping should have an Oracle target or use an Oracle schema as the Staging Area.

- Create joins, filters, and map attributes for your mapping.

- In the Physical diagram of the mapping, select the access point for the SAP BW source data object(s). The Property Inspector opens for this object.

- In the Loading Knowledge Module tab, select the LKM SAP BW to Oracle (SQLLDR).

7.7 Considerations for SAP BW Integration

7.7.1 File Transfer Configurations

The ODI SAP adapter extracts data using ABAP programs. To transfer the data from the SAP system to the ODI agent, the adapter supports two transfer modes, each with different configurations.

7.7.1.1 Transfer using a Shared Directory (recommended)

During the extraction process, the ABAP programs write chunks of data into the data file in the shared folder. For better performance, this shared folder should be located on the ODI agent machine. In this setup, the data file is read locally. For LKM SAP BW to Oracle (SQLLDR), SQL*Loader reads the file and loads the data into the Oracle staging area. For LKM SAP BW to SQL, the ODI File Driver reads the file and inserts the data using JDBC into a non-Oracle staging area.

If the folder is not located on the ODI agent machine, the ODI agent must first copy the file from the shared folder to the agent machine before loading the data with SQL*Loader or over a JDBC connection.
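The two shared-directory configurations described next differ mainly in where SQL*Loader or the ODI File Driver finds the data file. The sketch below summarizes this. `loader_source_dir` is a hypothetical helper. Treating the Work Schema as the direct read location for FSMOUNT_DIRECT is an interpretation of the Work Schema description in Create the File Schema.

```python
def loader_source_dir(ftp_transfer_method: str, work_schema: str, temp_dir: str) -> str:
    """Return the directory from which the data file is loaded for a shared-directory mode."""
    method = ftp_transfer_method.strip().upper()
    if method == "FSMOUNT_DIRECT":
        # Configuration 1: the shared folder is local to the agent, so the
        # data file is read in place through the local (Work Schema) path.
        return work_schema
    if method == "FSMOUNT":
        # Configuration 2: the agent first copies the data file into TEMP_DIR.
        return temp_dir
    raise ValueError(f"{ftp_transfer_method!r} is not a shared-directory transfer method")
```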

Configuration 1: Shared Folder is physically located on the ODI Agent machine (recommended)

This configuration is used when FTP_TRANSFER_METHOD = FSMOUNT_DIRECT. In this configuration the following data movements are performed:

- The ABAP program extracts chunks of FETCH_BATCH_SIZE records and writes them into a file in the shared folder.
- For LKM SAP BW to Oracle (SQLLDR): SQL*Loader reads the data file locally from the shared folder and loads the data into the Oracle staging area. For LKM SAP BW to SQL: The ODI File Driver reads the data file locally from the shared folder and inserts the data using JDBC into a non-Oracle staging area.
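The real extraction is performed by generated ABAP code, which is not shown in this guide. As a language-neutral analogy only, the following Python sketch writes records into a data file in chunks of FETCH_BATCH_SIZE records. The function names and the one-record-per-line file layout are assumptions.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batches(records: Iterable[str], fetch_batch_size: int) -> Iterator[List[str]]:
    """Yield successive lists of at most fetch_batch_size records."""
    if fetch_batch_size < 1:
        raise ValueError("fetch_batch_size must be a positive integer")
    it = iter(records)
    while True:
        chunk = list(islice(it, fetch_batch_size))
        if not chunk:
            return
        yield chunk


def write_extraction_file(records: Iterable[str], path: str, fetch_batch_size: int) -> int:
    """Write records chunk by chunk into the data file; return the number of chunks written."""
    chunk_count = 0
    with open(path, "w", encoding="utf-8") as data_file:
        for chunk in batches(records, fetch_batch_size):
            # Each chunk is appended to the same open file, one record per line.
            data_file.writelines(record + "\n" for record in chunk)
            chunk_count += 1
    return chunk_count
```

With 10 records and a batch size of 4, the file is written in three chunks of 4, 4, and 2 records.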

This configuration requires the following Topology settings:

- Create a File data server pointing to the file server/ODI agent machine:
  - Host (Data Server): n/a
  - User: n/a
  - Password: n/a
- In this File data server, create a physical schema representing the shared folder. Specify the Data and Work Schemas as follows:
  - Directory (Schema): Path (UNC) of the shared folder used by the ABAP program to write extraction files.
  - Directory (Work Schema): Path for the agent to copy the file. If the file can be copied from the same shared path, provide the same path as given in the Schema.

Note:

Temporary files, such as the .ctl, .bad, and .dsc files, are created in a local temporary folder on the run-time agent. The default temporary directory is the system's temporary directory. On UNIX this is typically /tmp and on Windows c:\Documents and Settings\<user>\Local Settings\Temp. This directory can be changed using the KM option TEMP_DIR.

Configuration 2: Shared folder is not physically located on the ODI Agent machine

This configuration is used when FTP_TRANSFER_METHOD = FSMOUNT. In this configuration the following data movements are performed:

- The ABAP program extracts chunks of FETCH_BATCH_SIZE records and writes them into a file in the shared folder.
- The run-time agent copies the file into the directory given by the TEMP_DIR option of the LKM.
- For LKM SAP BW to Oracle (SQLLDR): SQL*Loader reads the data file from this TEMP_DIR and loads the data into the Oracle staging area. For LKM SAP BW to SQL: The ODI File Driver reads the data file from this TEMP_DIR and inserts the data using JDBC into a non-Oracle staging area.

This configuration requires the following Topology settings:

- Create a File data server pointing to the file server into which the extraction file will be pushed from SAP and from which it will be picked up by SQL*Loader. Set the parameters for this data server as follows:
  - Host (Data Server): n/a
  - User: n/a
  - Password: n/a
- In this File data server, create a physical schema representing the shared folder. Specify the Data and Work Schemas as follows:
  - Directory (Schema): Path (UNC) of the shared folder used by the ABAP program to write extraction files, and by the agent to copy the file.
  - Directory (Work Schema): Path (UNC) of the shared folder, or the local path name of the mount point of the shared folder on the agent's host.

Note that data files are copied from the shared folder into a local temporary folder on the run-time agent. The default temporary directory is the system's temporary directory. On UNIX this is typically /tmp and on Windows c:\Documents and Settings\<user>\Local Settings\Temp. This directory can be changed using the KM option TEMP_DIR.
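The TEMP_DIR fallback and the copy step of Configuration 2 can be sketched as follows. `resolve_temp_dir` and `copy_to_temp_dir` are hypothetical helpers, and the real copy is performed by the ODI agent itself. `tempfile.gettempdir()` returns the system's temporary directory, which matches the default described above.

```python
import shutil
import tempfile
from typing import Optional


def resolve_temp_dir(temp_dir_option: Optional[str]) -> str:
    """Return the TEMP_DIR KM option if set, otherwise the system's temporary directory."""
    return temp_dir_option or tempfile.gettempdir()


def copy_to_temp_dir(shared_file: str, temp_dir_option: Optional[str] = None) -> str:
    """Copy an extraction file from the shared folder into TEMP_DIR; return the copy's path."""
    target_dir = resolve_temp_dir(temp_dir_option)
    # copy2 keeps file metadata and, when given a directory, returns the new file's path.
    return shutil.copy2(shared_file, target_dir)
```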

7.7.1.2 FTP based Transfer

At the end of the extraction process, the ABAP programs upload the data file to an FTP server. For better performance, this FTP server should be located on the same machine as the run-time agent.

If the agent is not located on the same machine as the FTP server, it downloads the file from the FTP server before loading it into the staging area using SQL*Loader or a JDBC connection. This download operation is performed using FTP, SFTP, or SCP.

Figure 7-3 Configuration 1: FTP Server is installed on an ODI Agent machine


The configuration shown in Figure 7-3 is used, when FTP_TRANSFER_METHOD = NONE. In this configuration the following data movements are performed:

-

The ABAP program extracts the data and uploads the data file to the FTP server.

-

For LKM SAP BW to Oracle (SQLLDR): SQL*Loader reads locally the data file and loads the data into the Oracle staging area. For LKM SAP BW to SQL: The ODI File Driver reads locally the data file and inserts the data using JDBC into a non-Oracle staging area.

This configuration requires the following Topology settings:

-

Create a File data server pointing to the FTP server:

-

Host (Data Server): FTP server host name or IP address.

-

User: User name to log in to the FTP server.

-

Password: Password for the user.

-

In this File data server, create a physical schema representing the folder on the FTP host where the extraction file will be pushed. Specify the Data and Work Schemas as follows:

-

Directory (Schema): Path on the FTP server for uploading SAP extraction files.

-

Directory (Work Schema): Local path on the FTP server's machine containing the SAP extraction file. The agent and SQL*Loader/ODI Flat File Driver read the extraction files from this location.


Figure 7-4 Configuration 2: FTP Server is not installed on ODI Agent machine


The configuration shown in Figure 7-4 is used when FTP_TRANSFER_METHOD is FTP, SFTP, or SCP. In this configuration, the following data movements are performed:

-

The ABAP program extracts the data and uploads the data file to the FTP server.

-

The ODI agent downloads the file from the FTP server into the directory given by KM Option TEMP_DIR.

-

For LKM SAP BW to Oracle (SQLLDR): SQL*Loader reads the data file from this TEMP_DIR and loads the data into the Oracle staging area. For LKM SAP BW to SQL: The ODI File Driver reads the data file from this TEMP_DIR and inserts the data using JDBC into a non-Oracle staging area.

This configuration requires the following Topology settings:

-

Create a File data server pointing to the FTP server:

-

Host (Data Server): FTP server host name or IP address.

-

User: User name to log in to the FTP server.

-

Password: Password for the user.

-

In this File data server, create a physical schema representing the folder on the FTP host where the extraction file will be pushed. Specify the Data and Work Schemas as follows:

-

Directory (Schema): Path on the FTP server for uploading SAP extraction files.

-

Directory (Work Schema): <undefined>; this path is left blank, as data files are never accessed directly from the FTP server's file system.

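The two FTP configurations differ mainly in where SQL*Loader or the ODI File Driver finds the extraction file. The following Python sketch summarizes that rule. The class and function names are hypothetical, and this is not how ODI itself resolves paths. The sketch assumes that the Work Schema is mandatory for FTP_TRANSFER_METHOD = NONE, because the file is read locally from it. For FTP, SFTP, and SCP it assumes the Work Schema is ignored, because the agent reads from TEMP_DIR after downloading.

```python
from dataclasses import dataclass
from typing import Optional

TRANSFER_METHODS = {"NONE", "FTP", "SFTP", "SCP"}


@dataclass
class FtpFileSchema:
    """Hypothetical model of the File physical schema used for FTP transfer."""
    data_schema: str                   # Directory (Schema): upload path on the FTP server
    work_schema: Optional[str] = None  # Directory (Work Schema): local path, or blank


def loader_input_dir(method: str, schema: FtpFileSchema, temp_dir: str) -> str:
    """Return the directory from which SQL*Loader / the ODI File Driver reads."""
    method = method.upper()
    if method not in TRANSFER_METHODS:
        raise ValueError(f"unsupported FTP_TRANSFER_METHOD: {method}")
    if method == "NONE":
        # Configuration 1: the FTP server runs on the agent's machine, so the
        # extraction file is read locally from the Work Schema directory.
        if not schema.work_schema:
            raise ValueError("FTP_TRANSFER_METHOD = NONE requires a Work Schema")
        return schema.work_schema
    # Configuration 2: the agent downloads the file into TEMP_DIR first;
    # the Work Schema is left blank and not used.
    return temp_dir


local = FtpFileSchema(data_schema="/sap_out", work_schema="/srv/ftp/sap_out")
remote = FtpFileSchema(data_schema="/sap_out")
print(loader_input_dir("NONE", local, "/tmp"))   # /srv/ftp/sap_out
print(loader_input_dir("SFTP", remote, "/tmp"))  # /tmp
```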

Considerations and Limitations:

FTP-based data transfer relies on the common (S)FTP file transfer and requires all data to be held in the SAP application server's memory before the transfer. The required memory per SAP session therefore grows with the amount of data extracted, which sets an upper limit on the data volume. This limit can be raised to a certain extent by increasing the session memory settings in SAP.

The shared folder configuration requires a slightly more complex setup. However, it removes the need for all data to fit into the SAP application server's memory and is therefore the recommended extraction method.
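To judge whether an FTP-based extraction is likely to hit this limit, you can compute a rough lower bound of the data held in memory: the row count multiplied by the average row width. The Python sketch below illustrates that arithmetic under stated assumptions. You must estimate the average row width yourself, SAP's internal overhead is ignored, and the session limit is passed in rather than read from any SAP setting. The function names are hypothetical.

```python
def estimated_payload_bytes(row_count: int, avg_row_bytes: int) -> int:
    """Lower-bound estimate of the data held in SAP memory before upload."""
    if row_count < 0 or avg_row_bytes < 0:
        raise ValueError("row_count and avg_row_bytes must be non-negative")
    return row_count * avg_row_bytes


def fits_in_session(row_count: int, avg_row_bytes: int, session_limit_bytes: int) -> bool:
    """True if the estimated payload stays below the given session limit."""
    return estimated_payload_bytes(row_count, avg_row_bytes) < session_limit_bytes


GIB = 1024 ** 3
# 10 million rows of ~200 bytes each is 2.0e9 bytes (about 1.86 GiB).
print(estimated_payload_bytes(10_000_000, 200))   # 2000000000
print(fits_in_session(10_000_000, 200, 4 * GIB))  # True
print(fits_in_session(10_000_000, 200, 1 * GIB))  # False
```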

7.7.2 Execution Modes

Background Processing

By default, the generated ABAP code will be deployed as an ABAP report. On execution, this report is submitted to the SAP scheduler for background processing. The job status is then monitored by ODI.

The KM option JOB_CLASS defines the priority of the background job. Valid values (corresponding to SAP JOB_CLASS settings) are:

-

JOB_CLASS = A: highest priority

-

JOB_CLASS = B: normal priority

-

JOB_CLASS = C: lowest priority

Dialog Mode Processing

For backward compatibility, the KM option BACKGROUND_PROCESSING can be set to false. The generated ABAP code will then be deployed as an RFC. On execution, this RFC is called by ODI to extract the data.

Dialog mode processing has been deprecated and is currently supported for backward compatibility only. In future releases, dialog processing may be removed entirely.
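The relationship between BACKGROUND_PROCESSING and JOB_CLASS can be summarized as follows. This hypothetical Python helper only mirrors the description above. It does not call SAP, and the returned strings are illustrative. Because JOB_CLASS sets the priority of a background job, the sketch ignores it in dialog mode.

```python
from enum import Enum


class JobClass(Enum):
    """Values accepted by the KM option JOB_CLASS (SAP job classes)."""
    A = "highest priority"
    B = "normal priority"
    C = "lowest priority"


def describe_execution(background_processing: bool, job_class: str) -> str:
    """Summarize how the generated ABAP code is deployed and run."""
    if not background_processing:
        # Deprecated dialog mode: the code is deployed as an RFC that ODI calls.
        return "RFC called by ODI (dialog mode, deprecated)"
    # Enum lookup by name raises KeyError for anything other than A, B or C.
    cls = JobClass[job_class.upper()]
    return f"ABAP report run as background job, class {cls.name} ({cls.value})"


print(describe_execution(True, "a"))
# ABAP report run as background job, class A (highest priority)
print(describe_execution(False, "B"))
# RFC called by ODI (dialog mode, deprecated)
```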

7.7.3 Controlling ABAP Uploading / ABAP code in production

During development, ODI generates ABAP code and uploads it into the SAP development system with every mapping execution. This automatic code upload allows quick development cycles.

Once a Mapping or Package has been unit tested and is ready to be migrated out of the development environment, the generated SAP ABAP code has to be transported to the respective SAP system using SAP's CTS (Change and Transport System) like any other SAP ABAP code. This is standard SAP practice. To facilitate this task, SAP transport requests are automatically created during upload into development. Please contact your SAP administrator for transporting generated ODI SAP ABAP programs.

In case you are working with distinct ODI repositories for dev, test and production, ensure that your ODI scenario matches the ODI ABAP code of the respective SAP system. That is, you have to transport the SAP ABAP code using SAP CTS from your SAP development system to your SAP QA system *and* transport the ODI scenario (which has generated the transported ABAP code) from your ODI development repository to your ODI QA repository. Please see Working with Scenarios of Developing Integration Projects with Oracle Data Integrator for details on how to transport ODI scenarios.

Outside of development, ODI should no longer upload ABAP code. The code has already been transported by SAP's CTS, and non-development systems usually do not allow ABAP uploads.

Uploading can be turned off explicitly by setting the LKM option UPLOAD_ABAP_CODE to No. However, it is usually turned off using the Flexfield "SAP Allow ABAP Upload" defined on the SAP data server in ODI Topology. The ABAP code is uploaded only if the LKM option UPLOAD_ABAP_CODE is set to Yes and the Flexfield SAP Allow ABAP Upload is set to 1. To disable all uploads into production systems, it is sufficient to set the Flexfield "SAP Allow ABAP Upload" to 0 in Topology.
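The upload rule is a logical AND of the KM option and the data server Flexfield. The following Python sketch shows why the same mapping uploads code in development but not in production. The function and data server names are hypothetical, and the Flexfield is modeled as 1 or 0.

```python
def abap_upload_enabled(upload_abap_code: bool, allow_abap_upload: int) -> bool:
    """True only if UPLOAD_ABAP_CODE is Yes and the Flexfield
    "SAP Allow ABAP Upload" on the SAP data server is 1."""
    return upload_abap_code and allow_abap_upload == 1


# One mapping with UPLOAD_ABAP_CODE = Yes, run against two data servers
# (hypothetical names) whose Flexfields differ.
for server, flexfield in [("SAP_DEV", 1), ("SAP_PROD", 0)]:
    print(server, abap_upload_enabled(True, flexfield))
# SAP_DEV True
# SAP_PROD False
```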

Tip:

To configure a mapping that uploads the ABAP code in development but skips the upload in QA or production:

-

Set the KM option UPLOAD_ABAP_CODE to Yes in all mappings.

-

Configure the SAP data servers in Topology as follows:

-

Set the Flexfield SAP Allow ABAP Upload to 1 for all SAP development systems.

-

Set the Flexfield SAP Allow ABAP Upload to 0 for all other SAP systems.

Note:

Before starting the extraction process, ODI verifies that the mapping or scenario matches the code installed in SAP. If there is a discrepancy (for example, if the scenario was modified but the ABAP code was not re-uploaded), an exception is thrown.

In some situations, such as automated installations, you may want to install a mapping's ABAP extraction code without extracting any data. In this case, link all mappings inside a package and set the KM option EXECUTE_ABAP_CODE to False in every mapping. Executing this package then installs all ABAP code but does not execute it.

To avoid modifying all mappings (setting EXECUTE_ABAP_CODE to False as described above), you can instead disable all SAP ABAP executions using the Flexfield SAP Allow ABAP Execute on the ODI data server. If this Flexfield is disabled, the ABAP code is not executed even if the KM option EXECUTE_ABAP_CODE is set to True.
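Execution is gated in the same way as uploading, so an install-only run is possible without changing the mappings. The following Python sketch illustrates this with hypothetical names, modeling both Flexfields as 1 for enabled and 0 for disabled. In the example, code is uploaded to the SAP system but not executed, because SAP Allow ABAP Execute is disabled.

```python
from typing import NamedTuple


class RunPlan(NamedTuple):
    upload: bool   # ABAP code is installed in SAP
    execute: bool  # ABAP code is run to extract data


def plan_run(upload_abap_code: bool, allow_upload_ff: int,
             execute_abap_code: bool, allow_execute_ff: int) -> RunPlan:
    """Combine the KM options with the data server Flexfields (1 = enabled)."""
    return RunPlan(
        upload=upload_abap_code and allow_upload_ff == 1,
        execute=execute_abap_code and allow_execute_ff == 1,
    )


# Install-only run: EXECUTE_ABAP_CODE stays True in every mapping, but the
# Flexfield "SAP Allow ABAP Execute" is disabled on the data server.
print(plan_run(True, 1, True, 0))  # RunPlan(upload=True, execute=False)
```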

Manual Upload

In some cases, automatic upload may not be allowed even in development systems. For such situations, the KM option MANUAL_ABAP_CODE_UPLOAD enables manual uploads. If this option is set to true, ODI creates a text file containing the generated ABAP code. Note that any ABAP code generation, including generation for manual upload, requires the data server Flexfield SAP Allow ABAP Upload to be set to 1. By default, the text file is created as "REPORT_" + ABAP_PROG_NAME + ".ABAP" in the system's temp folder ("java.io.tmpdir"). This file is handed over to the SAP administrator, who installs the code. Once the code is installed, the ODI mapping can be executed with MANUAL_ABAP_CODE_UPLOAD and UPLOAD_ABAP_CODE both set back to false.
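The default location of the generated file can be reproduced as follows. In this Python sketch, `tempfile.gettempdir()` stands in for Java's `java.io.tmpdir`. The helper name `manual_upload_file` is hypothetical. The file name pattern is the one given above.

```python
import os
import tempfile
from typing import Optional


def manual_upload_file(abap_prog_name: str, folder: Optional[str] = None) -> str:
    """Path of the text file written when MANUAL_ABAP_CODE_UPLOAD is true.

    File name pattern: "REPORT_" + ABAP_PROG_NAME + ".ABAP".
    tempfile.gettempdir() is used here as a stand-in for java.io.tmpdir.
    """
    base = folder if folder is not None else tempfile.gettempdir()
    return os.path.join(base, "REPORT_" + abap_prog_name + ".ABAP")


print(manual_upload_file("ZODI_DWH_SALES_DATA01", "/tmp"))
# On UNIX: /tmp/REPORT_ZODI_DWH_SALES_DATA01.ABAP
```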

7.7.4 Managing ODI SAP Transport Requests

During development, ABAP code is uploaded to the SAP system with every mapping execution. More precisely:

An ODI mapping extracting SAP data generates one or several ABAP extraction programs. For example, two extraction jobs are created when the join location is set to staging. By default, all ABAP extraction programs of one mapping are assigned to one SAP function group, and the programs of a different mapping are assigned to a different SAP function group. The default function group name is similar to ZODI_FGR_<Mapping Id>.

During upload, an SAP CTS transport request is created for each ODI mapping (that is, for each SAP function group). This allows granular deployment of the generated ODI ABAP extraction programs via SAP CTS.

Grouping (Background Processing)

To group the ABAP code of multiple ODI mappings into a single transport request for coarser-grained deployment control, perform the following steps:

-

Set the KM option SAP_REPORT_NAME_PREFIX to a common prefix for all ABAP reports in all mappings, for example ZODI_DWH_SALES_.

-

Set the KM option ABAP_PROGRAM_NAME individually for every mapping, for example LOAD01, LOAD02, and so on.

These sample settings would result in a single transport request containing the ABAP reports called ZODI_DWH_SALES_LOAD01, ZODI_DWH_SALES_LOAD02, etc.
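The resulting report names consist of the prefix followed by each mapping's program name. The Python sketch below uses a hypothetical helper to build them. As an extra safeguard of its own, the sketch rejects duplicate ABAP_PROGRAM_NAME values, because two mappings with the same name would map to the same report.

```python
from typing import List


def report_names(prefix: str, program_names: List[str]) -> List[str]:
    """Combine SAP_REPORT_NAME_PREFIX with each mapping's ABAP_PROGRAM_NAME."""
    names = [prefix + name for name in program_names]
    duplicates = sorted({n for n in names if names.count(n) > 1})
    if duplicates:
        # Safeguard added by this sketch: each mapping needs its own report.
        raise ValueError(f"duplicate ABAP report names: {duplicates}")
    return names


print(report_names("ZODI_DWH_SALES_", ["LOAD01", "LOAD02"]))
# ['ZODI_DWH_SALES_LOAD01', 'ZODI_DWH_SALES_LOAD02']
```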

Grouping (Dialog mode processing, deprecated)

To group the ABAP code of multiple ODI mappings into a single transport request for coarser-grained deployment control, set the KM option SAP_FUNCTION_GROUP for all LKMs in these mappings to a user-defined value, for example ZODI_FGR_DWH_SALES. ODI then generates all ABAP extraction programs into the same SAP function group, which is attached to a single transport request. Contact your SAP administrator for valid function group names at your site.

Tip:

By default, the name of a generated ABAP extraction program is similar to ZODI_<Mapping Id>_<SourceSet Id>. These unique program names make development convenient. However, while the Mapping Id never changes, certain changes to an ODI mapping can change the SourceSet Id and, consequently, the name of the respective extraction program. It is therefore recommended to use user-defined program names once development stabilizes. You can set ABAP program names by defining a value for the LKM option ABAP_PROGRAM_NAME, for example ZODI_DWH_SALES_DATA01. Contact your SAP administrator for applicable naming conventions.
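The Python sketch below illustrates how a changing SourceSet Id affects the default program name and how ABAP_PROGRAM_NAME keeps the name stable. The defaults are documented only as similar to ZODI_<Mapping Id>_<SourceSet Id> and ZODI_FGR_<Mapping Id>, so the formats here are approximations. The IDs and function names are hypothetical.

```python
from typing import Optional


def default_function_group(mapping_id: str) -> str:
    """Approximation of the default function group ZODI_FGR_<Mapping Id>."""
    return f"ZODI_FGR_{mapping_id}"


def program_name(mapping_id: str, source_set_id: str,
                 abap_program_name: Optional[str] = None) -> str:
    """ABAP_PROGRAM_NAME wins when set; otherwise approximate the default."""
    if abap_program_name:
        return abap_program_name
    return f"ZODI_{mapping_id}_{source_set_id}"


print(default_function_group("1234"))                      # ZODI_FGR_1234
print(program_name("1234", "1"))                           # ZODI_1234_1
print(program_name("1234", "2"))                           # ZODI_1234_2 (SourceSet Id changed)
print(program_name("1234", "2", "ZODI_DWH_SALES_DATA01"))  # stable user-defined name
```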

Transport Request Description

When ODI creates a transport request, it sets the request description to the text provided in the KM option SAP_TRANSPORT_REQUEST_DESC. This description applies to the function group defined in the KM option FUNCTION_GROUP.

By default, the ODI Step name (which is usually the mapping name) will be used.

Code generation expressions such as ODI:<%=odiRef.getPackage("PACKAGE_NAME")%> may be useful when grouping several mappings into one SAP function group or SAP transport request.
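Because the default description is the step name, mappings that share one function group, and therefore one transport request, would each propose a different description. The Python sketch below collects the descriptions per function group so that such mismatches are easy to spot. It uses hypothetical names, with tuples standing in for mapping settings, and it does not reflect how ODI resolves them.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# (ODI step name, SAP_TRANSPORT_REQUEST_DESC or None, SAP function group)
MappingSettings = Tuple[str, Optional[str], str]


def request_descriptions(mappings: List[MappingSettings]) -> Dict[str, List[str]]:
    """Return the distinct descriptions proposed for each function group."""
    by_group = defaultdict(set)
    for step_name, desc, function_group in mappings:
        # SAP_TRANSPORT_REQUEST_DESC when set, otherwise the step name.
        by_group[function_group].add(desc or step_name)
    return {group: sorted(descs) for group, descs in by_group.items()}


defaults = [("LOAD_CUSTOMERS", None, "ZODI_FGR_DWH_SALES"),
            ("LOAD_ORDERS", None, "ZODI_FGR_DWH_SALES")]
shared = [("LOAD_CUSTOMERS", "DWH_SALES", "ZODI_FGR_DWH_SALES"),
          ("LOAD_ORDERS", "DWH_SALES", "ZODI_FGR_DWH_SALES")]
print(request_descriptions(defaults))
# {'ZODI_FGR_DWH_SALES': ['LOAD_CUSTOMERS', 'LOAD_ORDERS']}
print(request_descriptions(shared))
# {'ZODI_FGR_DWH_SALES': ['DWH_SALES']}
```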

7.7.5 SAP Packages and SAP Function Groups

SAP Packages

All SAP objects installed by the ODI SAP Adapter are grouped into SAP packages:

-

ZODI_RKM_PCKG contains any RKM-related objects. These objects are used during the development phase.

-

ZODI_LKM_PCKG contains any extraction programs generated by any SAP LKM.

If requested by the SAP administrator, these default names can be overwritten using the KM options SAP_PACKAGE_NAME_ODI_DEV and SAP_PACKAGE_NAME_ODI_PROD. These values must be set during the first-time installation. Changing them later requires uninstalling and then reinstalling the ODI SAP Adapter.

SAP Function Groups

All SAP function modules are grouped into SAP function groups:

-

ZODI_FGR contains any function modules needed during development, e.g. for retrieving metadata. These function modules are installed by the RKM during first-time installation.

The default name can be overwritten during first-time RKM installation using the RKM option SAP_FUNCTION_GROUP_ODI_DEV.

-

ZODI_FGR_PROD contains any function modules needed at runtime in production, e.g. for monitoring ABAP report execution status. These function modules are installed by the RKM during first-time installation.

The default name can be overwritten during first-time RKM installation using the RKM option SAP_FUNCTION_GROUP_ODI_PROD.

-

ZODI_FGR_PROD_... contains any data extraction function modules. Such function modules are generated by the LKM when using deprecated dialog mode (BACKGROUND_PROCESSING = false).

By default every mapping uses its own function group. The default values can be overwritten using the LKM option SAP_FUNCTION_GROUP, which is independent of the two function group names mentioned above.

See Managing ODI SAP Transport Requests for more information.

7.7.6 Log Files

During RKM and LKM execution, many messages are logged in ODI's logging facility. These messages, in the ODI log files and other log files, may contain valuable details for troubleshooting. See Runtime Logging for ODI components of Administering Oracle Data Integrator for details about ODI's logging facility. Table 7-2 describes the different log files and their usage:

Table 7-2 Log Files

| Default Log File Name | KM / Phase | Content |
|---|---|---|
|  | RKM | Execution log of metadata retrieval |
|  | RKM | Information about first-time installation of SAP RFC for RKM. Note: Only for RKM-based installation. |
|  | LKM - Generation Time | Information about code generation for ABAP extractor |
|  | LKM - Runtime | Information about installation of ABAP extractor |
|  | LKM - Runtime | Information about delta extraction |
|  | LKM - Runtime | SQL*Loader log file |
|  | LKM - Runtime | OS standard output during SQL*Loader execution; may contain information, for example when SQL*Loader is not installed |
|  | LKM - Runtime | OS error output during SQL*Loader execution; may contain information, for example when SQL*Loader is not installed |

7.7.7 Limitation of the SAP BW Adapter

The SAP ABAP BW adapter has the following limitations:

-

The Test button for validating SAP Connection definition in ODI's Topology manager is not supported.

-

The SAP BW data store type (InfoCube, InfoObject, ODS/DSO, OpenHub, Hierarchy, and Text Table) cannot be changed after a table has been reverse-engineered.

-

The SAP ABAP KMs only support Ordered Joins.

-

Full outer joins and right outer joins are not supported.

-

In one-to-many relationships (an InfoCube joined to its associated InfoObjects), the InfoCube should be the first data target, followed by the InfoObjects and their text tables.

-

SAP BW MultiCubes are not supported.

MultiCubes are a logical view on physical InfoCubes and do not hold any data themselves. Consider extracting from the base InfoCubes and then joining the data in the staging area using ODI.

-

InfoCubes can be left-outer joined to multiple InfoObjects on source.

Note:

-

Only one of these InfoObjects can be joined to its text table on source.

-

Joining multiple InfoObjects to their respective Text tables is possible on staging.


-

Hierarchy datastores cannot be joined on source with any other SAP BW objects.

-

Text datastores of InfoObjects having no master data cannot be joined on source with any other SAP BW objects.

-

OpenHub datastores cannot be joined on source with any other SAP BW objects.

-

Only the attribute RSHIENM can be filtered on, using a constant string value, for example:

HIER_0GL_ACCOUNT.RSHIENM = 'MYHIER1'
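Several of these limitations can be checked mechanically before running a mapping. The Python sketch below encodes a subset of them: unsupported join types, datastores that cannot be joined on source, MultiCubes, the InfoCube-first rule, the single text-table join on source, and the RSHIENM filter form. The type labels, class, function, and datastore names are hypothetical and do not correspond to ODI metadata. The rules are simplified readings of the list above.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical type labels used only by this sketch.
NO_SOURCE_JOIN = {"HIERARCHY", "OPENHUB", "TEXT_NO_MASTER_DATA"}
UNSUPPORTED_JOINS = {"FULL OUTER", "RIGHT OUTER"}
RSHIENM_FILTER = re.compile(r"\s*\w+\.RSHIENM\s*=\s*'[^']*'\s*")


@dataclass
class SourceJoin:
    left: str
    left_type: str   # e.g. INFOCUBE, INFOOBJECT, TEXT, HIERARCHY, OPENHUB
    right: str
    right_type: str
    join_type: str   # e.g. INNER, LEFT OUTER


def check_source_joins(joins: List[SourceJoin]) -> List[str]:
    """Return human-readable violations for joins executed on source."""
    problems = []
    text_joins = 0
    for j in joins:
        if j.join_type.upper() in UNSUPPORTED_JOINS:
            problems.append(f"{j.left}/{j.right}: {j.join_type} joins are not supported")
        for name, kind in ((j.left, j.left_type), (j.right, j.right_type)):
            if kind in NO_SOURCE_JOIN:
                problems.append(f"{name}: {kind} datastores cannot be joined on source")
            elif kind == "MULTICUBE":
                problems.append(f"{name}: MultiCubes are not supported")
        if j.left_type == "INFOOBJECT" and j.right_type == "TEXT":
            text_joins += 1
    has_cube = any("INFOCUBE" in (j.left_type, j.right_type) for j in joins)
    if has_cube and joins[0].left_type != "INFOCUBE":
        problems.append("the InfoCube should be the first data target")
    if text_joins > 1:
        problems.append("only one InfoObject can be joined to its text table on source")
    return problems


def is_supported_rshienm_filter(expression: str) -> bool:
    """True for filters of the form <datastore>.RSHIENM = '<constant>'."""
    return RSHIENM_FILTER.fullmatch(expression) is not None


joins = [  # hypothetical datastore names
    SourceJoin("SALES_CUBE", "INFOCUBE", "MATERIAL", "INFOOBJECT", "LEFT OUTER"),
    SourceJoin("MATERIAL", "INFOOBJECT", "MATERIAL_TEXT", "TEXT", "LEFT OUTER"),
    SourceJoin("SALES_CUBE", "INFOCUBE", "CUSTOMER", "INFOOBJECT", "LEFT OUTER"),
    SourceJoin("CUSTOMER", "INFOOBJECT", "CUSTOMER_TEXT", "TEXT", "LEFT OUTER"),
]
print(check_source_joins(joins))
# ['only one InfoObject can be joined to its text table on source']
print(is_supported_rshienm_filter("HIER_0GL_ACCOUNT.RSHIENM = 'MYHIER1'"))  # True
```

Joining the second InfoObject to its text table in the staging area instead, as described above, would clear the reported violation.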