7 Federating Caches Across Clusters

This chapter includes the following sections:

- Overview of Federated Caching

Federated caching federates cache data asynchronously across multiple geographically dispersed clusters. Cached data is federated across clusters to provide redundancy, off-site backup, and multiple points of access for application users in different geographical locations.

- General Steps for Setting Up Federated Caching

Federated caching is configuration based and in most cases requires no application changes. Setup includes configuring federation participants, topologies, and caches.

- Defining Federation Participants

Each Coherence cluster in a federation must be defined as a federation participant. Federation participants are defined in an operational override file.

- Changing the Default Settings of Federation Participants

Federation participants can be explicitly configured to override their default settings.

- Understanding Federation Topologies

Federation topologies determine how data is federated and synchronized between cluster participants in a federation.

- Defining Federation Topologies

A topology definition includes the federation roles that each cluster participant performs in the topology. Multiple topologies can be defined and participants can be part of multiple topologies.

- Defining Federated Cache Schemes

Each participant in the federation must include a federated cache scheme in its cache configuration file.

- Associating a Federated Cache with a Federation Topology

A federated cache must be associated with a topology for data to be federated to federation participants.

- Overriding the Destination Cache

The default behavior of the federation service is to federate data to a cache on the remote participant using the same cache name that is defined on the local participant. A different remote cache can be explicitly specified if required.

- Excluding Caches from Being Federated

Federated caches can be excluded from being federated to other participants.

- Limiting Federation Service Resource Usage

The federation service uses an internal journal record cache to track cache data changes which have not yet been federated to other federation participants. This journal record cache is stored in Elastic Data. The journal record cache can grow large in some circumstances such as when federation is paused, if there is a significant spike in the rate of cache changes, or if a destination participant cluster is temporarily unreachable.

- Resolving Federation Conflicts

Applications can implement any custom logic that is needed to resolve conflicts that may arise between concurrent updates of the same entry.

- Using a Specific Network Interface for Federation Communication

Federation communication can be configured to use a network interface that is different than the interface used for cluster communication.

- Load Balancing Federated Connections

By default, a federation-based strategy is used that distributes connections to federated service members that are being utilized the least. Custom strategies can be created or the default strategy can be modified as required.

- Managing Federated Caching

Federated caching should be managed on each cluster participant in the same manner as any non-federated cluster and distributed cache to ensure optimal performance and resource usage.


Overview of Federated Caching

Note:

Care should be taken when issuing the following cache operations because they are not supported or federated to the destination participants and will cause inconsistencies in your federated data:

- Destroy Cache – If you issue this operation, the local cluster’s cache will be destroyed, but any destination caches will be left intact.

- Truncate Cache – If you issue this operation, the local cluster’s cache will be truncated, but any destination caches will be left intact.

This section includes the following topics:

- Multiple Federation Topologies

- Conflict Resolution

- Federation Configuration

- Management and Monitoring


Multiple Federation Topologies

Federated caching supports multiple federation topologies. These include:

- active-active

- active-passive

- hub-spoke

- central-federation

The topologies define common federation strategies between clusters and support a wide variety of use cases. Custom federation topologies can also be created as required.


Conflict Resolution

Federated caching provides applications with the ability to accept, reject, or modify cache entries being stored locally or remotely. Conflict resolution is application specific to allow the greatest amount of flexibility when defining federation rules.


Federation Configuration

Federated caching is configured using Coherence configuration files and requires no changes to application code. An operational override file is used to configure federation participants and the federation topology. A cache configuration file is used to create federated caches schemes. A federated cache is a type of partitioned cache service and is managed by a federated cache service instance.


Management and Monitoring

Federated caching is managed using attributes and operations from the FederationManagerMBean, DestinationMBean, OriginMBean, and TopologyMBean MBeans. These MBeans make it easy to perform administrative operations, such as starting and stopping federation, and to monitor federation configuration and performance statistics. Many of these statistics and operations are also available from the Coherence VisualVM plug-in, Coherence CLI, or Grafana dashboards.

Federation attributes and statistics are aggregated in the federation-status, federation-origin, and federation-destination reports.

In addition, as with any distributed cache, federated services and caches can be managed and monitored using the attributes and operations of the ServiceMBean MBean and CacheMBean MBean, the related reports, and the VisualVM plug-in tabs.

For more information about managing Coherence federation, see Managing Federated Caching.


General Steps for Setting Up Federated Caching

To set up federated caching:

- Ensure that all clusters that are participating in the federation are operational and that you know the address (host and cluster port) of at least one cache server in each cluster.

- Configure each cluster with a list of the cluster participants that are in the federation. See Defining Federation Participants.

- Configure each cluster with a topology definition that specifies how data is federated among cluster participants. See Defining Federation Topologies.

- Configure each cluster with a federated cache scheme that is used to store cached data. See Defining Federated Cache Schemes.

- Configure the federated cache on each cluster to use a defined federation topology. See Associating a Federated Cache with a Federation Topology.
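As a rough sketch of steps 2 and 3, the participant list and a topology definition can sit side by side in one operational override file. The file name `tangosol-coherence-override.xml`, the `<coherence>` root element with its namespace declarations, and the choice of an active-passive topology are assumptions made for illustration; the participant names and addresses are the placeholder values used in the examples later in this chapter.

```xml
<?xml version="1.0"?>
<!-- Hypothetical tangosol-coherence-override.xml. Names and addresses are
     placeholders; each cluster in the federation needs these definitions. -->
<coherence xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
           xmlns="http://xmlns.oracle.com/coherence/coherence-operational-config"
           xsi:schemaLocation="http://xmlns.oracle.com/coherence/coherence-operational-config
                               coherence-operational-config.xsd">
  <federation-config>
    <!-- Step 2: list every cluster that takes part in the federation -->
    <participants>
      <participant>
        <name>LocalClusterA</name>
        <remote-addresses>
          <socket-address>
            <address>192.168.1.7</address>
            <port>7574</port>
          </socket-address>
        </remote-addresses>
      </participant>
      <participant>
        <name>RemoteClusterB</name>
        <remote-addresses>
          <socket-address>
            <address>192.168.10.16</address>
            <port>9001</port>
          </socket-address>
        </remote-addresses>
      </participant>
    </participants>
    <!-- Step 3: describe how data flows between the participants -->
    <topology-definitions>
      <active-passive>
        <name>MyTopology</name>
        <active>LocalClusterA</active>
        <passive>RemoteClusterB</passive>
      </active-passive>
    </topology-definitions>
  </federation-config>
</coherence>
```

Steps 4 and 5 are then handled in the cache configuration file, where a federated scheme references `MyTopology` by name.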


Defining Federation Participants

To define federation participants, include any number of <participant> elements within the <participants> element. Use the <name> element to define a name for the participant and the <remote-addresses> element to define the address and port of at least one cache server or proxy that is located in the participant cluster. Enter the cluster port if you are using the NameService service to look up ephemeral ports. Entering an exact port is typically only used for environments which cannot use the NameService service for address lookups. The following example defines multiple participants and demonstrates both methods for specifying a remote address:

<federation-config>
  <participants>
    <participant>
      <name>LocalClusterA</name>
      <remote-addresses>
        <socket-address>
          <address>192.168.1.7</address>
          <port>7574</port>
        </socket-address>
      </remote-addresses>
    </participant>
    <participant>
      <name>RemoteClusterB</name>
      <remote-addresses>
        <socket-address>
          <address>192.168.10.16</address>
          <port>9001</port>
        </socket-address>
      </remote-addresses>
    </participant>
    <participant>
      <name>RemoteClusterC</name>
      <remote-addresses>
        <socket-address>
          <address>192.168.19.25</address>
          <port>9001</port>
        </socket-address>
      </remote-addresses>
    </participant>
  </participants>
</federation-config>

The <address> element also supports external NAT addresses that route to local addresses; however, the external and local addresses must use the same port number.


Changing the Default Settings of Federation Participants

The default settings include:

- The federation state that a cluster participant is in when the cluster is started.

- The connect time-out to a destination cluster.

- The send time-out for acknowledgement messages from a destination cluster.

- The maximum bandwidth, per member, for sending federated data to a destination participant. This value is loaded from the source member's configuration of the destination participant.

Note:

The value of maximum bandwidth can be specified as a combination of a decimal factor and a unit descriptor such as Mbps, KBps, and so on. If no unit is specified, a unit of bps (bits per second) is assumed.

- The location meta-data for the participant.

To change the default settings of federation participants, edit the operational override file for the cluster and modify the <participant> definition. Update the value of each setting as required. For example:

<participant>
  <name>ClusterA</name>
  <initial-action>start</initial-action>
  <connect-timeout>1m</connect-timeout>
  <send-timeout>5m</send-timeout>
  <max-bandwidth>100Mbps</max-bandwidth>
  <geo-ip>Philadelphia</geo-ip>
  <remote-addresses>
    <socket-address>
      <address>192.168.1.7</address>
      <port>7574</port>
    </socket-address>
  </remote-addresses>
</participant>


Understanding Federation Topologies

The supported federation topologies are:

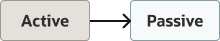

Active-Passive Topologies

Active-passive topologies are used to federate data from an active cluster to a passive cluster. Data that is put into the active cluster is federated to the passive cluster. If data is put into the passive cluster, then it does not get federated to the active cluster. Consider using active-passive topologies when a copy of cached data is required for read-only operations or an off-site backup is required.

Figure 7-1 provides a conceptual view of an active-passive topology.

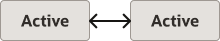

Active-Active Topologies

Active-active topologies are used to federate data between active clusters. Data that is put into one active cluster is federated to the other active clusters. The active-active topology ensures that cached data is always synchronized between clusters. Consider using an active-active topology to provide applications in multiple geographical locations with access to a local cluster instance.

Figure 7-2 provides a conceptual view of an active-active topology.

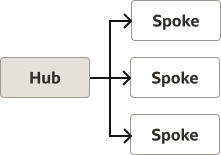

Hub and Spoke Topologies

Hub and spoke topologies are used to federate data from a single hub cluster to multiple spoke clusters. The hub cluster can only send data and spoke clusters can only receive data. Consider using a hub and spoke topology when multiple geographically dispersed copies of a cluster are required. Each spoke cluster can be used by local applications to perform read-only operations.

Figure 7-3 provides a conceptual view of a hub and spoke topology.

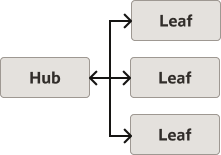

Central Federation Topologies

Central federation topologies are used to federate data from a single hub to multiple leaf clusters. In addition, each leaf can send data to the hub cluster and the hub cluster re-sends (repeats) the data to all the other leaf clusters. Consider using a central federation topology to provide applications in multiple geographical locations with access to a local cluster instance.

Figure 7-4 provides a conceptual view of a central federation topology.

Custom Federation Topologies

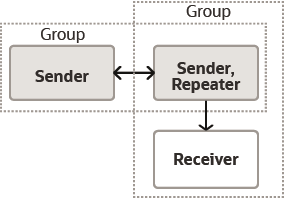

Custom federation topologies are used to create free-form topologies. Clusters are organized into groups and each cluster is designated with a role in the group. The roles include: sender, receiver, or repeater. A sender participant only federates changes occurring on the local cluster. A repeater federates both local cluster changes as well as changes it receives from other participants. Only sender and repeater clusters can federate data to other clusters in the group. Consider creating a custom federation topology if the pre-defined federation topologies do not address the federation requirements of a cache.

Figure 7-5 provides a conceptual view of a custom federation topology in one possible configuration.


Defining Federation Topologies

Federation topologies are defined in an operational override file within the <federation-config> element. If you are unsure about which federation topology to use, then see Understanding Federation Topologies before completing the instructions in this section.

Note:

If no topology is defined, then all the participants are assumed to be in an active-active topology.
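Put differently, leaving the topology out is expected to behave much like an explicit active-active definition that lists every participant. The following sketch assumes the three participants from the earlier participant example; the topology name `AllActive` is hypothetical.

```xml
<federation-config>
  ...
  <topology-definitions>
    <!-- Illustrative explicit form of the implicit default:
         every defined participant is active -->
    <active-active>
      <name>AllActive</name>
      <active>LocalClusterA</active>
      <active>RemoteClusterB</active>
      <active>RemoteClusterC</active>
    </active-active>
  </topology-definitions>
</federation-config>
```

Writing the topology out explicitly may be preferable when more topologies are likely to be added later, because a federated scheme can then reference each one by name.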

This section includes the following topics:

- Defining Active-Passive Topologies

- Defining Active-Active Topologies

- Defining Hub and Spoke Topologies

- Defining Central Federation Topologies

- Defining Custom Topologies


Defining Active-Passive Topologies

To configure active-passive topologies, edit the operational override file and include an <active-passive> element within the <topology-definitions> element. Use the <name> element to include a name that is used to reference this topology. Use the <active> element to define active participants and the <passive> element to define passive participants. For example:

<federation-config>
  ...
  <topology-definitions>
    <active-passive>
      <name>MyTopology</name>
      <active>LocalClusterA</active>
      <passive>RemoteClusterB</passive>
    </active-passive>
  </topology-definitions>
</federation-config>

With this topology, changes that are made on LocalClusterA are federated to RemoteClusterB, but changes that are made on RemoteClusterB are not federated to LocalClusterA.


Defining Active-Active Topologies

To configure active-active topologies, edit the operational override file and include an <active-active> element within the <topology-definitions> element. Use the <name> element to include a name that is used to reference this topology. Use the <active> element to define active participants. For example:

<federation-config>
  ...
  <topology-definitions>
    <active-active>
      <name>MyTopology</name>
      <active>LocalClusterA</active>
      <active>RemoteClusterB</active>
    </active-active>
  </topology-definitions>
</federation-config>

With this topology, changes that are made on LocalClusterA are federated to RemoteClusterB and changes that are made on RemoteClusterB are federated to LocalClusterA.


Defining Hub and Spoke Topologies

To configure hub and spoke topologies, edit the operational override file and include a <hub-spoke> element within the <topology-definitions> element. Use the <name> element to include a name that is used to reference this topology. Use the <hub> element to define the hub participant and the <spoke> element to define the spoke participants. For example:

<federation-config>
  ...
  <topology-definitions>
    <hub-spoke>
      <name>MyTopology</name>
      <hub>LocalClusterA</hub>
      <spoke>RemoteClusterB</spoke>
      <spoke>RemoteClusterC</spoke>
    </hub-spoke>
  </topology-definitions>
</federation-config>

With this topology, changes that are made on LocalClusterA are federated to RemoteClusterB and RemoteClusterC, but changes that are made on RemoteClusterB and RemoteClusterC are not federated to LocalClusterA.


Defining Central Federation Topologies

To configure central federation topologies, edit the operational override file and include a <central-replication> element within the <topology-definitions> element. Use the <name> element to include a name that is used to reference this topology. Use the <hub> element to define the hub participant and the <leaf> element to define the leaf participants. For example:

<federation-config>
  ...
  <topology-definitions>
    <central-replication>
      <name>MyTopology</name>
      <hub>LocalClusterA</hub>
      <leaf>RemoteClusterB</leaf>
      <leaf>RemoteClusterC</leaf>
    </central-replication>
  </topology-definitions>
</federation-config>

With this topology, changes that are made on LocalClusterA are federated to RemoteClusterB and RemoteClusterC. Changes that are made on RemoteClusterB or RemoteClusterC are federated to LocalClusterA, which re-sends the data to the other cluster participant.


Defining Custom Topologies

To configure custom topologies, edit the operational override file and include a <custom-topology> element within the <topology-definitions> element. Use the <name> element to include a name that is used to reference this topology. Use the <group> element within the <groups> element to define the role (sender, repeater, or receiver) for each participant in the group. For example:

<federation-config>
  ...
  <topology-definitions>
    <custom-topology>
      <name>MyTopology</name>
      <groups>
        <group>
          <sender>LocalClusterA</sender>
          <sender>RemoteClusterB</sender>
        </group>
        <group>
          <repeater>LocalClusterA</repeater>
          <receiver>RemoteClusterC</receiver>
        </group>
      </groups>
    </custom-topology>
  </topology-definitions>
</federation-config>

With this topology, changes that are made on LocalClusterA or RemoteClusterB are federated to RemoteClusterC. Any changes made on RemoteClusterC are not federated to LocalClusterA or RemoteClusterB.


Defining Federated Cache Schemes

The <federated-scheme> element is used to define federated caches. Any number of federated caches can be defined in a cache configuration file. See federated-scheme in Developing Applications with Oracle Coherence.

The federated caches on all participants must be managed by the same federated service instance. The service is specified using the <service-name> element.

Example 7-1 defines a basic federated cache scheme that uses federated as the scheme name and federated as the service instance name. The scheme is mapped to the cache name example. The <autostart> element is set to true to start the federated cache service on a cache server node.

Example 7-1 Sample Federated Cache Definition

<?xml version="1.0" encoding="windows-1252"?>
<cache-config xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
              xmlns="http://xmlns.oracle.com/coherence/coherence-cache-config"
              xsi:schemaLocation="http://xmlns.oracle.com/coherence/coherence-cache-config
                                  coherence-cache-config.xsd">
  <caching-scheme-mapping>
    <cache-mapping>
      <cache-name>example</cache-name>
      <scheme-name>federated</scheme-name>
    </cache-mapping>
  </caching-scheme-mapping>
  <caching-schemes>
    <federated-scheme>
      <scheme-name>federated</scheme-name>
      <service-name>federated</service-name>
      <backing-map-scheme>
        <local-scheme/>
      </backing-map-scheme>
      <autostart>true</autostart>
    </federated-scheme>
  </caching-schemes>
</cache-config>


Associating a Federated Cache with a Federation Topology

Note:

If no topology is defined (all participants are assumed to be in an active-active topology) or if only one topology is defined, then a topology name does not need to be specified in a federated scheme definition.

To associate a federated cache with a federation topology, include a <topology> element within the <topologies> element and use the <name> element to reference a federation topology that is defined in an operational override file. For example:

<federated-scheme>
  <scheme-name>federated</scheme-name>
  <service-name>federated</service-name>
  <backing-map-scheme>
    <local-scheme />
  </backing-map-scheme>
  <autostart>true</autostart>
  <topologies>
    <topology>
      <name>MyTopology</name>
    </topology>
  </topologies>
</federated-scheme>

A federated cache can be associated with multiple federation topologies. For example:

<federated-scheme>
  <scheme-name>federated</scheme-name>
  <service-name>federated</service-name>
  <backing-map-scheme>
    <local-scheme />
  </backing-map-scheme>
  <autostart>true</autostart>
  <topologies>
    <topology>
      <name>MyTopology1</name>
    </topology>
    <topology>
      <name>MyTopology2</name>
    </topology>
  </topologies>
</federated-scheme>


Overriding the Destination Cache

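The default behavior of the federation service is to federate data to a cache on the remote participant using the same cache name that is defined on the local participant. A different remote cache can be explicitly specified if required. The destination cache must still be managed by the same federated service instance, which is specified using the <service-name> element.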

To override the default destination cache, include a <cache-name> element within the <topology> element and set the value to the name of the destination cache on the remote participant. For example:

<federated-scheme>
  <scheme-name>federated</scheme-name>
  <service-name>federated</service-name>
  <backing-map-scheme>
    <local-scheme />
  </backing-map-scheme>
  <autostart>true</autostart>
  <topologies>
    <topology>
      <name>MyTopology</name>
      <cache-name>fed-remote</cache-name>
    </topology>
  </topologies>
</federated-scheme>
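For the override to be useful, the remote participant presumably needs a cache mapping that resolves the destination name to a federated scheme managed by the same service. The fragment below is a sketch of what the remote cluster's cache configuration might contain; reusing the scheme name `federated` from the local example is an assumption.

```xml
<!-- Remote participant's cache configuration (illustrative only) -->
<caching-scheme-mapping>
  <cache-mapping>
    <!-- Matches the <cache-name> given in the local topology override -->
    <cache-name>fed-remote</cache-name>
    <!-- Assumed to point at a federated scheme whose <service-name>
         is the same as on the local participant -->
    <scheme-name>federated</scheme-name>
  </cache-mapping>
</caching-scheme-mapping>
```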


Excluding Caches from Being Federated

To exclude a cache from being federated, add the <federated> element set to false as part of the cache mapping definition. For example:

<caching-scheme-mapping>
  <cache-mapping>
    <cache-name>example</cache-name>
    <scheme-name>federated</scheme-name>
  </cache-mapping>
  <cache-mapping>
    <cache-name>excluded-example</cache-name>
    <scheme-name>federated</scheme-name>
    <federated>false</federated>
  </cache-mapping>
</caching-scheme-mapping>
...

Limiting Federation Service Resource Usage

The federation service uses an internal journal record cache to track cache data changes which have not yet been federated to other federation participants. This journal record cache is stored in Elastic Data. The journal record cache can grow large in some circumstances such as when federation is paused, if there is a significant spike in the rate of cache changes, or if a destination participant cluster is temporarily unreachable.

Federation and Elastic Data have configuration options which can limit the total size of the federation journal record cache and limit total memory and disk usage.

Limiting the Total Size of the Federation Journal Record Cache

The federated cache scheme <journalcache-highunits> element can be used to set the maximum allowable size of the federation internal journal record cache. When this limit is reached, the federation service moves the destination participants to ERROR state and removes all pending entries from the journal record cache.

After the cause of the large backlog is determined, the FederationManager MBean or other federated caching management options can be used to restart federation to the participants. For a list of alternative caching management options, see Managing Federated Caching.

To limit federation service resources usage, edit a federated cache scheme and set the <journalcache-highunits> element to the memory limit or number of cache entries allowed in the internal cache before the limit is reached. In the following example, the journal record cache is limited to 2GB in size:

<federated-scheme>
  <scheme-name>federated</scheme-name>
  <service-name>federated</service-name>
  <backing-map-scheme>
    <local-scheme />
  </backing-map-scheme>
  <autostart>true</autostart>
  <journalcache-highunits>2G</journalcache-highunits>
</federated-scheme>

Note:

Valid values for <journalcache-highunits> are memory values with a unit of G, K, or M (for example, 1G, 2K, or 3M) or non-negative integers. A memory value is treated as a memory limit on federation's backlog. If no units are specified, then the value is treated as a limit on the number of entries in the backlog. Zero implies no limit. The default value is 0.
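To contrast with the memory-based limit, the following sketch caps the backlog by entry count instead. The figure of 100000 entries is an arbitrary illustrative value, not a recommendation.

```xml
<federated-scheme>
  <scheme-name>federated</scheme-name>
  <service-name>federated</service-name>
  <backing-map-scheme>
    <local-scheme />
  </backing-map-scheme>
  <autostart>true</autostart>
  <!-- No unit suffix: treated as a maximum number of backlog entries -->
  <journalcache-highunits>100000</journalcache-highunits>
</federated-scheme>
```

An entry-count limit is simpler to reason about when entries are of similar size; a memory limit is likely the safer choice when entry sizes vary widely.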

Configuring Elastic Data for Federation

There are some Elastic Data configuration settings which are especially relevant for federation's use of Elastic Data.

Limiting Elastic Data's Memory Usage

By default, the Elastic Data RAM journal will use up to 25% of the JVM's heap size. If Elastic Data is only being used for federation, the RAM journal's <maximum-size> can be set to a lower limit, such as 5%. See ramjournal-manager in Developing Applications with Oracle Coherence.

Setting Elastic Data's File System Directory and Maximum Size

Unless otherwise specified, Elastic Data's flash journal uses the JVM operating system's temporary directory. You can change the location of journal files with the flashjournal-manager <directory> subelement.

By default, flash journal files will be 2GB in size. You can set flash journal to use smaller file sizes and to limit the total disk space used by flash journal with the flashjournal-manager <maximum-size> subelement. At minimum, flash journal's maximum size should be less than the total disk space capacity reserved for use by the flash journal.

Note that the flash journal maximum size should be larger than the federated scheme's <journalcache-highunits>, so that federation can gracefully clean up the federation journal record cache prior to reaching the flash journal maximum size.

See flashjournal-manager in Developing Applications with Oracle Coherence.
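The Elastic Data settings described above might be combined as in the following operational override sketch. It assumes that `<journaling-config>` is placed under `<cluster-config>`, and that a directory of `/data/coherence/journal` and a flash journal limit of 10GB suit the environment; both values are hypothetical. Element placement and order should be verified against the operational configuration schema for the release in use.

```xml
<coherence>
  <cluster-config>
    <journaling-config>
      <ramjournal-manager>
        <!-- Lower the RAM journal from its 25% default when Elastic Data
             is used only for federation -->
        <maximum-size>5%</maximum-size>
      </ramjournal-manager>
      <flashjournal-manager>
        <!-- Hypothetical location with dedicated disk space -->
        <directory>/data/coherence/journal</directory>
        <!-- Kept larger than the 2G journalcache-highunits example so that
             federation can clean up before the flash journal fills -->
        <maximum-size>10GB</maximum-size>
      </flashjournal-manager>
    </journaling-config>
  </cluster-config>
</coherence>
```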


Resolving Federation Conflicts

This section includes the following topics:

- Processing Federated Connection Events

- Processing Federated Change Events

- Federating Events to Custom Participants


Processing Federated Connection Events

Federated connection events (FederatedConnectionEvent) represent the communication between participants of a federated service. Event types include: CONNECTING, DISCONNECTED, BACKLOG_EXCESSIVE, BACKLOG_NORMAL, and ERROR events. See Federated Connection Events in Developing Applications with Oracle Coherence.

To process federated connection events:

- Create an event interceptor to process the desired event types and implement any custom logic as required. See Handling Live Events in Developing Applications with Oracle Coherence. The following example shows an interceptor that processes ERROR events and prints the participant name to the console.

Note:

Federated connection events are raised on the same thread that caused the event. Interceptors that handle these events must never perform blocking operations.

package com.examples;

import com.tangosol.internal.federation.service.FederatedCacheServiceDispatcher;
import com.tangosol.net.events.EventDispatcher;
import com.tangosol.net.events.EventDispatcherAwareInterceptor;
import com.tangosol.net.events.federation.FederatedConnectionEvent;
import com.tangosol.net.events.annotation.Interceptor;

@Interceptor(identifier = "testConnection",
        federatedConnectionEvents = FederatedConnectionEvent.Type.ERROR)
public class ConnectionInterceptorImp
        implements EventDispatcherAwareInterceptor<FederatedConnectionEvent> {

    // Called for each ERROR connection event; must not block.
    @Override
    public void onEvent(FederatedConnectionEvent event) {
        System.out.println("Participant in Error: " + event.getParticipantName());
    }

    // Register this interceptor only with the federated cache service dispatcher.
    @Override
    public void introduceEventDispatcher(String sIdentifier, EventDispatcher dispatcher) {
        if (dispatcher instanceof FederatedCacheServiceDispatcher) {
            dispatcher.addEventInterceptor(sIdentifier, this);
        }
    }
}

- Register the interceptor in a federated cache scheme. See Registering Event Interceptors in Developing Applications with Oracle Coherence. For example:

<federated-scheme>
  <scheme-name>federated</scheme-name>
  <service-name>federated</service-name>
  <backing-map-scheme>
    <local-scheme />
  </backing-map-scheme>
  <autostart>true</autostart>
  <interceptors>
    <interceptor>
      <name>MyInterceptor</name>
      <instance>
        <class-name>com.examples.ConnectionInterceptorImp</class-name>
      </instance>
    </interceptor>
  </interceptors>
  <topologies>
    <topology>
      <name>MyTopology</name>
    </topology>
  </topologies>
</federated-scheme>

- Ensure the interceptor implementation is found on the classpath at runtime.


Processing Federated Change Events

Federated change events (FederatedChangeEvent) represent a transactional view of all the changes that occur on the local participant. All changes that belong to a single partition are captured in a single FederatedChangeEvent object. From the event, a map of ChangeRecord objects that are indexed by cache name is provided and the participant name to which the change relates is also accessible. Through the ChangeRecord map, you can accept the changes, modify the values, or reject the changes. The object also provides methods to extract or update POF entries using the PofExtractor and PofUpdater APIs.

Event types include: COMMITTING_LOCAL, COMMITTING_REMOTE, and REPLICATING events. REPLICATING events are dispatched before local entries are federated to remote participants. These events are used to perform changes to the entries prior to federation. Any changes performed in the REPLICATING event interceptor are not reflected in the local caches. COMMITTING_LOCAL events are dispatched before entries are inserted locally. They are designed to resolve any local conflicts. COMMITTING_REMOTE events are dispatched before entries from other participants are inserted locally. They are designed to resolve the conflicts between federating entries and local entries. Any changes performed when processing COMMITTING_LOCAL and COMMITTING_REMOTE events are reflected in the local participant caches.

Note:

- In an active-active federation topology, modifications that are made to an entry when processing COMMITTING_REMOTE events are sent back to the originating participant. This can potentially end up in a cyclic loop where changes keep looping through the active participants.

- Interceptors that capture COMMITTING_LOCAL events are not called for passive spoke participants.

- Synthetic operations are not included in federation change events.

To process federated change events:

- Create an event interceptor to process the desired event types and implement any custom logic as required. See Handling Live Events in Developing Applications with Oracle Coherence. The following example shows an interceptor that processes REPLICATING events and changes the value of a specific entry before the entry is federated.

package com.examples;

import com.tangosol.coherence.federation.events.AbstractFederatedInterceptor;
import com.tangosol.coherence.federation.ChangeRecord;
import com.tangosol.coherence.federation.ChangeRecordUpdater;
import com.tangosol.net.events.annotation.Interceptor;
import com.tangosol.net.events.federation.FederatedChangeEvent;

@Interceptor(identifier = "yourIdentifier",
        federatedChangeEvents = FederatedChangeEvent.Type.REPLICATING)
public class MyInterceptor extends AbstractFederatedInterceptor<String, String> {

    public ChangeRecordUpdater<String, String> getChangeRecordUpdater() {
        return updater;
    }

    // Replaces the value of the entry "key" when it is federated to "NewYork".
    public class ChangeRecordUpdate implements ChangeRecordUpdater<String, String> {
        @Override
        public void update(String sParticipant, String sCacheName,
                ChangeRecord<String, String> record) {
            if (sParticipant.equals("NewYork") && (record.getKey()).equals("key")) {
                record.setValue("newyork-key");
            }
        }
    }

    private ChangeRecordUpdate updater = new ChangeRecordUpdate();
}

- Register the interceptor in a federated cache scheme. See Registering Event Interceptors in Developing Applications with Oracle Coherence. For example:

<federated-scheme>
  <scheme-name>federated</scheme-name>
  <service-name>federated</service-name>
  <backing-map-scheme>
    <local-scheme />
  </backing-map-scheme>
  <autostart>true</autostart>
  <interceptors>
    <interceptor>
      <name>MyInterceptor</name>
      <instance>
        <class-name>com.examples.MyInterceptor</class-name>
      </instance>
    </interceptor>
  </interceptors>
  <topologies>
    <topology>
      <name>MyTopology</name>
    </topology>
  </topologies>
</federated-scheme>

- Ensure the interceptor implementation is found on the classpath at runtime.

Federating Events to Custom Participants

Federated ChangeRecord objects can be federated to custom, non-cluster participants in addition to other cluster members. For example, ChangeRecord objects can be saved to a log, message queue, or perhaps one or more databases. Custom participants are implemented as event interceptors for the change records. Custom participants are only receiver participants.

To federate ChangeRecord objects to custom participants:

- Create a FederatedChangeEvent interceptor to process REPLICATING event types and implement any custom logic for ChangeRecord objects. See Handling Live Events in Developing Applications with Oracle Coherence. The following example shows an interceptor for REPLICATING events that processes federation change records. Note that the Map of ChangeRecord objects can be from multiple caches. For each entry in the Map, the key is the cache name and the value is a list of ChangeRecord objects in that cache.

      package example;

      import com.tangosol.coherence.federation.ChangeRecord;
      import com.tangosol.net.events.EventInterceptor;
      import com.tangosol.net.events.annotation.Interceptor;
      import com.tangosol.net.events.federation.FederatedChangeEvent;
      import java.util.Map;

      @Interceptor(identifier = "MyInterceptor", federatedChangeEvents = FederatedChangeEvent.Type.REPLICATING)
      public class MyInterceptorImplChangeEvents implements EventInterceptor<FederatedChangeEvent> {
          @Override
          public void onEvent(FederatedChangeEvent event) {
              final String sParticipantName = "ForLogging";
              // Only handle events that are addressed to the custom participant.
              if (sParticipantName.equals(event.getParticipant())) {
                  // Key: cache name. Value: the change records for that cache.
                  Map<String, Iterable<ChangeRecord<Object, Object>>> mapChanges = event.getChanges();
                  switch (event.getType()) {
                      case REPLICATING:
                          m_cEvents++;
                          for (Map.Entry<String, Iterable<ChangeRecord<Object, Object>>> entry : mapChanges.entrySet()) {
                              for (ChangeRecord<Object, Object> record : entry.getValue()) {
                                  if (record.isDeleted()) {
                                      System.out.println("deleted key: " + record.getKey());
                                  } else {
                                      System.out.println("modified entry, key: " + record.getKey()
                                          + ", value: " + record.getModifiedEntry().getValue());
                                  }
                              }
                          }
                          break;
                      default:
                          throw new IllegalStateException("Expected event of type "
                              + FederatedChangeEvent.Type.REPLICATING
                              + ", but got event of type: " + event.getType());
                  }
              }
          }

          public long getMessageCount() {
              return m_cEvents;
          }

          private volatile long m_cEvents;
      }

- Configure a custom participant in the operational configuration file using interceptor as the participant type and register the interceptor class using the <interceptor> element. For example:

      <participant>
         <name>ForLogging</name>
         <send-timeout>5s</send-timeout>
         <participant-type>interceptor</participant-type>
         <interceptors>
            <interceptor>
               <name>MyInterceptor</name>
               <instance>
                  <class-name>example.MyInterceptorImplChangeEvents</class-name>
               </instance>
            </interceptor>
         </interceptors>
      </participant>

  Note:

  You can register the interceptor class either in the participant configuration (as shown) or in a federated cache scheme. If you register the interceptor class in the participant configuration, then it applies to all the federated cache services that use the participant. Specify the interceptor in a federated cache scheme if you want to control which services use the interceptor. See federated-scheme in Developing Applications with Oracle Coherence.

- Include the custom participant as part of the federation topology for which you want to federate events. For example:

      <topology-definitions>
         <active-active>
            <name>Active</name>
            <active>BOSTON</active>
            <active>NEWYORK</active>
            <interceptor>ForLogging</interceptor>
         </active-active>
      </topology-definitions>

- Ensure the interceptor implementation is found on the classpath at runtime.
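The shape of the data a custom participant receives (a map whose key is the cache name and whose value is the list of change records for that cache) can be illustrated without a running cluster. The sketch below is a self-contained approximation for experimenting with logging logic: `Change` is a hypothetical stand-in for a change record, not the Coherence `ChangeRecord` type, and the log line format is an arbitrary choice.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for a change record: key, new value, and a deleted flag. */
final class Change {
    final Object key;
    final Object value;
    final boolean deleted;

    Change(Object key, Object value, boolean deleted) {
        this.key = key;
        this.value = value;
        this.deleted = deleted;
    }
}

final class ChangeLogFormatter {
    /** Produces one log line per change, walking the per-cache lists in map order. */
    static List<String> format(Map<String, List<Change>> changesByCache) {
        List<String> lines = new ArrayList<>();
        for (Map.Entry<String, List<Change>> entry : changesByCache.entrySet()) {
            String cacheName = entry.getKey();
            for (Change change : entry.getValue()) {
                if (change.deleted) {
                    lines.add(String.format("cache=%s deleted key=%s", cacheName, change.key));
                } else {
                    lines.add(String.format("cache=%s modified key=%s value=%s",
                            cacheName, change.key, change.value));
                }
            }
        }
        return lines;
    }
}
```

Keeping the formatting in a plain helper like this makes it possible to unit test what is written to a log, queue, or database separately from the interceptor that receives the events.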

Using a Specific Network Interface for Federation Communication

To use a different network configuration for federation communication:

Load Balancing Federated Connections

Connections between federated service members are distributed equally across federated service members based upon existing connection count and incoming message backlog. Typically, this algorithm provides the best load balancing strategy. However, you can choose to implement a different load balancing strategy as required.

This section includes the following topics:

- Using Federation-Based Load Balancing

- Implementing a Custom Federation-Based Load Balancing Strategy

- Using Client-Based Load Balancing

Using Federation-Based Load Balancing

Federation-based load balancing is the default strategy that is used to balance connections between two or more members of the same federation service. The strategy distributes connections equally across federated service members based upon existing connection count and incoming message backlog.

The federation-based load balancing strategy is configured within a <federated-scheme> definition using a <load-balancer> element that is set to federation. For clarity, the following example explicitly specifies the strategy. However, because this strategy is the default, the <load-balancer> element can be omitted from a federated scheme definition.

<federated-scheme>
   <scheme-name>federated</scheme-name>
   <service-name>federated</service-name>
   <backing-map-scheme>
      <local-scheme />
   </backing-map-scheme>
   <autostart>true</autostart>
   <load-balancer>federation</load-balancer>
   <topologies>
      <topology>
         <name>MyTopology</name>
      </topology>
   </topologies>
</federated-scheme>
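To make the idea of balancing on existing connection count and incoming message backlog concrete, the following self-contained sketch picks the least-loaded member from a list. It is an illustration only and not the algorithm used by the default Coherence strategy: `MemberLoad` and `LeastLoadedSelector` are hypothetical names, and comparing connection count first and backlog second is an assumption, since the text does not say how the two inputs are weighed.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical snapshot of one federated service member's load. */
final class MemberLoad {
    final int memberId;
    final int connectionCount;
    final int messageBacklog;

    MemberLoad(int memberId, int connectionCount, int messageBacklog) {
        this.memberId = memberId;
        this.connectionCount = connectionCount;
        this.messageBacklog = messageBacklog;
    }
}

final class LeastLoadedSelector {
    /**
     * Returns the member with the fewest connections, using the smaller
     * backlog to break ties (assumed ordering), or empty if there are no members.
     */
    static Optional<MemberLoad> pick(List<MemberLoad> members) {
        return members.stream().min(
                Comparator.comparingInt((MemberLoad m) -> m.connectionCount)
                          .thenComparingInt(m -> m.messageBacklog));
    }
}
```

Routing each new connection to the member returned by `pick` tends to even out connection counts over time, which is the effect the federation strategy is described as providing.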

Implementing a Custom Federation-Based Load Balancing Strategy

The com.tangosol.coherence.net.federation package includes the APIs that are used to balance client load across federated service members.

A custom strategy must implement the FederatedServiceLoadBalancer interface. New strategies can be created or the default strategy (DefaultFederatedServiceLoadBalancer) can be extended and modified as required.

To enable a custom federation-based load balancing strategy, edit a federated scheme and include an <instance> subelement within the <load-balancer> element and provide the fully qualified name of a class that implements the FederatedServiceLoadBalancer interface. The following example enables a custom federation-based load balancing strategy that is implemented in the MyFederationServiceLoadBalancer class:

...
<load-balancer>
   <instance>
      <class-name>package.MyFederationServiceLoadBalancer</class-name>
   </instance>
</load-balancer>
...

In addition, the <instance> element also supports the use of a <class-factory-name> element to use a factory class that is responsible for creating FederatedServiceLoadBalancer instances, and a <method-name> element to specify the static factory method on the factory class that performs object instantiation. See instance in Developing Applications with Oracle Coherence.

Using Client-Based Load Balancing

The client-based load balancing strategy relies upon a com.tangosol.net.AddressProvider implementation to dictate the distribution of connections across federated service members. If no address provider implementation is provided, each configured cluster participant member is tried in a random order until a connection is successful. See address-provider in Developing Applications with Oracle Coherence.

The client-based load balancing strategy is configured within a <federated-scheme> definition using a <load-balancer> element that is set to client. For example:

<federated-scheme>
   <scheme-name>federated</scheme-name>
   <service-name>federated</service-name>
   <backing-map-scheme>
      <local-scheme />
   </backing-map-scheme>
   <autostart>true</autostart>
   <load-balancer>client</load-balancer>
   <topologies>
      <topology>
         <name>MyTopology</name>
      </topology>
   </topologies>
</federated-scheme>
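The fallback behavior described for client-based load balancing (each configured participant member is tried in a random order until a connection succeeds) can be sketched in plain Java. This is an illustrative model rather than Coherence code: `RandomOrderConnector` is a hypothetical name, addresses are plain strings, and the connection attempt is passed in as a predicate so the sketch runs without a network.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Optional;
import java.util.Random;
import java.util.function.Predicate;

final class RandomOrderConnector {
    /**
     * Shuffles a copy of the configured addresses and returns the first one
     * for which the connection attempt succeeds, or empty if none succeed.
     */
    static Optional<String> connectFirstAvailable(List<String> addresses,
                                                  Predicate<String> tryConnect,
                                                  Random random) {
        List<String> shuffled = new ArrayList<>(addresses); // leave the caller's list untouched
        Collections.shuffle(shuffled, random);
        for (String address : shuffled) {
            if (tryConnect.test(address)) {
                return Optional.of(address);
            }
        }
        return Optional.empty();
    }
}
```

Supplying an address provider replaces the shuffle step with whatever ordering the provider dictates, while the try-until-success loop stays the same.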

Managing Federated Caching

- Coherence VisualVM Plug-in: See Using the Coherence VisualVM Plug-In in Managing Oracle Coherence.

- Coherence Reports: See Understanding the Federation Destination Report in Managing Oracle Coherence.

- Coherence MBeans: See Destination MBean in Managing Oracle Coherence.

- Coherence Command Line Interface: See Coherence CLI.

- Coherence Grafana Dashboards: See Visualizing Metrics in Grafana in Managing Oracle Coherence.

This section includes the following topics:

Monitoring the Cluster Participant Status

Monitor the status of each cluster participant in the federation to ensure that there are no issues.

Coherence VisualVM Plug-in

Use the Federation tab in the Coherence VisualVM plug-in to view the status of each cluster participant from the context of the local cluster participant. That is, each destination cluster participant is listed and its status is shown. In addition, the federation state of each node in the local cluster participant is reported in the Outbound tab. If the status of a cluster participant is Error, check the Error Description field to view the error message.

Coherence Reports

Use the federation destination report (federation-destination.txt) to view the status of each destination cluster participant and the federation state of each node over time.

Coherence MBeans

Use the federation attributes on the DestinationMBean MBean to view the status of each destination cluster participant and the federation state of each node of the local cluster participant.
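MBean attributes can also be read programmatically through standard JMX. The sketch below is a generic helper that reads one attribute from every MBean matching a name pattern; it uses only the `javax.management` API and makes no Coherence-specific calls. `MBeanAttributeReader` is a hypothetical name. Any Coherence object-name pattern or attribute name passed to it (for example a pattern beginning with `Coherence:type=Federation` and an attribute such as `Status`) is an assumption that should be checked against the Destination MBean reference.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import javax.management.JMException;
import javax.management.MBeanServer;
import javax.management.ObjectName;

final class MBeanAttributeReader {
    /**
     * Reads the given attribute from every MBean whose name matches the pattern.
     * Returns a map of canonical MBean name to attribute value; the map is
     * empty when no MBean matches.
     */
    static Map<String, Object> read(MBeanServer server, String namePattern, String attribute) {
        Map<String, Object> values = new LinkedHashMap<>();
        try {
            for (ObjectName name : server.queryNames(new ObjectName(namePattern), null)) {
                values.put(name.getCanonicalName(), server.getAttribute(name, attribute));
            }
        } catch (JMException e) {
            // Wrap checked JMX failures (bad pattern, missing attribute, and so on).
            throw new IllegalStateException("Could not read attribute " + attribute, e);
        }
        return values;
    }
}
```

Inside a JVM that hosts the MBeans, the server argument would typically be `java.lang.management.ManagementFactory.getPlatformMBeanServer()`. Remote monitoring needs an `MBeanServerConnection` instead, which this sketch does not cover.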

Coherence Command Line Interface

Use the Coherence Command Line Interface (CLI) to monitor and manage Coherence federation clusters from a terminal-based interface. For more information on federation commands, see Federation. For more information about the CLI, see coherence-cli.

Grafana Dashboards

In Grafana, navigate to the Coherence Federation Summary Dashboard and view the Status column for each Service and Participant. If this value is either WARNING or ERROR, investigate the Coherence log files on the sending nodes to determine the reason for the status.

Monitor Federation Performance and Throughput

Monitor federation performance and throughput to ensure that the local cluster participant is federating data to each participant without any substantial delays or lost data. Issues with performance and throughput can be a sign that there is a problem with the network connection between cluster participants or that there is a problem on the local cluster participant.

Coherence VisualVM Plug-in

Use the Federation tab in the Coherence VisualVM plug-in to view the current federation performance statistics and throughput from the local participant to each destination cluster participant. Select a destination cluster participant and view its federation performance statistics, then view the Current Throughput column on the Outbound tab to see the throughput to the selected participant from each node in the local cluster. Select an individual node in the Outbound tab to see its bandwidth utilization and federation performance in the graph tabs, respectively. Lastly, select the Inbound tab to view how efficiently the local cluster participant is receiving data from destination cluster participants.

Coherence Reports

Use the federation destination report (federation-destination.txt) and the federation origin report (federation-origin.txt) to view federation performance statistics. The destination report shows how efficiently each node in the local cluster participant is sending data to each destination cluster participant. The federation origin report shows how efficiently each node in the local cluster participant is receiving data from destination cluster participants.

Coherence MBeans

Use the federation attributes on the DestinationMBean MBean and the OriginMBean MBean to view federation performance statistics. The DestinationMBean MBean attributes show how efficiently each node in the local cluster participant is sending data to each destination cluster participant. The OriginMBean MBean shows how efficiently the local cluster participant is receiving data from destination cluster participants.

Grafana Dashboards

- The destinations table shows the metrics for each sending node for that destination as well as the status of the connection. For detailed information about each status value, see Destination MBean in Managing Oracle Coherence.

- The current envelope size indicates the number of messages waiting to be sent to the destination for all nodes.

- The current RAM journal and flash journal that are in use.

- The average apply time, roundtrip delay, and backlog delay for each node.

Note:

The current envelope size will never be zero because there is at least one entry per partition. You should monitor this value. If the value is trending upwards continuously over time (along with the RAM or flash journal), there may be issues with the ability of the members to send data to the destination participant. These values could trend upwards due to a replicate-all operation but should return to normal levels after the operation completes. If they do not, further investigation of the health of the destination participants should be carried out to determine the root cause.
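A simple way to tell a sustained upward trend from a temporary spike is to fit a line through recent samples of the envelope size and look at its slope. The following sketch computes a least-squares slope over equally spaced samples. `BacklogTrend` is a hypothetical helper, and both the sampling interval and the slope threshold are assumptions that would need tuning for a real deployment.

```java
final class BacklogTrend {
    /**
     * Least-squares slope of equally spaced samples, in units per sample.
     * Returns 0 when there are fewer than two samples.
     */
    static double slope(long[] samples) {
        int n = samples.length;
        if (n < 2) {
            return 0.0;
        }
        double meanX = (n - 1) / 2.0;
        double meanY = 0.0;
        for (long sample : samples) {
            meanY += sample;
        }
        meanY /= n;

        double numerator = 0.0;
        double denominator = 0.0;
        for (int i = 0; i < n; i++) {
            numerator += (i - meanX) * (samples[i] - meanY);
            denominator += (i - meanX) * (i - meanX);
        }
        return numerator / denominator;
    }

    /** True when the fitted slope exceeds the caller-supplied threshold. */
    static boolean isTrendingUp(long[] samples, double minSlope) {
        return slope(samples) > minSlope;
    }
}
```

A window that contains a spike followed by a return to the baseline, such as the pattern expected around a replicate-all operation, yields a slope near zero, whereas steadily growing values yield a clearly positive slope.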