8 Deploying the Active Topology Automator Service

This chapter describes how to deploy and manage the ATA service.

Overview of ATA

Oracle Communications Active Topology Automator (ATA) represents the spatial relationships among your inventory entities for the inventory and network topology.


ATA provides a graphical representation of topology where you can see your inventory and its relationships at the level of detail that meets your needs.

See ATA Help for more information about the topology visualization.

Use ATA to view and analyze the network and service data in the form of topology diagrams. ATA collects this data from UIM.

You use ATA for the following:

- Viewing the networks and services, along with the corresponding resources, in the form of topological diagrams and graphical maps.

- Planning the network capacity.

- Tracking networks.

- Viewing alarm information.

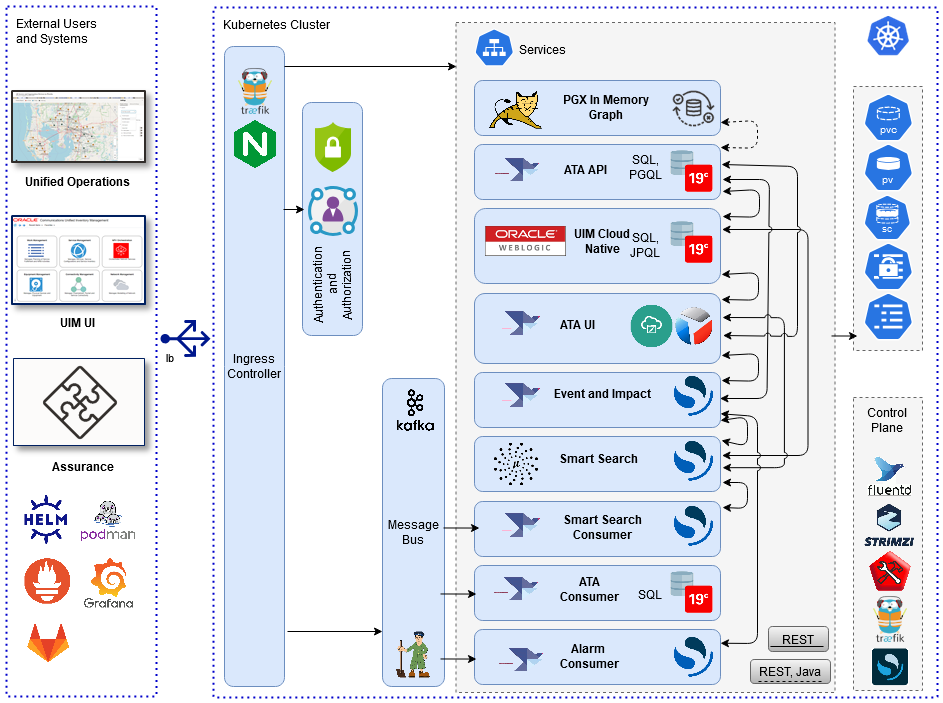

ATA Architecture

The following figure shows a high-level architecture of the ATA service.

UIM as the Producer

UIM communicates with the Topology Service using REST APIs and the Kafka Message Bus. UIM is the producer for the Create, Update, and Delete operations that impact topology. While building the messages, UIM uses REST APIs to communicate directly with the ATA Service, and it can continue processing when the Topology Service is unavailable.

ATA Consumer

The ATA consumer service is a message consumer for topology updates from UIM through the Message Bus service. The ATA consumer processes messages of the TopologyNodeCreate, TopologyNodeUpdate, TopologyNodeDelete, TopologyEdgeCreate, TopologyEdgeUpdate, TopologyEdgeDelete, TopologyProfileCreate, TopologyProfileDelete, and TopologyProfileUpdate event types. See the "ATA Events and Definitions" chapter in Active Topology and Automator Asynchronous Events Guide.

Alarm Consumer

The ATA alarm consumer service consumes TMF642 messages wrapped in TMF688 messages from the Assurance systems. The alarm consumer supports processing of multiple messages of the event types AlarmCreateEvent, AlarmAttributeValueChangeEvent, ClearAlarmCreateEvent, and AlarmDeleteEvent. Incoming alarm messages from the Assurance system can be of two types: normal alarm (fault) and threshold crossing (performance) alarm. The alarm consumer extracts the alarmed object identifier from the input message, then creates the event (alarm) and associates it with the inventory resource.

SmartSearch API

The SmartSearch API enables you to search, filter, autocomplete, and aggregate entries in the OpenSearch database. It also provides a bulk API to batch process inserts, updates, and deletes of entries.

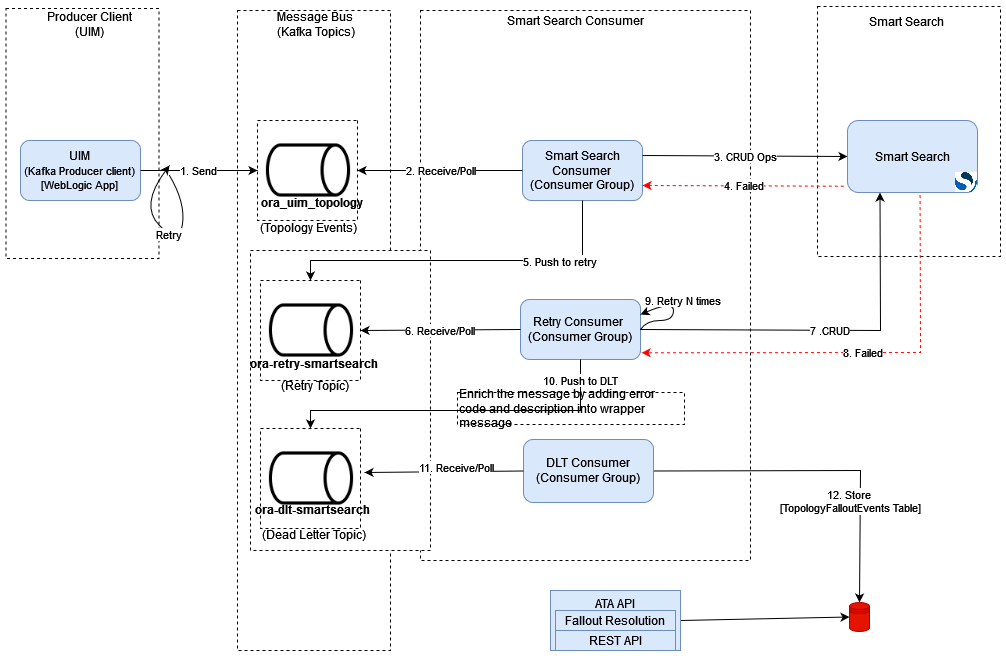

SmartSearch Consumer

SmartSearch is a consumer for UIM. It processes multiple message events such as TopologyNodeCreate, TopologyNodeUpdate, TopologyNodeDelete, TopologyEdgeCreate, TopologyEdgeUpdate, TopologyEdgeDelete and so on.

OpenSearch

OpenSearch is a NoSQL database. It is an open-source search and analytics suite that makes it easy to ingest, search, visualize, and analyze data.

Topology Graph Database

The ATA Service communicates with the Oracle Database using the Oracle Property Graph feature with PGQL and standard SQL. It can communicate directly with the database or with the In-Memory Graph for high-performance operations. This converged database feature of Oracle Database makes it possible to use the optimal processing method with a single database. The Graph Database is an isolated Pluggable Database (PDB), separate from the UIM database, but it runs on the same 19c version for simplified licensing.

Prerequisites and Configuration for Creating ATA Images

You must install the prerequisite software and tools for creating ATA images.

Prerequisites for Creating ATA Images

You require the following prerequisites for creating ATA images:

- Podman on the build machine if the Linux version is 8 or later.

- Docker on the build machine if the Linux version is earlier than 8.

- ATA Builder Toolkit.

- Maven, with the PATH variable updated to include the Maven home. Set the PATH variable:

    export PATH=$PATH:$MAVEN_HOME/bin

- Java, installed with JAVA_HOME set in the environment. Set the PATH variable:

    export PATH=$PATH:$JAVA_HOME/bin

- Bash, to enable the `<tab>` command complete feature.

See "UIM Software Compatibility" in UIM Compatibility Matrix for details about the required and supported versions of these prerequisite software.

Configuring ATA Images

The dependency manifest file describes the input that goes into the ATA images. It is consumed by the image build process. The default configuration in the latest manifest file provides the necessary components for creating the ATA images easily. See "About the Manifest File" for more information.

Creating ATA Images

To create the ATA images:

Note:

See "UIM Software Compatibility" in UIM Compatibility Matrix for the latest versions of software.

- Go to WORKSPACEDIR.

- Download the graph server war file from Oracle E-Delivery (https://www.oracle.com/database/technologies/spatialandgraph/property-graph-features/graph-server-and-client/graph-server-and-client-downloads.html → Oracle Graph Server <version> → Oracle Graph Webapps <version> for (Linux x86-64)) and copy the graph server war file to the directory $WORKSPACEDIR/ata-builder/staging/downloads/graph. Ensure that only one copy of PGX.war exists in the …/downloads/graph path.

Note:

The log level is set to debug by default in the graph server war file. If required, update the log level to error/info in graph-server-webapp-23.3.0.war/WEB-INF/classes/logback.xml before building images.

- Download tomcat-<tomcat_version>.tar.gz and copy it to $WORKSPACEDIR/ata-builder/staging/downloads/tomcat.

- Download jdk-<jdk_version>_linux-x64_bin.tar.gz and copy it to $WORKSPACEDIR/ata-builder/staging/downloads/java.

Note:

For Tomcat and JDK versions, see Unified Inventory and Topology Microservices.

- Export the proxies in environment variables, filling in the details of your proxy settings:

    # eth0 is a sample. Replace "eth0" with your specific interface name.
    export ip_addr=`ip -f inet addr show eth0|egrep inet|awk '{print $2}'|awk -F/ '{print $1}'`
    export http_proxy=
    export https_proxy=$http_proxy
    export no_proxy=localhost,$ip_addr
    export HTTP_PROXY=
    export HTTPS_PROXY=$HTTP_PROXY
    export NO_PROXY=localhost,$ip_addr

- Update $WORKSPACEDIR/ata-builder/bin/gradle.properties with the required proxies.

    systemProp.http.proxyHost=
    systemProp.http.proxyPort=
    systemProp.https.proxyHost=
    systemProp.https.proxyPort=
    systemProp.http.nonProxyHosts=localhost|127.0.0.1
    systemProp.https.nonProxyHosts=localhost|127.0.0.1

- Uncomment the proxy block in $WORKSPACEDIR/ata-builder/bin/m2/settings.xml and provide the required proxies.

    <proxies>
      <proxy>
        <id>oracle-http-proxy</id>
        <host>xxxxx</host>
        <protocol>http</protocol>
        <nonProxyHosts>localhost|127.0.0.1|xxxxx</nonProxyHosts>
        <port>xxxxx</port>
        <active>true</active>
      </proxy>
    </proxies>

- Copy the UI custom icons to the $WORKSPACEDIR/ata-builder/staging/downloads/ata-ui/images directory if you have any customizations for the service topology icons. For making customizations, see "Customizing the Images".

- Update the image tag in $WORKSPACEDIR/ata-builder/bin/ata_manifest.yaml.

- Run the build-all-images script to create the ATA images:

    $WORKSPACEDIR/ata-builder/bin/build-all-images.sh

Note:

You can include the above procedure in your CI pipeline as long as the required components are already downloaded to the staging area.
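For a CI pipeline, it can help to confirm that the staging area is complete before the build script runs. The following is a minimal sketch under stated assumptions: check_staging is a hypothetical helper (not part of the ATA builder toolkit), and it checks only the three download directories named in the procedure above.

```shell
# Sketch only: check_staging is a hypothetical helper, not part of the toolkit.
# $1 is the workspace directory (WORKSPACEDIR in the procedure above).
check_staging() {
  downloads="$1/ata-builder/staging/downloads"

  # Exactly one graph server war file must be present.
  war_count=$(find "$downloads/graph" -maxdepth 1 -name '*.war' 2>/dev/null | wc -l | tr -d ' ')
  if [ "$war_count" -ne 1 ]; then
    echo "Expected exactly one war file in $downloads/graph, found $war_count" >&2
    return 1
  fi

  # The Tomcat and JDK archives must be present.
  ls "$downloads"/tomcat/tomcat-*.tar.gz >/dev/null 2>&1 || { echo "Tomcat archive missing" >&2; return 1; }
  ls "$downloads"/java/jdk-*_linux-x64_bin.tar.gz >/dev/null 2>&1 || { echo "JDK archive missing" >&2; return 1; }

  echo "Staging area looks complete."
}
```

A pipeline step could call check_staging "$WORKSPACEDIR" and run build-all-images.sh only when it succeeds.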

Post-build Image Management

The ATA image builder creates images with names and tags based on the settings in the manifest file. By default, this results in the following images:

- uim-7.7.0.0.0-ata-base-1.2.0.0.0:latest

- uim-7.7.0.0.0-ata-api-1.2.0.0.0:latest

- uim-7.7.0.0.0-ata-pgx-1.2.0.0.0:latest

- uim-7.7.0.0.0-ata-ui-1.2.0.0.0:latest

- uim-7.7.0.0.0-ata-dbinstaller-1.2.0.0.0:latest

- uim-7.7.0.0.0-ata-consumer-1.2.0.0.0:latest

- uim-7.7.0.0.0-alarm-consumer-1.2.0.0.0:latest

- uim-7.7.0.0.0-smartsearch-consumer-1.2.0.0.0:latest

- uim-7.7.0.0.0-impact-analysis-api-1.2.0.0.0:latest

Customizing the Images

Service topology can be customized using a JSON configuration file. See "Customizing Service Topology in ATA from UIM" in UIM System Administrator's Guide for more information. As a part of customization, if custom icons are to be used to represent nodes in service topology, they must be placed in the $WORKSPACEDIR/ata-builder/staging/downloads/ata-ui/images/ folder and ata-ui image must be rebuilt.

Localizing Specification Name in ATA

The specification names in the ATA application can be localized. To achieve this, export the specification bundle from UIM and build the image with customization.

The prerequisite for localizing specification names in ATA is that the specification display names are provided in Service Catalog and Design - Design Studio and deployed to UIM.

To localize the specification name:

- Export the UIM App Bundle:

  - In the UIM UI, navigate to Execute Rule under the Administration section in the left pane.

  - Select EXPORT_SPECIFICATION_DISPLAY_NAMES_AS_JSON from the dropdown, ignore the file upload option, and click Process.

    Note:

    The ora_uim_baserulesets must be deployed before performing this step.

  - Download uimAppBundle.tar.gz.

- Place uimAppBundle.tar.gz in the $BUILDER_HOME/staging/downloads/ata-ui/uimAppBundle directory for image building.

- Customize and build the image.

Creating an ATA Instance

This section describes how to create an ATA instance in your cloud native environment using the operational scripts and the configuration provided in the common cloud native toolkit.

Before you can create an ATA instance, you must validate your cloud native environment. See "Planning UIM Installation" for details on prerequisites.

In this section, while creating a basic instance, the project name is assumed to be sr and the instance name is assumed to be quick.

Note:

Project and Instance names cannot contain any special characters.

Installing ATA Cloud Native Artifacts and Toolkit

Build container images for the following using the ATA cloud native Image Builder:

- ATA Core application

- ATA PGX application

- ATA Consumer application

- Alarm Consumer application

- ATA User Interface application

- ATA database installer

- SmartSearch Consumer application

See "Deployment Toolkits" to download the Common cloud native toolkit archive file. Set the variable for the installation directory by running the following command, where $WORKSPACEDIR is the installation directory of the COMMON cloud native toolkit:

    export COMMON_CNTK=$WORKSPACEDIR/common-cntk

Setting up Environment Variables

ATA relies on access to certain environment variables to run seamlessly. Ensure the following variables are set in your environment:

- Path to your common cloud native toolkit

- Traefik namespace

- Path to your specification files

To set the environment variables:

- Set the COMMON_CNTK variable to the path of the directory where the common cloud native toolkit is extracted, as follows:

    $ export COMMON_CNTK=$WORKSPACEDIR/common-cntk

- Set the TRAEFIK_NS variable for the Traefik namespace as follows:

    $ export TRAEFIK_NS=<Traefik Namespace>

- Set the TRAEFIK_CHART_VERSION variable for the Traefik Helm chart version. See UIM Compatibility Matrix for the appropriate version. The following is a sample for Traefik chart version 15.1.0:

    $ export TRAEFIK_CHART_VERSION=15.1.0

- Set the SPEC_PATH variable to the location where the application and database YAML files are copied. See "Assembling the Specifications" to copy the specification files if they are not already copied.

    $ export SPEC_PATH=$WORKSPACEDIR/ata_spec_dir
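Before running the toolkit scripts, you may want to confirm that these variables are actually set in the current shell. The sketch below is illustrative only: check_ata_env is a hypothetical helper, not something the toolkit provides, and it treats all four variables as required (drop the Traefik ones if you do not use Traefik).

```shell
# Sketch only: check_ata_env is a hypothetical helper, not part of the toolkit.
check_ata_env() {
  missing=""
  for name in COMMON_CNTK TRAEFIK_NS TRAEFIK_CHART_VERSION SPEC_PATH; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "Missing environment variables:$missing" >&2
    return 1
  fi
  echo "All ATA environment variables are set."
}
```

Calling check_ata_env at the top of a wrapper script stops the run early instead of letting a later toolkit script fail on an empty path.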

Registering the Namespace

After you set the environment variables, register the namespace.

To register the namespace, run the following command:

$COMMON_CNTK/scripts/register-namespace.sh -p sr -t targets

# For example, $COMMON_CNTK/scripts/register-namespace.sh -p sr -t traefik

# Where the targets are separated by a comma without extra spaces

Note:

traefik is the name of the target for registration of the namespace sr. The script uses TRAEFIK_NS to find these targets.

Do not provide the Traefik target if you are not using Traefik.

For Generic Ingress Controller, you do not have to register the namespace. To select the ingress controller, provide the ingressClassName value under the ingress.className field in the applications.yaml file. For more information about ingressClassName, see https://kubernetes.io/docs/concepts/services-networking/ingress/

Creating Secrets

You must store sensitive data and credential information in the form of Kubernetes Secrets that the scripts and Helm charts in the toolkit consume. Managing secrets is out of the scope of the toolkit and must be implemented while adhering to your organization's corporate policies. Additionally, ATA service does not establish password policies.

Note:

The passwords and other input data that you provide must adhere to the policies specified by the appropriate component.

As a prerequisite to using the toolkit for either installing the ATA database or creating an ATA instance, you must create secrets to access the following:

- ATA Database

- UIM Instance Credentials

- Common oauthConfig Secret for authentication

The toolkit provides sample scripts to perform this. Use these scripts for manual and faster creation of an instance. They do not support any automated process for creating instances. The scripts also illustrate both the naming of the secret and the layout of the data within the secret that ATA requires. You must create the secrets before running the install-database.sh or create-applications.sh scripts.

Creating Secrets for ATA Database Credentials

The database secret specifies the connectivity details and the credentials for connecting to the ATA PDB (ATA schema). This is consumed by the ATA DB installer and ATA runtime.

- Run the following script to create the required secrets:

    $COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata create database

- Enter the corresponding values as prompted:

  - TOPOLOGY DB Admin(sys) Username: Provide Topology Database admin username

  - TOPOLOGY DB Admin(sys) Password: Provide Topology Database admin password

  - TOPOLOGY Schema Username: Provide username for ATA schema to be created

  - TOPOLOGY Schema Password: Provide ATA schema password

  - TOPOLOGY DB Host: Provide ATA Database Hostname

  - TOPOLOGY DB Port: Provide ATA Database Port

  - TOPOLOGY DB Service Name: Provide ATA Service Name

  - PGX Client Username: Provide username for PGX Client User to be created

  - PGX Client Password: Provide PGX Client Password

- Verify that the following secret is created:

    sr-quick-ata-db-credentials

Creating Secrets for UIM Credentials

The UIM secret specifies the credentials for connecting to the UIM application. This is consumed by ATA runtime.

- Run the following script to create the UIM secret:

    $COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata create uim

- Enter the credentials and the corresponding values as prompted. The credentials should be as shown in the following example:

    Provide UIM credentials ...(Format should be http://<host>:<port>)
    UIM URL: Provide UIM Application URL, sample https://quick.sr.uim.org:30443
    UIM Username: Provide UIM username
    UIM Password: Provide UIM password
    Is provided UIM a Cloud Native Environment ? (Select number from menu)
    1) Yes
    2) No
    #? 1
    Provide UIM Cluster Service name (Format <uim-project>-<uim-instance>-cluster-uimcluster.<project>.svc.cluster.local)
    UIM Cluster Service name: sr-quick-cluster-uimcluster.sr.svc.cluster.local #Provide UIM Cluster Service name.

Note:

- If the OAM IdP is used for authentication, provide the UIM front-end hostname URL in the format: https://<instance>.<project>.ohs.<oam-host-suffix>:<loadbalancerport>.

- Provide the default UIM URL if the SAML protocol is configured for authentication with any IdP (such as IDCS, Keycloak, and so on). For example: https://<instance>.<project>.<hostSuffix>:<loadbalancerport>.

- Verify that the following secret is created:

    sr-quick-ata-uim-credentials
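The two secret names shown in this section appear to follow a project-instance-ata-kind-credentials pattern. The sketch below builds those names and prints a kubectl command that could confirm a secret exists. It rests on assumptions: ata_secret_name and print_secret_check are hypothetical helpers, the naming pattern is inferred from the sr and quick examples only, and the namespace is assumed to equal the project name.

```shell
# Sketch only: both functions are hypothetical helpers, not part of the toolkit.
# The naming pattern is inferred from the two secret names shown in this section.
ata_secret_name() {
  project="$1"; instance="$2"; kind="$3"   # kind: db or uim
  echo "${project}-${instance}-ata-${kind}-credentials"
}

# Print (do not run) the command that would confirm the secret exists.
# Assumes the Kubernetes namespace has the same name as the project.
print_secret_check() {
  echo "kubectl get secret $(ata_secret_name "$1" "$2" "$3") -n $1"
}
```

For example, print_secret_check sr quick uim prints a command that looks up sr-quick-ata-uim-credentials in the sr namespace.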

Creating Secrets for Authentication Server Details

The OAuth secret specifies details of the authentication server. It is used by ATA to connect to Message Bus. See "Adding Common OAuth Secret and ConfigMap" for more information.

If authentication is enabled on ATA, ensure that you create an oauthConfig secret with the appropriate OIDC details of your identity provider. To create an oauthConfig secret, see "Adding Common OAuth Secret and ConfigMap".

Creating Secrets for SSL enabled on traditional UIM truststore

The inventorySSL secret stores the truststore file of the SSL-enabled traditional UIM. It is required only if authentication is not enabled on topology and you want to integrate topology with a traditional UIM instance.

- Create a truststore file using the UIM certificates and enable SSL on UIM. See "ATA Inventory Management System Administration Overview" in UIM System Administrator's Guide for more information.

- Once you have the certificate of the traditional UIM, run the following command to create the truststore:

    keytool -importcert -v -alias uimonprem -file ./cert.pem -keystore ./uimtruststore.jks -storepass *******

- After creating uimtruststore.jks, run the following command to create the inventorySSL secret, passing the truststore created above:

    $COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata create inventorySSL

  The system prompts for the truststore file location and the passphrase for the truststore. Provide appropriate values.

Installing ATA Service Schema

To install the ATA schema:

- Update the values under ata-dbinstaller in the $SPEC_PATH/sr/quick/database.yaml file with the values required for ATA schema creation.

Note:

- The YAML formatting is case-sensitive. Use a YAML editor to ensure that you do not make any syntax errors while editing. Follow the indentation guidelines for YAML.

- Before changing the default values provided in the specification file, verify that they align with the values used during PDB creation. For example, the default tablespace name should match the value used when PDB is created.

- Edit the database.yaml file and update the DB installer image to point to the location of your image as follows:

    ata-dbinstaller:
      dbinstaller:
        image: DB_installer_image_in_your_repo
        tag: DB_installer image tag in your repo

- If your environment requires a password to download the container images from your repository, create a Kubernetes secret with the Docker pull credentials. See "Kubernetes documentation" for details. Reference the secret name in database.yaml. Provide the image pull secret and image pull policy details.

    ata-dbinstaller:
      imagePullPolicy: Never
      # The image pull access credentials for the "docker login" into Docker repository, as a Kubernetes secret.
      # Uncomment and set if required.
      # imagePullSecret: ""

- Run the following script to start the ATA DB installer, which instantiates a Kubernetes pod resource. The pod resource lives until the DB installation operation completes.

    $COMMON_CNTK/scripts/install-database.sh -p sr -i quick -f $SPEC_PATH/sr/quick/database.yaml -a ata -c 1

- You can run the script with -h to see the available options.

- Check the console to see if the DB installer is installed successfully.

- If the installation has failed, run the following command to review the error message in the log:

    kubectl logs -n sr sr-quick-ata-dbinstaller

- Clear the failed pod by running the following command:

    helm uninstall sr-quick-ata-dbinstaller -n sr

- Run the install-database script again to install the ATA DB installer.

Configuring the applications.yaml File

The applications.yaml file is a Helm override values file that overrides the default values of the ATA chart. Update the values under the ata chart in $SPEC_PATH/<PROJECT>/<INSTANCE>/applications.yaml to override the default values.

The applications.yaml file provides a section for values that are common to all microservices. Values that you provide under the common section apply to all services.

Note:

Common values for the microservices are specified in applications.yaml and database.yaml. To override a common value for one microservice, specify the value under the chart name of that microservice. If the value under the chart is empty, the common value is used.
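The precedence rule in the note can be sketched in a few lines of shell. This is an illustration of the rule as described, not the Helm chart's actual template logic; effective_value is a hypothetical helper.

```shell
# Sketch only: effective_value is a hypothetical helper that mirrors the
# precedence rule described in the note, not the chart's real implementation.
# $1: value set under the microservice chart (may be empty)
# $2: value set in the common section
effective_value() {
  chart_value="$1"; common_value="$2"
  if [ -n "$chart_value" ]; then
    echo "$chart_value"    # chart-level value overrides the common value
  else
    echo "$common_value"   # empty chart value falls back to the common value
  fi
}
```

For instance, effective_value "" Never yields Never, while effective_value Always Never yields Always.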

To configure the project specification:

- Edit applications.yaml to provide the image in your repository (name and tag) by running the following command:

    vi $SPEC_PATH/$PROJECT/$INSTANCE/applications.yaml
    # edit the image names, to reflect the ATA image names and location in your docker repository
    # edit the image tags to reflect the ATA image names and location in your docker repository
    ata:
      name: "ata"
      image:
        topologyApiName: uim-7.7.0.0.0-ata-api-1.2.0.0.0
        pgxName: uim-7.7.0.0.0-ata-pgx-1.2.0.0.0
        uiName: uim-7.7.0.0.0-ata-ui-1.2.0.0.0
        topologyConsumerName: uim-7.7.0.0.0-ata-consumer-1.2.0.0.0
        smartsearchConsumerName: uim-7.7.0.0.0-smartsearch-consumer-1.2.0.0.0
        alarmConsumerName: uim-7.7.0.0.0-alarm-consumer-1.2.0.0.0
        impactAnalysisApiName: uim-7.7.0.0.0-impact-analysis-api-1.2.0.0.0
        topologyApiTag: latest
        pgxTag: latest
        uiTag: latest
        topologyConsumerTag: latest
        smartsearchConsumerTag: latest
        alarmConsumerTag: latest
        impactAnalysisApiTag: latest
        repository:
        repositoryPath:

- If your environment requires a password to download the container images from your repository, create a Kubernetes secret with the Docker pull credentials. See the "Kubernetes documentation" for details. Reference the secret name in applications.yaml.

    # The image pull access credentials for the "docker login" into Docker repository, as a Kubernetes secret.
    # uncomment and set if required.
    ata:
    #  imagePullSecret:
    #    imagePullSecrets:
    #      - name: regcred

- Set the pull policy for the ATA images in applications.yaml. Set pullPolicy to Always if the image is updated.

    ata:
      image:
        pullPolicy: Never

- Update loadbalancerport in applications.yaml. If no external load balancer is configured for the instance, change the value of loadbalancerport to the ingress controller NodePort. If SSL is enabled on ATA, provide the SSL NodePort; if SSL is disabled, provide the non-SSL NodePort.

  If you use Oracle Cloud Infrastructure LBaaS or any other external load balancer, set loadbalancerport to 443 if TLS is enabled, otherwise set loadbalancerport to 80, and update the value for loadbalancerhost appropriately.

    #provide loadbalancer port
    loadbalancerport: 30305

- To enable authentication, set the authentication.enabled flag to true. If OAM is used for authentication, provide the load balancer IP address and the OHS hostname under hostAliases. The hostAliases are added to the /etc/hosts file within the pods. If you use any other IdP that is not registered in a public DNS server, you can provide its hostAliases in the same way.

    # The enabled flag is to enable or disable authentication
    authentication:
      enabled: true
    hostAliases:
    - ip: <ip-address>
      hostnames:
      - <oam-instance>.<oam-project>.ohs.<hostSuffix> (ex quick.sr.ohs.uim.org)

- If authentication is not enabled on ATA and you want to integrate ATA with a traditional SSL-enabled UIM, you must create the inventorySSL secret and enable the inventorySSL flag in applications.yaml as shown below:

    # make it true if using on prem inventory with ssl port enabled and authentication is not enabled on topology
    # always false for Cloud Native inventory
    # not required in production environment
    isInventorySSL: true
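The loadbalancerport rules in the procedure above reduce to a small decision table, sketched below. choose_lb_port is a hypothetical helper, and the NodePort numbers in the usage note are sample values only.

```shell
# Sketch only: choose_lb_port is a hypothetical helper, not part of the toolkit.
# $1: "external" if an external load balancer (for example OCI LBaaS) is used,
#     anything else if none is configured
# $2: "true" if SSL/TLS is enabled on ATA
# $3: SSL NodePort of the ingress controller
# $4: non-SSL NodePort of the ingress controller
choose_lb_port() {
  if [ "$1" = "external" ]; then
    # External load balancer: standard HTTPS or HTTP port.
    if [ "$2" = "true" ]; then echo 443; else echo 80; fi
  else
    # No external load balancer: use the ingress controller NodePort.
    if [ "$2" = "true" ]; then echo "$3"; else echo "$4"; fi
  fi
}
```

With sample NodePorts 30443 (SSL) and 30305 (non-SSL), choose_lb_port none false 30443 30305 returns 30305, and choose_lb_port external true 30443 30305 returns 443.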

Configuring ATA

The sample configuration files topology-static-config.yaml.sample and topology-dynamic-config.yaml.sample for the ATA API service are provided under $COMMON_CNTK/charts/ata-app/charts/ata/config/ata-api.

To override configuration properties, copy the sample static property file to topology-static-config.yaml and sample dynamic property file to topology-dynamic-config.yaml. Provide key value to override the default value provided out-of-the-box for any specific system configuration property. The properties defined in property files are fed into the container using Kubernetes configuration maps. Any changes to these properties require the instance to be upgraded. Pods are restarted after configuration changes to topology-static-config.yaml.

Max Rows

Modify the following setting to limit the number of records returned in LIMIT queries:

    topology:
      query:
        maxrows: 5000

Date Format

Any modifications to the date format used by all dates must be consistently applied to all consumers of the APIs.

    topology:
      api:
        dateformat: yyyy-MM-dd'T'HH:mm:ss.SSS'Z'

Alarm Types

The out of the box alarm types utilize industry standard values. If you want to display a different value, modify the value accordingly:

For example: To modify the COMMUNICATIONS_ALARM change the value to COMMUNICATIONS_ALARM: Communications

    alarm-types:
      COMMUNICATIONS_ALARM: COMMUNICATIONS_ALARM
      PROCESSING_ERROR_ALARM: PROCESSING_ERROR_ALARM
      ENVIRONMENTAL_ALARM: ENVIRONMENTAL_ALARM
      QUALITY_OF_SERVICE_ALARM: QUALITY_OF_SERVICE_ALARM
      EQUIPMENT_ALARM: EQUIPMENT_ALARM
      INTEGRITY_VIOLATION: INTEGRITY_VIOLATION
      OPERATIONAL_VIOLATION: OPERATIONAL_VIOLATION
      PHYSICAL_VIOLATION: PHYSICAL_VIOLATION
      SECURITY_SERVICE: SECURITY_SERVICE
      MECHANISM_VIOLATION: MECHANISM_VIOLATION
      TIME_DOMAIN_VIOLATION: TIME_DOMAIN_VIOLATION

Event Status

ATA supports 3 types of events: 'Raised' for new events, 'Updated' for existing events with updated information and 'Cleared' for events that have been Closed.

To modify the 'CLEARED' event change the value to CLEARED: closed

    event-status:
      CLEARED: CLEARED
      RAISED: RAISED
      UPDATED: UPDATED

Event Severity

ATA supports various types of event severity on a device. The severity from most severe to least severe is CRITICAL(1), MAJOR(5), WARNING(10), INDETERMINATE(15), MINOR(20), CLEARED(25), and None(999).

Internally, a numeric value is used to identify the severity hierarchy. The top three most severe events are tracked in ATA.

To modify the 'INDETERMINATE' severity, change the value to INDETERMINATE: moderate

    severity:
      CLEARED: CLEARED
      INDETERMINATE: INDETERMINATE
      CRITICAL: CRITICAL
      MAJOR: MAJOR
      MINOR: MINOR
      WARNING: WARNING
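The numeric severity hierarchy can be illustrated with a short sketch that ranks a list of severities and keeps the three most severe. This only illustrates the ordering described above; it is not how ATA implements tracking internally. The function names are hypothetical, and the labels follow the keys of the severity block.

```shell
# Sketch only: both functions are hypothetical and illustrate the ordering,
# not ATA's internal implementation.

# Map a severity label (key names as in the severity block) to its numeric value.
severity_rank() {
  case "$1" in
    CRITICAL) echo 1 ;;
    MAJOR) echo 5 ;;
    WARNING) echo 10 ;;
    INDETERMINATE) echo 15 ;;
    MINOR) echo 20 ;;
    CLEARED) echo 25 ;;
    *) echo 999 ;;   # None or unknown
  esac
}

# Print the three most severe labels from the arguments, most severe first.
top_three_severities() {
  for label in "$@"; do
    echo "$(severity_rank "$label") $label"
  done | sort -n | head -n 3 | cut -d' ' -f2 | tr '\n' ' ' | sed 's/ $//'
}
```

Lower numbers sort first, so a device with MINOR, CRITICAL, CLEARED, WARNING, and MAJOR events would have CRITICAL, MAJOR, and WARNING as its top three.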

Path Analysis Cost Values

ATA supports 3 different types of numeric cost values for each edge/connectivity maintained in topology. The cost type label is configured based on your business requirements and data available.

You select the cost parameter to evaluate while using path analysis. The cost values are maintained externally using the REST APIs.

To modify 'costValue3' from Distance to Packet Loss change the value to costValue3: PacketLoss after updating the data values.

    pathAnalysis:
      costType:
        costValue1: Jitter
        costValue2: Latency
        costValue3: Distance

Path Analysis Alarms

Alarms can be used by path analysis to exclude devices in the returned paths. The default setting is to exclude devices with any alarm.

To allow Minor and Greater alarms modify the setting to:

excludeAlarmTypes: Critical and Greater, Major and Greater

All Paths Limit

To improve the response time, modify the max number of paths returned when using 'All' Paths.

Configuring Topology Consumer

The sample configuration files topology-static-config.yaml.sample and topology-dynamic-config.yaml.sample are provided under $COMMON_CNTK/charts/ata-app/charts/ata/config/ata-api.

To override configuration properties, copy the sample static property file to topology-static-config.yaml and the sample dynamic property file to topology-dynamic-config.yaml. Provide a key value to override the default value provided out-of-the-box for any specific system configuration property. The properties defined in the property files are provided to the container using Kubernetes configuration maps. Any changes to these properties require the instance to be upgraded. Pods are restarted after configuration changes to topology-static-config.yaml.

Reduce the Poll size for Retry and dlt Topic

Uncomment or add the configuration values in topology-config.yaml and upgrade the Topology Consumer service.

Maximum Poll Interval and Records

Edit max.poll.interval.ms to increase or decrease the delay between invocations of poll() when using consumer group management and max.poll.records to increase or decrease the maximum number of records returned in a single call to poll().

    mp.messaging:
      incoming:
        toInventoryChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 500
        toFaultChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 500
        toRetryChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 200
        toDltChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 100

Partition assignment strategy

The PartitionAssignor is the class that decides which partitions are assigned to which consumer. While creating a new Kafka consumer, you can configure the strategy used to assign the partitions among the consumers. You set it using the partition.assignment.strategy configuration. A partition rebalance (moving partition ownership from one consumer to another) happens in the following cases:

- Addition of new Consumer to the Consumer group.

- Removal of Consumer from the Consumer group.

- Addition of New partition to the existing topic.

To change the partition assignment strategy, update the topology-config.yaml for topology consumer and redeploy the POD. The below example configuration shows the CooperativeStickyAssignor strategy. For list of supported partition assignment strategies, see partition.assignment.strategy in Apache Kafka documentation.

    mp.messaging:
      connector:
        helidon-kafka:
          partition.assignment.strategy: org.apache.kafka.clients.consumer.CooperativeStickyAssignor

Configuring Alarm Consumer

Sample configuration files alarm-consumer-static-config.yaml.sample and alarm-consumer-dynamic-config.yaml.sample are provided under $COMMON_CNTK/charts/ata-app/charts/ata/config/alarm-consumer.

To override configuration properties, copy the sample static property file to alarm-consumer-static-config.yaml and sample dynamic property file to alarm-consumer-dynamic-config.yaml. Provide key value to override the default value provided out-of-the-box for any specific system configuration property. The properties defined in property files are provided to the container using Kubernetes configuration maps. Any changes to these properties require the instance to be upgraded. Pods are restarted after configuration changes to alarm-consumer-static-config.yaml.

Configuring Incoming Channel

To tune performance, uncomment or add the following in the alarm-consumer-static-config.yaml file to override the default configuration:

- Edit max.poll.interval.ms to increase or decrease the delay between invocations of poll() while using the consumer group management.

- Edit max.poll.records to increase or decrease the maximum number of records returned in a single call to poll().

    mp.messaging:
      incoming:
        toFaultChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 500
        toRetryChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 150
        toDltChannel:
          # max.poll.interval.ms: 300000
          # max.poll.records: 100

Impact Analysis API

The impact analysis API is as follows:

    impactAnalysis:
      url: http://localhost:8084

Customizing Device Mapping

The ATA alarm consumer processes alarms that are based on the TMF-642 specification. The TMF-642 alarm specification has the following section, which carries details about the network entity on which the alarm is raised or the problem has occurred.

    "alarmedObject": {
      "@referredType": "OT_AID",
      "@type": "AlarmedObjectRef",
      "id": "LSN/EMS_XDM_33/9489:73:12295",
      "name": ""
    }

In the alarmedObject JSON data, the id field carries the details of the network entity. The ATA alarm consumer treats alarmedObject.id as device information for the topology managed by ATA. By default, the alarm consumer expects alarmedObject.id to be ":"-delimited data in which the first part is the device name. The alarm consumer therefore extracts the first part of the ":"-delimited data and looks up that device name in the database.
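The default extraction described above can be sketched with shell parameter expansion. device_lookup_key is a hypothetical helper that mirrors the described behavior; it is not the alarm consumer's code.

```shell
# Sketch only: device_lookup_key is a hypothetical helper that mirrors the
# default behaviour described above, not the alarm consumer's implementation.
device_lookup_key() {
  # Strip everything from the first ":" onward.
  # An id without ":" is returned unchanged.
  echo "${1%%:*}"
}
```

For the sample id LSN/EMS_XDM_33/9489:73:12295, the function returns LSN/EMS_XDM_33/9489, which is the value that would be matched against the device name.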

This mapping of alarmedObject.id to a device name in the database is the default in the ATA alarm consumer. The default can be extended. The following section explains the customization of the device mapping.

deviceMapping:

inventory:

lookupFields: # ordered ascending, only four values supported [name,id,deviceIdentifier,ipv4,ipv6]

- name #default

- id

- deviceIdentifier

- ipv4

- ipv6

customizeDeviceLookup:

enabled: true

The above YAML configuration is used to change the device mapping.

Table 8-1 Device Mapping Fields

| Field | Description |
|---|---|
| deviceMapping.lookupFields | An array field that accepts only the values name, id, deviceIdentifier, ipv4, and ipv6. These are the names of the fields in the persisted data that are used to search for the device mentioned in the alarmedObject.id field. The name field is the default, which means that the first ':'-delimited part of the alarmedObject.id field is searched against name by default. The order of the array is followed. For example, if the array is changed to id, deviceIdentifier, ipv4, ipv6, name, the alarm consumer takes the first part of the alarmedObject.id field and searches the database by id first, because id is the first element of the array. Only the first three entries of the array are considered for searching. For the order name, id, deviceIdentifier, ipv4, ipv6, the search uses name first, then id if no device is found, and then deviceIdentifier. Once a device is found, no further matching is performed. If no device is found or multiple devices are found, see "Fallout Events Resolution" for more information. |
| deviceMapping.customizeDeviceLookup.enabled | A Boolean value. If it is true, the alarm consumer lets you provide a Groovy script that returns a single value by processing either alarmedObject or alarm (TMF-642). The value returned by the Groovy script is used to find a match in the database. |

The sample Groovy script is as follows:

import groovy.json.JsonSlurper

def getDeviceIds(alarmedObject, alarm) {
    def jsonSlurper = new JsonSlurper()
    def alo = jsonSlurper.parseText(alarmedObject)
    def aloId = alo.id
    def device = aloId.split(":")[0].trim()
    /*
     * The return type must be type of String.
     * The string value will be used to search in database which expected to be matched with a device/ subdevice.
     */
    return device
}

This Groovy script must meet the following criteria:

- The Groovy script is configured using the config-map provided in the deployment.

- The Groovy script is named DeviceMapping.groovy.

Note: You cannot change the name.

- The Groovy script has a method named getDeviceIds.

- The getDeviceIds method has two arguments of type String.

- The getDeviceIds method returns a single value of the device name that is to be searched in the database.
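The lookup order described in Table 8-1 can be sketched as follows. This is an illustration under stated assumptions, not the ATA implementation: devices are modeled as plain dictionaries, the exception name is hypothetical, and the sketch assumes that multiple matches on a field stop the search and are treated as a fallout, which the table does not spell out.

```python
from typing import Dict, List

MAX_LOOKUP_FIELDS = 3  # Table 8-1: only the first three array entries are searched


class DeviceLookupFallout(Exception):
    """Hypothetical error for 'no device found' or 'multiple devices found'."""


def find_device(key: str, lookup_fields: List[str], devices: List[Dict[str, str]]) -> Dict[str, str]:
    """Search the configured fields in order and stop at the first unique match."""
    for field in lookup_fields[:MAX_LOOKUP_FIELDS]:
        matches = [device for device in devices if device.get(field) == key]
        if len(matches) == 1:
            return matches[0]
        if len(matches) > 1:
            # Assumption: an ambiguous match is not resolved by later fields.
            raise DeviceLookupFallout(f"multiple devices match {field}={key}")
    raise DeviceLookupFallout(f"no device matches {key}")


devices = [
    {"name": "edge-router", "id": "10", "deviceIdentifier": "ER-10", "ipv4": "192.0.2.10"},
    {"name": "core-switch", "id": "11", "deviceIdentifier": "CS-11", "ipv4": "192.0.2.11"},
]
order = ["name", "id", "deviceIdentifier", "ipv4", "ipv6"]
print(find_device("ER-10", order, devices)["name"])
```

With this order, a key that matches only the ipv4 field is not found, because ipv4 is the fourth entry. Moving ipv4 into the first three positions changes that.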

Mounting Groovy Scripts To Alarm Consumer

To mount Groovy scripts to alarm consumer pod:

- Edit the script in $COMMON_CNTK/charts/ata-app/charts/ata/config/alarm-consumer/DeviceMapping.groovy location.

- Upgrade the ATA instance as follows:

$COMMON_CNTK/scripts/upgrade-applications.sh -p project -i instance -f $SPEC_PATH/project/instance/applications.yaml -a ata

Integrate ATA Service with Message Bus Service

To integrate ATA API service with Message Bus service:

- In the file $SPEC_PATH/sr/quick/applications.yaml, uncomment the section messagingBusConfig.

- Provide namespace and instance name on which the Messaging Bus service is deployed.

- Security protocol is SASL_PLAINTEXT if authentication is enabled on Message bus service. If authentication is not enabled on the Message Bus service, the security protocol is PLAINTEXT.

A sample configuration when authentication is enabled and Messaging Bus is deployed on instance 'quick' and namespace 'sr' is as follows:

applications.yaml

authentication:

enabled: true

messagingBusConfig:

namespace: sr

instance: quick

Integrating ATA with Authorization Service

If Authorization Service is installed, you can integrate ATA with it by updating $SPEC_PATH/project/instance/applications.yaml: uncomment the following properties and provide appropriate values:

authorizationServiceConfig:

namespace: <project> #namespace on which authorization service is deployed

instance: <instance> #instance name on which authorization service is deployed

Creating an ATA Instance

To create an ATA instance in your environment using the scripts that are provided with the toolkit:

- Run the following command to create an ATA instance:

$COMMON_CNTK/scripts/create-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata

The create-applications script uses the Helm chart located in $COMMON_CNTK/charts/ata-app to create and deploy the ATA service.

- If the script fails, see the Troubleshooting Issues section at the end of this topic before you make additional attempts.

For more information on creating an ATA instance, see "Creating an ATA Instance".

Accessing ATA Instance

Proxy Settings

To set the proxy settings:

- In the browser's network no-proxy settings, include *<hostSuffix>. For example, *uim.org.

- In /etc/hosts, include the following entry:

<k8s cluster ip or loadbalancerIP> <instance>.<project>.topology.<hostSuffix>

For example:

<k8s cluster ip or external loadbalancer ip> quick.sr.topology.uim.org

Exercise ATA service endpoints

If TLS is enabled on ATA, exercise endpoints using Hostname <topology-instance>.<topology-project>.topology.uim.org.

ATA UI endpoint format: https://<topology-instance>.<topology-project>.topology.<hostSuffix>:<port>/apps/ata-ui

ATA API endpoint format: https://<topology-instance>.<topology-project>.topology.<hostSuffix>:<port>/topology/v2/vertex

- ATA UI endpoint: https://quick.sr.topology.uim.org:30443/apps/ata-ui

- ATA API endpoint: https://quick.sr.topology.uim.org:30443/topology/v2/vertex

If TLS is not enabled on ATA, exercise endpoints:

ATA UI endpoint format: http://<topology-instance>.<topology-project>.topology.<hostSuffix>:<port>/apps/ata-ui

ATA API endpoint format: http://<topology-instance>.<topology-project>.topology.<hostSuffix>:<port>/topology/v2/vertex
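The endpoint formats above can be assembled from the instance name, project name, host suffix, and port. The following sketch is illustrative only: the function names are hypothetical, and the separator parameter is an assumption modeled on the subDomainNameSeparator setting described later in this chapter for wildcard certificates.

```python
from typing import Dict


def ata_hostname(instance: str, project: str, host_suffix: str = "uim.org", separator: str = ".") -> str:
    """Build <instance><sep><project><sep>topology.<hostSuffix>."""
    return separator.join([instance, project, "topology"]) + "." + host_suffix


def ata_endpoints(instance: str, project: str, port: int, host_suffix: str = "uim.org",
                  tls: bool = True, separator: str = ".") -> Dict[str, str]:
    """Return the UI and API endpoint URLs in the formats shown above."""
    scheme = "https" if tls else "http"
    host = ata_hostname(instance, project, host_suffix, separator)
    base = f"{scheme}://{host}:{port}"
    return {"ui": f"{base}/apps/ata-ui", "api": f"{base}/topology/v2/vertex"}


endpoints = ata_endpoints("quick", "sr", 30443)
print(endpoints["ui"])
print(endpoints["api"])
```

For instance quick and project sr with TLS enabled on port 30443, this yields the example endpoints listed above.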

Validating the ATA Instance

To validate the ATA instance:

- Run the following command to check the status of the deployed ATA instance:

$COMMON_CNTK/scripts/application-status.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata

The application-status script returns the status of the ATA service deployments and pods.

- Use the following endpoint to monitor the health of ATA:

https://quick.sr.topology.uim.org:<loadbalancerport>/health

- To access the following ATA service endpoints, add this entry in /etc/hosts:

<k8s cluster ip or external loadbalancer ip> quick.sr.topology.uim.org

- ATA UI endpoint: https://quick.sr.topology.uim.org:30443/apps/ata-ui

- ATA API endpoint: https://quick.sr.topology.uim.org:30443/topology/v2/vertex

Deploying the Graph Server Instance

A Graph Server (PGX Server) instance is needed for Path Analysis. By default, the replicaCount of the pgx (graph) server pods is set to '0'. For path analysis to function, set the replicaCount of the pgx pods to '2' and upgrade the instance. See "Upgrading the ATA Instance" for more information.

A cron job must be scheduled to periodically reload the active ata-pgx pod.

pgx:

pgxName: "ata-pgx"

replicaCount: 2

java:

user_mem_args: "-Xms8000m -Xmx8000m -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/logMount/$(APP_PREFIX)/ata/ata-pgx/"

gc_mem_args: "-XX:+UseG1GC"

options:

resources:

limits:

cpu: "4"

memory: 16Gi

requests:

cpu: 3500m

memory: 16Gi

Scheduling the Graph Server Restart CronJob

Once the instance is created successfully, a cron job must be scheduled to restart the ata-pgx pods. At each scheduled time, one of the ata-pgx pods is restarted and all incoming requests are routed seamlessly to the other ata-pgx pod.

Update the script $COMMON_CNTK/samples/cronjob-scripts/pgx-restart.sh to include required environment variables - KUBECONFIG, pgx_ns, pgx_instance. For a basic instance, pgx_ns is sr and pgx_instance is quick.

export KUBECONFIG=<kube config path>

export pgx_ns=<ata project name>

export pgx_instance=<ata instance name>

pgx_pods=`kubectl get pods -n $pgx_ns --sort-by=.status.startTime -o name | awk -F "/" '{print $2}' | grep $pgx_instance-ata-pgx`

pgx_pod_arr=( $pgx_pods )

echo "Deleting pod - ${pgx_pod_arr[0]}"

kubectl delete pod ${pgx_pod_arr[0]} -n $pgx_ns --grace-period=0

The following crontab is scheduled for every day midnight. Scheduled time may vary depending on the volume of data.

Variable $COMMON_CNTK should be set in environment where cronjob runs or replace $COMMON_CNTK with complete path.

crontab -e

0 0 * * * $COMMON_CNTK/samples/cronjob-scripts/pgx-restart.sh > $COMMON_CNTK/samples/cronjob-scripts/pgx-restart.log

Affinity on Graph Server

If multiple PGX pods are scheduled on the same worker node, the memory consumption by these PGX pods becomes very high. To address this, include the following affinity rule in applications.yaml, under the ata chart to avoid scheduling of multiple PGX pods on the same worker node.

The following podAntiAffinity rule uses the app=<topology-project>-<topology-instance>-ata-pgx label. Update the label with the corresponding project and instance names for the ATA service. For example: sr-quick-ata-pgx.

ata:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app

operator: In

values:

- <topology-project>-<topology-instance>-ata-pgx

topologyKey: "kubernetes.io/hostname"

Upgrading the ATA Instance

Upgrading ATA is required when updates are made to the applications.yaml, topology-static-config.yaml, or topology-dynamic-config.yaml configuration files.

Run the following command to upgrade the ATA service:

$COMMON_CNTK/scripts/upgrade-applications.sh -p sr -i quick -f $COMMON_CNTK/samples/applications.yaml -a ata

After the script completes, validate the ATA service by running the application-status script.

Restarting the ATA Instance

To restart the ATA instance:

- Run the following command to restart the ATA service:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata -r all

- After running the script, validate the ATA service by running the application-status script.

- To restart ata-api, ata-ui, or ata-pgx individually, run the above command by passing -r with the service name as follows:

- To restart ATA API:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata -r ata-api

- To restart ATA PGX:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata -r ata-pgx

- To restart ATA UI:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata -r ata-ui

- To restart ATA Consumer:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata -r ata-consumer

- To restart Alarm Consumer:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $COMMON_CNTK/samples/applications.yaml -a ata -r alarm-consumer

- To restart SmartSearch Consumer:

$COMMON_CNTK/scripts/restart-applications.sh -p sr -i quick -f $COMMON_CNTK/samples/applications.yaml -a ata -r smartsearch-consumer

Deleting and Recreating an ATA Instance

- Run the following command to delete the ATA service:

$COMMON_CNTK/scripts/delete-applications.sh -p sr -i quick -f $COMMON_CNTK/samples/applications.yaml -a ata

- Run the following command to delete the ATA schema:

$COMMON_CNTK/scripts/install-database.sh -p sr -i quick -f $COMMON_CNTK/samples/database.yaml -a ata -c 2

- Run the following command to create the ATA schema:

$COMMON_CNTK/scripts/install-database.sh -p sr -i quick -f $COMMON_CNTK/samples/database.yaml -a ata -c 1

- Run the following command to create the ATA service:

$COMMON_CNTK/scripts/create-applications.sh -p sr -i quick -f $COMMON_CNTK/samples/applications.yaml -a ata

Alternate Configuration Options for ATA

You can configure ATA using the following alternate options.

Setting up Secure Communication using TLS

When the ATA service is involved in secure communication with other systems, either as the server or as the client, you should additionally configure SSL/TLS. The procedures for setting up TLS use self-signed certificates for demonstration purposes. Adapt the steps as necessary to use signed certificates. To generate common self-signed certificates, see "SSL Certificates".

To set up secure communication using TLS:

- Edit $SPEC_PATH/sr/quick/applications.yaml and set tls enabled to true.

tls:
  enabled: true

- Create the ingressTLS secret to pass the generated certificate and key PEM files.

$COMMON_CNTK/scripts/manage-app-credentials.sh -p sr -i quick -f $COMMON_CNTK/samples/applications.yaml -a ata create ingressTLS

- The script prompts for the following details:

- Ingress TLS Certificate Path (PEM file): <path_to_commoncert.pem>

- Ingress TLS Key file Path (PEM file): <path_to_commonkey.pem>

- Verify that the following secret is created successfully.

sr-quick-ata-ingress-tls-cert-secret

- Create the ATA instance as usual. Access the ATA endpoints using the hostname <topology-instance>.<topology-project>.topology.uim.org.

- Add the following entry in /etc/hosts:

<k8s cluster ip or external loadbalancer ip> quick.sr.topology.uim.org

- ATA UI endpoint: https://quick.sr.topology.uim.org:30443/apps/ata-ui

- ATA API endpoint: https://quick.sr.topology.uim.org:30443/topology/v2/vertex

Supporting the Wildcard Certificate

Smartsearch supports wildcard certificates. You can generate the wildcard certificates with the hostSuffix value provided in applications.yaml. The default is uim.org.

You must change the subDomainNameSeparator value from period (.) to hyphen (-) so that the incoming hostnames match the wildcard DNS pattern.

#Uncomment and provide the value of subDomainNameSeparator, default is "."

#Value can be changed as "-" to match wild-card pattern of ssl certificates.

#Example hostnames for "-" quick-sr-topology.uim.org

subDomainNameSeparator: "-"

Using Annotation-Based Generic Ingress Controller

ATA supports the standard Kubernetes ingress API and provides samples for integration. The following configuration provides the annotations that ATA requires for NGINX.

Any Ingress Controller, which conforms to the standard Kubernetes ingress API and supports annotations required by ATA should work, although Oracle does not certify individual Ingress controllers to confirm this generic compatibility.

To use annotation-based generic ingress controller:

- Update applications.yaml to provide the following annotations that enable stickiness through cookies:

# Valid values are TRAEFIK, GENERIC
ingressController: "GENERIC"
ingress:
  className: nginx ##provide ingressClassName value, default value for nginx ingressController is nginx.
  # This annotation is required for nginx ingress controller.
  annotations:
    nginx.ingress.kubernetes.io/affinity: "cookie"
    nginx.ingress.kubernetes.io/affinity-mode: "persistent"
    nginx.ingress.kubernetes.io/session-cookie-name: "nginxingresscookie"
    nginx.ingress.kubernetes.io/proxy-body-size: "50m"
smartSearch:
  #uncomment and provide applications specific annotations if required, these will get added to list of annotations specified in common section.
  ingress:
    className: nginx-prod ##provide ingressClassName value, default value for nginx ingressController is nginx.
    annotations:
      nginx.ingress.kubernetes.io/rewrite-target: /$2

Enabling Authentication for ATA

This section provides you with information on enabling authentication for ATA.

The samples, for using IDCS as Identity Provider, are packaged with ATA. To use any Identity Provider of your choice, you must follow the corresponding configuration instructions.

Registering ATA in Identity Provider

You can register ATA as a Confidential application in Identity Provider. To do so:

- Access the IDCS console and log in as administrator.

- Navigate to the Domains and select the domain (Default domain) to add Helidon application as Confidential application.

- Click Add application to register Helidon application as Confidential application.

- Choose Confidential Application and click Launch workflow.

- Enter the name as ATA Application and description as ATA Application.

- Select Enforce grants as authorization checkbox under Authentication and authorization section.

- Click Next at the bottom of the page.

- Choose Configure this application as a resource server now radio button under Resource server configuration.

- Enter Primary Audience as https://<topology-hostname>:<loadbalancer-port>/.

- Select Add secondary audience and enter IDCS URL as Secondary audience.

- Select Add scopes and add ataScope as allowed scope.

- Select Configure this application as a client now radio button under the Client configuration section.

- Select Resource owner, Client credentials, and Authorization code check boxes.

- Select Allow HTTP URLs check box only if your ATA application is not SSL enabled.

- Enter the following Redirect URLs:

- https://<ata-hostname>:<loadbalancer-port>/topology

- https://<ata-hostname>:<loadbalancer-port>/redirect/ata-ui/

- https://<ata-hostname>:<loadbalancer-port>/sia

- Enter Post-logout redirect URL as https://<ata-hostname>:<loadbalancer-port>/apps/ata-ui (provide your Helidon application's home page URL).

- Enter Logout URL as https://<ata-hostname>:<loadbalancer-port>/oidc/logout (provide your Helidon application's logout URL).

- (Optional) Select Bypass consent button for skipping the consent page after IDCS login.

- Select Anywhere radio button for Client IP address.

- Click Next and click Finish.

- Click Activate to create application (ATA Application).

- Click Activate application from the pop-up window.

- Click Users on the left side pane to assign users.

- Click Assign users to add domain users to the registered application.

- Choose the desired users from the pop-up window and click Assign.

- (Optional) Click Groups on the left-side pane to assign groups.

- Click Assign groups to add domain groups to the registered application.

- Choose the desired groups from the pop-up window and click Assign.

Common Secret and Properties

You create a secret and config map with OAuth client details, which will be required for Message Bus and ATA.

Getting Client Credentials

You can get the client credential details by navigating to your OAuth client on the Identity Provider (IDP). For IDCS, follow these steps to get the details.

Access the IDCS console and log in as Administrator. To get client credentials:

- Navigate to Domains and select the domain (Default domain) to add Helidon application as Confidential application.

- Click on the ATA Application name from the table.

- Scroll to view the Client secret under the General Information section.

- Click Show secret link to open a pop-up window showing the client secret.

- Copy the client secret and store it for use in the Helidon application configuration.

Creating the OAuth Secrets and ConfigMap

To create OauthConfig secret with OIDC, see "Adding Common OAuth Secret and ConfigMap".

The sample for IDCS is as follows:

Identity Provider Uri: https://idcs-df3063xxxxxxxxxxx.identity.pint.oc9qadev.com:443

Client Scope: https://quick.sr.topology.uim.org:30443/first_scope

Client Audience: https://quick.sr.topology.uim.org:30443/

Token Endpoint Uri: https://idcs-df3063xxxxxxxx.identity.pint.oc9qadev.com:443/oauth2/v1/token

Valid Issue Uri: https://identity.oraclecloud.com/

Introspection Endpoint Uri: https://idcs-df3063xxxxxxx.identity.pint.oc9qadev.com:443/oauth2/v1/introspect

JWKS Endpoint Uri: https://idcs-df3063xxxxxxxxxxx.identity.pint.oc9qadev.com:443/admin/v1/SigningCert/jwk

Cookie Name: OIDCS_SESSION

Cookie Encryption Password: lpmaster

Provide Truststore details ...

Certificate File Path (ex. oamcert.pem): ./identity-pint-oc9qadev-com.pem

Truststore File Path (ex. truststore.jks): ./truststore.jks

Truststore Password: xxxxx #provide Truststore password

Note:

For more details on IDCS, see "Common Configuration Options For all Services".

Choosing Worker Nodes for ATA Service

By default, ATA has its pods scheduled on all worker nodes in the Kubernetes cluster in which it is installed. However, in some situations, you may want to choose a subset of nodes where pods are scheduled.

For example:

You may need to limit the deployment of ATA to specific worker nodes for each team, for reasons such as capacity management, chargeback, or budget.

To choose a subset of nodes where pods are scheduled, you can use the configuration in the applications.yaml file.

Sample node affinity configuration (requiredDuringSchedulingIgnoredDuringExecution) for ATA service:

applications.yaml

ata:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: name

operator: In

values:

- south_zone

The Kubernetes pod is scheduled on a node with the label name set to south_zone. If no node with the label name: south_zone is available, the pod is not scheduled.

Sample node affinity configuration (preferredDuringSchedulingIgnoredDuringExecution) for ATA service:

applications.yaml

ata:

affinity:

nodeAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 1

preference:

matchExpressions:

- key: name

operator: In

values:

- south_zone

The Kubernetes pod is preferably scheduled on a node with the label name set to south_zone. If no node with the label name: south_zone is available, the pod is still scheduled on another node.

Setting up Persistent Storage

Follow the instructions mentioned in UIM Cloud Native Deployment guide for configuring Kubernetes persistent volumes.

To create persistent storage:

- Update applications.yaml to enable the storage volume for the ATA service and provide the persistent volume name.

storageVolume:
  enabled: true
  pvc: sr-nfs-pvc #Specify the storage-volume name

- Update database.yaml to enable the storage volume for the ATA dbinstaller and provide the persistent volume name.

storageVolume:
  enabled: true
  type: pvc
  pvc: sr-nfs-pvc #Specify the storage-volume name

After the instance is created, you should see the directories ata and ata-dbinstaller in your PV mount point, if you have enabled logs.

Managing ATA Logs

To customize and enable logging, update the logging configuration files for the application.

- Customize ata-api service logs:

- For service-level logs, update the file $COMMON_CNTK/charts/ata-app/charts/ata/config/ata-api/logging-config.xml.

- For Helidon-specific logs, update the file $COMMON_CNTK/charts/ata-app/charts/ata/config/ata-api/logging.properties. By default, the console handler is used. To also use a file handler, uncomment the following lines and provide the <project> and <instance> names for the location where the logs are saved:

handlers=io.helidon.common.HelidonConsoleHandler,java.util.logging.FileHandler
java.util.logging.FileHandler.formatter=java.util.logging.SimpleFormatter
java.util.logging.FileHandler.pattern=/logMount/sr-quick/ata/ata-api/logs/TopologyJULMS-%g-%u.log

- Customize ata-pgx service logs:

Update the file $COMMON_CNTK/charts/ata-app/charts/ata/config/pgx/logging-config.xml.

- Customize ata-ui service logs:

Update the file $COMMON_CNTK/charts/ata-app/charts/ata/config/ata-ui/logging.properties.

- Update the logging configuration files and upgrade the ATA application:

$COMMON_CNTK/scripts/upgrade-applications.sh -p sr -i quick -f $SPEC_PATH/applications.yaml -a ata

- Customize the ata-alarm-consumer service logs:

- For service-level logs, update the file $COMMON_CNTK/charts/ata-app/charts/ata/config/alarm-consumer/logging-config.xml.

- For Helidon-specific logs, update the file $COMMON_CNTK/charts/ata-app/charts/ata/config/alarm-consumer/logging.properties.

- Customize SmartSearch Consumer service logs:

- For service-level logs, update the $COMMON_CNTK/charts/ata-app/charts/ata/config/smartsearch-consumer/logging-config.xml file.

- For Helidon-specific logs, update the $COMMON_CNTK/charts/ata-app/charts/ata/config/smartsearch-consumer/logging.properties file.

Viewing Logs using OpenSearch

You can view and analyze the Application logs using OpenSearch.

The logs are generated as follows:

- Fluentd collects the application logs that are generated during cloud native deployments and sends them to OpenSearch.

- OpenSearch collects all types of logs and converts them into a common format so that OpenSearch Dashboard can read and display the data.

- OpenSearch Dashboard reads the data and presents it in a simplified view.

See "Deleting the OpenSearch and OpenSearch Dashboard Service" for more information.

Managing ATA Metrics

Use the following endpoint to monitor the metrics of ATA:

https://instance.project.topology.<hostSuffix>:<loadbalancerport>/metrics

Prometheus and Grafana setup

See "Setting Up Prometheus and Grafana" for more information.

Adding scrape Job in Prometheus

Add the following Scrape job in Prometheus Server. This can be added by editing the config map used by the Prometheus server:

- job_name: 'topologyApiSecuredMetrics'

oauth2:

client_id: <client-id>

client_secret: <client-secret>

scopes:

- <Scope>

token_url: <OAUTH-TOKEN-URL>

tls_config:

insecure_skip_verify: true

kubernetes_sd_configs:

- role: pod

relabel_configs:

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]

action: keep

regex: true

- source_labels: [__meta_kubernetes_pod_label_app]

action: keep

regex: (<project>-<instance>-ata-api)

- source_labels: [__meta_kubernetes_pod_container_port_number]

action: keep

regex: (8080)

- source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]

action: replace

target_label: __metrics_path__

regex: (.+)

- source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]

action: replace

regex: ([^:]+)(?::\d+)?;(\d+)

replacement: $1:$2

target_label: __address__

- action: labelmap

regex: __meta_kubernetes_pod_label_(.+)

- source_labels: [__meta_kubernetes_namespace]

action: replace

target_label: kubernetes_namespace

- source_labels: [__meta_kubernetes_pod_name]

action: replace

target_label: kubernetes_pod_name

Note:

If authentication is not enabled on ATA, remove the oauth2 section from the above job.
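The relabel rule on __address__ in the scrape job above rewrites the scrape target so that it uses the port from the pod's prometheus.io/port annotation. Prometheus joins the source label values with ";" and matches the regular expression against the whole joined string. The following sketch reproduces that behavior in Python for illustration only; the function name and the sample addresses are assumptions.

```python
import re

# Same expression as in the scrape job; Prometheus anchors it at both ends.
ADDRESS_RULE = re.compile(r"([^:]+)(?::\d+)?;(\d+)")


def relabel_address(address: str, port_annotation: str) -> str:
    """Apply the 'replace' rule: keep the host and swap in the annotated port."""
    joined = f"{address};{port_annotation}"  # source labels joined with ';'
    match = ADDRESS_RULE.fullmatch(joined)
    if match is None:
        return address  # no match: the target label is left unchanged
    return f"{match.group(1)}:{match.group(2)}"  # replacement $1:$2


print(relabel_address("10.244.1.7:8443", "8080"))
print(relabel_address("10.244.1.7", "8080"))
```

Both calls produce 10.244.1.7:8080. If the pod has no port annotation, the joined string ends with ";" and does not match, so the original address is kept.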

Allocating Resources for ATA Service Pods

To increase performance of the service, applications.yaml has configuration to provide JVM memory settings and pod resources for ATA Service.

There are separate configurations provided for ata-api, topology-consumer, alarm-consumer, smartsearch-consumer, pgx, and ata-ui services. Provide required values under the service name under ata application.

ata:

topologyApi:

apiName: "ata-api"

replicaCount: 3

java:

user_mem_args: "-Xms2000m -Xmx2000m -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/logMount/$(APP_PREFIX)/ata/ata-api/"

gc_mem_args: "-XX:+UseG1GC"

options:

resources:

limits:

cpu: "2"

memory: 3Gi

requests:

cpu: 2000m

memory: 3Gi
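When you change these values, the JVM maximum heap in user_mem_args should stay below the pod memory limit, because the JVM also needs memory outside the heap. The following sketch compares the two settings from the sample above. It is a rough sanity check under stated assumptions: the helper names are hypothetical, and only the k, m, g JVM suffixes and the Ki, Mi, Gi Kubernetes suffixes are handled.

```python
import re

JVM_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
K8S_UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def max_heap_bytes(user_mem_args: str) -> int:
    """Extract the -Xmx value from a JVM argument string and convert it to bytes."""
    match = re.search(r"-Xmx(\d+)([kKmMgG])", user_mem_args)
    if match is None:
        raise ValueError("no -Xmx setting found")
    return int(match.group(1)) * JVM_UNITS[match.group(2).lower()]


def memory_limit_bytes(quantity: str) -> int:
    """Convert a Kubernetes memory quantity such as 3Gi to bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi)", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity}")
    return int(match.group(1)) * K8S_UNITS[match.group(2)]


# Values from the ata-api sample above.
heap = max_heap_bytes("-Xms2000m -Xmx2000m -XX:+HeapDumpOnOutOfMemoryError")
limit = memory_limit_bytes("3Gi")
headroom_mib = (limit - heap) // 1024 ** 2
print(f"heap fits: {heap < limit}, headroom: {headroom_mib} MiB")
```

For the ata-api sample, a 2000 MiB heap under a 3Gi limit leaves 1072 MiB for non-heap memory.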

Scaling Up or Scaling Down the ATA Service

Provide replica count in applications.yaml to scale up or scale down the ATA pods. Replica count can be configured for ata-api, topology-consumer, alarm consumer, pgx, and ata-ui pods individually by updating applications.yaml.

Update applications.yaml to increase replica count to 3 for ata-api deployment.

ata:

topologyApi:

replicaCount: 3

Apply the change in replica count to the running Helm release by running the upgrade-applications script.

$COMMON_CNTK/scripts/upgrade-applications.sh -p sr -i quick -f $SPEC_PATH/sr/quick/applications.yaml -a ata

Enabling GC Logs for ATA

By default, GC logs are disabled. You can enable them and view the logs in the corresponding folders inside /logMount/sr-quick/ata.

To enable GC logs, update the $SPEC_PATH/sr/quick/applications.yaml file as follows:

- Under gcLogs, set enabled to true. You can uncomment the gcLogs options under ata to override the common values.

- To configure the maximum size of each file and the limit on the number of files, set fileSize and noOfFiles inside gcLogs as follows:

gcLogs:
  enabled: true
  fileSize: 10M
  noOfFiles: 10

Debugging and Troubleshooting

Common Problems and Solutions

- The ATA DBInstaller pod is not able to pull the dbinstaller image.

NAME READY STATUS RESTARTS AGE
project-instance-unifed-topology-dbinstaller 0/1 ErrImagePull 0 5s
### OR
NAME READY STATUS RESTARTS AGE
project-instance-unifed-topology-dbinstaller 0/1 ImagePullBackOff 0 45s

To resolve this issue:

- Verify the image name and the tag provided in database.yaml for ata-dbinstaller, and verify that the image is accessible from the repository by the pod.

- Verify that the image is copied to all worker nodes.

- If pulling the image from a repository, verify the image pull policy and image pull secret in database.yaml for ata-dbinstaller.

- The ATA API, PGX, and UI pods are not able to pull the images.

To resolve this issue:

- Verify the image names and the tags provided in applications.yaml for ata, and verify that the images are accessible from the repository by the pod.

- Verify that the images are copied to all worker nodes.

- If pulling the images from a repository, verify the image pull policy and image pull secret in applications.yaml for the ATA service.

- ATA pods are in the CrashLoopBackOff state.

To resolve this issue, describe the Kubernetes pod and find the cause of the issue. It could be because of missing secrets.

- The ATA API pod did not come up.

NAME READY STATUS RESTARTS AGE
project-instance-ata-api 0/1 Running 0 5s

To resolve this issue, verify that the Message Bus bootstrap server provided in topology-static-config.yaml is valid.

Test Connection to PGX server

To troubleshoot PGX service, connect to pgx service using graph client by running the following command.

Connect to pgx service endpoint http://<LoadbalancerIP>:<LoadbalancerPort>/<topology-project>/<topology-instance>/pgx by providing pgx client user credentials.

C:\TopologyService\oracle-graph-client-22.1.0\oracle-graph-client-22.1.0\bin>opg4j -b http://<hostIP>:30305/sr/quick/pgx -u <PGX_CLIENT_USER>

password:<PGX_CLIENT_PASSWORD>

For an introduction type: /help intro

Oracle Graph Server Shell 22.1.0

Variables instance, session, and analyst ready to use.

Fallout Events Resolution

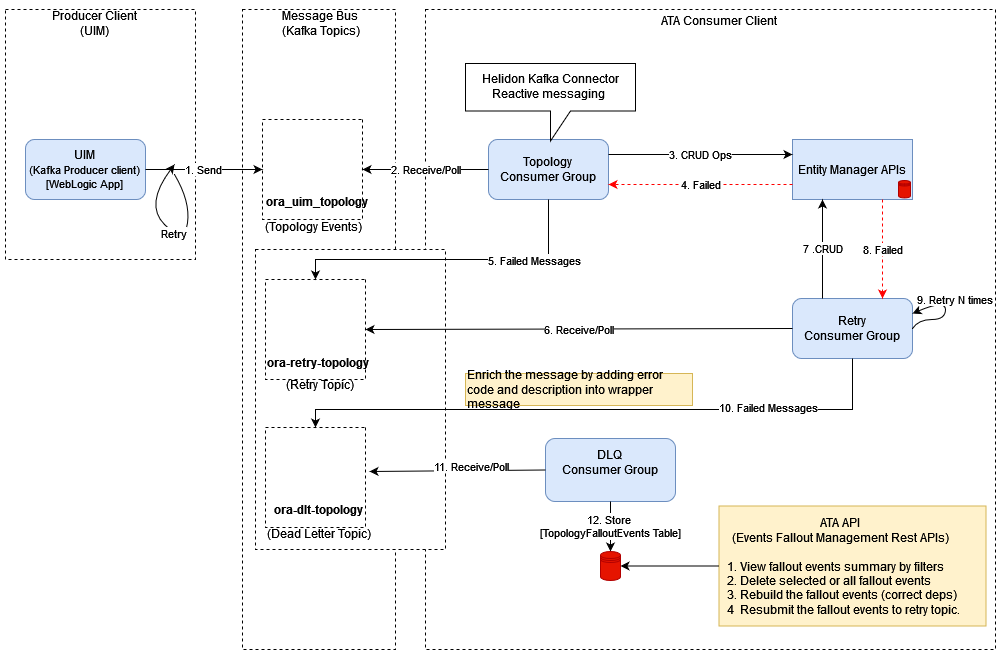

The fallout events resolution process starts with analyzing the events (or messages) in the TOPOLOGY_FALLOUT_EVENTS table. The main intent of fallout events resolution is to keep the data of the message consumer client (such as topology) synchronized with the data of the producer client (such as UIM) by correcting the fallout events that the consumer clients failed to process. Correcting a failed event means rebuilding, resubmitting, editing, or ignoring it.

Note:

This resolution is a manual administrative task that should be performed periodically.

First, these events (or messages) are collected in the TOPOLOGY_FALLOUT_EVENTS table. The message consumer services subscribe to a specific topic on the message bus (such as ora-uim-topology), consume the events, and process them. Processing means reading the event type from the message and creating, updating, or deleting the entity in the corresponding downstream services for each event. If a failure occurs, the system retries the configured number of times. If processing still fails after the retries, the corresponding messages are produced to the consumer service-specific dead-letter topic (such as ora-dlt-topology). From this dead-letter topic, the events (or messages) are added to the TOPOLOGY_FALLOUT_EVENTS table by another subscriber.
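The retry and dead-letter flow described above can be sketched as a small in-memory simulation. This is not the ATA consumer code: the topics and the fallout table are modeled as plain Python objects, the function and handler names are hypothetical, and the sketch assumes one initial attempt followed by the configured number of retries.

```python
from typing import Callable, Dict, List


def consume(event: Dict[str, str], handler: Callable[[Dict[str, str]], None],
            max_retries: int, fallout_table: List[Dict[str, object]]) -> bool:
    """Process an event; after the retries are exhausted, record it as a fallout."""
    last_error = ""
    for _attempt in range(1 + max_retries):  # first attempt plus retries
        try:
            handler(event)
            return True
        except Exception as exc:  # any processing failure triggers a retry
            last_error = str(exc)
    # Stands in for the dead-letter topic and the subscriber that fills the table.
    fallout_table.append({"event": event, "error": last_error, "state": "PENDING"})
    return False


known_nodes = {"node-1"}


def create_edge(event: Dict[str, str]) -> None:
    """Hypothetical handler: an edge needs both of its end nodes to exist."""
    for end in (event["from"], event["to"]):
        if end not in known_nodes:
            raise LookupError(f"missing dependency: {end}")


fallouts: List[Dict[str, object]] = []
ok = consume({"type": "TopologyEdgeCreate", "from": "node-1", "to": "node-2"},
             create_edge, max_retries=3, fallout_table=fallouts)
print(ok, len(fallouts), fallouts[0]["error"])
```

In this run the edge event fails on every attempt because node-2 is unknown, so it ends up in the fallout list in the PENDING state, which is the situation that the REBUILD action is meant to correct.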

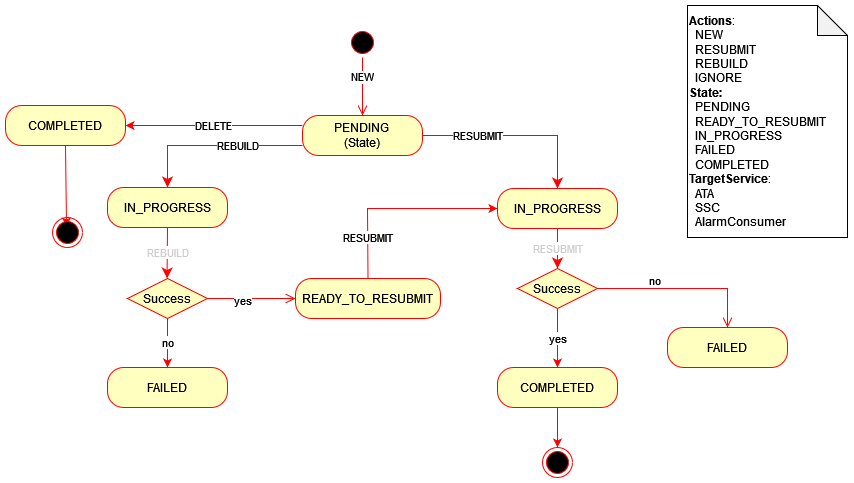

The general fallout events resolution process flow is illustrated in the following image. Some of process steps are applicable only to specific message consumer services. For example, the REBUILD setup is applicable only to the topology consumer service.

The following actions can be performed on fallout events. The actions can be performed using the REST APIs provided as part of ATA-API.

Table 8-2 Fallout Events Actions

| Action | Description |
|---|---|
| NEW | The event is added to the TOPOLOGY_FALLOUT_EVENTS table if the action is NEW. |
| REBUILD | This action corrects the missing dependencies. Note: This action is applicable only to the topology consumer. |
| RESUBMIT | The resubmit action resubmits the event or message into the retry topic (such as ora-retry-topology) of the specific target service for reprocessing. |
| DELETE | Clears the event or messages from the fallout tables. |
| EDIT | Edits the specific event or message from the fallout table. |

The following are the states that an event can be in, based on the corresponding actions.

Table 8-3 Fallout Events States

| State | Description |
|---|---|
| PENDING | The state of newly arrived fallout events. |
| IN_PROGRESS | A RESUBMIT or REBUILD action is in progress for the fallout event. |
| READY_TO_RESUBMIT | The fallout event has been processed by the REBUILD action and is ready for RESUBMIT. |
| FAILED | Processing of the fallout event failed for either RESUBMIT or REBUILD. |
| COMPLETED | Processing of the fallout event is completed for RESUBMIT. |
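The states in Table 8-3 can be read as a small state machine. The sketch below is one possible reading and not a specification: the transition map is inferred from the table descriptions, and treating FAILED and COMPLETED as terminal is an assumption. The `can_resubmit` check reflects the rule, stated later in this section, that RESUBMIT takes events in PENDING or READY_TO_RESUBMIT state.

```python
from enum import Enum


class State(Enum):
    PENDING = "PENDING"
    IN_PROGRESS = "IN_PROGRESS"
    READY_TO_RESUBMIT = "READY_TO_RESUBMIT"
    FAILED = "FAILED"
    COMPLETED = "COMPLETED"


# Transitions inferred from Table 8-3 (assumption, not taken from the product).
ALLOWED_TRANSITIONS = {
    State.PENDING: {State.IN_PROGRESS},              # REBUILD or RESUBMIT starts
    State.IN_PROGRESS: {
        State.READY_TO_RESUBMIT,                     # REBUILD finished
        State.COMPLETED,                             # RESUBMIT finished
        State.FAILED,                                # either action failed
    },
    State.READY_TO_RESUBMIT: {State.IN_PROGRESS},    # RESUBMIT starts
    State.FAILED: set(),                             # assumed terminal here
    State.COMPLETED: set(),                          # assumed terminal here
}


def can_transition(current: State, target: State) -> bool:
    """Return True if the inferred transition map allows current -> target."""
    return target in ALLOWED_TRANSITIONS[current]


def can_resubmit(state: State) -> bool:
    """RESUBMIT picks up events in PENDING or READY_TO_RESUBMIT state."""
    return state in {State.PENDING, State.READY_TO_RESUBMIT}
```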

You can use the fallout REST APIs to resolve these failures. See REST API for ATA for the full list of REST APIs.

Table 8-4 REST APIs and the Corresponding Resolutions

| REST API | Resolution |
|---|---|
| /topology/v2/fallout/events/summary | Get a brief summary of the fallout events with status. |
| /topology/v2/fallout/events/rebuild/jobs | Rebuild to correct the missing dependencies. |
| /topology/v2/fallout/events/resubmit | Resubmit to the retry topic to reprocess the event or message. |
| /topology/v2/fallout/events or /topology/v2/fallout/events/eid/{eid} | Clear or delete the event or message from the fallout table. |
| /topology/v2/fallout/events/eid/{eid} | Edit the event or message in the fallout table and resubmit it. |

Fallout Events Resolution for Topology Consumer

The following figure illustrates the fallout events resolution process flow for topology consumer.

Figure 8-2 Process Flow of Fallout Events Resolution for Topology Consumer

The failed events in the TOPOLOGY_FALLOUT_EVENTS table can be rebuilt and resubmitted for the specific target service identifier. The target service value used for the topology consumer is ATA. When a fallout event comes into the table, it is in PENDING state. These events can be rebuilt or resubmitted as follows:

- REBUILD: This action processes the fallout event and gets any out-of-sync data from UIM into ATA through the database link.

- RESUBMIT: This action takes the events in PENDING or READY_TO_RESUBMIT state from the TOPOLOGY_FALLOUT_EVENTS table and moves them back into the ora-retry-topology topic for reprocessing.

Prerequisites for REBUILD

- Before Rebuild is performed, the ATA schema user should have the following privileges:

    - CREATE JOB

    - ALTER SYSTEM

    - CREATE DATABASE LINK

- Ensure a Database Link exists from ATA schema to UIM schema with the name REM_SCHEMA. That is, ATA schema user should be able to access the objects from UIM schema. For more information, see https://docs.oracle.com/en/database/oracle/oracle-database/19/sqlrf/CREATE-DATABASE-LINK.html#GUID-D966642A-B19E-449D-9968-1121AF06D793

Performing REBUILD Action

You can perform the Rebuild action in the following ways:

- DBMS Job Scheduling: In this approach, the REBUILD action on the fallout events in PENDING state is scheduled to run every 6 hours. You can configure how often the job runs automatically by changing the repeat_interval value.

    BEGIN
      DBMS_SCHEDULER.create_job (
        job_name        => 'FALLOUT_DATA_REBUILD',
        job_type        => 'PLSQL_BLOCK',
        job_action      => 'BEGIN PKG_FALLOUT_CORRECTION.SCHEDULE_FALLOUT_JOBS(commitSize => 1000, cpusJobs => 4, waitTime => 2); END;',
        start_date      => SYSTIMESTAMP,
        repeat_interval => 'FREQ=HOURLY; INTERVAL=6',
        enabled         => TRUE
      );
    END;
    /

- On-Demand REST API Call: In this approach, the REBUILD action on the fallout events in PENDING state is invoked on demand through the REST API:

    - POST - fallout/events/rebuild – To rebuild the fallout events on demand, as and when required.

    - DELETE - fallout/events/scheduledJobs – To drop any running or previously scheduled jobs.

Performing RESUBMIT Action

The Resubmit action is performed through a REST call. It takes the fallout events in READY_TO_RESUBMIT (post Rebuild) and PENDING states, based on the query parameters, and pushes the events into the ora-retry-topology topic:

POST - fallout/events/resubmit?targetService=ATA – To resubmit the fallout events on demand for the topology consumer.

For more information on the available APIs, see ATA REST API Guide.

Fallout Events Resolution for SmartSearch Consumer

Events are stored in the TOPOLOGY_FALLOUT_EVENTS table when the SmartSearch consumer service cannot process them successfully because of technical or data validation issues.

Description of the illustration fallout_res_events_ssconsumer.png

The fallout resolution for a SmartSearch consumer is as follows:

- The message producer sends the event or message to the topic (ora-uim-topology).

- The SmartSearch consumer receives the event or message from the topic (ora-uim-topology).

- It invokes the SmartSearch bulk API to persist the event or message in OpenSearch.

- If the SmartSearch bulk API or the SmartSearch consumer is not able to process the event or message, the SmartSearch consumer sends the message to the retry topic (ora-retry-smartsearch).

- The SmartSearch consumer retries the processing. If the event or message is still not processed, the SmartSearch consumer sends it to the dead-letter topic (ora-dlt-smartsearch).

- The DLT process of the SmartSearch consumer gets the event or message from the dead-letter topic and persists it in the TOPOLOGY_FALLOUT_EVENTS table.

The fallout resolution starts with analyzing the topology fallout events for the specific target service. The target service value used for the SmartSearch consumer is SSC. The resolution can be performed with the help of the fallout resolution REST APIs.

Table 8-5 Fallout Events REST APIs

| REST API | Resolution |
|---|---|
| /topology/v2/fallout/events/events/summary?targetService=SSC | Get the fallout events summary for the SmartSearch consumer. |
| /topology/v2/fallout/events/events?targetService=SSC | Get all events by matching the state and action for the SmartSearch consumer. Query Parameters: |
| /topology/v2/fallout/events/resubmit | Resubmit the matched events of the SmartSearch consumer for reprocessing. Query Parameters: |
| /topology/v2/fallout/events/eid/{ENTITY_ID} | Update a specific fallout. |
| /topology/v2/fallout/events or /topology/v2/fallout/events/eid/{ENTITY_ID} | Delete the matching fallout events for the SmartSearch consumer. The parameter values are as follows: |

For the full list of REST APIs, see REST API for ATA for Inventory and Automation.

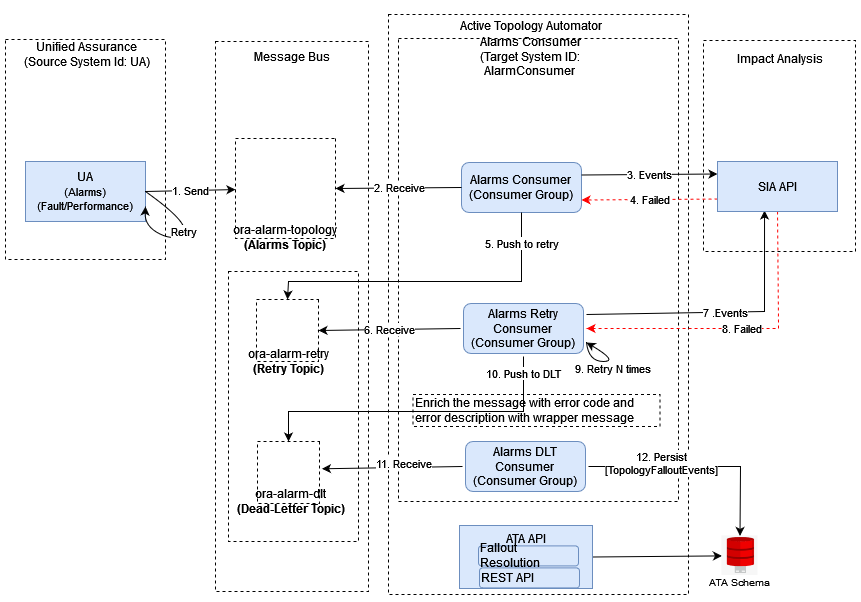

Fallout Events Resolution for Alarm Consumer

The following image illustrates an alarm event (or message) processing flow in alarm consumer.

Figure 8-3 Fallout Events Resolution for Alarm Consumer

Troubleshooting the Alarm Fallouts

Alarm fallouts are alarms that the alarm consumer could not process because of exceptions or errors that occurred during processing. The following are the major fallout scenarios:

- Incoming alarm has an invalid JSON structure or invalid TMF-642 structure.

- No device is found to which the incoming alarm can be mapped.

- Multiple devices are found for the incoming alarm.

- Processed alarm could not be forwarded to SIA API.

In these fallout scenarios, the alarm consumer is configured to retry the processing of the same alarm. This helps to address possible intermittent issues such as connectivity problems and temporary data unavailability. If the retry also results in a fallout, the fallout details are stored in the database along with the alarm information. The persisted fallout alarms can be reviewed manually. During the review, the reviewer can modify or correct the alarm data and send the fallout alarm for reprocessing.

Note:

All fallout alarms are persisted, except the alarms that have an invalid JSON structure or an invalid TMF-642 structure. These non-persisted fallout alarms are dropped from the alarm consumer, and their details cannot be verified later.
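The difference between dropped and persisted fallouts can be sketched as follows. This is a simplified illustration, not the alarm consumer's actual logic: the function and enum names are hypothetical, the check for an `alarmedObject` key is only a stand-in for real TMF-642 validation, the `find_devices` and `forward` callables are placeholders for the device lookup and the SIA API call, and the configured retries are omitted for brevity.

```python
import json
from enum import Enum
from typing import Callable, List


class Outcome(Enum):
    PROCESSED = "processed"
    DROPPED = "dropped"      # invalid structure: not persisted, cannot be reviewed
    PERSISTED = "persisted"  # stored as a fallout for manual review


def handle_alarm(
    raw: str,
    find_devices: Callable[[dict], List[str]],
    forward: Callable[[dict, str], None],
) -> Outcome:
    """Classify one incoming alarm according to the fallout scenarios listed above."""
    try:
        alarm = json.loads(raw)
    except json.JSONDecodeError:
        return Outcome.DROPPED                  # invalid JSON structure
    # Stand-in for TMF-642 structure validation (assumption for this sketch).
    if not isinstance(alarm, dict) or "alarmedObject" not in alarm:
        return Outcome.DROPPED
    devices = find_devices(alarm)
    if len(devices) != 1:
        return Outcome.PERSISTED                # no device, or multiple devices
    try:
        forward(alarm, devices[0])
    except Exception:
        return Outcome.PERSISTED                # could not be forwarded
    return Outcome.PROCESSED
```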

The TARGETSYSTEMID for all alarm fallouts of the alarm consumer is AlarmConsumer. Therefore, when you run a fallout resolution, use AlarmConsumer as the target service value.

The alarm consumer fallout alarms can be reprocessed using the fallout resolution APIs:

- To list all alarm consumer fallout alarms:

    - Method - GET

    - URI - /topology/v2/fallout/events?targetService=AlarmConsumer

- To find a specific fallout alarm:

    - Method - GET

    - URI - /topology/v2/fallout/events/eid/<eid value>

- To update fallout alarm data:

    - Method - PUT

    - URI - /topology/v2/fallout/events/eid/<eid value>

    - Request Body - Includes the fallout event details found using the above-mentioned APIs.

- To send for reprocessing:

    - Method - PUT

    - URI - /topology/v2/fallout/events/resubmit?state=PENDING&action=NEW&targetService=AlarmConsumer
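As an illustration, the four calls above can be prepared with the Python standard library. This is a minimal sketch under stated assumptions: `BASE_URL` is a hypothetical host, the helper names are invented for the example, authentication headers are omitted, and the shape of the update body is assumed to be the JSON returned by the GET calls. The functions only build the requests; sending them with `urllib.request.urlopen` is left to the caller.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import Request

BASE_URL = "https://ata.example.com"   # hypothetical host, replace with your own
EVENTS_PATH = "/topology/v2/fallout/events"
TARGET_SERVICE = "AlarmConsumer"


def list_fallouts() -> Request:
    """GET all fallout alarms of the alarm consumer."""
    query = urlencode({"targetService": TARGET_SERVICE})
    return Request(f"{BASE_URL}{EVENTS_PATH}?{query}", method="GET")


def get_fallout(eid: str) -> Request:
    """GET one fallout alarm by its eid value."""
    return Request(f"{BASE_URL}{EVENTS_PATH}/eid/{quote(eid, safe='')}", method="GET")


def update_fallout(eid: str, event: dict) -> Request:
    """PUT the corrected fallout event details back to the fallout table."""
    return Request(
        f"{BASE_URL}{EVENTS_PATH}/eid/{quote(eid, safe='')}",
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def resubmit_pending() -> Request:
    """PUT a resubmit request for PENDING events with the NEW action."""
    query = urlencode(
        {"state": "PENDING", "action": "NEW", "targetService": TARGET_SERVICE}
    )
    return Request(f"{BASE_URL}{EVENTS_PATH}/resubmit?{query}", method="PUT")
```

A typical review loop would fetch an event with `get_fallout`, correct the alarm data in the returned JSON, send it back with `update_fallout`, and then call `resubmit_pending` to trigger reprocessing.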

ATA Support for Offline Maps

ATA support for map visualization is provided by third-party service providers such as OpenStreetMap (OSM), MapBox, Carto, Esri, and Web Map Service (WMS).

ATA integrates with these service providers and they provide the required components and computing resources, so that you can avoid setting up and maintaining a local tile server.

Oracle offers the following options to support offline maps:

- Allowlisting map URLs

- Setting up a local tile server

Allowlisting Map URLs

In highly secured installations, internet access might not be provided at the location. In such situations, Oracle recommends using an allowlist solution so that the base maps can include the streets, cities, buildings, and so on.

For the map tiles to render, allowlist the following URLs:

- Tile 1:

- http://a.tile.openstreetmap.org/11/472/824.png

- http://b.tile.openstreetmap.org/11/472/825.png

- http://c.tile.openstreetmap.org/11/472/825.png

- Tile 2: