14 Debugging and Troubleshooting

This chapter provides information about debugging and troubleshooting issues that you may face while setting up the OSM cloud native environment and creating OSM cloud native instances.

- Setting Up Java Flight Recorder (JFR)

- Troubleshooting Issues with Traefik, OSM UI, and WebLogic Administration Console

- Common Error Scenarios

- Known Issues

Setting Up Java Flight Recorder (JFR)

The Java Flight Recorder (JFR) is a tool that collects diagnostic data about running Java applications. OSM cloud native leverages JFR. See Java Platform, Standard Edition Java Flight Recorder Runtime Guide for details about JFR.

You can change the JFR settings provided with the toolkit by updating the appropriate values in the instance specification.

To analyze the output produced by the JFR, use Java Mission Control. See Java Platform, Standard Edition Java Mission Control User's Guide for details about Java Mission Control.

JFR is turned on by default in all managed servers. You can disable this feature by setting the enabled flag to false. You can also change how long recorded JFR data is retained by updating the max_age parameter in the instance specification:

```
# Java Flight Recorder (JFR) Settings
jfr:
  enabled: true
  max_age: 4h
```

Persisting JFR Data

JFR data can be persisted outside of the container by re-directing it to persistent storage through the use of a PV-PVC. See "Setting Up Persistent Storage" for details.

Once the storage has been set up, enable storageVolume and set the PVC name. Once enabled, JFR data is re-directed automatically.

```
# The storage volume must specify the PVC to be used for persistent storage.
storageVolume:
  enabled: true
  pvc: storage-pvc
```

Troubleshooting Issues with Traefik, OSM UI, and WebLogic Administration Console

This section describes how to troubleshoot issues with access to the OSM UI clients, WLST, and WebLogic Administration Console.

It is assumed that Traefik is the Ingress controller being used and that the domain name suffix is osm.org. You can modify the instructions to suit any other domain name suffix that you may have chosen.

Table 14-1 URLs for Accessing OSM Clients

| Client | If Not Using Oracle Cloud Infrastructure Load Balancer | If Using Oracle Cloud Infrastructure Load Balancer |
|---|---|---|
| OSM Task Web Client | http://instance.project.osm.org:30305/OrderManagement | http://instance.project.osm.org:80/OrderManagement |
| WLST | http://t3.instance.project.osm.org:30305 | http://t3.instance.project.osm.org:80 |
| WebLogic Admin Console | http://admin.instance.project.osm.org:30305/console | http://admin.instance.project.osm.org:80/console |

Error: Http 503 Service Unavailable (for OSM UI Clients)

This error occurs if the managed servers are not running.

To resolve this issue:

- Check the status of the managed servers and ensure that at least one managed server is up and running:

  ```
  kubectl -n project get pods
  ```

- Log into the WebLogic Admin Console, navigate to the Deployments section, and check whether the State column for oms shows Active. The value in the Targets column indicates the name of the cluster.

  If the application is not Active, check the managed server logs for errors. For example, the OSM DB connection pool may not have been created. Possible reasons include:

  - DB connectivity could not be established for reasons such as an expired password or a locked account.

  - The DB schema health check policy failed.

Resolution: Address the errors that prevent the application from becoming Active. Depending on the nature of the corrective action you take, you may have to perform one of the following procedures:

- Upgrade the instance, by running upgrade-instance.sh.

- Upgrade the domain, by running upgrade-domain.sh.

- Delete and create a new instance, by running delete-instance.sh followed by create-instance.sh.
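The first check can also be scripted. The following Python sketch reads the output of kubectl -n project get pods -o json and returns the managed server pods that are Running and report the Ready condition. The name prefix (for example, project-instance-ms) is an assumption about how your managed server pods are named. Replace it with the prefix that kubectl shows for your instance.

```python
import json
from typing import List


def ready_managed_servers(pods_json: str, name_prefix: str) -> List[str]:
    """Return the names of Running, Ready pods whose name starts with name_prefix.

    pods_json is the output of: kubectl -n project get pods -o json
    name_prefix is an assumed naming convention, for example "project-instance-ms".
    """
    ready = []
    for pod in json.loads(pods_json).get("items", []):
        name = pod["metadata"]["name"]
        if not name.startswith(name_prefix):
            continue
        status = pod.get("status", {})
        if status.get("phase") != "Running":
            continue
        # Count the pod only when its Ready condition is True
        # (shown as READY 1/1 by kubectl for a single-container pod).
        conditions = status.get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions):
            ready.append(name)
    return ready
```

An empty result means that no managed server is ready, which is the condition that produces the 503 error.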

Security Warning in Mozilla Firefox

If you use Mozilla Firefox to connect to an OSM cloud native instance via HTTP, your connection may fail with a security warning. You may notice that the URL you entered automatically changes to https://. This can happen even if HTTPS is disabled for the OSM instance. If HTTPS is enabled, it happens only if you are using a self-signed (or otherwise untrusted) certificate.

If you wish to continue connecting to the OSM instance using HTTP, open the configuration settings for your Firefox browser (URL: "about:config") and set the network.stricttransportsecurity.preloadlist parameter to false.

Error: Http 404 Page not found

This is the most common problem that you may encounter.

To resolve this issue:

- Check the Domain Name System (DNS) configuration.

  Note: These steps apply to local DNS resolution via the hosts file. For any other DNS resolution, such as corporate DNS, follow the corresponding steps.

  The hosts configuration file is located at:

  - On Windows: C:\Windows\System32\drivers\etc\hosts

  - On Linux: /etc/hosts

  Check whether the following entry exists in the hosts configuration file of the client machine from which you are trying to connect to OSM:

  - Local installation of Kubernetes without Oracle Cloud Infrastructure load balancer:

    ```
    Kubernetes_Cluster_Master_IP instance.project.osm.org t3.instance.project.osm.org admin.instance.project.osm.org
    ```

  - If Oracle Cloud Infrastructure load balancer is used:

    ```
    Load_balancer_IP instance.project.osm.org t3.instance.project.osm.org admin.instance.project.osm.org
    ```

- Check the browser settings and ensure that *.osm.org is added to the No proxy list, if your proxy cannot route to it.

- Check whether the Traefik pod is running:

  ```
  kubectl -n traefik get pod
  NAME                                READY   STATUS    RESTARTS   AGE
  traefik-operator-657b5b6d59-njxwg   1/1     Running   0          128m
  ```

  If the Traefik pod is not running, install or update the Traefik Helm chart.

- Check whether the Traefik service is running:

  ```
  kubectl -n traefik get svc
  NAME                         TYPE           CLUSTER-IP    EXTERNAL-IP    PORT(S)                      AGE
  oci-lb-service-traefik       LoadBalancer   192.0.2.1     203.0.113.25   80:31115/TCP                 20d
  traefik-operator             NodePort       192.0.2.25    <none>         443:30443/TCP,80:30305/TCP   141m
  traefik-operator-dashboard   ClusterIP      203.0.113.1   <none>         80/TCP                       141m
  ```

  The oci-lb-service-traefik service is expected in an Oracle Cloud Infrastructure environment only. If the Traefik service is not running, install or update the Traefik Helm chart.

- Check whether the Ingress is configured, by running the following command:

  ```
  kubectl -n project get ing
  NAME                       HOSTS                                                                                  ADDRESS   PORTS   AGE
  project-instance-traefik   instance.project.osm.org,t3.instance.project.osm.org,admin.instance.project.osm.org             80      89m
  ```

  If the Ingress is not configured, create it by running the following command:

  ```
  $OSM_CNTK/scripts/create-ingress.sh -p project -i instance -s specPath
  ```

- Check whether the Traefik back-end systems are registered, by using one of the following options:

  - Run the following commands to check whether your project namespace is being monitored by Traefik. If your project namespace is absent, your managed server back-end systems are not registered with Traefik.

    ```
    $ cd $OSM_CNTK
    $ source scripts/common-utils.sh
    $ find_namespace_list 'namespaces' traefik traefik-operator
    "traefik","project_1", "project_2"
    ```

  - Check the Traefik Dashboard. First, add the following DNS entry to your hosts configuration file:

    ```
    Kubernetes_Access_IP traefik.osm.org
    ```

    Add the same entry regardless of whether you are using Oracle Cloud Infrastructure load balancer or not. Navigate to http://traefik.osm.org:30305/dashboard/ and check the back-end systems that are registered. If you cannot find your project namespace, install or upgrade the Traefik Helm chart. See "Installing the Traefik Ingress Controller as Alternate (Deprecated)".

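If you manage the hosts file by hand, a check like the following Python sketch can confirm that all three OSM host names for an instance are present. It assumes the standard hosts-file format: an IP address followed by one or more host names, with # starting a comment. It also assumes the osm.org suffix used in this section. The instance and project arguments are placeholders for your own names, and the function names are hypothetical.

```python
from typing import Dict, List


def parse_hosts(text: str) -> Dict[str, str]:
    """Map each host name to the IP address on its hosts-file line (first match wins)."""
    mapping: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        if not line:
            continue
        fields = line.split()
        ip, names = fields[0], fields[1:]
        for name in names:
            mapping.setdefault(name.lower(), ip)
    return mapping


def missing_osm_hosts(text: str, instance: str, project: str, suffix: str = "osm.org") -> List[str]:
    """Return the OSM host names for instance/project that the hosts file does not map."""
    base = f"{instance}.{project}.{suffix}"
    required = [base, f"t3.{base}", f"admin.{base}"]
    hosts = parse_hosts(text)
    return [name for name in required if name not in hosts]
```

Pass the contents of /etc/hosts (or the Windows hosts file) to missing_osm_hosts(). You must add any names it returns before the OSM URLs can resolve locally.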

Reloading Instance Backend Systems

If your instance's ingress is present, yet Traefik does not recognize the URLs of your instance, try to unregister and register your project namespace again. You can do this by using the unregister-namespace.sh and register-namespace.sh scripts in the toolkit.

Note:

Unregistering a project namespace will stop access to any existing instances in that namespace that were working prior to the unregistration.

Debugging Traefik Access Logs

To increase the log level and debug Traefik access logs:

- Run the following command:

  ```
  $ helm upgrade traefik-operator traefik/traefik --version 9.11.0 --namespace traefik --reuse-values --set logs.access.enabled=true
  ```

  A new instance of the Traefik pod is created automatically.

- Look for the pod that was created most recently and follow its logs:

  ```
  $ kubectl get po -n traefik
  NAME                        READY   STATUS    RESTARTS   AGE
  traefik-operator-pod_name   1/1     Running   0          5s
  $ kubectl -n traefik logs -f traefik-operator-pod_name
  ```

- Enabling access logs generates large amounts of information in the logs. After debugging is complete, disable access logging by running the following command:

  ```
  $ helm upgrade traefik-operator traefik/traefik --version 9.11.0 --namespace traefik --reuse-values --set logs.access.enabled=false
  ```
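kubectl can sort pods itself, for example with kubectl get po -n traefik --sort-by=.metadata.creationTimestamp. If you prefer to pick the newest Traefik pod in a script, the following Python sketch does the same from the output of kubectl get po -n traefik -o json. The traefik-operator prefix matches the pod names shown above. The function name is hypothetical.

```python
import json
from datetime import datetime
from typing import Optional


def newest_pod(pods_json: str, prefix: str = "traefik-operator") -> Optional[str]:
    """Return the name of the most recently created pod whose name starts with prefix."""
    newest_name, newest_time = None, None
    for pod in json.loads(pods_json).get("items", []):
        name = pod["metadata"]["name"]
        if not name.startswith(prefix):
            continue
        # Kubernetes reports creationTimestamp in UTC, for example "2024-01-01T10:00:00Z".
        created = datetime.strptime(pod["metadata"]["creationTimestamp"], "%Y-%m-%dT%H:%M:%SZ")
        if newest_time is None or created > newest_time:
            newest_name, newest_time = name, created
    return newest_name
```

Pass the returned name to kubectl -n traefik logs -f to follow the access logs.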

Cleaning Up Traefik

Note:

Cleanup is not usually required and should be performed only as a last resort. Before cleaning up, make a note of the monitored project namespaces. Once Traefik is re-installed, run $OSM_CNTK/scripts/register-namespace.sh for each of the previously monitored project namespaces.

Warning: Uninstalling Traefik in this manner will interrupt access to all OSM instances in the monitored project namespaces.

To uninstall Traefik, run:

```
helm uninstall traefik-operator -n traefik
```

Cleaning up Traefik does not impact actively running OSM instances. However, they cannot be accessed during that time. Once the Traefik chart is re-installed with all the monitored namespaces, and those namespaces are successfully registered as Traefik back-end systems, OSM instances can be accessed again.

Setting up Logs

As described earlier in this guide, OSM and WebLogic logs can be stored in the individual pods or in a location provided via a Kubernetes Persistent Volume. The PV approach is strongly recommended, both to allow for proper preservation of logs (as pods are ephemeral) and to avoid straining the in-pod storage in Kubernetes.

Note:

Replace ms1 with the appropriate managed server or with "admin".

When a PV is configured, logs are available at the following path, starting from the root of the PV storage: project-instance/logs.

The following logs are available in the location (within the pod or in PV) based on the specification:

- admin.log: Main log file of the admin server

- admin.out: stdout from the admin server

- admin_nodemanager.log: Main log from nodemanager on the admin server

- admin_nodemanager.out: stdout from nodemanager on the admin server

- admin_access.log: Log of HTTP/HTTPS access to the admin server

- ms1.log: Main log file of the ms1 managed server

- ms1.out: stdout from the ms1 managed server

- ms1_nodemanager.log: Main log from nodemanager on the ms1 managed server

- ms1_nodemanager.out: stdout from nodemanager on the ms1 managed server

- ms1_access.log: Log of HTTP/HTTPS access to the ms1 managed server

All logs in the above list for "ms1" are repeated for each running managed server, with the logs being named for their originating managed server in each case.

In addition to these logs:

- Each configured JMS server has its own log file, named server_msn-jms_messages.log (for example: osm_jms_server_ms2-jms_messages.log).

- Each configured SAF agent has its own log file, named following the same pattern (for example: osm_saf_agent_ms1-jms_messages.log).
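To confirm that logging to the PV is working, you can compare the files in project-instance/logs with the names listed above. The following Python sketch builds the expected names for the admin server and each managed server. It assumes the managed servers are named ms1 through msN, and it does not cover the JMS server and SAF agent logs. Note that a managed server that has not started yet will not have produced its logs.

```python
from typing import Iterable, List

# Per-server log name suffixes, taken from the list above for "admin" and "ms1".
LOG_SUFFIXES = [".log", ".out", "_nodemanager.log", "_nodemanager.out", "_access.log"]


def expected_server_logs(managed_server_count: int) -> List[str]:
    """Expected log names for the admin server and managed servers ms1..msN (assumed naming)."""
    servers = ["admin"] + [f"ms{i}" for i in range(1, managed_server_count + 1)]
    return [server + suffix for server in servers for suffix in LOG_SUFFIXES]


def missing_logs(present: Iterable[str], managed_server_count: int) -> List[str]:
    """Return the expected log names that are absent from present (for example, os.listdir output)."""
    have = set(present)
    return [name for name in expected_server_logs(managed_server_count) if name not in have]
```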

OSM Cloud Native and Oracle Enterprise Manager

OSM cloud native instances contain a deployment of the Oracle Enterprise Manager application, reachable at the admin server URL with the path "/em". However, the use of Enterprise Manager in this Kubernetes context is not supported. Do not use the Enterprise Manager to monitor OSM. Use standard Kubernetes pod-based monitoring and OSM cloud native logs and metrics to monitor OSM.

Recovering an OSM Cloud Native Database Schema

When the OSM DB Installer fails during an installation, it exits with an error message. You must then find and resolve the issue that caused the failure. You can re-run the DB Installer after the issue (for example, a space or permissions issue) is rectified. You do not have to roll back the DB.

Note:

Remember to uninstall the failed DB Installer Helm chart before rerunning it. Contact Oracle Support for further assistance.

It is recommended that you always run the DB Installer with the logs directed to a Persistent Volume so that you can examine the log for errors. The log file is located at: filestore/project-instance/db-installer/{yyyy-mm-dd}-osm-db-installer.log.

In addition, to identify the operation that failed, you can look in the filestore/project-instance/db-installer/InstallPlan-OMS-CORE.csv CSV file. This file shows the progress of the DB Installer.

If the DB Installer fails while installing a new schema:

- Delete the new schema or use a new schema user name for the subsequent installation.

- Restart the installation of the database schema from the beginning.

If the DB Installer fails while upgrading an existing schema:

- Find the issue that caused the upgrade failure. See "Finding the Issue that Caused the OSM Cloud Native Database Schema Upgrade Failure" for details.

- Fix the issue. Use the information in the log or error messages to fix the issue before you restart the upgrade process. For information about troubleshooting log or error messages, see OSM Cloud Native System Administrator's Guide.

- Restart the schema upgrade procedure from the point of failure. See "Restarting the OSM Database Schema Upgrade from the Point of Failure" for details.

Finding the Issue that Caused the OSM Cloud Native Database Schema Upgrade Failure

There are several files where you can look to find information about the issue. By default, these files are generated in the managed server pod, but can be re-directed to a Persistent Volume Claim (PVC) supported by the underlying technology that you choose. See "Setting Up Persistent Storage" for details.

To access these files after the DB installer pod is deleted, re-direct all logs to the PVC.

See the following files for details about the issue:

- The database installation plan action spreadsheet file: This file contains a summary of all the installation actions that are part of this OSM database schema installation or upgrade. The actions are listed in the order that they are performed. The spreadsheet includes actions that have not yet been completed. To find the action that caused the failure, check the following files and review the Status column:

  - filestore/project-instance/db-installer/InstallPlan-OMS-CORE.csv

  - filestore/project-instance/db-installer/InstallPlan-OMS_CLOUD-CORE.csv

- The database installation log file: This file provides a more detailed description of all the installation actions that have been run for this installation. The issue that caused the failure is recorded in the filestore/project-instance/db-installer/{yyyy-mm-dd}-osm-db-installer.log file. The failed action, which is the last action that was performed, is typically listed at the end of the log file.

- semele$plan_actions: This database table contains the same information as the database plan action spreadsheet. Compare this table to the spreadsheet in cases of a database connection failure.

- semele$plan: This table contains a summary of the installation that has been performed on this OSM database schema.
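To locate the failing action quickly, the following Python sketch scans an InstallPlan CSV file. It returns the first action whose Status is not COMPLETE, and it can also list the actions left in a given status, such as STARTED. It assumes that the file has a header row with a column named Status. It also assumes the status values mentioned in this chapter (COMPLETE, FAILED, STARTED, NOT STARTED). Check the actual file layout before relying on it.

```python
import csv
import io
from typing import Dict, List, Optional, Tuple


def first_incomplete_action(plan_csv: str) -> Optional[Tuple[int, Dict[str, str]]]:
    """Return (row_number, row) for the first action whose Status is not COMPLETE.

    Assumes a header row with a column named "Status". Rows are numbered
    from 1, not counting the header row. Returns None if every action is COMPLETE.
    """
    reader = csv.DictReader(io.StringIO(plan_csv))
    for number, row in enumerate(reader, start=1):
        if (row.get("Status") or "").strip().upper() != "COMPLETE":
            return number, row
    return None


def rows_with_status(plan_csv: str, status: str) -> List[int]:
    """Return the row numbers of actions with the given Status, such as STARTED."""
    reader = csv.DictReader(io.StringIO(plan_csv))
    return [
        number
        for number, row in enumerate(reader, start=1)
        if (row.get("Status") or "").strip().upper() == status.upper()
    ]
```

Because the actions are listed in execution order, the first non-COMPLETE row is normally the point of failure. Any row still reported as STARTED needs to be checked in the database before you retry.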

Restarting the OSM Database Schema Upgrade from the Point of Failure

In most cases, restarting the OSM database schema upgrade consists of pointing the installer to the schema that was partially upgraded, and then re-running the installer.

Note:

This task requires a user with the DBA role.

- Most migration actions are part of a single transaction, which is rolled back in the event of a failure. However, some migration actions involve multiple transactions. In this case, it is possible that some changes were committed.

- Most migration actions are repeatable, which means that they can safely be re-run even if they were committed. However, if a failed action is not repeatable and it committed some changes, either reverse all the changes that were committed and set the status to FAILED, or complete the remaining changes and set the status to COMPLETE.

To restart the upgrade after a failure:

- Determine which action has failed and the reason for the failure.

- If the status of the failed action is STARTED, check the database to see whether the action is completed or still running. If it is still running, either end the session or wait for the action to finish.

  Note: The transaction might not finish immediately after the connection is lost, depending on how fast the database detects that the connection is lost and how long it takes to roll back.

- Fix the issue that caused the failure.

  Note: If the failure is caused by a software issue, contact Oracle Support. With the help of Oracle Support, determine whether the failed action modified the schema and whether you must undo any of those changes. If you decide to undo any changes, leave the action status set to FAILED or set it to NOT STARTED. When you retry the upgrade, the installer starts from this action. If you manually complete the action, set the status to COMPLETE, so that the installer starts with the next action. Do not leave the status set to STARTED, because the next attempt to upgrade will not be successful.

- Restart the upgrade by running the installer.

  The installer restarts the upgrade from the point of failure.

Resolving Improper JMS Assignment

When OSM cloud native runs with more than one managed server, the incoming orders and the resulting workload are sometimes not distributed evenly across all managed servers.

While there are multiple causes for improper distribution (including the use of an incorrect JMS connection factory to inject order creation messages), one possible cause is the improper assignment of JMS servers to managed servers. For even distribution of workload, each managed server that is running must host its corresponding JMS server.

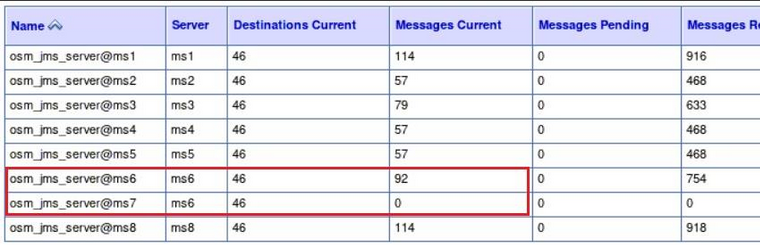

The following figure shows an example of improper JMS assignment.

Figure 14-1 Example of Improper JMS Assignment

In the figure, osm_jms_server@ms7 is incorrectly running on ms6 even though its native host ms7 is running. It can be normal for more than one JMS server to be running on a managed server as long as the additional JMS servers do not have a native managed server that is online.
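The rule described above can be expressed as a small Python check: a JMS server is misplaced only when its native managed server is online. The sketch assumes you have collected which managed server currently hosts each JMS server, for example from the WebLogic Administration Console. It also assumes that the native managed server is the part of the JMS server name after @, as in osm_jms_server@ms7. The function name is hypothetical.

```python
from typing import Dict, List, Set


def misplaced_jms_servers(placement: Dict[str, str], online: Set[str]) -> List[str]:
    """List JMS servers running away from a native managed server that is online.

    placement maps a JMS server name such as "osm_jms_server@ms7" to the managed
    server currently hosting it, for example "ms6".
    online is the set of managed servers that are currently running.
    """
    misplaced = []
    for jms_server, host in sorted(placement.items()):
        native = jms_server.rsplit("@", 1)[-1]  # assumed: native host follows "@"
        # Hosting a JMS server whose native managed server is offline is normal.
        if host != native and native in online:
            misplaced.append(jms_server)
    return misplaced
```

For the situation in Figure 14-1, osm_jms_server@ms7 running on ms6 while ms7 is online is reported. A JMS server whose native managed server is down is not reported.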

Workaround

As a workaround, terminate the Kubernetes pod for the managed server that has been left underutilized. In the above example, the pod for ms7 should be terminated. The WebLogic Operator recreates the managed server pod, and that should trigger the migration of osm_jms_server@ms7 back to ms7.

Resolution

To resolve this issue, tune the time setting for InitialBootDelaySeconds and PartialClusterStabilityDelaySeconds. See the WebLogic Server documentation for more details.

To tune the time setting:

- Add the following Clustering fragment to the instance specification:

  ```
  Clustering:
    InitialBootDelaySeconds: 10
    PartialClusterStabilityDelaySeconds: 30
  ```

- Increase the value for the following parameters from the base WDT model:

  - InitialBootDelaySeconds. The default value in base WDT is 2.

  - PartialClusterStabilityDelaySeconds. The default value in base WDT is 5.

Note:

The default values for these parameters in WebLogic Server are 60 and 240 respectively. The actual values required depend on environmental factors and must be arrived at by tuning. Higher values can result in slower placement of JMS servers. This is not a factor during OSM startup. However, when a managed server shuts down, it can take longer for its JMS server to migrate and come up on a surviving managed server. Orders with messages pending delivery in that JMS server are impacted by this, but the rest of the system is unaffected.

Common Problems and Solutions

This section describes some common problems that you may experience because you have run a script or a command erroneously or you have not properly followed the recommended procedures and guidelines regarding setting up your cloud environment, components, tools, and services in your environment. This section provides possible solutions for such problems.

Domain Introspection Pod Does Not Start

In some cases, the introspector pod does not start. This could mean that the operator is not monitoring your namespace, or that your namespace is not labeled with the label that the operator is monitoring.

For more information about operator monitoring, see: https://oracle.github.io/weblogic-kubernetes-operator/managing-operators/common-mistakes/#forgetting-to-configure-the-operator-to-monitor-a-namespace

Domain Introspection Pod Status

```
kubectl get pods -n namespace

## healthy status looks like this
NAME                                           READY   STATUS    RESTARTS   AGE
project-instance-introspect-domain-job-hzh9t   1/1     Running   0          3s
```

```
NAME                                           READY   STATUS         RESTARTS   AGE
project-instance-introspect-domain-job-r2d6j   0/1     ErrImagePull   0          5s

### OR

NAME                                           READY   STATUS             RESTARTS   AGE
project-instance-introspect-domain-job-r2d6j   0/1     ImagePullBackOff   0          45s
```

This shows that the introspection pod status is not healthy. If the image can be pulled, it is possible that pulling it took a long time.

To resolve this issue, verify the image name and tag, and verify that the pod can access the image in the repository.

You can also try the following:

- Increase the value of podStartupDeadlineSeconds in the instance specification.

  Start with a very high timeout value and then monitor the average time it takes, because it depends on the speed with which the images are downloaded and how busy your cluster is. Once you have a good idea of the average time, you can reduce the timeout value to one that covers the average time plus some buffer.

- Pull the container image manually on all Kubernetes nodes where the OSM cloud native pods can be started up.
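To find pods stuck on image pulls without reading the table by eye, the following Python sketch reads the output of kubectl get pods -n namespace -o json. It reports containers whose waiting reason is ErrImagePull or ImagePullBackOff, together with the image each container is trying to pull. This helps you confirm the image name and tag that the pod is actually using. The function name is hypothetical.

```python
import json
from typing import List, Tuple

IMAGE_PULL_REASONS = {"ErrImagePull", "ImagePullBackOff"}


def image_pull_failures(pods_json: str) -> List[Tuple[str, str, str]]:
    """Return (pod name, waiting reason, image) for containers stuck on an image pull."""
    failures = []
    for pod in json.loads(pods_json).get("items", []):
        name = pod["metadata"]["name"]
        for container in pod.get("status", {}).get("containerStatuses", []):
            # A container that cannot pull its image reports it under state.waiting.reason.
            waiting = container.get("state", {}).get("waiting") or {}
            reason = waiting.get("reason", "")
            if reason in IMAGE_PULL_REASONS:
                failures.append((name, reason, container.get("image", "")))
    return failures
```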

Domain Introspection Errors Out

Sometimes, the domain introspector pod runs, but ends with an error. To check the introspector logs, run:

```
kubectl logs introspector_pod -n project
```

The following are the possible causes for this issue:

- RCU schema is pre-existing: If the logs show the following, then the RCU schema could be pre-existing:

  ```
  WLSDPLY-12409: createDomain failed to create the domain: Failed to write domain to /u01/oracle/user_projects/domains/domain: wlst.writeDomain(/u01/oracle/user_projects/domains/domain) failed : Error writing domain: 64254: Error occurred in "OPSS Processing" phase execution 64254: Encountered error: oracle.security.opss.tools.lifecycle.LifecycleException: Error during configuring DB security store. Exception oracle.security.opss.tools.lifecycle.LifecycleException: The schema FMW1_OPSS is already in use for security store(s). Please create a new schema.. 64254: Check log for more detail.
  ```

  This could happen because the database was reused or cloned from an OSM cloud native instance. If this is so, and you wish to reuse the RCU schema as well, provide the required secrets. For details, see "Reusing the Database State".

  If you do not have the secrets required to reuse the RCU instance, you must use the OSM cloud native DB Installer to create a new RCU schema in the DB. Use this new schema in your rcudbsecret. If you have login details for the old RCU users in your rcudbsecret, you can use the OSM cloud native DB Installer to delete and re-create the RCU schema in place. Either of these options gives you a clean slate for your next attempt.

  Finally, it is possible that this was a clean RCU schema but the introspector ran into an issue after RCU data population and before it could generate the wallet secret (opssWF). If this is the case, debug the introspector failure and then use the OSM cloud native DB Installer to delete and re-create the RCU schema in place before the next attempt.

- Fusion Middleware cannot access the RCU: If the introspector logs show the following error, then Fusion Middleware could not access the schema inside the RCU DB:

  ```
  WLSDPLY-12409: createDomain failed to create the domain: Failed to get FMW infrastructure database defaults from the service table: Failed to get the database defaults: Got exception when auto configuring the schema component(s) with data obtained from shadow table: Failed to build JDBC Connection object:
  ```

  Typically, this happens when wrong values are entered while creating secrets for this deployment. Less often, the cause is a corrupted or invalid RCU DB. Re-create your secrets after verifying the credentials, and drop and re-create the RCU DB.
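If you triage introspector failures often, you can match the logs against the two error signatures quoted above. The following Python sketch is a hypothetical helper that recognizes only those two cases. Any other WLSDPLY-12409 failure still needs to be read manually.

```python
from typing import List

# Signatures taken from the two introspector errors quoted above.
KNOWN_CAUSES = [
    ("is already in use for security store", "RCU schema is pre-existing"),
    ("Failed to build JDBC Connection object", "Fusion Middleware cannot access the RCU"),
]


def diagnose_introspector_log(log_text: str) -> List[str]:
    """Return the known causes whose signature appears in a WLSDPLY-12409 failure."""
    if "WLSDPLY-12409" not in log_text:
        return []
    return [cause for signature, cause in KNOWN_CAUSES if signature in log_text]
```

For example, save the output of kubectl logs introspector_pod -n project to a file and pass its contents to diagnose_introspector_log().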

Recovery After Introspection Error

If the introspection fails during instance creation, gather the required information and find a solution. Then delete the instance and re-run the instance creation script after fixing the cause of the failure, whether in the specification, the model extension, or the environment.

If the introspection fails while upgrading a running instance, then do the following:

- Make the change to fix the introspection failure and trigger an instance upgrade. If this results in successful introspection, the recovery process stops here.

- If the instance upgrade in the previous step fails to trigger a fresh introspection, do the following:

  - Roll back to the last good Helm release. First, run the following command to identify the version in the output that matches the running instance (that is, the version before the upgrade that led to the introspection failure). The timestamp on each version helps you identify the version.

    ```
    helm history -n project project-instance
    ```

    Once you know the "good" version, roll back to that version by running:

    ```
    helm rollback -n project project-instance version
    ```

    Monitor the pods in the instance to ensure that only the Admin Server and the appropriate number of Managed Server pods are running.

  - Upgrade the instance with the fixed specification.

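Helm can print the release history as JSON (helm history -n project project-instance -o json), so you can pick the rollback target with a script. The following Python sketch returns the newest revision older than the one created by the failing upgrade, and it skips revisions that Helm itself marked as failed. You still need to identify the failing revision from the timestamps, as described above, and confirm the result before running helm rollback. The function name is hypothetical.

```python
import json
from typing import Optional


def last_revision_before(history_json: str, bad_revision: int) -> Optional[int]:
    """Return the newest non-failed Helm revision older than bad_revision.

    history_json is the output of: helm history -n project project-instance -o json
    bad_revision is the revision created by the upgrade that broke introspection.
    """
    candidates = [
        entry["revision"]
        for entry in json.loads(history_json)
        if entry["revision"] < bad_revision and entry.get("status") != "failed"
    ]
    return max(candidates) if candidates else None
```

If the function returns 2, for example, the corresponding command is helm rollback -n project project-instance 2.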

Instance Deletion Errors with Timeout

You use the delete-instance.sh script to delete an instance that is no longer required. The script attempts to do this in a graceful manner and is configured to wait up to 10 minutes for any running pods to shut down. If the pods remain after this time, the script times out and exits with an error after showing the details of the leftover pods.

The leftover pods can be OSM pods (Admin Server, Managed Server) or the DBInstaller pod.

For the leftover OSM pods, see the WKO logs to identify why cleanup has not run. Delete the pods manually if necessary, using the kubectl delete commands.

A leftover DBInstaller pod occurs only if install-osmdb.sh was interrupted or failed in its last run. This should have been identified and handled at that time. However, to complete the cleanup, run helm ls -n project to find the failed DBInstaller release, and then run helm uninstall -n project release. Monitor the pods in the project namespace until the DBInstaller pod disappears.

OSM Cloud Native Toolkit Instance Create and Update Scripts Timeout; Pods Show Readiness "0/1"

If your create-instance.sh or upgrade-instance.sh scripts timeout, and you see that the desired managed server pods are present, but one or more of them show "0/1" in the "READY" column, this could be because OSM hit a fatal problem while starting up. The following could be the causes for this issue:

- A mismatch between the OSM schema version found and the expected version: If this is the case, the OSM managed server log shows the following issue:

  ```
  Error: The OSM application is not compatible with the schema code detected in the OSM database. Expected version[7.4.0.0.68], found version[7.4.0.0.70] This likely means that a recent installation or upgrade was not successful. Please check your install/upgrade error log and take steps to ensure the schema is at the correct version.
  ```

  To resolve this issue, check the container images used for the DB installer and the OSM domain instances. They should match.

- OSM internal users are missing: This can happen if issues with the configuration of the external authentication provider prevent the standard OSM users (for example, oms-internal) and their group associations from loading. The managed server log shows something like the following:

  ```
  <Error> <Deployer> <BEA-149205> <Failed to initialize the application "oms" due to error weblogic.management.DeploymentException: The ApplicationLifecycleListener "com.mslv.oms.j2ee.LifecycleListener" of application "oms" has a run-as user configured with the principal name "oms-internal" but a principal of that name could not be found. Ensure that a user with that name exists.
  ```

  To resolve this issue, review your external authentication system to validate users and groups. Review your configuration to ensure that the instance is configured for the correct external authenticator.
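The expected and found versions in the mismatch message show which side is out of step. The following Python sketch extracts both versions from the managed server log and compares them numerically. The function names are hypothetical. Reading a newer found version as a schema installed by a newer DB installer image is an interpretation; the message itself does not state this.

```python
import re
from typing import Optional, Tuple

VERSION_RE = re.compile(r"Expected version\[([\d.]+)\], found version\[([\d.]+)\]")


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn "7.4.0.0.68" into (7, 4, 0, 0, 68) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def schema_mismatch(log_text: str) -> Optional[str]:
    """Describe the schema/application version mismatch, or return None if there is none."""
    match = VERSION_RE.search(log_text)
    if not match:
        return None
    expected, found = match.groups()
    if parse_version(found) > parse_version(expected):
        return f"schema {found} is newer than the application ({expected})"
    if parse_version(found) < parse_version(expected):
        return f"schema {found} is older than the application ({expected})"
    return None
```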

OSM Cloud Native Pods Do Not Distribute Evenly Across Worker Nodes

On some occasions, OSM cloud native pods do not distribute evenly across the worker nodes. To help with this, you can pre-pull the OSM cloud native image onto the worker nodes by running the sample image-primer.sh script:

```
$ $OSM_CNTK/samples/image-primer.sh -p project image-name:image-tag
```

Run the script once for each image name+tag combination, regardless of which project uses that image or how many instances are created with it.

This script is offered as a sample and may need to be customized for your environment. If you are using an image from a repository that requires pull credentials, edit the image-primer.sh script to uncomment these lines and add your pull secret:

```
#imagePullSecrets:
#- name: secret-name
```

If you are planning to target OSM cloud native to specific worker nodes, edit the sample to ensure that only those nodes are selected (typically by using a specific label value), as per standard Kubernetes configuration. See the Kubernetes documentation for DaemonSet objects.

User Workgroup Association Lost

During cartridge deployment, if users are not present in LDAP or if LDAP is not accessible, the user workgroup associations could get deleted.

To resolve this issue, restore the connectivity to LDAP and the users. You may need to redo the workgroup associations.

Changing the WebLogic Kubernetes Operator Log Level

Some situations, such as certain kinds of introspection failures or unexpected behavior from the operator, may require analysis of the WKO logs. The default log level for the operator is INFO.

For information about changing the log level for debugging, see the documentation at: https://oracle.github.io/weblogic-kubernetes-operator/managing-operators/troubleshooting/#operator-and-conversion-webhook-logging-level.

Deleting and Re-creating the WLS Operator

You may need to delete a WLS operator and re-create it. You do this when you want to use a new version of the operator where upgrade is not possible, or when the installation is corrupted.

When the controlling operator is removed, the existing OSM cloud native instances continue to function. However, they cannot process any updates (when you run upgrade-instance.sh) or respond to the Kubernetes events such as the termination of a pod.

To avoid common mistakes during the installation of WKO, refer to the WKO troubleshooting information at: https://oracle.github.io/weblogic-kubernetes-operator/managing-operators/common-mistakes/#forgetting-to-configure-the-operator-to-monitor-a-namespace.

To uninstall WKO, follow the steps in WKO documentation at: https://oracle.github.io/weblogic-kubernetes-operator/managing-operators/installation/#uninstall-the-operator.

Re-register your namespaces using the register-namespace.sh and unregister-namespace.sh scripts in the cloud native toolkit.

You can install the operator by following the instructions in WKO documentation at: https://oracle.github.io/weblogic-kubernetes-operator/managing-operators/installation/#install-the-operator. Then, register all the projects again, one by one. See "Registering the Namespace" for details.

Lost or Missing opssWF and opssWP Contents

For an OSM instance to successfully connect to a previously initialized set of DB schemas, it needs to have the opssWF (OPSS Wallet File) and opssWP (OPSS Wallet-file Password) secrets in place. The $OSM_CNTK/scripts/manage-instance-credentials.sh script can be used to set these up if they are not already present.

If these secrets or their contents are lost, you can delete and re-create the RCU schemas by running $OSM_CNTK/scripts/install-osmdb.sh with command code 5. This deletes the data stored in the RCU schemas, such as some user preferences.

Alternatively, if a WebLogic domain is currently running against that DB (or its clone), you can run the "exportEncryptionKey" offline WLST command to dump out the "ewallet.p12" file. This command also takes a new encryption password. For details, see WLST Command Reference for Infrastructure Security in the Oracle Fusion Middleware documentation. Use the contents of the resulting ewallet.p12 file to re-create the opssWF secret, and the encryption password to re-create the opssWP secret. This method is also suitable when a DB (or the clone of a DB) from a traditional OSM installation needs to be brought into OSM cloud native.

Clock Skew or Delay

When submitting a JMS message over the Web Service queue, you might see the following error in the SOAP response:

Security token failed to validate.
weblogic.xml.crypto.wss.SecurityTokenValidateResult@5f1aec15[status: false][msg UNT Error:Message older than allowed MessageAge]

Oracle recommends synchronizing the time across all machines that are involved in communication. See "Synchronizing Time Across Servers" for more details. Implement Network Time Protocol (NTP) across the hosts involved, including the Kubernetes cluster hosts.
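The temporary workaround described next sets both tolerances to 72000000. Assuming both properties take milliseconds (the unit WebLogic uses for the clock skew setting), that value corresponds to 20 hours. The sketch below derives the two options from a tolerance in hours; the function name is hypothetical.

```python
# 1 hour = 60 min * 60 s * 1000 ms
MS_PER_HOUR = 60 * 60 * 1000


def clock_skew_java_options(hours):
    """Build the clock-skew workaround options for a tolerance given in hours.

    Both properties are assumed to take milliseconds.
    """
    ms = int(hours * MS_PER_HOUR)
    return (f"-Dweblogic.wsee.security.clock.skew={ms} "
            f"-Dweblogic.wsee.security.delay.max={ms}")


print(clock_skew_java_options(20))
# -Dweblogic.wsee.security.clock.skew=72000000 -Dweblogic.wsee.security.delay.max=72000000
```

A smaller tolerance narrows the window in which stale messages are accepted, which is why synchronizing clocks with NTP remains the preferred fix.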

It is also possible to work around this temporarily by adding the following properties to java_options in the project specification. Setting them at the project level applies them to all managed servers:

managedServers:
  project:
    #JAVA_OPTIONS for all managed servers at project level
    java_options: -Dweblogic.wsee.security.clock.skew=72000000 -Dweblogic.wsee.security.delay.max=72000000

Known Issues

This section describes known issues that you may come across, their causes, and the resolutions.

The OSM Email plugin is currently not supported. Users who require this capability can create their own plugin for this purpose.

SituationalConfig NullPointerException

In the managed server logs, you might notice a stack trace that indicates a NullPointerException in situational configuration.

This exception can be safely ignored.

Connectivity Issues During Cluster Re-size

When the cluster size changes, whether from the termination and re-creation of a pod, through an explicit upgrade to the cluster size, or due to a rolling restart, transient errors are logged as the system adjusts.

These transient errors can usually be ignored and stop after the cluster has stabilized with the correct number of Managed Servers in the Ready state.

If the error messages persist after the Ready state is reached, look for secondary symptoms of a real problem, such as orders that are inexplicably stuck or are otherwise processing abnormally.

Examples of these connectivity errors include:

- An MDB is unable to connect to a JMS destination. The specific MDB and JMS destination can vary, for example:
  - <The Message-Driven EJB OSMInternalEventsMDB is unable to connect to the JMS destination mslv/oms/oms1/internal/jms/events.
  - <The Message-Driven EJB DeployCartridgeMDB is unable to connect to the JMS destination mslv/provisioning/internal/ejb/deployCartridgeQueue.
- Failed to Initialize JNDI context. Connection refused; No available router to destination. This type of error is seen in an instance where SAF is configured.
- Failed to process events for event type[AutomationEvents].
- Consumer destination was closed.
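To tell transient churn from a persistent problem, one option is to count the error signatures listed above in log lines written after every managed server reports Ready. The following is a minimal sketch; the signature names are hypothetical labels, and how you collect the log lines (for example, from kubectl logs for a managed server pod) is left to you.

```python
import re

# Signatures of the connectivity errors listed above, keyed by a hypothetical label.
TRANSIENT_PATTERNS = {
    "mdb_jms_connect": re.compile(
        r"Message-Driven EJB \S+ is unable to connect to the JMS destination"),
    "jndi_init": re.compile(r"Failed to Initialize JNDI context"),
    "automation_events": re.compile(
        r"Failed to process events for event type\[AutomationEvents\]"),
    "consumer_closed": re.compile(r"Consumer destination was closed"),
}


def count_transient_errors(lines):
    """Count how many log lines match each connectivity error signature.

    Non-zero counts in logs written after the cluster is Ready suggest a real
    problem rather than the adjustment that follows a cluster re-size.
    """
    counts = {name: 0 for name in TRANSIENT_PATTERNS}
    for line in lines:
        for name, pattern in TRANSIENT_PATTERNS.items():
            if pattern.search(line):
                counts[name] += 1
    return counts
```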

Upgrade Instance failed with spec.persistentvolumesource: Forbidden: is immutable after creation.

Error: UPGRADE FAILED: cannot patch "<project>-<instance>-nfs-pv" with kind PersistentVolume: PersistentVolume "<project>-<instance>-nfs-pv" is invalid: spec.persistentvolumesource: Forbidden: is immutable after creation
Error in upgrading osm helm chart

This error occurs when you change the NFS values of an existing instance, because the source of a Kubernetes PersistentVolume cannot be changed after the PV is created. To apply new NFS values:

- Disable NFS by setting the nfs.enabled parameter to false and run the upgrade-instance script. This removes the PV from the instance.
- Enable NFS again by setting nfs.enabled to true with the new NFS values, and then run the upgrade-instance script.
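The decision behind the two-step procedure can be sketched as follows. The dictionaries stand in for the nfs section of the instance specification; only the enabled key comes from this document, while the server and path keys are assumed names used for illustration. Each returned dictionary corresponds to one run of the upgrade-instance script.

```python
def nfs_change_needs_two_passes(current, desired):
    """Return True if an NFS change must go through disable, then re-enable.

    The PV source is immutable, so changing the (assumed) server or path
    values while NFS stays enabled makes a single upgrade fail.
    """
    if not (current.get("enabled") and desired.get("enabled")):
        return False  # only enabling or only disabling NFS: one upgrade suffices
    return any(current.get(k) != desired.get(k) for k in ("server", "path"))


def nfs_upgrade_passes(current, desired):
    """List the nfs settings to apply, one upgrade-instance run per entry."""
    if nfs_change_needs_two_passes(current, desired):
        # First pass removes the PV; second pass creates it with the new values.
        return [{**current, "enabled": False}, desired]
    return [desired]
```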

JMS Servers for Managed Servers are Reassigned to Remaining Managed Servers

When the cluster is scaled down, the JMS servers of managed servers that no longer exist are reassigned to the remaining managed servers, as the following log excerpts show.

<Jun 15, 2020 11:01:32,821 AM UTC> <Info> <oracle.communications.ordermanagement.automation.plugin.JMSDestinationListener> <BEA-000000> <
All local JMS destinations: ms1
JNDI JMS Server WLS Server Migratable Target Local Member Type Partition
---------------------------------------------------------------------------- ------------------ ---------- ----------------- -------- ----------------------------- ---------
osm_jms_server@ms1@mslv/oms/oms1/internal/jms/events osm_jms_server@ms1 ms1 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN
osm_jms_server@ms1@oracle.communications.ordermanagement.SimpleResponseQueue osm_jms_server@ms1 ms1 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN

Here, osm_jms_server@ms1 is targeting ms1.

<Jun 15, 2020 11:02:20,461 AM UTC> <Info> <oracle.communications.ordermanagement.automation.plugin.JMSDestinationListener> <BEA-000000> <
All local JMS destinations: ms1
JNDI JMS Server WLS Server Migratable Target Local Member Type Partition
---------------------------------------------------------------------------- ------------------ ---------- ----------------- -------- ----------------------------- ---------
osm_jms_server@ms1@mslv/oms/oms1/internal/jms/events osm_jms_server@ms1 ms1 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN
osm_jms_server@ms1@oracle.communications.ordermanagement.SimpleResponseQueue osm_jms_server@ms1 ms1 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN
osm_jms_server@ms2@mslv/oms/oms1/internal/jms/events osm_jms_server@ms2 ms2 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN
osm_jms_server@ms2@oracle.communications.ordermanagement.SimpleResponseQueue osm_jms_server@ms2 ms2 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN

Here, osm_jms_server@ms1 is targeting ms1 and osm_jms_server@ms2 is targeting ms2.

<Jun 15, 2020 11:02:20,461 AM UTC> <Info> <oracle.communications.ordermanagement.automation.plugin.JMSDestinationListener> <BEA-000000> <
All local JMS destinations: ms1
JNDI JMS Server WLS Server Migratable Target Local Member Type Partition
---------------------------------------------------------------------------- ------------------ ---------- ----------------- -------- ----------------------------- ---------
osm_jms_server@ms1@mslv/oms/oms1/internal/jms/events osm_jms_server@ms1 ms1 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN
osm_jms_server@ms1@oracle.communications.ordermanagement.SimpleResponseQueue osm_jms_server@ms1 ms1 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN
osm_jms_server@ms2@mslv/oms/oms1/internal/jms/events osm_jms_server@ms2 ms1 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN
osm_jms_server@ms2@oracle.communications.ordermanagement.SimpleResponseQueue osm_jms_server@ms2 ms1 true MEMBER_TYPE_CLUSTERED_DYNAMIC DOMAIN

Here, osm_jms_server@ms2 is not deleted and is targeting ms1.

This is expected behavior. It is a WebLogic feature and does not indicate an inconsistency in functionality.
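To see which JMS servers have been reassigned, you can read the JMSDestinationListener table directly. The following is a minimal sketch, assuming the column layout shown in the excerpts above (JMS server in the second column, WLS server in the third) and assuming the JMS server name ends in @ followed by its original managed server, as osm_jms_server@ms2 does. Both function names are hypothetical.

```python
def jms_server_hosts(log_block):
    """Map each JMS server to the WLS server currently hosting it.

    Parses data rows of an "All local JMS destinations" table, where each row
    starts with a JNDI name of the form <jms_server>@<destination>.
    """
    hosts = {}
    for line in log_block.splitlines():
        fields = line.split()
        # Skip the header row, the dashed separator, and non-table lines.
        if len(fields) >= 3 and fields[0].startswith(fields[1] + "@"):
            hosts[fields[1]] = fields[2]
    return hosts


def reassigned_jms_servers(hosts):
    """Return JMS servers whose original managed server differs from the host."""
    return {jms: wls for jms, wls in hosts.items()
            if jms.rsplit("@", 1)[-1] != wls}
```

Applied to the third excerpt, this reports osm_jms_server@ms2 as hosted on ms1, which is the expected result after a scale-down.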

Image Build Failure Due to OPatch Error

You may face the following error while building images using the OSM cloud native builder toolkit:

OPatch failed with error code 73
[SEVERE ] Build command failed with error: OPatch failed to restore OH '/u01/oracle'. Consult OPatch document to restore the home manually before proceeding.
UtilSession failed: ApplySession failed in system modification phase... 'ApplySession::apply failed: oracle.glcm.opatch.common.api.install.HomeOperationsException: A failure occurred while processing patch: 31676526'
Error: building at STEP "RUN /u01/oracle/OPatch/opatch apply -silent -oh /u01/oracle -nonrollbackable /tmp/imagetool/patches/p31676526_122140_Generic.zip": while running runtime: exit status 73

The root cause of this error is that Podman's default limit on the number of open files is too low for an OPatch invocation.

To resolve this error, configure the Linux operating system of the build host with a higher hard limit for open files. To check the current hard limit on the number of open files, run ulimit -n -H on the host. For more information, see Prerequisites for Creating OSM Images.
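If you want to check the limit from a script rather than an interactive shell, Python's standard resource module reports the same soft and hard limits for the current process, which normally inherits them from the shell that launched it. This is a minimal sketch; the required value is a hypothetical parameter, because this section does not state a specific limit.

```python
import resource


def nofile_limits():
    """Return the (soft, hard) open-file limits of the current process.

    The hard value corresponds to what `ulimit -n -H` reports in the launching
    shell; resource.RLIM_INFINITY means the limit is unlimited.
    """
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def hard_limit_at_least(required):
    """Return True if the hard open-file limit is unlimited or >= required."""
    _, hard = nofile_limits()
    return hard == resource.RLIM_INFINITY or hard >= required


soft, hard = nofile_limits()
print(f"open files: soft={soft} hard={hard}")
```

Run it on the build host before starting an image build, passing the target value from Prerequisites for Creating OSM Images to hard_limit_at_least.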