9 Integrating ASAP

ASAP typically receives work orders from upstream systems, which interact with ASAP using T3/T3S or HTTP/HTTPS. This chapter examines the considerations involved in integrating ASAP cloud native instances into a larger solution ecosystem.

This chapter describes the following topics and tasks:

- Integrating With ASAP Cloud Native Instances

- Applying the WebLogic Patch for External Systems

- Configuring SAF On External Systems

- Setting Up Secure Communication with SSL/TLS

Integrating With ASAP Cloud Native Instances

Functionally, the interaction requirements of ASAP do not change when ASAP is run in a cloud native environment. All of the categories of interaction that are applicable for connectivity with traditional ASAP instances are applicable and must be supported for ASAP cloud native.

Note:

Connectivity with SRT is not supported in an ASAP cloud native environment.

Connectivity Between the Building Blocks

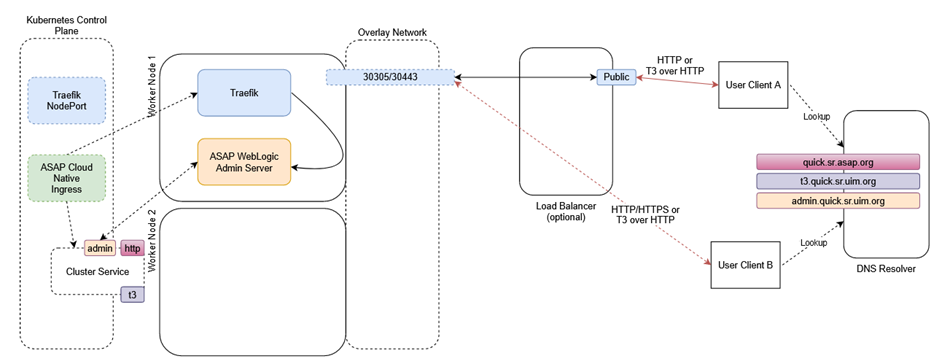

The following diagram illustrates the connectivity between the building blocks in an ASAP cloud native environment using an example:

Figure 9-1 Connectivity Between Building Blocks in ASAP Cloud Native Environment

Invoking the ASAP cloud native Helm chart creates a new ASAP instance. In the above illustration, the name of the instance is "quick" and the name of the project is "sr". The instance consists of an ASAP pod and a Kubernetes service.

The Cluster Service contains endpoints for both HTTP and T3 traffic. The instance creation script creates the ASAP cloud native Ingress object, which carries metadata that triggers the Traefik ingress controller (used here as a sample). Traefik responds by creating new front-ends with the configured "hostnames" for the cluster (quick.sr.asap.org and t3.quick.sr.asap.org in the illustration). The IngressRoute connects the hostname to the service exposed on the pod. The service is created on the ASAP WebLogic admin server port.

The prior installation of Traefik has already exposed Traefik itself using the selected port number on each worker node.

Inbound HTTP Requests

An ASAP instance is exposed outside of the Kubernetes cluster for HTTP access via an Ingress Controller and potentially a Load Balancer.

Because the Traefik port is common to all ASAP cloud native instances in the cluster, Traefik must be able to distinguish between the incoming messages headed for different instances. It does this by differentiating on the basis of the "hostname" mentioned in the HTTP messages. This means that a client (User Client B in the illustration) must believe it is talking to the "host" dev2.mobilecom.asap.org when it sends HTTP messages to the Traefik port on the access IP. The access IP might be the master node IP or the IP address of one of the worker nodes, depending on your cluster setup. The "DNS Resolver" provides this mapping.

In this mode of communication, there are concerns around resiliency and load distribution. For example, if the DNS Resolver always points to the IP address of worker node 1 when asked to resolve dev2.mobilecom.asap.org, then that worker node ends up taking all the inbound traffic for the instance. If the DNS Resolver is configured to respond to any *.mobilecom.asap.org requests with that IP, then that worker node ends up taking all the inbound traffic for all the instances. Since this latter configuration in the DNS Resolver is desired, to minimize per-instance touches, the setup creates a bottleneck on worker node 1. If worker node 1 were to fail, the DNS Resolver would have to be updated to point *.mobilecom.asap.org to worker node 2. This leads to an interruption of access and requires intervention.

The recommended pattern to avoid these concerns is to populate the DNS Resolver with all the applicable IP addresses as resolution targets (in our example, the IPs of both worker node 1 and worker node 2), and to have the Resolver return a random selection from that list.

An alternate mode of communication is to introduce a load balancer configured to balance incoming traffic to the Traefik ports on all the worker nodes. The DNS Resolver is still required, and the entry for *.mobilecom.asap.org points to the load balancer. Your load balancer documentation describes how to achieve resiliency and load management. With this setup, a user (User Client A in our example) sends a message to dev2.mobilecom.asap.org, which actually resolves to the load balancer, for instance, http://dev2.mobilecom.asap.org:8080/OrderManagement/Login.jsp. Here, 8080 is the public port of the load balancer. The load balancer sends this to Traefik, which routes the message, based on the "hostname" targeted by the message, to the HTTP channel of the ASAP cloud native instance.
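As a rough way to check hostname-based routing before the DNS Resolver is populated, curl can pin the instance hostname to a chosen access IP for a single request. The following is a minimal sketch and not part of the ASAP toolkit: the function name check_routing, the IP address 192.0.2.10, and the default port 8080 are illustrative assumptions to replace with the values of your own cluster.

```shell
#!/bin/sh
# Hypothetical helper: check_routing is not part of the ASAP toolkit.
# Usage: check_routing <hostname> <access-ip> [port]
check_routing() {
  host="$1"          # instance hostname, for example dev2.mobilecom.asap.org
  access_ip="$2"     # assumption: IP of a worker node or of the load balancer
  port="${3:-8080}"  # assumption: Traefik node port or load balancer port

  # --resolve maps host:port to access_ip for this request only, so the
  # request names the instance hostname and Traefik can select the instance.
  curl --silent --show-error --max-time 10 \
    --output /dev/null --write-out '%{http_code}\n' \
    --resolve "${host}:${port}:${access_ip}" \
    "http://${host}:${port}/"
}

# Example call (needs network access to the cluster, so it is left commented):
# check_routing dev2.mobilecom.asap.org 192.0.2.10 8080
```

Running the call once per worker node IP, and once against the load balancer, prints the HTTP status code returned through each path, which may help confirm that every resolution target the DNS Resolver could hand out is actually serving the instance.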

Note:

Access to the WebLogic Admin console is provided for review and debugging use only. Do not use the console to change the system state or configuration. Any such manual changes (whether made using the console, WLST, or other such mechanisms) are not retained in pod reschedule or reboot scenarios. The only way to change the state or configuration of the WebLogic domain or the ASAP installation is inside the ASAP image.

Inbound JMS Requests

JMS messages use the T3 protocol. Since Ingress Controllers and Load Balancers do not understand T3 for routing purposes, ASAP cloud native requires all incoming JMS traffic to be "T3 over HTTP". Hence, the messages are still HTTP but contain a T3 message as a payload. ASAP cloud native requires the clients to target the "t3 hostname" of the instance, which is t3.dev2.mobilecom.asap.org in the example. This "t3 hostname" should behave identically to the regular "hostname" in terms of the DNS Resolver and the Load Balancer. Traefik, however, identifies not only the instance this message is meant for (dev2.mobilecom) but also that the message targets the T3 channel of the instance.

The "T3 over HTTP" requirement applies to all inbound JMS messages, whether generated by direct or foreign JMS API calls or generated by SAF. The procedure in SAF QuickStart explains the setup required by the message producer or SAF agent to achieve this encapsulation. If SAF is used, the fact that T3 is riding over HTTP does not affect the semantics of JMS. All the features, such as reliable delivery, priority, and TTL, continue to be respected by the system. See "Applying the WebLogic Patch for External Systems".

An ASAP instance can be configured for secure access, which includes exposing the T3 endpoint outside the Kubernetes cluster for HTTPS access. See "Configuring Secure Incoming Access with SSL" for details on enabling SSL.

Applying the WebLogic Patch for External Systems

When an external system is configured with a SAF sender towards ASAP cloud native, using HTTP tunneling, a patch is required to ensure the SAF sender can connect to the ASAP cloud native instance. This applies regardless of whether the connection resolves to an ingress controller or to a load balancer. Each such external system that communicates with ASAP through SAF must have the WebLogic patch 30656708 installed and configured, by adding -Dweblogic.rjvm.allowUnknownHost=true to the WebLogic startup parameters.
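One possible way to add the startup parameter on an external WebLogic system is through the JAVA_OPTIONS environment variable, which the standard WebLogic domain start scripts pass to the server JVM. The sketch below is an assumption about how your domain is started, not a documented ASAP procedure. Placing it in a domain override script such as setUserOverrides.sh is likewise an assumption to verify against your WebLogic version, and servers started through Node Manager may need the flag in their server start arguments instead.

```shell
#!/bin/sh
# The flag itself comes from this section; everything else is illustrative.
ALLOW_FLAG="-Dweblogic.rjvm.allowUnknownHost=true"

# Append the flag only when it is not already present, so that sourcing
# this snippet more than once does not duplicate it.
case " ${JAVA_OPTIONS:-} " in
  *" ${ALLOW_FLAG} "*) ;;  # already set, nothing to do
  *) JAVA_OPTIONS="${JAVA_OPTIONS:+${JAVA_OPTIONS} }${ALLOW_FLAG}" ;;
esac
export JAVA_OPTIONS

echo "JAVA_OPTIONS=${JAVA_OPTIONS}"
```

The membership check keeps the snippet idempotent, which matters when override scripts are sourced by more than one start script.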

/etc/hosts file:

0.0.0.0 project-instance-ms1

0.0.0.0 project-instance-ms2

0.0.0.0 project-instance-ms3

0.0.0.0 project-instance-ms4

0.0.0.0 project-instance-ms5

0.0.0.0 project-instance-ms6

0.0.0.0 project-instance-ms7

0.0.0.0 project-instance-ms8

0.0.0.0 project-instance-ms9

0.0.0.0 project-instance-ms10

0.0.0.0 project-instance-ms11

0.0.0.0 project-instance-ms12

0.0.0.0 project-instance-ms13

0.0.0.0 project-instance-ms14

0.0.0.0 project-instance-ms15

0.0.0.0 project-instance-ms16

0.0.0.0 project-instance-ms17

0.0.0.0 project-instance-ms18

Configuring SAF On External Systems

To create SAF and JMS configuration on your external systems to communicate with the ASAP cloud native instance, use the configuration samples provided as part of the SAF sample as your guide.

The ASAP SAF agent persistent store must be a JDBC Persistent Store.

It is important to retain the "Per-JVM" and "Exactly-Once" flags as provided in the sample.

All connection factories must have the "Per-JVM" flag, as must SAF foreign destinations.

Each external queue that is configured to use SAF must have its QoS set to "Exactly-Once".

When an external system is configured with a SAF sender towards ASAP cloud native, using HTTP tunneling, you must install the WebLogic patch 30656708 on that system. You must then add the following to the WebLogic startup parameters, whether the connection resolves to an ingress controller or to a load balancer:

-Dweblogic.rjvm.allowUnknownHost=true

/etc/hosts file:

0.0.0.0 project-instance-ms1

0.0.0.0 project-instance-ms2

Enabling Domain Trust

To enable domain trust, in your domain configuration, under Advanced, set the Credential and ConfirmCredential fields to the same password that you used to create the global trust secret in ASAP cloud native.

Setting Up Secure Communication with SSL/TLS

When ASAP cloud native is involved in secure communication with other systems, you should additionally configure SSL/TLS. The configuration may involve the WebLogic domain, the ingress controller, or the URL of remote endpoints, but it always involves participating in an SSL handshake with the other system. The procedures for setting up SSL use self-signed certificates for demonstration purposes. Adapt the steps as necessary to use signed certificates.

If an external client communicates with an ASAP cloud native instance by using SSL/TLS, you should configure secure incoming access with SSL.

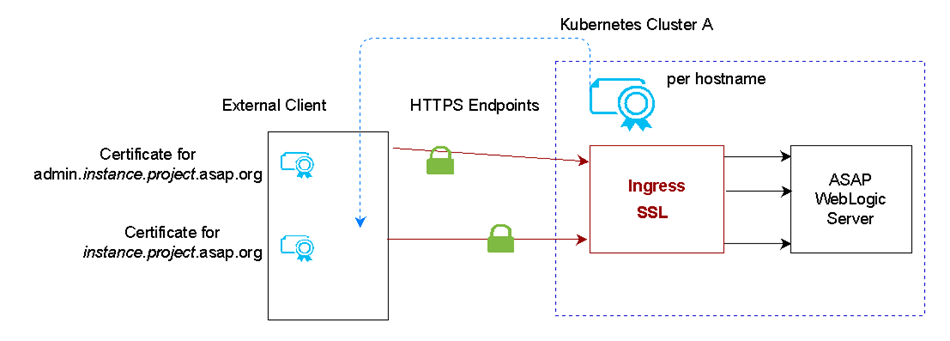

Configuring Secure Incoming Access with SSL

This section demonstrates how to secure incoming access to ASAP cloud native. In this scenario, SSL termination happens at the ingress. The traffic coming in from external clients must use one of the HTTPS endpoints. When SSL terminates at the ingress, it also means that communication within the cluster, from Traefik to the ASAP cloud native instances, is not secured.

The ASAP cloud native toolkit provides the sample configuration for Traefik ingress. If you use Voyager or another ingress controller, you can look at the $ASAP_CNTK/charts/asap/templates/traefik-ingress.yaml file to see what configuration is applied.

Generating SSL Certificates for Incoming Access

The following illustration shows when certificates are generated.

When ASAP cloud native dictates secure communication, then it is responsible for generating the SSL certificates. These certificates must be provided to the appropriate client.

Setting Up ASAP Cloud Native for Incoming Access

Note:

Traefik 2.x moved to use IngressRoute (a CustomResourceDefinition) instead of the Ingress object. If you are using Traefik, change all references of ingress to ingressroute in the preceding and the following commands:

  name: cne1-traefik
  namespace: sr
  resourceVersion: "1661456"
  uid: d67aa1ee-8c8f-423a-9969-3f5df33216d2
spec:
  entryPoints:
  - web
  routes:
  - kind: Rule
    match: Host(<hostname>)
    services:
    - name: cne1-service
      port: 7890

To set up ASAP cloud native for incoming access:

- Generate key pairs for each hostname corresponding to an endpoint that ASAP cloud native exposes to the outside world:

  # Create a directory to save your keys and certificates. This is for sample only. Proper management policies should be used to store private keys.
  mkdir $ASAP_CNTK/charts/asap/ssl

  # Generate key and certificates
  openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout $ASAP_CNTK/charts/asap/ssl/admin.key -out $ASAP_CNTK/charts/asap/ssl/admin.crt -subj "/CN=admin.instance.project.asap.org"

  # Create secrets to hold each of the certificates. The secret name must be in the format below. Do not change the secret names
  kubectl create secret -n project tls project-instance-admin-tls-cert --key $ASAP_CNTK/charts/asap/ssl/admin.key --cert $ASAP_CNTK/charts/asap/ssl/admin.crt

- Edit the values.yaml file and set sslIncoming to true:

  ingress:
    sslIncoming: true

- After creating the instance by running the create-instance.sh script, you can validate the configuration by describing the ingress controller for your instance. You should see each of the certificates you generated, terminating one of the hostnames:

  kubectl get ingress -n project

  Once you have the name of your ingress, run the following command:

  kubectl describe ingress -n project ingress

  TLS: project-instance-admin-tls-cert terminates admin.instance.project.asap.org

Now the ASAP instance is created with the secure connection to the ingress controller.
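Before creating the Kubernetes secret, it can be useful to confirm that a generated certificate names the intended hostname and that it pairs with its private key. The following is a minimal sketch under stated assumptions: it generates a throwaway pair in a temporary directory with the same openssl req options as the procedure above, so it can run anywhere; the variable names workdir, cn, cert_info, and key_match are illustrative. To inspect your real files, point the x509 and pkey commands at the paths under $ASAP_CNTK/charts/asap/ssl instead.

```shell
#!/bin/sh
# Work in a throwaway directory so no real key material is touched.
workdir="$(mktemp -d)"
cn="admin.instance.project.asap.org"   # hostname used in the procedure above

# Same self-signed request as in the procedure, written to the temp directory.
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -keyout "$workdir/admin.key" -out "$workdir/admin.crt" \
  -subj "/CN=$cn" 2>/dev/null

# Show the subject and the expiry date of the certificate.
cert_info="$(openssl x509 -in "$workdir/admin.crt" -noout -subject -enddate)"
printf '%s\n' "$cert_info"

# Compare the public key in the certificate with the one derived from the
# private key; they are identical when the pair belongs together.
cert_pub="$(openssl x509 -in "$workdir/admin.crt" -noout -pubkey)"
key_pub="$(openssl pkey -in "$workdir/admin.key" -pubout)"
if [ "$cert_pub" = "$key_pub" ]; then
  key_match="yes"
else
  key_match="no"
fi
echo "key matches certificate: $key_match"

rm -rf "$workdir"
```

A mismatch between key and certificate is a common reason for a TLS secret that is accepted at creation time but fails at the handshake, so checking the pair first can save a debugging round trip.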

Configuring Incoming HTTP and JMS Connectivity for External Clients

This section describes how to configure incoming HTTP and JMS connectivity for external clients.

Note:

Remember to have your DNS resolution set up on any remote hosts that will connect to the ASAP cloud native instance.

Incoming HTTPS Connectivity

External Web clients that are connecting to ASAP cloud native must be configured to accept the certificates from ASAP cloud native. They will then connect using the HTTPS endpoint and port 30443.
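For a command-line client, accepting the certificate can be as simple as passing it to curl as the trust anchor. The sketch below is illustrative only: the function name check_https is hypothetical, and the hostname and certificate file in the example call are assumptions based on the sample values used earlier in this chapter.

```shell
#!/bin/sh
# Hypothetical helper: check_https is not part of the ASAP toolkit.
# Usage: check_https <hostname> <path-to-certificate> [port]
check_https() {
  host="$1"           # for example admin.instance.project.asap.org
  cert_file="$2"      # certificate obtained from ASAP cloud native
  port="${3:-30443}"  # HTTPS port named in this section

  # --cacert tells curl to verify the server against the supplied
  # certificate, so the request fails if the ingress presents a different
  # certificate or one issued for another hostname.
  curl --silent --show-error --max-time 10 \
    --cacert "$cert_file" \
    --output /dev/null --write-out '%{http_code}\n' \
    "https://${host}:${port}/"
}

# Example call (needs network access to the cluster, so it is left commented):
# check_https admin.instance.project.asap.org ./admin.crt
```

Browsers and application clients have their own trust stores, so this check only shows that the endpoint and certificate agree; each client still needs the certificate imported in its own way.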

Incoming JMS Connectivity

For external servers that are connected to ASAP cloud native through JMS queues, the certificate for the t3 endpoint needs to be copied to the host where the external client is running.

If your external WebLogic configuration uses "CustomIdentityAndJavaStandardTrust", follow these instructions to upload the certificate to the Java Standard Trust. If, however, you are using a CustomTrust, then you must upload the certificate into the custom trust keystore.

The keytool is found in the bin directory of your JDK installation. The alias should uniquely describe the environment where this certificate is from.

./keytool -importcert -v -trustcacerts -alias alias -file /path-to-copied-t3-certificate/t3.crt -keystore /path-to-jdk/jdk1.8.0_431/jre/lib/security/cacerts -storepass default_password

# For example
./keytool -importcert -v -trustcacerts -alias asapcn -file /scratch/t3.crt -keystore /jdk1.8.0_431/jre/lib/security/cacerts -storepass default_password

Debugging SSL

To debug SSL, do the following:

- Verify Hostname

- Enable SSL logging

Verifying Hostname

When the keystore is generated for the on-premises server, if the FQDN is not specified, then you may have to disable hostname verification. This is not secure and should only be done in development environments.

Edit the build_env.sh script and add the following Java options:

#JAVA_OPTIONS for all managed servers at project level
java_options: "-Dweblogic.security.SSL.ignoreHostnameVerification=true"

Enabling SSL Logging

When trying to establish the handshake between servers, it is important to enable SSL-specific logging.

Edit the build_env.sh script and append the following Java options:

project:
  #JAVA_OPTIONS for all managed servers at project level
  java_options: "-Dweblogic.StdoutDebugEnabled=true -Dssl.debug=true -Dweblogic.security.SSL.verbose=true -Dweblogic.debug.DebugSecuritySSL=true -Djavax.net.debug=ssl"