Migrate Databases with Migration Workbench

Migrate Using Database Migration Workbench User Interface

Migrating Databases with Database Migration Workbench user interface is a simple intuitive process that will guide you through setting up and moving your on-premises databases to different Oracle database solutions.

Note:

Underneath Data Migration, click on the arrow next to Getting Started to see the flow diagram for Database Migration Workbench.- Full Database: Migrates the entire database from on premise to on premises, PDB or Oracle Cloud. For step by step instructions see: Migrate a Full Database.

- Schema: Migrates only the schema, this is recommended as a starting point when migrating with Migration Workbench to ensure proper migration when performing a Full Database migration. For step by step instructions see: Migrate Selected Schema.

- Transportable Tablespace: Moves database tablespaces to either a newly created or existing PDB, providing minimum downtime. For step by step instructions see: Migrate Using Transportable Tablespace.

Note:

Transportable Tablespace from the Migration Workbench user interface is available starting with Oracle Enterprise Manager 13 Release 5 Update 5 (13.5.0.5).Migrate Using Data Pump

Data Pump provides an Export and Import way of moving data and metadata from one database to another. The following is a list of available migrations using Data Pump.

Note:

Oracle Enterprise Manager's Migration Workbench now supports authentication using Database Kerberos Credentials and Database Kerberos Keytab Credentials with Data Pump migrations. Microsoft Active Directory users can be exclusively mapped to a database account and authenticated with a Kerberos password or a Keytab file without password and human interaction. If these are configured they will appear from the credential drop down menu.Data Pump Migration for a Single Instance Database

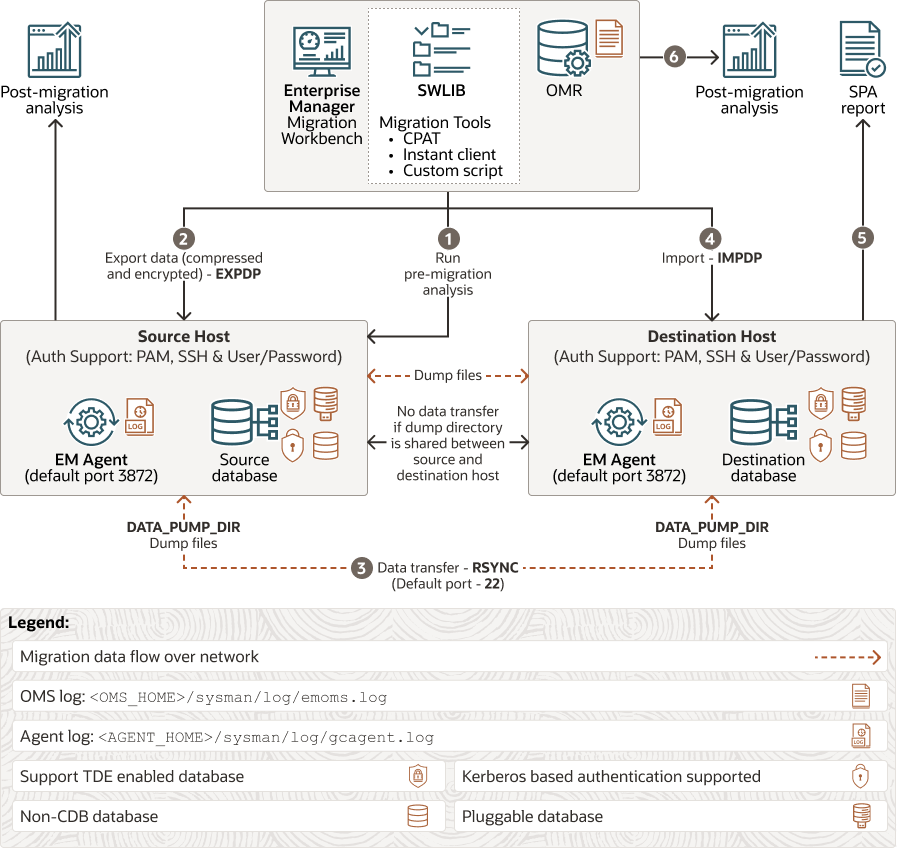

The following is a complete diagram for a single instance database performing a Data Pump migration:

Figure 3-4 Data Pump Migration for a Single Instance Database

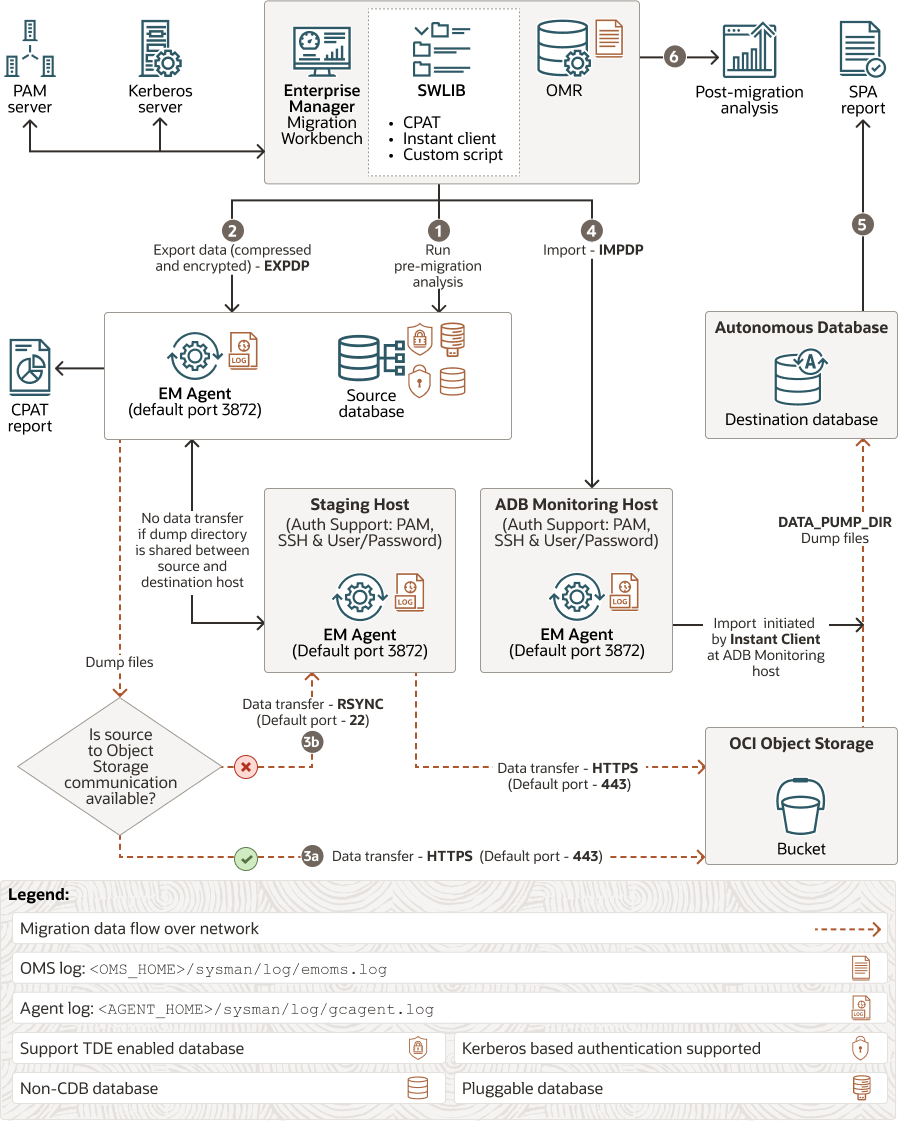

Data Pump Migration for an Autonomous Database

The following is a complete diagram for an autonomous database performing a Data Pump migration

Figure 3-5 Data Pump Migration for an Autonomous Database

Migrate a Full Database

Follow these steps to migrate a full database using Migration Workbench and Data Pump:

- From Databases, select Migration and Consolidation, then Database Migration Workbench, this loads the Database Migration screen. Select Data Pump under Migration Method.

Click Create Migration Activity.

- Enter a migration name under Name Activity. Under Migrate select Full Database.

- Select Source Database, click on the bar and select a database or search for a database.

Click on the Source Database to be migrated.

- Select Destination Database, click on the bar and select a database, search for a database or create a new PDB.

Note:

When selecting any non ADB migration you have an option to create a new PDB in the drop down menu. A dialog appears for you to enter required information for a new PDB.Click on the Destination Database that will receive the migration.

- Select Source Database, click on the bar and select a database or search for a database.

- Upload Migration Tools: (Optional) Enterprise Manager automatically downloads the latest version of the tools, however if they are not available from the Software Library for your operating system you will need to upload them, click on Upload Migration Tools. For more information see Upload Migration Tools.

- Select Upload Instant Client to upload Instance Client files to the Software Library. This is only needed for ADB migrations only.

- Select Cloud Pre-migration Advisor Tool (CPAT) to upload the CPAT files to the Software Library. For Oracle Enterprise Manager 13 Release 5 Update 3 (13.5.0.3) and below CPAT is not available, use Schema Advisor for Autonomous Database migrations.

Select whether to upload the ZIP files from a local or agent machine, click on Upload and Continue.

- The Add Details page shows a summary of the information entered:

- Source: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named).

Note:

Oracle Enterprise Manager's Migration Workbench now supports authentication using Database Kerberos Credentials and Database Kerberos Keytab Credentials with Data Pump migrations. Microsoft Active Directory users can be exclusively mapped to a database account and authenticated with a Kerberos password or a Keytab file without password and human interaction. If these are configured they will appear from the credential drop down menu. - Destination: Ensure the information is correct and select the Database Credentials and Monitoring Agent Host Credentials (Preferred or Named). If required you can specify the Service Name.

Enter the Wallet Password for TDE enabled databases.

- Action:

Note:

Do not useSYSDBAas the named credentials for Data Pump Export and Import.SYSDBAshould only be used at the request of Oracle technical support.- Select Recompile Invalid Objects After Migration to recompile all invalid objects within schema once a migration successfully completes.

- Under Source Data Pump Directory

For On Premise to ADB: Enter and confirm the Encryption Password, enter the Cloud Storage URL, Bucket Name and Credentials.

For On Premise to non ADB: Enter and confirm the Encryption Password. Cloud Storage URL and Bucket Name are not needed as the dump is passed from host to host.

- Under Database OCI Auth Credential the default cloud credential will be shown and preselected, you can select a different credential from the list if required. This field is for ADB migrations only.

- Enter and confirm the Encryption Password if required.

- Source: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named).

- In the Customize page, set the export and import options as needed:

- Export Options:

- EXCLUDE: Tables that are to be excluded and not migrated.

- ENCRYPTION: Password to encrypt/decrypt datapump dump files.

- ENCRYPTION ALGORITHM: Specifies which cryptographic algorithm should be used for encryption (AES128, AES192, AES256).

- CONTENT: Select whether to export DATA, METADATA, or both. It is recommended to first perform a METADATA only migration as a test before performing a DATA migration.

- PARALLEL: Specifies the maximum number of processes of active execution operating on behalf of the export job.

- Import Options:

- TABLE EXISTS ACTION: Action to be taken if the table exists at the destination database (skip or replace).

- DATA_OPTIONS: This parameter designates how certain types of data should be handled during import operations.

- PARALLEL: Specifies the maximum number of processes of active execution operating on behalf of the import job.

- REMAP SCHEMA: Loads all objects from the source schema into a target schema.

- REMAP TABLESPACE: Remaps all objects selected for import with persistent data in the source tablespace to be created in the target tablespace.

- TRANSFORM: Enables you to alter object creation DDL for objects being imported.

- Upload Options: Select whether a Monitoring Agent from the Source Database or a Different Agent will be used. If you select Different Agent enter the Agent, Host Credential and Stage Directory where it is located.

- Select Compare Performance After Migration to configure and allow performance and comparison analytics after migration using SQL Performance Analyzer (SPA).

Note:

When running SPA make sure to export your schema as it is needed to stage the tuning set. Failure to do so will generate an error while performing validations.SPA is not available for Metadata only Data pump migrations.

- Custom Scripts: Enter any custom Pre or Post scripts you may require for the migration. These scripts must be uploaded to the Software Library beforehand.

- Script Location: Location of the Pre/Post scripts within the Software Library.

- Pre-Migration Script: Default is No, select the script you wish to run.

- Post-Migration Script: Default is No, select the script you wish to run.

- Post-Migration SQL Script: Default is No, select the script you wish to run.

- Export Options:

- The Review & Submit page, allows you to review all information before proceeding to migrate and can run tests to ensure a proper migration. We recommend you run the Cloud Pre-Migration Advisor Tool (CPAT) by clicking Check Migration Readiness under Source. This will open a new window where CPAT will run and analyze your databases for any migration blockers. When complete, the CPAT report will show:

- Failed: Errors that will cause a fatal error resulting in an unsuccessful migration. Fix these issues before continuing with the migration.

- Action Required: Issues that will most likely cause an unsuccessful migration. Fix these issues before continuing with the migration.

- Review Required: The migration may succeed or partly succeed, however not everything may work or be exactly like at the source database causing post migration cleanup work. It is recommended to also fix these issues before migrating

- Review Suggested: Migration will succeed and applications will likely see no functional difference but Database Administrators need to evaluate each for unforeseen issues.

- Passed: CPAT is analysis indicates that migration will be successful with no difference in behavior.

Click Validate to validate the inputs to your migration activity. A pop up, where the pre-check runs will appear.

Once all issues presented by Analyze Source and Validation are resolved, click Submit to migrate.

- A pop up will require you to confirm that you have done the source analysis and when to start the migration Immediately or Later (requires a date and time when the migration will start).

Click Submit.

Migrate Selected Schema

A schema migration can be performed on a database to update or revert a database's schema to newer or older version.

- From Databases, select Migration and Consolidation, then Database Migration Workbench, this loads the Database Migration screen. Select Data Pump under Migration Method.

Click Create Migration Activity.

- Enter a migration name under Name Activity. Under Migrate select Full Database.

- Select Source Database, click on the bar and select a database or search for a database.

Click on the Source Database to be migrated.

- Select Destination Database, click on the bar and select a database or search for a database.

Note:

When selecting any non ADB migration you have an option to create a new PDB in the drop down menu. A dialog appears for you to enter required information for a new PDB.Click on the Destination Database that will receive the migration or enter the name for the new PDB to be created.

- Select Source Database, click on the bar and select a database or search for a database.

- Upload Migration Tools: (Optional) Enterprise Manager automatically downloads the latest version of the tools, however if they are not available from the Software Library for your operating system you will need to upload them, click on Upload Migration Tools. For more information see Upload Migration Tools.

- Select Upload Instant Client to upload Instance Client files to the Software Library. This is only needed for ADB migrations only.

- Select Cloud Pre-migration Advisor Tool (CPAT) to upload the CPAT files to the Software Library. For Oracle Enterprise Manager 13 Release 5 Update 3 (13.5.0.3) and below CPAT is not available, use Schema Advisor.

Select whether to upload the ZIP files from a local or agent machine, click on Upload and Continue.

- The Add Details page shows a summary of the information entered:

- Source: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named). When selecting Named credentials select the credential from the drop down menu.

Note:

Oracle Enterprise Manager's Migration Workbench now supports authentication using Database Kerberos Credentials and Database Kerberos Keytab Credentials with Data Pump migrations. Microsoft Active Directory users can be exclusively mapped to a database account and authenticated with a Kerberos password or a Keytab file without password and human interaction. If these are configured they will appear from the credential drop down menu. - Destination: Ensure the information is correct and select the Database Credentials and Monitoring Agent Host Credentials (Preferred or Named). When selecting Named credentials select the credential from the drop down menu. If required you can specify the Service Name, a default is loaded if not.

Enter the Wallet Password for TDE enabled databases.

- Action:

Note:

Do not useSYSDBAas the named credentials for Data Pump Export and Import.SYSDBAshould only be used at the request of Oracle technical support.- Select Recompile Invalid Objects After Migration to allow Migration Workbench to recompile all invalid objects within schema once a migration successfully completes. This option is unchecked by default

- Under Source Data Pump Directory

For On Premise to Autonomous Database: Enter and confirm the Encryption Password, enter the Cloud Storage URL, Bucket Name and Credentials.

For On Premise to non Autonomous Database: Enter and confirm the Encryption Password. Cloud Storage URL and Bucket Name are not needed, the process does not stage the dump files to cloud storage.

- Under Database OCI Auth Credential the default cloud credential will be shown and preselected, you can select a different credential from the list if required. This field is for ADB migrations only.

- Enter and confirm the Encryption Password if required.

- Source: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named). When selecting Named credentials select the credential from the drop down menu.

- In the Customize page, set the following:

Note:

- In schema based migrations, a combo box will appear above Export Options, select the schema to be migrated. The process will honor the sequence in which the schema are input in case there is a schema dependency.

- Select the Question mark next to the Export or Import option for more details.

- Export Options:

- EXCLUDE: Select which tables are to be excluded and not migrated.

- ENCRYPTION: Password to encrypt/decrypt datapump dump files.

- ENCRYPTION ALGORITHM: Specifies which cryptographic algorithm should be used to perform the encryption (AES128, AES192, AES256)

- CONTENT: Enables you to determine whether the migration should export DATA only, METADATA only or both. It is recommended to first perform a METADATA only migration as a test before performing a DATA migration.

- PARALLEL: Specifies the maximum number of processes of active execution operating on behalf of the export job.

- Import Options:

- TABLE EXISTS ACTION: Action to be taken if the table exists at the destination database (skip or replace).

- DATA_OPTIONS: This parameter designates how certain types of data should be handled during import operations.

- PARALLEL: Specifies the maximum number of processes of active execution operating on behalf of the import job.

- REMAP SCHEMA: Loads all objects from the source schema into a target schema.

- REMAP TABLESPACE: Remaps all objects selected for import with persistent data in the source tablespace to be created in the target tablespace.

- TRANSFORM: Enables you to alter object creation DDL for objects being imported.

- Upload Options: Select with the radio buttons whether a Monitoring Agent from the Source Database or a Different Agent will be used. If you select Different Agent enter the Agent, Host Credential and Stage Directory where it is located.

- Select Compare Performance After Migration to configure and allow performance and comparison analytics after migration using SQL Performance Analyzer (SPA).

Note:

When running SPA make sure to export your SQL Tuning set schema from where the SQL Workload is captured. It is needed to properly stage the tuning set. Failure to do so will generate an error while performing validations.SPA is not available for Metadata only Data pump migrations.

- Custom Scripts: Enter any custom Pre or Post scripts you may require for the migration. These scripts must be uploaded to the Software Library beforehand.

- Script Location: Location of the Pre/Post scripts within the Software Library.

- Pre-Migration Script: Default is No, select the script you wish to run.

- Post-Migration Script: Default is No, select the script you wish to run.

- Post-Migration SQL Script: Default is No, select the script you wish to run.

- The Review & Submit page, allows you to review all information before proceeding to migrate and can run tests to ensure a proper migration. We recommend you run the Cloud Pre-Migration Advisor Tool (CPAT) by clicking Check Migration Readiness under Source. This will open a new window where CPAT will run and analyze your databases for any migration blockers. When complete the CPAT report will show:

- Failed: Errors that will cause a fatal error resulting in an unsuccessful migration. Fix these issues before continuing with the migration.

- Action Required: Issues that will most likely cause an unsuccessful migration. Fix these issues before continuing with the migration.

- Review Required: The migration may succeed or partly succeed, however not everything may work or be exactly like at the source database causing post migration cleanup work. It is recommended to also fix these issues before migrating

- Informational: Migration will succeed and applications will likely see no functional difference but Database Administrators need to evaluate each for unforseen issues.

- Passed: CPAT is analysis indicates that migration will be successful with no difference in behavior.

Note:

Cloud Pre-Migration Advisor Tool (CPAT) is available on Oracle Enterprise Manager 13 Release 5 Update 4 (13.5.0.4) and onward. If you are using an earlier version Schema Advisor needs to be used instead.Validate your migration inputs, click the Validate button. A pop up, where the pre-check runs will appear.

Once all issues presented by Check Migration Readiness and Validation are resolved, click Submit to migrate.

- A pop up will require you to confirm that you have done the Check Migration Readiness and when to start the migration Immediately or Later (requires a date and time when the migration will start).

Click Submit.

Migrate Using Transportable Tablespace

Transportable Tablespace provides a fast and efficient mechanism to move large volumes of data from one database system to another using a Near Zero Downtime (NZDT) way to create a destination database. This is achieved by using full and incremental backups of the source database, while retaining the original source database.

Note:

Migration Workbench Transportable Tablespace migrations are available starting with Oracle Enterprise Manager 13 Release 5 Update 5 (13.5.0.5).Destination databases can be both a non-CDB as well as PDB starting with Oracle Enterprise Manager 13 Release 5 Update 10 (13.5.0.10).

The pre-migration analyzer (migration readiness check) will run as part of a Transportable Tablespace deployment procedure starting with Oracle Enterprise Manager 13 Release 5 Update 15 (13.5.0.15)

Migrate a Full Database with Transportable Tablespace

This method performs a full database migration on-premises to on-premises using TTS instead of Data Pump.

- Click on Databases, select Migration and Consolidation then Database Migration Workbench, this loads the Database Migration screen.

Click Create Migration Activity.

- Enter a migration name under Name Activity. Under Migrate select Full Database.

- Select Source Database, click on the bar and select a database or search for a database.

Click on the Source Database to be migrated.

- Select Destination Database, click on the bar and select a database or search for a database. You also have an option to create a new PDB in the drop down menu.

A dialog appears for you to enter required information for a new PDB:

- Container Database

- PDB Name

- Administrator Name

- Administrator Password

- Confirm Password

Click on the Destination Database that will receive the migration.

- Select Source Database, click on the bar and select a database or search for a database.

- The Add Details page shows a summary of the information entered:

- Source: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named).

Starting with Oracle Enterprise Manager 13.5 Release Update 12 (13.5.0.12) PDB administrator can be used as a named credential for a new PDB.

For Transportable Tablespace migration use SYSDBA for the Database Credential, and use the Oracle Home Owner for the Host credential.Enter the Wallet Password for TDE enabled databases.

- Destination: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named).

For Transportable Tablespace migration use SYSDBA for the Database Credential, and use the Oracle Home Owner for the Host credential.

Enter the Wallet Password for TDE enabled databases.

- Action:

- Select Compare Performance After Migration to run SQL Tuning Set (STS) after migration.

- Enter the Source Working Directory, use the default or a directory of your choice.

- Enter the Destination Working Directory, use the default or a directory of your choice.

- Enter the Destination Storage Directory, supported storage is Filesystem and Oracle Automatic Storage Management (ASM).

- Enter and confirm the Encryption Password for the metadata dumps.

- Source: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named).

- In the Customize page, set the following:

- Migration Details: Select the Tablespaces to be Migrated. By default all Tablespaces will be migrated.

- Compare Performance After Migration: Select whether to use a new SQL Tuning Set (STS) or use an existing and set for how many days STS will run.

- Custom Scripts: Enter any custom Pre or Post scripts you may require for the migration. These scripts must be uploaded to the Software Library beforehand.

- Script Location: Location of the Pre/Post scripts within the Software Library.

- Pre-Migration Script: Default is No, select the script you wish to run.

- Post-Migration Script: Default is No, select the script you wish to run.

- Post-Migration SQL Script: Default is No, select the script you wish to run.

- The Review & Submit page, allows you to review all information before proceeding to migrate, can run validation tests, and presents a report to ensure a proper migration.

Starting with Oracle Enterprise Manager 13.5 Release Update 12 (13.5.0.12) you can perform a Migration Readiness Check analysis before starting a TTS migration.

To analyze your migration activity inputs and view the report, click the Check Migration Readiness button.

The Migration Readiness Report shows the following information:

- The Summary section shows the Status for the analysis and Recommendation. For further details you can click on Database Detail and Analyzed Tablespaces.

- The Results Breakdown by Severity shows a bar graph with the number of issues found grouped by severity. These are presented left to right from most serious to non-issue, these are:

- Failed: The source and destination databases selected are not compatible or suitable for migration and need to be changed or the particular validation could not be completed due to internal errors.

- Action Required: Validation failed, review and take action to resolve the issue. The migration will fail if the issue is not corrected before submission.

- Review Required: Warning message, review and if necessary take action to correct. Migration may be impacted.

- Review Suggested: Informational message, read and review.

- Passed: Validation succeeded, you can proceed with the migration.

- The lower section shows the Result Detail, to view in detail issues from a specific severity field click on the arrow to expand each field. To view the specific details for an issue, click the arrow to expand the issue details.

Next click Validate to analyze and validate the migration activity inputs.

Once all issues presented by the Migration Readiness Report are resolved, and the migration activity inputs have been validated click Submit

- A pop up will require you to confirm when to start the migration Immediately or Later (requires a date and time when the migration will start).

Click Submit.

Perform a Multiphase Migration with Transportable Tablespace

This section will discuss the multiphase migration approach by performing incremental backups of the source database, while retaining the original target database.

When performing a Multiphase Migration with Transportable Tablespace (TTS) you have more control over the backup phases unlike a a Transportable Tablespace Single phase Migration. A Transportable Tablespace Single phase Migration is performed in one go, starting at the scheduled time and running until complete. In a TTS Multiphase Migration, you can take an initial backup, return at any other time for an incremental backup by selecting Update Backup, or simply initiate the final migration step by selecting Complete Migration. This feature is available starting with Oracle Enterprise Manager 13 Release 5 Update 7 (13.5.0.7).

Creating the Initial Backup

- Click on Databases, select Migration and Consolidation then Database Migration Workbench, this loads the Database Migration screen. Select Transportable Tablespace under Migration Method.

Click Create Migration Activity.

- Enter a migration name under Name Activity. Under Migrate select Full Database.

- Select Source Database, click on the bar and select a database or search for a database.

Click on the Source Database to be migrated.

- Select Destination Database, click on the bar and select a database or search for a database.

Note:

When selecting any non CDB migration you have an option to create a new PDB in the drop down menu. A dialog appears for you to enter required information for a new PDB.Click on the Destination Database that will receive the migration.

- Select Source Database, click on the bar and select a database or search for a database.

- The Add Details page shows a summary of the information entered:

- Source: Ensure the information is correct, select the Agent host credentials and database credentials (Preferred or Named).

Starting with Oracle Enterprise Manager 13.5 Release Update 12 (13.5.0.12) PDB administrator can be used as a named credential for a new PDB.

For Transportable Tablespace migration use SYSDBA for the database credential, and use the Oracle Home Owner for the host credential.

Enter the Wallet Password for TDE enabled databases.

- Destination: Ensure the information is correct, select the Agent host credentials and database credentials (Preferred or Named).

For Transportable Tablespace migration use SYSDBA for the database credential, and use the Oracle Home Owner for the host credential.

Enter the Wallet Password for TDE enabled databases.

- Action:

- Select Compare Performance After Migration to run SQL Tuning Set (STS) after migration.

- Enter the Source Working Directory, use the default or a directory of your choice.

- Enter the Destination Working Directory, use the default or a directory of your choice.

- Enter the Destination Storage Directory, supported storage is Filesystem and Oracle Automatic Storage Management (ASM).

- Enter and confirm the Encryption Password for the metadata dumps.

- Source: Ensure the information is correct, select the Agent host credentials and database credentials (Preferred or Named).

- In the Customize page, set the following:

- Migration Details:

- Select the Tablespaces to be Migrated or select All Tablespaces to migrate the entire set.

- Under Migration Phase select Create Backup.

- Compare Performance After Migration: Select whether to use a new SQL Tuning Set (STS) or use an existing and set for how many days STS will run.

- Custom Scripts: Enter any custom Pre or Post scripts you may require for the migration. These scripts must be uploaded to the Software Library beforehand.

- Script Location: Location of the Pre/Post scripts within the Software Library.

- Pre-Migration Script: Default is No, select the script you wish to run.

- Post-Migration Script: Default is No, select the script you wish to run.

- Post-Migration SQL Script: Default is No, select the script you wish to run.

- Migration Details:

- The Review & Submit page, allows you to review all information before proceeding to migrate, can run validation tests, and presents a report to ensure a proper migration.

Starting with Oracle Enterprise Manager 13.5 Release Update 12 (13.5.0.12) you can perform a pre-migration analysis before starting a TTS migration. To analyze your migration activity inputs and view the report, click the Check Migration Readiness button.

The Pre-Migration Report shows the following information:

- The Summary section shows the Status for the analysis and Recommendation. For further details you can click on Database Detail and Analyzed Tablespaces.

- The Results Breakdown by Severity shows a bar graph with the number of issues found grouped by severity. These are presented left to right from most serious to non-issue, these are:

- Failed: The source and destination databases selected are not compatible or suitable for migration and need to be changed or the particular validation could not be completed due to internal errors.

- Action Required: Validation failed, review and take action to resolve the issue. The migration will fail if the issue is not corrected before submission.

- Review Required: Warning message, review and if necessary take action to correct. Migration may be impacted.

- Review Suggested: Informational message, read and review.

- Passed: Validation succeeded, you can proceed with the migration.

- The lower section shows the Result Detail, to view in detail issues from a specific severity field click on the arrow to expand each field. To view the specific details for an issue, click the arrow to expand the issue details.

Once all issues presented by the Pre-migration Report are resolved, click Submit to migrate.

- A pop up will require you to confirm when to start the migration Immediately or Later (requires a date and time when the migration will start).

Click Submit.

Complete a Multiphase Transportable Tablespace Migration

After submitting a Create Backup migration you can perform an incremental backup or complete the multiphase migration.

- Once the backup completes, click on Enterprise from the main menu bar, select Migration and Consolidation then Database Migration Workbench, this loads the Database Migration screen.

- In the Migration Activity page, look for a red exclamation icon and Status as Awaiting User Action. Go to the action menu to the left of the Migration Activity with pending action and select Complete Migration. A pop up will require you to confirm whether to:

- Update Backup: Takes an incremental backup.

Note:

If the database has undergone significant updates since the initial backup, it is recommended you choose this option. This will take an incremental backup of the database without incurring in downtime. - Complete Migration: Performs final backup and complete migration. In this step, an incremental backup is taken as well as an export/import of metadata into the destination database. It is recommended that the finals step be triggered during a scheduled maintenance window since a Complete Migration sets the tablespace in Read Only mode.

Note:

Complete Migration sets the tablespace in Read Only mode during the export and import of data.

- Update Backup: Takes an incremental backup.

- In the Migration Activities page, expand the left hand arrow to view the additional incremental backups performed and their status.

- Incremental backups will be labeled as: Incremental Database Migration WD MM DD YYYY HH:MM:SS UTC.

- Complete Migrations will be labeled as: Final Database Migration WD MM DD YYYY HH:MM:SS UTC

- Repeat steps 2 and 3 for further incremental Update Backups and to Complete Migration.

Migrate Selected Tablespaces Only

In this option only the selected tablespaces are migrated. This option allows for only certain parts of a database being migrated. Tablespaces are migrated in the order selected in case of dependencies.

Note:

Full and Multiphase migration options can also be performed using only selected tablespaces.- Click on Databases, select Migration and Consolidation then Database Migration Workbench, this loads the Database Migration screen.

Click Create Migration Activity.

- Enter a migration name under Name Activity. Under Migrate select Tablespaces.

- Select Source Database, click on the bar and select a database or search for a database.

Click on the Source Database to be migrated.

- Select Destination Database, click on the bar and select a database or search for a database.

Click on the Destination Database that will receive the migration.

- Select Source Database, click on the bar and select a database or search for a database.

- The Add Details page shows a summary of the information entered:

- Source: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named). For Transportable Tablespace migration use SYSDBA for the Database Credential, and use the Oracle Home Owner for the Host credential.

Enter the Wallet Password for TDE enabled databases.

- Destination: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named). For Transportable Tablespace migration use SYSDBA for the Database Credential, and use the Oracle Home Owner for the Host credential.

Enter the Wallet Password for TDE enabled databases.

- Action:

- Select Compare Performance After Migration to run SQL Tuning Set (STS) after migration.

- Enter the Source Working Directory, use the default or a directory of your choice.

- Enter the Destination Working Directory, use the default or a directory of your choice.

- Enter the Destination Storage Directory, supported storage is Filesystem and Oracle Automatic Storage Management (ASM).

- Enter and confirm the Encryption Password for the metadata dumps.

- Source: Ensure the information is correct, select the Agent Host Credentials and Database Credentials (Preferred or Named). For Transportable Tablespace migration use SYSDBA for the Database Credential, and use the Oracle Home Owner for the Host credential.

- In the Customize page, set the following:

- Migration Details: Select the Tablespaces to be Migrated.

- Compare Performance After Migration: Select whether to use a new SQL Tuning Set (STS) or use an existing and set for how many days STS will run.

- Custom Scripts: Enter any custom Pre or Post scripts you may require for the migration. These scripts must be uploaded to the Software Library beforehand.

- Script Location: Location of the Pre/Post scripts within the Software Library.

- Pre-Migration Script: Default is No, select the script you wish to run.

- Post-Migration Script: Default is No, select the script you wish to run.

- Post-Migration SQL Script: Default is No, select the script you wish to run.

- The Review & Submit page, allows you to review all information before proceeding to migrate, can run validation tests, and presents a report to ensure a proper migration.

Starting with Oracle Enterprise Manager 13.5 Release Update 12 (13.5.0.12) you can perform a pre-migration analysis before starting a TTS migration. To analyze your migration activity inputs and view the report, click the Check Migration Readiness button.

The Pre-Migration Report shows the following information:

- The Summary section shows the Status for the analysis and Recommendation. For further details you can click on Database Detail and Analyzed Tablespaces.

- The Results Breakdown by Severity shows a bar graph with the number of issues found grouped by severity. These are presented left to right from most serious to non-issue, these are:

- Failed: The source and destination databases selected are not compatible or suitable for migration and need to be changed or the particular validation could not be completed due to internal errors.

- Action Required: Validation failed, review and take action to resolve the issue. The migration will fail if the issue is not corrected before submission.

- Review Required: Warning message, review and if necessary take action to correct. Migration may be impacted.

- Review Suggested: Informational message, read and review.

- Passed: Validation succeeded, you can proceed with the migration.

- The lower section shows the Result Detail, to view in detail issues from a specific severity field click on the arrow to expand each field. To view the specific details for an issue, click the arrow to expand the issue details.

Once all issues presented by the Pre-migration Report are resolved, click Submit to migrate.

- A pop up will require you to confirm when to start the migration Immediately or Later (requires a date and time when the migration will start).

Click Submit.

Migrate via REST API

This topic outlines the steps required to migrate Database, Schema or Transportable Tablespace via REST API.

- Data Pump Migration REST API

- Transportable Tablespace Migration using REST API

Note:

Transportable Tablespace migrations are available starting with Oracle Enterprise Manager 13 Release 5 Update 5 (13.5.0.5).

Data Pump Migration REST API

Data Pump provides an Export and Import way of moving data and metadata from one database to another. The following is a list of available REST API data pump migrations.

Full Database Data Pump REST API Migration

The following is a sample code for a full database data pump migration using REST API.

Perform a full migration operation using Data Pump

To perform a full migration using the Data Pump method use the following REST request. This sample code loads data from an on-premises database into a cloud database:Note:

- Do not use

SYSDBAas the named credentials for Data Pump Export and Import.SYSDBAshould only be used at the request of Oracle technical support. POSTcan be used if user wants the OMS to pull the files from the Host. The Host needs to be discovered in Enterprise Manager.

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Payload | |

| Response | {

"statusCode": 200,

"status": "SUCCESS",

"message": "....",

"submissionName": ".....",

"submissionBy": "SYSMAN",

"submissionId": "<Unique Submission ID>",

"executionId": "<Unique Execution ID>",

"uiActivityUrl": "/em/faces/core-jobs-procedureExecutionTracking?executionGUID=<Submission ID>",

"restActivityUrl": "/em/websvcs/restful/db/migration/activities/<Submission ID>"

} |

Migrate Schema Using REST API

The following is a REST API sample code for a schema migration using REST API

Perform a complete Migration Operation using Data Pump

To perform a Schema Migration using the Data Pump method use the following REST request. This sample code loads data from an on-premises database into a cloud database:Note:

- Do not use

SYSDBAas the named credentials for Data Pump Export and Import.SYSDBAshould only be used at the request of Oracle technical support. POSTcan be used if user wants the OMS to pull the files from the Host. The Host needs to be discovered in Enterprise Manager.

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Payload | |

| Response | {

"statusCode": 200,

"status": "SUCCESS",

"message": "....",

"submissionName": ".....",

"submissionBy": "SYSMAN",

"submissionId": "<Unique Submission ID>",

"executionId": "<Unique Execution ID>",

"uiActivityUrl": "/em/faces/core-jobs-procedureExecutionTracking?executionGUID=<Submission ID>",

"restActivityUrl": "/em/websvcs/restful/db/migration/activities/<Submission ID>"

} |

Transportable Tablespace Migration using REST API

Transportable Tablespace provides a Near Zero Downtime (NZDT) way to create a duplicate database from incremental backups of the source database, while retaining the original target database.

Note:

Migration Workbench Transportable Tablespace migrations are available starting with Oracle Enterprise Manager 13 Release 5 Update 5 (13.5.0.5).Migration Workbench can support On-Premise to On-Premise migrations and On-Premise to PDB Transportable Tablespace migrations starting with Oracle Enterprise Manager 13 Release 5 Update 10 (13.5.0.10) .

The pre-migration analyzer (migration readiness check) will run as part of a Transportable Tablespace deployment procedure starting with Oracle Enterprise Manager 13 Release 5 Update 15 (13.5.0.15)

Validate a Transportable Tablespace Migration With REST API

It is recommended that before you execute a Transportable Tablespace Migration, you first perform a validation.

Validate a Transportable Tablespace Migration

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Request | |

| Response | |

Transportable Tablespace Validation Results

To review results of a Transportable Tablespace Migration Validation run the following command:

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Payload | |

| Response | "status": "SUCCESS",

"description": " Database is not standby(which is valid),Database is valid container database,Database is in archive mode (which is valid),Database has primary role (which is valid)",

"name": "Destination database status validation"

},

{

"id": "DESTINATION_WORKING_DIRECTORY_VALIDATION",

"status": "SUCCESS",

"description": " Working directory is validated successfully",

"name": "Destination working directory validation"

},

{

"id": "DESTINATION_DISK_SPACE_VALIDATION",

"status": "SUCCESS",

"description": " \n \tINFORMATION : /scratch/ has enough space. Required space is 7020 MB , available space is 199805 MB.",

"name": "Destination target disk space validation"

},

{

"id": "DESTINATION_TARGET_PRIVILEGES_VALIDATION",

"status": "SUCCESS",

"description": " User has VIEW_TARGET privilege,User has CONNECT_TARGET privilege,User has CONFIGURE_TARGET privilege,User has GET_CREDENTIAL privilege for oracle_database,User has PERFORM_OPERATION privilege,User has GET_CREDENTIAL privilege for host,User has ORACLE_HOME_OWNER privilege",

"name": "Destination target privilege validation"

},

{

"id": "SOURCE_DATABASE_ENCRYPTION_VALIDATION",

"status": "SUCCESS",

"description": " Database is not encrypted (which is valid)",

"name": "Source database encryption validation"

},

{

"id": "DESTINATION_DATABASE_ENCRYPTION_VALIDATION",

"status": "SUCCESS",

"description": " Database is not encrypted (which is valid)",

"name": "Destination database encryption validation"

},

{

"id": "COMPATIBILITY_VALIDATION",

"status": "SUCCESS",

"description": " Source & Destination database are compatible",

"name": "Compatibility validation"

},

{

"id": "PDB_VALIDATION",

"status": "SUCCESS",

"description": " PDB name is valid",

"name": "PDB validation"

},

{

"id": "DESTINATION_SPA_VALIDATION",

"status": "SUCCESS",

"description": " Database user has all the required privileges(s) for analysing performance using SPA",

"name": "Destination database SPA validation"

},

{

"id": "STORAGE_VALIDATION",

"status": "SUCCESS",

"description": " Storage validation successfull",

"name": "Storage validation"

}

],

"totalValidation": 20,

"successValidation": 20,

"validationPerformed": 20,

"skippedValidation": 0,

"failedValidation": 0,

"ignorableValidation": 0,

"warningValidation": 0,

"status": "COMPLETED"

} |

Transportable Tablespace Full Migration Using REST API

The following is a sample code for a Transportable Tablespace Full migration using REST API. It is recommended you first validate and analize your results before doing a Transportable Tablespace migration.

Perform a Transportable Tablespace Migration

Starting with Enterprise Manager 13 Release 5 Update 5 (13.5.0.5) you can now perform tablespace migrations using Migration Workbench.

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Request | |

| Response | |

Transportable Tablespace Multiphase Migration REST API

Transportable Tablespace migrations can also be performed with incremental backups giving you more control of your migration.

In a TTS Multiphase Migration, you can take an initial backup, return at any other time for an incremental backup by requesting action INCREMENTAL_ROLLFORWARD, or simply initiate the final migration step by requesting action FINAL_CUTOVER.

This feature is available starting with Oracle Enterprise Manager 13 Release 5 Update 7 (13.5.0.7). This is a sample REST API for an incremental backup.

TTS Incremental Backup

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Request | |

| Response | |

Complete a Transportable Tablespace Migration

After you have performed one or several incremental backups use this REST API code sample to finalize the migration. In this step, an incremental backup is taken as well as an export/import of metadata into the destination database. It is recommended that the finals step be triggered during a scheduled maintenance window since a Complete Migration sets the tablespace in Read Only mode.

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Request | |

| Response | |

Transportable Tablespace Only Migration With REST API

With Transportable Tablespace Migrations you can perform a Tablespace only migration. It can be all the tablespaces or just a selection of these.

Note:

Full and Multiphase migration options can also be performed using only selected tablespaces.tableSpaceList parameter with a complete list of all tablespaces to be migrated. The following sample code is an example of a tablespace only migration:

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Request | |

| Response | |

Transportable Tablespace Validation Results

To review results of a Transportable Tablespace Migration Validation run the following command:

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Payload | |

| Response | "status": "SUCCESS",

"description": " Database is not standby(which is valid),Database is valid container database,Database is in archive mode (which is valid),Database has primary role (which is valid)",

"name": "Destination database status validation"

},

{

"id": "DESTINATION_WORKING_DIRECTORY_VALIDATION",

"status": "SUCCESS",

"description": " Working directory is validated successfully",

"name": "Destination working directory validation"

},

{

"id": "DESTINATION_DISK_SPACE_VALIDATION",

"status": "SUCCESS",

"description": " \n \tINFORMATION : /scratch/ has enough space. Required space is 7020 MB , available space is 199805 MB.",

"name": "Destination target disk space validation"

},

{

"id": "DESTINATION_TARGET_PRIVILEGES_VALIDATION",

"status": "SUCCESS",

"description": " User has VIEW_TARGET privilege,User has CONNECT_TARGET privilege,User has CONFIGURE_TARGET privilege,User has GET_CREDENTIAL privilege for oracle_database,User has PERFORM_OPERATION privilege,User has GET_CREDENTIAL privilege for host,User has ORACLE_HOME_OWNER privilege",

"name": "Destination target privilege validation"

},

{

"id": "SOURCE_DATABASE_ENCRYPTION_VALIDATION",

"status": "SUCCESS",

"description": " Database is not encrypted (which is valid)",

"name": "Source database encryption validation"

},

{

"id": "DESTINATION_DATABASE_ENCRYPTION_VALIDATION",

"status": "SUCCESS",

"description": " Database is not encrypted (which is valid)",

"name": "Destination database encryption validation"

},

{

"id": "COMPATIBILITY_VALIDATION",

"status": "SUCCESS",

"description": " Source & Destination database are compatible",

"name": "Compatibility validation"

},

{

"id": "PDB_VALIDATION",

"status": "SUCCESS",

"description": " PDB name is valid",

"name": "PDB validation"

},

{

"id": "DESTINATION_SPA_VALIDATION",

"status": "SUCCESS",

"description": " Database user has all the required privileges(s) for analysing performance using SPA",

"name": "Destination database SPA validation"

},

{

"id": "STORAGE_VALIDATION",

"status": "SUCCESS",

"description": " Storage validation successfull",

"name": "Storage validation"

}

],

"totalValidation": 20,

"successValidation": 20,

"validationPerformed": 20,

"skippedValidation": 0,

"failedValidation": 0,

"ignorableValidation": 0,

"warningValidation": 0,

"status": "COMPLETED"

} |

Migrate via EM CLI

migrate_db woth a JSON file.

Note:

Before attempting a migration usingmigrate_db on Oracle databases 13.3.2.0.0 and later, make sure patch 12.1.0.2.210119 is deployed.

- Migrate Using Data Pump

- Migrate Using Transportable Tablespace

Note:

Transportable Tablespace migrations are available starting with Oracle Enterprise Manager 13 Release 5 Update 5 (13.5.0.5).

Migrate Using Data Pump

Data Pump provides an Export and Import way of moving data and metadata from one database to another. The following is a list of available migrations using Data Pump.

Before attempting a Full Database or Schema migration it is recommended you run the Cloud Premigration Advisor Tool (CPAT). For more information see: Perform a CPAT Analysis with EM CLI.

Full Database Data Pump Migration using EM CLI

The entire database from on premise to on premise, PDB or Oracle Cloud.

Full Database Data Pump Migration Using EM CLI

emcli migrate_db

-file="/home/migrations/DB_Migration.JSON"DB_Migration.JSON contains the following parameters:{

"action": "MIGRATE",

"options": "SPA",

"type": "DATAPUMP"

"proxy":{ --> Required if you have a proxy connection setup

"host":"<proxy-host>",

"port":"<proxy-port>"

},

"source":[{

"databaseInfo":{

"targetName":"<db-pdb-target-name>",

"targetType":"<db-pdb-target-type>",

"dbCredName":"<db-cred-name:em-cred-owner>", --> If not provided, preferred credentials will be used

"hostCredName":"<host-cred-name:em-cred-owner>" --> If not provided, preferred credentials will be used

},

"export":{

"args":{

"LOGTIME":"ALL",

"METRICS":"YES",

"COMPRESSION":"ALL",

"COMPRESSION_ALGORITHM":"MEDIUM",

"KEEP_MASTER":"YES",

"PARALLEL":"1",

"directory":"<dump directory name>",

"EXCLUDE":"index,cluster,indextype,materialized_view,materialized_view_log,materialized_zonemap,db_link",

"ENCRYPTION":"ALL",

"ENCRYPTION_PASSWORD":"<Encryption Password>",

"ENCRYPTION_ALGORITHM":"AES128"

}

}

}],

"storage":{

"type":"OBJECTSTORE"

"useProxy": <boolean, true/false> --> If true, the proxy from main section will be used to upload objects to OCI Object store

"objectstore":{

"credName":".."

"baseUrl":"..",

"bucketName":".."

},

},

"destination":[{

"useProxy": <boolean, true/false> --> If true, the proxy from main section will be used to connect to ADB

"databaseInfo":{

"targetName":"<adb-target-name>",

"targetType":"<adb-target-type>",

"dbCredName":"<db-cred-name:em-cred-owner>", --> If not provided, preferred credentials will be used

"hostCredName":"<adb monitoring host-cred-name:em-cred-owner>" --> If not provided, preferred credentials will be used

"tdeWalletPassword": "<password>", --> TDE wallet password is required when a new PDB is created at the

destination

},

import": {

"args": {

"TABLE_EXISTS_ACTION": "SKIP",

"PARALLEL": "6",

"TRANSFORM": "SEGMENT_ATTRIBUTES:N,DWCS_CVT_IOTS:Y,CONSTRAINT_USE_DEFAULT_INDEX:Y",

"REMAP_TABLESPACE" : "<source_tablespace:target_tablespace> [, <source_tablespace:target_tablespace>, ...]"

"NOLOGFILE": "YES",

"DIRECTORY": "DATA_PUMP_DIR"

},

"dbOciAuthCred": "<cloud-cred-name:cloud-cred-owner>"

},

"tnsName": "<adb-service-name>",

"recomplieInvalidObjects": <true|false>, -->After migration recompiles all invalid objects within the migrated schemas

}],

"analyzePerformance": --optional

{

"useSPA": true/false,

"createSTS": true/false,

"usePreferredSTS”: true/false -->optional

"stsName": "stsname01" -->optional

"stsOwner": "stsOwnedSchema" -->optional

"stsOwner": "EXPUSER" -->optional

"baselineDurationDays": 7 -->optional, set duration for SPA to analyze

}

}For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Data Pump Schema Migration using EM CLI

Migrates only the schema, this is recommended as a starting point when migrating with Migration Workbench to ensure proper migration when performing a Full Database migration.

Migrate Using EM CLI

emcli migrate_db

-file="/home/migrations/DB_Migration.JSON"DB_Migration.JSON contains the following parameters:{

"action": "MIGRATE",

"options": "SPA",

"type": "SCHEMA"

"proxy":{ --> Required if you have a proxy connection setup

"host":"<proxy-host>",

"port":"<proxy-port>"

},

"source":[{

"databaseInfo":{

"targetName":"<db-pdb-target-name>",

"targetType":"<db-pdb-target-type>",

"dbCredName":"<db-cred-name:em-cred-owner>", --> If not provided, preferred credentials will be used

"hostCredName":"<host-cred-name:em-cred-owner>" --> If not provided, preferred credentials will be used

},

"export":{

"args":{

"LOGTIME":"ALL",

"METRICS":"YES",

"COMPRESSION":"ALL",

"COMPRESSION_ALGORITHM":"MEDIUM",

"KEEP_MASTER":"YES",

"PARALLEL":"1",

"directory":"<dump directory name>",

"EXCLUDE":"index,cluster,indextype,materialized_view,materialized_view_log,materialized_zonemap,db_link",

"ENCRYPTION":"ALL",

"ENCRYPTION_PASSWORD":"<Encryption Password>",

"ENCRYPTION_ALGORITHM":"AES128"

}

}

}],

"storage":{

"type":"OBJECTSTORE"

"useProxy": <boolean, true/false> --> If true, the proxy from main section will be used to upload objects to OCI Object store

"objectstore":{

"credName":".."

"baseUrl":"..",

"bucketName":".."

},

},

"destination":[{

"useProxy": <boolean, true/false> --> If true, the proxy from main section will be used to connect to ADB

"databaseInfo":{

"targetName":"<adb-target-name>",

"targetType":"<adb-target-type>",

"dbCredName":"<db-cred-name:em-cred-owner>", --> If not provided, preferred credentials will be used

"hostCredName":"<adb monitoring host-cred-name:em-cred-owner>" --> If not provided, preferred credentials will be used

"tdeWalletPassword": "<password>", --> TDE wallet password is required when a new PDB is created at the

destination

},

import": {

"args": {

"TABLE_EXISTS_ACTION": "SKIP",

"PARALLEL": "6",

"TRANSFORM": "SEGMENT_ATTRIBUTES:N,DWCS_CVT_IOTS:Y,CONSTRAINT_USE_DEFAULT_INDEX:Y",

"REMAP_TABLESPACE" : "<source_tablespace:target_tablespace> [, <source_tablespace:target_tablespace>, ...]"

"NOLOGFILE": "YES",

"DIRECTORY": "DATA_PUMP_DIR"

},

"dbOciAuthCred": "<cloud-cred-name:cloud-cred-owner>"

},

"tnsName": "<adb-service-name>",

"recomplieInvalidObjects": <true|false>, -->After migration recompiles all invalid objects within the migrated schemas

}],

"analyzePerformance": --optional

{

"useSPA": true/false,

"createSTS": true/false,

"usePreferredSTS”: true/false -->optional

"stsName": "stsname01" -->optional

"stsOwner": "stsOwnedSchema" -->optional

"stsOwner": "EXPUSER" -->optional

"baselineDurationDays": 7 -->optional, set duration for SPA to analyze

}

}For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Migrate Using Transportable Tablespace

Transportable Tablespace provides a Near Zero Downtime (NZDT) way to create a duplicate database from incremental backups of the source database, while retaining the original target database.

Note:

Migration Workbench Transportable Tablespace migrations are available starting with Oracle Enterprise Manager 13 Release 5 Update 5 (13.5.0.5).Migration Workbench can support On-Premise to On-Premise migrations and On-Premise to PDB Transportable Tablespace migrations starting with Oracle Enterprise Manager 13 Release 5 Update 10 (13.5.0.10) .

The pre-migration analyzer (migration readiness check) will run as part of a Transportable Tablespace deployment procedure starting with Oracle Enterprise Manager 13 Release 5 Update 15 (13.5.0.15)

Validate a Transportable Tablespace Migration Using EM CLI

It is recommended that before you execute a Transportable Tablespace Migration, you first perform a validation.

Validate a Transportable Tablespace Migration

emcli migrate_db

-file="/home/migrations/TTS_Migration.JSON"TTS_Migration.JSON contains the following parameters:{

"action": "VALIDATE",

"type": "ONLINE_TTS",

"source":[{

"databaseInfo":{

"targetName":"<source target name>",

"targetType":"<oracle_database>",

"dbCredName" : "<namedcred:owner>",

"hostCredName" : "<sourcehostcred:owner>"

"tdeWalletPassword": "<password>"

},

"stageDir":"<directory location in source host>",

"tableSpaceList":"<comma separated or empty>"

}],

"encryptionPassword": "<password>",

"destination":[{

"databaseInfo":{

"targetName" : "<destination target name>",

"targetType" : "<oracle_database>",

"dbCredName" : "<namedcred:owner>",

"hostCredName" : "<destinationhostcred:owner>",

"tdeWalletPassword": "<password>"

"newPdbInfo":{

"name":"<new pdb name>",

"adminUser":"<new pdb admin username>",

"adminPassword":"<new pdb admin username password>"

}

},

"stageDir":"<directory location in destination host>"

}],

"storage":{

"type":"<FILESYSTEM or ASM>",

"storageDir":"<directory location in destination host>",

"asmCredName":"asmCred:owner"

},

"analyzePerformance":{

"useSPA": false

}

"customScripts": {

"preMigrationScriptUrn": "<SWLIB URN>,

"postMigrationScriptUrn": "<SWLIB URN>",

"postMigrationSqlScriptUrn": "<SWLIB URN>"

}

}View Transportable Tablespace Validation Results

emcli migrate_db

-file="/home/migrations/TTS_Verify.JSON"TTS_Verify.JSON contains the following parameters:{

"action": "VALIDATION_RESULTS",

"executionId": "<executionId>"

}Transportable Tablespace Full Migration Using EM CLI

The following is an example of a Transportable Tablespace migration using EM CLI

emcli migrate_db

-file="/home/migrations/TTS_Migration.JSON"TTS_Migration.JSON contains the following parameters:{

"action": "MIGRATE",

"type": "ONLINE_TTS",

"source":[{

"databaseInfo":{

"targetName":"<source target name>",

"targetType":"<oracle_database>",

"dbCredName" : "namedcred:owner",

"hostCredName" : "sourcehostcred:owner"

"tdeWalletPassword": "<password>"

},

"stageDir":"<directory location in source host>",

"tableSpaceList":"<comma separated or empty>"

}],

"encryptionPassword": "<password>",

"destination":[{

"databaseInfo":{

"targetName" : "<destination target name>",

"targetType" : "<oracle_database>",

"dbCredName" : "namedcred:owner",

"hostCredName" : "destinationhostcred:owner",

"tdeWalletPassword": "<password>"

"newPdbInfo":{

"name":"<new pdb name>",

"adminUser":"<new pdb admin username>",

"adminPassword":"<new pdb admin username password>"

}

},

"stageDir":"<directory location in destination host>"

}],

"storage":{

"type":"FILESYSTEM or ASM",

"storageDir":"<directory location in destination host>",

"asmCredName":"<asmCred:owner>"

},

"analyzePerformance":{

"useSPA": false

}

"customScripts": {

"preMigrationScriptUrn": "<SWLIB URN>,

"postMigrationScriptUrn": "<SWLIB URN>",

"postMigrationSqlScriptUrn": "<SWLIB URN>"

}

}For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Transportable Tablespace Multiphase Migration Using EM CLI

Transportable Tablespace migrations can also be performed with incremental backups giving you more control of your migration.

Transportable Tablespace migrations can also be performed with incremental backups giving you more control of your migration. In a TTS Multiphase Migration, you can take an initial backup, return at any other time for an incremental backup by requesting action INCREMENTAL_ROLLFORWARD, or simply initiate the final migration step by requesting action FINAL_CUTOVER.

This feature is available starting with Oracle Enterprise Manager 13 Release 5 Update 7 (13.5.0.7).

Incremental Backup for a Transportable Tablespace Migration with EM CLI

emcli migrate_db

-file="/home/migrations/TTS_Migration.JSON"TTS_Migration.JSON contains the following parameters:{

"action": "INCREMENTAL_ROLLFORWARD",

"executionId": "<Execution Id>"

}

Finalize a Transportable Tablespace Migration with EM CLI

emcli migrate_db

-file="/home/migrations/TTS_Migration.JSON"TTS_Migration.JSON contains the following parameters:{

"action": "FINAL_CUTOVER",

"executionId": "<Execution Id>"

}Note:

Enter theexecutionId of the parent migration.

For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Transportable Tablespace Only Migration Using EM CLI

It is recommended that before you execute a Transportable Tablespace Migration, you first perform a validation.

Transportable Tablespace Only Migration

Note:

Full and Multiphase migration options can also be performed using only selected tablespaces.emcli migrate_db

-file="/home/migrations/TTS_Migration.JSON"TTS_Migration.JSON contains the following parameters:{

"action": "MIGRATE",

"type": "ONLINE_TTS",

"source":[{

"databaseInfo":{

"targetName":"<source target name>",

"targetType":"<oracle_database>",

"dbCredName" : "<namedcred:owner>",

"hostCredName" : "<sourcehostcred:owner>"

"tdeWalletPassword": "<password>"

},

"stageDir":"<directory location in source host>",

"tableSpaceList":"<tablespace list comma separated"

}],

"encryptionPassword": "<password>",

"destination":[{

"databaseInfo":{

"targetName" : "<destination target name>",

"targetType" : "<oracle_database>",

"dbCredName" : "<namedcred:owner>",

"hostCredName" : "<destinationhostcred:owner>",

"tdeWalletPassword": "<password>"

"newPdbInfo":{

"name":"<new pdb name>",

"adminUser":"<new pdb admin username>",

"adminPassword":"<new pdb admin username password>"

}

},

"stageDir":"<directory location in destination host>"

}],

"storage":{

"type":"<FILESYSTEM or ASM>",

"storageDir":"<directory location in destination host>",

"asmCredName":"asmCred:owner"

},

"analyzePerformance":{

"useSPA": false

}

"customScripts": {

"preMigrationScriptUrn": "<SWLIB URN>,

"postMigrationScriptUrn": "<SWLIB URN>",

"postMigrationSqlScriptUrn": "<SWLIB URN>"

}

}View Transportable Tablespace Validation Results

emcli migrate_db

-file="/home/migrations/TTS_Verify.JSON"TTS_Verify.JSON contains the following parameters:{

"action": "VALIDATION_RESULTS",

"executionId": "<executionId>"

}