3 Database Migration Workbench

About Migration Workbench

The decision to move workloads starts at the application level. As an organization decides, a particular application is chosen to move with its entire stack to a different location or service. In these cases, the challenge for administrators is how to find the right set of schema and/or databases to move. With years of investment into building and fine tuning applications, dependencies within applications and database, it becomes nearly impossible to maintain a stable relationship among all dependent components using traditional methods.

Oracle Enterprise Manager Database Migration Workbench provides an accurate approach to migration and consolidation by eliminating human errors allowing you to easily move your on-premises databases to Oracle Cloud, Multitenant architecture or upgrade your infrastructure. Some advantages of using Database Migration Workbech are: near zero downtime, assured zero data loss, seamless on-premises or Cloud migrations and, MAA and Cloud Security compliant.

For information on Database Migration Workbench licensing, see: Oracle Real Application Testing.

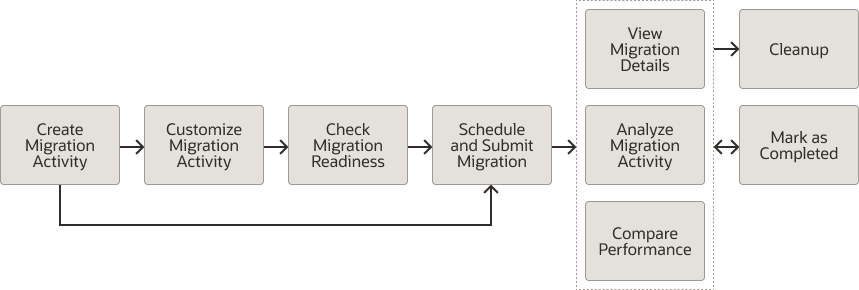

Migration Activity

- Plan: Identify databases to migrate. As part of the Plan activities using the Sizing and Consolidation Advisor, databases are consolidated into a single destination database optimized for size and performance.

- Migrate: The actual migration of the databases, moving data to autonomous and non-autonomous databases. The methods available are Datapump (Logical Data Migration), and Transportable Tablespace (TTS with RMAN).

- Analyze: Monitors and tracks the migration process, after migration users can see log reports that show what migrated successfully and help diagnose any errors that may have occurred. Comparison reports are also available to assess database performance before of after migration.

Figure 3-1 Complete Migration Process with Database Migrations Workbench

Plan a Database Migration with Database Migration Workbench

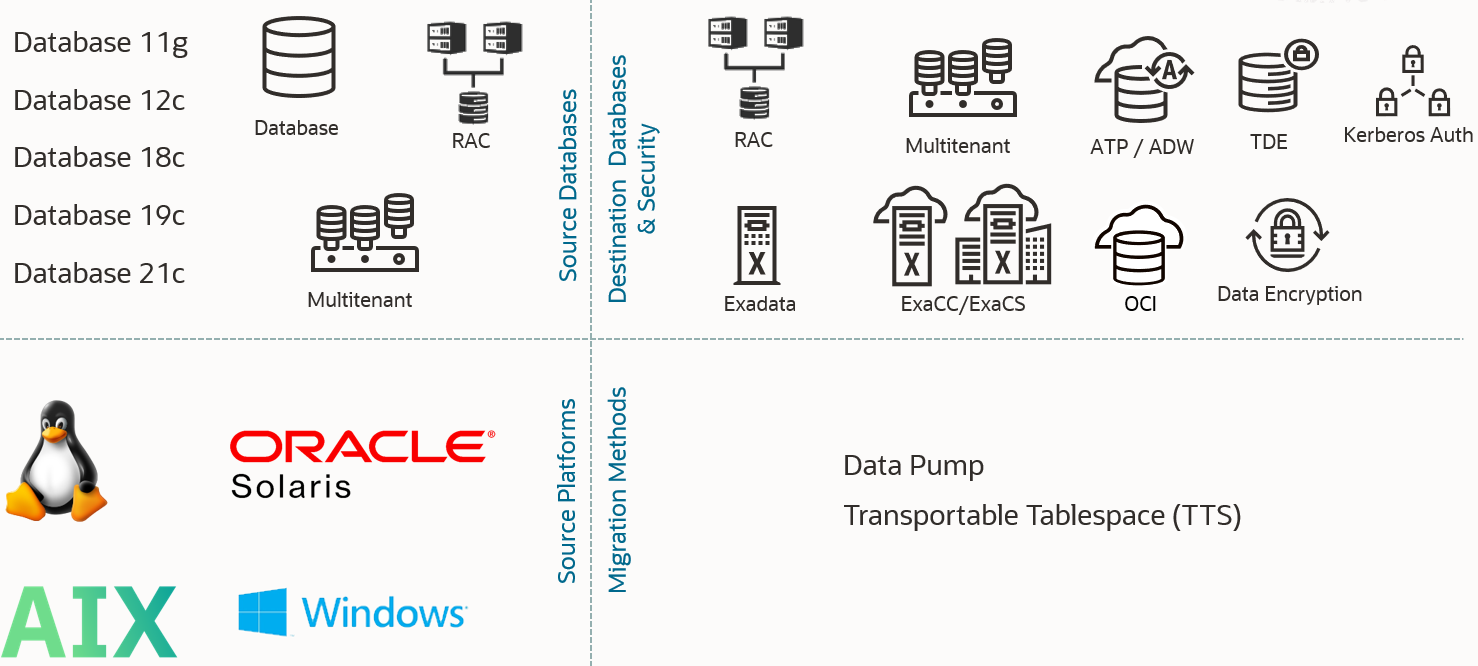

Migration Workbench Support Matrix

Oracle Enterprise Manager Database Migration Workbench provides an accurate approach to migration and consolidation by eliminating human errors allowing you to easily move your on-premises databases to different services like cloud, physical or virtual server, on-premises Exadata.

The Migration Support Matrix shows the different types of migrations you can perform with Enterprise Manager, review the matrix to determine the Migration method most desirable for migrating your database. This will help you choose the specific topics and migration path in this chapter.

- Migrations can be performed with all Enterprise Manager Migration Methods (Migration Workbench, EM CLI and REST API)

- Source destination database version for all migration types can be from 11.2.0.4 to 21c

- Source operating systems can be Linux, Sun Solaris, AIX or Windows

- All migration types offer Cross Platform Support, allowing you to choose the best combination of database type, database version and operating system for your environment going forward.

Figure 3-2 Database Support Matrix

Note:

Transportable Tablespace Migrations are currently not supported for Windows Destination Databases.Migration Methods

There are two main types of migration methods available in Migration Workbench: Data Pump and Transportable Tablespaces. Review the information on each so you can choose the correct migration method for your needs.

Guideline for Migration Workbench Method Selection

The following table is a guideline for selecting the proper Database Migration Workbench Migration Method for your particular scenario:| Migration Method | Size of Source Database | Move to New Server | Change in Endianness | Operating System Change | Character Set Change | Architecture Layout Change | Selected Object (Table/User) Migration | Tablespace Migration | Downtime Required |

|---|---|---|---|---|---|---|---|---|---|

| Data Pump | < 500 GB | Yes | Yes | Yes | Yes | Yes | Yes | No | Yes |

| Transportable Tablespace | > 500 GB (or any size) | Yes | Yes | Yes | No | No | No | Yes | Minimal |

Data Pump

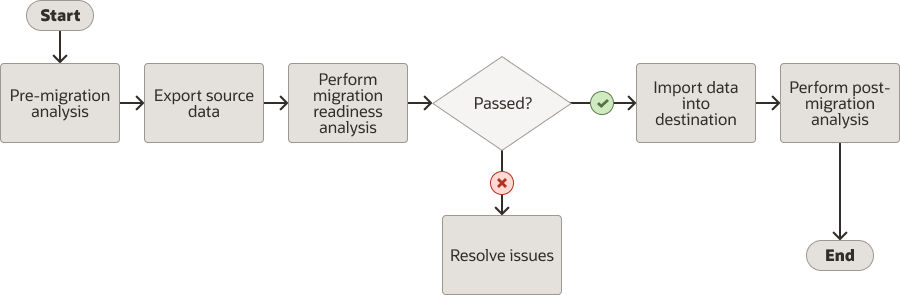

Figure 3-3 Data Pump Migration Overview

- Full Database: In this mode, the entire content of the source database is moved to the File Dump and loaded into the destination database.

- Schema: In this mode, only objects owned by the specified schema are moved to the File Dump and later loaded.

Note:

When performing Data Pump migrations do not useSYSDBA as the named credentials for Export and Import.

Transportable Tablespace (TTS)

Note:

Starting with Enterprise Manager 13.5c Release Update 10 you can perform non-CDB to non-CDB TTS migrations.- Single-phase: In this mode, the entire content of the source database is moved via a single full backup and loaded into the target database.

- Multi-phase: In this mode you decide what to migrate (migrate full database or selected table spaces), and how to migrate (single phase or user controlled multiple phases). The entire content of the source database is moved via an initial full backup and multiple user defined incremental backups.

- Tablespace: In this mode, only selected tablespace objects are moved to the target database.

Note:

When performing Transportable Tablespace migrations you will need to useSYSDBA as the named credentials.

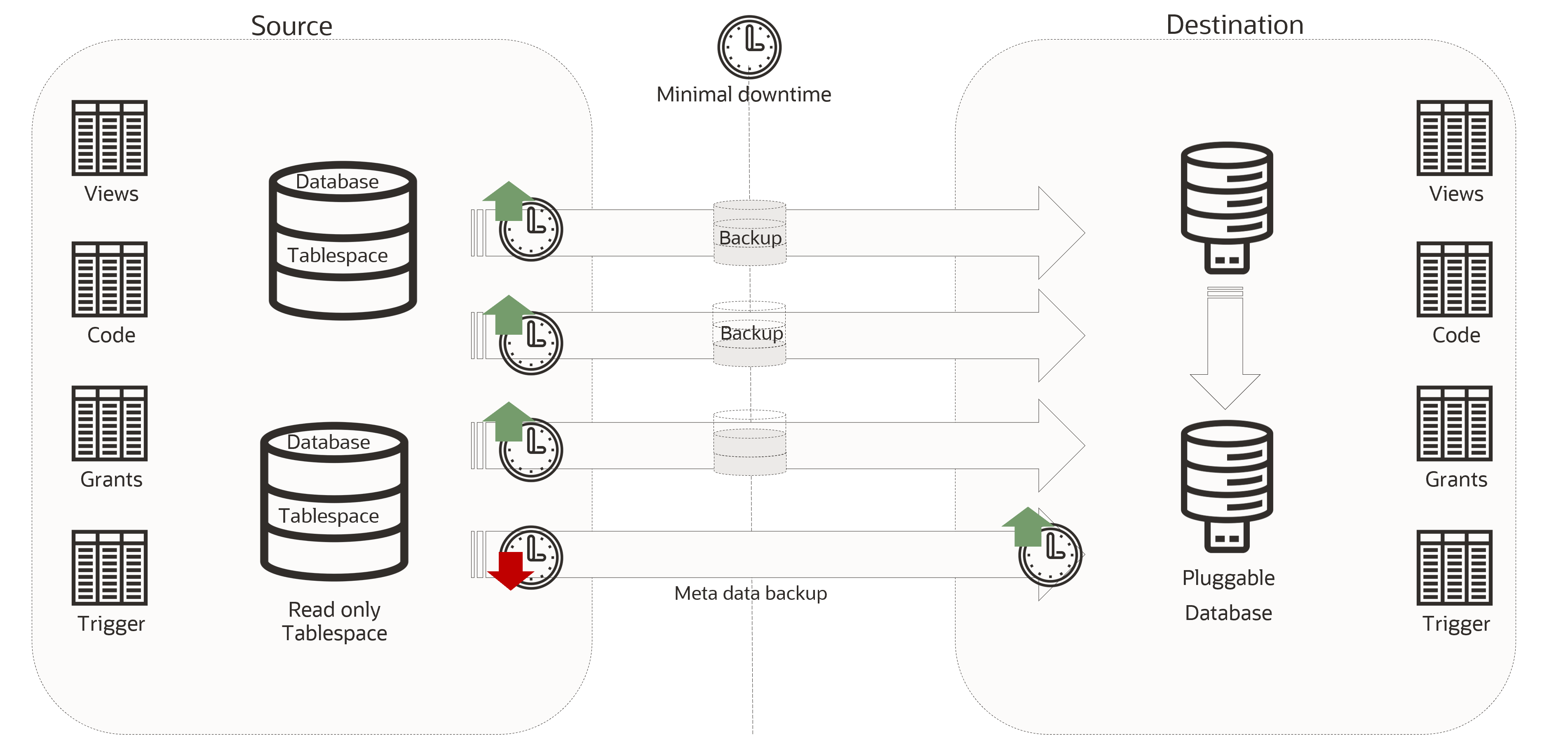

Migration Workbench Architecture

The following is an architecture diagram example for a TTS multi-phase migration. TTS migration support multi-phase and single-phase migration.

For example: If you want to migrate huge-size database with minimum downtime, then a multi-phase migration with a full and incremental backup combination is performed. For a small database, you can go for single-phase Transportable Tablespace migration which will take a full backup and complete the migration process without taking any incremental backups. During the cutover phase TTS migration puts your tablespaces in a read-only mode, takes the final incremental backup with a metadata backup, completes the migration, and the cutover process.

Customization Support for Migration Workbench

Migration Workbench allows customization of both Data Pump and Transportable Tablespace migration methods by allowing the user to upload and use Pre and Post migration scripts.

About Pre and Post Scripts

You can run custom scripts before and after a database has been created or provisioned. These requests are additional commands that can run on the machine on which the database is originating or commands to perform additional activities and operations on the newly migrated database. Scripts can be Shell scripts or Perl scripts (SQL scripts cannot be called directly but can be called internally from Shell or Perl scripts).

Pre and Post scripts need to be uploaded as EM Software Library Entity (Directive) prior to their usage with database migration activities. With the Pre and Post scripts in Software Library, the whole process is centralized and automated with the script staged at a common place within the Software Library, the script is staged and cleaned up on the target host as part of the deployment execution itself.

Pre and Post Script Requirements

The custom script must follow certain guidelines and consists of four parts:- Response File: The response file contains the service template and request specific information. Before the custom script is executed, the request procedure, the request procedure generates a response file (file with name-value pairs) containing the data from the service template as well as the request-specific data such as the SID that is auto computed, the hosts on which the database will be deployed, and so on.

- Driver Script: This is the key driver script that will be invoked by the request procedure. The driver script accepts only one argument which specifies the location of the response file.

- Other Scripts: Apart from the driver script, you can specify other perl or sql scripts. These scripts must be invoked from the driver script.

- Additional Variables: You can include a file containing additional variables that can used by the custom script as applicable.

Creating a Software Library Entity For Uploading The Script

Create a Software Library entity of type Directive (for uploading the custom scripts to EM).- Select Targets, then Databases, next select Administration, and then Provisioning and Patching and click on Software Library.

- Select the directory where you want to create the Software Library entity.

- Select Actions, then select Create Entity and Click Directives.

- Provide all the basic details on the Directive screen.

- Click Add under Command Line Arguments on the Configure screen.

- The INPUT_FILE to be added as mentioned in the list below. Click OK.

Argument Prefix="Argument Suffix="Property Name=INPUT_FILE

- Click Next.

- Click Add in Specify Source and select the script files to upload the custom script on the Select File screen. Choose the driver script as the main file.

- Click Next.

- Click Save and Upload.

- An entity is created successfully in the Software Library.

How to Get URN for SWLIB Entity

- To get URN for the SWLIB through EMCLI:

$OMS_HOME/bin/emcli list_swlib_entities -show_entity_rev_id -name="" - To get URN for the SWLIB through EM UI:

- Select Enterprise, then Provisioning and Patching and click on Software Library.

- Click View, select Columns and click on Internal ID.

- Go to the uploaded entity and copy the Internal ID for the uploaded entity, this is the URN.

Custom Scripts for Transportable Tablespace

You can use Pre and Post scripts to further customize your Transportable Tablespace migrations. This topic lists Pre and Post scripts available for Transportable Tablespace migrations.

Pre Scripts for Transportable Tablespace

- Pre Complete Migration Backup: This script will run on the source database prior to taking the initial full backup.

Post Scripts for Transportable Tablespace

- Post Complete Migration Backup (Final Incremental): This script will run on the destination database after migration is complete.

- Post Migration SQL: This SQL script will run on the destination database after the Post Complete Migration phase completes.

Database Migration Prerequisites

Before you can migrate on-premises Oracle Databases to Oracle Cloud, certain general prerequisites need to be met whether you are migrating via Database Migration Workbench, EM CLI or REST API.

Common Prerequistites for Migration Workbench

Note:

Oracle Enterprise Manager Migration Workbench only supports Enterprise Edition database targets. Standard Edition (SE/SE2) database targets are not supported.- For NEW installations of Enterprise Manager 13.5 Release Update 18 and onward the

DOWNLOAD_DB_MIGRATION_PREREQSjob that downloads the Database Migration Prerequisites is disabled. For existing Enterprise Manager customers the job will remain enabled for three months after the last Migration Workbench activity. Run the following REST API to enable:Feature Description Request Method Post URI /em/websvcs/restful/db/migration Payload { "action": "ENABLE_DOWNLOAD_DB_MIGRATION_PREREQS", "jobName": "DOWNLOAD_DB_MIGRATION_PREREQS" } - Source Database and Host credentials. Host credentials need to be supplied for any operation which needs to log into the host and are mandatory for any job. Database credentials are used to make database management easier. For more information on setting up credentials see: Setting Up Credentials in Oracle Enterprise Manager Database Lifecycle Management Administrator's Guide.

- VPN connectivity or an existing connectivity between on-premises data center and destination service.

Note:

Destination will be the OCI data center for Autonomous Databases (required for calls to APIs and Autonomous Database connections) - Enterprise Manager Migration Workbench requires open connections (direct or via proxy) to the following URLs:

- https://download.oracle.com/*

- https://objectstorage.us-phoenix-1.oraclecloud.com/*

- In Enterprise Manager assign the following privileges to each corresponding target:

For more information on how to grant privileges see: About Deployment Procedures and Granting Roles and Privileges to Administrators in Oracle Enterprise Manager Database Lifecycle Management Administrator's Guide.

Required Privilege: Resource to Apply Privilege to: Connect to Target Source and Destination Database Execute Command Source Database Host Configure Target Destination Database Execute Command Monitoring Agent host of Destination Database Create New or Existing Views Named Credential Create Any Software Library Entity Software Library Entity View Any Software Library Entity Software Library Entity - For Oracle 11.2.0.4 databases migrating to a higher release version, DBCONTROL should be present at the source database and verify compatibility of the APEX version at the source database matches with that at the destination database.

- To verify if the

DBCONTROLutility is present on the database use the following query:select count(*) as object_count from dba_segments where owner i n ('SYS','SYSMAN','SYSTEM') and tablespace_name not in ('SYSTEM','SYS','SYSMAN', 'UNDO','SYSAUX') and segment_type not in ('INDEX') and segment_type not like ('% UNDO%') - Migrate any user objects reside in SYS, SYSMAN, SYSTEM schema separately outside of the Migration Workbench. For databases not 11.2.0.4 database, you can skip this step.

- To check for APEX certifications MyOracle Support’s Certification Tab

- Go to https://support.oracle.com.

- Click Certifications.

- Enter Oracle Application Express as the Product.

- Select APEX version from the Release List.

- To verify if the

- Enterprise Manager agents use

PUT_FILEandGET_FILErequests to copy backup data from source to destination server, make sure the default port3872is available to ensure proper communication between servers. - Remote agents are supported for Lifecycle Management activities, for more information on remote agents see: Installing Oracle Remote Management Agents.

Data Pump Migration Prerequisites

The following is a list of prerequisites needed for a Data Pump type migration.

| Prerequisite | Description |

|---|---|

| Privileges on the source database target (in Enterprise Manager) |

|

| Privileges on the export working directory |

|

| Privileges on the source database | Grant the DATAPUMP_EXP_FULL_DATABASE role to the export database user. This role grants the user performing the export additional privileges that aid Data Pump migrations:

|

| Destination database | Destination database is discovered and visible in Enterprise Manager. For more information see: Discover Autonomous Databases in Oracle Enterprise Manager Administrator's Guide for Oracle Autonomous Databases. |

| Privileges on destination database | Grant the DATAPUMP_IMP_FULL_DATABASE role to the import database user. This role grants the user performing the import additional privileges that aid Data Pump migrations:

|

| Privileges on the import working directory | Write privilege to the directory where the data dump will be stored for the import database user. |

| Prerequisites for the destination Autonomous Database |

|

| Prerequisites for SQL Performance Analizer (SPA) | In order to compare performance after migration using SPA the following user privileges are required:

|

Additional Prerequisite Information for REST and EM CLI:

- If you are planning to migrate using REST, you will need to upload the migration tools as part of the prerequisites follow the steps outlined in: Prerequisites for REST API Data Pump Based Database Migration.

- If you are planning to migrate using EM CLI, you will need to upload the migration tools as part of the prerequisites, follow the steps outlined in: Prerequisites for EM CLI Data Pump Based Database Migration.

Data Pump Migration Best Practices

The following is a list of best practices for a Data Pump type migration.

These best practices can be validated before submission in the Review & Submit page of a Migration Workbench activity. To perform a validation for a Data Pump based migration see Migrate Using Data Pump.

Best Practices validation checks are available starting with Oracle Enterprise Manager 13 Release 5 Update 11 (13.5.0.11).

| Recommendation | Parameter | Recommended Value | Default Value | Comments |

|---|---|---|---|---|

| Exclude statistics from export & import operation. After import collect statistics | EXCLUDE | Set parameter EXCLUDE=STATISTICS or EXCLUDE=TABLE_STATISTICS,INDEX_STATISTICS |

EXCLUDE=STATISTICS | Import and export statistics that need to be excluded. Can be excluded in expdp |

| Setup parallelism parameter | PARALLEL | Set Parallel parameter with twice the number of physical CPU/cores | Sum of (2 x (no. of physical CPU) per node) but no greater than 32 | Maximum cap of 32 |

| Gather accurate statistics before and after data pump operation | dbms_stats | Collect statistics using the dbms_stats package with gather_database_stats using Auto sample size

|

DBMS_STATS, gather_schema_stats for user schema

|

NA |

| Setup resource utilization appropriately | STREAMS_POOL_SIZE | Set STREAMS_POOL_SIZE to a value in a range of 64mb-256mb

|

Validate if SGA_TARGET initialization parameter is set. The STREAMS_POOL_SIZE initialization parameter is automatically set to a value in range.

|

NA |

| Setup compression for data pump file size | compression

compression_algorithm |

Setup Compression with default Basic algorithm | compression=all

|

Setup Basic compression to reduce dump file size for export, as well as the size of the data stream during import . Requires Database Options license license. |

| Check AQ_TM_PROCESSES parameter value is not 0 | AQ_TM_PROCESSES | Setup AQ_TM_PROCESSES parameter value to null or greater than 0.

|

AQ_TM_PROCESSES=1 |

Zero can reduce advance queue operation. |

| Use of _OPTIMIZER_GATHER_STATS_ON_LOAD | _OPTIMIZER_GATHER_STATS_ON_LOAD | Ensure _OPTIMIZER_GATHER_STATS_ON_LOAD is not set during the data pump operation. You may set _OPTIMIZER_GATHER_STATS_ON_LOAD=TRUE after the data pump operation is complete. | User Configurable | Analyze hidden parameters from DB. |

For more information on Data Pump best practices see Oracle Data Pump Best Practices from Oracle Database Utilities.

Upload Migration Tools

Migration Workbench uses Instant Client and the Cloud Premigration Advisor Tool (CPAT) as part of its migration toolkit. Make sure these utilities are uploaded into the Enterprise Manager software library for Database Migration to work successfully on all Data Pump migrations.

Note:

Enterprise Manager automatically downloads the latest version of the tools when setup with either a MOS Proxy or direct internet connection. If these utilities are not available from the Software Library for your operating system you will need to download them manually. This usually occurs when the latest version of the Migration Tools are not compatible with your current version of the Operating System.Upload Migration tools are required for Data Pump based migrations only, they are not required for Transportable Tablespace based migrations.

Instant Client

Oracle Instant Client provides the necessary Oracle Database client-side command-line utilities: SQL*Plus, SQL*Loader and Oracle Data Pump. Instant Client simplifies the deployment of applications by eliminating the need for an Oracle home on the client machines, leading to a significantly smaller footprint.| Operation | Tools Used | Comments |

|---|---|---|

| Export | expdp |

This will be used from the source Database home as part of the Lift operation. |

| Upload | N/A | OCI REST APIS will be used |

| Import | sqlplus/impdp |

These are required in the destination Database, make sure you have the latest version matching the OCI Database version. |

To upload the Migration Tools into Enterprise Manager for a migration see step 3 Migrate Using Database Migration Workbench User Interface in Migrate Using Database Migration Workbench.

Cloud Premigration Advisor Tool (CPAT)

CPAT is a modern robust tool that will give you a detailed analysis of your databases before migration ensuring all possible Failures, Action Requirements, Required and Suggested Reviews are addressed beforehand. To use CPAT you will need Enterprise Manager 13.5 Release 5 Update 4 (13.5.0.4) or higher. Select Cloud Premigration Advisor Tool (CPAT), and select to upload from a Local Machine (uploaded using browser) or from the Agent Machine (Agent uploads the tool). CPAT can be used for on-premise to on-premise migration with Oracle Enterprise Manager 13.5 Release 5 Update 6 (13.5.0.6) or higher.You can run CPAT by Creating a Migration Activity from Migration Workbench. You can access this by going to Enterprise, then the Migration and Consolidation menu and clicking on Migration Workbench.

Perform the steps outlined in the Migration Wizard, in the last step press the Check Migration Readiness button (but do not submit the migration) to open the Analysis Report will in a new browser window. The report generation may take few minutes to complete.

Do not submit a migration until you are satisfied with the CPAT results. Submitting a migration activity is not required to run and view the CPAT results.

- CPAT helps to determine the suitability of migrating an Oracle database instance to on premise or Cloud database.

- CPAT assess source database instance, checks for potentially problematic content, other factors that could impede a successful migration.

- CPAT pre-migration advisory report categorize results into Failure, Action Required, Review Required, and Review Suggested categories with pass information including severity wise breakdown.

- The overall result of the CPAT advisory report will be the most severe result of all checks performed.

For more information on CPAT see: Cloud Premigration Advisor Tool (CPAT) Analyzes Databases for Suitability of Cloud Migration (Doc ID 2758371.1)

Prerequisites for EM CLI Data Pump Based Database Migration

Before you can begin a Data Pump based database migration with the EM CLI verb migrate_db you will need to review, install and configure the following:

Migration Workbench migrations using EM CLI migrate_db verb, use a JSON payload file exclusively.

Validate if Prerequistes are Met

emcli migrate_db

-file="/home/migrations/prereqs.json"prereqs.json contains the following payload:{

"action": "VALIDATE_PREREQS",

"type": "DATAPUMP",

"platform": "linux32|linux_64|windows64|solaris_sparc64|solaris_x64|aix_ppc64"

}Upload Migration Tools Using EM CLI

emcli migrate_db

-file="/home/migrations/prereqsupload.json"prereqsupload.json contains the following payload:{

"action": "UPLOAD_PREREQS",

"type": "DATAPUMP",

"hostTarget": "<host-target>",

"hostTargetCred": "<hostCredName>:<hostCredOwner>",

"tools": {

"instantClient": {

"version": "<DB Version e.g.: 19.16.0.0.0>",

"platform": "linux32|linux_64|windows64|solaris_sparc64|solaris_x64|aix_ppc64",

"files": [

"/u01/db/insClients/instantclient-basic-<Operating System>-<DB Version>dbru.zip",

"/u01/db/insClients/instantclient-sqlplus-<Operating System>-<DB Version>dbru.zip",

"/u01/db/insClients/instantclient-tools-<Operating System>-<DB Version>dbru.zip"

]

},

"schemaAdvisor":{

"files":[

"/u01/db/tools/install_adb_advisor.sql"

]

}

}

}Note:

Schema Advisor is currently available for Oracle Enterprise Manager 13 Release 5 Update 3 (13.5.0.3) and below. For Oracle Enterprise Manager 13 Release 5 Update 4 (13.5.0.4) use the CPAT tool noted below.Upload CPAT Into the Software Library

Starting with Oracle Enterprise Manager 13 Release 5 Update 4 (13.5.0.4) you can use the Cloud Premigration Advisor Tool (CPAT). CPAT is a modern robust tool that will give you a detailed analysis of your databases before migration ensuring all possible Failures, Action Requirements, Required and Suggested Reviews are addressed beforehand.emcli migrate_db

-file="/home/migrations/prereqsupload.json"schemaAdvisor:{

"action": "UPLOAD_PREREQS",

"type": "DATAPUMP",

"hostTarget": "<hostname>",

"hostTargetCred": "<host-cred-name>:<cred-owner>",

"tools": {

"cpat":{

"files":[

"<Complete file path with zip file>"

]

}

}

}Perform a CPAT Analysis with EM CLI

The following are examples on how to run CPAT and view a CPAT report using EM CLI

Run the Cloud Premigration Advisor Tool (CPAT)

Before starting a migration it is strongly suggested you run the Cloud Premigration Advisor Tool (CPAT) available from Oracle Enterprise Manager 13 Release 5 Update 4 (13.5.0.4) onward. CPAT is a modern robust tool that will give you a detailed analysis of your databases before migration ensuring all possible Failures, Action Requirements, Required and Suggested Reviews are reported, allowing you to address them before submitting a migration.To run CPAT in EM CLI use the following command:

emcli migrate_db

-file="/home/migrations/DB_Migration.JSON"DB_Migration.JSON contains the following parameters:{

"action": "CHECK_MIGRATION_READINESS",

"type": "DATAPUMP",

"srcDatabaseInfo":{

"targetName":"<source database target name>",

"targetType":"<source database target type>",

"dbCredName":"<db-creds-name>:<db-creds-owner>",

"hostCredName":"<host-creds-name>:<host-creds-owner>"

},

"destDatabaseInfo":{

"targetName":"<destination database target name>",

"targetType":"<destination database target type>",

"dbCredName":"<db-creds-name>:<db-creds-owner>",

"hostCredName":"<host-creds-name>:<host-creds-owner>"

},

"schemas":<list of schema names to analyze>,

"excludeSchemas":<list of schema names to be excluded>,

"srcStageDir":"<Stage directory on source target>",

"destStageDir":"<Stage directory on destination target>",

"targetCloud":"<ATPD|ATPS|ADWD|ADWS|DEFAULT>"

}Note:

schemasandexcludeSchemasare mutually exclusive. Use only one or the other, depending on your environment's needs.schemasorexcludeSchemasneed to be included in the JSON for CPAT to properly run.targetCloudis an optional parameter. If not provided, information is obtained fromdestDatabaseInfoif available. If neither are available it will be set to a DEFAULT value.- If both

destDataaseInfoandtargetCloudare available,destDatabaseInfowill be used and overridestargetCloud.

View a CPAT Report

To view the CPAT report in EM CLI use the following command:

emcli migrate_db

-file="/home/migrations/DB_Migration.JSON"DB_Migration.JSON contains the following parameters:{

"action": "GET_MIGRATION_READINESS_REPORT",

"executionId":"<DP_Execution_ID>"

}For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Prerequisites for REST API Data Pump Based Database Migration

If you are migrating via REST API, part of the specific prerequisites for this method involve reviewing and uploading the Migration Tools. For more information on Migration Tools see: Upload Migration Tools

Validate if Prerequistes are Met

This REST request verifies that the Migration Tools are available within Enterprise Manager.| Feature | Description |

|---|---|

| Request Method | |

| URI | |

| Header | |

| Payload | |

| Response | |

Upload Migration Tools Using REST-API

This REST request will upload the Migration Tools and CPAT before starting a migration. To download the correct Migration Tools see: Upload Migration Tools.Note:

This API requires the files to be available at a host that is managed by Enterprise Manager. It will pull the files from that host and upload the Enterprise Manager Software Library.| Feature | Description |

|---|---|

| Request Method | |

| URI | |

| Header | |

| Payload | |

| Response | {

"statusCode": 200,

"instantClient": true,

"schemaAdvisor": false,

"cpat": true,

"msgs": [

"Instant Client files uploaded to URN: oracle:defaultService:em:provisioning:1:cmp:COMP_Component:SUB_Generic:<GUID>:0.1",

"URN Version: 1",

"CPAT files uploaded to URN: oracle:defaultService:em:provisioning:1:cmp:COMP_Component:SUB_Generic:<GUID>:0.1",

"URN Version: 1"

]

} |

Note:

If your Oracle Enterprise Manager version is 13 Release 5 Update 3 (13.5.0.3) or below, CPAT is not available. You will need to use Schema Advisor instead.Perform a CPAT Analysis with REST API

The following is sample code to perform a CPAT analysis with REST API. To run the CPAT advisor Enterprise Manager 13 Release 5 update 5 (13.5.0.4) and above is required.

Run the Cloud Premigration Advisor Tool (CPAT)

Before starting a migration it is strongly suggested you run the Cloud Premigration Advisor Tool (CPAT) available from Oracle Enterprise Manager 13 Release 5 Update 4 (13.5.0.4) onward. CPAT is a modern robust tool that will give you a detailed analysis of your databases before migration ensuring all possible Failures, Action Requirements, Required and Suggested Reviews are reported, allowing you to address them before submitting a migration.Use this REST call to enter the migration parameters:

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Payload | |

| Response | {

"statusCode": 200,

"status": "SUCCESS",

"message": "....",

"submissionName": ".....",

"submissionBy": "SYSMAN",

"submissionId": "<Unique Submission ID>",

"executionId": "<Unique Execution ID>",

"uiActivityUrl": "/em/faces/core-jobs-procedureExecutionTracking?executionGUID=<Submission ID>",

"restActivityUrl": "/em/websvcs/restful/db/migration/activities/<Submission ID>"

} |

Note:

schemasandexcludeSchemasare mutually exclusive. Use only one or the other, depending on your environment's needs.- If neither

schemasorexcludeSchemasare included in the JSON, CPAT will not run in full mode. targetCloudis an optional parameter. If not provided, information is obtained fromdestDatabaseInfoif available. If neither are available it will be set to a DEFAULT value.- If both

destDataaseInfoandtargetCloudare available,destDatabaseInfowill be used and overridestargetCloud.

View a CPAT Report

| Feature | Description |

|---|---|

| Request Method | Post |

| URI | |

| Header | |

| Payload | |

| Response | {

"statusCode": 200,

"status": "SUCCESS",

"message": "....",

"submissionName": ".....",

"submissionBy": "SYSMAN",

"submissionId": "<Unique Submission ID>",

"executionId": "<Unique Execution ID>",

"uiActivityUrl": "/em/faces/core-jobs-procedureExecutionTracking?executionGUID=<Submission ID>",

"restActivityUrl": "/em/websvcs/restful/db/migration/activities/<Submission ID>"

} |

Transportable Tablespace Based Migration Prerequistes

The following is a list of prerequisites needed for a Transportable Tablespace based migration.

| Prerequisite | Description |

|---|---|

| Software Library Configuration | The Software Library should be configured in EM and the EM user should have the following privileges assigned: SWLIB_VIEW_ANY_ENTITY, SWLIB_CREATE_ANY_ENTITY.

|

| Source Database | The source database should be open in Read-Write mode and in ARCHIVELOG mode.

|

| OS Authentication | OS Authentication must be enabled at both source and destination. |

| Working Directory | The source and destination working directories should be present and have read-write permissions. The destination directory should also have sufficient disk space to stage the backup. |

| Source Tablespace |

|

| Compatibility |

|

| Source Tablespace | Ensure there are no logical or physical dependencies between the database objects in the transportable set and the database objects outside of the transportable set. Only self-contained tablespaces can be transported. |

| Source Database | The Source Database should always be Primary. Standby Database is currently not supported. |

| Working Directory | In case, Source or Destination being RAC then Working directory should be a shared location across all the nodes of RAC |

Perform a Migration Readiness Check with EM CLI

The following is sample code to perform a Migration Readiness Check with EM CLI on a Transportable Tablespace (TTS) based migration. To run the Migration Readiness Check Enterprise Manager 13 Release 5 update 15 (13.5.0.15) and above is required.

Run the TTS Migration Readiness Check Tool

Before starting a TTS based migration it is strongly suggested you run the Migration Readiness Check Tool for TTS based migrations available from Oracle Enterprise Manager 13 Release 5 Update 15 (13.5.0.15) onward. This is a modern robust tool that will give you a detailed analysis of your databases before migration ensuring all possible Failures, Action Requirements, Required and Suggested Reviews are addressed beforehand.To run the Migration Readiness Check in EM CLI use the following command:

emcli migrate_db

-file="/home/migrations/DB_Migration.JSON"DB_Migration.JSON contains the following parameters:{

"action": "CHECK_MIGRATION_READINESS",

"type": "ONLINE_TTS",

"srcDatabaseInfo":

{

"targetName": "SRCDB",

"targetType": "oracle_database",

"dbCredName": "SYS_SRC:EM_ADMIN1",

"hostCredName": "HOST_SRC:EM_ADMIN1"

},

"destDatabaseInfo":

{

"targetName": "DESTDB",

"targetType": "oracle_database",

"dbCredName": "SYS_DEST:EM_ADMIN1",

"hostCredName": "HOST_DEST:ADMIN1"

}

}View the Migration Readiness Report

To view the Migration Readiness report in EM CLI use the following command:

emcli migrate_db

-file="/home/migrations/DB_Migration.JSON"DB_Migration.JSON contains the following parameters:{

"action": "GET_MIGRATION_READINESS_REPORT",

"executionId":"<DP_Execution_ID>"

}For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Perform a Migration Readiness Check with REST API

The following is sample code to perform a Migration Readiness Check with REST API on a Transportable Tablespace (TTS) based migration. To run the Migration Readiness Check Enterprise Manager 13 Release 5 update 15 (13.5.0.15) and above is required.

Run the Migration Readiness Check Tool

Before starting a TTS based migration it is strongly suggested you run the Migration Readiness Check Tool for TTS migrations is available from Oracle Enterprise Manager 13 Release 5 Update 15 (13.5.0.15) onward. This is a modern in house robust tool that will give you a detailed analysis of your databases before migration ensuring all possible Failures, Action Requirements, Required and Suggested Reviews are addressed beforehand. Use this REST call to enter the migration parameters:| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Payload | |

| Response | {

"statusCode": 200,

"status": "SUCCESS",

"message": "....",

"submissionName": ".....",

"submissionBy": "SYSMAN",

"submissionId": "<Unique Submission ID>",

"executionId": "<Unique Execution ID>",

"uiActivityUrl": "/em/faces/core-jobs-procedureExecutionTracking?executionGUID=<Submission ID>",

"restActivityUrl": "/em/websvcs/restful/db/migration/activities/<Submission ID>"

} |

View the Migration Readiness Report

| Feature | Description |

|---|---|

| Request Method | Post |

| URI | |

| Header | |

| Payload | |

| Response | {

"statusCode": 200,

"status": "SUCCESS",

"message": "....",

"submissionName": ".....",

"submissionBy": "SYSMAN",

"submissionId": "<Unique Submission ID>",

"executionId": "<Unique Execution ID>",

"uiActivityUrl": "/em/faces/core-jobs-procedureExecutionTracking?executionGUID=<Submission ID>",

"restActivityUrl": "/em/websvcs/restful/db/migration/activities/<Submission ID>"

} |

Analyze Migration Activities

Database Migration Workbench offers robust tools for monitoring and troubleshooting your recently complete migrations. Database Migration Workbench also offers clean up tools that aid in recovering important disk space from dump files.

The section covers the following topics

Database Migration Activities Dashboard Screen

- All: Migrations occurred in the last 24 hours (default), 7 days or all.

- Completed: Completed migrations in the last 24 hours

- In Progress: Migrations currently in progress

- Problem: Migrations that encountered problems in the last 24 hours

- Scheduled: Migrations scheduled to occur in the next 24 hours

- Warnings: Migrations that completed with warnings in the last 24 hours

Note:

You can modify the dashboard views by selecting the time range in View Data to 7 Days or All on the top right corner of the dashboard.The Migration Activity table, lists detailed information for each specific migration including Activity, Status, Source, Destination, Start, End, Elapsed Time and Owner.

- Cleanup: Deletes the dump files created during the migration from the Data Pump directory and Object Storage. For more information on Cleanup see: Clean Up Migration Files.

Note:

The Cleanup activity will not remove, cancel, rollback or stop an ongoing migration. - View Details: Takes you to a new screen with specific details for the selected Activity. You can see specific steps and results for each step in this view.

- View Analysis: Takes you to a new screen with Input Validation, Pre-migration and Post-migration analysis dashboards with detailed information allowing you to drill down to specific validations and schema to best analyze performance for your migrated database.

- Input Validation: Carries out pre-check validations for the source and destination databases, login, database status, disk space, and privileges. Validation are carried out during the input or run-time stage of every migration job submitted and display severity-wise results. Database administrators can then take action on all issues reported by the Validation report.

Once all issues reported by the Input Validation are resolved, click Submit to migrate.

- Pre-migration Analysis: Performs an analysis of the source database with severity-wise result breakdown. Each result includes a recommendation and action item to be performed to fix the issue detected. The table below shows a breakdown of severity and required action on your part.

- Post-migration Analysis: Performs a comparison of the source database and migrated database object and lists a detailed analysis with a severity wise result breakdown. Post migration analysis checks if any scheduled DBMS or CRON jobs need to be rescheduled, object counts and status, object record counts, character set, time zone, and size of database. All elements that need to be validated by a database administrator prior to the database being released to for testing.

Table 3-1 View Analysis Results Severity Breakdown

Severity Level Action Failed The source and destination databases selected are not compatible or suitable for migration and need to be changed or the particular validation could not be completed due to internal errors. Action Required Validation failed, review and take action to resolve the issue. The migration will fail if the issue is not corrected before submission. Review Required Warning message, review and if necessary take action to correct. Migration may be impacted. Review Suggested Informational message, read and review. Passed Validation succeeded, you can proceed with the migration. - Input Validation: Carries out pre-check validations for the source and destination databases, login, database status, disk space, and privileges. Validation are carried out during the input or run-time stage of every migration job submitted and display severity-wise results. Database administrators can then take action on all issues reported by the Validation report.

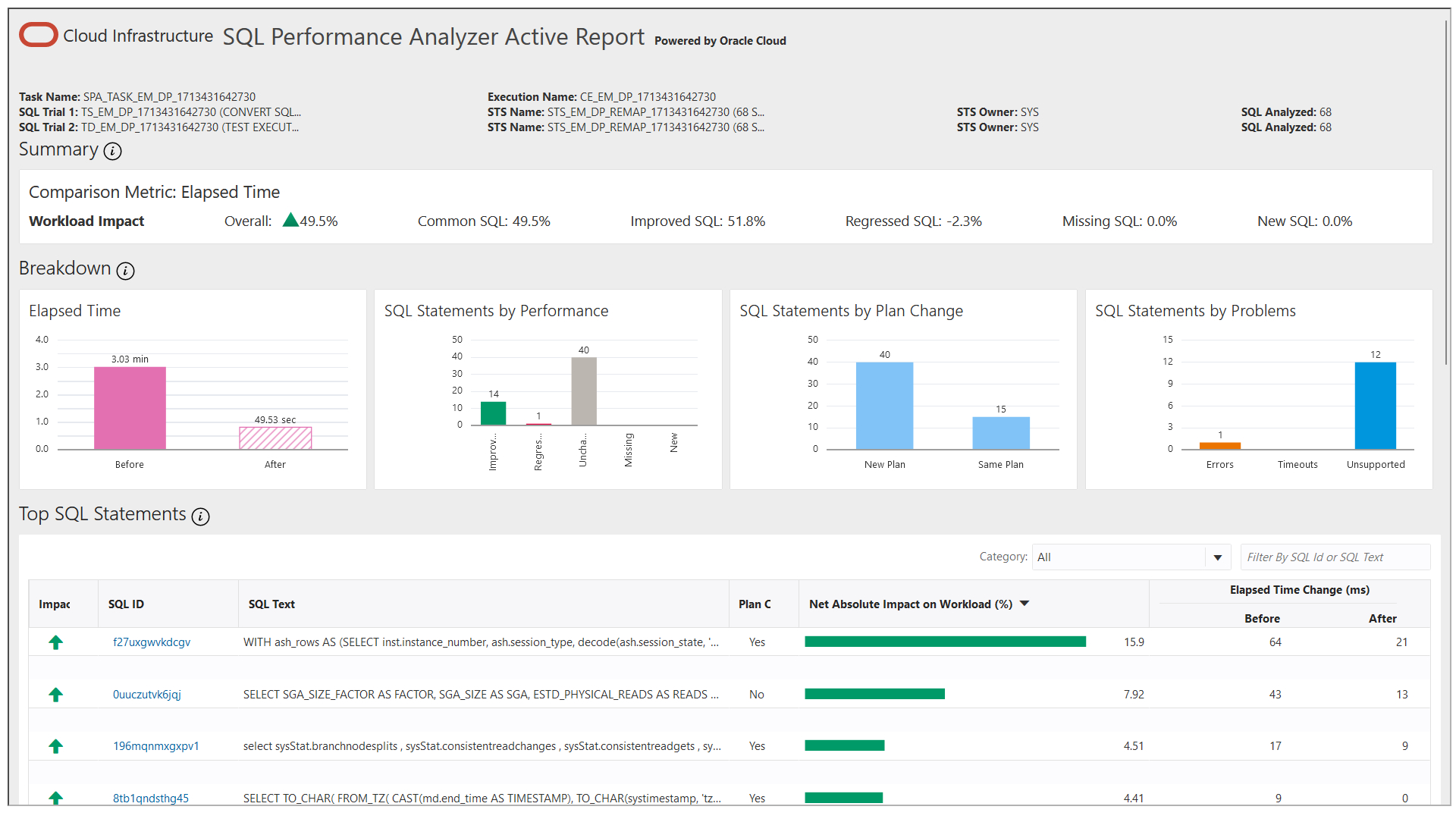

- View Performance: In this screen you can compare database performance from both the source database and the destination database with the SPA report. For more information see: Analyze Migrated Database Performance

Note:

For ADB and ATP migrations, an additional Compare Performance option will be shown with a second HTML based report, this is due to system constraints. - Mark as Complete: Migration activities that encounter warnings are reported with Status Completed with Errors, you can review and can mark the migration as Completed. For more information see: Review Migration Status and Complete Migration

Analyze Migrated Database Performance

Migrating a database can change the execution plans of SQL statements improving performance. Migrating to Oracle Cloud changes the platform on which database workloads are running. Oracle Cloud Infrastructure provides a high performing platform to run a database workload.

Note:

- When running SPA make sure to export your schema as it is needed to stage the tuning set. Failure to do so will generate an error while performing validations.

- SPA is not applicable for Metadata only migrations using Data Pump.

An SQL Tuning Set (STS) containing the SQL and relevant execution metadata from the source database can be passed to the migration procedure. A SPA task will be created which can then be used to compare the change in SQL Performance due to migration.

Should you need to use out-of-the-box performance management provided by the Autonomous Databases, Database Migration Workbench provides an option to verify performance by disabling indexes. You can then choose to disable indexes and let the Autonomous Database handle performance tuning. For more information see: Managing Auto Indexes in Oracle Database Administrator’s Guide .

View a SPA Report

Follow these steps to view a SPA report of a specific migration with Database Migration Workbench:Note:

When opening a SPA report you may be prompted to log in to the destination database, if you have not done so previously.

- Navigate to the Migration Activities Dashboard.

- Locate a recently completed Migration Activity you wish to review within the Migration Activity table.

- Click on the Action Menu located at the far left of the specific Migration Activity you want to analyze and click on View Performance, this will open the SPA Report.

- In the SPA report you can verify the following:

- Compare elapsed time by workload impact, allowing you to review the overall time, improved, regressed, missing and new SQL statements.

- Within the Breakdown you can review elapsed time, SQL statements by performance, SQL statements by plan change, and SQL statements by problems.

- Additionaly the report shows a listing of SQL statements by workload impacts

View a Migration Analysis

Follow these steps to view an analysis of a specific migration with Database Migration Workbench:- Navigate to the Migration Activities Dashboard.

- Locate a recently completed Migration Activity you wish to review within the Migration Activity table.

- Click on the Action Menu located at the far left of the specific Migration Activity you want to analyze and click on View Analysis.

- In the View Analysis dashboard screen you can analyze the results from the three main steps:

- Validation: Shows the results of the pre-migration checks.

- Export (data pump only): Shows the amount of successfully and unsuccessfully exported database objects.

- Import (data pump only): Shows the amount of successfully and unsuccessfully imported database objects.

View SQL Performance Using REST API

Obtain the SPA Report Using REST API

Note:

When viewing SQL performance for a TTS multi-phase migration using REST API, the execution ID to be used in the input payload should be that of the final complete migration step.| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Payload | |

| Response | {"report": "<html> <head> <title> SQL Performance Impact Analyzer Report </title> <style type=\"text/css\">

body, table, input, select, textarea {font:normal normal 8pt

Verdana,Arial;text-decoration:none;color:#000000;} .s8 {font-size:8pt;color:#006699} .s9

{font-size:10pt;color:#006699} .s10 {font-size:14pt;color:#006699;} .s16 {border-width :

1px; border-color : #CCCC99; border-style: solid;color:#006699;font-size:8pt;} .s17

{border-width : 1px; border-color : #CCCC99; border-style: solid;font-size:8pt;} .s27

{border-width : 1px; border-color : #CCCC99; border-style: solid;} </style> </head> <body> <a

name=\"general\"/> <a href=\"#general\" style=\"text-decoration:none\"> <font

class=\"s10\"> General Information </font> </a>....

} |

Run SQL Performance Analyzer Using EM CLI

The following is an example on how to run SQL Performance Analytics (SPA) on EM CLI.

Run SPA Using EM CLI

emcli migrate_db

-file="/home/migrations/DB_SPA.JSON"DB_SPA.JSON contains the following parameters:"analyzePerformance":

{

"useSPA": true,

"createSTS": true,

“usePreferredSTS”: false —optional

“stsName”: “stsname01” —optional

“stsOwner”: “stsOwnedSchema” —optional

"stsOwner": "EXPUSER" --optional

}View SPA Report Using EM CLI

emcli migrate_db

-file="/home/migrations/DB_SPA.JSON"DB_SPA.JSON contains the following parameters:

Note:

When viewing SQL performance for a TTS multi-phase migration using EMCLI, the execution ID to be used in the input JSON file should be that of the final complete migration step.{

"action": "SPA_REPORT",

"executionId": "<ID>",

"reportType": "html",

"reportLevel": "typical",

"reportSection": "ALL"

}For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Analyze a Migration Activity Using REST API

You can review the migration analysis for a recent Migration Workbench activity using REST API, see the sample file below.

| Feature | Description |

|---|---|

| Request Method | POST |

| URI | |

| Header | |

| Payload | |

| Response | {

"validationResults": {...},

"exportResults": {...},

"importResults": {...},

"status": "Succeeded",

"migrationMethod": <DATAPUMP/ONLINE_TTS>

} |

Analyze a Migration Activity Using EM CLI

You can review the migration analysis for a recent Migration Workbench activity using EM CLI.

Migration Analysis Using EM CLI

emcli migrate_db

-file="/home/migrations/DB_ANALYZE.JSON"DB_SPA.JSON contains the following parameters:{

"action": "ANALYZE_ACTIVITY",

"executionId": "<DP execution ID>"

}For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Review Migration Status and Complete Migration

As part of the Analyze section of the Database Migration work cycle, you can review completed migrations to verify any errors or warnings. When satisfied with the migration results you can also mark migrations as Complete.

- Navigate to the Migration Activities Dashboard.

- Locate a recently completed Migration Activity you wish to review within the Migration Activity table. You can further filter migration activities by clicking on the different Migration Activities statuses:

- All: Shows all migrations including scheduled and in progress migrations.

- Completed: Shows all migrations that successfully completed. These will be marked as completed.

- Problems: Shows migrations that completed with errors.

- Warnings: Shows migrations that completed with warnings.

- On the Migration Activities Dashboard click on the left hand navigation menu specific to the reviewed activity, click View Details to review procedure details, analyze migration details and compare performance. Once you are satisfied that all warnings/errors reported are addressed return to the previous screen.

- On the Migration Activities Dashboard click on the left hand navigation menu specific to the reviewed activity, select Mark as Completed enter a comment in the pop up and click Yes. The Status of the Migration Activity will now show a blue check mark.

Note:

To reverse a status of a migration appearing as Completed, click on the Migration Activities Dashboard click on the left hand navigation menu specific to the reviewed activity and select Revert Status.

Review Migration Status Using REST API

As part of the Analyze section of the Database Migration work cycle, you can review, and mark as Complete, completed migrations to verify any errors or warnings.

Note:

POST can be used if user wants the OMS to pull the files from the Host. The Host needs to be discovered in Enterprise Manager.

Table 3-2 Review all Migration Activites

| Feature | Description |

|---|---|

| Request Method | Post |

| URI | |

| Header | |

| Payload | |

| Response | |

Table 3-3 Review a Specific Migration Activity

| Feature | Description |

|---|---|

| Request Method | Post |

| URI | |

| Header | |

| Payload | |

| Response | The generated Response will be a summary of the specific Migration Activity giving you a status of Failed, Completed with Errors or Completed and the specific details for each step.

|

Table 3-4 Complete a Migration Activity

| Feature | Description |

|---|---|

| Request Method | Post |

| URI | |

| Header | |

| Payload | |

| Response | |

Table 3-5 Revert Status for a Migration Activity

| Feature | Description |

|---|---|

| Request Method | Post |

| URI | |

| Header | |

| Payload | |

| Response | |

Review Migration Status Using EM CLI

As part of the Analyze section of the Database Migration work cycle, you can review completed migrations to verify any errors or warnings. To review migrations with EM CLI use the following:

Review Migration Status

emcli migrate_db

-file="/home/migrations/Analyze_Migration.json"Analyze_Migration file contains the following JSON payload format to review a list of activities:

{

"action": "LIST_ACTIVITIES",

"targetName": "<Source target/Destination database>",

"targetType": "<Target type of the database>"

"filters": {

"activityStatus": "ALL | IN_PROGRESS | PROBLEM | SCHEDULED | COMPLETED"

"timePeriod": "<startTimestamp>", "<endTimestamp>"

}

}Analyze_Migration file contains the following JSON payload format to review a specific activity: {

"action": "VALIDATION_RESULTS",

"executionId": "<Execution_ID>"

}Mark a Migration Activity as Complete and Revert Status

emcli migrate_db

-file="/home/migrations/Complete_Migration.json"Complete_Migration file contains the following JSON payload format to mark a migration as Complete:{

"action": "MARK_ACTIVITY_AS_COMPLETED",

"executionGuid": "<activity_execution_GUID>",

"comment":"<optional_user_comment>",

}{

"action": "MARK_ACTIVITY_AS_COMPLETED",

"executionGuid": "<activity_execution_GUID>",

"comment": "<optional_user_comment>",

"revertStatusChange": "true"

}Clean Up Migration Files

As part of the Analyze section of the Database Migration work cycle, you can clean up dump files with ease in the Database Migration Workbench. The Cleanup operation will identify the exported dump files for the migration activity and delete them from the Dump Directory where they were exported and the Object Storage where they were uploaded. The cleanup operation can be used to remove dump files for completed or failed database migrations.

Note:

Cleanup will not rollback the destination database or drop the PDB (if a new PDB was created) at this time.Clean Up Using the Database Migration Workbench

Follow these steps to cleanup database dump files after a migration with the Database Migration Workbench:- To begin cleanup, navigate to the Migration Activities Dashboard.

- Locate the specific Migration Activity to be cleaned up within the Migration Activity table.

- Click on the left hand hamburger menu specific to the activity, select Cleanup and click on Yes.

Note:

The Cleanup activity will not remove, cancel, rollback or stop an ongoing migration.Perform Migration Activity Cleanup Using REST API

After a migration has successfully completed it is recommended you run a Cleanup operation to free resources in your environment.

| Feature | Description |

|---|---|

| Request Method | |

| URI | |

| Header | |

| Payload | |

| Response | {

"statusCode": 200,

"status": "SUCCESS",

"message": "Successfully submitted the requested activity: Cleanup Database Migration",

"validations": {

"resultsList": [],

"result": false

},

"submissionName": "Cleanup Database Migration Tue Aug 10 2021 06:09:01 UTC",

"submissionBy": "SYSMAN",

"submissionId": "C92F546493DC1569E0538F0B4B6409E9",

"executionId": "C92F546493DF1569E0538F0B4B6409E9",

"restActivityUrl": "/em/websvcs/restful/db/migration/activities/C92F546493DF1569E0538F0B4B6409E9",

"uiActivityUrl": "/em/faces/core-jobs-procedureExecutionTracking?executionGUID=C92F546493DF1569E0538F0B4B6409E9"

} |

Perform Migration Activity Cleanup Using EM CLI

After a migration has successfully completed it is recommended you run a Cleanup operation to free resources in your environment.

Cleanp Using EM CLI

emcli migrate_db

-file="/home/migrations/DB_Migration.JSON"DB_Migration.JSON contains the following parameters:{

"action": "CLEANUP_ACTIVITY",

"executionId": "<Execution Id>"

}For more information on migrate_db and the accepted parameters required for migration, see: migrate_db in Oracle Enterprise Manager Command Line Interface .

Troubleshoot the Migration Process

Database Migration Workbench reviews and informs at several steps during the process that all prerequisites, requirements, databases, and schema are met, available, and ready. Should an issue or error come up during the Database Migration Workbench process this section can help you troubleshoot your migration.

| Issue | Reason | Category | Workaround | Database Version |

|---|---|---|---|---|

| Upload dump file step generates error because the agent is down. | The agent may have run out of memory. Review the log files. | EM Agent | You can update the degree of parallelism within the failed step to a lower number and retry. Pending files will upload. | All versions |

| Creation of Table Space fails at destination Autonomous Database | Autonomous Databases do not allow Table Space creation with small Data Files | ADB | Create a tablespace at the destination ADB with a large data file and specify remap_tablespace.

|

All versions |

| Creation of schema fails at destination ADB | The user profile does not get created at the destination ADB, causing schema creation failure.

Data Pump does not export Profiles when not run in |

ADB | Pre-create profile at the ADB, or use remap_schema.

Example:

|

All versions |

Export failed because of error: DETERMINE_FATAL_ERROR with ORA-20005: object statistics are locked (stattype = ALL) |

Tables used for statistic gathering are blocked. | Data Pump | Unlock the tables for statistics using the command: SQL> exec dbms_stats.unlock_table_stats('owner', 'tablename');To get a list of tables that are locked you can execute: |

All versions |

Data remapped to the USERS table space might not get imported.

|

USERS table space is missing at the destination database.

|

On Premise Migration | Create the USERS table space and retry. Alternative solution is to remap to an existing available table space that can replace USERS.

|

All versions |

While moving the data to a new Pluggable Database (PDB), the data remapped to USERS tables pace might not get imported.

|

USERS table space failed to create in the new PDB.

|

Migration into a new PDB. | Remap to a SYSTEM table space with some caveats or select an existing PDB.

|

All versions |