1 Database Migration Planner

Database Migration Planner provides a comprehensive end-to-end solution for managing database consolidation. It enables you to match managed sources you want to consolidate with new or existing destinations. Database Migration Planner supports the following combinations:

- Consolidate source databases (single instance or RAC) to fewer destination databases, using the database to database (D2D) consolidation type (consolidation to multi-tenant). Destinations can be existing databases (both non-CDB and CDB) or new databases on new servers, which can be Oracle Exadata Database Machines, Oracle Compute Cloud shapes, or generic servers.

- Consolidate source databases (single instance or RAC) to fewer servers where the number of databases stays the same, using the database to server (D2S) consolidation type. Destinations can be existing servers or new servers, which can be Oracle Exadata Database Machines, Oracle Compute Cloud shapes, or generic servers.

- Migrate source databases to another server with more resources. You can perform consolidation at a later time.

Database Migration Planner uses database metrics in assessing resource requirements for database consolidation. You must have Oracle Enterprise Manager 13.5 Release 5 (13.5.0.5) Database Plug-in or later to collect the database metrics Database Migration Planner uses.

The following sections describe Database Migration Planner:

- Overview of Database Migration Planner

- Creating a Database Migration Planner Project

- Creating a Database Migration Planner Scenario

- Other Database Consolidation Scenario Creation Options

- Evaluating Database Consolidation Scenarios

- About the Advisor Feature of Database Migration Planner

- About Compression Advisor

- Estimating Compressed Storage Requirements

- Assessing the Performance Impact of Database Migration on SQL Workload

Overview of Database Migration Planner

Database Migration Planner offers flexibility for various customer scenarios:

-

Consolidate 10.2 and higher database versions.

-

Consolidate to Oracle private or public cloud or to Exadata.

-

Use high availability options to minimize downtime, subject to source and destination database platform and version.

Database Migration Planner takes the guess work out of consolidation by basing analysis on historical workload (database and host metrics). It eliminates human error by automating all phases of consolidation from planning to deployment.

Consolidation optimization advice enables you to estimate resource allocation under various consolidation scenarios, from ultra conservative, representing a peak maximum load, to aggressive, measured as the average daily usage per hour. Use optimization advice to identify conflicts based on workload characteristics and Exadata suitability. Assess the impact of compression on I/O and storage, including I/O offloading and Flash Cache technology.

As servers have become more and more powerful, with much greater workload capacity, enterprises with many small databases running on different servers find that their servers are greatly underutilized. Their aim is to consolidate these small databases onto fewer servers, thereby reducing their hardware purchasing cost and ongoing maintenance expenditures.

At the same time, database customers, in assessing their performance needs, want to evaluate resource utilization over a total specified time period to ensure meeting peak demand.

When weighing source I/O requirements to determine needed destination capacity, the key is I/O capacity of external storage units of Oracle Engineered Systems such as Exadata and Exalogic typically shared by databases.

Database Migration Planner collects detailed storage information regarding total space allocated and total space used for each source database by segment type (Table, Index, LOB, Other). It then estimates how much space can be saved by compressing the data. Depending on the segment type and database version, estimates are given for several types and levels of compression.

-

At the project level, Database Migration Planner displays data storage requirements by database and segment type, with compressed and uncompressed values.

-

At the scenario level, Database Migration Planner recommends a specific type and quantity of Exadata external storage system, based on the requirement and customer input with regard to compression and other storage options.

Database Migration Planner collects the following I/O requirements:

-

I/O requests per second (IOPS)

-

I/O bandwidth (MB/Second)

Database Migration Planner fits the requirements to external storage unit I/O capacity, using the ratio of I/O bandwidth to IOPS to characterize each source database workload as either OLTP or DSS. These findings determine whether Database Migration Planner considers IOPS, I/O bandwidth, or both when consolidating to external storage. Note, however, that users can override these recommendations.

Thus, when consolidating databases, Database Migration Planner attempts to fit CPU and memory capacity to the destination, but not database storage and I/O capacity.

Creating a Database Migration Planner Project

You create a database consolidation project for each consolidation effort, then add individual consolidation scenarios within it. You can then compare consolidation scenarios to determine which consolidation strategy makes the most sense.

After the project is defined, a job is submitted to collect available data for the specified targets from the Management Repository. Once the job finishes, the project becomes an active project. As long as the project is in an active state, data collection will continue.

Creating a project involves the following steps:

Selecting the Database Migration Project Type

Complete Step 1 of the database migration project creation process as follows:

Specifying Database Source Candidates

Complete Step 2 of the database consolidation project creation process as follows:

Specifying Database Destination Candidates

Complete Step 3 of the database consolidation project creation process as follows:

Setting Data Collection Parameters

Specify the duration over which data used to generate database consolidation scenarios will be collected for the source databases specified in the project. This data will be used to determine the resource requirements that a destination server or database must meet.

Choosing a Pre-configured Scenario

When creating a database consolidation project, you can optionally choose to generate pre-configured consolidation scenarios to add to the project.

These scenarios are generated using data collected for the sources defined in the consolidation project at the time the project is created. If insufficient data is available when the project is created, the pre-configured scenarios will be automatically generated once the minimum amount of data has been collected.

Enterprise Manager Consolidation ships with three out-of-the-box scenarios that represent aggressive, medium, and conservative consolidation schemes.

The pre-configured scenarios will be generated when the project is created using data collected for the databases defined in the consolidation project.

You can also opt to create your own custom scenario once the consolidation project has been completed.

Reviewing and Saving the Database Consolidation Project

Review the specifics of the database consolidation project. Use the Back button to return to a given step to make changes. If satisfied, click Submit. A message confirms that the project has been created and the job has been submitted.

Once the project is created, it will show up in the Consolidation console. Consolidation scenarios can then be defined for this project.

Creating a Database Migration Planner Scenario

You can create custom database consolidation scenarios instead of or in addition to using the pre-configured scenarios. Multiple scenarios can be created within a project, enabling you to compare different scenarios before deciding on a solution. New consolidation scenarios are created within an existing consolidation project.

Note:

Because a scenario can include a subset of all databases, in this situation it can be considered somewhat separate from a project.You can create consolidation scenarios for planning purposes. You also can implement the scenario, which enables you to perform the database migration on-demand.

Creating a scenario involves the following steps:

Setting Up the Scenario

Complete Step 1 of the database consolidation scenario creation process as follows:

Defining Constraints for Database Consolidation

Specify business, corporate or technical constraints that must be enforced. Constraints can guide the allocation process during automatic source-to-destination mapping. For manual mappings between sources and destinations, constraints can calculate violations.

Complete Step 2 of a database consolidation scenario as follows:

Mapping Database Sources to Destinations

Next, map the database sources to the destinations they will be consolidated to. The objective is to fit source requirements with each destination's available resources as tightly as possible.

Oracle recommends that you allow Database Migration Planner to perform this mapping automatically. This will allow the tool to maximize resource utilization of destinations based on resource capabilities and the various consolidation constraints specified. If you use manual mapping, the source will be mapped to the destination even if the destination lacks sufficient capacity. In addition, manual mapping may violate previously declared constraints.

When you have chosen existing destinations, you can optionally map each source and destination manually:

Reviewing and Saving the Database Consolidation Scenario

Finally, review the various parameters set in the new database scenario. Use the Back button if you need to make changes; otherwise, proceed as follows:

-

Optionally, you can save the scenario as a template, which can then be shared with other users. If you want to do this, click Save Scenario as a Template. In the dialog that opens, browse to a location in the local file system and save the template as an XML file.

-

Click Submit. A message appears confirming that a job has been submitted for further analysis of the scenario. Results appear at the bottom of the Consolidation home page when done.

Other Database Consolidation Scenario Creation Options

You can create a database consolidation scenario based on an existing scenario. Select an applicable scenario under a consolidation project and then select Create Like Scenario from the Actions menu. Modify the scenario as desired, provide a meaningful name, and submit for analysis as usual.

If you saved a scenario as a template, you can subsequently import the scenario into another environment.

Evaluating Database Consolidation Scenarios

You can view details for your consolidation scenarios using the Consolidation console. After evaluating the consolidation scenario results, you can define different scenarios as well as rerun existing scenarios to re-evaluate them based on the previously specified conditions with the latest available data. The results of the previous analysis will be over-written. You can also create a new scenario based on an existing scenario, where you tweak certain values to customize the new scenario. This iterative process helps you obtain the optimized consolidation scenario which is generated by compromising various factors and weighing different trade-offs.

Compare the consolidation scenarios you create to determine which consolidation strategy best meets your requirements.

Your objective is to:

-

Match source resource requirements with destinations best able to meet those requirements.

-

Ensure that the destination's available capacity can accommodate the combined calculated workloads of all source databases.

-

Provide room for growth on destinations by allowing for headroom as a factor of resource requirements.

-

Optionally balance the source workload across all available destinations.

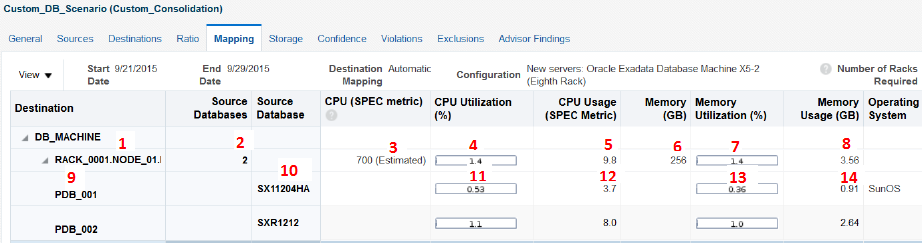

Figure 1-1shows the mapping for a simple database-to-database consolidation using the default phantom destination (clustered CDB/PDB). The figure illustrates the key points of mapping as explained in Table 1-1.

Figure 1-1 Database Consolidation Mapping

Table 1-1 explains the key points of mapping as annotated in Figure 1-1.

Table 1-1 Legend for Figure 1-1

| Reference Point | Explanation |

|---|---|

|

1 |

Name of rack, compute node, and consolidated database instance |

|

2 |

Number of databases consolidated to this instance |

|

3 |

CPU capacity of compute node |

|

4 |

Total CPU utilization (%) for this compute node, including CPU utilization for existing destinations when applicable |

|

5 |

Total CPU usage for this compute node |

|

6 |

Memory capacity of the compute node |

|

7 |

Total memory utilization (%) for this compute node, including utilization for existing destinations when applicable |

|

8 |

Total memory usage for this compute node |

|

9 |

Name of the pluggable database |

|

10 |

Name of source database consolidated to this PDB |

|

11 |

Percentage of compute node's CPU consumed by this source database |

|

12 |

CPU usage for this source database on the compute node |

|

13 |

Percentage of compute node's memory consumed by this source database |

|

14 |

Memory usage for this source database on the compute node |

About the Advisor Feature of Database Migration Planner

The Advisor gathers database performance data from the Automatic Workload Repository (AWR) and uses the data as input to rules. The rules are evaluated to determine if performance bottlenecks exist in the source or destination databases, and provide advice on how to relieve the problem. At the scenario level, the rules also look at source databases in combination as well as at destination specifications to determine if the consolidation might experience performance problems.

Advisor findings are viewable for both projects and scenarios, as a column on the Database Migration Planner home page, and as a tab in the respective project and scenario details region.

About Compression Advisor

The Compression Advisor estimates how much space compression savings each source database can potentially benefit from for different types of supported compression types, and calculates how much space the uncompressed data would require. The results appear on the Storage tab of a database consolidation scenario. You can also specify how to compress data in the destination, so that reductions can be applied when determining how much storage will be needed. To enable the estimation of compression, you have to deploy a job to each source database and run compression advice to make the results available to Database Migration Planner.

Estimating Compressed Storage Requirements

If you want to factor in compression ratios on source databases as part of a database consolidation, you have to submit a Deploy Database Migration Planner Packages job to gather compression advice on each source. You can do this beforehand or following project creation.

The result of these actions makes estimated compression ratios available to Database Migration Planner. Note that it may take up to 24 hours after compression advice is run for the metrics to collect the data into Enterprise Manager for scenario analysis.

Database Migration and Encrypted Tablespace

If a source database uses encrypted tablespace, you will not be able to access data in these tables on the destination following the migration until you copy the wallet from the source location to a location on the destination. You can find the wallet location in the sqlnet.ora file. The default location of the file is based on the TNS Admin or ORACLE_HOME/network/admin location.

After you copy the wallet to the destination, update the sqlnet.ora file on the destination with the location where you copied the wallet. For example:

ENCRYPTION_WALLET_LOCATION =

(SOURCE =

(METHOD = FILE)

(METHOD_DATA =

(DIRECTORY = /scratch/jdoe/app/jdoe/admin/dben/wallet)

)

)

After updating the file, you have to open the wallet before you can access data in the encrypted tablespace.

- From the Targets menu, select Databases, then search for the destination database.

- On the destination database home page, select Transparent Data Encryption from the Security menu.

- Open the wallet by providing the requisite password.

You can now access data in the encrypted tablespace.

For detailed information on encryption, wallets, and other security-related issues, see the Oracle Database Advanced Security Guide.

Assessing the Performance Impact of Database Migration on SQL Workload

System changes such as migrating a database may cause changes to execution plans of SQL statements, resulting in a significant impact on SQL performance. You can analyze performance impact of database migration on SQL workload using SQL Performance Analyzer to identify the SQL statements that have improved, remained unchanged, or regressed after the system change.

The typical flow to assess the performance impact on SQL workload using SQL Performance Analyzer is as follows:

- Capture the SQL workload that you intend to analyze and store it in a SQL tuning set.

- Pre-system change, create a SQL Performance Analyzer task.

- Build the pre-change SQL trial by test executing or generating execution plans for the SQL statements stored in the SQL tuning set.

- Perform the system change.

- Build the post-change SQL trial by re-executing the SQL statements in the SQL tuning set on the post-change system.

- Generate a report to identify the impact of change on the SQL statements.

- View the SQL Performance Analyzer report to compare pre- and post-change SQL performance. You can access the report from the destination database home page by selecting SQL then SQL Performance Analyzer Home from the Performance menu.

As noted, this is the typical flow to use SQL Performance Analyzer. In the case of a database migration, you only need to perform the first and last steps listed above—create the SQL tuning set and view the report. The migration job does the rest:

-

Creates the SQL Performance Analyzer task.

-

Builds the pre-change SQL trial.

-

Performs the database migration.

-

Builds the post-change SQL trial.

-

Generates the report.

For detailed information on using the feature, see "Part I SQL Performance Analyzer" in the Oracle Database Testing Guide.