4.5 Collecting Operating System Resources Metrics

Cluster Health Monitor (CHM) and System Health Monitor (SHM) are both high-performance, lightweight daemons that collect, analyze, aggregate, and store a large set of operating system metrics to help you diagnose and troubleshoot system issues.

Why CHM or SHM is unique

| CHM or SHM | Typical OS Collector |

|---|---|

| Last man standing - daemon runs memory locked, RT scheduling class ensuring consistent data collection under system load. | Inconsistent data dropouts due to scheduling delays under system load. |

| High fidelity data sampling rate, 5 seconds. Very low resource usage profile at 5-second sampling rates. | Running multiple utilities creates additional overhead on the system being monitored, and worsens with higher sampling rates. |

| High Availability daemon, collated data collections across multiple resource categories. Highly optimized collector (data read directly from the operating system, same source as utilities). | Set of scripts/command-line utilities, for example, |

| Collected data is collated into a system snapshot overview (Nodeview) on every sample, Nodeview also contains additional summarization and analysis of the collected data across multiple resource categories. | System snapshot overviews across different resource categories are very tedious to collate. |

| Significant inline analysis and summarization during data collection and collation into the Nodeview greatly reduces tedious, manual, time-consuming analysis to drive meaningful insights. | The analysis is time-consuming and processing-intensive as the output of various utilities across multiple files needs to be collated, parsed, interpreted, and then analyzed for meaningful insights. |

| Performs Clusterware-aware specific metrics collection (Process Aggregates, ASM/OCR/VD disk tagging, Private/Public NIC tagging). Also provides an extensive toolset for in-depth data analysis and visualization. | None |

- Comparing CHM and SHM: Understanding their fundamental differences

This topic outlines the purpose and usage of Cluster Health Monitor (CHM) and System Health Monitor (SHM). - Additional Details About System Health Monitor (SHM)

System Health Monitor (SHM) is integrated and enabled by default in AHF. AHF now includes the SHM files in its diagnostic collection. - Collecting Cluster Health Monitor Data

Collect Cluster Health Monitor data from any node in the cluster. - Operating System Metrics Collected by Cluster Health Monitor and System Health Monitor

Review the metrics collected by CHM and SHM. - Detecting Component Failures and Self-healing Autonomously

Improved ability to detect component failures and self-heal autonomously improves business continuity. - CHM Inline Analysis

Inline Analysis is a new feature that will automatically execute on all systems where Autonomous Health Framework (AHF) is installed. - Integrating System Health Monitor (SHM) into AHF for Standalone Non-Root Installations

System Health Monitor (SHM) is a tool that collects essential operating system metrics for Oracle Support, especially valuable in diagnosing initial failures such as node evictions when Service Requests (SRs) are logged. - Creating an AHF Insights Report for Operating System Issues (Non-Root AHF Installation)

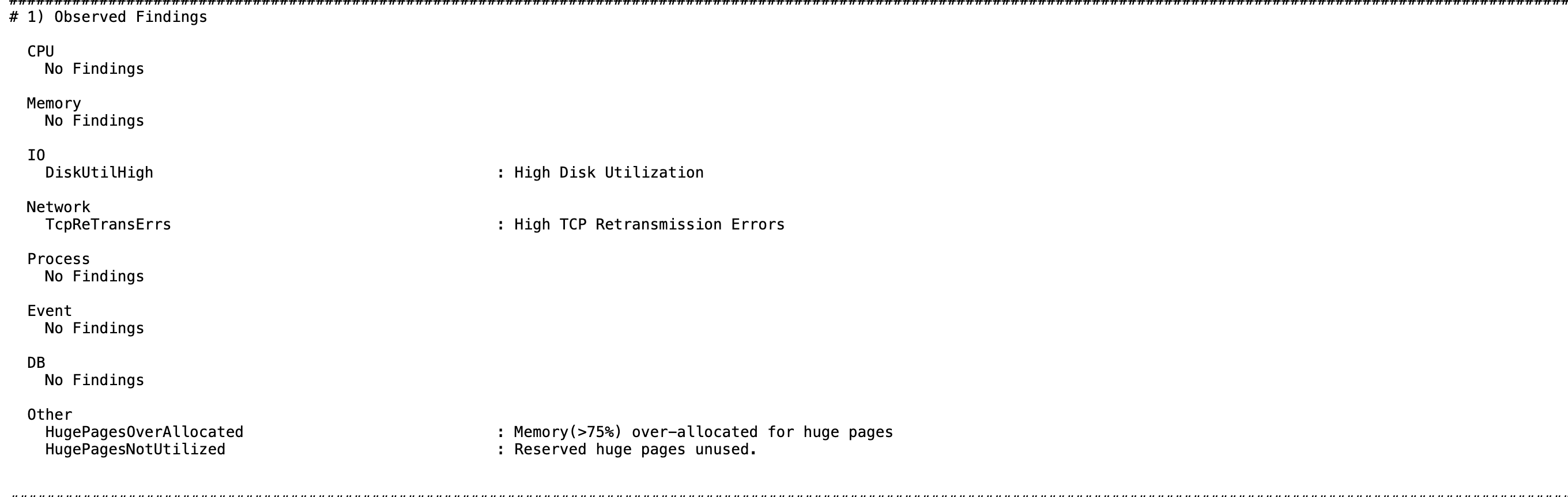

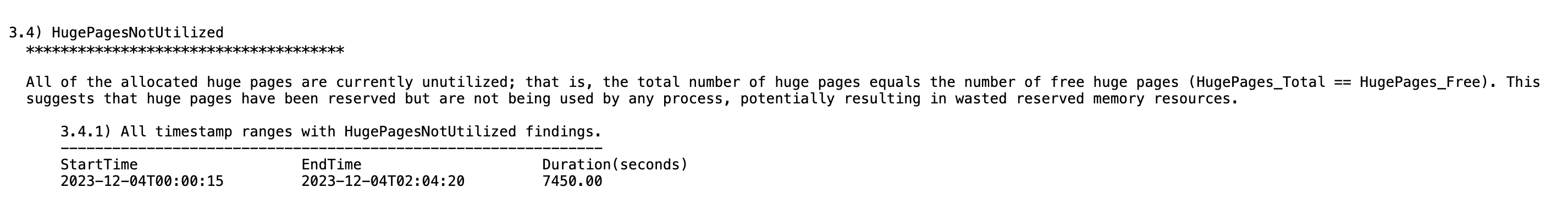

Create Insights reports from SHM data to proactively identify and investigate operating system-related issues in a non-root AHF environment. - Diagnostic Signature: HugePagesNotUtilized

A new diagnostic signature,HugePagesNotUtilized, has been introduced in AHF 25.11.


4.5.1 Comparing CHM and SHM: Understanding their fundamental differences

This topic outlines the purpose and usage of Cluster Health Monitor (CHM) and System Health Monitor (SHM).

| Cluster Health Monitor (CHM) | System Health Monitor (SHM) |

|---|---|

| Known as the system monitor daemon (osysmond), CHM is a real-time monitoring and operating system metric collection daemon that runs on each cluster node on RAC systems. | Known as System Health Monitor (ahf-sysmon), SHM is a real-time monitoring and operating system metric collection service available on Single-Instance Database and non-GI based systems. |

| Integrated and enabled by default as part of GI since 11.2. | Integrated and enabled by default as part of AHF 24.6. |

| Runs as system monitor service (osysmond) from GI home. | Runs as ahf-sysmon service from AHF home. |

| Managed as High Availability Services (HAS) resource within GI stack. | Managed as tfa-monitor resource within AHF stack. |

| Status of the resource can be queried using below command: | Status of the process can be queried using below command: |

| Generated operating system metrics are stored in the Metric Repository, which is auto-managed on the local filesystem. | Generated operating system metrics are stored in the Metric Repository, which is auto-managed on the local filesystem. |

| The generated operating system metrics are collected as part of tfactl diagcollect. | The generated operating system metrics are collected as part of tfactl diagcollect. |

| Supported on Linux, Solaris, AIX, zLinux, ARM64, and Microsoft Windows platforms. | Supported only on Linux platform. |


4.5.2 Additional Details About System Health Monitor (SHM)

System Health Monitor (SHM) is integrated and enabled by default in AHF. AHF now includes the SHM files in its diagnostic collection.

System Health Monitor (SHM) monitors operating system metrics in real time for processes, memory, network, I/O, and disk. Use it to troubleshoot system performance issues as they occur and to perform root cause analysis of past issues. SHM analysis is available in AHF Insights. For more information, see Explore Diagnostic Insights.

SHM operates as a daemon process that AHF starts and controls. It is enabled by default, but it is available only on Single-Instance Database and non-GI based systems.

You can also use the ahfctl statusahf command to check the status of System Health Monitor.

- To start SHM:

Run this command only if SHM has been stopped previously for some reason and needs to be switched back on.

ahf configuration set --property ahf.collectors.enhanced_os_metrics --value on

Running the command enables ahf-sysmon to be started. The TFA daemon then starts and monitors it.

- To stop SHM:

ahf configuration set --property ahf.collectors.enhanced_os_metrics --value off

Running the command checks whether ahf-sysmon is running. If it is, the command kills the process and stops ahf-sysmon.

- To verify the default value of SHM:

ahf configuration get --property ahf.collectors.enhanced_os_metrics

ahf.collectors.enhanced_os_metrics: on

- To verify the SHM process (ahf-sysmon) is active by default:

ps -fe | grep sysmon

root 3333453 1 0 22:44 ? 00:00:00 /opt/oracle.ahf/shm/ahf-sysmon/bin/ahf-sysmon

- To check SHM JSON files:

Locate the JSON files in the SHM data directory /opt/oracle.ahf/data/<hostname>/shm

- To check if SHM runs under the same cgroup of TFAMain or not:

-bash-4.4$ ps -ef | grep ahf-sysmon

root 3232 1 0 09:38 ? 00:00:47 /opt/oracle.ahf/shm/ahf-sysmon/bin/ahf-sysmon

testuser 155833 155678 0 17:04 pts/0 00:00:00 grep --color=auto ahf-sysmon

-bash-4.4$ cat /proc/3232/cgroup | grep "cpu"

8:cpu,cpuacct:/oratfagroup

4:cpuset:/

-bash-4.4$ ps -ef | grep tfa

root 1945 1 0 09:37 ? 00:00:02 /bin/sh /etc/init.d/init.tfa run >/dev/null 2>&1 </dev/null

root 2851 1 1 09:37 ? 00:05:21 /opt/oracle.ahf/jre/bin/java --add-opens java.base/java.lang=ALL-UNNAMED -server -Xms128m -Xmx256m -Djava.awt.headless=true -Ddisable.checkForUpdate=true -XX:+ExitOnOutOfMemoryError oracle.rat.tfa.TFAMain /opt/oracle.ahf/tfa

testuser 156073 155678 0 17:05 pts/0 00:00:00 grep --color=auto tfa

-bash-4.4$ cat /proc/2851/cgroup | grep "cpu"

8:cpu,cpuacct:/oratfagroup

4:cpuset:/

-bash-4.4$ cat /proc/3232/cgroup | grep "cpu"

8:cpu,cpuacct:/oratfagroup

4:cpuset:/

cat /proc/[PID_OF_AHF-SYSMON]/cgroup | grep "cpu"

cat /proc/[PID_OF_TFA]/cgroup | grep "cpu"

- To verify SHM files are collected in AHF collection:

- As prerequisite, run:

tfactl set smartprobclassifier=off

- Then run:

tfactl diagcollect -last 1h -tag shm_last_1h; unzip -l $REPOSITORY_ROOT/shm_last_1h/$HOSTNAME*.zip

A directory called SHM should be present in the generated zip file.

- And finally run:

tfactl diagcollect -last 1h

Archive: /opt/oracle.ahf/data/repository/collection_Wed_Apr_10_22_03_04_UTC_2024_node_all/test-node.tfa_Wed_Apr_10_22_03_03_UTC_2024.zip | grep SHM

Length Date Time Name

--------- ---------- ----- ----

327 04-10-2024 22:03 test-node/SHMDATA/shmdataconverter_3279258.log

6660 04-10-2024 22:03 test-node/SHMDATA/shmosmeta_1923000.json

43575 04-10-2024 22:03 test-node/SHMDATA/shmosmetricdescription.json

9561411 04-10-2024 22:03 test-node/SHMDATA/shmosdata_test-node_2024-04-10-2100.log

997193 04-10-2024 22:03 test-node/SHMDATA/shmosdata_test-node_2024-04-10-2200.log
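
The cgroup comparison above can also be scripted. The following is a minimal sketch, not part of AHF: it assumes the cgroup v1 line format shown in the sample output (`hierarchy:controllers:path`), and the function names `cpu_cgroup_paths` and `same_cpu_cgroup` are hypothetical helpers introduced only for illustration.

```python
def cpu_cgroup_paths(cgroup_text: str) -> set:
    """Return the cgroup paths of lines whose controller list includes 'cpu'.

    Assumes cgroup v1 lines such as '8:cpu,cpuacct:/oratfagroup'.
    """
    paths = set()
    for line in cgroup_text.splitlines():
        parts = line.strip().split(":", 2)
        if len(parts) != 3:
            continue  # skip blank or malformed lines
        _hierarchy, controllers, path = parts
        if "cpu" in controllers.split(","):
            paths.add(path)
    return paths


def same_cpu_cgroup(first_text: str, second_text: str) -> bool:
    """True when both processes report the same, non-empty cpu cgroup path."""
    first = cpu_cgroup_paths(first_text)
    second = cpu_cgroup_paths(second_text)
    return bool(first) and first == second


def read_cgroup(pid: int) -> str:
    """Read /proc/<pid>/cgroup for a live process (Linux only)."""
    with open(f"/proc/{pid}/cgroup", encoding="utf-8") as handle:
        return handle.read()
```

On a live system you would pass `read_cgroup(<pid of ahf-sysmon>)` and `read_cgroup(<pid of TFAMain>)` to `same_cpu_cgroup`. Unlike `grep "cpu"`, this sketch ignores the `cpuset` line and compares only the `cpu` controller.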


4.5.3 Collecting Cluster Health Monitor Data

Collect Cluster Health Monitor data from any node in the cluster.

Oracle recommends that you run the tfactl diagcollect command to collect diagnostic data when an Oracle Clusterware error occurs.


4.5.4 Operating System Metrics Collected by Cluster Health Monitor and System Health Monitor

Review the metrics collected by CHM and SHM.

Overview of Metrics

CHM groups the operating system data collected into a Nodeview. A Nodeview is a grouping of metric sets where each metric set contains detailed metrics of a unique system resource.

Brief descriptions of the metric sets are as follows:

- CPU metric set: Metrics for top 127 CPUs sorted by usage percentage

- Device metric set: Metrics for 127 devices that include ASM/VD/OCR along with those having a high average wait time

- Process metric set: Metrics for 127 processes

- Top 25 CPU consumers (idle processes not reported)

- Top 25 Memory consumers (RSS < 1% of total RAM not reported)

- Top 25 I/O consumers

- Top 25 File Descriptors consumers (helps to identify top inode consumers)

- Process Aggregation: Metrics summarized by foreground and background processes for all Oracle Database and Oracle ASM instances

- Network metric set: Metrics for 16 NICs that include public and private interconnects

- NFS metric set: Metrics for 32 NFS ordered by round trip time

- Protocol metric set: Metrics for protocol groups TCP, UDP, and IP

- Filesystem metric set: Metrics for filesystem utilization

- Critical resources metric set: Metrics for critical system resource utilization

- CPU Metrics: system-wide CPU utilization statistics

- Memory Metrics: system-wide memory statistics

- Device Metrics: system-wide device statistics distinct from individual device metric set

- NFS Metrics: Total NFS devices collected every 30 seconds

- Process Metrics: system-wide unique process metrics
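
The "Top 25 Memory consumers" rule described for the process metric set can be illustrated with a short sketch. This is not CHM's implementation; the `Proc` record and the `top_memory_consumers` function are hypothetical names, and only the limit of 25 and the 1% RSS cutoff come from the list above.

```python
import heapq
from typing import NamedTuple


class Proc(NamedTuple):
    """Hypothetical per-process record; only the fields needed here."""
    pid: int
    name: str
    rss_kb: int


def top_memory_consumers(procs, total_ram_kb, limit=25, min_share=0.01):
    """Return up to `limit` processes ordered by RSS, largest first.

    Processes whose RSS is below `min_share` of total RAM are not reported.
    """
    eligible = [p for p in procs if p.rss_kb >= min_share * total_ram_kb]
    return heapq.nlargest(limit, eligible, key=lambda p: p.rss_kb)
```

`heapq.nlargest` avoids sorting the full process list, which matters little for a few hundred processes but keeps the selection cheap on hosts with tens of thousands.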

CPU Metric Set

Contains metrics from all CPU cores ordered by usage percentage.

Table 4-13 CPU Metric Set

| Metric Name (units) | Description |

|---|---|

| system [%] | Percentage of CPU utilization that occurred while running at the system level (kernel). |

| user [%] | Percentage of CPU utilization that occurred while running at the user level (application). |

| usage [%] | Total utilization (system[%] + user[%]). |

| nice [%] | Percentage of CPU utilization that occurred while running at the user level with nice priority. |

| ioWait [%] | Percentage of time that the CPU was idle during which the system had an outstanding disk I/O request. |

| steal [%] | Percentage of time spent in involuntary wait by the virtual CPU while the hypervisor was servicing another virtual processor. |

Device Metric Set

Contains metrics from all disk devices/partitions ordered by their service time in milliseconds.

Table 4-14 Device Metric Set

| Metric Name (units) | Description |

|---|---|

| ioR [KB/s] | Amount of data read from the device. |

| ioW [KB/s] | Amount of data written to the device. |

| numIOs [#/s] | Average disk I/O operations. |

| qLen [#] | Number of I/O queued requests, that is, in a wait state. |

| aWait [msec] | Average wait time per I/O. |

| svcTm [msec] | Average service time per I/O request. |

| util [%] | Percent utilization of the device (same as the '%util' metric from the iostat -x command). Represents the percentage of time the device was active. |

Process Metric Set

Contains multiple categories of summarized metric data computed across all system processes.

Table 4-15 Process Metric Set

| Metric Name (units) | Description |

|---|---|

| pid | Process ID. |

| pri | Process priority (raw value from the operating system). |

| psr | The processor that process is currently assigned to or running on. |

| pPid | Parent process ID. |

| nice | Nice value of the process. |

| state | State of the process. For example, R->Running, S->Interruptible sleep, and so on. |

| class | Scheduling class of the process. For example, RR->Round Robin, FF->First in First out, B->Batch scheduling, and so on. |

| fd [#] | Number of file descriptors opened by this process, which is updated every 30 seconds. |

| name | Name of the process. |

| cpu [%] | Process CPU utilization across cores. For example, 50% => 50% of single core, 400% => 100% usage of 4 cores. |

| thrds [#] | Number of threads created by this process. |

| vmem [KB] | Process virtual memory usage (KB). |

| shMem [KB] | Process shared memory usage (KB). |

| rss [KB] | Process memory-resident set size (KB). |

| ioR [KB/s] | I/O read in kilobytes per second. |

| ioW [KB/s] | I/O write in kilobytes per second. |

| ioT [KB/s] | I/O total in kilobytes per second. |

| cswch [#/s] | Context switch per second. Collected only for a few critical Oracle Database processes. |

| nvcswch [#/s] | Non-voluntary context switch per second. Collected only for a few critical Oracle Database processes. |

| cumulativeCpu [ms] | Amount of CPU used so far by the process in microseconds. |
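
The `cpu [%]` convention in the table (50% is half of one core, 400% is four full cores) can be made concrete with a small sketch. The helper names are hypothetical, and the host-share calculation is an assumption added for illustration; it uses the `vCpus [#]` value from the system CPU metrics.

```python
def cores_used(cpu_percent: float) -> float:
    """Convert a per-process cpu [%] value into an equivalent number of cores."""
    return cpu_percent / 100.0


def share_of_host(cpu_percent: float, vcpus: int) -> float:
    """Express a per-process cpu [%] value as a percentage of all logical CPUs."""
    if vcpus <= 0:
        raise ValueError("vcpus must be positive")
    return cpu_percent / (100.0 * vcpus) * 100.0
```

For example, a process reporting 400% on a host with 8 logical CPUs is using four cores, or half of the host's CPU capacity.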

NIC Metric Set

Contains metrics from all network interfaces ordered by their total rate in kilobytes per second.

Table 4-16 NIC Metric Set

| Metric Name (units) | Description |

|---|---|

| name | Name of the interface. |

| tag | Tag for the interface, for example, public, private, and so on. |

| mtu [B] | Size of the maximum transmission unit in bytes supported for the interface. |

| rx [Kbps] | Average network receive rate. |

| tx [Kbps] | Average network send rate. |

| total [Kbps] | Average network transmission rate (rx[Kbps] + tx[Kbps]). |

| rxPkt [#/s] | Average incoming packet rate. |

| txPkt [#/s] | Average outgoing packet rate. |

| pkt [#/s] | Average rate of packet transmission (rxPkt[#/s] + txPkt[#/s]). |

| rxDscrd [#/s] | Average rate of dropped/discarded incoming packets. |

| txDscrd [#/s] | Average rate of dropped/discarded outgoing packets. |

| rxUnicast [#/s] | Average rate of unicast packets received. |

| rxNonUnicast [#/s] | Average rate of multicast packets received. |

| dscrd [#/s] | Average rate of total discarded packets (rxDscrd + txDscrd). |

| rxErr [#/s] | Average error rate for incoming packets. |

| txErr [#/s] | Average error rate for outgoing packets. |

| Err [#/s] | Average error rate of total transmission (rxErr[#/s] + txErr[#/s]). |
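
Several NIC metrics in Table 4-16 are sums of a receive value and a transmit value. The sketch below restates those relationships in code. The `NicSample` class and its field names are hypothetical and do not reflect how CHM stores the data.

```python
from dataclasses import dataclass


@dataclass
class NicSample:
    """Hypothetical container for one interface sample."""
    rx_kbps: float
    tx_kbps: float
    rx_pkt: float
    tx_pkt: float
    rx_dscrd: float
    tx_dscrd: float
    rx_err: float
    tx_err: float

    @property
    def total_kbps(self) -> float:
        # total [Kbps] = rx + tx
        return self.rx_kbps + self.tx_kbps

    @property
    def pkt(self) -> float:
        # pkt [#/s] = rxPkt + txPkt
        return self.rx_pkt + self.tx_pkt

    @property
    def dscrd(self) -> float:
        # dscrd [#/s] = rxDscrd + txDscrd
        return self.rx_dscrd + self.tx_dscrd

    @property
    def err(self) -> float:
        # Err [#/s] = rxErr + txErr
        return self.rx_err + self.tx_err
```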

NFS Metric Set

Contains top 32 NFS ordered by round trip time. This metric set is collected once every 30 seconds.

Table 4-17 NFS Metric Set

| Metric Name (units) | Description |

|---|---|

| op [#/s] | Number of read/write operations issued to a filesystem per second. |

| bytes [#/sec] | Number of bytes read from or written to a filesystem per second. |

| rtt [s] | This is the duration from the time that the client's kernel sends the RPC request until the time it receives the reply. |

| exe [s] | This is the duration from the time that the NFS client issues the RPC request to its kernel until the RPC request is completed. It includes the RTT time above. |

| retrains [%] | Retransmission frequency, as a percentage. |

Protocol Metric Set

Contains specific metrics for protocol groups TCP, UDP, and IP. Metric values are cumulative since the system starts.

Table 4-18 TCP Metric Set

| Metric Name (units) | Description |

|---|---|

| failedConnErr [#] | Number of times that TCP connections have made a direct transition to the CLOSED state from either the SYN-SENT state or the SYN-RCVD state, plus the number of times that TCP connections have made a direct transition to the LISTEN state from the SYN-RCVD state. |

| estResetErr [#] | Number of times that TCP connections have made a direct transition to the CLOSED state from either the ESTABLISHED state or the CLOSE-WAIT state. |

| segRetransErr [#] | Total number of TCP segments retransmitted. |

| rxSeg [#] | Total number of TCP segments received on TCP layer. |

| txSeg [#] | Total number of TCP segments sent from TCP layer. |

Table 4-19 UDP Metric Set

| Metric Name (units) | Description |

|---|---|

| unkPortErr [#] | Total number of received datagrams for which there was no application at the destination port. |

| rxErr [#] | Number of received datagrams that could not be delivered for reasons other than the lack of an application at the destination port. |

| rxPkt [#] | Total number of packets received. |

| txPkt [#] | Total number of packets sent. |

Table 4-20 IP Metric Set

| Metric Name (units) | Description |

|---|---|

| ipHdrErr [#] | Number of input datagrams discarded due to errors in their IPv4 headers. |

| addrErr [#] | Number of input datagrams discarded because the IPv4 address in their IPv4 header's destination field was not a valid address to be received at this entity. |

| unkProtoErr [#] | Number of locally-addressed datagrams received successfully but discarded because of an unknown or unsupported protocol. |

| reasFailErr [#] | Number of failures detected by the IPv4 reassembly algorithm. |

| fragFailErr [#] | Number of IPv4 discarded datagrams due to fragmentation failures. |

| rxPkt [#] | Total number of packets received on IP layer. |

| txPkt [#] | Total number of packets sent from IP layer. |
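
Because the protocol metric values are cumulative since system start, a per-second rate has to be derived from two samples. The following is a minimal sketch under that assumption. The function name `counter_rates` and the dictionary layout are hypothetical, and skipping counters that decrease is a design choice of this sketch, not documented CHM behavior.

```python
def counter_rates(prev: dict, curr: dict, interval_s: float) -> dict:
    """Convert two cumulative counter samples into per-second rates.

    Counters missing from `prev`, or that went backwards (for example after
    a reboot reset them), are skipped rather than reported as negative rates.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    rates = {}
    for name, value in curr.items():
        if name not in prev:
            continue
        delta = value - prev[name]
        if delta < 0:
            continue
        rates[name] = delta / interval_s
    return rates
```

With the 5-second sampling interval mentioned earlier, two consecutive samples of `segRetransErr` of 100 and 110 correspond to 2 retransmitted segments per second.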

Filesystem Metric Set

Contains metrics for filesystem utilization. Collected only for GRID_HOME filesystem.

Table 4-21 Filesystem Metric Set

| Metric Name (units) | Description |

|---|---|

| mount | Mount point. |

| type | Filesystem type, for example, ext4. |

| tag | Filesystem tag, for example, GRID_HOME. |

| total [KB] | Total amount of space (KB). |

| used [KB] | Amount of used space (KB). |

| avbl [KB] | Amount of available space (KB). |

| used [%] | Percentage of used space. |

| ifree [%] | Percentage of free file nodes. |

System Metric Set

Contains a summarized metric set of critical system resource utilization.

Table 4-22 CPU Metrics

| Metric Name (units) | Description |

|---|---|

| pCpus [#] | Number of physical processing units in the system. |

| Cores [#] | Number of cores for all CPUs in the system. |

| vCpus [#] | Number of logical processing units in the system. |

| cpuHt | CPU Hyperthreading enabled (Y) or disabled (N). |

| osName | Name of the operating system. |

| chipName | Name of the chip of the processing unit. |

| system [%] | Percentage of CPU utilization that occurred while running at the system level (kernel). |

| user [%] | Percentage of CPU utilization that occurred while running at the user level (application). |

| usage [%] | Total CPU utilization (system[%] + user[%]). |

| nice [%] | Percentage of CPU utilization that occurred while running at the user level with nice priority. |

| ioWait [%] | Percentage of time that the CPUs were idle during which the system had an outstanding disk I/O request. |

| Steal [%] | Percentage of time spent in involuntary wait by the virtual CPUs while the hypervisor was servicing another virtual processor. |

| cpuQ [#] | Number of processes waiting in the run queue within the current sample interval. |

| loadAvg1 | Average system load calculated over a period of one minute. |

| loadAvg5 | Average system load calculated over a period of five minutes. |

| loadAvg15 | Average system load calculated over a period of 15 minutes. High load averages imply that a system is overloaded; many processes are waiting for CPU time. |

| Intr [#/s] | Number of interrupts that occurred per second in the system. |

| ctxSwitch [#/s] | Number of context switches that occurred per second in the system. |

Table 4-23 Memory Metrics

| Metric Name (units) | Description |

|---|---|

| totalMem [KB] | Amount of total usable RAM (KB). |

| freeMem [KB] | Amount of free RAM (KB). |

| avblMem [KB] | Amount of memory available to start a new process without swapping. |

| shMem [KB] | Memory used (mostly) by tmpfs. |

| swapTotal [KB] | Total amount of physical swap memory (KB). |

| swapFree [KB] | Amount of swap memory free (KB). |

| swpIn [KB/s] | Average swap-in rate within the current sample interval (KB/sec). |

| swpOut [KB/s] | Average swap-out rate within the current sample interval (KB/sec). |

| pgIn [#/s] | Average page-in rate within the current sample interval (pages/sec). |

| pgOut [#/s] | Average page-out rate within the current sample interval (pages/sec). |

| slabReclaim [KB] | The part of the slab that might be reclaimed such as caches. |

| buffer [KB] | Memory used by kernel buffers. |

| Cache [KB] | Memory used by the page cache and slabs. |

| bufferAndCache [KB] | Total size of buffer and cache (buffer[KB] + Cache[KB]). |

| hugePageTotal [#] | Total number of huge pages present in the system for the current sample interval. |

| hugePageFree [KB] | Total number of free huge pages in the system for the current sample interval. |

| hugePageSize [KB] | Size of one huge page in KB, depends on the operating system version. Typically the same for all samples for a particular host. |

Table 4-24 Device Metrics

| Metric Name (units) | Description |

|---|---|

| disks [#] | Number of disks configured in the system. |

| ioR [KB/s] | Aggregate read rate across all devices. |

| ioW [KB/s] | Aggregate write rate across all devices. |

| numIOs [#/s] | Aggregate I/O operation rate across all devices. |

Table 4-25 NFS Metrics

| Metric Name (units) | Description |

|---|---|

| nfs [#] | Total NFS devices. |

Table 4-26 Process Metrics

| Metric Name (units) | Description |

|---|---|

| fds [#] | Number of open file structs in system. |

| procs [#] | Number of processes. |

| rtProcs [#] | Number of real-time processes. |

| procsInDState | Number of processes in uninterruptible sleep. |

| sysFdLimit [#] | System limit on the number of file structs. |

| procsOnCpu [#] | Number of processes currently running on CPU. |

| procsBlocked [#] | Number of processes waiting for an event or resource to become available, such as the completion of an I/O operation. |

Process Aggregates Metric Set

Contains aggregated metrics for all processes by process groups.

Table 4-27 Process Aggregates Metric Set

| Metric Name (units) | Description |

|---|---|

| DBBG | User Oracle Database background process group. |

| DBFG | User Oracle Database foreground process group. |

| MDBBG | MGMTDB background processes group. |

| MDBFG | MGMTDB foreground processes group. |

| ASMBG | ASM background processes group. |

| ASMFG | ASM foreground processes group. |

| IOXBG | IOS background processes group. |

| IOXFG | IOS foreground processes group. |

| APXBG | APX background processes group. |

| APXFG | APX foreground processes group. |

| CLUST | Clusterware processes group. |

| OTHER | Default group. |

For each group, the below metrics are aggregated to report a group summary.

| Metric Name (units) | Description |

|---|---|

| processes [#] | Total number of processes in the group. |

| cpu [%] | Aggregated CPU utilization. |

| rss [KB] | Aggregated resident set size. |

| shMem [KB] | Aggregated shared memory usage. |

| thrds [#] | Aggregated thread count. |

| fds [#] | Aggregated count of open file descriptors. |

| cpuWeight [%] | Contribution of the group in overall CPU utilization of the machine. |
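
The per-group summary can be pictured as a simple roll-up over process records. The sketch below is illustrative only: the input dictionaries, the `aggregate_by_group` function, and in particular the `cpuWeight` formula (the group's share of the summed process CPU) are assumptions, since the exact calculation CHM uses is not stated here.

```python
from collections import defaultdict


def aggregate_by_group(procs):
    """Sum per-process metrics by group and derive each group's CPU share.

    Each item in `procs` is a dict with 'group', 'cpu', 'rss_kb', and 'thrds'.
    """
    groups = defaultdict(
        lambda: {"processes": 0, "cpu": 0.0, "rss_kb": 0, "thrds": 0}
    )
    for proc in procs:
        summary = groups[proc["group"]]
        summary["processes"] += 1
        summary["cpu"] += proc["cpu"]
        summary["rss_kb"] += proc["rss_kb"]
        summary["thrds"] += proc["thrds"]

    # Assumed definition: group CPU as a percentage of all observed process CPU.
    total_cpu = sum(s["cpu"] for s in groups.values())
    for summary in groups.values():
        summary["cpuWeight"] = (
            100.0 * summary["cpu"] / total_cpu if total_cpu else 0.0
        )
    return dict(groups)
```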


4.5.5 Detecting Component Failures and Self-healing Autonomously

Improved ability to detect component failures and self-heal autonomously improves business continuity.

Cluster Health Monitor introduces a new diagnostic feature that identifies critical component events that indicate pending or actual failures and provides recommendations for corrective action. These actions may sometimes be performed autonomously. Such events and actions are then captured and admins are notified through components such as Oracle Trace File Analyzer.

Terms Associated with Diagnosability

CHMDiag: CHMDiag is a Python daemon managed by osysmond that listens for events and takes actions. Upon receiving events or actions, CHMDiag validates them for correctness, performs flow control, and schedules the actions to run. CHMDiag monitors each action to completion and kills an action if it takes longer than the preconfigured time specific to that action.
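
The "monitor to completion, kill on timeout" behavior described for CHMDiag follows a common pattern that can be sketched with the Python standard library. This is not CHMDiag's code. The `run_action` function and its return values are hypothetical, and the example command is a placeholder.

```python
import subprocess
import sys


def run_action(cmd, timeout_s):
    """Run `cmd` and report 'completed', 'failed', or 'killed'.

    subprocess.run() kills the child process and waits for it when the
    timeout expires, then raises TimeoutExpired.
    """
    try:
        result = subprocess.run(cmd, timeout=timeout_s, capture_output=True)
    except subprocess.TimeoutExpired:
        return "killed"
    return "completed" if result.returncode == 0 else "failed"


if __name__ == "__main__":
    # Placeholder action: a Python one-liner that exits immediately.
    print(run_action([sys.executable, "-c", "pass"], timeout_s=30))
```

A per-action timeout, as described above, would map to passing a different `timeout_s` for each action type.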

This JSON file describes all events/actions and their respective attributes. All events/actions have uniquely identifiable IDs. This file also contains various configurable properties for various actions/events. CHMDiag loads this file during its startup.

CRFE API: The CRFE API is used by all C clients to send events to CHMDiag. Internal clients such as components (RDBMS/CSS/GIPC) use this API to publish events/actions.

This API also provides support for both synchronous and asynchronous publication of events. Asynchronous publication of events is done through a background thread that is shared by all CRFE API clients within a process.

CHMDIAG_BASE: This directory resides in ORACLE_BASE/hostname/crf/chmdiag. It contains the following directories, which are populated or managed by CHMDiag.

- ActionsResults: Contains all results for all of the invoked actions with a subdirectory for each action.

- EventsLog: Contains a log of all the events/actions received by CHMDiag and the location of their respective action results. These log files are also auto-rotated after reaching a fixed size.

- CHMDiagLog: Contains CHMDiag daemon logs. Log files are auto-rotated once they reach a specific size. Logs should have sufficient debug information to diagnose any problems that CHMDiag could run into.

- Config: Contains a run sub-directory for CHMDiag process pid file management.

- oclumon chmdiag description: Use the oclumon chmdiag description command to get a detailed description of all the supported events and actions.

- oclumon chmdiag query: Use the oclumon chmdiag query command to query CHMDiag events/actions sent by various components and generate an HTML or a text report.

- oclumon chmdiag collect: Use the oclumon chmdiag collect command to collect all events/actions data generated by CHMDiag into the specified output directory location.


4.5.6 CHM Inline Analysis

Inline Analysis is a new feature that will automatically execute on all systems where Autonomous Health Framework (AHF) is installed.

Traditionally, the operating system (OS) metrics collected by Cluster Health Monitor (CHM) or System Health Monitor (SHM) are stored directly in the Grid Infrastructure (GI) Base repository. However, this repository is limited in size—250 MB on HAS 19 and 500 MB on MAIN.

On large FASAAS systems, which may run between 30,000 and 40,000 processes, these size limitations mean that CHM OS data can only be retained for a few hours. As a result, the necessary OS data is frequently missing in most Service Requests (SRs), making it challenging to diagnose and resolve issues effectively.

With the CHM Inline Analysis enhancement, instead of preserving large volumes of raw CHM OS data, the system analyzes these OS metrics and stores only the summary analyzed data. The processed analysis files are significantly smaller than the raw data, allowing for several months of data retention within a 100 MB repository quota.

How it works

- Collection and Analysis:

- OS metrics are collected by CHM/SHM as usual.

- Every hour, Oracle Trace File Analyzer (TFA) triggers Inline Analysis, which processes the hourly raw chmosdata/shmosdata gzip files.

- Storage: Only the analyzed results are kept in the AHF home data repository, dramatically reducing space consumption.

- Execution and Security: Inline Analysis runs as the root user and is configured with specific resource constraints to avoid impact on system performance.
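
To see why stored analysis results are much smaller than raw data, consider reducing one hour of samples for a single metric to a few summary values. The sketch below is purely illustrative. The actual Inline Analysis output format is not described here, and `summarize` and the chosen statistics are assumptions.

```python
from statistics import mean

# At the 5-second sampling rate mentioned earlier, one hour holds 720 samples.
SAMPLE_INTERVAL_S = 5
SAMPLES_PER_HOUR = 3600 // SAMPLE_INTERVAL_S


def summarize(samples):
    """Reduce a list of numeric samples to count, min, max, and average."""
    if not samples:
        raise ValueError("samples must not be empty")
    return {
        "count": len(samples),
        "min": min(samples),
        "max": max(samples),
        "avg": mean(samples),
    }
```

Under these assumptions, 720 raw values per metric per hour collapse into four numbers, which is the kind of reduction that makes retention of months rather than hours plausible within a small quota.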

Benefits

- Extended Retention: Analyzed data can be retained for several months rather than just a few hours.

- Reduced Storage Footprint: Only 100 MB of repository space is required, even for large systems.

- Improved Diagnostics: OS data will be available in more SRs, enabling more effective and timely troubleshooting.

Table 4-28 Feature Comparison: Traditional vs. Inline Analysis

| Feature | Previous Approach | With Inline Analysis |

|---|---|---|

| Data Stored | Raw CHM OS data | Analyzed (summarized) data |

| Repository Size (typical) | 250 MB (HAS 19), 500 MB (MAIN) | 100 MB |

| Data Retention | Hours | Months |

| Impact on Diagnostics | Data often missing in service requests (SRs) | Data available for analysis |


4.5.7 Integrating System Health Monitor (SHM) into AHF for Standalone Non-Root Installations

System Health Monitor (SHM) is a tool that collects essential operating system metrics for Oracle Support, especially valuable in diagnosing initial failures such as node evictions when Service Requests (SRs) are logged.

Note:

Presently, SHM is supported only on Linux platforms.

With this enhancement, Autonomous Health Framework (AHF) will integrate SHM data into its diagnostic collections for non-root, standalone environments, that is, Oracle Restart or Single Instance. This enables non-root users to benefit from OS-level insight reports generated from SHM data, particularly focusing on OS-specific diagnostics.

For the initial release (25.6), end users are responsible for managing the SHM lifecycle in non-root standalone installations. After SHM data is collected, the host system will be able to generate an insight report that concentrates primarily on the gathered OS metrics.

Manage the SHM background process (daemon) with the following commands:

- sysmonctl start: Start the System Health Monitor (SHM)

- sysmonctl stop: Stop the System Health Monitor (SHM)

Note:

- Scope: SHM within AHF for standalone, non-root installations is supported only in Oracle Restart or single-instance environments on Linux.

- Data Capture Limitations: Operating in non-root mode imposes certain restrictions, which may lead to differences in the depth and breadth of collected data when compared to root installations.

- Security and compatibility:

- SHM, when running as a non-root user, gathers metrics by reading from /proc.

- For environments running SELinux, ensure SHM operates correctly in enforcing mode without interruption or failure.


4.5.8 Creating an AHF Insights Report for Operating System Issues (Non-Root AHF Installation)

Create Insights reports from SHM data to proactively identify and investigate operating system-related issues in a non-root AHF environment.

- Navigate to the CHM binaries directory.

cd $AHF_HOME/chm/bin/

- Run the report generation command.

$AHF_HOME/python/bin/python3 chm_tfa_driver.zip -o <OUTPUT_DIRECTORY> -s <START_TIME> -e <END_TIME>

Example Run:

-bash-4.4$ $AHF_HOME/python/bin/python3 chm_tfa_driver.zip -o /tmp/shm_out/ -s 2025-08-22T12:00:00 -e 2025-08-22T12:30:00

Successfully ran the chm utils on the SHM data.

Successfully ran the chm analyzer on the SHM data.

Successfully ran the chm reportgen on the SHM analysis data.

executing

Report is generated at : /tmp/shm_out/test-node_insights_2025_08_22_12_32_44.zip

Report jsons is generated at : /tmp/shm_out/test-node_insights_jsons_2025_08_22_12_32_44.zip

Successfully ran the ahf insights driver on the SHM data.

- Verify SHM Data.

SHM data files can be checked at: $AHF_HOME/data/<HOSTNAME>/shm

You can view the AHF Insights report by downloading and unzipping the file, then opening web/index.html in your browser.
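
If you generate reports regularly, the command line shown above can be assembled programmatically. The sketch below only builds the argument list and does not run anything. The function name `build_insights_command` is hypothetical, and the timestamp format is inferred from the example run (`2025-08-22T12:00:00`).

```python
from datetime import datetime
from pathlib import Path

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%S"  # inferred from the example run


def build_insights_command(ahf_home, output_dir, start, end):
    """Return the argument list for the report generation command.

    Run the result with the working directory set to <ahf_home>/chm/bin,
    because chm_tfa_driver.zip is referenced by a relative path.
    """
    if end <= start:
        raise ValueError("end must be later than start")
    return [
        str(Path(ahf_home) / "python" / "bin" / "python3"),
        "chm_tfa_driver.zip",
        "-o", str(output_dir),
        "-s", start.strftime(TIMESTAMP_FORMAT),
        "-e", end.strftime(TIMESTAMP_FORMAT),
    ]
```

The returned list could be passed to `subprocess.run(..., cwd=<ahf_home>/chm/bin)`. The value of `ahf_home` depends on your installation and is not assumed here.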


4.5.9 Diagnostic Signature: HugePagesNotUtilized

A new diagnostic signature, HugePagesNotUtilized, has been introduced in AHF 25.11.

This enhancement automatically detects cases where HugePages are configured (that is, HugePages_Total is nonzero) but remain completely unused (HugePages_Total = HugePages_Free). When this condition is detected, the signature generates a clear alert in the analysis report, making it immediately visible to users including developers and support engineers that HugePages are not being utilized as expected.

By providing a precise and actionable notification, rather than a generic low-utilization warning, this feature helps users quickly identify potential memory configuration issues. This improves triage efficiency, accelerates troubleshooting, and ensures system resources are used effectively.
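
The condition the signature checks (HugePages_Total is nonzero and equals HugePages_Free) can be reproduced against `/proc/meminfo` on Linux. The following is a minimal sketch of that condition only, not the AHF signature itself. The function names are hypothetical.

```python
def parse_meminfo(text: str) -> dict:
    """Parse 'Key:   value [unit]' lines from /proc/meminfo into integers."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields and fields[0].isdigit():
            values[key.strip()] = int(fields[0])
    return values


def hugepages_not_utilized(meminfo: dict) -> bool:
    """True when HugePages are configured but every page is still free."""
    total = meminfo.get("HugePages_Total", 0)
    free = meminfo.get("HugePages_Free", 0)
    return total > 0 and total == free
```

On a live host, pass the contents of `/proc/meminfo` to `parse_meminfo` and the result to `hugepages_not_utilized`.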

Accessing this Feature

You can view this diagnostic in the orachk report under the CHM analysis section.

Figure 4-7 HugePagesNotUtilized Signature in Orachk CHM Analysis section

Figure 4-8 HugePagesNotUtilized Signature in Orachk CHM Analysis section
