Workflow for Oracle NoSQL Database Migrator

Learn about the various steps involved in using the Oracle NoSQL Database Migrator utility for migrating your NoSQL data.

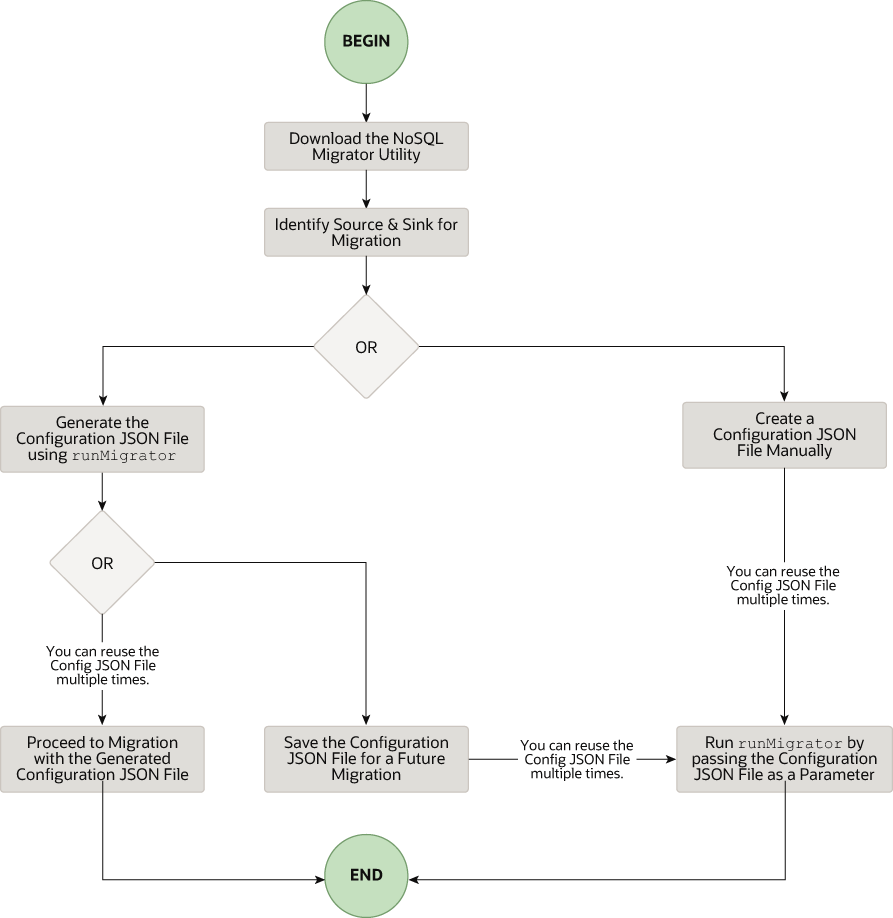

The high level flow of tasks involved in using NoSQL Database Migrator is depicted in the below figure.

Download the NoSQL Data Migrator Utility

runMigrator command from the command line interface.

Note:

Oracle NoSQL Database Migrator utility requires Java 11 or higher versions to run.Identify the Source and Sink

- Identify Sink Table Schema: If the sink is Oracle NoSQL Database on-premise or cloud, you must identify the schema for the sink table and ensure that the source data matches with the target schema. If required, use transformations to map the source data to the sink table.

- Default Schema: NoSQL Database Migrator provides an option to create a table with the default schema without the need to predefine the schema for the table. This is useful primarily when loading JSON source files into Oracle NoSQL Database.

If the source is a MongoDB-formatted JSON file, the default schema for the table will be as follows:

CREATE TABLE IF NOT EXISTS <tablename>(ID STRING, DOCUMENT JSON,PRIMARY KEY(SHARD(ID))Where:

— tablename = value provided for the table attribute in the configuration.

— ID = _id value from each document of the mongoDB exported JSON source file.

— DOCUMENT = For each document in the mongoDB exported file, the contents excluding the _id field are aggregated into the DOCUMENT column.

If the source is a DynamoDB-formatted JSON file, the default schema for the table will be as follows:CREATE TABLE IF NOT EXISTS <TABLE_NAME>(DDBPartitionKey_name DDBPartitionKey_type, [DDBSortKey_name DDBSortKey_type],DOCUMENT JSON, PRIMARY KEY(SHARD(DDBPartitionKey_name),[DDBSortKey_name]))Where:

— TABLE_NAME = value provided for the sink table in the configuration

— DDBPartitionKey_name = value provided for the partition key in the configuration

— DDBPartitionKey_type = value provided for the data type of the partition key in the configuration

— DDBSortKey_name = value provided for the sort key in the configuration if any

— DDBSortKey_type = value provided for the data type of the sort key in the configuration if any

— DOCUMENT = All attributes except the partition and sort key of a Dynamo DB table item aggregated into a NoSQL JSON column

If the source format is a CSV file, a default schema is not supported for the target table. You can create a schema file with a table definition containing the same number of columns and data types as the source CSV file. For more details on the Schema file creation, see Providing Table Schema.

For all the other sources, the default schema will be as follows:CREATE TABLE IF NOT EXISTS <tablename> (ID LONG GENERATED ALWAYS AS IDENTITY, DOCUMENT JSON, PRIMARY KEY(ID))Where:

— tablename = value provided for the table attribute in the configuration.

— ID = An auto-generated LONG value.

— DOCUMENT = The JSON record provided by the source is aggregated into the DOCUMENT column.Note:

If the _id value is not provided as a string in the MongoDB-formatted JSON file, NoSQL Database Migrator converts it into a string before inserting it into the default schema.

- Default Schema: NoSQL Database Migrator provides an option to create a table with the default schema without the need to predefine the schema for the table. This is useful primarily when loading JSON source files into Oracle NoSQL Database.

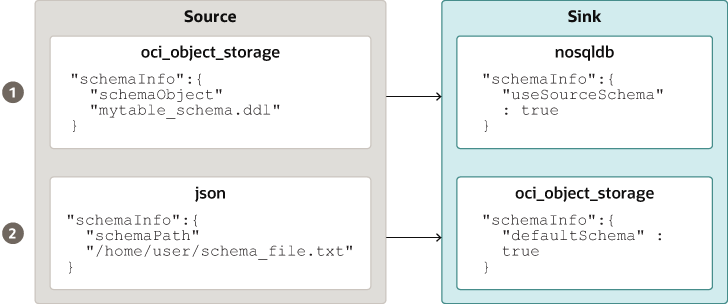

- Providing Table Schema: NoSQL Database Migrator allows the source to provide schema definitions for the table data using schemaInfo attribute. The schemaInfo attribute is available in all the data sources that do not have an implicit schema already defined. Sink data stores can choose any one of the following options.

- Use the default schema defined by the NoSQL Database Migrator.

- Use the source-provided schema.

- Override the source-provided schema by defining its own schema. For example, if you want to transform the data from the source schema to another schema, you need to override the source-provided schema and use the transformation capability of the NoSQL Database Migrator tool.

The table schema file, for example,mytable_schema.ddlcan include table DDL statements. The NoSQL Database Migrator tool executes this table schema file before starting the migration. The migrator tool supports no more than one DDL statement per line in the schema file. For example,CREATE TABLE IF NOT EXISTS(id INTEGER, name STRING, age INTEGER, PRIMARY KEY(SHARD(ID)))Note:

Migration will fail if the table is present at the sink and the DDL in theschemaPathis different than the table. - Create Sink Table: Once you identify the sink table

schema, create the sink table either through the Admin CLI or using the

schemaInfoattribute of the sink configuration file. See Sink Configuration Templates .Note:

If the source is a CSV file, create a file with the DDL commands for the schema of the target table. Provide the file path in schemaInfo.schemaPath parameter of the sink configuration file.

Migrating TTL Metadata for Table Rows

Time to Live (TTL) is a mechanism that allows you to automatically expire table rows. TTL is expressed as the amount of time, data is allowed to live in the store. Data that has reached its expiration timeout value can no longer be retrieved, and will not appear in any store statistics.

Table 1-1 Migrating TTL metadata

| Source types | Source configuration parameter | Sink configuration parameter |

|---|---|---|

| Oracle NoSQL Database | includeTTL |

includeTTL |

| Oracle NoSQL Database Cloud Service | includeTTL |

includeTTL |

| DynamoDB-Formatted JSON File | ttlAttributeName |

includeTTL |

| DynamoDB-Formatted JSON File stored in AWS S3 | ttlAttributeName |

includeTTL |

Exporting TTL metadata in Oracle NoSQL Database and Oracle NoSQL Database Cloud Service

NoSQL Database Migrator provides the includeTTL configuration parameter to support the export of table row's TTL metadata.

_metadata JSON object is not included explicitly in the exported data because its expiration value is always 0. The NoSQL Database Migrator exports the expiration time for each row as the number of milliseconds since the UNIX epoch (Jan 1st, 1970). For example,//Row 1

{

"id" : 1,

"name" : "xyz",

"age" : 45,

"_metadata" : {

"expiration" : 1629709200000 //Row Expiration time in milliseconds

}

}

//Row 2

{

"id" : 2,

"name" : "abc",

"age" : 52,

"_metadata" : {

"expiration" : 1629709400000 //Row Expiration time in milliseconds

}

}

//Row 3 No Metadata for below row as it will not expire

{

"id" : 3,

"name" : "def",

"age" : 15

}Importing TTL metadata

You can optionally import TTL metadata using the includeTTL configuration parameter in the sink configuration template.

The default reference time of import operation is the current time in milliseconds, obtained from System.currentTimeMillis(), of the machine where the NoSQL Database Migrator tool is running. However, you can also set a custom reference time using the ttlRelativeDate configuration parameter if you want to extend the expiration time and import rows that would otherwise expire immediately. The extension is calculated as follows and added to the expiration time.

Extended time = expiration time - reference timeThe import operation handles the following use cases when migrating table rows containing TTL metadata. These use cases are applicable only when the includeTTL configuration parameter is set to true.

- Use-case 1: No TTL metadata information is present in the importing table row.

If the row you want to import does not contain TTL information, then the NoSQL Database Migrator sets the TTL=0 for the row.

- Use-case 2: TTL value of the source table row is expired relative to the reference time when the table row gets imported.

The expired table row is ignored and not written into the store.

- Use-case 3: TTL value of the source table row is not expired relative to the reference time when the table row gets imported.

The table row gets imported with a TTL value. However, the imported TTL value may not match the original exported TTL value because of the integer hour and day window constraints in the TimeToLive class. For example,

Consider an exported table row:{ "id" : 8, "name" : "xyz", "_metadata" : { "expiration" : 1734566400000 //Thursday, December 19, 2024 12:00:00 AM in UTC } }The reference time while importing is 1734480000000, which is Wednesday, December 18, 2024 12:00:00 AM.

Imported table row{ "id" : 8, "name" : "xyz", "_metadata" : { "ttl" : 1734739200000 //Saturday, December 21, 2024 12:00:00 AM } }

Importing TTL Metadata in DynamoDB-Formatted JSON File and DynamoDB-Formatted JSON File stored in AWS S3

NoSQL Database Migrator provides an additional configuration parameter, ttlAttributeName to support the import of TTL metadata from the DynamoDB-formatted JSON file items.

DynamoDB exported JSON files include a specific attribute in each item to store the TTL expiration timestamp. To optionally import the TTL values from DynamoDB exported JSON files, you must supply the specific attribute's name as a value to the ttlAttributeName configuration parameter in the DynamoDB-Formatted JSON File or DynamoDB-Formatted JSON File stored in AWS S3 source configuration files. Also, you must set the includeTTL configuration parameter in the sink configuration template. The valid sinks are Oracle NoSQL Database and Oracle NoSQL Database Cloud Service. NoSQL Database Migrator stores TTL information in the _metadata JSON object for the imported item.

-

Use case 1: The ttlAttributeName configuration parameter value is set to the TTL attribute name specified in the DynamoDB exported JSON file.

NoSQL Database Migrator imports the expiration time for this item as the number of milliseconds since the UNIX epoch (Jan 1st, 1970).

For example, consider an item in the DynamoDB exported JSON file:{ "Item": { "DeptId": { "N": "1" }, "DeptName": { "S": "Engineering" }, "ttl": { "N": "1734616800" } } }Here, the attributettlspecifies the time-to-live value for the item. If you set the ttlAttributeName configuration parameter asttlin the DynamoDB-formatted JSON file or DynamoDB-formatted JSON file stored in AWS S3 source configuration file, NoSQL Database Migrator imports the expiration time for the item as follows:{ "DeptId": 1, "document": { "DeptName": "Engineering" } "_metadata": { "expiration": 1734616800000 } }Note:

You can supply the ttlRelativeDate configuration parameter in the sink configuration template as the reference time for calculating the expiration time. - Use case 2: The ttlAttributeName configuration parameter value is set, however, the value does not exist as an attribute in the item of the DynamoDB exported JSON file.

NoSQL Database Migrator does not import the TTL metadata information for the given item.

- Use case 3: The ttlAttributeName configuration parameter value does not match the attribute name in the item of DynamoDB exported JSON file.

NoSQL Database Migrator handles the import in one of the following ways based on the sink configuration:

- Copies the attribute as a normal field if configured to import using the default schema.

- Skips the attribute if configured to import using a user-defined schema.

Importing data to a sink with an IDENTITY column

You can import the data from a valid source to a sink table (On-premises/Cloud Services) with an IDENTITY column. You create the IDENTITY column as either GENERATED ALWAYS AS IDENTITY or GENERATED BY DEFAULT AS IDENTITY. For more information on table creation with an IDENTITY column, see Creating Tables With an IDENTITY Column in the SQL Reference Guide.

Before importing the data, make sure that the Oracle NoSQL Database table at the sink is empty if it exists. If there is pre-existing data in the sink table, migration can lead to issues such as overwriting existing data in the sink table or skipping source data during the import.

Sink table with IDENTITY column as GENERATED ALWAYS AS IDENTITY

Consider a sink table with the IDENTITY column created as GENERATED ALWAYS AS IDENTITY. The data import is dependent on whether or not the source supplies the values to the IDENTITY column and ignoreFields transformation parameter in the configuration file.

For example, you want to import data from a JSON file source to the Oracle NoSQL Database table as the sink. The schema of the sink table is:

CREATE TABLE IF NOT EXISTS migrateID(ID INTEGER GENERATED ALWAYS AS IDENTITY, name STRING, course STRING, PRIMARY KEY

(ID))| Source condition | User action | Migration outcome |

|---|---|---|

|

CASE 1: Source data does not supply a value for the IDENTITY field of the sink table. Example: JSON source file |

Create/generate the configuration file. |

Data migration is successful. IDENTITY column values are auto-generated. Migrated data in Oracle NoSQL Database sink table |

|

CASE 2: Source data supplies values for the IDENTITY field of the sink table. Example: JSON source file |

Create/generate the configuration file. You provide an ignoreFields transformation for the ID column in the sink configuration template.

|

Data migration is successful. The supplied ID values are skipped and the IDENTITY column values are auto-generated. Migrated data in Oracle NoSQL Database sink table

migrateID: |

|

You create/generate the configuration file without the ignoreFields transformation for the IDENTITY column. |

Data migration fails with the following error message: "Cannot set value for a generated always identity column". |

For more details on the transformation configuration parameters, see the topic Transformation Configuration Templates.

Sink table with IDENTITY column as GENERATED BY DEFAULT AS IDENTITY

Consider a sink table with the IDENTITY column created as GENERATED BY DEFAULT AS IDENTITY. The data import is dependent on whether or not the source supplies the values to the IDENTITY column and ignoreFields transformation parameter.

For example, you want to import data from a JSON file source to the Oracle NoSQL Database table as the sink. The schema of the sink table is:

CREATE TABLE IF NOT EXISTS migrateID(ID INTEGER GENERATED BY DEFAULT AS IDENTITY, name STRING, course STRING, PRIMARY KEY

(ID))| Source condition | User action | Migration outcome |

|---|---|---|

|

CASE 1: Source data does not supply a value for the IDENTITY field of the sink table. Example: JSON source file |

Create/generate the configuration file. |

Data migration is successful. IDENTITY column values are auto-generated. Migrated data in Oracle NoSQL Database sink table

migrateID: |

|

CASE 2: Source data supplies values for the IDENTITY field of the sink table and it is a Primary Key field. Example: JSON source file |

Create/generate the configuration file. You provide an ignoreFields transformation for the ID column in the sink configuration template (Recommended).

|

Data migration is successful. The supplied ID values are skipped and the IDENTITY column values are auto-generated. Migrated data in Oracle NoSQL Database sink table

migrateID: |

|

You create/generate the configuration file without the ignoreFields transformation for the IDENTITY column. |

Data migration is successful. The supplied When you try to insert an additional row to the table without supplying an ID value, the sequence generator tries to auto-generate the ID value. The sequence generator's starting value is 1. As a result, the generated ID value can potentially duplicate one of the existing ID values in the sink table. Since this is a violation of the primary key constraint, an error is returned and the row does not get inserted. See Sequence Generator for additional information. To avoid the primary key constraint violation, the sequence generator must start the sequence with a value that does not conflict with existing ID values in the sink table. To use the START WITH attribute to make this modification, see the example below: Example: Migrated data in Oracle NoSQL Database sink table

migrateID:To find the appropriate value for the sequence generator to insert in the ID column, fetch the maximum value of the

ID field using the following query:Output:

The maximum value of the

ID column in the sink table is 3. You want the sequence generator to start generating the ID values beyond 3 to avoid duplication. You update the sequence generator's START WITH attribute to 4 using the following statement:This will start the sequence at 4. Now when you insert rows to the sink table without supplying the ID values, the sequence generator auto-generates the ID values from 4 onwards averting the duplication of the IDs. |

For more details on the transformation configuration parameters, see the topic Transformation Configuration Templates.

Run the runMigrator command

The runMigrator executable file is available in the extracted NoSQL Database Migrator files. You must install Java 11 or higher version and bash on your system to successfully run the runMigrator command.

runMigrator command in two ways:

- By creating the configuration file using the runtime options of the

runMigratorcommand as shown below.[~]$ ./runMigrator configuration file is not provided. Do you want to generate configuration? (y/n) [n]: y ... ...- When you invoke the

runMigratorutility, it provides a series of runtime options and creates the configuration file based on your choices for each option. - After the utility creates the configuration file, you have a choice to either proceed with the migration activity in the same run or save the configuration file for a future migration.

- Irrespective of your decision to proceed or defer the migration activity with the generated configuration file, the file will be available for edits or customization to meet your future requirements. You can use the customized configuration file for migration later.

- When you invoke the

- By passing a manually created configuration file (in the JSON format) as a runtime parameter using the

-cor--configoption. You must create the configuration file manually before running therunMigratorcommand with the-cor--configoption. For any help with the source and sink configuration parameters, see Sources and Sinks.[~]$ ./runMigrator -c </path/to/the/configuration/json/file>

Logging Migrator Progress

NoSQL Database Migrator tool provides options, which enables trace, debugging, and progress messages to be printed to standard output or to a file. This option can be useful in tracking the progress of migration operation, particularly for very large tables or data sets.

- Log Levels

To control the logging behavior through the NoSQL Database Migrator tool, pass the --log-level or -l run time parameter to the

runMigratorcommand. You can specify the amount of log information to write by passing the appropriate log level value.$./runMigrator --log-level <loglevel>Example:$./runMigrator --log-level debugTable 1-2 Supported Log Levels for NoSQL Database Migrator

Log Level Description warning Prints errors and warnings. info (default) Prints the progress status of data migration such as validating source, validating sink, creating tables, and count of number of data records migrated. debug Prints additional debug information. all Prints everything. This level turns on all levels of logging. - Log File:

You can specify the name of the log file using --log-file or -f parameter. If --log-file is passed as run time parameter to the

runMigratorcommand, the NoSQL Database Migrator writes all the log messages to the file else to the standard output.$./runMigrator --log-file <log file name>Example:$./runMigrator --log-file nosql_migrator.log