1 Overview

The following sections describe the various security capabilities available with the Oracle Tuxedo system for ATMI applications:

Note:

The Oracle Tuxedo product includes environments that allow you to build both Application-to-Transaction Monitor Interfaces (ATMI) and CORBA applications. This topic explains how to implement security in an ATMI application. For information about implementing security in a CORBA application, see Using Security in CORBA Applications.

- What Security Means

- Security Plug-ins

- ATMI Security Capabilities

- Operating System (OS) Security

- Authentication

- Authorization

- Auditing

- Link-Level Encryption

- TLS Encryption

- Public Key Security

- Message-based Digital Signature

- Message-based Encryption

- Public Key Implementation

- Default Authentication and Authorization

- Security Interoperability

- Security Compatibility

- Denial-of-Service (DoS) Defense

- Password Pair Protection

1.1 What Security Means

Security refers to techniques for ensuring that data stored in a computer or passed between computers is not compromised. Most security measures involve passwords and data encryption, where a password is a secret word or phrase that gives a user access to a particular program or system, and data encryption is the translation of data into a form that is unintelligible without a deciphering mechanism.

Distributed applications such as those used for electronic commerce (e-commerce) offer many access points for malicious people to intercept data, disrupt operations, or generate fraudulent input; the more distributed a business becomes, the more vulnerable it is to attack. Thus, the distributed computing software, or middleware, upon which such applications are built must provide security.

The Oracle Tuxedo product provides several security capabilities for ATMI applications, most of which can be customized for your particular needs.


1.2 Security Plug-ins

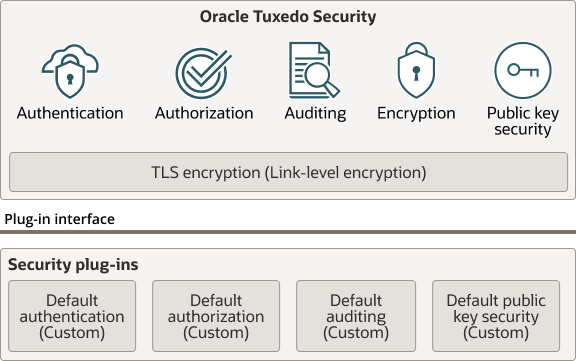

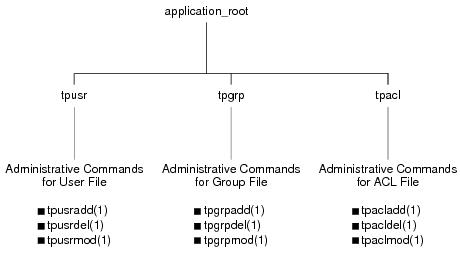

As shown in the following figure, all but one of the security capabilities available with the ATMI environment of the Oracle Tuxedo product are implemented through a plug-in interface, which allows Oracle Tuxedo customers to independently define and dynamically add their own security plug-ins. A security plug-in is a code module that implements a particular security capability.

Figure 1-1 Oracle Tuxedo ATMI Plug-in Security Architecture

The specifications for the security plug-in interface are not generally available, but are available to third-party security vendors. Third-party security vendors can enter into a special agreement with Oracle to develop security plug-ins for Oracle Tuxedo. Oracle Tuxedo customers who want to customize a security capability must contact one of these vendors. For example, an Oracle Tuxedo customer who wants a custom implementation of public key security must contact a third-party security vendor who can provide the appropriate plug-ins. For more information about security plug-ins, including installation and configuration procedures, see your Oracle account executive.


1.3 ATMI Security Capabilities

The Oracle Tuxedo system can enforce security in a number of ways, including using the security features of the host operating system to control access to files, directories, and system resources. The following table describes the security capabilities available with the ATMI environment of the Oracle Tuxedo product.

Table 1-1 ATMI Security Capabilities

| Security Capability | Description | Plug-in Interface | Default Implementation |
|---|---|---|---|
| Operating system security | Controls access to files, directories, and system resources. | N/A | N/A |
| Authentication | Proves the stated identity of users or system processes; safely remembers and transports identity information; and makes identity information available when needed. | Implemented as a single interface | The default authentication plug-in provides security at three levels: no authentication, application password, and user-level authentication. This plug-in works the same way the Oracle Tuxedo implementation of authentication has worked since it was first made available with the Oracle Tuxedo system. |
| Authorization | Controls access to resources based on identity or other information. | Implemented as a single interface | The default authorization plug-in provides security at two levels: optional access control lists and mandatory access control lists. This plug-in works the same way the Oracle Tuxedo implementation of authorization has worked since it was first made available with the Oracle Tuxedo system. |
| Auditing | Safely collects, stores, and distributes information about operating requests and their outcomes. | Implemented as a single interface | Default auditing security is implemented by the Oracle Tuxedo EventBroker and user log (ULOG) features. |
| Link-level encryption | Uses symmetric key encryption to establish data privacy for messages moving over the network links that connect the machines in an ATMI application. | N/A | RC4 symmetric key encryption. |
| TLS Encryption | Uses the industry-standard TLS protocol to establish data privacy for messages moving over the network links that connect the machines in an ATMI application. (TLS is the successor standard to the SSL protocol.) | N/A | Oracle NZ Security Layer |
| Public key security | Uses public key (or asymmetric key) encryption to establish end-to-end digital signing and data privacy between ATMI application clients and servers. Complies with the PKCS-7 standard. | Implemented as six interfaces | Default public key security supports the following algorithms: |


1.4 Operating System (OS) Security

On host operating systems with underlying security features, such as file permissions, the operating-system level of security is the first line of defense. An application administrator can use file permissions to grant or deny access privileges to specific users or groups of users.

Most ATMI applications are managed by an application administrator who configures the application, starts it, and monitors the running application dynamically, making changes as necessary. Because the ATMI application is started and run by the administrator, server programs are run with the administrator’s permissions and are therefore considered secure or “trusted.” This working method is supported by the login mechanism and the read and write permissions on the files, directories, and system resources provided by the underlying operating system.

Client programs are run directly by users with the users’ own permissions. In addition, users running native clients (that is, clients running on the same machine on which the server program is running) have access to the UBBCONFIG configuration file and interprocess communication (IPC) mechanisms such as the bulletin board (a reserved piece of shared memory in which parameters governing the ATMI application and statistics about the application are stored).

For ATMI applications running on platforms that support greater security, a more secure approach is to limit access to the files and IPC mechanisms to the application administrator and to have “trusted” client programs run with the permissions of the administrator (using the setuid command on a UNIX host machine or the equivalent command on another platform). For the most secure operating system security, allow only Workstation clients to access the application; client programs should not be allowed to run on the same machines on which application server and administrative programs run.

See Also:

- Security Administration Tasks

- Administering Operating System (OS) Security

- “About the Configuration File” and “Creating the Configuration File” in Setting Up an Oracle Tuxedo Application

- UBBCONFIG(5) in the Oracle Tuxedo File Formats, Data Descriptions, MIBs, and System Processes Reference


1.5 Authentication

Authentication allows communicating processes to mutually prove identification. The authentication plug-in interface in the ATMI environment of the Oracle Tuxedo product can accommodate various security-provider authentication plug-ins using various authentication technologies, including shared-secret password, one-time password, challenge-response, and Kerberos. The interface closely follows the generic security service (GSS) application programming interface (API) where applicable; the GSSAPI is a published standard of the Internet Engineering Task Force. The authentication plug-in interface is designed to make integration of third-party vendor security products with the Oracle Tuxedo system as easy as possible, assuming the security products have been written to the GSSAPI.

- Authentication Plug-in Architecture

- Understanding Delegated Trust Authentication

- Establishing a Session

- Getting Authorization and Auditing Tokens

- Replacing Client Tokens with Server Tokens

- Implementing Custom Authentication


1.5.1 Authentication Plug-in Architecture

The underlying plug-in interface for authentication security is implemented as a single plug-in. The plug-in may be the default authentication plug-in or a custom authentication plug-in.


1.5.2 Understanding Delegated Trust Authentication

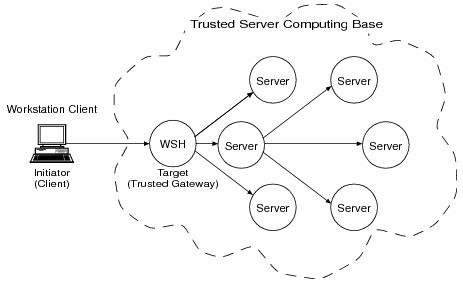

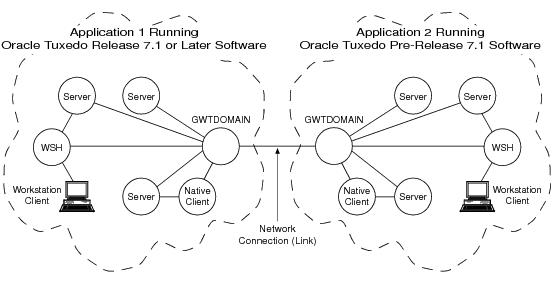

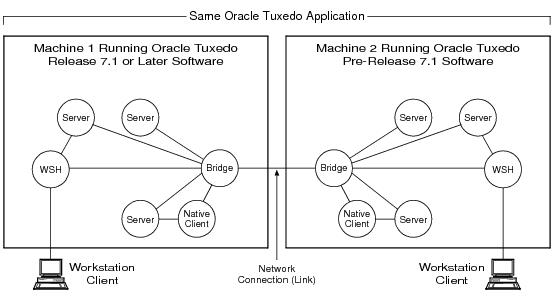

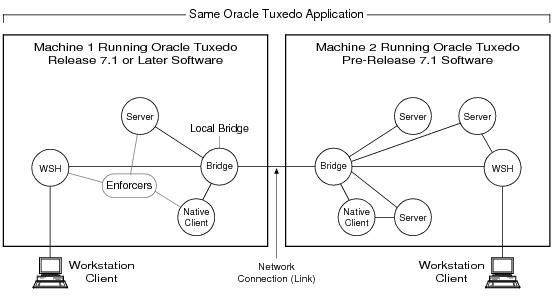

Direct end-to-end mutual authentication in a distributed enterprise middleware environment such as the Oracle Tuxedo system can be prohibitively expensive, especially when accomplished with security mechanisms optimized for long-duration connections. It is not efficient for clients to establish direct network connections with each server process, nor is it practical to exchange and verify multiple authentication messages as part of processing each service request. Instead, the ATMI applications use a delegated trust authentication model, as shown in the following figure:

Figure 1-2 ATMI Delegated Trust Authentication Model

A Workstation client authenticates to a trusted system gateway process, the workstation handler (WSH), at initialization time. A native client authenticates within itself, as explained later in this discussion. After a successful authentication, the authentication software assigns a security token to the client. A token is an opaque data structure suitable for transfer between processes. The WSH safely stores the token for the authenticated Workstation client, or the authenticated native client safely stores the token for itself.

As a client request flows through a trusted gateway, the gateway attaches the client’s security token to the request. The security token travels with the client’s request message, and is delivered to the destination server process(es) for authorization checking and auditing purposes.

In this model, the gateway trusts that the authentication software will verify the identity of the client and generate an appropriate token. Servers, in turn, trust that the gateway process will attach the correct security token. Servers also trust that any other servers involved in the processing of a client request will safely deliver the token.
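The trust relationships described above can be illustrated with a small Python model. This is only a sketch, not Oracle Tuxedo code: `Gateway`, `Request`, and `SecurityToken` are hypothetical names, and the token is a plain record here, whereas a real token is opaque and is produced by the authentication plug-in.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class SecurityToken:
    # Hypothetical stand-in: a real token is opaque to everything
    # except the security provider that created it.
    principal: str


@dataclass
class Request:
    service: str
    payload: bytes
    token: Optional[SecurityToken] = None


class Gateway:
    """Models a trusted gateway such as the WSH (illustrative only)."""

    def __init__(self) -> None:
        self._tokens: Dict[str, SecurityToken] = {}

    def authenticate(self, client_id: str, principal: str) -> None:
        # A real gateway delegates this step to the authentication
        # software and stores the token it receives.
        self._tokens[client_id] = SecurityToken(principal)

    def forward(self, client_id: str, request: Request) -> Request:
        token = self._tokens.get(client_id)
        if token is None:
            raise PermissionError("client has not authenticated")
        # The gateway attaches the stored token; any token the client
        # put on the request itself is ignored.
        return Request(request.service, request.payload, token)
```

In this model a server never talks to the client directly. It only inspects `request.token`, which is why it must trust the gateway to attach the correct one.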


1.5.3 Establishing a Session

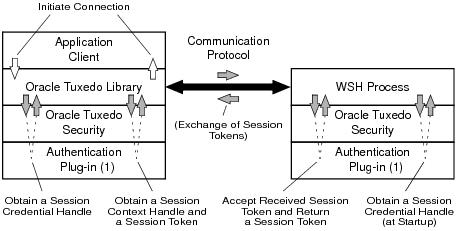

The following figure illustrates the control flow inside the ATMI environment of the Oracle Tuxedo system while a session is being established between a Workstation client and the WSH. The Workstation client and WSH are attempting to establish a long-term mutually authenticated connection by exchanging messages.

Figure 1-3 Control Flow in the ATMI Environment

The initiator process (which may be thought of as a middleware client process) creates a session context by repeatedly calling the Oracle Tuxedo “initiate security context” function until a return code indicates success or failure. A session context associates identity information with an authenticated user.

When a Workstation client calls tpinit(3c) for C or TPINITIALIZE(3cbl) for COBOL to join an ATMI application, the Oracle Tuxedo system begins its response by first calling the internal “acquire credentials” function to obtain a session credential handle, and then calling the internal “initiate security context” function to obtain a session context. Each invocation of the “initiate security context” function takes an input session token (when one is available) and returns an output session token. A session token carries a protocol for verifying a user’s identity. The initiator process passes the output session token to the session’s target process (WSH), where it is exchanged for another input token. The exchange of tokens continues until both processes have completed mutual authentication.

A security-provider authentication plug-in defines the content of the session context and session token for its security implementation, so ATMI authentication must treat the session context and session token as opaque objects. The number of tokens passed back and forth is not defined, and may vary based on the architecture of the authentication system.
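The token exchange loop can be sketched in Python as follows. This is a toy model under stated assumptions: the `Initiator` and `Target` classes, the `b"hello"` opening token, and the HMAC challenge-response protocol are all invented for illustration, and the exchange authenticates only the initiator, whereas a real plug-in may perform mutual authentication with a different number of tokens.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple


class Initiator:
    """Client side of a toy challenge-response exchange (hypothetical)."""

    def __init__(self, secret: bytes) -> None:
        self._secret = secret

    def initiate(self, in_token: Optional[bytes]) -> bytes:
        if in_token is None:
            return b"hello"  # first call: no input token is available yet
        # Later call: answer the target's challenge.
        return hmac.new(self._secret, in_token, hashlib.sha256).digest()


class Target:
    """Target side (the WSH role): issues a challenge, then verifies it."""

    def __init__(self, secret: bytes) -> None:
        self._secret = secret
        self._challenge: Optional[bytes] = None

    def accept(self, in_token: bytes) -> Tuple[Optional[bytes], bool]:
        if self._challenge is None:
            self._challenge = os.urandom(16)
            return self._challenge, False  # more tokens must be exchanged
        expected = hmac.new(
            self._secret, self._challenge, hashlib.sha256
        ).digest()
        if not hmac.compare_digest(in_token, expected):
            raise PermissionError("authentication failed")
        return None, True  # exchange complete


def establish_session(initiator: Initiator, target: Target) -> int:
    """Exchange tokens until the target is satisfied; return the round count."""
    in_token: Optional[bytes] = None
    rounds = 0
    done = False
    while not done:
        out_token = initiator.initiate(in_token)
        in_token, done = target.accept(out_token)
        rounds += 1
    return rounds
```

The loop in `establish_session` never inspects the tokens it carries, which mirrors the requirement that ATMI authentication treat session tokens as opaque objects.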

For a native client initiating a session, the initiator process and the target process are the same; the process may be thought of as a middleware client process. The middleware client process calls the security provider’s authentication plug-in to authenticate the native client.


1.5.4 Getting Authorization and Auditing Tokens

After a successful authentication, the trusted gateway calls two Oracle Tuxedo internal functions that retrieve an authorization token and an auditing token for the client, which the gateway stores for safekeeping. Together, these tokens represent the user identity of a security context. The term security token refers collectively to the authorization and auditing tokens.

When default authentication is used, the authorization token carries two pieces of information:

- Principal name—the name of an authenticated user.

- Application key—a 32-bit value that uniquely identifies the client initiating the request message. See Application Key for more detail.

In addition, when default authentication is used, the auditing token carries the same two pieces of information: principal name and application key.

Like the session token, the authorization and auditing tokens are opaque; their contents are determined by the security provider. The authorization token can be used for performing authorization (permission) checks. The auditing token can be used for recording audit information. In some ATMI applications, it is useful to keep separate user identities for authorization and auditing.


1.5.5 Replacing Client Tokens with Server Tokens

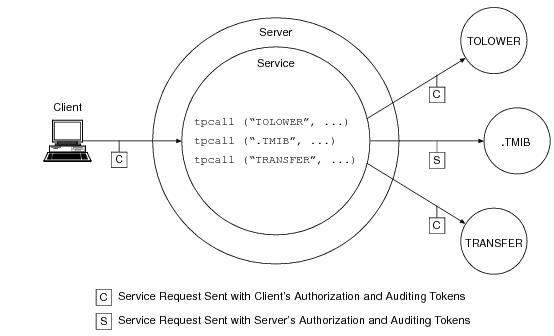

As shown in the following figure, there are situations where a client service request forwarded by a server takes on the identity of the server. The server replaces the client tokens attached to the request with its own tokens and then forwards the service request to the destination service.

Figure 1-4 Server Permission Upgrade Example

Note:

See Specifying Principal Names for an understanding of how servers acquire their own authorization and auditing tokens and why they need them.

The feature demonstrated in the preceding figure is known as server permission upgrade, which operates in the following manner: whenever a server calls a dot service (a system-supplied service having a beginning period in its name, such as .TMIB), the service request takes on the identity of the server and thus acquires the access permissions of the server. A server’s access permissions are those of the application (system) administrator. Thus, certain requests that would be denied if the client called the dot service directly would be allowed if the client sent the requests to a server, and the server forwarded the requests to the dot service. For more information about dot services, see the TMIB service description on the MIB(5) reference page in the Oracle Tuxedo File Formats, Data Descriptions, MIBs, and System Processes Reference.
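The server permission upgrade rule can be modeled with a few lines of Python. This is a sketch only: `Request` and `forward_from_server` are hypothetical names, and a single `principal` string stands in for the opaque authorization and auditing tokens.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Request:
    service: str
    principal: str  # stands in for the authorization and auditing tokens


def forward_from_server(
    request: Request, target_service: str, server_principal: str
) -> Request:
    """Forward a client request from a server to another service."""
    if target_service.startswith("."):
        # Dot service: the request takes on the server's identity.
        return replace(
            request, service=target_service, principal=server_principal
        )
    # Ordinary service: the client's identity travels unchanged.
    return replace(request, service=target_service)
```

A request from `alice` that a server forwards to `.TMIB` arrives carrying the server's principal, while the same request forwarded to an ordinary service still carries `alice`.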


1.5.6 Implementing Custom Authentication

You can provide authentication for your ATMI application by using the default plug-in or a custom plug-in. You choose a plug-in by configuring the Oracle Tuxedo registry, a tool that controls all security plug-ins.

If you want to use the default authentication plug-in, you do not need to configure the registry. If you want to use a custom authentication plug-in, however, you must configure the registry for your plug-in before you can install it. For more detail about the registry, see Setting the Oracle Tuxedo Registry.

1.6 Authorization

Authorization allows administrators to control access to ATMI applications. Specifically, an administrator can use authorization to allow or disallow principals (authenticated users) to use resources or facilities in an ATMI application.

- Authorization Plug-in Architecture

- How the Authorization Plug-in Works

- Implementing Custom Authorization


1.6.1 Authorization Plug-in Architecture

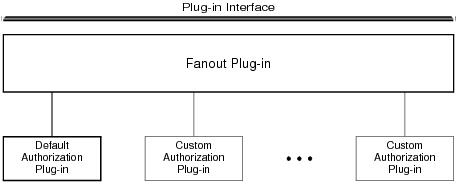

A fanout is an umbrella plug-in to which individual plug-in implementations are connected. As shown in the following figure, the authorization plug-in interface is implemented as a fanout.

Figure 1-5 Authorization Plug-in Architecture

The default authorization implementation consists of a fanout plug-in and a default authorization plug-in. A custom implementation consists of the fanout plug-in, the default authorization plug-in, and one or more custom authorization plug-ins.

In a fanout plug-in model, a caller sends a request to the fanout plug-in. The fanout plug-in passes the request to each of the subordinate plug-ins, and receives a response from each. Finally, the fanout plug-in forms a composite response from the individual responses, and sends the composite response to the caller.

The purpose of an authorization request is to determine whether a client operation should be allowed or whether the results of an operation should be kept unchanged. Each authorization plug-in returns one of three responses: permit, deny, or abstain. The abstain response gives writers of authorization plug-ins a graceful way to handle situations that are not accommodated by the original plug-in, such as names of operations that are added to the system after the plug-in is installed.

The authorization fanout plug-in forms a composite response as described in the following table. For default authorization, the composite response is determined solely by the default authorization plug-in.

Table 1-2 Authorization Composite Responses

| If Plug-ins Return . . . | The Composite Response Is . . . |
|---|---|
| All permit or a combination of permit and abstain | permit |
| At least one deny | deny |
| All abstain | deny if the SECURITY parameter in the ATMI application’s UBBCONFIG file is set to MANDATORY_ACL; permit if the SECURITY parameter is not set in the ATMI application’s UBBCONFIG file or is set to any value other than MANDATORY_ACL |
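The composite response rules in Table 1-2 can be expressed as a short Python function. This is an illustrative sketch rather than the fanout plug-in's actual code: the `Decision` enum and `composite_response` function are hypothetical names, and treating an empty list of responses like "all abstain" is an assumption.

```python
from enum import Enum
from typing import Iterable


class Decision(Enum):
    PERMIT = "permit"
    DENY = "deny"
    ABSTAIN = "abstain"


def composite_response(
    responses: Iterable[Decision], security: str = ""
) -> Decision:
    """Combine subordinate plug-in responses as described in Table 1-2.

    `security` models the SECURITY parameter of the UBBCONFIG file;
    an empty string means the parameter is not set.
    """
    collected = list(responses)
    if Decision.DENY in collected:
        return Decision.DENY  # at least one deny
    if Decision.PERMIT in collected:
        return Decision.PERMIT  # all permit, or permit mixed with abstain
    # All abstain (an empty list is treated the same way: an assumption).
    if security == "MANDATORY_ACL":
        return Decision.DENY
    return Decision.PERMIT
```

Checking for deny first makes the precedence explicit: one deny outweighs any number of permit responses.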

As an example of custom authorization, consider a banking application in which a user is identified as a member of the Customer group, and the following conditions are in effect:

- The default authorization plug-in allows any user in the Customer group to withdraw money from a particular account.

- A custom authorization plug-in allows any user in the Customer group to withdraw money from a particular account but only on Monday through Friday between 9:00 A.M. and 5:00 P.M.

- A second custom authorization plug-in allows any user in the Customer group to withdraw money from a particular account but only if the amount being withdrawn is less than $10,000.

So, if a user in the Customer group attempts to withdraw $500.00 on Monday at 10 A.M., the operation is allowed. If the same user attempts the same withdrawal on Saturday morning, the operation is not allowed.
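The banking scenario can be sketched in Python as three small checks. The function names are hypothetical, and the sketch simplifies each plug-in response to a boolean (True for permit, False for deny) and leaves out the abstain response.

```python
from datetime import datetime


def default_plugin(group: str, amount: float, when: datetime) -> bool:
    # Any user in the Customer group may withdraw.
    return group == "Customer"


def business_hours_plugin(group: str, amount: float, when: datetime) -> bool:
    # Monday (weekday 0) through Friday (weekday 4), 9:00 A.M. to 5:00 P.M.
    return group == "Customer" and when.weekday() <= 4 and 9 <= when.hour < 17


def amount_limit_plugin(group: str, amount: float, when: datetime) -> bool:
    # Only withdrawals of less than $10,000.
    return group == "Customer" and amount < 10_000


PLUGINS = (default_plugin, business_hours_plugin, amount_limit_plugin)


def withdrawal_allowed(group: str, amount: float, when: datetime) -> bool:
    """Permit only if no plug-in denies (abstain is not modeled here)."""
    return all(plugin(group, amount, when) for plugin in PLUGINS)
```

With these checks, a $500.00 withdrawal at 10 A.M. on a Monday such as January 1, 2024 is allowed, while the same withdrawal on the following Saturday is denied by the business-hours check.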

Many other custom authorization scenarios are possible. Feel free to improvise; define the conditions that best serve the needs of your business.


1.6.2 How the Authorization Plug-in Works

Authorization decisions are based partly on user identity, which is stored in an authorization token. Because authorization tokens are generated by the authentication security plug-in, providers of authentication and authorization plug-ins need to ensure that these plug-ins work together.

An Oracle Tuxedo system process or server (such as /Q server TMQUEUE(5) or EventBroker server TMUSREVT(5)) calls the authorization plug-in when it receives a client request. In response, the authorization plug-in performs a pre-operation check and returns whether the operation should be allowed.

- If allowed, the system carries out the client request.

- If not allowed, the system does not carry out the client request.

If the client operation is allowed, the Oracle Tuxedo system process or server may call the authorization plug-in after the client operation completes. In response, the authorization plug-in performs a post-operation check and returns whether the results of the operation are acceptable.

- If acceptable, the system accepts the operation results.

- If not acceptable, the system either modifies the operation results or rolls back (reverses) the operation.

These calls are system-level calls, not application-level calls. An ATMI application cannot call the authorization plug-in.

The authorization process is somewhat different for (1) users of the default authorization plug-in provided by the Oracle Tuxedo system and (2) users of one or more custom authorization plug-ins. The default plug-in does not support post-operation checks. If the default authorization plug-in receives a post-operation check request, it returns immediately and does nothing.

The custom plug-ins support both pre-operation and post-operation checks.


1.6.2.1 Default Authorization

When default authorization is called by an ATMI process to perform a pre-operation check in response to a client request, the authorization plug-in performs the following tasks.

- Gets information from the client’s authorization token by calling the authentication plug-in.

Because the authorization token is created by the authentication plug-in, the authorization plug-in has no record of the token’s content. This information is necessary for the authorization process.

- Performs a pre-operation check.

The authorization plug-in determines whether that operation should be allowed by examining the client’s authorization token, the access control list (ACL), and the configured security level (optional or mandatory ACL) of the ATMI application.

- Issues a decision about whether the operation will be performed.

The authorization fanout plug-in receives a decision (permit or deny) from the default authorization plug-in and operates on its behalf.

- If the decision is to permit the client operation, the fanout plug-in returns permit to the calling process. The system carries out the client request.

- If the decision is to deny the operation, the fanout plug-in returns deny to the calling process. The system does not carry out the client request.
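The pre-operation check can be sketched as follows. This is a simplified model, not the default plug-in's code: the function name and the dictionary-based ACL are hypothetical, principals are listed directly rather than through groups, and the handling of a resource with no ACL entry (permitted under optional ACL security, denied under MANDATORY_ACL) is stated here as an assumption about the two security levels.

```python
from typing import Dict, Set


def pre_operation_check(
    principal: str,
    resource: str,
    acl: Dict[str, Set[str]],
    security: str,
) -> str:
    """Return "permit" or "deny" for one requested operation.

    `acl` maps a resource name to the principals allowed to use it.
    `security` models the configured level: "ACL" or "MANDATORY_ACL".
    """
    allowed = acl.get(resource)
    if allowed is None:
        # No ACL entry for this resource (assumed behavior):
        # optional ACL permits, mandatory ACL denies.
        return "deny" if security == "MANDATORY_ACL" else "permit"
    return "permit" if principal in allowed else "deny"
```

Because the default plug-in returns only permit or deny, the fanout plug-in has nothing to combine in this case and passes the decision through to the calling process.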


1.6.2.2 Custom Authorization

Users of one or more custom authorization plug-ins may take advantage of additional functionality offered by the ATMI environment of the Oracle Tuxedo product. Specifically, the custom plug-ins may perform an additional check after an operation occurs.

When custom authorization is called by an ATMI process to perform a pre-operation check in response to a client request, the authorization plug-in performs the following tasks.

- Gets information from the client’s authorization token by calling the authentication plug-in.

- Performs a pre-operation check.

The authorization plug-in determines whether the operation should be allowed by examining the operation, the client’s authorization token, and associated data. “Associated data” may include user data and the security level of the ATMI application.

If necessary, in order to satisfy authorization requirements, the authorization plug-in may modify the user data before the operation is performed.

- Issues a decision about whether the operation will be performed.

The authorization fanout plug-in makes the ultimate decision by checking the individual responses (permit, deny, abstain) of its subordinate plug-ins.

- If the fanout plug-in allows the client operation, it returns permit to the calling process. The system carries out the client request.

- If the fanout plug-in does not allow the operation, it returns deny to the calling process. The system does not carry out the client request.

If the client operation is allowed, custom authorization may be called by the ATMI process to perform a post-operation check after the client operation completes. If so, the authorization plug-in performs the following tasks.

- Gets information from the client’s authorization token by calling the authentication plug-in.

- Performs a post-operation check.

The authorization plug-in determines whether the operation results are acceptable by examining the operation, the client’s authorization token, and associated data. “Associated data” may include user data and the security level of the ATMI application.

- Issues a decision about whether the operation results are acceptable.

The authorization fanout plug-in makes the ultimate decision by checking the individual responses (permit, deny, abstain) of its subordinate plug-ins.

- If the fanout plug-in decides that the operation results are acceptable, it returns permit to the calling process. The system accepts the operation results.

- If the fanout plug-in decides that the operation results are not acceptable, it returns deny to the calling process. The system either modifies the operation results or rolls back (reverses) the operation.

A post-operation check is useful for label-based security models. For example, suppose that a user is authorized to access CONFIDENTIAL documents but performs an operation that retrieves a TOP SECRET document. (Often, a document’s classification label is not easily determined until after the document has been retrieved.) In this case, the post-operation check is an efficient means to either deny the operation or modify the output data by expunging any restricted information.
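A label-based post-operation check that expunges restricted results might look like the following Python sketch. The function name, the four-level label ordering, and the `(label, body)` document shape are assumptions made for illustration; only the CONFIDENTIAL and TOP SECRET labels come from the example above.

```python
from typing import List, Tuple

# Assumed ordering of classification labels, lowest to highest.
LEVELS = {"UNCLASSIFIED": 0, "CONFIDENTIAL": 1, "SECRET": 2, "TOP SECRET": 3}

Document = Tuple[str, str]  # (classification label, body)


def post_operation_check(
    clearance: str, results: List[Document]
) -> List[Document]:
    """Drop every retrieved document labeled above the user's clearance."""
    limit = LEVELS[clearance]
    return [
        (label, body) for label, body in results if LEVELS[label] <= limit
    ]
```

This sketch models the "modify the output data" branch. A stricter plug-in could instead deny the whole operation whenever the filtered list is shorter than the original.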


1.6.3 Implementing Custom Authorization

You can provide authorization for your ATMI application by using the default plug-in or adding one or more custom plug-ins. You choose a plug-in by configuring the Oracle Tuxedo registry, a tool that controls all security plug-ins.

If you want to use the default authorization plug-in, you do not need to configure the registry. If you want to add one or more custom authorization plug-ins, however, you must configure the registry for your additional plug-ins before you can install them. For more detail about the registry, see Setting the Oracle Tuxedo Registry.

1.7 Auditing

Auditing provides a means to collect, store, and distribute information about operating requests and their outcomes. Audit-trail records may be used to determine which principals performed, or attempted to perform, actions that violated the security levels of an ATMI application. They may also be used to determine which operations were attempted, which ones failed, and which ones successfully completed.

How auditing is done (that is, how information is collected, processed, protected, and distributed) depends on the auditing plug-in.


1.7.1 Auditing Plug-in Architecture

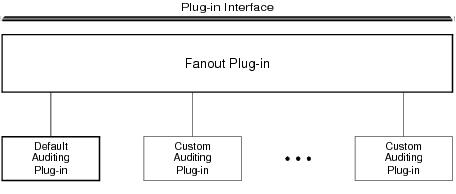

A fanout is an umbrella plug-in to which individual plug-in implementations are connected. As shown in the following figure, the auditing plug-in interface is implemented as a fanout.

Figure 1-6 Auditing Plug-in Architecture

The default auditing implementation consists of a fanout plug-in and a default auditing plug-in. A custom implementation consists of the fanout plug-in, the default auditing plug-in, and one or more custom auditing plug-ins.

In a fanout plug-in model, a caller sends a request to the fanout plug-in. The fanout plug-in passes the request to each of the subordinate plug-ins, and receives a response from each. Finally, the fanout plug-in forms a composite response from the individual responses, and sends the composite response to the caller.

The purpose of an auditing request is to record an event. Each auditing plug-in returns one of two responses: success (the audit succeeded—logged the event) or failure (the audit failed—did not log the event). The auditing fanout plug-in forms a composite response in the following manner: if all responses are success, the composite response is success; otherwise, the composite response is failure.

For default auditing, the composite response is determined solely by the default auditing plug-in. For custom auditing, the composite response is determined by the fanout plug-in after collecting the responses of the subordinate plug-ins. For more insight into how fanouts work, see Authorization Plug-in Architecture.
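The auditing fanout rule (success only if every subordinate plug-in succeeds) can be sketched in Python. The names `fanout_audit` and `AuditPlugin` are hypothetical, and calling every plug-in before combining the results is a design choice of this sketch, not documented behavior.

```python
from typing import Callable, Iterable

# A plug-in takes an event description and returns True if it logged it.
AuditPlugin = Callable[[str], bool]


def fanout_audit(event: str, plugins: Iterable[AuditPlugin]) -> bool:
    """Send the event to every plug-in; succeed only if all succeed."""
    # Build the full list first so that one failure does not stop the
    # remaining plug-ins from recording the event.
    results = [plugin(event) for plugin in plugins]
    return all(results)
```

Collecting all results before calling `all` avoids short-circuiting, so a plug-in that fails early in the list does not prevent later plug-ins from logging the event.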


1.7.2 How the Auditing Plug-in Works

Auditing decisions are based partly on user identity, which is stored in an auditing token. Because auditing tokens are generated by the authentication security plug-in, providers of authentication and auditing plug-ins need to ensure that these plug-ins work together.

An ATMI system process or server (such as /Q server TMQUEUE(5) or EventBroker server TMUSREVT(5)) calls the auditing plug-in when it receives a client request. Because it is called before an operation begins, the auditing plug-in can audit operation attempts and store data if that data will be needed later for a post-operation audit. In response, the auditing plug-in performs a pre-operation audit and returns whether the audit succeeded.

The ATMI system process or server may call the auditing plug-in after the client operation is performed. In response, the auditing plug-in performs a post-operation audit and returns whether the audit succeeded.

In addition, an ATMI system process or server may call the auditing plug-in when a potential security violation occurs. (Suspicion of a security violation arises when a pre-operation or post-operation authorization check fails, or when an attack on security is detected.) In response, the auditing plug-in performs a post-operation audit and returns whether the audit succeeded.

These calls are system-level calls, not application-level calls. An ATMI application cannot call the auditing plug-in.

The auditing process is somewhat different for (1) users of the default auditing plug-in provided by the Oracle Tuxedo system and (2) users of one or more custom auditing plug-ins. The default plug-in does not support pre-operation audits. If the default auditing plug-in receives a pre-operation audit request, it returns immediately and does nothing.

The custom plug-ins support both pre-operation and post-operation audits.


1.7.2.1 Default Auditing

The default auditing implementation consists of the Oracle Tuxedo EventBroker component and userlog (ULOG). These utilities report only security violations; they do not report which operations were attempted, which ones failed, and which ones successfully completed.

When default auditing is called by an ATMI process to perform a post-operation audit when a security violation is suspected, the auditing plug-in performs the following tasks.

- Gets information from the client’s auditing token by calling the authentication plug-in.

Because the auditing token is created by the authentication plug-in, the auditing plug-in has no record of the token’s content. This information is necessary for the auditing process.

- Performs a post-operation audit.

The auditing plug-in examines the client’s auditing token and the security violation delivered in the post-operation audit request.

- Issues a decision about whether the post-operation audit succeeded.

The auditing fanout plug-in receives a decision (success or failure) from the default auditing plug-in and operates on its behalf.

- If the decision is success, the post-operation audit succeeded. The auditing fanout plug-in returns success to the calling process and logs the security violation.

- If the decision is failure, the post-operation audit failed. The auditing fanout plug-in returns failure to the calling process.

Parent topic: How the Auditing Plug-in Works

1.7.2.2 Custom Auditing

Users of one or more custom auditing plug-ins may take advantage of additional functionality offered by the ATMI environment of the Oracle Tuxedo product. Specifically, the custom plug-ins may perform an additional audit before an operation occurs.

When custom auditing is called by an ATMI process to perform a pre-operation audit in response to a client request, the auditing plug-in performs the following tasks.

- Gets information from the client’s auditing token by calling the authentication plug-in.

- Performs a pre-operation audit.

The auditing plug-in examines the client’s auditing token and may store user data if that data will be needed later for a post-operation audit.

- Issues a decision about whether the pre-operation audit succeeded.

The auditing fanout plug-in makes the ultimate decision by checking the individual responses (success or failure) from its subordinate plug-ins.

- If the composite decision is success, the pre-operation audit succeeded. The auditing fanout plug-in returns success to the calling process and logs the client’s attempt to perform the operation.

- If the composite decision is failure, the pre-operation audit failed. The auditing fanout plug-in returns failure to the calling process.

Custom auditing may be called by the ATMI process to perform a post-operation audit after the client operation is performed. If so, the auditing plug-in performs the following tasks.

- Gets information from the client’s auditing token by calling the authentication plug-in.

- Performs a post-operation audit.

The auditing plug-in examines the client’s auditing token, the completion status delivered in the post-operation audit request, and any data stored during the pre-operation audit.

- Issues a decision about whether the post-operation audit succeeded.

The auditing fanout plug-in decides if the post-operation audit succeeded or failed by checking the individual responses (success or failure) from its subordinate plug-ins.

- If the composite decision is success, the post-operation audit succeeded. The auditing fanout plug-in returns success to the calling process and logs the completion status of the operation.

- If the composite decision is failure, the post-operation audit failed. The auditing fanout plug-in returns failure to the calling process.

An operation is considered successful if it passes both pre- and post-operation audits, and the operation itself is successful. Some companies collect and store both pre- and post-operation auditing data, even though such data can occupy a lot of disk space.
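The pre- and post-operation flow described in this section can be sketched in Python. This is a conceptual model only, not the Tuxedo plug-in interface: `AuditRequest`, `fanout_audit`, and the two sample plug-ins are hypothetical names. The sketch also assumes that the composite decision is success only when every subordinate plug-in reports success, which the text does not state explicitly.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AuditRequest:
    phase: str                 # "pre" or "post"
    token: str                 # stand-in for the client's auditing token
    operation: str
    status: str = ""           # completion status (post-operation only)
    stored: Dict[str, str] = field(default_factory=dict)  # data kept by the pre-audit


Plugin = Callable[[AuditRequest], bool]


def fanout_audit(request: AuditRequest, plugins: List[Plugin], log: List[str]) -> bool:
    """Call every subordinate plug-in and combine their decisions.

    Assumption: the composite decision is success only if all plug-ins succeed.
    """
    results = [plugin(request) for plugin in plugins]
    ok = all(results)
    if ok:
        # On success, record the attempt (pre) or the completion status (post).
        log.append(f"{request.phase}:{request.operation}:{request.status or 'attempt'}")
    return ok


def remember_user(request: AuditRequest) -> bool:
    # Hypothetical plug-in: stores data during the pre-audit for later use.
    if request.phase == "pre":
        request.stored["user"] = request.token
    return True


def require_pre_data(request: AuditRequest) -> bool:
    # Hypothetical plug-in: a post-audit succeeds only if pre-audit data exists.
    return request.phase == "pre" or "user" in request.stored


log: List[str] = []
plugins = [remember_user, require_pre_data]

pre = AuditRequest("pre", "alice-token", "enqueue")
pre_ok = fanout_audit(pre, plugins, log)

post = AuditRequest("post", "alice-token", "enqueue", status="success", stored=pre.stored)
post_ok = fanout_audit(post, plugins, log)
```

Calling every plug-in before combining the results, rather than stopping at the first failure, gives each plug-in the chance to record the event; whether the real fanout behaves this way is not specified here.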

Parent topic: How the Auditing Plug-in Works

1.7.3 Implementing Custom Auditing

You can provide auditing for your ATMI application by using the default plug-in or adding one or more custom plug-ins. You choose a plug-in by configuring the Oracle Tuxedo registry, a tool that controls all security plug-ins.

If you want to use the default auditing plug-in, you do not need to configure the registry. If you want to add one or more custom auditing plug-ins, however, you must configure the registry for your additional plug-ins before you can install them.

Oracle Tuxedo now also supports the Oracle Platform Security Services (OPSS) plug-in.

Parent topic: Auditing

1.8 Link-Level Encryption

Link-level encryption (LLE) establishes data privacy for messages moving over the network links that connect the machines in an ATMI application. It employs the symmetric key encryption technique (specifically, RC4), which uses the same key for encryption and decryption.

When LLE is being used, the Oracle Tuxedo system encrypts data before sending it over a network link and decrypts it as it comes off the link. The system repeats this encryption/decryption process at every link through which the data passes. For this reason, LLE is referred to as a point-to-point facility.

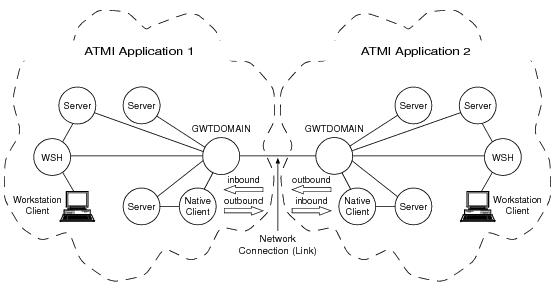

LLE can be used on the following types of ATMI application links:

- Workstation client to workstation handler (WSH)

- Bridge-to-Bridge

- Administrative utility (such as tmboot or tmshutdown) to tlisten

- Domain gateway to domain gateway

LLE supports the following encryption levels:

- 0-bit (no encryption)

- 56-bit (International)

- 128-bit (United States and Canada)

- How LLE Works

- Encryption Key Size Negotiation

- Backward Compatibility of LLE

- WSL/WSH Connection Timeout During Initialization

Parent topic: Overview

1.8.1 How LLE Works

LLE control parameters and underlying communication protocols are different for various link types, but the setup is basically the same in all cases:

- An initiator process begins the communication session.

- A target process receives the initial connection.

- Both processes are aware of the link-level encryption feature, and have two configuration parameters.

The first configuration parameter is the minimum encryption level that a process will accept. It is expressed as a key length: 0, 56, or 128 bits.

The second configuration parameter is the maximum encryption level a process can support. It also is expressed as a key length: 0, 56, or 128 bits.

For convenience, the two parameters are denoted as (min, max) in the discussion that follows. For example, the values “(56, 128)” for a process mean that the process accepts at least 56-bit encryption but can support up to 128-bit encryption.

Parent topic: Link-Level Encryption

1.8.2 Encryption Key Size Negotiation

When two processes at the opposite ends of a network link need to communicate, they must first agree on the size of the key to be used for encryption. This agreement is resolved through a two-step process of negotiation.

- Each process identifies its own min-max values.

- Together, the two processes find the largest key size supported by both.

1.8.2.1 Determining Min-Max Values

A Tuxedo process resolves its MINENCRYPTBITS and MAXENCRYPTBITS values using the following steps.

- If the configured min-max values accommodate the default min-max values, then the local software assigns those values as the min-max values for the process.

- If one of the min-max values is not configured, then the default value will be used for the missing value. For instance (0, max-value-configured) or (min-value-configured, 128) will be used.

- If there are no min-max values specified in the configurations for a particular link type, then the local software assigns 0 as the minimum value and assigns the highest bit-encryption rate possible for the default min-max values as the maximum value, that is, (0, 128) for the LLE.
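The defaulting rules above can be sketched as a small Python function. The function name `resolve_min_max` is hypothetical, and the validation of key sizes and ordering is an assumption added for illustration; the text specifies only the defaults of 0 and 128.

```python
from typing import Optional, Tuple

DEFAULT_MIN = 0        # default minimum when MINENCRYPTBITS is not configured
DEFAULT_MAX = 128      # default maximum for LLE when MAXENCRYPTBITS is not configured
VALID_SIZES = (0, 56, 128)


def resolve_min_max(configured_min: Optional[int] = None,
                    configured_max: Optional[int] = None) -> Tuple[int, int]:
    """Return the (min, max) pair a process would use for LLE negotiation."""
    lo = DEFAULT_MIN if configured_min is None else configured_min
    hi = DEFAULT_MAX if configured_max is None else configured_max
    # Assumption: reject sizes outside the documented LLE levels.
    if lo not in VALID_SIZES or hi not in VALID_SIZES:
        raise ValueError(f"unsupported key size in ({lo}, {hi})")
    if lo > hi:
        raise ValueError(f"minimum {lo} exceeds maximum {hi}")
    return lo, hi
```

For example, `resolve_min_max(configured_max=56)` yields `(0, 56)`, and `resolve_min_max(configured_min=56)` yields `(56, 128)`.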

Parent topic: Encryption Key Size Negotiation

1.8.2.2 Finding a Common Key Size

After the min-max values are determined for the two processes, the negotiation of key size begins. The negotiation process need not be encrypted or hidden. Once a key size is agreed upon, it remains in effect for the lifetime of the network connection.

The following table describes which key size, if any, is agreed upon by two processes when all possible combinations of min-max values are negotiated. The header row holds the min-max values for one process; the far left column holds the min-max values for the other.

Table 1-3 Interprocess Negotiation Results

| | (0, 0) | (0, 56) | (0, 128) | (56, 56) | (56, 128) | (128, 128) |
|---|---|---|---|---|---|---|
| (0, 0) | 0 | 0 | 0 | ERROR | ERROR | ERROR |
| (0, 56) | 0 | 56 | 56 | 56 | 56 | ERROR |
| (0, 128) | 0 | 56 | 128 | 56 | 128 | 128 |
| (56, 56) | ERROR | 56 | 56 | 56 | 56 | ERROR |
| (56, 128) | ERROR | 56 | 128 | 56 | 128 | 128 |
| (128, 128) | ERROR | ERROR | 128 | ERROR | 128 | 128 |
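The results in Table 1-3 follow from one rule: the agreed key size is the largest supported size that lies within both processes' (min, max) ranges. A minimal Python sketch of that rule follows; the function name `negotiate` and the use of `None` to represent ERROR are illustrative choices, not part of the Tuxedo software.

```python
from typing import Optional, Tuple

KEY_SIZES = (0, 56, 128)  # LLE key lengths described in this section


def negotiate(a: Tuple[int, int], b: Tuple[int, int]) -> Optional[int]:
    """Return the largest key size acceptable to both sides, or None for ERROR."""
    lo = max(a[0], b[0])   # both minimums must be satisfied
    hi = min(a[1], b[1])   # neither maximum may be exceeded
    common = [size for size in KEY_SIZES if lo <= size <= hi]
    return max(common) if common else None
```

For instance, `negotiate((0, 56), (56, 128))` returns 56, while `negotiate((0, 0), (56, 56))` returns `None`, matching the ERROR cell in the table.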

Parent topic: Encryption Key Size Negotiation

1.8.3 Backward Compatibility of LLE

The ATMI environment of the Oracle Tuxedo product offers some backward compatibility for LLE.

- Interoperating with Release 6.5 Oracle Tuxedo Software

- Interoperating with Pre-Release 6.5 Oracle Tuxedo Software

Parent topic: Link-Level Encryption

1.8.3.1 Interoperating with Release 6.5 Oracle Tuxedo Software

The following table describes which key size, if any, is agreed upon by two ATMI applications when one of them is running under release 6.5 and the other under release 7.1 or later. The header row holds the min-max values for the process running under release 7.1 or later; the far left column holds the min-max values for the process running under release 6.5.

Table 1-4 Negotiation Results When Interoperating with Release 6.5 Oracle Tuxedo Software

| | (0,0) | (0,56) | (0,128) | (56,56) | (56,128) | (128,128) |
|---|---|---|---|---|---|---|
| (0,0) | 0 | 0 | 0 | ERROR | ERROR | ERROR |
| (0,40) | 0 | 40 | 40 | ERROR | ERROR | ERROR |
| (0,128) | 0 | 40 | 128 | ERROR | 128 | 128 |
| (40,40) | ERROR | 40 | 40 | ERROR | ERROR | ERROR |
| (40,128) | ERROR | 40 | 128 | ERROR | 128 | 128 |
| (128,128) | ERROR | ERROR | 128 | ERROR | 128 | 128 |

If your current Oracle Tuxedo installation is configured for (0, 56), (0, 128), (56,56), or (56, 128), and you want to interoperate with a release 6.5 ATMI application that is configured for a maximum LLE level of 40 bits, then any negotiation results in an automatic upgrade to 56.

The negotiation result in this case is the same as the negotiation result for two sites running release 6.5 and configured for a maximum LLE level of 40 bits. In both scenarios, the negotiation results in an automatic upgrade to 56.

Parent topic: Backward Compatibility of LLE

1.8.3.2 Interoperating with Pre-Release 6.5 Oracle Tuxedo Software

The following table describes which key size, if any, is agreed upon by two ATMI applications when one of them is running under pre-release 6.5 and the other under release 7.1 or later. The header row holds the min-max values for the process running under release 7.1 or later; the far left column holds the min-max values for the process running under pre-release 6.5.

Table 1-5 Negotiation Results When Interoperating with Pre-Release 6.5 Oracle Tuxedo Software

| | (0,0) | (0,56) | (0,128) | (56,56) | (56,128) | (128,128) |
|---|---|---|---|---|---|---|
| (0,0) | 0 | 0 | 0 | ERROR | ERROR | ERROR |
| (0,40) | 0 | 56 | 56 | 56 | 56 | ERROR |
| (0,128) | 0 | 56 | 128 | 56 | 128 | 128 |
| (40,40) | ERROR | 56 | 56 | 56 | 56 | ERROR |
| (40,128) | ERROR | 56 | 128 | 56 | 128 | 128 |
| (128,128) | ERROR | ERROR | 128 | ERROR | 128 | 128 |

If your current Oracle Tuxedo installation is configured for (0, 56) or (0, 128), and you want to interoperate with a pre-release 6.5 ATMI application that is configured for a maximum LLE level of 40 bits, then the result of any negotiation is 40.

If your current Oracle Tuxedo installation is configured for (56, 56), (56, 128), or (128, 128), then your system cannot interoperate with a pre-release 6.5 ATMI application that is configured for a maximum LLE level of 40 bits. Attempts to negotiate a common key size fail.

Parent topic: Backward Compatibility of LLE

1.8.4 WSL/WSH Connection Timeout During Initialization

The length of time a Workstation client can take for initialization is limited. By default, this interval is 30 seconds in an ATMI application not using LLE, and 60 seconds in an ATMI application using LLE. The 60-second interval includes the time needed to negotiate an encrypted link. This time limit can be changed when LLE is configured by changing the value of the MAXINITTIME parameter for the workstation listener (WSL) server in the UBBCONFIG file, or the value of the TA_MAXINITTIME attribute in the T_WSL class of the WS_MIB(5).

See Also:

- Security Administration Tasks

- Administering Link-Level Encryption

- “Distributing ATMI Applications Across a Network” and “Creating the Configuration File for a Distributed ATMI Application” in Setting Up an Oracle Tuxedo Application

Parent topic: Link-Level Encryption

1.9 TLS Encryption

The Oracle Tuxedo product provides the industry-standard TLS protocol to establish secure communications between client and server applications. When using the TLS protocol, principals use digital certificates to prove their identity to a peer.

Note:

The actual network protocol used is TLS, which is the successor to the SSL protocol. This document refers to the feature as TLS Encryption.

Like LLE, the TLS protocol can be used with password authentication to provide confidentiality and integrity for communication between the client application and the Oracle Tuxedo domain. When using the TLS protocol with password authentication, you are prompted for the password of the Listener/Handler (IIOP, Workstation, or JOLT) defined by the SEC_PRINCIPAL_NAME parameter when you enter the tmloadcf command.

TLS can be used to secure the following types of ATMI application links:

- Client to server handler (IIOP, Workstation, or JOLT)

- Bridge-to-Bridge

- Administrative utility (such as tmboot or tmshutdown) to tlisten

- Domain gateway to domain gateway

Available TLS ciphers include 256-bit, 128-bit, and 56-bit ciphers, as described later in this chapter.

- How the TLS Protocol Works

- Requirements for Using the TLS Protocol

- TLS Version Negotiation and Configuration

- Encryption Key Size Negotiation

- Backward Compatibility of TLS

- WSL/WSH Connection Timeout During Initialization

- Supported Cipher Suites

- TLS Installation

Parent topic: Overview

1.9.1 How the TLS Protocol Works

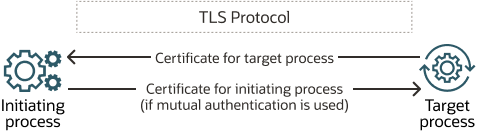

The TLS protocol works in the following manner:

- The Target Process presents its digital certificate to the initiating application.

- The initiating application compares the digital certificate of the Target Process against its list of trusted certificate authorities.

- If the initiating application validates the digital certificate of the Target Process, the application and the Target Process establish an TLS connection.

The initiating application can then use either password or certificate authentication to authenticate itself to the Oracle Tuxedo domain.

The following figure illustrates how the TLS protocol works.

Figure 1-7 How the TLS Protocol Works in a Tuxedo Application
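The certificate check performed by the initiating application can be illustrated with Python's standard `ssl` module. This is a generic TLS client sketch, not Tuxedo code: the function names and the optional `ca_file` path are hypothetical, and a Tuxedo client obtains its trusted certificates from its own credential store rather than from this API.

```python
import socket
import ssl
from typing import Optional


def make_initiator_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a client-side context that validates the target's certificate.

    With ca_file=None the platform's default trusted CA list is used.
    """
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    # create_default_context already requires a valid certificate chain
    # and a matching host name; the handshake fails otherwise.
    return context


def connect(host: str, port: int, context: ssl.SSLContext) -> str:
    """Open a TLS connection and return the negotiated protocol version."""
    with socket.create_connection((host, port)) as raw:
        with context.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version()


context = make_initiator_context()
```

The handshake in `connect` raises `ssl.SSLCertVerificationError` if the presented certificate does not chain to a trusted certificate authority, which corresponds to the validation step in the list above.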

Parent topic: TLS Encryption

1.9.2 Requirements for Using the TLS Protocol

The implementation of the TLS protocol is flexible enough to fit into most public key infrastructures. Tuxedo offers two different methods to store TLS security credentials:

- The Oracle Wallet is a new feature of Tuxedo 12c. An Oracle Wallet stores the private key, certificate chain, and trusted certificates for a process within a single PKCS12 file, which can be created using either Oracle tools or tools from other security vendors.

- The plug-in framework used in previous releases of Tuxedo can also be used to store security credentials. The default implementation of the plug-in framework in the Oracle Tuxedo product requires that digital certificates are stored in an LDAP-enabled directory. You can choose any LDAP-enabled directory service. You also need to choose the certificate authority from which to obtain digital certificates and private keys used in a Tuxedo application. You must have an LDAP-enabled directory service and a certificate authority in place before using the TLS protocol in a Tuxedo application.

Parent topic: TLS Encryption

1.9.3 TLS Version Negotiation and Configuration

Tuxedo 12.2.2 supports TLS 1.2, 1.1, and 1.0, while some earlier Tuxedo releases support only TLS 1.0. When a secure network connection is established between the TLS server and client, the TLS version to be used is negotiated. When acting as a TLS server, Tuxedo components always accept TLS 1.2, 1.1, and 1.0 initiating requests. When acting as a TLS client, Tuxedo 12.2.2 components conform to the following rules:

- WSC is self-adaptive: it connects to a Tuxedo 12.2.2 listener using TLS 1.2, and automatically connects to a listener from an older release using TLS 1.0.

- GWTDOMAIN, CORBA client, and GWWS outbound HTTPS use TLS 1.2 by default. You need to change their TLS version to TLS 1.0 when connecting to a Tuxedo 12.1.3 GA or earlier release.

- When GWTDOMAIN is acting as both TLS client and TLS server, only the TLS version specified for the TLS client side takes effect.

- If a Tuxedo 12.2.2 master machine connects to an earlier release slave machine in an MP model, you must start tlisten on the master machine before running the tmboot command.

Table 1-6 Default TLS Version and Related Parameter

| When a TLS client is... | The default TLS version used is... | You can change the TLS version using... |
|---|---|---|
| GWTDOMAIN | TLS 1.2 | The TLS version parameter in DMCONFIG. For more information, see File Formats, Data Descriptions, MIBs, and System Processes Reference. |
| WSC | Self-adaptive | The environment variable WSNADDR |
| CORBA client (Tobj_Bootstrap) | TLS 1.2 | The Tobj_Bootstrap constructor naddress parameter or the environment variable TOBJADDR. For more information, see File Formats, Data Descriptions, MIBs, and System Processes Reference. |
| GWWS outbound | TLS 1.2 | The new attribute <TLSversion> for End Point of outbound in SALT Deployment File. For more information, see Configuring a SALT Application. |

Parent topic: TLS Encryption

1.9.4 Encryption Key Size Negotiation

When two processes at the opposite ends of a network link need to communicate, they must first agree on the size of the key to be used for encryption. This agreement is resolved through a two-step process of negotiation.

- Each process identifies its own min-max values.

- Together, the two processes find the largest key size supported by both.

1.9.4.1 Determining Min-Max Values

A Tuxedo process resolves its MINENCRYPTBITS and MAXENCRYPTBITS values using the following steps:

- If the configured min-max values accommodate the default min-max values, then the local software assigns those values as the min-max values for the process.

- If one of the min-max values is not configured, then the default value will be used for the missing value. For instance (0, max-value-configured) or (min-value-configured, 128) will be used.

- If there are no min-max values specified in the configurations for a particular link type, then the local software assigns 0 as the minimum value and assigns 128 as the maximum value.

- The minimum encryption key size is 112. If a min-max value is configured with 40 or 56, then 112 will be used by default.

- The configuration information about encryption strength is processed independent of the type of link-level security.

- For /WS client, the default MAXENCRYPTBITS is 256; it will be adjusted according to the actual link-level security configured.
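The rules above can be sketched in Python as follows. The names `normalize_tls_bits` and `resolve_tls_min_max` are hypothetical. The sketch applies only what the text states: missing values default to 0 and 128, and configured values of 40 or 56 are raised to 112. How an unconfigured minimum of 0 interacts with the 112-bit floor is not specified here, so the sketch leaves 0 unchanged.

```python
from typing import Optional, Tuple

TLS_FLOOR = 112          # minimum TLS encryption key size stated in the text
WEAK_SIZES = (40, 56)    # configured values that are replaced by the floor


def normalize_tls_bits(value: int) -> int:
    """Replace a configured 40 or 56 with 112; leave other values unchanged."""
    return TLS_FLOOR if value in WEAK_SIZES else value


def resolve_tls_min_max(configured_min: Optional[int] = None,
                        configured_max: Optional[int] = None) -> Tuple[int, int]:
    """Apply the defaults (0, 128) and the 112-bit substitution."""
    lo = 0 if configured_min is None else normalize_tls_bits(configured_min)
    hi = 128 if configured_max is None else normalize_tls_bits(configured_max)
    return lo, hi
```

With these rules, a configuration of (56, 128) is treated as (112, 128), and (40, 56) collapses to (112, 112).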

Parent topic: Encryption Key Size Negotiation

1.9.4.2 Finding a Common Key Size

After the min-max values are determined for the two processes, the negotiation of key size begins. The negotiation process need not be encrypted or hidden. Once a key size is agreed upon, it remains in effect for the lifetime of the network connection.

The following table describes which key size, if any, is agreed upon by two processes when all possible combinations of min-max values are negotiated. The header row holds the min-max values for one process; the far left column holds the min-max values for the other.

Table 1-7 Interprocess Negotiation Results (112,112) to (112,256)

| | (112,112) | (112,128) | (112,256) |
|---|---|---|---|
| (112,112) | 112 | 112 | 112 |
| (112,128) | 112 | 128 | 128 |
| (112,256) | 112 | 128 | 256 |
| (128,128) | ERROR | 128 | 128 |
| (128,256) | ERROR | 128 | 256 |
| (256,256) | ERROR | ERROR | 256 |

Table 1-8 Interprocess Negotiation Results (128,128) to (256,256)

| | (128,128) | (128,256) | (256,256) |
|---|---|---|---|
| (112,112) | ERROR | ERROR | ERROR |
| (112,128) | 128 | 128 | ERROR |
| (112,256) | 128 | 256 | 256 |
| (128,128) | 128 | 128 | ERROR |
| (128,256) | 128 | 256 | 256 |
| (256,256) | ERROR | 256 | 256 |

Parent topic: Encryption Key Size Negotiation

1.9.5 Backward Compatibility of TLS

In order to use TLS between two Tuxedo processes, both processes must be running Tuxedo 10.0 or later (except when using the CORBA TLS capabilities described in "Using Security in CORBA Applications"). It is possible to specify both non-TLS and TLS ports for WSL and JSL processes and to specify TLS or LLE connectivity for individual entries in the *DM_TDOMAIN section of a DMCONFIG file. In this way, it is possible to gradually migrate a workstation or domain application to use TLS as individual workstation clients and Tuxedo domains are upgraded to Tuxedo 10.

Note:

- It is not possible to use TLS between BRIDGE and tlisten processes in an MP mode application until all machines in the Tuxedo domain are upgraded to Tuxedo 10.0 or later.

- Zero bit TLS ciphers (which do not actually encrypt application data) were allowed prior to Tuxedo 12.1.1, but are disallowed by the Oracle NZ Security Layer used in Tuxedo 12.1.1 and later.

Parent topic: TLS Encryption

1.9.6 WSL/WSH Connection Timeout During Initialization

The length of time a Workstation client can take for initialization is limited. By default, this interval is 60 seconds, which includes the time needed to negotiate an encrypted link. This time limit can be changed when WSL is configured by changing the value of the MAXINITTIME parameter for the workstation listener (WSL) server in the UBBCONFIG file, or the value of the TA_MAXINITTIME attribute in the T_WSL class of the WS_MIB(5).

Parent topic: TLS Encryption

1.9.7 Supported Cipher Suites

A cipher suite is a TLS encryption method that includes the key exchange algorithm, the symmetric encryption algorithm, and the secure hash algorithm used to protect the integrity of the communication. For example, the cipher suite RSA_WITH_RC4_128_MD5 uses RSA for key exchange, RC4 with a 128-bit key for bulk encryption, and MD5 for message digest. The ATMI security environment supports the cipher suites described in the following table.

Table 1-9 SSL/TLS Cipher Suites Supported by the ATMI Security Environment

| Cipher Suite | Key Exchange Type | Symmetric Key Strength |
|---|---|---|
| TLS_RSA_WITH_AES_256_CBC_SHA | RSA | 256 |
| TLS_RSA_WITH_AES_128_CBC_SHA | RSA | 128 |
| SSL_RSA_WITH_RC4_128_SHA | RSA | 128 |
| SSL_RSA_WITH_RC4_128_MD5 | RSA | 128 |
| SSL_RSA_WITH_3DES_EDE_CBC_SHA, SSL_DH_anon_WITH_3DES_EDE_CBC_SHA | RSA | 112 |
| SSL_RSA_WITH_DES_CBC_SHA, SSL_DH_anon_WITH_DES_CBC_SHA | RSA | 56 |
| SSL_RSA_EXPORT_WITH_RC4_40_MD5, SSL_RSA_EXPORT_WITH_DES40_CBC_SHA, SSL_DH_anon_EXPORT_WITH_DES40_CBC_SHA, SSL_DH_anon_EXPORT_WITH_RC4_40_MD5 | RSA | 40 |
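The naming pattern described at the start of this section (key exchange, then symmetric cipher, then digest) can be made concrete with a small Python parser. The `CipherSuite` type and `parse_cipher_suite` function are illustrative helpers, not part of any Tuxedo or TLS library, and they assume names of the form `[SSL_|TLS_]<key exchange>_WITH_<cipher>_<digest>`.

```python
from typing import NamedTuple


class CipherSuite(NamedTuple):
    key_exchange: str
    cipher: str
    digest: str


def parse_cipher_suite(name: str) -> CipherSuite:
    """Split a cipher suite name into its three components."""
    left, separator, right = name.partition("_WITH_")
    if not separator:
        raise ValueError(f"not a recognized cipher suite name: {name}")
    # Drop the optional protocol prefix from the key exchange part.
    for prefix in ("TLS_", "SSL_"):
        if left.startswith(prefix):
            left = left[len(prefix):]
            break
    # The digest is the final token; everything before it names the cipher.
    cipher, _, digest = right.rpartition("_")
    return CipherSuite(left, cipher, digest)
```

For example, `parse_cipher_suite("RSA_WITH_RC4_128_MD5")` yields RSA key exchange, the `RC4_128` cipher, and the MD5 digest, as described in the text.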

Parent topic: TLS Encryption

1.9.8 TLS Installation

TLS is delivered as a standard feature of the Tuxedo system. If an application will not be using the Oracle Wallet to store security credentials and will be using LDAP to obtain certificates, then the administrator should have the name of their LDAP server, the LDAP port number, and the LDAP filter file location available at installation time. (The default LDAP filter file location of $TUXDIR/udataobj/security/bea_ldap_filter.dat should be fine for most applications.)

This information can be changed after installation using the epifregedt command.

See Also:

- Security Administration Tasks

- Administering TLS Encryption

- “Distributing ATMI Applications Across a Network” and “Creating the Configuration File for a Distributed ATMI Application” in Setting Up an Oracle Tuxedo Application

- Using Security in CORBA Applications

Parent topic: TLS Encryption

1.10 Public Key Security

Public key security provides two capabilities that make end-to-end digital signing and data encryption possible:

- Message-based digital signature

- Message-based encryption

Message-based digital signature allows the recipient (or recipients) of a message to identify and authenticate both the sender and the sent message. Digital signature provides solid proof of the originator and content of a message; a sender cannot falsely repudiate responsibility for a message to which that sender’s digital signature is attached. Thus, for example, Bob cannot issue a request for a withdrawal from his bank account and later claim that someone else issued that request.

In addition, message-based encryption protects the confidentiality of messages by ensuring that only designated recipients can decrypt and read them.

1.10.1 PKCS-7 Compliant

Informal but recognized industry standards for public key software have been issued by a group of leading communications companies, led by RSA Laboratories. These standards are called Public-Key Cryptography Standards, or PKCS. The public key software in the ATMI environment of the Oracle Tuxedo software complies with the PKCS-7 standard.

PKCS-7 is a hybrid cryptosystem architecture. A symmetric key algorithm with a random session key is used to encrypt a message, and a public key algorithm is used to encrypt the random session key. A random number generator creates a new session key for each communication, which makes it difficult for a would-be attacker to reuse previous communications.
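The hybrid structure can be illustrated with a toy Python sketch that uses only the standard library. It is deliberately simplified and is not secure: it uses unpadded textbook RSA with small fixed primes and a hash-based XOR keystream in place of a real symmetric cipher, and it does not produce PKCS-7 encoded output. All names (`seal`, `open_envelope`, `keystream_xor`) are hypothetical.

```python
import hashlib
import secrets

# Toy RSA key pair built from two known Mersenne primes (illustration only).
P, Q = 2**127 - 1, 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))   # private exponent (Python 3.8+)


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Stand-in symmetric cipher: XOR data with a SHA-256 derived keystream."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


def seal(message: bytes):
    """Encrypt the message with a fresh session key, then wrap that key with RSA."""
    session_key = secrets.token_bytes(16)               # new random key per message
    ciphertext = keystream_xor(session_key, message)    # symmetric step
    wrapped_key = pow(int.from_bytes(session_key, "big"), E, N)  # public key step
    return wrapped_key, ciphertext


def open_envelope(wrapped_key: int, ciphertext: bytes) -> bytes:
    """Recover the session key with the private key, then decrypt the message."""
    session_key = pow(wrapped_key, D, N).to_bytes(16, "big")
    return keystream_xor(session_key, ciphertext)
```

Because `seal` draws a new session key on every call, sealing the same message twice produces different output, which is the property the paragraph above attributes to per-communication session keys.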

Parent topic: Public Key Security

1.10.2 Supported Algorithms for Public Key Security

All the algorithms on which public key security is based are well known and commercially available. To select the algorithms that will best serve your ATMI application, consider the following factors: speed, degree of security, and licensing restrictions (for example, the United States government restricts the algorithms that it allows to be exported to other countries).

- Public Key Algorithms

- Digital Signature Algorithms

- Symmetric Key Algorithms

- Message Digest Algorithms

Parent topic: Public Key Security

1.10.2.1 Public Key Algorithms

The public key security in the ATMI environment of the Oracle Tuxedo product supports any public key algorithms supported by the underlying plug-ins, including RSA, ElGamal, and Rabin. (RSA stands for Rivest, Shamir, and Adleman, the inventors of the RSA algorithm.) All these algorithms can be used for digital signatures and encryption.

Public key (or asymmetric key) algorithms such as RSA are implemented through a pair of different but mathematically related keys:

- A public key (which is distributed widely) for verifying a digital signature or transforming data into a seemingly unintelligible form.

- A private key (which is always kept secret) for creating a digital signature or returning the data to its original form.

Parent topic: Supported Algorithms for Public Key Security

1.10.2.2 Digital Signature Algorithms

The public key security in the ATMI environment of the Oracle Tuxedo product supports any digital signature algorithms supported by the underlying plug-ins, including RSA, ElGamal, Rabin, and Digital Signature Algorithm (DSA). With the exception of DSA, all these algorithms can be used for digital signatures and encryption. DSA can be used for digital signatures but not for encryption.

Digital signature algorithms are simply public key algorithms used to provide digital signatures. DSA is also a public key algorithm (implemented through public-private key pairs), but it can only be used to provide digital signatures, not encryption.

Parent topic: Supported Algorithms for Public Key Security

1.10.2.3 Symmetric Key Algorithms

Public key security supports the following three symmetric key algorithms:

- DES-CBC (Data Encryption Standard for Cipher Block Chaining)

DES-CBC is a 64-bit block cipher run in Cipher Block Chaining (CBC) mode. It provides 56-bit keys (8 parity bits are stripped from the full 64-bit key) and is exportable outside the United States.

- Two-key triple-DES (Data Encryption Standard)

Two-key triple-DES is a 64-bit block cipher run in Encrypt-Decrypt-Encrypt (EDE) mode. Two-key triple-DES provides two 56-bit keys (in effect, a 112-bit key, or 128 bits including parity) and is not exportable outside the United States.

For some time it has been common practice to protect and transport a key for DES encryption with triple-DES, which means that the input data (in this case the single-DES key) is encrypted, decrypted, and then encrypted again (an encrypt-decrypt-encrypt process). The same key is used for the two encryption operations.

- RC2 (Rivest’s Cipher 2)

RC2 is a variable key-size block cipher with a key size range of 40 to 128 bits. It is faster than DES and is exportable with a key size of 40 bits. A 56-bit key size is allowed for foreign subsidiaries and overseas offices of United States companies. In the United States, RC2 can be used with keys of virtually unlimited length, although the ATMI public key security restricts the key length to 128 bits.

Oracle Tuxedo customers cannot expand or modify this list of algorithms.

In symmetric key algorithms, the same key is used to encrypt and decrypt a message. The public key encryption system uses symmetric key encryption to encrypt a message sent between two communicating entities. Symmetric key encryption operates at least 1000 times faster than public key cryptography.

A block cipher is a type of symmetric key algorithm that transforms a fixed-length block of plaintext (unencrypted text) data into a block of ciphertext (encrypted text) data of the same length. This transformation takes place in accordance with the value of a randomly generated session key. The fixed length is called the block size.

Parent topic: Supported Algorithms for Public Key Security

1.10.2.4 Message Digest Algorithms

Public key security supports any message digest algorithms supported by the underlying plug-ins, including MD5, SHA-1 (Secure Hash Algorithm 1), and many others. Both MD5 and SHA-1 are well known, one-way hash algorithms. A one-way hash algorithm takes a message and converts it into a fixed string of digits, which is referred to as a message digest or hash value.

MD5 is a high-speed, 128-bit hash; it is intended for use with 32-bit machines. SHA-1 offers more security by using a 160-bit hash, but is slower than MD5.
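The digest sizes mentioned above can be checked with Python's standard `hashlib` module. This is a generic illustration of one-way hashing, not Tuxedo code; the sample message is arbitrary.

```python
import hashlib

message = b"withdraw 100 from account 42"

md5_digest = hashlib.md5(message).digest()     # 16 bytes = 128 bits
sha1_digest = hashlib.sha1(message).digest()   # 20 bytes = 160 bits

md5_bits = len(md5_digest) * 8
sha1_bits = len(sha1_digest) * 8

# Any change to the message changes the digest.
tampered_digest = hashlib.sha1(b"withdraw 900 from account 42").digest()
```

The digest length is fixed by the algorithm regardless of how long the message is, which is what makes a digest suitable as the input to a digital signature.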

Parent topic: Supported Algorithms for Public Key Security

1.11 Message-based Digital Signature

Message-based digital signatures enhance ATMI security by allowing a message originator to prove its identity, and by binding that proof to a specific message buffer. Mutually authenticated and tamper-proof communication is considered essential for ATMI applications that transport data over the Internet, either between companies or between a company and the general public. It also is critical for ATMI applications deployed over insecure internal networks.

The scope of protection for a message-based digital signature is end-to-end: a message buffer is protected from the time it leaves the originating process until the time it is received at the destination process. It is protected at all intermediate transit points, including temporary message queues, disk-based queues, and system processes, and during transmission over inter-server network links.

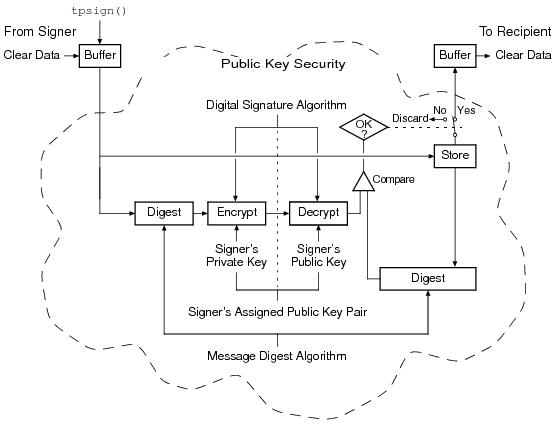

The following figure illustrates how end-to-end message-based digital signature works.

Figure 1-8 ATMI PKCS-7 End-to-End Digital Signing

Message-based digital signature involves generating a digital signature by computing a message digest on the message, and then encrypting the message digest with the sender’s private key. The recipient verifies the signature by decrypting the encrypted message digest with the signer’s public key, and then comparing the recovered message digest to an independently computed message digest. The signer’s public key either is contained in a digital certificate included in the signer information, or is referenced by an issuer-distinguished name and issuer-specific serial number that uniquely identify the certificate for the public key.
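The sign-and-verify procedure described above can be sketched in Python with the standard library. This is a toy illustration, not the ATMI implementation: it uses unpadded textbook RSA with small fixed primes, which is not secure, and it omits the PKCS-7 signer information and certificate handling. The function names are hypothetical.

```python
import hashlib

# Toy RSA key pair built from two known Mersenne primes (illustration only).
P, Q = 2**127 - 1, 2**89 - 1
N = P * Q
E = 65537                             # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))     # private exponent (Python 3.8+)


def digest_as_int(message: bytes) -> int:
    """Compute the SHA-1 message digest and interpret it as an integer."""
    return int.from_bytes(hashlib.sha1(message).digest(), "big")


def sign(message: bytes) -> int:
    """Sender: transform the message digest with the private key."""
    return pow(digest_as_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Recipient: recover the digest with the public key and compare it
    to an independently computed digest of the received message."""
    return pow(signature, E, N) == digest_as_int(message)


signature = sign(b"withdraw 100 from account 42")
```

Verification succeeds only for the exact message that was signed; `verify(b"withdraw 900 from account 42", signature)` returns `False`.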

1.11.1 Digital Certificates

Digital certificates are electronic files used to uniquely identify individuals and resources over networks such as the Internet. A digital certificate securely binds the identity of an individual or resource, as verified by a trusted third party known as a Certification Authority, to a particular public key. Because no two public keys are ever identical, a public key can be used to identify its owner.

Digital certificates allow verification of the claim that a specific public key does in fact belong to a specific subscriber. A recipient of a certificate can use the public key listed in the certificate to verify that the digital signature was created with the corresponding private key. If such verification is successful, this chain of reasoning provides assurance that the corresponding private key is held by the subscriber named in the certificate, and that the digital signature was created by that particular subscriber.

A certificate typically includes a variety of information, such as:

- The name of the subscriber (holder, owner) and other identification information required to uniquely identify the subscriber, such as the URL of the Web server using the certificate, or an individual’s e-mail address.

- The subscriber’s public key.

- The name of the Certification Authority that issued the certificate.

- A serial number.

- The validity period (or lifetime) of the certificate (defined by a start date and an end date).
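
As a rough mental model, the fields listed above can be pictured as a simple record. The class below is a hypothetical sketch, not an X.509 parser and not an Oracle Tuxedo data structure; the field names are assumptions chosen to mirror the list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Tuple


@dataclass(frozen=True)
class Certificate:
    """Hypothetical, simplified view of the information in a certificate."""
    subject: str          # name of the subscriber (holder, owner)
    public_key: int       # the subscriber's public key (simplified)
    issuer: str           # name of the issuing Certification Authority
    serial_number: int    # issuer-specific serial number
    not_before: datetime  # start of the validity period
    not_after: datetime   # end of the validity period

    def is_within_validity(self, at: datetime) -> bool:
        """Return True if `at` falls inside the validity period."""
        return self.not_before <= at <= self.not_after

    def issuer_and_serial(self) -> Tuple[str, int]:
        """Issuer name plus serial number identify the certificate."""
        return (self.issuer, self.serial_number)


# Made-up example values.
example_cert = Certificate(
    subject="CN=alice@example.com",
    public_key=65537,
    issuer="CN=Example CA",
    serial_number=1001,
    not_before=datetime(2025, 1, 1, tzinfo=timezone.utc),
    not_after=datetime(2026, 1, 1, tzinfo=timezone.utc),
)
```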

The most widely accepted format for certificates is defined by the ITU-T X.509 international standard. Thus, certificates can be read or written by any ATMI application complying with X.509. The public key security in the ATMI environment of the Oracle Tuxedo product recognizes certificates that comply with X.509 version 3, or X.509v3.

1.11.2 Certification Authority

Certificates are issued by a Certification Authority, or CA. Any trusted third-party organization or company that is willing to vouch for the identities of those to whom it issues certificates and public keys can be a CA. When it creates a certificate, the CA signs the certificate with its private key, to obtain a digital signature. The CA then returns the certificate with the signature to the subscriber; these two parts—the certificate and the CA’s signature—together form a valid certificate.

The subscriber and others can verify the issuing CA’s digital signature by using the CA’s public key. The CA makes its public key readily available by publicizing that key or by providing a certificate from a higher-level CA attesting to the validity of the lower-level CA’s public key. The second solution gives rise to hierarchies of CAs.

The recipient of a signed message can develop trust in the CA’s public key recursively, if the recipient has a certificate containing the CA’s public key signed by a superior CA whom the recipient already trusts. In this sense, a certificate is a stepping stone in digital trust. Ultimately, it is necessary to trust only the public keys of a small number of top-level CAs. Through a chain of certificates, trust in a large number of users’ signatures can be established.

Thus, digital signatures establish the identities of communicating entities, but a signature can be trusted only to the extent that the public key for verifying the signature can be trusted.
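
The idea of a certificate chain can be illustrated with a short Python sketch. It models only the issuer linkage from a subscriber up to a trusted top-level CA; a real implementation would also verify each CA signature along the way. All names and the sample hierarchy are hypothetical.

```python
from typing import Dict, List, Optional, Set

# Hypothetical hierarchy: subject name -> name of the issuing CA.
ISSUED_BY: Dict[str, str] = {
    "alice": "Regional CA",
    "Regional CA": "Root CA",
    "Root CA": "Root CA",      # self-signed top-level CA
    "Rogue CA": "Rogue CA",    # self-signed, but not trusted
    "mallory": "Rogue CA",
}
TRUSTED_ROOTS: Set[str] = {"Root CA"}


def chain_to_trusted_root(
    subject: str,
    issued_by: Dict[str, str],
    trusted_roots: Set[str],
    max_depth: int = 10,
) -> Optional[List[str]]:
    """Follow issuer links until a trusted CA is reached.

    Returns the chain of names, or None if no trusted CA is reachable.
    Signature checks are deliberately omitted in this sketch.
    """
    chain = [subject]
    current = subject
    for _ in range(max_depth):
        if current in trusted_roots:
            return chain
        issuer = issued_by.get(current)
        if issuer is None or issuer == current:
            return None  # unknown issuer, or an untrusted self-signed CA
        chain.append(issuer)
        current = issuer
    return None
```

With this data, `alice` resolves to a chain ending at `Root CA`, while `mallory` yields `None` because the chain ends at a self-signed CA that is not in the trusted set.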

Note:

The Oracle Tuxedo public key plug-in interface enables customers to use the CA of their choice.

1.11.3 Certificate Repositories

To facilitate the use of a public key in verification, a digital certificate may be published in a repository or made accessible in another manner. Repositories are databases of certificates and other information available for retrieval and use in verifying digital signatures. Retrieval can be accomplished automatically by having the verification program request certificates from the repository as required.

1.11.4 Public-Key Infrastructure

The Public-Key Infrastructure (PKI) consists of protocols, services, and standards supporting applications of public key cryptography. Because the technology is still relatively new, the term PKI is somewhat loosely defined: sometimes “PKI” simply refers to a trust hierarchy based on public key certificates; in other contexts, it embraces digital signature and encryption services provided to end-user applications as well.

There is no single standard public key infrastructure today, though efforts are underway to define one. It is not yet clear whether a standard will be established or whether multiple independent PKIs will evolve with varying degrees of interoperability. In this sense, the state of PKI technology today can be viewed as similar to local and wide-area network technology in the 1980s, before there was widespread connectivity via the Internet.

The following services are likely to be found in a PKI:

- Key registration: for issuing a new certificate for a public key

- Certificate revocation: for canceling a previously issued certificate

- Key selection: for obtaining a party’s public key

- Trust evaluation: for determining whether a certificate is valid and which operations it authorizes

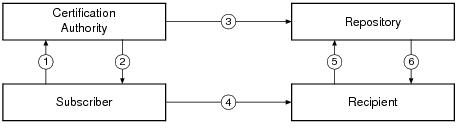

The following figure illustrates the PKI process flow.

Figure 1-9 PKI Process Flow

- Subscriber applies to Certification Authority (CA) for digital certificate.

- CA verifies identity of subscriber and issues digital certificate.

- CA publishes certificate to repository.

- Subscriber digitally signs electronic message with private key to ensure sender authenticity, message integrity, and non-repudiation, and then sends message to recipient.

- Recipient receives message, verifies digital signature with subscriber’s public key, and goes to repository to check status and validity of subscriber’s certificate.

- Repository returns results of status check on subscriber’s certificate to recipient.
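
The repository steps in this flow (publish, then a status check by the recipient) can be sketched with a small in-memory stand-in. This is a hypothetical illustration only; real repositories are typically directory services, and the class and method names below are assumptions.

```python
from typing import Dict, Tuple


class CertificateRepository:
    """Hypothetical in-memory repository keyed by (issuer, serial number)."""

    def __init__(self) -> None:
        self._status: Dict[Tuple[str, int], str] = {}

    def publish(self, issuer: str, serial_number: int) -> None:
        """CA publishes a newly issued certificate."""
        self._status[(issuer, serial_number)] = "valid"

    def revoke(self, issuer: str, serial_number: int) -> None:
        """CA cancels a previously issued certificate."""
        if (issuer, serial_number) in self._status:
            self._status[(issuer, serial_number)] = "revoked"

    def check_status(self, issuer: str, serial_number: int) -> str:
        """Recipient asks for the status of a subscriber's certificate."""
        return self._status.get((issuer, serial_number), "unknown")


repository = CertificateRepository()
repository.publish("Example CA", 1001)
repository.publish("Example CA", 1002)
repository.revoke("Example CA", 1002)
```

A recipient would accept a verified signature only when `check_status` reports `"valid"` for the signer's certificate.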

Note:

Oracle Tuxedo enables you to use a PKI security solution based on PKI software from your vendor of choice through the Oracle Tuxedo public key plug-in interface.

1.12 Message-based Encryption

Message-based encryption keeps data private, which is essential for ATMI applications that transport data over the Internet, whether between companies or between a company and its customers. Data privacy is also critical for ATMI applications deployed over insecure internal networks.

Message-based encryption also helps ensure message integrity, because it is more difficult for an attacker to modify a message when the content is obscured.

The scope of protection provided by message-based encryption is end-to-end; a message buffer is protected from the time it leaves the originating process until the time it is received at the destination process. It is protected at all intermediate transit points, including temporary message queues, disk-based queues, and system processes, and during transmission over interserver network links.

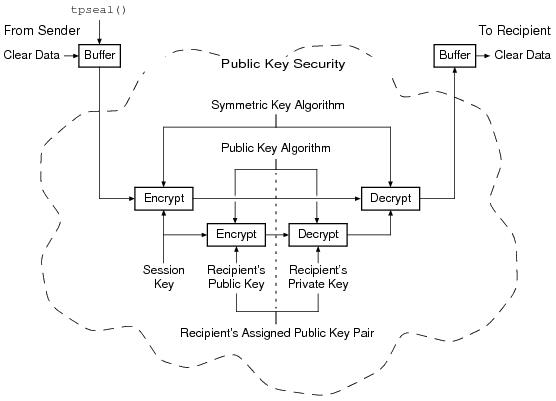

The following figure illustrates how end-to-end message-based encryption works.

Figure 1-10 ATMI PKCS-7 End-to-End Encryption

The sender encrypts the message with a symmetric key algorithm and a session key, and then encrypts the session key with the recipient’s public key. On receipt, the recipient decrypts the encrypted session key with the recipient’s private key, and then decrypts the encrypted message with the session key to obtain the message content.

Note:

Figure 1-10 does not depict two other steps in this process: (1) the data is compressed immediately before it is encrypted; and (2) the data is uncompressed immediately after it is decrypted.

Because the unit of encryption is an ATMI message buffer, message-based encryption is compatible with all existing ATMI programming interfaces and communication paradigms. The encryption process is always the same, whether it is being performed on messages shipped between two processes in a single machine, or on messages sent between two machines through a network.
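
The envelope pattern described in this section (compress, encrypt with a session key, wrap the session key with the recipient's public key) can be sketched in Python. Everything here is a stand-in chosen so the example runs with the standard library alone: the SHA-256 keystream is not a real cipher, the RSA key pair is a toy, and the function names are hypothetical. None of it is the Oracle Tuxedo API or the PKCS-7 format.

```python
import hashlib
import secrets
import zlib

# Toy RSA key pair for the recipient. ASSUMPTION for illustration only:
# unpadded and far too small for real use. Requires Python 3.8+.
P, Q = 2**61 - 1, 2**127 - 1
N = P * Q
E = 65537                          # recipient's public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # recipient's private exponent


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Stand-in symmetric cipher: XOR data with a SHA-256 based keystream."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


def seal(message: bytes):
    """Sender: compress, encrypt with a fresh session key, wrap the key."""
    session_key = secrets.token_bytes(16)  # 128 bits, so always < N
    ciphertext = keystream_xor(session_key, zlib.compress(message))
    wrapped_key = pow(int.from_bytes(session_key, "big"), E, N)
    return wrapped_key, ciphertext


def open_envelope(wrapped_key: int, ciphertext: bytes) -> bytes:
    """Recipient: unwrap the session key, decrypt, then uncompress."""
    session_key = pow(wrapped_key, D, N).to_bytes(16, "big")
    return zlib.decompress(keystream_xor(session_key, ciphertext))
```

Only the small session key goes through the public key operation; the bulk data is handled by the symmetric step, which is the usual reason for this two-layer design.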

1.13 Public Key Implementation

The underlying plug-in interface for public key security consists of six component interfaces, each of which requires one or more plug-ins. By instantiating these interfaces with your preferred plug-ins, you can bring custom message-based digital signature and message-based encryption to your ATMI application.

The six component interfaces are:

- Public key initialization

- Key management

- Certificate lookup

- Certificate parsing

- Certificate validation

- Proof material mapping

- Public Key Initialization

- Key Management

- Certificate Lookup

- Certificate Parsing

- Certificate Validation

- Proof Material Mapping

- Implementing Custom Public Key Security

- Default Public Key Implementation

1.13.1 Public Key Initialization

The public key initialization interface allows public key software to open public and private keys. For example, gateway processes may need to have access to a specific private key in order to decrypt messages before routing them. This interface is implemented as a fanout.

1.13.2 Key Management

The key management interface allows public key software to manage and use public and private keys. Note that message digests and session keys are encrypted and decrypted using this interface, but no bulk data encryption is performed using public key cryptography. Bulk data encryption is performed using symmetric key cryptography.

1.13.3 Certificate Lookup

The certificate lookup interface allows public key software to retrieve X.509v3 certificates for a given principal. Principals are authenticated users. The certificate database may be stored using any appropriate tool, such as Lightweight Directory Access Protocol (LDAP), Microsoft Active Directory, Netware Directory Service (NDS), or local files.

1.13.4 Certificate Parsing

The certificate parsing interface allows public key software to associate a simple principal name with an X.509v3 certificate. The parser analyzes a certificate to generate a principal name to be associated with the certificate.
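
One plausible way to derive a simple principal name is to take the common name (CN) from the certificate's subject. The sketch below assumes that mapping and a comma-separated subject string; it is hypothetical, ignores escaped commas, and is not how any particular Oracle Tuxedo plug-in is known to work.

```python
def principal_from_subject(subject_dn: str) -> str:
    """Return the CN component of a subject string such as
    'CN=alice, O=Example Corp, C=US' (simplified parsing)."""
    for part in subject_dn.split(","):
        key, separator, value = part.strip().partition("=")
        if separator and key.upper() == "CN":
            return value.strip()
    raise ValueError("subject has no CN component: " + subject_dn)
```

For example, `principal_from_subject("CN=alice, O=Example Corp, C=US")` returns `"alice"`.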

1.13.5 Certificate Validation

The certificate validation interface allows public key software to validate an X.509v3 certificate in accordance with specific business logic. This interface is implemented as a fanout, which allows Oracle Tuxedo customers to use their own business rules to determine the validity of a certificate.
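
The fanout idea can be pictured as one entry point that forwards a certificate to several business-rule checks. The sketch below assumes that a certificate is accepted only if every registered check accepts it; the actual fanout semantics in Oracle Tuxedo are not described here, and all names and rules are hypothetical.

```python
from typing import Callable, Dict, List

# A certificate is modeled as a plain dict for this sketch.
Validator = Callable[[Dict[str, str]], bool]


def make_fanout(validators: List[Validator]) -> Validator:
    """Build a single validator that consults every registered rule."""
    def fanout(certificate: Dict[str, str]) -> bool:
        # ASSUMPTION: the certificate is valid only if all rules accept it.
        return all(rule(certificate) for rule in validators)
    return fanout


def issuer_is_approved(certificate: Dict[str, str]) -> bool:
    """Example business rule: accept only certificates from one CA."""
    return certificate.get("issuer") == "Example CA"


def subject_is_internal(certificate: Dict[str, str]) -> bool:
    """Example business rule: accept only subjects in one mail domain."""
    return certificate.get("subject", "").endswith("@example.com")


validate = make_fanout([issuer_is_approved, subject_is_internal])
```

Adding a business rule then means registering one more function rather than changing the code that calls `validate`.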

1.13.6 Proof Material Mapping

The proof material mapping interface allows public key software to access the proof materials needed to open keys, provide authorization tokens, and provide auditing tokens.

1.13.7 Implementing Custom Public Key Security

You can provide public key security for your ATMI application by using custom plug-ins. You choose a plug-in by configuring the Oracle Tuxedo registry, a tool that controls all security plug-ins.

If you want to use custom public key plug-ins, you must configure the registry for your public key plug-ins before you can install them. For more detail about the registry, see Setting the Oracle Tuxedo Registry.

1.14 Default Authentication and Authorization

The default authentication and authorization plug-ins provided by the ATMI environment of the Oracle Tuxedo product work in the same manner that implementations of authentication and authorization have worked since they were first made available with the Oracle Tuxedo system.

An application administrator can use the default authentication and authorization plug-ins to configure an ATMI application with one of five levels of security. The five levels are:

- No authentication

- Application password security

- User-level authentication

- Optional access control list (ACL) security

- Mandatory ACL security

At the lowest level, no authentication is provided. At the highest level, an access control checking feature determines which users can execute a service, post an event, or enqueue (or dequeue) a message on an application queue. The security levels are briefly described in the following table:

Table 1-10 Security Levels for Default Authentication and Authorization

| Security Level | Description |

|---|---|