4 Workflow

A Data Miner workflow is a mechanism to define machine learning operations such as model build, test, and apply.

These operations are defined in nodes, which comprise the workflow. You can create multiple workflows in a single project.

- About Workflows: A workflow must contain one or more sources of data, such as a table or a model.
- Working with Workflows: A workflow allows you to create a series of nodes, and link them to each other to perform the required processing on your data. You cannot use Oracle Data Miner until you have created a workflow for your work.
- About Nodes: Nodes define the machine learning operations in a workflow.
- Working with Nodes: You can perform the following tasks with any nodes.
- About Parallel Processing: In Parallel Query or Parallel Processing, multiple processes work simultaneously to run a single SQL statement.
- Setting Parallel Processing for a Node or Workflow: By default, parallel processing is set to OFF for any node type.
- About Oracle Database In-Memory: The In-Memory Column store (IM column store) is an optional, static System Global Area (SGA) pool that stores copies of tables and partitions in a special columnar format in Oracle Database 12c Release 1 (12.1.0.2) and later.

4.1 About Workflows

A workflow must contain one or more sources of data, such as a table or a model.

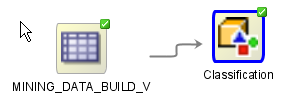

For example, to build a Naive Bayes model, you must first identify the input with a Data Source node. Then you create a Classification node to build and test the model.

By using a workflow, you can:

- Build, analyze, and test machine learning processes
- Identify and examine data sources
- Build and apply models
- Create predictive queries

- Workflow Sequence: A workflow is built and run in a certain sequence.
- Workflow Terminology: A workflow is a directed graph consisting of nodes that are connected to one another.
- Workflow Thumbnail: The thumbnail view of the workflow provides an overall view of the workflow. Thumbnails are most useful for large workflows.
- Components: The Components pane lists the components that you can add to workflows.
- Workflow Properties: The Workflow Properties tab enables you to add or change comments associated with the selected workflow.
- Properties: Properties enables you to view and change information about the entire workflow or a node in a workflow.


4.1.1 Workflow Sequence

A workflow is built and run in a certain sequence.

The sequence is:

- Create a blank workflow.
- Add nodes to the workflow.
- Connect the nodes in a workflow.
- Run the nodes.
- Examine the results.
- Repeat the steps as required.


4.1.2 Workflow Terminology

A workflow is a directed graph consisting of nodes that are connected to one another.

There are specific terms used to indicate the nodes in relation to the workflow. For example, consider a workflow composed of two nodes, N1 and N2. Assume that N1 is connected to N2:

- Parent node and child node: The node N1 is the parent node of N2, and the node N2 is a child of N1. A parent node provides information that the child node needs when it runs. For example, you must build a model before you can apply it to new data.
- Descendants and ancestors: The node N2 is called a descendant of N1 if there is a workflow connection starting from N1 that eventually connects to N2. N1 is then called an ancestor of N2. N1 is always closer to the root node than N2.
- Root nodes: The nodes that have no parent nodes are called root nodes. All workflows have at least one root node. A workflow can have multiple root nodes.

  Note:

  A parent node is closer to a root node than its child node.
- Siblings: If a node has several child nodes, then the child nodes are referred to as siblings.
- Upstream: Parent nodes are called upstream of child nodes.
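The terms above can be illustrated with a small directed graph. The following is only a sketch in Python, not part of Oracle Data Miner: the `Workflow` class, its method names, and the extra nodes N3 and N4 are hypothetical and exist purely to make the definitions concrete.

```python
class Workflow:
    """Hypothetical directed graph of nodes, built from (parent, child) links."""

    def __init__(self, links):
        self.children = {}
        self.parents = {}
        for parent, child in links:
            self.children.setdefault(parent, []).append(child)
            self.children.setdefault(child, [])
            self.parents.setdefault(child, []).append(parent)
            self.parents.setdefault(parent, [])

    def roots(self):
        # Root nodes are the nodes that have no parent nodes.
        return sorted(n for n, ps in self.parents.items() if not ps)

    def _reachable(self, node, neighbours):
        # Follow links repeatedly until no new node is found.
        seen, stack = set(), list(neighbours[node])
        while stack:
            current = stack.pop()
            if current not in seen:
                seen.add(current)
                stack.extend(neighbours[current])
        return seen

    def descendants(self, node):
        return self._reachable(node, self.children)

    def ancestors(self, node):
        return self._reachable(node, self.parents)

    def siblings(self, node):
        # Siblings are the other child nodes of this node's parents.
        found = set()
        for parent in self.parents[node]:
            found.update(self.children[parent])
        found.discard(node)
        return found


# N1 is connected to N2 and N3; N2 is connected to N4.
wf = Workflow([("N1", "N2"), ("N1", "N3"), ("N2", "N4")])
print(wf.roots())            # root nodes of the workflow
print(wf.descendants("N1"))  # every node downstream of N1
print(wf.ancestors("N4"))    # every node upstream of N4
print(wf.siblings("N2"))     # other children of N2's parent
```

In this sketch, N1 is the only root node, N2 and N3 are siblings, and N1 is upstream of every other node.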


4.1.3 Workflow Thumbnail

The thumbnail view of the workflow provides an overall view of the workflow. Thumbnails are most useful for large workflows.

To open the thumbnail viewer, go to View and click Thumbnail. Alternatively, press Ctrl+Shift+T to open the Thumbnail viewer.

In a Thumbnail view, you can:

- Navigate to a specific node in the workflow
- Change the zoom level of the workflow
- Change the node that is currently viewed in the workflow

The Thumbnail view includes a rectangle. Click inside the viewer to move the rectangle around, thereby changing the focus of the display in the workflow editor. You can also resize the rectangle for a finer level of control.

When you view a model that generates trees, the thumbnail view opens automatically to show the overall shape of the tree. The thumbnail view also shows your location in the overall model view.


4.1.4 Components

The Components pane lists the components that you can add to workflows.

To add a component to a workflow, drag and drop the component from the Components pane to the workflow.

The Components pane opens automatically when you load a workflow. If the Components pane is not visible, then go to View and click Components.

- My Components: Contains the following two tabs:
  - Favorites
  - Recently Used
- Workflow Editor: Contains the nodes, grouped into categories, that you can use to create a workflow.
- All Pages: Lists all the available components in one list.


4.1.4.1 Workflow Editor

The Workflow Editor contains nodes that are categorized into sections. You can create and connect the following nodes in a workflow:


4.1.5 Workflow Properties

The Workflow Properties tab enables you to add or change comments associated with the selected workflow.


4.1.6 Properties

Properties enables you to view and change information about the entire workflow or a node in a workflow.

To view the properties of a node, right-click the node and select Go to Properties.

If you select either the workflow or one of its nodes, then the respective properties are displayed in the Properties pane. For example, if you select a Data Source node, then the Data Source node properties are displayed in the Properties pane.

Note:

In the earlier releases of Oracle Data Miner, the Properties tab was known as the Property Inspector.

You can perform multiple tasks for a node and a workflow from:

- Properties context menu
- Properties pane


4.2 Working with Workflows

A workflow allows you to create a series of nodes, and link them to each other to perform the required processing on your data. You cannot use Oracle Data Miner until you have created a workflow for your work.

Ensure that you meet the workflow requirements. You can perform the following tasks with a workflow:

- Creating a Workflow: You must create a workflow to define machine learning operations such as model build, test, and apply.
- Deploying Workflows: Oracle Data Miner enables you to generate a script from a workflow that recreates all the objects generated by that workflow.
- Deleting a Workflow: You can delete a workflow from the workflow context menu.
- Loading a Workflow: When you open a workflow, it loads in the workflow pane.
- Managing a Workflow: After you create a workflow, the workflow is listed under Projects in the Data Miner tab.
- Oracle Enterprise Manager Jobs: Oracle Enterprise Manager (OEM) allows database administrators to define jobs through the OEM application.
- Renaming a Workflow: You can rename a workflow using the workflow context menu.
- Runtime Requirements: You must have the Data Miner repository installed on the system where the scripts are run.
- Running a Workflow: The View Event Log enables you to view the progress of a workflow that is running.
- Scheduling a Workflow: Using the Workflow Schedule, you can define a schedule to run a workflow at a definite time and date.
- Workflow Prerequisites: Before you perform any task with a workflow, the workflow prerequisites must be met.
- Workflow Script Requirements


4.2.1 Creating a Workflow

You must create a workflow to define machine learning operations such as model build, test, and apply.

To create a blank workflow:


4.2.1.1 Workflow Name Restrictions

A workflow name must meet the following conditions:

- The workflow name must be between 1 and 128 characters long.
- The workflow name must not contain a slash (/).
- The workflow name must be unique within the project.
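The three conditions can be expressed as a small check. This is a hedged sketch rather than Oracle Data Miner code: the function name `validate_workflow_name` and the `existing_names` argument are hypothetical, and uniqueness is assumed here to mean an exact, case-sensitive match against the names already in the project.

```python
def validate_workflow_name(name, existing_names):
    """Return a list of violated conditions; an empty list means the name is valid."""
    problems = []
    if not 1 <= len(name) <= 128:
        problems.append("name must be 1 to 128 characters long")
    if "/" in name:
        problems.append("name must not contain a slash (/)")
    # Assumption: uniqueness is an exact, case-sensitive comparison.
    if name in existing_names:
        problems.append("name must be unique within the project")
    return problems


existing = {"churn_model"}
print(validate_workflow_name("build/test", existing))
print(validate_workflow_name("churn_model", existing))
print(validate_workflow_name("new_workflow", existing))
```

Returning every violated condition, rather than stopping at the first one, lets a caller report all problems with a proposed name at once.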


4.2.2 Deploying Workflows

Oracle Data Miner enables you to generate a script from a workflow that recreates all the objects generated by that workflow.

In earlier releases of Data Miner, you could only generate a script for Transformation nodes.

A script that recreates all the objects generated by a workflow also enables you to replicate the behavior of the entire workflow. Such scripts provide a basis for application integration or for a lightweight deployment of the workflow that does not require Data Miner repository installation and workflows in the target and production system.

Oracle Data Miner provides the following two types of deployment:

- Deploy Workflows using Data Query Scripts

- Deploy Workflows using Object Generation Scripts

- Running Workflow Scripts


4.2.2.1 Deploy Workflows using Data Query Scripts

Any node that generates data has the Save SQL option in its context menu.

The Save SQL option generates a SQL script. The SQL can be generated as a SQL*Plus script or as a standard SQL script. The advantage of the SQL*Plus script format is the ability to override table and model references with parameters.

The Save SQL option enables you to select the kind of script to generate, and the location to save the script.


4.2.2.2 Deploy Workflows using Object Generation Scripts

The Object Generation Script generates scripts that create objects. The scripts that generate objects are:

- Scripts Generated
- Script Variable Definitions


4.2.2.3 Running Workflow Scripts

You can run workflow scripts using Oracle Enterprise Manager Jobs or Oracle Scheduler Jobs. You can run the generated scripts in the following ways:

- As Oracle Enterprise Manager Jobs, either as a SQL Script or as an operating system command.
- As Oracle Scheduler Jobs:
  - External Jobs that call the SQL Script
  - Environment: Using Oracle Enterprise Manager for runtime management or using PL/SQL
- Using SQL*Plus or SQL Worksheet.

Note:

To run a script, ensure that Oracle Data Miner repository is installed.


4.2.3 Deleting a Workflow

You can delete a workflow from the workflow context menu.

To delete a workflow:

- In the Data Miner tab, expand Projects and select the workflow that you want to delete.

- Right-click and click Delete.


4.2.4 Loading a Workflow

When you open a workflow, it loads in the workflow pane.

After creating a workflow, you can perform the following tasks:

- Load a workflow: In the Data Miner tab, expand the project and double-click the workflow name. The workflow opens in a new tab.
- Close a workflow: Close the tab in which the workflow is loaded and displayed.
- Save a workflow: Go to File and click Save. If a workflow has any changes, then they are saved when you exit.


4.2.5 Managing a Workflow

After you create a workflow, the workflow is listed under Projects in the Data Miner tab.

To perform any one of the following tasks, right-click the workflow under Projects and select an option from the context menu:

- New Workflow: To create a new workflow in the current project.
- Delete: To delete the workflow from the project.
- Rename: To rename the workflow.
- Export: To export a workflow.
- Import: To import a workflow.

- Exporting a Workflow Using the GUI: You can export a workflow, save it as an XML file, and then import it into another project.
- Import Requirements of a Workflow: To import a workflow, you must meet the requirements related to workflow compatibility, permissions, and user account rights.
- Data Table Names: The account from which the workflow is exported is encoded in the exported workflow.
- Workflow Compatibility: Before you import or export a workflow, ensure that Oracle Data Miner versions are compatible.
- Building and Modifying Workflows: You can build and modify workflows using the Workflow Editor.
- Missing Tables or Views: If Oracle Data Miner detects that some tables or views are missing from the imported schema, then it gives you the option to select another table.
- Managing Workflows using Workflow Controls: Several controls are available as icons in the top border of a workflow, just below the name of the workflow.
- Managing Workflows and Nodes in the Properties Pane: In the Properties pane, you can view and change information about the entire workflow or a node in a workflow.
- Performing Tasks from Workflow Context Menu: The context menu options depend on the type of the node. It provides the shortcut to perform various tasks and view information related to the node.


4.2.5.1 Exporting a Workflow Using the GUI

You can export a workflow, save it as an XML file, and then import it into another project.

To export a workflow:


4.2.5.2 Import Requirements of a Workflow

To import a workflow, you must meet the requirements related to workflow compatibility, permissions, and user account rights.

The import requirements of a workflow are:

- All the tables and views used as data sources in the exported workflow must be in the new account.
- The tables or views must have the same name in the new account as they did in the old account.
- It may be necessary to redefine the Data Source node.
- If the workflow includes Model nodes, then the account where the workflow is imported must contain all models that are used. The Model node may have to be redefined in the same way that Data Source nodes are.
- You must have permission to run the workflow.
- The workflow must satisfy the compatibility requirements.


4.2.5.3 Data Table Names

The account from which the workflow is exported is encoded in the exported workflow.

Assume that a workflow is exported from the account DMUSER and contains a Data Source node for the data MINING_DATA_BUILD_V.

If you import the workflow into a different account, that is, an account that is not DMUSER, and try to run the workflow, then the Data Source node fails because the workflow looks for DMUSER.MINING_DATA_BUILD_V.

To resolve this issue:

- Right-click the Data Source node MINING_DATA_BUILD_V and select Define Data Wizard. A message appears indicating that DMUSER.MINING_DATA_BUILD_V does not exist in the available tables or views.
- Click OK and select MINING_DATA_BUILD_V in the current account. This resolves the issue.
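The reason the node fails, and what the fix amounts to, can be sketched outside the tool. The snippet below is an illustration only and does not reflect how Oracle Data Miner stores or resolves names internally: the function `rebind_to_current_account` and the account name `DMUSER2` are hypothetical.

```python
from typing import Optional, Set


def rebind_to_current_account(qualified_name: str,
                              current_account: str,
                              available: Set[str]) -> Optional[str]:
    """Re-qualify a table or view name with the current account.

    Returns the new qualified name if that object exists in the current
    account, or None if it has to be selected manually.
    """
    # Keep only the object name, dropping the exporting account prefix.
    object_name = qualified_name.rpartition(".")[2]
    candidate = f"{current_account}.{object_name}"
    return candidate if candidate in available else None


# Hypothetical target account that already contains the required view.
available_objects = {"DMUSER2.MINING_DATA_BUILD_V"}
print(rebind_to_current_account("DMUSER.MINING_DATA_BUILD_V", "DMUSER2", available_objects))
```

The exported name still carries the DMUSER prefix, so a lookup succeeds only after the name is qualified with the account that the workflow now runs in.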


4.2.5.4 Workflow Compatibility

Before you import or export a workflow, ensure that Oracle Data Miner versions are compatible.

The compatibility requirements for importing and exporting workflows are:

- Workflows exported using a version of Oracle Data Miner earlier than Oracle Database 11g Release 2 (11.2.0.1.2) cannot always be imported into a later version of Oracle Data Miner.
- Workflows exported from an earlier version of Oracle Data Miner may contain invalid XML. If a workflow XML file contains invalid XML, then the import is terminated.

To check the version of Oracle Data Miner:

- Go to Help and click Version.
- Click the Extensions tab.
- Select Data Miner.


4.2.5.5 Building and Modifying Workflows

You can build and modify workflows using the Workflow Editor. The Workflow Editor is the tool to modify workflows.

The Workflow Editor is supported by:


4.2.5.6 Missing Tables or Views

If Oracle Data Miner detects that some tables or views are missing from the imported schema, then it gives you the option to select another table.

A Table Selection Failure message appears, indicating that the table is missing. You can choose to select another table.

To select another table, click Yes. The Define Data Source Wizard opens. Use the wizard to select a table and attributes.


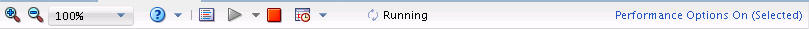

4.2.5.7 Managing Workflows using Workflow Controls

Several controls are available as icons in the top border of a workflow, just below the name of the workflow.

You can perform the following tasks:

- Zoom in and zoom out workflow nodes: Click the zoom in and zoom out icons, respectively.
- Control node size: Click the percent drop-down list and select the size. The default size is 100%.
- View event log: Click the event log icon.
- Run selected nodes: Click the run icon. When you select the node to be run, the triangle turns green.
- Schedule workflow: Click the schedule icon to create or edit a workflow schedule.
- Refresh Workflow Data Definition: Click the refresh icon to ensure that the workflow is updated with new columns that are either added or removed.

  Note:

  The Refresh Workflow Data Definition option is applicable only to Data Source node and SQL Query node.
- Set performance settings: Click the performance settings icon to set the In-Memory and Parallel settings for the nodes in the workflow.


4.2.5.8 Managing Workflows and Nodes in the Properties Pane

In the Properties pane, you can view and change information about the entire workflow or a node in a workflow.

To view the properties of a node:

- Right-click the node and select Go to Properties. The corresponding Properties pane opens. For example, if you select a Data Source node, then the details of the Data Source node are displayed in the Properties pane.
- Use the Search field to find items in Properties.
- Click the edit icon to open the editor for the item that you are viewing.
- Use the options in the context menu to perform additional tasks.


4.2.5.9 Performing Tasks from Workflow Context Menu

The context menu options depend on the type of the node. It provides the shortcut to perform various tasks and view information related to the node.

To view a workflow, double-click the name of the workflow in the Data Miner tab. The workflow opens in a tab located between the Data Miner tab and the Components pane. If you open several workflows, then each workflow opens in a different tab. Only one workflow is active at a time.

The following options are available in the context menu:

- Close: Closes the selected tab.
- Close All: Closes all workflow tabs.
- Close Other: Closes all tabs except the current one.
- Maximize: Maximizes the workflow. The Data Miner tab, Components pane, and other items are not visible. To return to the previous size, click the selection again.
- Minimize: Minimizes the Properties tab to a menu. To return to the previous size, right-click the Properties tab, and click Dock.
- Split Vertically: Splits the active editor or viewer into two documents. The two documents are split vertically. Click the selection again to undo the split.
- Split Horizontally: Splits the active editor or viewer into two documents. The two documents are split horizontally. Click the selection again to undo the split.
- New Document Tab Group: Adds the currently active editor or viewer into its own tab group. Use Collapse Document Tab Groups to undo this operation.
- Collapse Document Tab Groups: Collapses all the editors or viewers into one tab group. This option is displayed only after you use New Document Tab Group.
- Float: Converts the Properties tab into a movable pane.
- Dock: Sets the location for the floating window. Alternatively, you can press Alt+Shift+D.
- Clone: Creates a new instance of Properties.
- Freeze Content: Freezes the content. To unfreeze Properties, click this selection again.


4.2.6 Oracle Enterprise Manager Jobs

Oracle Enterprise Manager (OEM) allows database administrators to define jobs through the OEM application.

The job is run through OEM instead of Oracle Scheduler. The job can be run manually from OEM. The running of the job can be monitored. The result is either a success or a reported failure.

- The job definitions can directly open the generated script files.
- The job definition should define the master script invocation as a script file using a full file path.
- The job can be run on a schedule or on demand.
- All scripts that run within the master script must have fully qualified path names.


4.2.7 Renaming a Workflow

You can rename a workflow using the workflow context menu.

To change the name of a workflow or project:


4.2.8 Runtime Requirements

You must have the Data Miner repository installed on the system where the scripts are run.

The generated scripts require access to Data Miner repository objects. The script checks the repository version and ensures that the repository is the same version or greater than the version of the source system.
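The version rule can be sketched as a comparison of dotted version numbers. This is an illustration only: the generated scripts perform their own check, and the function names and the version strings used below are hypothetical examples, assuming versions are written as dot-separated integers.

```python
def parse_version(version: str) -> tuple:
    """Turn a dotted version string such as '12.2.0.1' into a tuple of integers."""
    return tuple(int(part) for part in version.split("."))


def repository_is_compatible(repository_version: str, source_version: str) -> bool:
    """True if the target repository is the same version as, or later than, the source."""
    return parse_version(repository_version) >= parse_version(source_version)


print(repository_is_compatible("12.2.0.1", "12.1.0.2"))  # later repository
print(repository_is_compatible("11.2.0.4", "12.1.0.2"))  # earlier repository
```

Comparing tuples of integers avoids the trap of comparing version strings as text, where "9" would sort after "12".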


4.2.9 Running a Workflow

The View Event Log enables you to view the progress of a workflow that is running.

You can also view the time taken for workflow creation.

Model builds can be very resource-intensive. The MAX_NUM_THREADS parameter in the Oracle Data Miner server controls the number of parallel builds. MAX_NUM_THREADS specifies the maximum number of model builds across all workflows running in the server.

The default value is 10, so by default up to 10 model builds can run concurrently across all workflows. You can specify the value of the MAX_NUM_THREADS parameter in the repository table ODMRSYS.ODMR$REPOSITORY_PROPERTIES.

If you increase the value of MAX_NUM_THREADS, then do it gradually. Workflows can appear to be running even though the network connection is lost.

To control the parallel model build behavior, the following parameters are used:

- THREAD_WAIT_TIME: The default is 5. When MAX_NUM_THREADS is reached, further build processes are put on a queue until the parallel model build count falls below MAX_NUM_THREADS. This setting (in seconds) determines how often to check the parallel model build count.
- MAX_THREAD_WAIT: The default is NULL. The timeout (in seconds) for a build process that has been put on the queue. If NULL, then no timeout occurs.
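How the three parameters interact can be sketched with a small simulation. This is not the Oracle Data Miner server logic: the function `seconds_until_build_starts` is hypothetical, the list of observed build counts stands in for the server state at each check, and the exact moment at which a timeout is raised is an assumption.

```python
def seconds_until_build_starts(observed_counts,
                               max_num_threads=10,
                               thread_wait_time=5,
                               max_thread_wait=None):
    """Simulate a queued build that polls for a free slot.

    observed_counts holds the number of running builds seen at each check.
    Returns the seconds waited before the build starts, or None if the
    build times out (or the observations run out) before a slot is free.
    """
    waited = 0
    for running_builds in observed_counts:
        if running_builds < max_num_threads:
            return waited  # a slot is free, the build can start
        # Assumption: the build gives up if the next wait would exceed the timeout.
        if max_thread_wait is not None and waited + thread_wait_time > max_thread_wait:
            return None
        waited += thread_wait_time  # stay on the queue until the next check
    return None


# Two checks find all 10 slots busy; the third check finds a free slot.
print(seconds_until_build_starts([10, 10, 9]))
# With a 7-second timeout, the same build gives up before a slot is free.
print(seconds_until_build_starts([10, 10, 9], max_thread_wait=7))
```

A shorter THREAD_WAIT_TIME means queued builds notice a free slot sooner, at the cost of more frequent checks.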

- Network Connection Interruption: You may encounter interruption in network connection while running a workflow.
- Locking and Unlocking Workflows: A workflow is locked when it is opened, or when one or all of its nodes are running.


4.2.9.1 Network Connection Interruption

You may encounter interruption in network connection while running a workflow.

If a network connection to the Data Miner server is interrupted while running a workflow, then the Workflow Editor may indicate that the workflow is still running indefinitely. On the other hand, the Workflow Jobs window may indicate that the connection is down.

Oracle Data Miner issues a message indicating that the connection was lost. If the connection is not recovered in a reasonable period, then close and open the Workflow Editor again.

Note:

Use the Workflow Jobs window to monitor connections.


4.2.9.2 Locking and Unlocking Workflows

A workflow is locked when it is opened, or one or all its nodes are running.

A workflow is locked under the following conditions:

- When a node is running, the workflow is locked, and none of its nodes can be edited. Existing results may be viewed while the workflow is running. You can also go to a different workflow and edit or run it.
- When a user opens a workflow, the workflow is locked so that other users cannot modify it.
- When a workflow is running, an animation in the tool bar (circular arrow loop) shows that the workflow is running.
- When you open a locked workflow, Locked is displayed in the tool bar, along with a running indicator if the workflow is running.
- If no one else has the workflow locked, and you are given the lock, then the lock icon is removed from the tool bar.
- Unlocking a locked workflow: If a workflow seems to have completed but is still locked, then click Locked to unlock the workflow.
- Refreshing Workflow: Once a locked workflow has stopped running, you can refresh the workflow by clicking the refresh icon. You can also try to obtain the lock yourself by clicking on the lock.


4.2.10 Scheduling a Workflow

Using the Workflow Schedule, you can define a schedule to run a workflow at a definite time and date.

You can also edit an existing Workflow Schedule and cancel any scheduled workflows. To create a workflow schedule:

- Create Schedule: Use the Create Schedule option to define a schedule to run a workflow at a definite time and date.
- Repeat

- Repeat Hourly

- Repeat Daily

- Repeat Weekly

- Repeat Monthly

- Repeat Yearly

- Schedule

- Save a Schedule

- Advanced Settings


4.2.10.1 Create Schedule

Use the Create Schedule option to define a schedule to run a workflow at a definite time and date.

To save the workflow schedule settings, click the save icon. You can provide a name for the schedule in the Save a Schedule dialog box.


4.2.10.2 Repeat


4.2.10.3 Repeat Hourly

If you select Hourly in the Frequency field, then select the number of hours after which the workflow should run.

- The Frequency field displays Hourly.
- In the Every field, select a number by clicking the arrow. This number determines after how many hours the workflow should run. For example, if you select 5, then your workflow runs every 5 hours.
- Click OK. This takes you to the Create Schedule dialog box.


4.2.10.4 Repeat Daily

If you select Daily in the Frequency field, then select the number of days after which the workflow should run.

- The Frequency field displays Daily.
- In the Every field, select a number by clicking the arrow. This number determines after how many days the workflow should run. For example, if you select 5, then your workflow runs every 5 days.
- Click OK. This takes you to the Create Schedule dialog box.
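The effect of the Every value for the Hourly and Daily frequencies can be sketched as a fixed interval between runs. This is only an illustration of the arithmetic, not the scheduler that Oracle Data Miner uses: the function `next_run` and the sample start time are hypothetical.

```python
from datetime import datetime, timedelta


def next_run(last_run: datetime, frequency: str, every: int) -> datetime:
    """Return the next run time for an Hourly or Daily repeat setting."""
    if every < 1:
        raise ValueError("every must be at least 1")
    if frequency == "Hourly":
        return last_run + timedelta(hours=every)
    if frequency == "Daily":
        return last_run + timedelta(days=every)
    raise ValueError(f"unsupported frequency in this sketch: {frequency}")


start = datetime(2024, 1, 1, 8, 0)
print(next_run(start, "Hourly", 5))  # five hours after the previous run
print(next_run(start, "Daily", 5))   # five days after the previous run
```

Weekly, Monthly, and Yearly repeats also depend on the selected days, dates, or months, so they are left out of this sketch.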


4.2.10.5 Repeat Weekly

If you select Weekly in the Frequency field, then select the number of weeks after which, and the days of the week on which, the workflow should run.

- The Frequency field displays Weekly.
- In the Every field, select a number by clicking the arrow. This number determines after how many weeks the workflow should run. For example, if you select 2, then your workflow runs every 2 weeks.
- Select the days of the week on which you want to run the workflow.
- Click OK. This takes you to the Create Schedule dialog box.


4.2.10.6 Repeat Monthly

If you select Monthly in the Frequency field, then select after how many months, and on which dates of the month, the workflow should run.


4.2.10.7 Repeat Yearly

If you select Yearly in the Frequency field, then select the number of years after which the workflow should run. Select the months in the appropriate field.

- The Frequency field displays Yearly.
- In the Every field, select a number by clicking the arrow. This number determines after how many years the workflow should run.
- Select the months of the year in which the workflow should run.
- Click OK. This takes you to the Create Schedule dialog box.


4.2.10.8 Schedule


4.2.10.9 Save a Schedule

- In the Name field, provide a name for the workflow schedule.

- Click OK.


4.2.10.10 Advanced Settings

In the Advanced Settings dialog box, you can set up email notifications, settings related to workflow jobs and nodes. To set up email notifications, and other settings:

- In the Notification tab:
  - Select Enable Email Notification to receive notifications.
  - In the Recipients field, enter the email addresses to receive notifications.
  - In the Subject field, enter an appropriate subject.
  - In the Comments field, enter comments, if any.
  - Select one or more events for which you want to receive the notifications:
    - Started: To receive notifications for all jobs that started.
    - Succeeded: To receive notifications for all jobs that succeeded.
    - Failed: To receive notifications for all jobs that failed.
    - Stopped: To receive notifications for all jobs that stopped.
  - Click OK.
- In the Settings tab:
  - In the Time Zone field, select a time zone of your preference.
  - In the Job Priority field, set the priority of the workflow job by placing the pointer between High and Low.
  - Select Max Failure and set the maximum number of failed workflow executions.
  - Select Max Run Duration and set the days, hours, and minutes for the maximum run time of the workflow job.
  - Select Schedule Limit and set the days, hours, and minutes.
  - Click OK.
- In the Nodes tab, all workflow nodes that are scheduled to run are displayed. This is a read-only display.


4.2.11 Workflow Prerequisites

Before you perform any task with a workflow, the workflow prerequisites must be met.

The workflow prerequisites are:

- Create and establish a connection to the database.
- Create a project under which the workflow is created.


4.2.12 Workflow Script Requirements

Workflow script requirements include the following:

- Script File Character Set Requirements: Ensure that all script files are generated using the UTF8 character set.
- Script Variable Definitions: Scripts have variable definitions that provide object names for the public objects created by the scripts.
- Scripts Generated: Scripts Generated is a kind of deployment where several general scripts such as master script, cleanup script and so on are generated.
- Running Scripts using SQL*Plus or SQL Worksheet: If you run generated scripts using either SQL*Plus or SQL Worksheet, and require input from users, then you must run a command before the generated SQL.


4.2.12.1 Script File Character Set Requirements

Ensure that all script files are generated using the UTF8 character set.

Scripts can contain characters that are not handled correctly unless the script file is generated using the UTF8 character set.


4.2.12.2 Script Variable Definitions

Scripts have variable definitions that provide object names for the public objects created by the scripts.

The Master Script is responsible for calling all underlying scripts in order. Therefore, the variable definitions must be defined in the Master Script.

The variable Object Types enables you to change the names of the objects that are input to the scripts, such as tables or views, and models. By default, these names are the original table or view names and model names.

Place all generated scripts in the same directory.


4.2.12.3 Scripts Generated

Scripts Generated is a kind of deployment where several general scripts such as master script, cleanup script and so on are generated.

The generated scripts are:

- Master Script: Starts all the required scripts in the appropriate order. The script performs the following:

  - Validates if the version of the script is compatible with the version of the Data Miner repository that is installed.

  - Creates a workflow master table that contains entries for all the underlying objects created by the workflow script.

  - Contains generated documentation covering key usage information necessary to understand operation of scripts.

- Cleanup Script: Drops all objects created by the workflow script. The Cleanup Script drops the following objects:

  - Hidden objects, such as the table generated for Explore Data.

  - Public objects, such as Model Names created by Build Nodes.

  - Tables created by a Create Table Node.

- Workflow Diagram Image: Creates an image (.png file) of the workflow at the time of script generation. The entire workflow is displayed.

The other scripts that are generated depend on the nodes in the chain. Table 4-1 lists the nodes and their corresponding script functionality.

Table 4-1 Nodes and Script Functionality

| Node | Script Functionality |
|---|---|
| | Creates a view reflecting the output of the node. |
| Filter Column node | Creates a view reflecting the output of the Filter Column node such as other Transform type nodes. If the |
| Build Text node | For each text transformation, the following objects are created: Creates a view reflecting the output of the Build Text node. This is essentially the same output as an Apply Text node |
| Classification Build node | A model is created for each model build specification. A master test result table is generated to store the list of test result tables generated per model. GLM Model Row Diagnostics Table is created if row diagnostics is turned on. Each Model Test has one table generated for each of the following test results: Performance, Performance Matrix, ROC (for binary classification only). Each Model Test has one table for each of the following tests per target value (up to 100 maximum target values): Lift and Profit. |
| Regression Build node | A model is created for each model build specification. GLM Model Row Diagnostics Table is created if row diagnostics is turned on. A master test result table is generated to store the list of test result tables generated per model. Each Model Test has one table generated for each of the following test results: Performance and Residual. |
| | A model is created for each model build specification. |
| Test Node (Classification) | A master test result table is generated to store the list of test result tables generated per model. GLM Model Row Diagnostics Table is created if row diagnostics is turned on. Each Model Test has one table generated for each of these test results: Performance, Performance Matrix, ROC (for binary classification only). Each Model Test has one table for each of Lift and Profit per target value up to 100 maximum target values. |
| Test Node (Regression) | GLM Model Row Diagnostics Table is created if row diagnostics is turned on. A master test result table is generated to store the list of test result tables generated per model. Each Model Test has one table generated for each of Performance and Residual. |
| | No scripts are generated. These nodes are just reference nodes to metadata. |


4.2.12.4 Running Scripts using SQL*Plus or SQL Worksheet

If you run generated scripts using either SQL*Plus or SQL Worksheet, and require input from users, then you must run a command before the generated SQL.

Run the following command before the generated SQL:

set define off

You can run this command separately before running the generated SQL, or add it as a new line at the top of the generated SQL and run them together.
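As an illustration of the second approach, the following minimal Python sketch places the command on the first line of the script text. The helper name `with_define_off` is hypothetical. It only edits text and does not connect to a database or invoke SQL*Plus.

```python
def with_define_off(generated_sql):
    """Return the script text with `set define off` as its first line."""
    command = "set define off"
    lines = generated_sql.splitlines()
    # Avoid adding the command twice if the script already starts with it.
    if lines and lines[0].strip().lower() == command:
        return generated_sql
    return command + "\n" + generated_sql
```

The check is case-insensitive, so a script that already starts with `SET DEFINE OFF` is returned unchanged.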


4.3 About Nodes

Nodes define the machine learning operations in a workflow.

A workflow consists of one or more nodes that are connected by a link. The node categories, listed in Table 4-2, are available in the Components pane.

- Node Name and Node Comments

Every node must have a name and may have a comment. Name assignment is fully validated to assure uniqueness. Oracle Data Miner generates a default node name.

- Node Types

Oracle Data Miner provides different types of nodes for specific purposes such as build, model, linking, machine learning operations, data transformation and so on.

- Node States

A node is always associated with a state which indicates its status.


4.3.1 Node Name and Node Comments

Every node must have a name and may have a comment. Name assignment is fully validated to assure uniqueness. Oracle Data Miner generates a default node name.

When a new node of a particular type is created, its default name is based on the node type, such as Class Build for a Classification node. If a node with that name already exists, then a number is appended to make the name unique. So if Class Build exists, then the next node is named Class Build 1. If Class Build 1 also exists, then 2 is appended, so that the third Classification node is named Class Build 2, and so on. Each node type is handled independently. For example, Filter Columns nodes have their own sequence, different from the sequence of Classification nodes.
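The naming rule can be sketched in a few lines of Python. This is only an illustration of the behavior described above, not Oracle Data Miner code. The function name `default_node_name` is hypothetical, and the choice to reuse the first free number when earlier nodes have been deleted is an assumption that the text does not confirm.

```python
def default_node_name(base_name, existing_names):
    """Return the base name, or the base name plus the first free counter."""
    taken = set(existing_names)
    if base_name not in taken:
        return base_name
    counter = 1
    # Assumption: the first unused number is chosen.
    while f"{base_name} {counter}" in taken:
        counter += 1
    return f"{base_name} {counter}"
```

Because the counter is derived from the base name, each node type gets its own sequence: adding a Filter Columns node does not advance the numbering of Class Build nodes.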

You can change the default name to any name that satisfies the following requirements:

-

Node names must be unique within the workflow.

-

Node names must not contain any / (slash) characters.

-

Node names must be at least one character long. Maximum length of node name is 128 characters.

To change a node name, either change it in the Details tab of Properties or select the name in the workflow and type the new name.

Comments for the node are optional. If you provide a comment, it must not exceed 4000 characters.
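The name and comment rules above can be expressed as a small check. The following Python sketch is hypothetical: the function name `node_name_problems` and the message texts are illustrative, and it assumes that uniqueness is an exact, case-sensitive match and that lengths are counted in characters.

```python
MAX_NAME_LENGTH = 128
MAX_COMMENT_LENGTH = 4000


def node_name_problems(name, other_names, comment=""):
    """Return a list of rule violations; an empty list means no problems."""
    problems = []
    # Assumption: uniqueness is an exact, case-sensitive comparison.
    if name in other_names:
        problems.append("name is not unique within the workflow")
    if "/" in name:
        problems.append("name contains a slash")
    if not 1 <= len(name) <= MAX_NAME_LENGTH:
        problems.append("name must be 1 to 128 characters long")
    if len(comment) > MAX_COMMENT_LENGTH:
        problems.append("comment is longer than 4000 characters")
    return problems
```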


4.3.2 Node Types

Oracle Data Miner provides different types of nodes for specific purposes such as build, model, linking, machine learning operations, data transformation and so on.

Table 4-2 lists the different categories of nodes:

Table 4-2 Types of Nodes

| Type | Description |
|---|---|
| | They specify models to build or models to add to a workflow. |
| | They evaluate and apply models. |
| | They specify data for mining operation, data transformation or to save data to a table. |
| | They perform one or more transformation on the table or tables identified in a Data node. |
| | They create predictive results without the need to build models. The predictive queries automatically generate refined predictions based on data partitions. |
| | They prepare data sources that contain one or more text columns so that the data can be used in Build and Apply models. |
| | They provide a way to link or connect nodes. |


4.3.3 Node States

A node is always associated with a state which indicates its status.

Table 4-3 lists the states of a node.

Table 4-3 Node States

| Node States | Description | Graphical Indicator |
|---|---|---|
| | Indicates that the node has not been completely defined and is not ready for execution. Most nodes must be connected to be valid. That is, they must have the input defined. A Data node does not need to be connected to be valid. | |
| | Indicates that the node attempted to run but encountered an error. For nodes that perform several tasks such as build several models, any single failure sets the status of the node to You must correct all problems to clear the Any change clears the | |
| | Indicates that the node is properly defined and is ready for execution. Nodes in | No graphical indicator |
| | Indicates that the node execution is successfully completed. | |
| | Indicates that the node execution is complete but not with the expected result. | |


4.4 Working with Nodes

You can perform the following tasks with any node.

- Add Nodes or Create Nodes

You create or add nodes to the workflow.

- Copy Nodes

You can copy one or more nodes, and paste them into the same workflow or into a different workflow.

- Edit Nodes

You can edit nodes by using any one of the following ways:

- Link Nodes

Each link connects a source node to a target node.

- Refresh Nodes

Nodes such as Data Source node, Update Table node, and Model node rely on database resources for their definition. It may be necessary to refresh a node definition if the database resources change.

- Run Nodes

You perform the tasks specified in the workflow by running one or more nodes.

- Performing Tasks from the Node Context Menu

The context menu options depend on the type of the node. It provides the shortcut to perform various tasks and view information related to the node.


4.4.1 Add Nodes or Create Nodes

You create or add nodes to the workflow.

For the node to be ready to run, you may have to specify information such as a table or view for a Data Source node or the target for a Classification node. To specify information, you edit nodes. You must connect nodes for the workflow to run. For example, you specify input for a Build node by connecting a Data node to a Build node.

To add nodes to a workflow:


4.4.2 Copy Nodes

You can copy one or more nodes, and paste them into the same workflow or into a different workflow.

Copy and paste does not carry over any mining models or results that belong to the original nodes. Because model names must be unique, the pasted models are assigned unique names that use the original names as a starting point.

For example, when a copied Build node is pasted into a workflow, the paste operation creates a new Build node with settings identical to those of the original node. However, models or test results do not exist until the node runs.


4.4.3 Edit Nodes

You can edit nodes by using any one of the following ways:

- Editing Nodes through Edit Dialog Box

The Edit dialog box for each node gives you the option to provide and edit settings related to the node.

- Editing Nodes through Properties

The Properties pane of a node is the pane that opens when a node in a workflow is selected. You can dock this pane.


4.4.3.1 Editing Nodes through Edit Dialog Box

The Edit dialog box for each node gives you the option to provide and edit settings related to the node.

To display the Edit dialog box of any node:

- Double-click the node or right-click the node and select Edit.

- The Edit dialog box opens. Make the edits to the node as applicable, and click OK.

For some nodes, such as the Data Source node, the Edit Data Source Node dialog box opens automatically either when the node is dropped into the workflow or when an input node is connected to a node.


4.4.3.2 Editing Nodes through Properties

The Properties pane of a node is the pane that opens when a node in a workflow is selected. You can dock this pane.

To edit a node through Properties pane:


4.4.4 Link Nodes

Each link connects a source node to a target node.

A workflow is a directed graph. That is, it consists of nodes that are connected in order. To create a workflow, you connect or link nodes. When you connect two nodes, you indicate a relationship between the nodes. For example, to specify the data for a model build, link a Data Source node to a Model Build node.
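To make the graph idea concrete, the following minimal Python sketch stores each link as a (source, target) pair. The `Workflow` class and its method names are hypothetical and only illustrate the concept. They do not reflect how Oracle Data Miner stores workflows.

```python
class Workflow:
    """A tiny directed graph: each link goes from a source node to a target node."""

    def __init__(self):
        self.links = set()  # set of (source, target) pairs

    def link(self, source, target):
        """Connect a source node to a target node."""
        self.links.add((source, target))

    def delete_link(self, source, target):
        """Remove a link if it exists."""
        self.links.discard((source, target))

    def parents(self, node):
        """Nodes that feed directly into the given node."""
        return sorted(s for s, t in self.links if t == node)

    def children(self, node):
        """Nodes that the given node feeds directly."""
        return sorted(t for s, t in self.links if s == node)
```

For example, after `wf.link("Data Source", "Class Build")`, the call `wf.parents("Class Build")` returns the Data Source node, which is the input for the model build.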

You can link nodes, delete links, and cancel links using the following options:

- Linking Nodes in Components Pane

You can connect two or more nodes by using the Linking nodes option.

- Node Connection Dialog Box

In the Node Connection dialog box, you can link two or more nodes.

- Change Node Position

You can change the position of a node in a workflow.

- Connect Option in Diagram Menu

Use the Connect option in the diagram menu to link two or more nodes.

- Deleting a Link

You can delete an existing link, by selecting the link and pressing the DELETE key.

- Cancelling a Link

You can cancel a link while you are linking it, by pressing the ESC key.


4.4.4.1 Linking Nodes in Components Pane

You can connect two or more nodes by using the Linking nodes option.

To connect two nodes using the Linking Nodes option:


4.4.4.2 Node Connection Dialog Box

In the Node Connection dialog box, you can link two or more nodes.

To link two nodes:


4.4.4.3 Change Node Position

You can change the position of a node in a workflow.

You can change the position of workflow nodes in these ways:

-

Drag: To drag a node, click the node. Without releasing the mouse button, drag the node to the desired location. Links to the node are automatically repositioned.

-

Adjust with arrow keys: To adjust the position by small increments, select the node. Then press and hold the Shift and Ctrl keys, and use the arrow keys to move the node.


4.4.4.4 Connect Option in Diagram Menu

Use the Connect option in the diagram menu to link two or more nodes.

To use the Connect option:


4.4.4.5 Deleting a Link

You can delete an existing link, by selecting the link and pressing the DELETE key.

Delete a link when the two nodes that it connects no longer need to be connected in the workflow.


4.4.4.6 Cancelling a Link

You can cancel a link while you are linking it, by pressing the ESC key.

To cancel a link while you are linking it, press the ESC key or select another item in the Components pane.


4.4.5 Refresh Nodes

Nodes such as Data Source node, Update Table node, and Model node rely on database resources for their definition. It may be necessary to refresh a node definition if the database resources change.

To refresh a node:

Other possible validation error scenarios include:

- Source table not present: Error message states that the source table or attribute is missing. You have the option to:

  - Select another table or replace a missing attribute.

  - Leave the node in its prior state by clicking Cancel.

- Model is invalid: Click the Model refresh button on the Properties model tool bar to make the node valid. For Model nodes, the node becomes Invalid if a model is found to be invalid. The Model node cannot be run until the node is made Valid.

- Model is missing: If the Model node is run and the server determines that the model is missing, then the node is set to Error. You can rerun the model after the missing model is replaced.


4.4.6 Run Nodes

You perform the tasks specified in the workflow by running one or more nodes.

If a node depends on outputs of one or more parent nodes, then the parent node runs automatically only if the outputs required by the running node are missing. You can also run one or more nodes by selecting the nodes and then clicking in the toolbar of the workflow.

Note:

Nodes cannot always be run. If any ancestor node is in the Invalid state and its outputs are missing, then the child nodes that depend on it cannot run.

Each node in a workflow has a state that indicates its status.

You can run a node by clicking the following options in the context menu:

-

Run

-

Force Run


4.4.7 Performing Tasks from the Node Context Menu

The context menu options depend on the type of the node. It provides the shortcut to perform various tasks and view information related to the node.

Right-click a node to display the context menu for the node. The context menu includes the following:

- Connect

Use the Connect option to link nodes in a workflow.

- Run

Use the Run option to execute the tasks specified in the nodes that comprise the workflow.

- Force Run

Use the Force Run option to rerun one or more nodes that are complete.

- Create Schedule

Use the Create Schedule option to define a schedule to run a workflow at a definite time and date.

- Edit

Use the Edit option to edit the default settings of a node.

- View Data

Use the View Data option to view the data contained in a Data node.

- View Models

Use the View Models option to view the details of the models that are built after running the workflow.

- Generate Apply Chain

Use the Generate Apply Chain option to create a new node that contains the specification of a node that performs a transformation.

- Refresh Input Data Definition

Use the Refresh Input Data Definition option to update the workflow when columns are added to or removed from the input source.

- Show Event Log

Use the Show Event Log option to view information about events in the current connection, errors, warnings, and information messages.

- Deploy

Use the Deploy option to deploy a node or workflow by creating SQL scripts that perform the tasks specified in the workflow.

- Show Graph

The Show Graph option opens the Graph Node Editor.

- Cut

Use the Cut option to remove the selected object, which could be a node or connection.

- Copy

Use the Copy option to copy one or more nodes and paste them into the same workflow or a different workflow.

- Paste

Use the Paste option to paste the copied object in the workflow.

- Extended Paste

Use the Extended Paste option to preserve node and model names while pasting them.

- Select All

Use the Select All option to select all the nodes in a workflow.

- Performance Settings

Use the Performance Settings option to edit Parallel settings and In-Memory settings of the nodes.

- Toolbar Actions

Use the Toolbar Actions option to select actions in the toolbar from the context menu.

- Show Runtime Errors

Use the Show Runtime Errors option to view errors related to node failure at runtime. This option is displayed only when the node fails at runtime.

- Show Validation Errors

Use the Show Validation Errors option to view validation errors, if any.

- Save SQL

Use the Save SQL option to generate SQL script for the selected node.

- Validate Parents

Use the Validate Parents option to validate all parent nodes of the current node.

- Compare Test Results

Use the Compare Test Results option to view and compare test results of models that are built successfully.

- View Test Results

Use the View Test Results option to view the test results of the selected model. This option is applicable only for Classification and Regression models.

- Go to Properties

Use the Go to Properties option to open the Properties pane of the selected node.

- Navigate

Use the Navigate option to view the links available from the selected node.


4.4.7.1 Connect

Use the Connect option to link nodes in a workflow.

To connect nodes:


4.4.7.2 Run

Use the Run option to execute the tasks specified in the nodes that comprise the workflow.

The Data Miner server runs workflows asynchronously. The client does not have to be connected. You can run one or more nodes in the workflow:

-

To run one node: Right-click the node and select Run.

-

To run multiple nodes simultaneously: Hold down the Ctrl key and click each node that you want to run. Then right-click any selected node and select Run.

If a node depends on outputs of one or more parent nodes, then the parent node runs automatically only if the outputs required by the running node are missing.
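The parent rule can be sketched as a short recursive function. This Python sketch is a simplified illustration, not the Data Miner server logic. The function name `run_node` and its data structures are hypothetical. It always executes the selected node and does not model node states such as Invalid.

```python
def run_node(node, parents, has_output, log):
    """Run `node`, first running any parent whose output is missing.

    parents:    dict mapping a node name to a list of its parent names
    has_output: set of node names whose outputs already exist
    log:        list that records the order in which nodes actually run
    """
    for parent in parents.get(node, []):
        # A parent runs only if the output that the child needs is missing.
        if parent not in has_output:
            run_node(parent, parents, has_output, log)
    log.append(node)
    has_output.add(node)
```

In a chain Data Source, Class Build, Apply where only the Data Source output exists, running Apply first runs Class Build and then Apply. Data Source is not rerun.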


4.4.7.3 Force Run

Use the Force Run option to rerun one or more nodes that are complete.

Force Run deletes any existing models before building them once again.

To select more than one node, click the nodes while holding down the Ctrl key.

You can Force Run a node at any location in a workflow. Depending on the location of the node in the workflow, you have the following choices for running the node using Force Run:

- Selected Node

- Selected Node and Children (available if the node has child nodes)

- Child Node Only (available if the node has one or more child nodes)

- Selected Node and Parents (available if the node has parent nodes)
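One way to picture these choices is as different sets of nodes selected from the workflow graph. The following Python sketch is hypothetical. In particular, it assumes that "Children" means all downstream nodes and "Parents" means all upstream nodes, which the text does not state. The function names are illustrative only.

```python
def descendants(node, children):
    """All nodes reachable from `node` by following links downstream."""
    found, stack = set(), list(children.get(node, []))
    while stack:
        current = stack.pop()
        if current not in found:
            found.add(current)
            stack.extend(children.get(current, []))
    return found


def ancestors(node, children):
    """All nodes from which `node` is reachable."""
    parents = {}
    for source, targets in children.items():
        for target in targets:
            parents.setdefault(target, []).append(source)
    # Walking the reversed graph downstream yields the upstream nodes.
    return descendants(node, parents)


def force_run_selection(node, children, choice):
    """Map a Force Run choice to the set of nodes to rerun (assumed semantics)."""
    if choice == "Selected Node":
        return {node}
    if choice == "Selected Node and Children":
        return {node} | descendants(node, children)
    if choice == "Child Node Only":
        return descendants(node, children)
    if choice == "Selected Node and Parents":
        return {node} | ancestors(node, children)
    raise ValueError(f"unknown Force Run choice: {choice}")
```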


4.4.7.4 Create Schedule

Use the Create Schedule option to define a schedule to run a workflow at a definite time and date.

To save the workflow schedule settings, click  . You can provide a name for the schedule in the Save a Schedule dialog box.


4.4.7.5 Edit

Use the Edit option to edit the default settings of a node.

Nodes have default algorithms and settings. When you edit a node, the default algorithms and settings are modified. You can edit a node in any one of the following ways:

-

Edit nodes using the Edit dialog box

-

Edit nodes through Properties UI


4.4.7.6 View Data

Use the View Data option to view the data contained in a Data node.

The Data nodes are Create Table or View node, Data Source node, Explore Data node, Graph node, SQL Query node, and Update Table node.


4.4.7.7 View Models

Use the View Models option to view the details of the models that are built after running the workflow.

To view models, you must select a model from the list to open the model viewer. A model must be built successfully before it can be viewed.


4.4.7.8 Generate Apply Chain

Use the Generate Apply Chain option to create a new node that contains the specification of a node that performs a transformation.

If you have several transformations performed in sequence, for example, Sample followed by a Custom transform, then you must select Generate Apply Chain for each transformation in the sequence. You must then connect the individual nodes to each other and to an appropriate data source.

Generate Apply Chain helps you create a sequence of transformations that you can use to ensure that new data is prepared in the same way as existing data. For example, to ensure that Apply data is prepared in the same way as Build data, use this option.

The Generate Apply Chain option is not valid for all nodes. For example, it does not copy the specification of a Build node.


4.4.7.9 Refresh Input Data Definition

Use the Refresh Input Data Definition option to update the workflow when columns are added to or removed from the input source.

This option gives the workflow a SELECT * capability on the input source. It allows you to quickly refresh your workflow definitions to include or exclude columns, as applicable.

Note:

The Refresh Input Data Definition option is available as a context menu option in Data Source nodes and SQL Query nodes.

4.4.7.10 Show Event Log

Use the Show Event Log option to view information about events in the current connection, errors, warnings, and information messages.

Clicking the Show Event Log option opens the View and Event Log dialog box.


4.4.7.11 Deploy

Use the Deploy option to deploy a node or workflow by creating SQL scripts that perform the tasks specified in the workflow.

The scripts generated by Deploy are saved to a directory.

Note:

You must run a node before deploying it.

You can generate a script that replicates the behavior of the entire workflow. Such a script can serve as the basis for application integration, or as a lighter-weight deployment than the alternative of installing the Data Miner repository and workflows in the target and production system.

To deploy a workflow or part of a workflow:


4.4.7.12 Show Graph

The Show Graph option opens the Graph Node Editor.

All graphs are displayed in the Graph Node Editor.


4.4.7.13 Cut

Use the Cut option to remove the selected object, which could be a node or connection.

You can also delete objects by selecting them and pressing DELETE on your keyboard.


4.4.7.14 Copy

Use the Copy option to copy one or more nodes and paste them into the same workflow or a different workflow.

To copy and paste nodes:

Note:

Copying and pasting nodes does not carry over any mining models or results from the original node.


4.4.7.15 Paste

Use the Paste option to paste the copied object in the workflow.

To paste an object, right-click the workflow and click Paste. Alternatively, you can press Ctrl+V.

Note:

Node names and model names are changed to avoid naming collisions. To preserve names, use the option Extended Paste.


4.4.7.16 Extended Paste

Use the Extended Paste option to preserve node and model names while pasting them.

The default behavior of Paste is to change node names and model names to avoid naming collisions.

To use the Extended Paste option, right-click the workflow and click Extended Paste. Alternatively, you can press Ctrl+Shift+V.

Note:

If model names are not unique, then the models may be overwritten when they are rebuilt.


4.4.7.17 Select All

Use the Select All option to select all the nodes in a workflow.

The selected nodes and links are highlighted with a dark blue border.


4.4.7.18 Performance Settings

Use the Performance Settings option to edit Parallel settings and In-Memory settings of the nodes.

If you click Performance Settings in the context menu, or if you click Performance Options in the workflow toolbar, then the Edit Selected Node Settings dialog box opens. It lists all the nodes that comprise the workflow. To edit the settings in the Edit Selected Node Settings dialog box:

- Click Parallel Settings and select:

  - Enable: To enable parallel settings in the selected nodes in the workflow.

  - Disable: To disable parallel settings in the selected nodes in the workflow.

  - All: To turn on parallel processing for all nodes in the workflow.

  - None: To turn off parallel processing for all nodes in the workflow.

- Click In-Memory Settings and select:

  - Enable: To enable In-Memory settings for the selected nodes in the workflow.

  - Disable: To disable In-Memory settings for the selected nodes in the workflow.

  - All: To turn on In-Memory settings for all nodes in the workflow.

  - None: To turn off In-Memory settings for all nodes in the workflow.

- Click  to set the Degree of Parallel, and In-Memory settings such as Compression Method, and Priority Levels in the Edit Node Performance Settings dialog box.

  If you specify parallel settings for at least one node, then this indication appears in the workflow title bar:

  Performance Settings is either On for Selected nodes, On (for All nodes), or Off. You can click Performance Options to open the Edit Selected Node Settings dialog box.

- Click  to edit the default preferences for parallel processing.

  - Edit Node Default Settings: You can edit the Parallel Settings and In-Memory settings for the selected node in the Performance Options dialog box. You can access the Performance Options dialog box from the Preferences options in the SQL Developer Tools menu.

  - Change Settings to Default


4.4.7.19 Toolbar Actions

Use the Toolbar Actions option to select actions in the toolbar from the context menu.

Current actions are Zoom In and Zoom Out.


4.4.7.20 Show Runtime Errors

Use the Show Runtime Errors option to view errors related to node failure at runtime. This option is displayed only when the node fails at runtime.

The Event Log opens with a list of errors. Select the error to see the exact message and details.


4.4.7.21 Show Validation Errors

Use the Show Validation Errors option to view validation errors, if any.

This option is displayed only when there are validation errors. For example, if an Association node is not connected to a Data Source node, then select Show Validation Errors to view the validation error No build data input node connected.

You can also view validation errors by moving the mouse over the node. The errors are displayed in a tool tip.


4.4.7.22 Save SQL

Use the Save SQL option to generate SQL script for the selected node.

To generate SQL script for the selected node:


4.4.7.23 Validate Parents

Use the Validate Parents option to validate all parent nodes of the current node.

To validate parent nodes of a node, right-click the node and select Validate Parents.

You can validate parent nodes when the node is in the Ready, Complete, or Error state. All parent nodes must be in the Complete state.


4.4.7.24 Compare Test Results

Use the Compare Test Results option to view and compare test results of models that are built successfully.

For Classification and Regression models, this option displays the test results for all successfully built models to allow you to pick the model that best solves the problem.


4.4.7.25 View Test Results

Use the View Test Results option to view the test results of the selected model. This option is applicable only for Classification and Regression models.

The test results are displayed in the test viewer of the respective models:


4.4.7.26 Go to Properties

Use the Go to Properties option to open the Properties pane of the selected node.


4.4.7.27 Navigate

Use the Navigate option to view the links available from the selected node.

Note:

The Navigate option is enabled only if there are links to other nodes.

Navigate displays the collection of links available from this node. Selecting one of the links selects the link and the selected link is highlighted in the workflow. The link itself has context menu options as well so you can right click and continue with the Navigate option. You can also use the arrow keys to progress to the next node.


4.5 About Parallel Processing

In Parallel Query or Parallel Processing, multiple processes work simultaneously to run a single SQL statement.

Oracle Data Miner uses the specifications in a workflow to create SQL queries. These queries are passed to Oracle Database and run there.

By dividing the work among multiple processes, the Oracle Database can run the statement more quickly. For example, suppose four processes handle four different quarters in a year instead of one process handling all four quarters by itself.
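
The four-quarter example can be sketched in Python. This is only an analogy and not how Oracle Database implements parallel execution: the `sales` rows and the `sum_quarter` helper are hypothetical, and a thread pool stands in for the database's parallel processes.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sales rows as (quarter, amount); not taken from any real schema.
sales = [(1, 100), (2, 250), (3, 75), (4, 300), (1, 50), (3, 25)]


def sum_quarter(quarter):
    """Total the amounts for one quarter (the share of work given to one worker)."""
    return sum(amount for q, amount in sales if q == quarter)


def serial_total():
    """One worker handles all four quarters, one after another."""
    return sum(sum_quarter(q) for q in (1, 2, 3, 4))


def parallel_total():
    """Four workers each handle one quarter; the partial sums are then combined."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        partials = list(pool.map(sum_quarter, (1, 2, 3, 4)))
    return sum(partials)
```

Both functions return the same total. The parallel version only changes how the work is divided, which is why it can pay off when each share of the work is large.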

The benefits of Parallel Processing:

-

Reduces response time for data-intensive operations on large databases such as data warehouses.

-

Enhances performance of symmetric multiprocessing (SMP) systems because statement processing is split up among multiple systems. Certain types of OLTP and hybrid systems also benefit from parallel processing.

In Oracle RAC systems, the service placement of a specific service controls parallel processing. Specifically, parallel processes run on the nodes on which the service is configured. By default, Oracle Database runs parallel processes only on an instance that offers the service used to connect to the database. This does not affect other parallel operations such as parallel recovery or the processing of GV$ queries.

Parallel processing must be configured by a Database Administrator (DBA). For more information about parallel processing in Oracle Database, see:

-

Oracle Database Data Warehousing Guide or Oracle Database VLDB and Partitioning Guide

-

Oracle Real Application Clusters Administration and Deployment Guide for considerations about parallel processing in Oracle RAC environments

- Parallel Processing Use Cases

This section lists some common use cases where you can use parallel processing.

- Oracle Machine Learning Support for Parallel Processing

Model scoring is done in parallel for all algorithms and data in Oracle Database 12.1 and later.

4.5.1 Parallel Processing Use Cases

This section lists some common use cases where you can use parallel processing.

- Premise of the Parallel Processing Use Case

The use cases for parallel processing list the conditions under which models are built in parallel.

- Making Transformation Run Faster, using Parallel Processing and Other Methods

You can make transformations run faster by using Parallel Processing and other techniques.

- Running Graph Node in Parallel

You can run Graph nodes in parallel. Even if no other nodes are parallel, performance may improve.

- Running a Node in Parallel to Test Performance

You can run parallel processing on a node just once to see if parallel processing results in improved performance.

4.5.1.1 Premise of the Parallel Processing Use Case

The use cases for parallel processing list the conditions under which models are built in parallel.

Premise of the use case:

-

If the input source for a model is a table defined as parallel, and no intervening workflow node generates a table that changes this state, then models are built in parallel without any changes to the workflow settings.

-

If the input source is not already defined in parallel, you can still build the model in parallel:

-

For Classification and Regression models: Turn on Parallel Processing for the workflow. The setting for Classification and Regression is then Split input into Tables with the Parallel option.

-

For all models: Turn on Parallel Processing for the workflow. Insert a Create Table node before the Build node. Use the created table as input to the models.
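
The premise can be restated as a small decision function. This is a minimal sketch of the conditions listed above, not Oracle Data Miner logic: the function and all parameter names are hypothetical, and the sketch ignores whether the chosen algorithm itself supports parallel build.

```python
def model_builds_in_parallel(source_is_parallel, workflow_parallel_on,
                             model_type, has_create_table_node,
                             intervening_table_changes_state=False):
    """Return True if, under the premise above, the model is built in parallel.

    All parameter names are hypothetical; they only label the conditions
    listed in the text.
    """
    # Case 1: the input table is already parallel and no intervening node
    # generates a table that changes that state.
    if source_is_parallel and not intervening_table_changes_state:
        return True
    # Case 2a: Classification and Regression models only need Parallel
    # Processing turned on for the workflow.
    if workflow_parallel_on and model_type in ("classification", "regression"):
        return True
    # Case 2b: any model type, with Parallel Processing on and a Create Table
    # node inserted before the Build node.
    if workflow_parallel_on and has_create_table_node:
        return True
    return False
```

For example, `model_builds_in_parallel(False, True, "clustering", False)` returns `False`, because a model that is neither Classification nor Regression needs the Create Table node when its source is not already parallel.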

4.5.1.2 Making Transformation Run Faster, using Parallel Processing and Other Methods

You can make transformations run faster by using Parallel Processing and other techniques.

The techniques are:

-

Turning on Parallel Processing for the workflow: All nodes that have a form of sample input could have some benefit. If the sample size is small, then Oracle Database may not generate parallel queries. But a complex query could still trigger parallel processing.

-

Adding a Create Table node: You can add a Create Table node after expensive transformations to reduce repetitive querying costs.

-

Adding an index to a Create Table node: You can add an index to a Create Table node to improve downstream join performance.
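
In SQL terms, the last two techniques resemble storing the result of an expensive query once and then indexing it. The sketch below only builds illustrative statement text. The helper, table, and column names are hypothetical, and the SQL that Oracle Data Miner actually generates for a Create Table node may differ.

```python
def create_table_sql(table_name, query, degree=None):
    """Build a CREATE TABLE ... AS SELECT statement that stores a query result.

    If degree is given, a PARALLEL clause with that degree is added.
    """
    parallel = f" PARALLEL {degree}" if degree else ""
    return f"CREATE TABLE {table_name}{parallel} AS {query}"


def create_index_sql(table_name, column):
    """Build a CREATE INDEX statement on a column used in downstream joins."""
    return f"CREATE INDEX {table_name}_{column}_idx ON {table_name} ({column})"


# Hypothetical usage: store prepared customer rows, then index the join key.
print(create_table_sql("cust_prepared", "SELECT cust_id, age FROM customers", degree=4))
print(create_index_sql("cust_prepared", "cust_id"))
```

Downstream nodes then read the stored table instead of re-running the transformation, which is the cost the Create Table node is meant to avoid.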

4.5.1.3 Running Graph Node in Parallel

You can run Graph nodes in parallel. Even if no other nodes are parallel, performance may improve.

To run a Graph node in parallel:

- Set parallel processing for the entire workflow.

- Turn off parallel processing for all nodes except for the Graph nodes.

- Run the Graph nodes. Graph node sample data is now generated in parallel. If the Graph node sample is small or the query is simple, then the query may not be made parallel.
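
The first two steps amount to a per-node on/off setting. The following sketch models that with a plain dictionary. The node names, the node types other than Graph, and the `graph_only_parallel` helper are hypothetical and are not part of Oracle Data Miner.

```python
# Hypothetical workflow: node name -> node type.
workflow = {
    "Customers": "Data Source",
    "Filter Rows": "Transform",
    "Age vs Income": "Graph",
    "Class Build": "Classification",
}


def graph_only_parallel(nodes):
    """Return per-node parallel flags following the steps above."""
    # Step 1: set parallel processing for the entire workflow.
    settings = {name: True for name in nodes}
    # Step 2: turn it off for every node that is not a Graph node.
    for name, node_type in nodes.items():
        if node_type != "Graph":
            settings[name] = False
    return settings
```

After both steps, only the Graph node remains flagged for parallel processing.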

4.5.1.4 Running a Node in Parallel to Test Performance

You can run parallel processing on a node just once to see if parallel processing results in improved performance.

To run parallel processing:

- Set parallel processing for the entire workflow.

- Run the workflow. Note the performance.

- Now, turn off parallel processing for the workflow.

4.5.2 Oracle Machine Learning Support for Parallel Processing

Model scoring is done in parallel for all algorithms and data in Oracle Database 12.1 and later.

Not all algorithms support parallel build. For Oracle Database 12.1 and later, the following algorithms support parallel build:

-

Decision Trees

-

Naive Bayes

-

Minimum Description Length

-

Expectation Maximization

All other algorithms support serial build only.
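
The list above can be expressed as a simple lookup. This is a minimal sketch for illustration: the `build_mode` helper is hypothetical, and the set contains only the algorithms named in this section.

```python
# Algorithms this section lists as supporting parallel build
# in Oracle Database 12.1 and later.
PARALLEL_BUILD_ALGORITHMS = {
    "Decision Trees",
    "Naive Bayes",
    "Minimum Description Length",
    "Expectation Maximization",
}


def build_mode(algorithm):
    """Return 'parallel' if the algorithm is in the list above, else 'serial'."""
    return "parallel" if algorithm in PARALLEL_BUILD_ALGORITHMS else "serial"
```

The lookup covers model build only. Scoring runs in parallel for all algorithms, as stated at the start of this section.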

4.6 Setting Parallel Processing for a Node or Workflow

By default, parallel processing is set to OFF for any node type.

Even if parallel processing is set to ON with a preference, you can override the setting for a specific workflow or node.

To set parallel processing for a node or workflow:

- Right-click the node and select Performance Settings from the context menu. The Edit Selected Node Settings dialog box opens.

- Click OK.

- Performance Settings

Use the Performance Settings option to edit Parallel settings and In-Memory settings of the nodes.

- Edit Node Performance Settings

The Edit Node Performance Settings window opens when you click the edit icon in the Edit Selected Node Settings dialog box. You can set Parallel Processing settings and In-Memory settings for one or all nodes in the workflow.

- Edit Node Parallel Settings

In the Edit Node Parallel Settings dialog box, you can provide parallel query settings for the selected node.

4.6.1 Performance Settings

Use the Performance Settings option to edit Parallel settings and In-Memory settings of the nodes.

If you click Performance Settings in the context menu, or if you click Performance Options in the workflow toolbar, then the Edit Selected Node Settings dialog box opens. It lists all the nodes that comprise the workflow. To edit the settings in the Edit Selected Node Settings dialog box:

-

Click Parallel Settings and select:

-

Enable: To enable parallel settings in the selected nodes in the workflow.

-

Disable: To disable parallel settings in the selected nodes in the workflow.

-

All: To turn on parallel processing for all nodes in the workflow.

-

None: To turn off parallel processing for all nodes in the workflow.

-

Click In-Memory Settings and select:

-

Enable: To enable In-Memory settings for the selected nodes in the workflow.

-

Disable: To disable In-Memory settings for the selected nodes in the workflow.

-

All: To turn on In-Memory settings for all nodes in the workflow.

-

None: To turn off In-Memory settings for all nodes in the workflow.

-

Click the edit icon to set the Degree of Parallel, and In-Memory settings such as Compression Method and Priority Levels, in the Edit Node Performance Settings dialog box.

If you specify parallel settings for at least one node, then this indication appears in the workflow title bar:

Performance Settings is either On for Selected nodes, On (for All nodes), or Off. You can click Performance Options to open the Edit Selected Node Settings dialog box.

-

Click the icon to edit the default preferences for parallel processing:

-

Edit Node Default Settings: You can edit the Parallel Settings and In-Memory settings for the selected node in the Performance Options dialog box. You can access the Performance Options dialog box from the Preferences options in the SQL Developer Tools menu.

-

Change Settings to Default

4.6.2 Edit Node Performance Settings

The Edit Node Performance Settings window opens when you click the edit icon in the Edit Selected Node Settings dialog box. You can set Parallel Processing settings and In-Memory settings for one or all nodes in the workflow.

-

Select Parallel Query On to set parallel processing for the node. Even if you specify parallel processing for a node type, the query generated by the node may not run in parallel.

-

System Determined: This is the default degree of parallelism.

-

Degree Value: To specify a value for degree of parallelism, select this option and choose a value by clicking the arrows. The default value is 1. The specified value is displayed in the Degree of Parallel column for the node type in the Performance Options and Edit Selected Node Settings dialog boxes.

-

Select the In-Memory Columnar option to set the compression method and priority level for the selected node. The selected settings are displayed in the In-Memory Settings option in the Performance Options dialog box.

Note:

The In-Memory option is available in Oracle Database 12.1.0.2 and later.

-