14 Developing Applications with Graph Analytics

In order to run graph algorithms, the graph application connects to the graph server (PGX) in the middle tier, which in turn connects to the Oracle Database.

Note:

You can only load property graph views into the graph server (PGX). Loading a SQL property graph into the graph server (PGX) is not supported.

- About Vertex and Edge IDs

The graph server (PGX) enforces by default the existence of a unique identifier for each vertex and edge in a graph.

- Graph Management in the Graph Server (PGX)

You can load a graph into the graph server (PGX) and perform different actions such as publish, store, or delete a graph.

- Keeping the Graph in Oracle Database Synchronized with the Graph Server

You can use the FlashbackSynchronizer API to automatically apply changes made to the graph in the database to the corresponding PgxGraph object in memory, thus keeping both synchronized.

- Optimizing Graphs for Read Versus Updates in the Graph Server (PGX)

The graph server (PGX) can store an optimized graph for either reads or updates. This is only relevant when the updates are made directly to a graph instance in the graph server.

- Executing Built-in Algorithms

The graph server (PGX) contains a set of built-in algorithms that are available as Java APIs.

- Using Custom PGX Graph Algorithms

A custom PGX graph algorithm allows you to write a graph algorithm in Java syntax and have it automatically compiled to an efficient parallel implementation.

- Creating Subgraphs

You can create subgraphs based on a graph that has been loaded into memory. You can use filter expressions or create bipartite subgraphs based on a vertex (node) collection that specifies the left set of the bipartite graph.

- Using Automatic Delta Refresh to Handle Database Changes

You can automatically refresh (auto-refresh) graphs periodically to keep the in-memory graph synchronized with changes to the property graph stored in the property graph tables in Oracle Database (VT$ and GE$ tables).

- User-Defined Functions (UDFs) in PGX

User-defined functions (UDFs) allow users of PGX to add custom logic to their PGQL queries or custom graph algorithms, to complement built-in functions with custom requirements.

- Using the Graph Server (PGX) as a Library

When you utilize PGX as a library in your application, the graph server (PGX) instance runs in the same JVM as the Java application and all requests are translated into direct function calls instead of remote procedure invocations.


14.1 About Vertex and Edge IDs

The graph server (PGX) enforces by default the existence of a unique identifier for each vertex and edge in a graph.

Vertices and edges can be retrieved by ID through PgxGraph.getVertex(ID id) and PgxGraph.getEdge(ID id), or by PGQL queries using the built-in id() method.

The ID generation strategy is selected through the configuration parameters vertex_id_strategy and edge_id_strategy. The following strategies are available:

- keys_as_ids: This is the default strategy to generate vertex IDs.
- partitioned_ids: This is the recommended strategy for partitioned graphs.
- unstable_generated_ids: This results in system-generated vertex or edge IDs.
- no_ids: This strategy disables vertex or edge IDs and therefore prevents you from calling APIs using vertex or edge IDs.

Using keys to generate IDs

The default strategy to generate the vertex IDs is to use the keys provided during loading of the graph (keys_as_ids). In that case, each vertex should have a vertex key that is unique across all providers.

For edges, by default no keys are required in the edge data, and edge IDs will be automatically generated by PGX (unstable_generated_ids). This automatic ID generation can be applied for vertex IDs also. Note that the generation of vertex or edge IDs is not guaranteed to be deterministic. If required, it is also possible to load edge keys as IDs.

The partitioned_ids strategy requires keys to be unique only within a vertex or edge provider (data source). The keys do not have to be globally unique. Globally unique IDs are derived from a combination of the provider name and the key inside the provider, as <provider_name>(<unique_key_within_provider>). For example, Account(1).
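The naming scheme can be illustrated with a small Python sketch. It only models how a provider name and a provider-local key combine into a globally unique identifier; the PartitionedId class and the Person provider are hypothetical names introduced for illustration and are not part of the PGX API.

```python
from typing import NamedTuple, Union


class PartitionedId(NamedTuple):
    """Hypothetical model of a partitioned ID: provider name plus local key."""
    provider: str
    key: Union[int, str]

    def __str__(self) -> str:
        # Rendered in the <provider_name>(<unique_key_within_provider>) form.
        return f"{self.provider}({self.key})"


# Key 1 exists in two providers; the provider name disambiguates them.
account = PartitionedId("Account", 1)
person = PartitionedId("Person", 1)  # "Person" is an assumed second provider

print(account)            # Account(1)
print(account == person)  # False
```

Because the provider name is part of the identifier, the same key value can safely appear in several providers.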

The partitioned_ids strategy can be set through the configuration fields vertex_id_strategy and edge_id_strategy. For example:

{
  "name": "bank_graph_analytics",
  "optimized_for": "updates",
  "vertex_id_strategy": "partitioned_ids",
  "edge_id_strategy": "partitioned_ids",
  "vertex_providers": [
    {
      "name": "Accounts",
      "format": "rdbms",
      "database_table_name": "BANK_ACCOUNTS",
      "key_column": "ID",
      "key_type": "integer",
      "props": [
        {
          "name": "ID",
          "type": "integer"
        },
        {
          "name": "NAME",
          "type": "string"
        }
      ],
      "loading": {
        "create_key_mapping": true
      }
    }
  ],
  "edge_providers": [
    {
      "name": "Transfers",
      "format": "rdbms",
      "database_table_name": "BANK_TXNS",
      "key_column": "ID",
      "source_column": "FROM_ACCT_ID",
      "destination_column": "TO_ACCT_ID",
      "source_vertex_provider": "Accounts",
      "destination_vertex_provider": "Accounts",
      "props": [
        {
          "name": "ID",
          "type": "integer"
        },
        {
          "name": "AMOUNT",
          "type": "double"
        }
      ],
      "loading": {
        "create_key_mapping": true
      }
    }
  ]
}

Note:

All available key types are supported in combination with partitioned IDs.

After the graph is loaded, PGX maintains information about which property of a provider corresponds to the key of the provider. In the preceding example, the vertex property ID happens to correspond to the vertex key and also the edge property ID happens to correspond to the edge key. Each provider can have at most one such "key property" and the property can have any name.

vertex key property ID cannot be updated

Using an auto-incrementer to generate partitioned IDs

It is recommended to always set create_key_mapping to true to benefit from performance optimizations. But if there are no single-column keys for edges, create_key_mapping can be set to false. Similarly, create_key_mapping can be set to false for vertex providers also. IDs will be generated via an auto-incrementer, for example Accounts(1), Accounts(2), Accounts(3).
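The effect of an auto-incrementer can be pictured with the following Python sketch. It is a conceptual illustration only: the AutoIncrementer class is hypothetical, and starting each provider's counter at 1 is an assumption taken from the example IDs above rather than a documented guarantee.

```python
from collections import defaultdict
from itertools import count


class AutoIncrementer:
    """Hypothetical per-provider counter; not the PGX implementation."""

    def __init__(self) -> None:
        # One independent counter per provider, starting at 1 as in the example.
        self._counters = defaultdict(lambda: count(1))

    def next_id(self, provider: str) -> str:
        return f"{provider}({next(self._counters[provider])})"


ids = AutoIncrementer()
generated = [ids.next_id("Accounts") for _ in range(3)]
first_transfer = ids.next_id("Transfers")

print(generated)       # ['Accounts(1)', 'Accounts(2)', 'Accounts(3)']
print(first_transfer)  # Transfers(1)
```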

See PGQL Queries with Partitioned IDs for more information on executing PGQL queries with partitioned IDs.


14.2 Graph Management in the Graph Server (PGX)

You can load a graph into the graph server (PGX) and perform different actions such as publish, store, or delete a graph.

- Reading Graphs from Oracle Database into the Graph Server (PGX)

Once logged into the graph server (PGX), you can read graphs from the database into the graph server.

- Storing a Graph Snapshot on Disk

After reading a graph into memory, you can make any changes to the graph (such as running the PageRank algorithm and storing the values as vertex properties), and then store this snapshot of the graph on disk.

- Publishing a Graph

You can publish a graph that can be referenced by other sessions.

- Deleting a Graph

In order to reduce the memory usage of the graph server (PGX), the session must drop the unused graph objects created through the getGraph() method, by invoking the destroy() method.


14.2.1 Reading Graphs from Oracle Database into the Graph Server (PGX)

Once logged into the graph server (PGX), you can read graphs from the database into the graph server.

Your database user must exist and have read access on the graph data in the database.

There are several ways to load a property graph view into the graph server (PGX) from Oracle Database:

- Using the readGraphByName API - see Loading a PG View Using the readGraphByName API for more details.
- Using the PGQL CREATE PROPERTY GRAPH statement - see Creating a Property Graph Using PGQL for more details.
- Using the PgViewSubgraphReader#fromPgView API to create and load a subgraph - see Loading a Subgraph from Property Graph Views for more details.
- Using a PGX graph configuration file in JSON format - see Loading a Graph Using a JSON Configuration File for more details.
- Using the GraphConfigBuilder class to create Oracle RDBMS graph configurations programmatically through Java methods - see Loading a Graph by Defining a Graph Configuration Object for more details.

- Reading Entity Providers at the Same SCN

If you have a graph which consists of multiple vertex or edge tables or both, then you can read all the vertices and edges at the same System Change Number (SCN).

- Progress Reporting and Estimation for Graph Loading

Loading a large graph into the graph server (PGX) can be a long-running operation. However, if you load the graph using an asynchronous action, then you can monitor the progress of the graph loading operation.

- API for Loading Graphs into Memory

Learn about the APIs used for loading a graph using a JSON configuration file or graph configuration object.

- Graph Configuration Options

Learn about the graph configuration options.

- Data Loading Security Best Practices

Loading a graph from the database requires authentication and it is therefore important to adhere to certain security guidelines when configuring access to this kind of data source.

- Data Format Support Matrix

Learn about the different data formats supported in the graph server (PGX).

- Immutability of Loaded Graphs

Once the graph is loaded into the graph server (PGX), the graph and its properties are automatically marked as immutable.


14.2.1.1 Reading Entity Providers at the Same SCN

If you have a graph which consists of multiple vertex or edge tables or both, then you can read all the vertices and edges at the same System Change Number (SCN).

This helps to overcome issues such as reading edge providers at a later SCN than the SCN at which the vertices were read, as some edges may reference missing vertices.

Note that reading a graph from the database is still possible even if Flashback is not enabled on Oracle Database. In case of multiple databases, SCN can be used to maintain consistency for entity providers belonging to the same database only.

You can use the as_of flag in the graph configuration to specify at what SCN an entity provider must be read. The valid values for the as_of flag are as follows:

Table 14-1 Valid values for "as_of" Key in Graph Configuration

| Value | Description |
|---|---|
| A positive long value | This is a parseable SCN value. |
| "<current-scn>" | The current SCN is determined at the beginning of the graph loading. |
| "<no-scn>" | This is to disable SCN at the time of graph loading. |
| null | This defaults to "<current-scn>" behavior. |

If "as_of" is omitted for a vertex or an edge provider in the graph configuration file, then this follows the same behavior as "as_of": null.

Example 14-1 Graph Configuration Using "as_of" for Vertex and Edge Providers in the Same Database

The following example configuration has three vertex providers and one edge provider pointing to the same database.

{
  "name": "employee_graph",
  "vertex_providers": [
    {
      "name": "Department",
      "as_of": "<current-scn>",
      "format": "rdbms",
      "database_table_name": "DEPARTMENTS",
      "key_column": "DEPARTMENT_ID",
      "props": [
        {
          "name": "DEPARTMENT_NAME",
          "type": "string"
        }
      ]
    },
    {
      "name": "Location",
      "as_of": "28924323",
      "format": "rdbms",
      "database_table_name": "LOCATIONS",
      "key_column": "LOCATION_ID",
      "props": [
        {
          "name": "CITY",
          "type": "string"
        }
      ]
    },
    {
      "name": "Region",
      "as_of": "<no-scn>",
      "format": "rdbms",
      "database_table_name": "REGIONS",
      "key_column": "REGION_ID",
      "props": [
        {
          "name": "REGION_NAME",
          "type": "string"
        }
      ]
    }
  ],
  "edge_providers": [
    {
      "name": "LocatedAt",
      "format": "rdbms",
      "database_table_name": "DEPARTMENTS",
      "key_column": "DEPARTMENT_ID",
      "source_column": "DEPARTMENT_ID",
      "destination_column": "LOCATION_ID",
      "source_vertex_provider": "Department",
      "destination_vertex_provider": "Location"
    }
  ]
}

When reading the employee_graph using the preceding configuration file, the graph is read at the same SCN for the Department and LocatedAt entity providers.

This is explained in the following table:

Table 14-2 Example Scenario Using "as_of"

| Entity Provider | "as_of" | SCN Value |
|---|---|---|
| Department | "<current-scn>" | SCN determined automatically |
| Location | "28924323" | "28924323" used as SCN |
| Region | "<no-scn>" | No SCN used |
| LocatedAt | "as_of" flag is omitted | SCN determined automatically |
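The resolution rule behind Table 14-2 can be sketched in a few lines of Python. This is an illustration of the documented rule (an omitted or null "as_of" behaves like "<current-scn>"), not the loader's actual code; the effective_as_of helper is a hypothetical name, and the configuration is trimmed to the provider names and "as_of" values from Example 14-1.

```python
import json

CURRENT_SCN = "<current-scn>"

# Trimmed-down copy of the employee_graph configuration (only name and as_of).
config = json.loads("""
{
  "vertex_providers": [
    {"name": "Department", "as_of": "<current-scn>"},
    {"name": "Location", "as_of": "28924323"},
    {"name": "Region", "as_of": "<no-scn>"}
  ],
  "edge_providers": [
    {"name": "LocatedAt"}
  ]
}
""")


def effective_as_of(provider: dict) -> str:
    # An omitted or null "as_of" behaves like "<current-scn>".
    value = provider.get("as_of")
    return CURRENT_SCN if value is None else str(value)


providers = config["vertex_providers"] + config["edge_providers"]
resolved = {p["name"]: effective_as_of(p) for p in providers}
for name, as_of in resolved.items():
    print(f"{name}: {as_of}")
```

Department and LocatedAt both resolve to "<current-scn>", which is why they are read at the same SCN.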

The current SCN value of the database can be determined using one of the following options:

- Querying the V$DATABASE view: SELECT CURRENT_SCN FROM V$DATABASE;
- Using the DBMS_FLASHBACK package: SELECT DBMS_FLASHBACK.GET_SYSTEM_CHANGE_NUMBER FROM DUAL;

If you do not have the required privileges to perform either of the preceding operations, then you can use:

SELECT TIMESTAMP_TO_SCN(SYSDATE) FROM DUAL;

However, note that this option is less precise than the earlier two options.

You can then read the graph into the graph server using the JSON configuration file as shown:

opg4j> var g = session.readGraphWithProperties("employee_graph.json")

PgxGraph g = session.readGraphWithProperties("employee_graph.json");

g = session.read_graph_with_properties("employee_graph.json")

14.2.1.2 Progress Reporting and Estimation for Graph Loading

Loading a large graph into the graph server (PGX) can be a long-running operation. However, if you load the graph using an asynchronous action, then you can monitor the progress of the graph loading operation.

The following table lists the asynchronous graph loading APIs supported for each data format:

Table 14-3 Asynchronous Graph Loading APIs

| Data Format | API |

|---|---|

| PG VIEWS | session.readGraphByNameAsync() |

| PG SCHEMA | session.readGraphWithPropertiesAsync() |

| CSV | session.readGraphFileAsync() |

These supported APIs return a PgxFuture object. You can then use the PgxFuture.getProgress() method to collect the following statistics:

- Report on the progress of the graph loading operation

- Estimate of the remaining vertices and edges that need to be loaded into memory

For example, the following code shows the steps to load a PG view graph asynchronously and subsequently obtain the FutureProgress object to report and estimate the loading progress. However, note that the graph loading estimate (for example, the number of loaded entities and providers or the number of total entities and providers) can be obtained only while the graph loading operation is in progress. Also, the system internally computes the graph loading progress for every 10,000 entities that are loaded into the graph server (PGX).

opg4j> var graphLoadingFuture = session.readGraphByNameAsync("BANK_GRAPH_VIEW", GraphSource.PG_VIEW)
graphLoadingFuture ==> oracle.pgx.api.PgxFuture@6106dfb6[Not completed]
opg4j> while (!graphLoadingFuture.isDone()) {
   ...>   var progress = graphLoadingFuture.getProgress();
   ...>   var graphLoadingProgress = progress.asGraphLoadingProgress();
   ...>   if (graphLoadingProgress.isPresent()) {
   ...>     var numLoadedVertices = graphLoadingProgress.get().getNumLoadedVertices();
   ...>   }
   ...>   Thread.sleep(1000);
   ...> }
opg4j> var graph = graphLoadingFuture.get();
graph ==> PgxGraph[name=BANK_GRAPH_VIEW_3,N=999,E=4993,created=1664289985985]

PgxFuture<PgxGraph> graphLoadingFuture = session.readGraphByNameAsync("BANK_GRAPH_VIEW", GraphSource.PG_VIEW);
// Poll once per second until the asynchronous load completes.
while (!graphLoadingFuture.isDone()) {
  FutureProgress progress = graphLoadingFuture.getProgress();
  Optional<GraphLoadingProgress> graphLoadingProgress = progress.asGraphLoadingProgress();
  if (graphLoadingProgress.isPresent()) {
    long numLoadedVertices = graphLoadingProgress.get().getNumLoadedVertices();
  }
  Thread.sleep(1000);
}
PgxGraph graph = graphLoadingFuture.get();

It is recommended that you do not use the FutureProgress object in a chain of asynchronous operations.
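The polling pattern used above (submit an asynchronous operation, then repeatedly read its progress until it completes) can be tried without a graph server using the following Python sketch. It is a generic analogy built on the standard library only: LoadProgress and simulated_load are hypothetical stand-ins, and nothing here calls or mirrors the PGX API.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class LoadProgress:
    """Hypothetical stand-in for a progress report; not a PGX class."""

    def __init__(self, total: int) -> None:
        self.total = total
        self.loaded = 0
        self._lock = threading.Lock()

    def add(self, n: int) -> None:
        with self._lock:
            self.loaded += n

    def snapshot(self) -> int:
        with self._lock:
            return self.loaded


def simulated_load(progress: LoadProgress, batch: int = 10_000) -> int:
    # Pretend to load entities in batches, updating progress after each batch.
    while progress.snapshot() < progress.total:
        time.sleep(0.01)
        progress.add(min(batch, progress.total - progress.snapshot()))
    return progress.snapshot()


progress = LoadProgress(total=50_000)
with ThreadPoolExecutor(max_workers=1) as pool:
    future = pool.submit(simulated_load, progress)
    while not future.done():
        # Progress is only meaningful while the operation is still running.
        print(f"loaded {progress.snapshot()} of {progress.total}")
        time.sleep(0.02)
    total_loaded = future.result()

print(f"done: {total_loaded} entities")
```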

14.2.1.3 API for Loading Graphs into Memory

Learn about the APIs used for loading a graph using a JSON configuration file or graph configuration object.

The following methods in PgxSession can be used to load graphs into the graph server (PGX).

PgxGraph readGraphWithProperties(String path)
PgxGraph readGraphWithProperties(String path, String newGraphName)
PgxGraph readGraphWithProperties(GraphConfig config)
PgxGraph readGraphWithProperties(GraphConfig config, String newGraphName)
PgxGraph readGraphWithProperties(GraphConfig config, boolean forceUpdateIfNotFresh)
PgxGraph readGraphWithProperties(GraphConfig config, boolean forceUpdateIfNotFresh, String newGraphName)
PgxGraph readGraphWithProperties(GraphConfig config, long maxAge, TimeUnit maxAgeTimeUnit)
PgxGraph readGraphWithProperties(GraphConfig config, long maxAge, TimeUnit maxAgeTimeUnit, boolean blockIfFull, String newGraphName)

read_graph_with_properties(self, config, max_age=9223372036854775807, max_age_time_unit='days',
                           block_if_full=False, update_if_not_fresh=True, graph_name=None)

The first argument (path to a graph configuration file or a parsed config object) is the meta-data of the graph to be read. The meta-data includes the following information:

- Location of the graph data such as file location and name, DB location and connection information, and so on

- Format of the graph data such as plain text formats, XML-based formats, binary formats, and so on

- Types and Names of the properties to be loaded

The forceUpdateIfNotFresh and maxAge arguments can be used to fine-tune the age of the snapshot to be read. The graph server (PGX) will return an existing graph snapshot if the given graph specification was already loaded into memory by a different session. So, the maxAge argument becomes important when reading from a database in which the data might change frequently. If neither forceUpdateIfNotFresh nor maxAge is specified, PGX will favor cached data over reading new snapshots into memory.
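The interplay between cached snapshots and a maximum age can be modeled with the following Python sketch. It is a conceptual model under stated assumptions, not PGX's implementation: SnapshotCache, Snapshot and fake_loader are hypothetical names, the graph specification is reduced to a plain string, and a fake clock is used so the run is deterministic.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Snapshot:
    data: str
    created_at: float


class SnapshotCache:
    """Conceptual model only; names and behavior are assumptions, not PGX internals."""

    def __init__(self, loader: Callable[[str], str],
                 clock: Callable[[], float] = time.time) -> None:
        self._loader = loader
        self._clock = clock
        self._snapshots: Dict[str, Snapshot] = {}

    def read(self, config_name: str, max_age_seconds: Optional[float] = None) -> Snapshot:
        cached = self._snapshots.get(config_name)
        if cached is not None:
            age = self._clock() - cached.created_at
            # Without a max age, the cached snapshot is always preferred.
            if max_age_seconds is None or age <= max_age_seconds:
                return cached
        fresh = Snapshot(self._loader(config_name), self._clock())
        self._snapshots[config_name] = fresh
        return fresh


now = [0.0]  # fake clock so the example is deterministic
loads = []   # records every simulated read from the database


def fake_loader(name: str) -> str:
    loads.append(name)
    return f"{name}@load{len(loads)}"


cache = SnapshotCache(fake_loader, clock=lambda: now[0])
first = cache.read("employee_graph")
now[0] = 120.0
second = cache.read("employee_graph")                       # cached, no max age given
third = cache.read("employee_graph", max_age_seconds=60.0)  # older than 60 s, reloads
print(first.data, second.data, third.data)
```

The second read reuses the first snapshot even though 120 seconds have passed; only the third read, which sets a maximum age, triggers a new load.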

14.2.1.4 Graph Configuration Options

Learn about the graph configuration options.

The following table lists the JSON fields that are common to all graph configurations:

Table 14-4 Graph Config JSON Fields

| Field | Type | Description | Default |
|---|---|---|---|
| name | string | Name of the graph. | Required |
| array_compaction_threshold | number | [only relevant if the graph is optimized for updates] Threshold used to determine when to compact the delta-logs into a new array. If lower than the engine min_array_compaction_threshold value, min_array_compaction_threshold will be used instead. | 0.2 |
| attributes | object | Additional attributes needed to read and write the graph data. | null |
| data_source_id | string | Data source id to use to connect to an RDBMS instance. | null |
| edge_id_strategy | enum[no_ids, keys_as_ids, unstable_generated_ids] | Indicates what ID strategy should be used for the edges of this graph. If not specified (or set to null), the strategy will be determined during loading or using a default value. | null |
| edge_id_type | enum[long] | Type of the edge ID. Setting it to long requires the IDs in the edge providers to be unique across the graphs; those IDs will be used as global IDs. Setting it to null (or omitting it) will allow repeated IDs across different edge providers and PGX will automatically generate globally-unique IDs for the edges. | null |
| edge_providers | array of object | List of edge providers in this graph. | [] |
| error_handling | object | Error handling configuration. | null |
| external_stores | array of object | Specification of the external stores where external string properties reside. | [] |
| jdbc_url | string | JDBC URL pointing to an RDBMS instance. | null |
| keystore_alias | string | Alias to the keystore to use when connecting to database. | null |
| loading | object | Loading-specific configuration to use. | null |
| local_date_format | array of string | Array of local_date formats to use when loading and storing local_date properties. See DateTimeFormatter for more details of the format string. | [] |
| max_prefetched_rows | integer | Maximum number of rows prefetched during each round trip resultset-database. | 10000 |
| num_connections | integer | Number of connections to read and write data from or to the RDBMS table. | <no-of-cpus> |
| optimized_for | enum[read, updates] | Indicates if the graph should use data-structures optimized for read-intensive scenarios or for fast updates. | read |
| password | string | Password to use when connecting to database. | null |
| point2d | string | Longitude and latitude as floating point values separated by a space. | 0.0 0.0 |
| prepared_queries | array of object | An additional list of prepared queries with arguments, working in the same way as 'queries'. Data matching at least one of those queries will also be loaded. | [] |
| queries | array of string | A list of queries used to determine which data to load from the database. Data matching at least one of the queries will be loaded. Not setting any query will load the entire graph. | [] |
| redaction_rules | array of object | Array of redaction rules. | [] |
| rules_mapping | array of object | Mapping for redaction rules to users and roles. | [] |
| schema | string | Schema to use when reading or writing RDBMS objects. | null |
| source_name | string | Name of the database graph, if the graph is loaded from a database. | null |
| source_type | enum[pg_view] | Source type for database graphs. | null |
| time_format | array of string | The time format to use when loading and storing time properties. See DateTimeFormatter for more information of the format string. | [] |
| time_with_timezone_format | array of string | The time with timezone format to use when loading and storing time with timezone properties. See DateTimeFormatter for more information of the format string. | [] |
| timestamp_format | array of string | The timestamp format to use when loading and storing timestamp properties. See DateTimeFormatter for more information of the format string. | [] |
| timestamp_with_timezone_format | array of string | The timestamp with timezone format to use when loading and storing timestamp with timezone properties. See DateTimeFormatter for more information of the format string. | [] |
| username | string | Username to use when connecting to an RDBMS instance. | null |
| vector_component_delimiter | character | Delimiter for the different components of vector properties. | ; |
| vertex_id_strategy | enum[no_ids, keys_as_ids, unstable_generated_ids] | Indicates what ID strategy should be used for the vertices of this graph. If not specified (or set to null), the strategy will be automatically detected. | null |
| vertex_id_type | enum[int, integer, long, string] | Type of the vertex ID. For homogeneous graphs, if not specified (or set to null), it will default to a specific value (depending on the origin of the data). | null |
| vertex_providers | array of object | List of vertex providers in this graph. | [] |

Note:

Database connection fields specified in the graph configuration will be used as default in case underlying data provider configuration does not specify them.

Provider Configuration JSON file Options

You can specify the meta-information about each provider's data using provider configurations. Provider configurations include the following information about the provider data:

- Location of the data: a file, multiple files or database providers

- Information about the properties: name and type of the property

Table 14-5 Provider Configuration JSON file Options

| Field | Type | Description | Default |

|---|---|---|---|

| format | enum[pgb, csv, rdbms] | Provider format. | Required |

| name | string | Entity provider name. | Required |

| attributes | object | Additional attributes needed to read and write the graph data. | null |

| destination_vertex_provider | string | Name of the destination vertex provider to be used for this edge provider. | null |

| error_handling | object | Error handling configuration. | null |

| has_keys | boolean | Indicates if the provided entities data have keys. | true |

| key_type | enum[int, integer, long, string] | Type of the keys. | long |

| keystore_alias | string | Alias to the keystore to use when connecting to database. | null |

| label | string | label for the entities loaded from this provider. | null |

| loading | object | Loading-specific configuration. | null |

| local_date_format | array of string | Array of local_date formats to use when loading and storing local_date properties. See DateTimeFormatter for a documentation of the format string. | [] |

| password | string | Password to use when connecting to database. | null |

| point2d | string | Longitude and latitude as floating point values separated by a space. | 0.0 0.0 |

| props | array of object | Specification of the properties associated with this entity provider. | [] |

| source_vertex_provider | string | Name of the source vertex provider to be used for this edge provider. | null |

| time_format | array of string | The time format to use when loading and storing time properties. See DateTimeFormatter for a documentation of the format string. | [] |

| time_with_timezone_format | array of string | The time with timezone format to use when loading and storing time with timezone properties. See DateTimeFormatter for a documentation of the format string. | [] |

| timestamp_format | array of string | The timestamp format to use when loading and storing timestamp properties. See DateTimeFormatter for a documentation of the format string. | [] |

| timestamp_with_timezone_format | array of string | The timestamp with timezone format to use when loading and storing timestamp with timezone properties. See DateTimeFormatter for a documentation of the format string. | [] |

| vector_component_delimiter | character | Delimiter for the different components of vector properties. | ; |
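As noted for the graph configuration, database connection fields set at the graph level act as defaults for providers that do not set them. The following Python sketch illustrates that fallback rule only. The with_connection_defaults helper is hypothetical, the choice of which fields count as connection fields is an assumption, and the JDBC URL and user names are placeholders.

```python
from typing import Any, Dict

# Assumed set of connection-related fields; the exact list is not stated in the text.
CONNECTION_FIELDS = ("jdbc_url", "username", "keystore_alias")


def with_connection_defaults(graph_config: Dict[str, Any],
                             provider: Dict[str, Any]) -> Dict[str, Any]:
    resolved = dict(provider)
    for field in CONNECTION_FIELDS:
        # A value set on the provider wins; otherwise fall back to the graph level.
        if resolved.get(field) is None and graph_config.get(field) is not None:
            resolved[field] = graph_config[field]
    return resolved


graph_config = {
    "name": "bank_graph_analytics",
    "jdbc_url": "jdbc:oracle:thin:@localhost:1521/orcl",  # placeholder URL
    "username": "graph_reader",                            # placeholder user
}
accounts = {"name": "Accounts", "format": "rdbms"}
transfers = {"name": "Transfers", "format": "rdbms", "username": "txn_reader"}

accounts_resolved = with_connection_defaults(graph_config, accounts)
transfers_resolved = with_connection_defaults(graph_config, transfers)
print(accounts_resolved["username"])   # graph_reader
print(transfers_resolved["username"])  # txn_reader
```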

Provider Labels

The label field in the provider configuration can be used to set a label for the entities loaded from the provider. If no label is specified, all entities from the provider are labeled with the name of the provider. It is only possible to set the same label for two different providers if they have exactly the same properties (same names and same types).
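The two label rules above (default to the provider name, and share a label only between providers with identical properties) can be expressed as a small validation sketch in Python. The helper names are hypothetical and the check is an illustration of the stated rules, not the validation that PGX itself performs; the ArchivedAccounts and Branches providers are made up for the example.

```python
from typing import Any, Dict, List


def effective_label(provider: Dict[str, Any]) -> str:
    # Without an explicit label, entities are labeled with the provider name.
    return provider.get("label") or provider["name"]


def prop_signature(provider: Dict[str, Any]) -> frozenset:
    # Two providers match only if property names and types are all the same.
    return frozenset((p["name"], p["type"]) for p in provider.get("props", []))


def check_labels(providers: List[Dict[str, Any]]) -> List[str]:
    """Return one message per label shared by providers with differing props."""
    seen: Dict[str, frozenset] = {}
    problems = []
    for provider in providers:
        label = effective_label(provider)
        signature = prop_signature(provider)
        if label in seen and seen[label] != signature:
            problems.append(
                f"label '{label}' is shared by providers with different properties")
        seen.setdefault(label, signature)
    return problems


providers = [
    {"name": "Accounts", "props": [{"name": "NAME", "type": "string"}]},
    {"name": "ArchivedAccounts", "label": "Accounts",
     "props": [{"name": "NAME", "type": "string"}]},
    {"name": "Branches", "label": "Accounts",
     "props": [{"name": "CITY", "type": "string"}]},
]

print(check_labels(providers[:2]))  # []
print(check_labels(providers))
```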

Property Configuration

The props entry in the Provider configuration is an object with the following JSON fields:

Table 14-6 Property Configuration

| Field | Type | Description | Default |

|---|---|---|---|

| name | string | Name of the property. | Required |

| type | enum[boolean, integer, vertex, edge, float, long, double, string, date, local_date, time, timestamp, time_with_timezone, timestamp_with_timezone, point2d] | Type of the property. Note: date is deprecated; use one of local_date / time / timestamp / time_with_timezone / timestamp_with_timezone instead. | Required |

| aggregate | enum[identity, group_key, min, max, avg, sum, concat, count] | [currently unsupported] which aggregation function to use, aggregation always happens by vertex key. | null |

| column | value | Name or index (starting from 0) of the column holding the property data. If it is not specified, the loader will try to use the property name as column name (for CSV format only). | null |

| default | value | Default value to be assigned to this property if datasource does not provide it. In case of date type: string is expected to be formatted with yyyy-MM-dd HH:mm:ss. If no default is present (null), non-existent properties will contain default Java types (primitives) or empty string (string) or 01.01.1970 00:00 (date). | null |

| dimension | integer | Dimension of property. | 0 |

| drop_after_loading | boolean | [currently unsupported] indicating helper properties only used for aggregation, which are dropped after loading | false |

| field | value | Name of the JSON field holding the property data. Nesting is denoted by dot - separation. Field names containing dots are possible, in this case the dots need to be escaped using backslashes to resolve ambiguities. Only the exactly specified object are loaded, if they are non existent, the default value is used. | null |

| format | array of string | Array of formats of property. | [] |

| group_key | string | [currently unsupported] can only be used if the property / key is part of the grouping expression. | null |

| max_distinct_strings_per_pool | integer | [only relevant if string_pooling_strategy is indexed] Amount of distinct strings per property after which to stop pooling. If the limit is reached an exception is thrown. If set to null, the default value from the global PGX configuration will be used. | null |

| stores | array of object | A list of storage identifiers that indicate where this property resides. | [] |

| string_pooling_strategy | enum[indexed, on_heap, none] | Indicates which string pooling strategy to use. If set to null, the default value from the global PGX configuration will be used. | null |

Loading Configuration

The loading entry is a JSON object with the following fields:

Table 14-7 Loading Configuration

| Field | Type | Description | Default |

|---|---|---|---|

| create_key_mapping | boolean | If true, a mapping between entity keys and internal IDs is prepared during loading. | true |

| filter | string | [currently unsupported] the filter expression | null |

| grouping_by | array of string | [currently unsupported] array of edge properties used for aggregator. For Vertices, only the ID can be used (default) | [] |

| load_labels | boolean | Whether or not to load the entity label if it is available. | false |

| strict_mode | boolean | If true, exceptions are thrown and logged with ERROR level whenever loader encounters problems with input file, such as invalid format, repeated keys, missing fields, mismatches and other potential errors. If false, loader may use less memory during loading phase, but behave unexpectedly with erratic input files. | true |

Error Handling Configuration

The error_handling entry is a JSON object with the following fields:

Table 14-8 Error Handling Configuration

| Field | Type | Description | Default |

|---|---|---|---|

| on_missed_prop_key | enum[silent, log_warn, log_warn_once, error] | Error handling for a missing property key. | log_warn_once |

| on_missing_vertex | enum[ignore_edge, ignore_edge_log, ignore_edge_log_once, create_vertex, create_vertex_log, create_vertex_log_once, error] | Error handling for a missing source or destination vertex of an edge in a vertex data source. | error |

| on_parsing_issue | enum[silent, log_warn, log_warn_once, error] | Error handling for incorrect data parsing. If set to silent, log_warn or log_warn_once, will attempt to continue loading. Some parsing issues may not be recoverable and provoke the end of loading. | error |

| on_prop_conversion | enum[silent, log_warn, log_warn_once, error] | Error handling when encountering a different property type other than the one specified, but coercion is possible. | log_warn_once |

| on_type_mismatch | enum[silent, log_warn, log_warn_once, error] | Error handling when encountering a different property type other than the one specified, but coercion is not possible. | error |

| on_vector_length_mismatch | enum[silent, log_warn, log_warn_once, error] | Error handling for a vector property that does not have the correct dimension. | error |

Note:

The only supported setting for the on_missing_vertex error handling configuration is ignore_edge.
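An error_handling entry can be assembled and checked before it is written into a provider configuration. The Python sketch below is one way to do this; the error_handling helper is hypothetical, the allowed values are copied from Table 14-8, and on_missing_vertex is restricted to ignore_edge as stated in the note above.

```python
import json
from typing import Dict

LOG_LEVELS = frozenset({"silent", "log_warn", "log_warn_once", "error"})

ALLOWED_VALUES = {
    "on_missed_prop_key": LOG_LEVELS,
    # Per the note above, ignore_edge is the only supported setting here.
    "on_missing_vertex": frozenset({"ignore_edge"}),
    "on_parsing_issue": LOG_LEVELS,
    "on_prop_conversion": LOG_LEVELS,
    "on_type_mismatch": LOG_LEVELS,
    "on_vector_length_mismatch": LOG_LEVELS,
}


def error_handling(**settings: str) -> Dict[str, str]:
    """Validate explicitly given settings and return them as a JSON-ready dict."""
    for field, value in settings.items():
        if field not in ALLOWED_VALUES:
            raise ValueError(f"unknown error_handling field: {field}")
        if value not in ALLOWED_VALUES[field]:
            raise ValueError(f"unsupported value for {field}: {value}")
    return dict(settings)


provider = {
    "name": "Transfers",
    "format": "rdbms",
    "error_handling": error_handling(
        on_missing_vertex="ignore_edge",
        on_parsing_issue="log_warn_once",
    ),
}
print(json.dumps(provider, indent=2))
```

Only fields passed explicitly are validated and emitted; anything left out keeps whatever default the graph server applies.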

14.2.1.5 Data Loading Security Best Practices

Loading a graph from the database requires authentication and it is therefore important to adhere to certain security guidelines when configuring access to this kind of data source.

The following guidelines are recommended:

- The user or role used to access the data should be a read-only account that only has access to the required graph data.

- The graph data should be marked as read-only, for example, with non-updateable views in the case of the database.

14.2.1.6 Data Format Support Matrix

Learn about the different data formats supported in the graph server (PGX).

Note:

The table refers to limitations of the PGX implementation of the format and not necessarily to limitations of the format itself.

Table 14-9 Data Format Support Matrix

| Format | Vertex IDs | Edge IDs | Vertex Labels | Edge Labels | Vector properties |

|---|---|---|---|---|---|

| PGB | int, long, string | long | multiple | single | supported (vectors can be of type integer, long, float or double) |

| CSV | int, long, string | long | multiple | single | supported (vectors can be of type integer, long, float or double) |

| ADJ_LIST | int, long, string | Not supported | Not supported | Not supported | supported (vectors can be of type integer, long, float or double) |

| EDGE_LIST | int, long, string | Not supported | multiple | single | supported (vectors can be of type integer, long, float or double) |

| GRAPHML | int, long, string | Not supported | Not supported | Not supported | Not supported |

14.2.1.7 Immutability of Loaded Graphs

Once the graph is loaded into the graph server (PGX), the graph and its properties are automatically marked as immutable.

The immutability of loaded graphs is due to the following design choices:

- Typical graph analyses happen on a snapshot of a graph instance, and therefore they do not require mutations of the graph instance.

- Immutability allows PGX to use an internal graph representation optimized for fast analysis.

- In remote mode, the graph instance might be shared among multiple clients.

However, the graph server (PGX) also provides methods to customize and mutate graph instances for the purpose of analysis. See Graph Mutation and Subgraphs for more information.

14.2.2 Storing a Graph Snapshot on Disk

After reading a graph into memory, you can make any changes to the graph (such as running the PageRank algorithm and storing the values as vertex properties), and then store this snapshot of the graph on disk.

If you want to save the state of the graph in memory, then a snapshot of a graph can be saved as a file in binary format (PGB file).

In general, if you must shut down the graph server, then it is recommended that you store all the graph queries and analytics APIs that have been run on the graph. Once the graph server (PGX) is restarted, you can reload the graph and rerun the APIs.

However, if you must save the state of the graph, then the following example explains how to store the graph snapshot on disk.

As a prerequisite for storing the graph snapshot, you need to explicitly authorize access to the corresponding directories by defining a directory object pointing to the directory (on the graph server) that contains the files to read or write.

CREATE OR REPLACE DIRECTORY pgx_file_location AS '<path_to_dir>';

GRANT READ, WRITE ON DIRECTORY pgx_file_location TO GRAPH_DEVELOPER;

Also, note the following:

- The directory in the CREATE DIRECTORY statement must exist on the graph server (PGX).

- The directory must be readable (and/or writable) at the OS level by the graph server (PGX).

The preceding code grants the privileges on the directory to the GRAPH_DEVELOPER role. However, you can also grant permissions to an individual user:

GRANT READ, WRITE ON DIRECTORY pgx_file_location TO <graph_user>;

You can then run the following code to load a property graph view into the graph server (PGX) and save the graph snapshot as a file. Note that multiple PGB files will be generated, one for each vertex and edge provider in the graph.

opg4j> var graph = session.readGraphByName("BANK_GRAPH", GraphSource.PG_VIEW)

graph ==> PgxGraph[name=BANK_GRAPH,N=999,E=4993,created=1676021791568]

opg4j> analyst.pagerank(graph)

$8 ==> VertexProperty[name=pagerank,type=double,graph=BANK_GRAPH]

// Now save the state of this graph

opg4j> var storedPgbConfig = graph.store(ProviderFormat.PGB, "<path_to_dir>")

In a three-tier deployment, the file is written on the server-side file system. You must also ensure that the file location to write to is specified in the graph server (PGX) configuration. (As explained in Three-Tier Deployments of Oracle Graph with Autonomous Database, in a three-tier deployment, access to the PGX server file system requires a list of allowed locations to be specified.)


14.2.3 Publishing a Graph

You can publish a graph that can be referenced by other sessions.

Publishing a Single Graph Snapshot

The PgxGraph#publish() method can be used to publish the currently selected snapshot of the graph. The publish operation moves the graph name from the session-private namespace to the public namespace. If a graph with the same name has already been published, then the publish() method fails with an exception. Graphs published with snapshots and single published snapshots share the same namespace.

Table 12-6 describes the grants required to publish a graph.

Note that calling the publish() method without arguments publishes the snapshot with its persistent properties only. However, if you want to publish specific transient properties, then you must list them within the publish() call as shown:

opg4j> var prop1 = graph.createVertexProperty(PropertyType.INTEGER, "prop1")

opg4j> prop1.fill(0)

opg4j> var cost = graph.createEdgeProperty(PropertyType.DOUBLE, "cost")

opg4j> cost.fill(0d)

opg4j> graph.publish(List.of(prop1), List.of(cost))

VertexProperty<Integer, Integer> prop1 = graph.createVertexProperty(PropertyType.INTEGER, "prop1");

prop1.fill(0);

EdgeProperty<Double> cost = graph.createEdgeProperty(PropertyType.DOUBLE, "cost");

cost.fill(0d);

List<VertexProperty<Integer, Integer>> vertexProps = Arrays.asList(prop1);

List<EdgeProperty<Double>> edgeProps = Arrays.asList(cost);

graph.publish(vertexProps, edgeProps);

prop1 = graph.create_vertex_property("integer", "prop1")

prop1.fill(0)

cost = graph.create_edge_property("double", "cost")

cost.fill(0.0)

vertex_props = [prop1]

edge_props = [cost]

graph.publish(vertex_props, edge_props)

Publishing a Graph with Snapshots

If you want to make all snapshots of the graph visible to other sessions, then use the publishWithSnapshots() method. When a graph is published with snapshots, the GraphMetaData information of each snapshot is also made available to the other sessions, with the exception of the graph configuration, which is null.

When calling the publishWithSnapshots() method, all the persistent properties of all the snapshots are published and made visible to the other sessions. Transient properties are session-private and therefore they must be published explicitly. Once published, all properties become read-only.

Similar to publishing a single graph snapshot, the publishWithSnapshots() method moves the graph name from the session-private namespace to the public namespace. If a graph with the same name has already been published, then the publishWithSnapshots() method fails with an exception.

If you want to publish specific transient properties, you should list them within the publishWithSnapshots() call, as in the following example:

opg4j> var prop1 = graph.createVertexProperty(PropertyType.INTEGER, "prop1")

opg4j> prop1.fill(0)

opg4j> var cost = graph.createEdgeProperty(PropertyType.DOUBLE, "cost")

opg4j> cost.fill(0d)

opg4j> graph.publishWithSnapshots(List.of(prop1), List.of(cost))

VertexProperty<Integer, Integer> prop1 = graph.createVertexProperty(PropertyType.INTEGER, "prop1");

prop1.fill(0);

EdgeProperty<Double> cost = graph.createEdgeProperty(PropertyType.DOUBLE, "cost");

cost.fill(0d);

List<VertexProperty<Integer, Integer>> vertexProps = Arrays.asList(prop1);

List<EdgeProperty<Double>> edgeProps = Arrays.asList(cost);

graph.publishWithSnapshots(vertexProps, edgeProps);

Referencing a Published Graph from Another Session

You can reference a published graph by its name in another session, using the PgxSession#getGraph() method.

The following example references a published graph myGraph in a new session (session2):

opg4j> var session2 = instance.createSession("session2")

opg4j> var graph2 = session2.getGraph(Namespace.PUBLIC, "myGraph")

PgxSession session2 = instance.createSession("session2");

PgxGraph graph2 = session2.getGraph(Namespace.PUBLIC, "myGraph");

session2 = pypgx.get_session(session_name="session2")

graph2 = session2.get_graph("myGraph")

session2 can access only the published snapshot. If the graph has been published without snapshots, calling the getAvailableSnapshots() method returns an empty queue.

If the graph has been published with snapshots, then the call to getGraph() returns the most recent available snapshot. session2 can see all the available snapshots through the getAvailableSnapshots() method. You can then set a specific snapshot using the PgxSession#setSnapshot() method.

Note:

If a referenced graph is not required anymore, then it is important that you release the graph. See Deleting a Graph for more information.

Publishing a Property

After publishing (a single snapshot or all of them), you can still publish transient properties individually. Published properties are associated with the specific snapshot on which they are created, and are hence visible only on that snapshot.

opg4j> graph.getVertexProperty("prop1").publish()

opg4j> graph.getEdgeProperty("cost").publish()

graph.getVertexProperty("prop1").publish();

graph.getEdgeProperty("cost").publish();

graph.get_vertex_property("prop1").publish()

graph.get_edge_property("cost").publish()

Getting a Published Property in Another Session

Sessions referencing a published graph (with or without snapshots) can reference a published property through the PgxGraph#getVertexProperty and PgxGraph#getEdgeProperty methods.

opg4j> var session2 = instance.createSession("session2")

opg4j> var graph2 = session2.getGraph(Namespace.PUBLIC, "myGraph")

opg4j> var vertexProperty = graph2.getVertexProperty("prop1")

opg4j> var edgeProperty = graph2.getEdgeProperty("cost")

PgxSession session2 = instance.createSession("session2");

PgxGraph graph2 = session2.getGraph(Namespace.PUBLIC, "myGraph");

VertexProperty<Integer, Integer> vertexProperty = graph2.getVertexProperty("prop1");

EdgeProperty<Double> edgeProperty = graph2.getEdgeProperty("cost");

session2 = pypgx.get_session(session_name="session2")

graph2 = session2.get_graph("myGraph")

vertex_property = graph2.get_vertex_property("prop1")

edge_property = graph2.get_edge_property("cost")

Pinning a Published Graph

You can pin a published graph so that it remains published even if no session uses it.

opg4j> graph.pin()

graph.pin();

>>> graph.pin()

Unpinning a Published Graph

You can unpin a published graph that was earlier pinned. By doing this, you can remove the graph and all its snapshots, if no other session is using a snapshot of the graph.

opg4j> var graph = session.getGraph("bank_graph_analytics")

graph ==> PgxGraph[name=bank_graph_analytics,N=999,E=4993,created=1660217577201]

opg4j> graph.unpin()

PgxGraph graph = session.getGraph("bank_graph_analytics");

graph.unpin();

>>> graph = session.get_graph("bank_graph_analytics")

>>> graph.unpin()


14.2.4 Deleting a Graph

In order to reduce the memory usage of the graph server (PGX), the session must drop the unused graph objects created through the getGraph() method, by invoking the destroy() method.

Calling the destroy() method destroys not only the specified graph, but also all of its associated properties, including transient properties. In addition, all of the collections related to the graph instance (for example, a VertexSet) are also destroyed automatically. If a session holds multiple PgxGraph objects referencing the same graph, invoking destroy() on any of them invalidates all the PgxGraph objects referencing that graph, making any operation on those objects fail.

For example:

opg4j> var graph1 = session.getGraph("myGraphName")

opg4j> var graph2 = session.getGraph("myGraphName")

opg4j> graph2.destroy() // Delete graph2

opg4j> var properties = graph1.getVertexProperties() //throws an exception as graph1 reference is not valid anymore

opg4j> properties = graph2.getVertexProperties() //throws an exception as graph2 reference is not valid anymore

PgxGraph graph1 = session.getGraph("myGraphName");

// graph2 references the same graph as graph1

PgxGraph graph2 = session.getGraph("myGraphName");

// Delete graph2

graph2.destroy();

// Both the following calls throw an exception, as both references are not valid anymore

Set<VertexProperty<?, ?>> properties = graph1.getVertexProperties();

properties = graph2.getVertexProperties();

graph1 = session.get_graph("myGraphName")

# graph2 references the same graph as graph1

graph2 = session.get_graph("myGraphName")

# Delete graph2

graph2.destroy()

# Both the following calls throw an exception, as both references are not valid anymore

properties = graph1.get_vertex_properties()

properties = graph2.get_vertex_properties()

The same behavior occurs when multiple PgxGraph objects reference the same snapshot. Since a snapshot is effectively a graph, destroying a PgxGraph object referencing a certain snapshot invalidates all PgxGraph objects referencing the same snapshot, but does not invalidate those referencing other snapshots:

// Get a snapshot of "myGraphName"

PgxGraph graph1 = session.getGraph("myGraphName");

// graph2 and graph3 reference the same snapshot as graph1

PgxGraph graph2 = session.getGraph("myGraphName");

PgxGraph graph3 = session.getGraph("myGraphName");

// Assume another snapshot is created ...

// Make graph3 reference the latest snapshot available

session.setSnapshot(graph3, PgxSession.LATEST_SNAPSHOT);

graph2.destroy();

// Both the following calls throw an exception, as both references are not valid anymore

Set<VertexProperty<?, ?>> properties = graph1.getVertexProperties();

properties = graph2.getVertexProperties();

// graph3 is still valid, so the call succeeds

properties = graph3.getVertexProperties();

Note:

Even if a graph is destroyed by a session, the graph data may still remain in the server memory if the graph is currently shared by other sessions. In such a case, the graph may still be visible among the available graphs through the PgxSession.getGraphs() method.

As a safe alternative to the manual removal of each graph, the PGX API supports some implicit resource management features which allow developers to safely omit the destroy() call. See Resource Management Considerations for more information.


14.3 Keeping the Graph in Oracle Database Synchronized with the Graph Server

You can use the FlashbackSynchronizer API to automatically apply changes made to a graph in the database to the corresponding PgxGraph object in memory, thus keeping both synchronized.

This API uses Oracle's Flashback Technology to fetch the changes in the database since the last fetch and then push those changes into the graph server using the ChangeSet API. After the changes are applied, the usual snapshot semantics of the graph server apply: each delta fetch application creates a new in-memory snapshot. Any queries or algorithms that are executing concurrently to snapshot creation are unaffected by the changes until the corresponding session refreshes its PgxGraph object to the latest state by calling the session.setSnapshot(graph, PgxSession.LATEST_SNAPSHOT) method.

Also, if the changes from the previous fetch operation no longer exist, then the synchronizer throws an exception. This occurs if the time since the previous fetch is longer than the UNDO_RETENTION parameter setting in the database. To avoid this exception, fetch the changes at intervals shorter than the UNDO_RETENTION parameter value. The default setting for the UNDO_RETENTION parameter is 900 seconds. See Oracle Database Reference for more information.
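The interval requirement described above can be sketched with the standard Java scheduler. This is only a minimal illustration: the class name SyncIntervalSketch, the safetyFactor parameter, and the caller-supplied syncTask are hypothetical and are not part of the PGX API. The task is assumed to wrap whatever synchronizer call your application uses.

```java
import java.time.Duration;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical helper, not part of PGX: picks a fetch interval safely below
// the database UNDO_RETENTION value and schedules a caller-supplied task.
class SyncIntervalSketch {

    // Returns undoRetention scaled by safetyFactor (for example 0.5),
    // so that consecutive fetches stay well inside the retention window.
    static Duration chooseInterval(Duration undoRetention, double safetyFactor) {
        if (safetyFactor <= 0.0 || safetyFactor >= 1.0) {
            throw new IllegalArgumentException("safetyFactor must be between 0 and 1 (exclusive)");
        }
        long millis = (long) (undoRetention.toMillis() * safetyFactor);
        return Duration.ofMillis(Math.max(1L, millis));
    }

    // Runs syncTask immediately and then once per interval.
    // syncTask is assumed to perform one fetch-and-apply cycle.
    static ScheduledFuture<?> schedule(ScheduledExecutorService executor,
                                       Runnable syncTask,
                                       Duration interval) {
        return executor.scheduleAtFixedRate(syncTask, 0L, interval.toMillis(), TimeUnit.MILLISECONDS);
    }
}
```

With the default UNDO_RETENTION of 900 seconds and a safety factor of 0.5, chooseInterval returns 450 seconds. Note that a fixed-rate schedule only helps if each synchronization cycle itself finishes well within the interval.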

Prerequisites for Synchronizing

The Oracle database must have Flashback enabled and the database user that you use to perform synchronization must have:

- Read access to all tables which need to be kept synchronized.

- Permission to use flashback APIs. For example:

GRANT EXECUTE ON DBMS_FLASHBACK TO <user>

The database must also be configured to retain changes for the amount of time needed by your use case.

Types of graphs that can be synchronized

Not all PgxGraph objects in PGX can be synchronized. The following limitations apply:

- Only the original creator of the graph can synchronize it. That is, the current user must have the MANAGE permission of the graph.

- Only graphs loaded from database tables (PG View graphs) can be synchronized. Graphs created from other formats or graphs created via the graph builder API or PG View graphs created from database views cannot be synchronized.

- Only the latest snapshot of a graph can be synchronized.

Types of changes that can be synchronized

The synchronizer supports keeping the in-memory graph snapshot in sync with the following database-side modifications:

- insertion of new vertices and edges

- removal of existing vertices and edges

- update of property values of any vertex or edge

The synchronizer does not support schema-level changes to the input graph, such as:

- alteration of the list of input vertex or edge tables

- alteration of any columns of any input tables (vertex or edge tables)

Furthermore, the synchronizer does not support updates to vertex and edge keys.

For a detailed example, see the following topic:

- Synchronizing a PG View Graph

- Synchronizing a Published Graph


14.3.1 Synchronizing a PG View Graph

You can synchronize a graph loaded into the graph server (PGX) from a property graph view (PG View) with the changes made to the graph in the database.

14.3.2 Synchronizing a Published Graph

You can synchronize a published graph by configuring the Flashback Synchronizer with a PartitionedGraphConfig object containing the graph schema along with the database connection details.

A PartitionedGraphConfig object can be created either through the PartitionedGraphConfigBuilder API or by reading the graph configuration from a JSON file.

Although synchronization of graphs created via graph configuration objects is supported in general, the following limitations apply:

- Only partitioned graph configurations with all providers being database tables are supported.

- Each vertex or edge provider must specify the owner of the table by setting the username field. For example, if user SCOTT owns the table, then set the user name accordingly for the providers.

- Snapshot source must be set to CHANGE_SET.

- It is highly recommended to optimize the graph for update operations in order to avoid memory exhaustion when creating many snapshots.

The following example shows the sample configuration for creating the PartitionedGraphConfig object:

{

...

"optimized_for": "updates",

"vertex_providers": [

...

"username":"<username>",

...

],

"edge_providers": [

...

"username":"<username>",

...

],

"loading": {

"snapshots_source": "change_set"

}

}

GraphConfig cfg = GraphConfigBuilder.forPartitioned()

...

.setUsername("<username>")

.setSnapshotsSource(SnapshotsSource.CHANGE_SET)

.setOptimizedFor(GraphOptimizedFor.UPDATES)

...

.build();

opg4j> var graph = session.readGraphWithProperties("<path_to_json_config_file>")

graph ==> PgxGraph[name=bank_graph_analytics_fb,N=999,E=4993,created=1664310157103]

opg4j> graph.publishWithSnapshots()

PgxGraph graph = session.readGraphWithProperties("<path_to_json_config_file>");

graph.publishWithSnapshots();

>>> graph = session.read_graph_with_properties("<path_to_json_config_file>")

>>> graph.publish_with_snapshots()

You can now perform the following steps to synchronize the published graph using a graph configuration object which is built from a JSON file.

14.4 Optimizing Graphs for Read Versus Updates in the Graph Server (PGX)

The graph server (PGX) can store a graph in a representation optimized for either reads or updates. This is only relevant when the updates are made directly to a graph instance in the graph server.

Graph Optimized for Reads

Graphs optimized for reads provide the best performance for graph analytics and PGQL queries. In this case, updating the graph (adding or removing vertices and edges, or updating the property values of existing vertices or edges through the GraphChangeSet API) can have higher latency. Memory consumption can also be higher: when using graphs optimized for reads, each updated graph or graph snapshot consumes memory proportional to the size of the graph in terms of vertices and edges.

The optimized_for configuration property can be set to reads when loading the graph into the graph server (PGX) to create a graph instance that is optimized for reads.

Graph Optimized for Updates

Graphs optimized for updates use a representation enabling low-latency update of graphs. With this representation, the graph server can reach millisecond-scale latencies when updating graphs with millions of vertices and edges (this is indicative and will vary depending on the hardware configuration).

To achieve faster update operations, the graph server avoids, as much as possible, fully duplicating the previous graph (snapshot) to create a new graph (snapshot). This also reduces memory consumption in typical scenarios. New snapshots (or new graphs) only consume additional memory proportional to the memory required for the changes applied.

In this representation, there could be lower performance of graph queries and analytics.

The optimized_for configuration property can be set to updates when loading the graph into the graph server (PGX) to create a graph instance that is optimized for updates.
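The trade-off between the two representations can be pictured with a small conceptual model. The following sketch is not how the graph server stores graphs internally; the class DeltaSnapshotSketch and its methods are hypothetical and only illustrate the idea that a new snapshot can hold just the changed values while reads fall back to older snapshots.

```java
import java.util.HashMap;
import java.util.Map;

// Conceptual model only, not the PGX internal representation.
// Each snapshot stores only the property values changed since its parent.
class DeltaSnapshotSketch {
    private final DeltaSnapshotSketch parent; // null for the base snapshot
    private final Map<Integer, Double> changed = new HashMap<>();

    private DeltaSnapshotSketch(DeltaSnapshotSketch parent) {
        this.parent = parent;
    }

    // Creates the base snapshot holding a value for every vertex.
    static DeltaSnapshotSketch base(Map<Integer, Double> values) {
        DeltaSnapshotSketch snapshot = new DeltaSnapshotSketch(null);
        snapshot.changed.putAll(values);
        return snapshot;
    }

    // Creates a new snapshot whose extra memory is proportional to the updates.
    DeltaSnapshotSketch withUpdates(Map<Integer, Double> updates) {
        DeltaSnapshotSketch snapshot = new DeltaSnapshotSketch(this);
        snapshot.changed.putAll(updates);
        return snapshot;
    }

    // Reads walk back through older snapshots, which is why lookups
    // can be slower than on a fully materialized copy.
    Double get(int vertex) {
        for (DeltaSnapshotSketch s = this; s != null; s = s.parent) {
            Double value = s.changed.get(vertex);
            if (value != null) {
                return value;
            }
        }
        return null;
    }

    // Number of values stored by this snapshot alone.
    int ownEntries() {
        return changed.size();
    }
}
```

In this model, updating one vertex out of three yields a snapshot that stores a single entry, while the base snapshot remains unchanged and readable. A read-optimized design would instead copy all three values into each new snapshot.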


14.5 Executing Built-in Algorithms

The graph server (PGX) contains a set of built-in algorithms that are available as Java APIs.

The following table provides an overview of the available algorithms, grouped by category. Note that these algorithms can be invoked through the Analyst Class.

Note:

See the supported Built-In Algorithms on GitHub for more details.

Table 14-10 Overview of Built-In Algorithms

| Category | Algorithms |

|---|---|

| Classic graph algorithms | Prim's Algorithm |

| Community detection | Conductance Minimization (Soman and Narang Algorithm), Infomap, Label Propagation, Louvain |

| Connected components | Strongly Connected Components, Weakly Connected Components (WCC) |

| Link prediction | WTF (Whom To Follow) Algorithm |

| Matrix factorization | Matrix Factorization |

| Other | Graph Traversal Algorithms |

| Path finding | All Vertices and Edges on Filtered Path, Bellman-Ford Algorithms, Bidirectional Dijkstra Algorithms, Compute Distance Index, Compute High-Degree Vertices, Dijkstra Algorithms, Enumerate Simple Paths, Fast Path Finding, Fattest Path, Filtered Fast Path Finding, Hop Distance Algorithms |

| Ranking and walking | Closeness Centrality Algorithms, Degree Centrality Algorithms, Eigenvector Centrality, Hyperlink-Induced Topic Search (HITS), PageRank Algorithms, Random Walk with Restart, Stochastic Approach for Link-Structure Analysis (SALSA) Algorithms, Vertex Betweenness Centrality Algorithms |

| Structure evaluation | Adamic-Adar index, Bipartite Check, Conductance, Cycle Detection Algorithms, Degree Distribution Algorithms, Eccentricity Algorithms, K-Core, Local Clustering Coefficient (LCC), Modularity, Partition Conductance, Reachability Algorithms, Topological Ordering Algorithms, Triangle Counting Algorithms |

The following topics describe the use of the graph server (PGX) using Triangle Counting and PageRank analytics as examples.

- About Built-In Algorithms in the Graph Server (PGX)

- Running the Triangle Counting Algorithm

- Running the PageRank Algorithm


14.5.1 About Built-In Algorithms in the Graph Server (PGX)

The graph server (PGX) contains a set of built-in algorithms that are available as Java APIs. The details of the APIs are documented in the Javadoc that is included in the product documentation library. Specifically, see the BuiltinAlgorithms interface Method Summary for a list of the supported in-memory analyst methods.

For example, this is the PageRank procedure signature:

/**

* Classic pagerank algorithm. Time complexity: O(E * K) with E = number of edges, K is a given constant (max

* iterations)

*

* @param graph

* graph

* @param e

* maximum error for terminating the iteration

* @param d

* damping factor

* @param max

* maximum number of iterations

* @return Vertex Property holding the result as a double

*/

public <ID extends Comparable<ID>> VertexProperty<ID, Double> pagerank(PgxGraph graph, double e, double d, int max);
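To make the roles of the e, d, and max parameters in the signature above concrete, the following plain-Java sketch runs a textbook PageRank power iteration on an edge list. It is not the PGX implementation. The class PageRankSketch, the L1-norm stopping test, and the even redistribution of rank from vertices without outgoing edges are assumptions made for this illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Textbook PageRank in plain Java; illustrative only, not the PGX code.
class PageRankSketch {

    // edges[i] = {source, destination}; vertices are numbered 0..n-1.
    // e = maximum error for stopping, d = damping factor, max = iteration cap.
    static double[] pagerank(int n, int[][] edges, double e, double d, int max) {
        int[] outDegree = new int[n];
        for (int[] edge : edges) {
            outDegree[edge[0]]++;
        }
        double[] rank = new double[n];
        Arrays.fill(rank, 1.0 / n);

        for (int iteration = 0; iteration < max; iteration++) {
            // Assumption: rank held by vertices without out-edges is spread evenly.
            double danglingMass = 0.0;
            for (int v = 0; v < n; v++) {
                if (outDegree[v] == 0) {
                    danglingMass += rank[v];
                }
            }
            double[] next = new double[n];
            Arrays.fill(next, (1.0 - d) / n + d * danglingMass / n);
            for (int[] edge : edges) {
                next[edge[1]] += d * rank[edge[0]] / outDegree[edge[0]];
            }
            // Stop once the total change drops below the maximum error e.
            double diff = 0.0;
            for (int v = 0; v < n; v++) {
                diff += Math.abs(next[v] - rank[v]);
            }
            rank = next;
            if (diff < e) {
                break;
            }
        }
        return rank;
    }

    // Returns the ids of the k highest-ranked vertices, best first.
    static List<Integer> topK(double[] rank, int k) {
        List<Integer> ids = new ArrayList<>();
        for (int v = 0; v < rank.length; v++) {
            ids.add(v);
        }
        ids.sort((a, b) -> Double.compare(rank[b.intValue()], rank[a.intValue()]));
        return new ArrayList<>(ids.subList(0, Math.min(k, ids.size())));
    }
}
```

Each iteration touches every edge once, which matches the O(E * K) time complexity stated in the Javadoc comment. A smaller e or a larger max trades run time for a more converged result.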


14.5.2 Running the Triangle Counting Algorithm

For triangle counting, the sortByDegree boolean parameter of countTriangles() allows you to control whether the graph should first be sorted by degree (true) or not (false). If true, more memory will be used, but the algorithm will run faster; however, if your graph is very large, you might want to turn this optimization off to avoid running out of memory.

opg4j> analyst.countTriangles(graph, true)

==> 1

import oracle.pgx.api.*;

Analyst analyst = session.createAnalyst();

long triangles = analyst.countTriangles(graph, true);

The algorithm finds one triangle in the sample graph.
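The effect of a degree-based ordering can be illustrated outside of PGX with a small plain-Java sketch. This is not the countTriangles() implementation; the class TriangleCountSketch and its edge-list input are assumptions for this example. The sketch orients each edge from the lower-ranked to the higher-ranked endpoint, so that high-degree vertices keep few outgoing edges, at the cost of the additional ordering arrays.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative plain-Java sketch; this is not the PGX implementation.
class TriangleCountSketch {

    // Counts triangles in an undirected graph without duplicate edges.
    // Vertices are numbered 0..n-1 and edges are given as {a, b} pairs.
    static long countTriangles(int n, int[][] edges, boolean sortByDegree) {
        int[] degree = new int[n];
        for (int[] e : edges) {
            degree[e[0]]++;
            degree[e[1]]++;
        }

        // Processing order: ascending degree if requested, else vertex id.
        Integer[] order = new Integer[n];
        for (int v = 0; v < n; v++) {
            order[v] = v;
        }
        if (sortByDegree) {
            // The extra ordering data is the memory cost of the optimization.
            Arrays.sort(order, (a, b) -> Integer.compare(degree[a.intValue()], degree[b.intValue()]));
        }
        int[] rank = new int[n];
        for (int i = 0; i < n; i++) {
            rank[order[i].intValue()] = i;
        }

        // Orient every edge from the lower-ranked to the higher-ranked endpoint.
        List<List<Integer>> out = new ArrayList<>();
        for (int v = 0; v < n; v++) {
            out.add(new ArrayList<>());
        }
        for (int[] e : edges) {
            if (e[0] == e[1]) {
                continue; // ignore self-loops
            }
            if (rank[e[0]] < rank[e[1]]) {
                out.get(e[0]).add(e[1]);
            } else {
                out.get(e[1]).add(e[0]);
            }
        }

        // Each triangle is found exactly once, from its lowest-ranked vertex.
        long triangles = 0;
        boolean[] marked = new boolean[n];
        for (int u = 0; u < n; u++) {
            for (int v : out.get(u)) {
                marked[v] = true;
            }
            for (int v : out.get(u)) {
                for (int w : out.get(v)) {
                    if (marked[w]) {
                        triangles++;
                    }
                }
            }
            for (int v : out.get(u)) {
                marked[v] = false;
            }
        }
        return triangles;
    }
}
```

Both settings of sortByDegree return the same count in this sketch. The ordering only changes how much work the inner loops do on graphs with a few very high-degree vertices.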

Tip:

When using the graph shell, you can increase the amount of log output during execution by changing the logging level. For information about the :loglevel command, run :h :loglevel.


14.5.3 Running the PageRank Algorithm

PageRank computes a rank value between 0 and 1 for each vertex (node) in the graph and stores the values in a double property. The algorithm therefore creates a vertex property of type double for the output.

In the graph server (PGX), there are two types of vertex and edge properties:

- Persistent Properties: Properties that are loaded with the graph from a data source are fixed, in-memory copies of the data on disk, and are therefore persistent. Persistent properties are read-only, immutable and shared between sessions.

- Transient Properties: Values can only be written to transient properties, which are private to a session. You can create transient properties by calling createVertexProperty and createEdgeProperty on PgxGraph objects, or by copying existing properties using clone() on Property objects.

  Transient properties hold the results of computation by algorithms. For example, the PageRank algorithm computes a rank value between 0 and 1 for each vertex in the graph and stores these values in a transient property named pg_rank. Transient properties are destroyed when the Analyst object is destroyed.

This example obtains the top three vertices with the highest PageRank values. It uses a transient vertex property of type double to hold the computed PageRank values. The PageRank algorithm uses the following default values for the input parameters: error (tolerance = 0.001), damping factor = 0.85, and maximum number of iterations = 100.

opg4j> var rank = analyst.pagerank(graph, 0.001, 0.85, 100);

==> ...

opg4j> rank.getTopKValues(3)

==> 128=0.1402019732468347

==> 333=0.12002296283541904

==> 99=0.09708583862990475

import java.util.Map.Entry;

import oracle.pgx.api.*;

Analyst analyst = session.createAnalyst();

VertexProperty<Integer, Double> rank = analyst.pagerank(graph, 0.001, 0.85, 100);

for (Entry<Integer, Double> entry : rank.getTopKValues(3)) {

System.out.println(entry.getKey() + "=" + entry.getValue());

}

14.6 Using Custom PGX Graph Algorithms

A custom PGX graph algorithm allows you to write a graph algorithm in Java syntax and have it automatically compiled to an efficient parallel implementation.

- Writing a Custom PGX Algorithm

- Compiling and Running a Custom PGX Algorithm

- Example Custom PGX Algorithm: PageRank


14.6.1 Writing a Custom PGX Algorithm

A PGX algorithm is a regular .java file with a single class definition that is annotated with @GraphAlgorithm. For example:

import oracle.pgx.algorithm.annotations.GraphAlgorithm;

@GraphAlgorithm

public class MyAlgorithm {

...

}

A PGX algorithm class must contain exactly one public method which will be used as entry point. The class may contain any number of private methods.

For example:

import oracle.pgx.algorithm.PgxGraph;

import oracle.pgx.algorithm.VertexProperty;

import oracle.pgx.algorithm.annotations.GraphAlgorithm;

import oracle.pgx.algorithm.annotations.Out;

@GraphAlgorithm

public class MyAlgorithm {

public int myAlgorithm(PgxGraph g, @Out VertexProperty<Integer> distance) {

System.out.println("My first PGX Algorithm program!");

return 42;

}

}

Like a normal Java method, a PGX algorithm method can return a value, but only primitive data types are supported as return types (an integer in this example). More interesting is the @Out annotation, which marks the vertex property distance as an output parameter. The caller passes output parameters by reference. This way, the caller has a reference to the modified property after the algorithm terminates.


14.6.1.1 Collections

To create a collection you call the .create() function. For example, a VertexProperty<Integer> is created as follows:

VertexProperty<Integer> distance = VertexProperty.create();

To get the value of a property at a certain vertex v:

distance.get(v);

Similarly, to set the property of a certain vertex v to a value e:

distance.set(v, e);

You can even create properties of collections:

VertexProperty<VertexSequence> path = VertexProperty.create();

However, PGX Algorithm assignments are always by value (as opposed to by reference). To make this explicit, you must call .clone() when assigning a collection:

VertexSequence sequence = path.get(v).clone();

Another consequence of values being passed by value is that you can check for equality using the == operator instead of the Java method .equals(). For example:

PgxVertex v1 = G.getRandomVertex();

PgxVertex v2 = G.getRandomVertex();

System.out.println(v1 == v2);


14.6.1.2 Iteration

The most common operations in PGX algorithms are iterations (such as looping over all vertices, and looping over a vertex's neighbors) and graph traversal (such as breadth-first/depth-first). All collections expose a forEach and a forSequential method by which you can iterate over the collection in parallel and in sequence, respectively.

For example:

- To iterate over a graph's vertices in parallel:

G.getVertices().forEach(v -> { ... });

- To iterate over a graph's vertices in sequence:

G.getVertices().forSequential(v -> { ... });

- To traverse a graph's vertices from r in breadth-first order:

import oracle.pgx.algorithm.Traversal;

Traversal.inBFS(G, r).forward(n -> { ... });

Inside the forward (or backward) lambda you can access the current level of the BFS (or DFS) traversal by calling currentLevel().
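To make the notion of a traversal level concrete, here is a minimal plain-Java sketch of a breadth-first traversal that records the level at which each vertex is reached. It does not use the PGX Algorithm API. The class name, the adjacency-map representation, and the choice to number the root as level 0 are assumptions made for illustration.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper class, plain Java only (no PGX types involved).
class BfsLevelSketch {
    // Returns the BFS level of every vertex reachable from root.
    // Assumption of this sketch: the root itself is at level 0.
    static Map<Integer, Integer> levels(Map<Integer, List<Integer>> outNeighbors, int root) {
        Map<Integer, Integer> level = new HashMap<>();
        Deque<Integer> queue = new ArrayDeque<>();
        level.put(root, 0);
        queue.add(root);
        while (!queue.isEmpty()) {
            int n = queue.remove();
            // Level of the vertex currently being visited.
            int currentLevel = level.get(n);
            for (int m : outNeighbors.getOrDefault(n, List.of())) {
                if (!level.containsKey(m)) {
                    // First time m is seen: it lies one level below n.
                    level.put(m, currentLevel + 1);
                    queue.add(m);
                }
            }
        }
        return level;
    }
}
```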


14.6.1.3 Reductions

Within these parallel blocks it is common to atomically update, or reduce to, a variable defined outside the lambda. These atomic reductions are available as methods on Scalar<T>: reduceAdd, reduceMul, reduceAnd, and so on. For example, to count the number of vertices in a graph:

public int countVertices(PgxGraph G) {
    Scalar<Integer> count = Scalar.create(0);

    G.getVertices().forEach(n -> {
        count.reduceAdd(1);
    });

    return count.get();
}
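The same idea can be illustrated outside PGX with standard Java concurrency classes. The following is a sketch only, using a parallel stream and an AtomicInteger as stand-ins for the parallel forEach and the atomic reduceAdd; the class name and the use of an integer range in place of a vertex collection are assumptions.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.stream.IntStream;

// Hypothetical helper class, plain Java only (no PGX types involved).
class ReduceAddSketch {
    // Counts the elements of a range in parallel using an atomic add.
    static int countVertices(int numVertices) {
        AtomicInteger count = new AtomicInteger(0);
        // Each parallel task adds 1 atomically, so no update is lost.
        IntStream.range(0, numVertices).parallel().forEach(v -> count.addAndGet(1));
        return count.get();
    }
}
```

A plain int variable incremented from the lambda would not compile in Java, and a non-atomic shared counter could lose updates under parallel execution, which is why an atomic reduction is needed.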

Sometimes you want to update multiple values atomically. For example, you might want to find the smallest property value as well as the vertex whose property value attains this smallest value. Because of the parallel execution, two separate reduction statements might leave the two values in an inconsistent state.

To solve this problem the Reductions class provides argMin and argMax functions. The first argument to argMin is the current value and the second argument is the potential new minimum. Additionally, you can chain andUpdate calls on the ArgMinMax object to indicate other variables and the values that they should be updated to (atomically). For example:

VertexProperty<Integer> rank = VertexProperty.create();
int minRank = Integer.MAX_VALUE;
PgxVertex minVertex = PgxVertex.NONE;

G.getVertices().forEach(n ->
    argMin(minRank, rank.get(n)).andUpdate(minVertex, n)
);
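To show why the value and the vertex have to change together, here is a plain-Java sketch that keeps both in one immutable pair and swaps the pair atomically. It is not how PGX implements argMin; the class names, the array-based rank storage, and the use of -1 for "no vertex" are assumptions made for illustration.

```java
import java.util.concurrent.atomic.AtomicReference;
import java.util.stream.IntStream;

// Hypothetical helper class, plain Java only (no PGX types involved).
class ArgMinSketch {
    // Immutable pair, so the rank and the vertex are always replaced together.
    static final class Min {
        final int rank;
        final int vertex;

        Min(int rank, int vertex) {
            this.rank = rank;
            this.vertex = vertex;
        }
    }

    // Returns {minimum rank, index of a vertex holding it}; -1 stands in for "no vertex".
    static int[] argMin(int[] rank) {
        AtomicReference<Min> best = new AtomicReference<>(new Min(Integer.MAX_VALUE, -1));
        IntStream.range(0, rank.length).parallel().forEach(n ->
            // Atomically keep the candidate only if it is strictly smaller.
            best.accumulateAndGet(new Min(rank[n], n),
                (cur, cand) -> cand.rank < cur.rank ? cand : cur));
        Min m = best.get();
        return new int[] { m.rank, m.vertex };
    }
}
```

If the minimum and the vertex were stored in two separate atomic variables, another thread could update one between the two writes, which is the inconsistency that a combined update avoids.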


14.6.2 Compiling and Running a Custom PGX Algorithm

Note:

Compiling a custom PGX Algorithm using the PGX Algorithm API is not supported on Oracle JDK 17.

14.6.3 Example Custom PGX Algorithm: PageRank

The following is an implementation of PageRank as a PGX algorithm:

import oracle.pgx.algorithm.PgxGraph;
import oracle.pgx.algorithm.Scalar;
import oracle.pgx.algorithm.VertexProperty;
import oracle.pgx.algorithm.annotations.GraphAlgorithm;
import oracle.pgx.algorithm.annotations.Out;

@GraphAlgorithm
public class Pagerank {
    public void pagerank(PgxGraph G, double tol, double damp, int max_iter, boolean norm, @Out VertexProperty<Double> rank) {
        Scalar<Double> diff = Scalar.create();
        int cnt = 0;
        double N = G.getNumVertices();

        rank.setAll(1 / N);
        do {
            diff.set(0.0);
            Scalar<Double> dangling_factor = Scalar.create(0d);

            if (norm) {
                dangling_factor.set(damp / N * G.getVertices().filter(v -> v.getOutDegree() == 0).sum(rank::get));
            }

            G.getVertices().forEach(t -> {
                double in_sum = t.getInNeighbors().sum(w -> rank.get(w) / w.getOutDegree());
                double val = (1 - damp) / N + damp * in_sum + dangling_factor.get();
                diff.reduceAdd(Math.abs(val - rank.get(t)));
                rank.setDeferred(t, val);
            });
            cnt++;
        } while (diff.get() > tol && cnt < max_iter);
    }
}
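For readers who want to trace the arithmetic without a graph server, the following plain-Java sketch applies the same update rule sequentially on arrays. It is not the PGX implementation. The class name and the adjacency representation are assumptions, and writing to a separate next array is this sketch's way of modeling setDeferred, which is assumed here to make the new values visible only after the loop over all vertices has finished.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper class, plain Java only (no PGX types involved).
class PagerankSketch {
    // inNeighbors.get(t) lists the sources of edges pointing to t;
    // outDegree[w] is the number of outgoing edges of w.
    static double[] pagerank(List<List<Integer>> inNeighbors, int[] outDegree,
                             double tol, double damp, int maxIter, boolean norm) {
        int n = outDegree.length;
        double[] rank = new double[n];
        Arrays.fill(rank, 1.0 / n);
        double diff;
        int cnt = 0;
        do {
            diff = 0.0;
            double danglingFactor = 0.0;
            if (norm) {
                // Redistribute the rank held by vertices without outgoing edges.
                double danglingSum = 0.0;
                for (int v = 0; v < n; v++) {
                    if (outDegree[v] == 0) {
                        danglingSum += rank[v];
                    }
                }
                danglingFactor = damp / n * danglingSum;
            }
            // New values go to a separate array so every vertex reads the old ranks.
            double[] next = new double[n];
            for (int t = 0; t < n; t++) {
                double inSum = 0.0;
                for (int w : inNeighbors.get(t)) {
                    inSum += rank[w] / outDegree[w];
                }
                double val = (1 - damp) / n + damp * inSum + danglingFactor;
                diff += Math.abs(val - rank[t]);
                next[t] = val;
            }
            rank = next;
            cnt++;
        } while (diff > tol && cnt < maxIter);
        return rank;
    }
}
```

On a directed cycle every vertex keeps the initial rank 1/N, and with norm set to true the ranks continue to sum to 1 even when the graph contains vertices without outgoing edges.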


14.7 Creating Subgraphs

You can create subgraphs based on a graph that has been loaded into memory. You can use filter expressions or create bipartite subgraphs based on a vertex (node) collection that specifies the left set of the bipartite graph.

Note:

Starting from Graph Server and Client Release 22.3, creating subgraphs using filter expressions is deprecated. It is recommended that you load a subgraph from property graph views. See Loading a Subgraph from Property Graph Views for more information.

For the various methods to load a graph into the graph server (PGX), see Reading Graphs from Oracle Database into the Graph Server (PGX).

- About Filter Expressions

- Using a Simple Filter to Create a Subgraph

- Using a Complex Filter to Create a Subgraph

- Using a Vertex Set to Create a Bipartite Subgraph


14.7.1 About Filter Expressions

Filter expressions are expressions that are evaluated for each vertex or edge. The expression can define predicates that a vertex or an edge must fulfil in order to be contained in the result, in this case a subgraph.

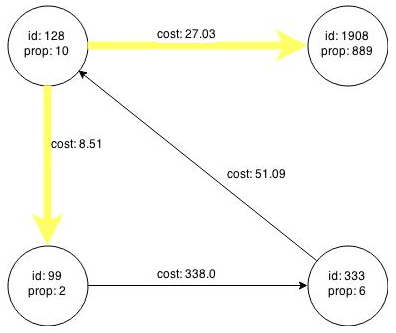

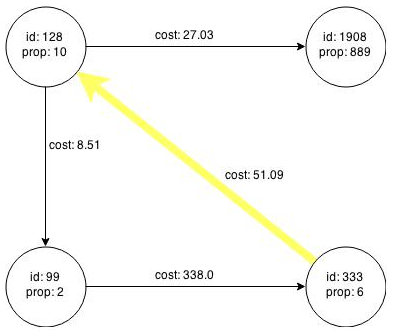

Consider an example graph that consists of four vertices (nodes) and four edges. For an edge to match the filter expression src.prop == 10, the prop property of its source vertex must equal 10. Two edges match that filter expression, as shown in the following figure.

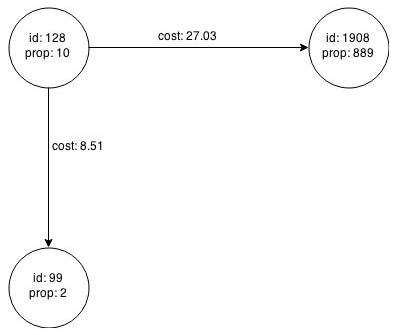

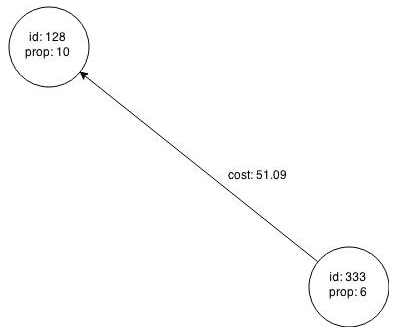

The following figure shows the graph that results when the filter is applied.

Figure 14-2 Graph Created by the Simple Filter


The vertex filter src.prop == 10 filters out the edges associated with vertex 333 and the vertex itself.
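The evaluation described above can be sketched in plain Java as a predicate applied to every edge. This is not the PGX filter engine. The class names, the vertex IDs 1 to 4, and the property values in the usage example are hypothetical and are not the values from the figures. The sketch also assumes that a vertex with no surviving edge is dropped from the result.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper class, plain Java only (no PGX types involved).
class EdgeFilterSketch {
    static final class Edge {
        final int src;
        final int dst;

        Edge(int src, int dst) {
            this.src = src;
            this.dst = dst;
        }
    }

    // Keeps the edges whose source vertex has prop equal to 10 (the expression src.prop == 10).
    static List<Edge> matchingEdges(List<Edge> edges, Map<Integer, Integer> prop) {
        List<Edge> kept = new ArrayList<>();
        for (Edge e : edges) {
            if (prop.get(e.src) == 10) {
                kept.add(e);
            }
        }
        return kept;
    }

    // Assumption of this sketch: the subgraph contains only the endpoints of kept edges.
    static Set<Integer> verticesOf(List<Edge> kept) {
        Set<Integer> vertices = new TreeSet<>();
        for (Edge e : kept) {
            vertices.add(e.src);
            vertices.add(e.dst);
        }
        return vertices;
    }
}
```

For a hypothetical graph with edges 1→2, 1→3, 3→4, and 4→1, where only vertex 1 has prop equal to 10, the two edges leaving vertex 1 are kept and vertex 4 drops out of the result.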


14.7.2 Using a Simple Filter to Create a Subgraph

The following examples create the subgraph described in About Filter Expressions.

Using the Shell to Create a Subgraph

var subgraph = graph.filter(new VertexFilter("vertex.prop == 10"))

Using Java to Create a Subgraph

import oracle.pgx.api.*;

import oracle.pgx.api.filter.*;

PgxGraph graph = session.readGraphWithProperties(...);

PgxGraph subgraph = graph.filter(new VertexFilter("vertex.prop == 10"));


14.7.3 Using a Complex Filter to Create a Subgraph

This example uses a slightly more complex filter. It uses the outDegree function, which calculates the number of outgoing edges for an identifier (source src or destination dst). The following filter expression matches all edges with a cost property value greater than 50 and a destination vertex (node) with an outDegree greater than 1.

dst.outDegree() > 1 && edge.cost > 50

One edge in the sample graph matches this filter expression, as shown in the following figure.

Figure 14-3 Edges Matching the outDegree Filter


The following figure shows the graph that results when the filter is applied. The filter excludes the edges associated with the vertices 99 and 1908, and so excludes those vertices also.

Figure 14-4 Graph Created by the outDegree Filter
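As a rough illustration of how such a combined predicate is evaluated, the following plain-Java sketch computes the out-degree of every vertex on the original graph and then tests each edge. It is not the PGX filter engine. The class names, vertex IDs, and cost values are hypothetical and are not the values from the figures.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper class, plain Java only (no PGX types involved).
class OutDegreeFilterSketch {
    static final class Edge {
        final int src;
        final int dst;
        final double cost;

        Edge(int src, int dst, double cost) {
            this.src = src;
            this.dst = dst;
            this.cost = cost;
        }
    }

    // Keeps edges matching dst.outDegree() > 1 && edge.cost > 50.
    static List<Edge> matchingEdges(List<Edge> edges) {
        // Out-degree is counted on the original graph, before any edge is removed.
        Map<Integer, Integer> outDegree = new HashMap<>();
        for (Edge e : edges) {
            outDegree.merge(e.src, 1, Integer::sum);
        }
        List<Edge> kept = new ArrayList<>();
        for (Edge e : edges) {
            if (outDegree.getOrDefault(e.dst, 0) > 1 && e.cost > 50) {
                kept.add(e);
            }
        }
        return kept;
    }
}
```

Both conditions must hold for the same edge: an expensive edge into a vertex with a single outgoing edge is rejected, as is a cheap edge into a vertex with several outgoing edges.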


14.7.4 Using a Vertex Set to Create a Bipartite Subgraph

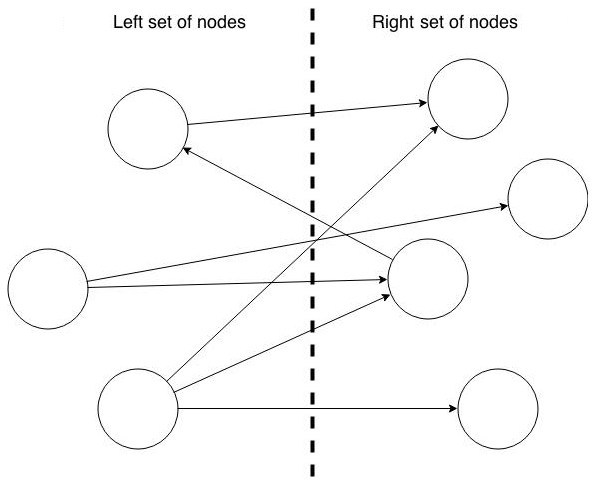

You can create a bipartite subgraph by specifying a set of vertices (nodes), which are used as the left side. A bipartite subgraph has edges only between the left set of vertices and the right set of vertices. There are no edges within those sets, such as between two nodes on the left side. In the graph server (PGX), vertices that are isolated because all incoming and outgoing edges were deleted are not part of the bipartite subgraph.

The following figure shows a bipartite subgraph. No properties are shown.

The following examples create a bipartite subgraph from a simple graph consisting of four vertices and four edges. The vertex ID values for the four vertices are 99, 128, 1908, and 333, respectively. See Figure 14-1 in About Filter Expressions for more information on the vertex and edge property values, including the edge direction between the vertices.

You must first create a vertex collection and fill it with the vertices for the left side. In the example shown, vertices with vertex ID values 333 and 99 are added to the vertex collection that forms the left side.

Using the Shell to Create a Bipartite Subgraph

opg4j> s = graph.createVertexSet()

==> ...

opg4j> s.addAll([graph.getVertex(333), graph.getVertex(99)])

==> ...

opg4j> s.size()

==> 2

opg4j> bGraph = graph.bipartiteSubGraphFromLeftSet(s)

==> PGX Bipartite Graph named sample-sub-graph-4

Using Java to Create a Bipartite Subgraph

import oracle.pgx.api.*;

VertexSet<Integer> s = graph.createVertexSet();

s.addAll(graph.getVertex(333), graph.getVertex(99));

BipartiteGraph bGraph = graph.bipartiteSubGraphFromLeftSet(s);

When you create a subgraph, the graph server (PGX) automatically creates a Boolean vertex (node) property that indicates whether the vertex is on the left side. You can specify a unique name for the property.

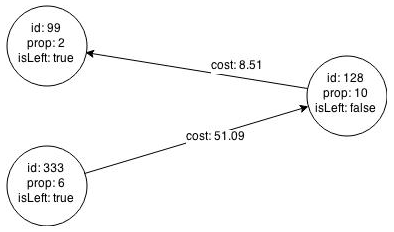

The resulting bipartite subgraph looks like this:

Vertex with ID 1908 is excluded from the bipartite subgraph. The only edge that connected that vertex extended from 128 to 1908. The edge was removed because it violated the bipartite properties of the subgraph. Vertex 1908 had no other edges, and so was removed as well. Moreover, the edge from the vertex with ID 128 to the vertex with ID 99 is not present in the bipartite subgraph, because edges are only allowed to go from left to right (and not from right to left).
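The rules described above (keep only left-to-right edges, then drop vertices left without any edge) can be sketched in plain Java as follows. This is not the PGX implementation of bipartiteSubGraphFromLeftSet. The class name, the int-array edge representation, and the vertex IDs in the usage example are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper class, plain Java only (no PGX types involved).
class BipartiteSketch {
    // Each edge is {source, destination}. An edge is kept only if it goes
    // from a vertex in the left set to a vertex outside the left set.
    static List<int[]> bipartiteEdges(List<int[]> edges, Set<Integer> left) {
        List<int[]> kept = new ArrayList<>();
        for (int[] e : edges) {
            if (left.contains(e[0]) && !left.contains(e[1])) {
                kept.add(e);
            }
        }
        return kept;
    }

    // Vertices that still have at least one edge; isolated vertices drop out.
    static Set<Integer> vertices(List<int[]> kept) {
        Set<Integer> result = new TreeSet<>();
        for (int[] e : kept) {
            result.add(e[0]);
            result.add(e[1]);
        }
        return result;
    }
}
```

For a hypothetical graph with edges 1→2, 4→2, 2→3, and 2→4 and the left set {1, 4}, the edges 1→2 and 4→2 are kept. The edge 2→3 connects two right-side vertices and the edge 2→4 runs from right to left, so both are removed, and vertex 3 is dropped because it has no remaining edge.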


14.8 Using Automatic Delta Refresh to Handle Database Changes

You can automatically refresh (auto-refresh) graphs periodically to keep the in-memory graph synchronized with changes to the property graph stored in the property graph tables in Oracle Database (VT$ and GE$ tables).

Note that the auto-refresh feature is not supported when loading a graph into PGX in memory directly from relational tables.

- Configuring the Graph Server (PGX) for Auto-Refresh

- Configuring Basic Auto-Refresh

- Reading the Graph Using the Graph Server (PGX) or a Java Application

- Checking Out a Specific Snapshot of the Graph

- Advanced Auto-Refresh Configuration

- Special Considerations When Using Auto-Refresh


14.8.1 Configuring the Graph Server (PGX) for Auto-Refresh

Because auto-refresh can create many snapshots and may therefore lead to high memory usage, the option to enable auto-refresh for graphs is by default available only to administrators.

To allow all users to auto-refresh graphs, add the following line to the graph server (PGX) configuration file (located in /etc/oracle/graph/pgx.conf):

{

"allow_user_auto_refresh": true

}


14.8.2 Configuring Basic Auto-Refresh

Auto-refresh is configured in the loading section of the graph configuration. The example in this topic sets up auto-refresh to check for updates every minute, and to create a new snapshot when the data source has changed.

The following block (JSON format) enables the auto-refresh feature in the configuration file of the sample graph:

{

"format": "pg",

"jdbc_url": "jdbc:oracle:thin:@mydatabaseserver:1521/dbName",

"username": "scott",

"password": "<password>",