45 Oracle Globally Distributed Database MAA Best Practices

Choosing Oracle Globally Distributed Database

Customers choose a distributed database architecture primarily for the following reasons:

- Scalability and agility (Hyperscaling)

- Ability to horizontally scale data, handling increasing data volumes and user loads by adding more shards (nodes) without any application changes

- Ability to add or drop shards with application transparency

- Each shard is open and active for the application.

-

High availability with always-on continuous database availability

-

Automatic failover and data replication across shards to ensure the database remains operational, even if some shards or nodes go down

- Fault tolerance and isolation across shards, including protection from data center, availability domain (AD), or availability zone (AZ) failures

- Failover in low single-digit seconds or extremely low Recovery Time Objective for database failures

- Zero data loss

- MAA observed less than 5 seconds of application impact for shard database instance or node failures. See High Availability Recommendations and Observations.

-

-

Seamless distributed application integration

- Distributed application is initially designed with a shard key for data operation, and queries may be adjusted to be shard scoped aware.

- No application changes are required while scaling or adjusting the number of database shards.

- From the perspective of an application, a distributed database looks like a single database; the number of shards and the distribution of data across those shards are completely transparent to the application.

-

Consistency and transaction support

- Support for consistent data reads/writes, with required levels of transactional guarantees (strong, eventual, and so on) across shards as needed by the application.

- External consistency for sharded applications is a property that guarantees transactions appear to execute as if they occurred one at a time, in an order that respects the real-time, or "wall-clock," order of their commits. This is a critical and challenging requirement for globally distributed and sharded databases, ensuring that all observers see the same, globally-consistent transaction history, regardless of which shard they interact with.

-

Ease of management and monitoring

- Simple operations for shard orchestration, monitoring, backup/restore, high availability, software updates, and scaling operations.

Oracle Globally Distributed Database 23ai with Raft replication provides all the above benefits.

MAA for Distributed Databases is a new class of MAA focused on providing high availability and application best practices to maximize availability and scalability.

Globally Distributed Database with Raft replication is Oracle's strategic and recommended Distributed Database Solution. For more information about Oracle Globally Distributed Database, see the Oracle Globally Distributed AI Database Guide.

MAA Best Practices for Oracle Globally Distributed Database with Raft Replication

The scope of this topic is MAA best practices for the Oracle Database 23ai feature, Oracle Globally Distributed Database with Raft replication, and does not cover alternative Oracle distributed database options that leverage Oracle Real Application Clusters, Oracle Data Guard, or Oracle GoldenGate.

Note: Applications running with existing MAA architectures or using various Oracle Database features such as Oracle Real Application Clusters, Data Guard, and Oracle GoldenGate should continue to use existing MAA best practices presented in other topics.

Oracle Maximum Availability Architecture (MAA) compiled best practices that covers the following topics:

- Architecture and Planning

- Network Considerations and Best Practices

- Application Best Practices

- Configuration Best Practices

- High Availability Recommendations and Observations

- Scaling Recommendations and Observations

- Software Update Recommendations and Observations

- Monitoring and Alerting

Architecture and Planning

Globally Distributed Database with Raft Replication Architecture Components

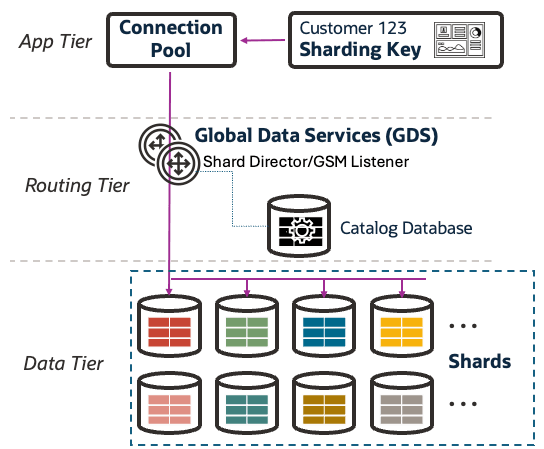

The following figure illustrates the major architectural components of Oracle Globally Distributed Database.

These components can be divided into three tiers for ease of understanding.

Figure 45-1 Oracle Globally Distributed Database Architecture

Application Tier

Oracle Globally Distributed Database provides benefits for a variety of applications. Such applications may encompass real-time OLTP systems, globally distributed applications, data streaming platforms, advanced analytics, and machine learning workloads.

Application deployment for a distributed database generally follows the same process as deployment for a regular Oracle Database. Applications connect to the distributed database using the Global Services defined by Global Data Services (GDS).

Oracle connection pools and drivers support Oracle Globally Distributed Database with high availability features to achieve less than 5 seconds of application impact for failures.

Application deployment and configuration best practices for Globally Distributed Database are explained in Application Best Practices

Routing Tier

Oracle Global Data Services (GDS) manages the routing tier in a distributed database . With the introduction of Oracle Database 23ai, GDS supports Oracle Global Distributed Database with Raft replication.

GDS provides the framework to manage the distributed database shards, Raft replication, Global Service Manager (GSM) instances, and global services using the GDSCTL utility.

A GSM in a distributed database context is known as a shard director. A shard director is a specific implementation of a GSM that acts as a regional listener for clients that connect to a distributed database. The shard director maintains a current topology map of the distributed database. Based on the sharding key passed during a connection request, the shard director routes the connections to the appropriate shard.

For a distributed database it is recommended that you configure three global service managers for each region. GDS uses a shard catalog database to store meta-data relating to the layout and status of the distributed database configuration. For maximum availability, Oracle recommends that the shard catalog database be deployed independently, and that Oracle's high-availability features, such as Oracle Real Application Clusters (Oracle RAC) and Oracle Data Guard, be used to protect the shard catalog database against outages.

Follow the MAA Global Data Services in Oracle Maximum Availability Architecture guidelines for implementation and configuration of GDS.

Data Tier

The data tier consists of the shards, which are Oracle databases deployed on separate hosts. All of the shards together make up a single logical database, called a distributed database. The shards do not share any software or hardware between them to provide fault isolation between other shards.

Shard databases can be deployed on any platform, and if deployed on Oracle Exadata Database Machine, they can greatly benefit from extremely low latency I/O. Shards on Exadata platform can also take advantage of Exadata smart scan and offload performance benefits as well Oracle Exadata's built in high availability and data protection features. Oracle Restart or Oracle Clusterware can provide the restart capabilities if a shard database instance or node goes down.

Each shard has multiple Replication Units (RUs) which are protected by Raft replication with one leader and multiple followers. Raft replication tries to maintain a balanced distribution of leaders and followers across shards. The application transparently writes and reads to the leaders RUs.

Raft replication is configured with a specific replication factor (RF) which determines the number of participants or number of replicas in a Raft group. This number includes the leader and its followers. By default Raft shards are deployed with RF 3. This means data or replication units will have three replicas spread across three shards.

For more details about configuring distributed database shards, see Create the Shard Databases.

Planning

Planning an Oracle Globally Distributed Database deployment with Raft replication requires careful consideration of several key factors.

Data Distribution Method

When Raft replication is selected as the replication method, a critical planning step is the selection of the data distribution strategy.

Begin with analyzing which data distribution method works best for your application. The data distribution method determines how the application schema tables are partitioned. The distribution method also controls the placement of data on the shards.

Raft replication supports system-managed (hash) and composite data distribution methods at this time.

See Data Distribution Methods for more details on each distribution method.

Replication

The replication strategy for a distributed database is chosen primarily based on the application's workload requirements, latency tolerance, and recovery objectives.

Raft replication provides synchronous data replication between the shards, high availability with multiple replicas, data consistency, and near zero recovery time from failures.

This document only covers Globally Distributed Database with Raft replication.

Topology

Proper placement of Globally Distributed Database components plays a key role in optimizing network latency, ensuring availability, and simplifying operations.

Consider the following while planning the placement of distributed database components:

- Deployment of shards across availability domains or zones to provide best fault isolation

- Ensure application response time is acceptable when using various database shards. An application can leverage any of the shards during failures or maintenance.

- Configure the shard catalog database with Oracle Data Guard to ensure high availability and data protection.

- Multiple shard directors (GSM instances) for high availability and load balancing

Capacity

Each shard should be sized with sufficient system resources to handle its portion of the workload, plus an additional 30% conservatively, to handle workload when at least one shard is down for maintenance or outage occurs. If the workload increases, resize accordingly.

While planning for network capacity, consider the bandwidth required for application data, inter shard communication, Raft replication traffic and backup data.

More details about capacity are discussed in Provision and Configure Hosts and Operating Systems.

Finally, establish a clear methodology for managing and operating the distributed environment, including monitoring and failover procedures.

Addressing these aspects during the planning phase ensures a more resilient, scalable, and efficient deployment.

Network Considerations and Best Practices

In Oracle Globally Distributed Database with Raft replication,network latency is an important factor in ensuring acceptable application performance while leveraging lightweight synchronous Raft replication. A transaction is only complete when a Raft leader and half of the followers write the changes to the Raft logs. Commit latency is influenced by the network round trip time between the Raft leader and the slowest follower participating in the quorum to complete the transaction.

A larger network latency could impact user transaction response time and can also influence failure detection and role transition timings.

The following are some of the MAA network best practices for Globally Distributed Database with Raft replication:

- Ensure that your application workload can be accommodated by available network bandwidth.

- Ensure that application performance requirements with synchronous Raft replication can be met given the latency between shards.

- Ensure a stable network connection between all shard replicas.

- Implement network redundancy to eliminate single points of failure. This can involve redundant network interfaces, switches, and potentially diverse network paths.

- Define SLOs: target commit latency, read latency, RPO=0, RTO for regional loss.

- Validate network latency between shards using

iperfororatcptesttools. - Implement the following network configuration best practices to improve

performance and minimize impact, especially if shards are in distant

proximity:

- For a network interface that sends or receives Raft replication logical change records, validate optimal MTU size. When sending replication data across geographical regions, experiments have shown that using MTU=9000 can benefit in some network topologies. Conduct performance tests with oratcptest and compare the results with default MTU and MTU=9000 settings. This may reduce some unnecessary network round trips which will increase overall throughput.

- Increasing maximum operating system socket buffer size can increase single process throughput by 2-8 times. Test with different socket buffer sizes on the Raft shard nodes to see what value yields the best results, for example, larger TCP socket buffer sizes of 64MB or 128MB. Bandwidth Delay Product is the product of the network link capacity of a channel and the round trip time, or latency. The minimum recommended value for socket buffer sizes is 3*BDP, especially for a high-latency, high-bandwidth network. Use oratcptest to tune the socket buffer sizes on the shard nodes.

- The SDU determines the maximum packet size for Oracle

Net Services communication. When replicating large

amounts of data, a larger SDU provides several

benefits. Set the SDU size in the

sqlnet.oraandtnsnames.orafiles on the shard database nodes. Set the SDU size to a higher value, for example, 65535, and validate with the application workload.

See Assessing and Optimizing Network Performance and Network Evaluation for detailed examples of network performance evaluation and optimization.

Application Best Practices

For OLTP applications, choose a sharding key that provides the best data distribution and avoids transactions spanning across multiple shards.

Direct routing to shards is the recommended application configuration to achieve the best performance. See Direct Routing to a Shard for routing details.

Use the latest Oracle 23ai UCP and JDBC drivers to achieve the best integration with Globally Distributed Database. The latest drivers are required to achieve the high availability and extremely low recovery times. For more details about connection pools, drivers, and API compatibility see APIs Supporting Direct Routing.

The table below explains important UCP parameters and recommended values based on MAA evaluation to achieve extremely low application brownout and blackout (less than 5 seconds) for various outages:

Note:

The values of certain parameters below may be adjusted to meet application SLA requirements.| UCP Connection Pool Parameter | Recommended Value | Notes |

|---|---|---|

initialPoolSize |

(Total number of App threads) * (Number of Shards) |

Set the initial pool size to the recommended value. Also Review the best practice guidelines for static connection pools from Oracle's Real-World Performance group in Optimizing Real-World Performance with Static Connection Pools. |

minPoolSize |

Same as initialPoolSize | For static connection pool configuration keep the value same as initial pool size. |

maxPoolSize |

Same as minPoolSize | Allows maximum connection pool size based on the application requirements to handle peak workload |

abandonedConnectionTimeout |

1 second |

Enables borrowed connections to be reclaimed by the connection pool after a connection has not been used for a specific amount of time. Default is 0 and feature is disabled. |

connectionValidationTimeout |

1 second |

This timeout refers to the maximum duration allowed for a connection validation operation when a connection is borrowed from the pool. If the validation is not completed within the specified period, the connection is considered invalid. Default is 15 seconds and a lower value is recommended for faster detection of hung connections improving availability. |

connectionWaitDuration |

1 second |

Specifies the maximum time, in seconds, that a request waits for an available connection from the pool. The value can also be specified in milliseconds for a more aggressive detection of connection availability. This parameter is new in Oracle 23ai and parameter

|

timeoutCheckInterval |

5 secs | Controls how frequently various timeout properties (such as abandoned connection timeout, time-to-live connection timeout, and inactive connection timeout) are enforced. |

inactiveConnectionTimeout |

120 secs | Specifies how long an available connection can remain idle before it is closed and removed from the pool. Default value is 0 and feature is disabled. |

The following table explains the JDBC properties and recommended values.

| JDBC Properties | Recommended Value | Notes |

|---|---|---|

FastFailover |

true | This property enables Fast Application Notification (FAN) aware, high-availability behavior so that connection pools can quickly detect and react to database node or service failures. |

TcpNoDelay |

true | When enabled, packets are sent immediately, without waiting to batch them. Can help reduce latency for OLTP applications. |

StatementCaching |

120 | When enabled, cached statements can be reused instead of being re-parsed by the database, which can greatly increase efficiency. |

FetchSize |

30 | Helps reduce network round trips. Adjust the value based on application requirements. |

ReadTimeout |

30 |

Defines the maximum time (in milliseconds) the JDBC driver waits when reading from the database socket. Adjust the value of this parameter according to application timeout requirements. |

oracle.jdbc.useShardingDriverConnection |

true | This JDBC property tells the Oracle JDBC driver to use the Sharding Driver instead of the standard JDBC Thin driver for the connection. The Sharding Driver is required when connecting to an Oracle Globally Distributed Database. |

oracle.jdbc.allowSingleShardTransactionSupport |

true |

When this property is set to 'true', the data source supports local transactions on a single shard when auto-commit is disabled. If set to 'false' transactions are started on the catalog database. |

The following table illustrates some of the errors which are raised to the application during switchover and failover of the Raft replication units and shards.

Applications need to implement exception handling for these errors and retry logic based on application-specific requirements to ensure continual operations.

| Exception Code | Error Message | Explanation |

|---|---|---|

| ORA-03974 | Cannot perform DML operations during switchover to shard <shard_id> for replication unit <ru_id> | Error is raised due to switchover of replication unit from one shard to another. |

| ORA-03838 | Cannot perform DML operations (<DML>) in a follower shard for replication unit <ru_id>, chunk ID <chunk_id> | Error is raised after switchover of replication unit . |

| ORA-03996 | Cannot perform DML operations during recovery of the leader for replication unit <ru_id> |

Error is raised if LCR apply is slow or incomplete on a follower shard, typically during a switchover or failover of the leader shard. This generally happens while performing DMLs with proxy routing and not direct routing of Applications. |

| ORA-05048 | Cannot commit transaction in a follower shard for replication unit <ru_id>, transaction ID <txn_id> | Error is raised due to failover of replication unit. |

| ORA-45582 | The chunk <chunk_id> was not found or has been moved to another shard | Error is raised due to switchover or move operation of a replication unit. |

| ORA-17008 | Closed connection | Error is typically raised during shard instance or node failure. |

| ORA-17002 | Network adapter could not establish connection | Error is raised due to shard node failure or network error. |

| ORA-01013 | User requested cancel of current operation. | When a read timeout or any other timeout fires, UCP cancels the ongoing query, and the caller receives ORA-01013. |

| UCP-29 | Failed to get a connection | Error is raised by UCP when it is unable to acquire a connection because a shard is unavailable due to an instance or node failure. |

Configuration Best Practices

Raft Logs Sizing and Configuration

In Raft replication, changes are captured as part of the user transactions and stored in a replication log, also known as Raft log. Similar to Online Redo Logs or Standby Redo Logs, Raft logs contain committed and uncommitted user transactions. A user transaction is considered committed on the leader when half of the followers have acknowledged the commit record and persisted it in their Raft logs.

Each replication unit is tied to a set of Raft logs and operating system processes that manage the Raft logs and replicate changes from the leader to the followers. This design enables multiple replication units to function independently and in parallel, both within a single shard and across multiple shards. It also provides flexibility to scale replication up or down by adjusting the number of Replication Units. This architecture not only improves concurrency and fault isolation but also enables elastic scalability and high performance.

Sizing and placement of Raft logs is critical to maintain predictable performance

and recovery of the shard databases. Raft logs are created alongside the

shard database files, controlled by the db_create_file_dest

parameter. Place Raft logs on an extremely low latency and high IOPs storage

tier for optimal performance.

By default each replication unit is created with three Raft logs which are rotated in a circular fashion. The Raft protocol ensures that followers receive log records in the exact order generated by the leader. In normal operation the followers receive a continuous stream of changes from the leader. If one of the followers is behind with the Raft replication, or restarted because of planned or unplanned outages, the leader sends the change records from the Raft logs once the replication processes are up on the follower's shard.

If a follower shard remains down for an extended period and the leader Raft logs

are rotated or overwritten due to application workload, the follower will be

unable to automatically resynchronize with the missed changes after it

restarts. In this situation a recovery of the replication units on the

follower shard is required, which is typically done using the COPY

RU GDSCTL command from another follower replication unit

which is consistent with the leader. It is best to avoid this situation due

to the overhead and time it takes to recover.

The following table provides guidelines for shard database instance parameters for managing Raft logs.

| Parameter | Default Value | Recommendation | Notes |

|---|---|---|---|

shard_raft_logfile_size |

1GB | Size Raft logs equal to shard database redo logs |

A recommended starting point is to size the Raft logs to match the size of the database redo logs. Size can be better estimated by analyzing the rotation time of each raft log during peak application workload. |

_shard_raft_log_retention_time_mins |

Null | 1440 (24h) | Set the Raft log retention window large enough to accommodate planned and unplanned outages of shards. Retaining Raft logs allows leaders to send changes to followers to automatically resync after an outage. |

_shard_max_num_raft_logfile |

10 | 32 |

This parameter controls the total number of

Raft logs per RU. Enforced when parameter

Setting the value to a higher number is recommended to accommodate peak workload and retention window. The maximum value is 32. Note: If the number of Raft logs is not large enough

to accommodate the retention time frame, Raft

stops the replication unit and raises error

|

Additional Configuration Best Practices

The following are additional configuration best practices for Globally Distributed Database with Raft Replication:

- Size shard database and Raft logs storage tier to accommodate sufficient

I/O capacity for:

- Application workload

- RMAN backup and remote restore operations for

MOVE RUorCOPY RUoperations. - Exadata provides additional I/O bandwidth, extreme low latency for commits, Exadata Smart Flash and Exadata Smart Offloading.

- Deploy multiple shard directors for high availability in each availability domain or zone.

- Configure Data Guard Fast-Start Failover for shard catalog database protection and availability.

- Deploy additional shards for flexibility, capacity management, and workload balancing.

- Size shards so that performance remains acceptable, even when one shard is down for maintenance or an outage occurs.

- Benchmark Raft synchronous replication performance under different workloads.

- Implement a mechanism to automatically detect Raft shard instance

failures and restart.

- Oracle Restart, Exadata or Oracle Clusterware provide these features and can also manage single instance databases.

- Place Raft shards across independent failure domains and availability domains to match your failure isolation goals and latency budget.

High Availability Recommendations and Observations

Validating the high availability and resiliency features of the Globally Distributed Database is essential. Oracle MAA has conducted rigorous and extensive testing of Globally Distributed Database with Raft replication and provides the following recommendations and considerations.

- Add alert and automatic restart capabilities for the shard database

instances after planned and unplanned outages.

Oracle Restart or Oracle Clusterware provides this functionality and works seamlessly for restarting local listener or shard database instance

- Evaluate the following outage scenarios to validate that your

application fails over transparently in your distributed

database:

- Shard database instance or node failure

- Shard restart to simulate a software update maintenance window

- Raft replication heartbeat loss timeout and election timeout values are

automatically managed by the database and should not be changed

using internal parameters unless directed by Oracle Support due to

extensive heartbeat failures.

The default heartbeat interval is between 150-300 milliseconds, with randomized election timeouts (up to 150 milliseconds) to prevent multiple shards from triggering elections at the same time.

Oracle MAA has observed the following during tests of distributed database Raft shards under database instance and shard node failure conditions.

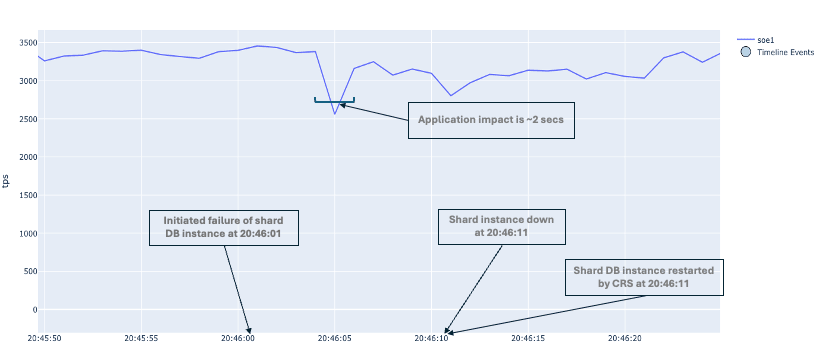

Shard Database Instance Failure

- Shard database instance failure impact is around two seconds with a brief application workload brownout.

- Leader RUs automatically fail over to the follower RUs on the surviving shards.

- Oracle Restart or Oracle Clusterware detects failure and automatically restarts the shard database instance.

- After restart, the follower RUs on the failed shard instance automatically resync with the replication by receiving changes from the leader RUs.

Figure 45-2 Application TPS impact during shard database instance failure

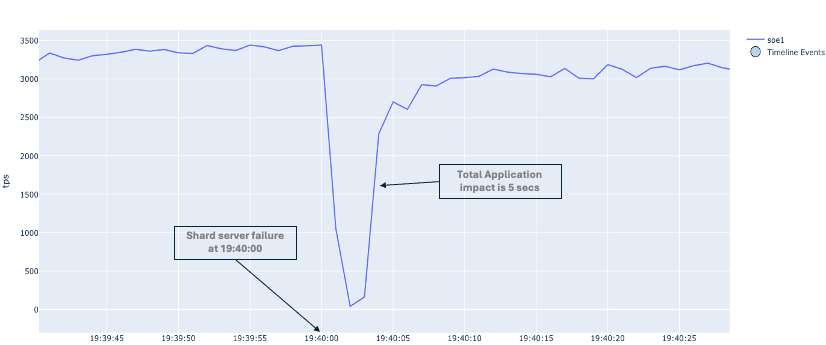

Shard Node Failure

- Shard node failure impact is typically less than five seconds with a larger application workload brownout for a short duration.

- Leader RUs automatically fail over to the follower RUs on the surviving shards.

- After a shard node recovers from failure, Oracle Restart or Oracle Clusterware automatically restarts the shard database instance and local listener.

- Following a restart, the follower RUs on the failed shard node automatically resync with the replication stream by applying changes received from the leader RUs.

Figure 45-3 Application TPS impact during shard node failure

Best Practices for Shard Database Instance and Node Failure

- Switch leader RUs back to the recovered shard at the next available opportunity to balance the application workload.

- Avoid longer duration outages for shard database instance and

nodes.

If a shard is down for longer and leader Raft logs are rotated, the failed shard will not be able to resync with the replication automatically. A recovery of all the follower RUs on the shard is required in this scenario. Automatic detection and restart of shards can help avoid this situation.

- Implement exception handling for specific error messages raised during shard database instance and node failures.

Scaling Recommendations and Observations

In a Globally Distributed Database with Raft replication, shards can be added or

removed from the configuration for scalability and load balancing. The

ADD SHARD or REMOVE SHARD

operation can be performed online with no database downtime. Raft

replication manages the data distribution and relocation automatically.

During the ADD SHARD process new RUs are created, and

CHUNK tablespaces from existing RUs are relocated

to new RUs. The new RUs are then moved to the shard being added.

Before a shard is removed, RUs need to be relocated to shards where an RU follower does not exist.

See Scaling with Raft Replication for detailed steps showing how to add or remove a shard from the distributed database configuration.

Oracle MAA has performed extensive testing of Raft shard scaling under various workloads and provides the following considerations and recommendations for shard scalability.

Recommendations for Adding Shards

- Evaluate network latency between the new and existing shards to quantify potential impact on application response time after replication units are moved to the new shard.

- Ensure there is sufficient I/O and CPU capacity to allow RU movement to new shard. RU move to new shard is performed using remote RMAN restore and recovery processes initiated from the new shard.

- Initiate the

ADD SHARDprocess during a low application activity or maintenance window to minimize impact. AMOVE RUoperation can potentially cause multiple brief application brownouts under high workload.

Recommendations for Removing Shards

- Ensure that the target shards have enough compute, storage, and IOPS capacity to absorb the RUs from the shard being removed.

- Check if the application workload will remain balanced across the surviving shards after redistribution of RUs.

- Initiate the REMOVE SHARD process during a low application activity or maintenance window to minimize impact. A MOVE RU operation can potentially cause multiple brief application brownouts under high workload.

Planned Maintenance or Software Update Recommendations and Observations

Planned maintenance, including software updates, can occur frequently for any of the Oracle Globally Distributed Database components.

The following MAA best practices and guidelines can provide zero application downtime during planned maintenance for Oracle Globally Distributed Database with Raft replication.

Multiple GSM instances deployed, following MAA best practices, can provide a seamless rolling software update experience for applications connecting to Globally Distributed Database using GDS.

For a shard catalog database deployed with Oracle RAC and Data Guard, following MAA high availability best practices enables zero-downtime maintenance.

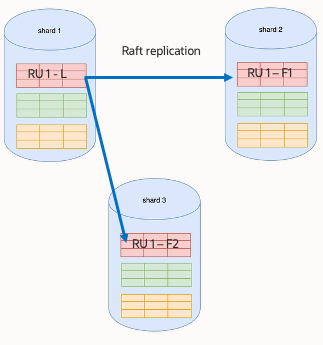

Replication Unit Leader Switchover

In a balanced configuration, all of the shard databases have one or more RU leaders, and applications are reading and writing to these.

It is highly recommended that you switch RU leaders from a shard before planned maintenance activity. Raft replication unit switchover can be done in a graceful manner with a specified timeout value to allow application transactions to complete on the shard before switching over and enabling the leadership of RU on a different shard.

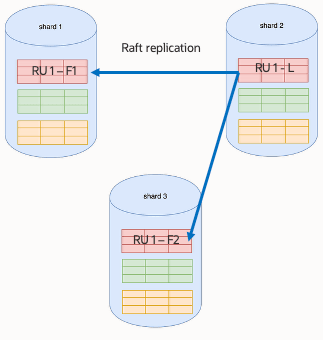

The following example workflow illustrates the switchover of an RU leader from one shard to another.

In this example a switchover of RU # 1 is performed from shard1 to shard2.

Before the switchover, the RU leader (RU1 - L) is on shard1 as shown here:

- Check the RU status to make sure the RU followers are in a

healthy

state.

GDSCTL> status ru -ru 1 Replication units ------------------------ Database RU# Role Term Log Index Status -------- --- ----- ---- --------- ----- shard1 1 Leader 155 123242128 Ok shard2 1 Follower 155 123242128 Ok shard3 1 Follower 155 123242128 Ok - Run the GDSCTL

SWITCHOVER RUcommand.This command changes which member (specified by the shard it resides on) of the RU is the leader. The

-timeoutvalue allows transactions to complete. The target shard RU will not be available for read-write until after the previous leader becomes read-only.GDSCTL> switchover ru -ru 1 -shard shard2 -timeout 1 Switchover process has been started Switchover process completed The operation completed successfullyAfter the switchover command completes, the RU #1 leader is on shard2, as shown here:

- Check the status of RU #

1.

GDSCTL> status ru -ru 1 Replication units ------------------------ Database RU# Role Term Log Index Status -------- --- ----- ---- --------- ----- shard1 1 Follower 155 123242128 Ok shard2 1 Leader 155 123242128 Ok shard3 1 Follower 155 123242128 Ok

Planned Maintenance Best Practices

Best practice guidelines for planned maintenance:

- Perform the maintenance on shards in a rolling fashion.

- Validate shards capacity to make sure that shards are able to

accommodate additional workload when RU leaders are switched

over from the shard going under maintenance.

- AHF,

oswatchertool, and other operating system monitoring commands can be used for capacity analysis.

- AHF,

- Check Raft replication apply lag and any apply

errors using GDSCTL commands or the dictionary views

specified in Globally Distributed Database Health Check and Monitoring on each shard.

- If there is a high Raft replication apply lag, replication unit switchover may take longer or fail.

- Address any apply lag issues before switching over replication units.

- Validate leadership status of the RUs on the shard going under

maintenance, and switch RU leaders to a different shard

database with sufficient capacity.

- Use GDSCTL

STATUS RUcommand on GDS to check the leadership status for RUs. See Globally Distributed Database Health Check and Monitoring - Always use the timeout option (1-2ms) with the

SWITCHOVER RUcommand to minimize application errors and impact.

- Use GDSCTL

- Perform a health check before bringing down the next shard

during rolling shard maintenance.

- After a shard comes back online, Raft replication automatically starts resyncing, but it can take some time to become current, depending on the number of changes and duration of outage.

- Validate the RU followers status and log index on the shard after it comes back online using the GDSCTL utility. The RU follower log index needs to be the same or very close to the leader log index for a follower to be current.

- Validate the apply lag for the replication units on the shard after it starts applying the Raft replication changes.

- Switch some of the RU members back to the updated shard to balance RU leaders and application workload while going through rolling maintenance of shards.

- Normalize and balance the remaining RUs at the end of the

rolling maintenance.

- For 3 shards and 6 replication units, a minimum of 10 switchovers are required for rebalancing, and 12 switchovers are required if rebalancing with affinity or putting RU leaders back on the original shard.

Rolling Shard Maintenance Example Workflow

The following example illustrates rolling shard maintenance for Globally Distributed Database with 3 shards and 6 RUs.

The shard names used in the example are shard1, shard2, and shard3. RU numbers in the example are 1 through 6.

In a balanced configuration, each shard hosts 2 RU leaders and 4 RU followers.

Perform the following steps to complete this task:

Step 1: Verify shard health and RU leadership status

Check the health and status of all the RUs across all shards using GDSCTL:

GDSCTL> status ru -sortRun the apply lag check query specified in Globally Distributed Database Health Check and Monitoring. to make sure there is no apply lag issue on any of the shards.

Proceed with the next steps only after verifying that all RUs report an OK status in the output of the STATUS RU command, and that the apply lag is within a few seconds.

Step 2: Switchover RU leaders from the first shard

On the first shard which is going under maintenance, check the RU leadership status and switchover RU leaders from the first shard to other shards.

GDSCTL> status ru -leaders -sort

Replication units

------------------------

Database RU# Role Term Log Index Status

-------- --- ---- ---- --------- ------

shard1 1 Leader 1 1 Ok

shard1 2 Leader 1 1 Ok

shard2 3 Leader 2 2 Ok

shard2 4 Leader 2 2 Ok

shard3 5 Leader 2 2 Ok

shard3 6 Leader 2 2 OkTo check the RU leader status only on shard1 run the below command:

GDSCTL> status ru -leaders -shard shard1Switchover all the RU leaders from shard1 to other shards using timeout option.

GDSCTL> switchover ru -ru 1 -shard shard2 -timeout 1

GDSCTL> switchover ru -ru 2 -shard shard3 -timeout 1Validate to make sure no RU leaders remain on the first shard.

GDSCTL> status ru -leaders -shard shard1Step 3: Perform maintenance on the first shard and restart

Shard1 can be shut down for maintenance and restarted once the activity is complete.

Step 4: Ensure that RU followers on the first shard resync with Raft replication

When the shard is brought back online, all RU members on it assume the follower role. These RU followers automatically receive updates from the RU leaders and begin resynchronization.

You must allow the RU followers to complete synchronization, ensuring their log index is aligned with, or very close to, those of the RU leaders before proceeding.

See Globally Distributed Database Health Check and Monitoring for more details about how to run the GDSCTL STATUS

RU command and explanation of the log index used by

the RUs.

GDSCTL> status ru -sort

GDSCTL> status ru -shard shard1Step 5: Switchover RU leaders from the second shard

The second shard should have 3 RU leaders, as one of the leaders was switched from shard1 in step 2.

Switchover all the RU leaders from shard2 to other shards.

GDSCTL> status ru -leaders -sort

Replication units

------------------------

Database RU# Role Term Log Index Status

-------- --- ---- ---- --------- ------

shard2 1 Leader 1 1 Ok

shard3 2 Leader 1 1 Ok

shard2 3 Leader 2 2 Ok

shard2 4 Leader 2 2 Ok

shard3 5 Leader 2 2 Ok

shard3 6 Leader 2 2 OkTo check the leader RU status only on shard2 run the below command:

GDSCTL> status ru -leaders -shard shard2Switchover all the RU leaders from shard2 to other shards using timeout option. RU # 1 should be switched back to shard1 so it can be relocated back to the original host.

GDSCTL> switchover ru -ru 1 -shard shard1 -timeout 1

GDSCTL> switchover ru -ru 3 -shard shard1 -timeout 1

GDSCTL> switchover ru -ru 4 -shard shard3 -timeout 1Validate to make sure no RU leaders remain on the second shard.

GDSCTL> status ru -leaders -shard shard2Step 6: Perform maintenance on the second shard and restart

Shard2 can be shut down for maintenance and restarted once the activity is complete.

Step 7: Ensure RU followers on the second shard resync with Raft replication

After maintenance on shard2, you must allow the RU followers to complete synchronization, ensuring their log index is aligned with, or very close to, those of the RU leaders before proceeding.

GDSCTL> status ru -sort

GDSCTL> status ru -shard shard2Step 8: Switchover RU leaders from the third shard

GDSCTL> status ru -leaders -sort

Replication units

------------------------

Database RU# Role Term Log Index Status

-------- --- ---- ---- --------- ------

shard1 1 Leader 1 1 Ok

shard3 2 Leader 1 1 Ok

shard1 3 Leader 2 2 Ok

shard3 4 Leader 2 2 Ok

shard3 5 Leader 2 2 Ok

shard3 6 Leader 2 2 OkTo check the RU leaders status only on shard3 run the below command:

GDSCTL> status ru -leaders -shard shard3Switchover all the RU leaders from shard3 to other shards using timeout option. RU # 2 leader should be switched back to shard1 so it is relocated back to the original host. RU # 4 leader should be switched back to shard2 so it is relocated back to the original host.

GDSCTL> switchover ru -ru 2 -shard shard1 -timeout 1

GDSCTL> switchover ru -ru 4 -shard shard2 -timeout 1

GDSCTL> switchover ru -ru 5 -shard shard1 -timeout 1

GDSCTL> switchover ru -ru 6 -shard shard2 -timeout 1Validate to make sure no RU leaders remain on the third shard.

GDSCTL> status ru -leaders -shard shard3Step 9: Perform maintenance on the second shard and restart

Shard3 can be shut down for maintenance and restarted once the activity is complete.

Step 10: Ensure RU followers on the third shard resync with Raft replication

After maintenance on shard3, you must allow the RU followers to complete synchronization, ensuring their log index is aligned with, or very close to, those of the RU leaders before proceeding.

GDSCTL> status ru -sort

GDSCTL> status ru -shard shard3Step 11: Rebalance RU leaders across all shards

Once maintenance is complete on all shards, the RU leaders should be rebalanced to their original locations to restore even distribution of load.

GDSCTL> status ru -leaders -sort

Replication units

------------------------

Database RU# Role Term Log Index Status

-------- --- ---- ---- --------- ------

shard1 1 Leader 1 1 Ok

shard1 2 Leader 1 1 Ok

shard1 3 Leader 2 2 Ok

shard2 4 Leader 2 2 Ok

shard1 5 Leader 2 2 Ok

shard2 6 Leader 2 2 OkSwitchover the RU leaders from shard1 and shard2 to shard3 to balance the RUs.

GDSCTL> switchover ru -ru 3 -shard shard2 -timeout 1

GDSCTL> switchover ru -ru 5 -shard shard3 -timeout 1

GDSCTL> switchover ru -ru 6 -shard shard3 -timeout 1Alternately the SWITCHOVER command can be used with the

-rebalance option to relocate all the RUs

back to their original shard location using a single command. The

SWITCHOVER -rebalance command is aware of

the original affinity of the RUs and issues multiple switchover

commands in the background.

GDSCTL> switchover ru -rebalanceValidate all the RUs are balanced after switchover completes.

GDSCTL> status ru -sort

GDSCTL> status ru -leaders -sortGlobally Distributed Database Health Check and Monitoring

- Raft replication processes information, and any specific error messages

are typically reported in the shard database instance alert log. But

there is some additional information logged in the shard database

instance debug log

debug_<sid>.loglocated in directory$DIAG_DIR/diag/rdbms/<dbname>/<sid_name>/log, for example:- Raft replication processes state change information.

- RU leader and follower heartbeat failures and role

transitions messages.

- For example, "SNR role change RU_ID 1. CANDIDATE. Reason heartbeat loss"

- Implement comprehensive network monitoring to track bandwidth

utilization, latency, and potential network issues that could impact

performance.

- Configure alerts to notify administrators of any network problems affecting Raft replication.

- Monitor RU health status and apply lag.

- Use shard database AWR/ASH reports for capacity tracking and performance troubleshooting. Establish run-books for Raft RU leader placement in a balanced configuration, and in case of shard addition and removal.

Below are some useful commands using the GDSCTL utility to check the overall health and status of RUs.

Example 45-1 Check the health of all of the replication units across all the shards

To check the health of all of the replication units across all the shards, run the below command.

The STATUS RU command shows the Status and Log Index of

each RU. The Log Index represents the latest Raft log entry index

that the RU has acknowledged. The RU leader assigns log entries and

replicates them to RU followers. The RU follower Log Index is same

or very close to the RU leader Log Index under normal

operations.

GDSCTL> status ru -sortExample 45-2 Check the status of all the replication unit leaders

GDSCTL> status ru -leaders -sortExample 45-3 Check the CHUNK allocation to all the replication units

GDSCTL> status ru -show_chunks -sortExample 45-4 Check the detailed status of a replication unit and any errors

GDSCTL> status ru -ru 1

GDSCTL> ru -ru 1 -show_errorsExample 45-5 Check the status of any in progress GDS tasks or jobs

GDSCTL> config taskExample 45-6 Cancel or resume any GDS task

GDSCTL> alter task -resume -task <no>

GDSCTL> alter task -cancel -task <no>Some useful shard database queries:

Example 45-7 Check the apply lag

To check apply lag, log in to the shard PDB as a privileged user and execute the following SQL:

SELECT

ru_id,

state,

apply_name,

case

when (hwm_lcr_create_time > hwm_lcr_create_time) then 0

else NVL(( hwm_time - hwm_lcr_create_time ) * 86400, 0) end as latency_in_seconds,

to_char(hwm_lcr_create_time, 'HH24:MI:SS MM/DD/YY') AS message_creation_time,

to_char(hwm_time, 'HH24:MI:SS MM/DD/YY') AS apply_time,

hwm_lcr_log_index,

processed_lcr_log_index

FROM

v$shard_apply_coordinator;Example 45-8 Check transport lag

To check the transport lag, log in to the shard PDB as a privileged user and execute the following SQL:

SELECT

lcr_prdcr.ru_id AS ru_id,

ntwk_sndr.remote_peer_id as remote_peer_id,

'' as follower_shard,

ntwk_sndr.startup_time AS network_sender_startup,

lcr_prdcr.startup_time AS lcr_prdcr_startup,

ntwk_sndr.state AS network_sender_state,

lcr_prdcr.state AS lcr_prdcr_state,

lcr_prdcr.flwctrl_peers as flwctrl_peers,

case

when (ntwk_sndr.last_sent_lcr_time > lcr_prdcr.last_enqueued_lcr_time) then 0

else nvl( abs(lcr_prdcr.last_enqueued_lcr_time - ntwk_sndr.last_sent_lcr_time) * 86400, 0) end AS transport_lag_seconds,

( lcr_prdcr.last_enqueued_lcr_log_index - last_sent_lcr_log_index ) AS transport_lag_logindexes,

ntwk_sndr.elapsed_propagation_time elapsed_propagation_time,

ntwk_sndr.connect_error_count connect_error_count

FROM

v$shard_lcr_producer lcr_prdcr,

v$shard_network_sender ntwk_sndr

WHERE

lcr_prdcr.ru_id = ntwk_sndr.ru_id;Example 45-9 Check the status of the Raft logs for all the replication units

To check the status of the Raft logs for all the replication units, log in to the shard PDB and execute the below SQLs.

The CURR_FILE column with value 'Y' indicates which Raft

log is the current file for each replication unit.

set lines 200 pages 200

col name for a95

col start_time_timestamp for a35

select ru_id, name, rotd_idx, size_gb, curr_file, timestamp'1970-01-01 00:00:00' + numtodsinterval ( start_sec, 'second' ) start_time_timestamp

from v$shard_lcr_logs order by ru_id, curr_file, rotd_idx;

set lines 200 pages 200

col name for a95

col start_time_timestamp for a35

select ru_id, rotd_idx, size_gb, curr_file, STIDX_LOW, STIDX_HIGH, ENDIDX_LOW, ENDIDX_HIGH, timestamp'1970-01-01 00:00:00' + numtodsinterval ( start_sec, 'second' ) start_time_timestamp

from v$shard_lcr_logs order by ru_id, curr_file, rotd_idx;