Chunk Management

You can manage chunks in your deployment with Oracle Enterprise Manager Cloud Control and GDSCTL.

Resharding and Hot Spot Elimination

The process of redistributing data between shards, triggered by a change in the number of shards, is called resharding. Automatic resharding is a feature of the system-managed sharding method that provides elastic scalability of a distributed database.

Sometimes data in a distributed database needs to be migrated from one shard to another. Data migration across shards is required in the following cases:

-

When one or multiple shards are added to or removed from a distributed database

-

When there is skew in the data or workload distribution across shards

The unit of data migration between shards is the chunk. Migrating data in chunks guaranties that related data from different sharded tables are moved together.

When a shard is added to or removed from a distributed database, multiple chunks are migrated to maintain a balanced distribution of chunks and workload across shards.

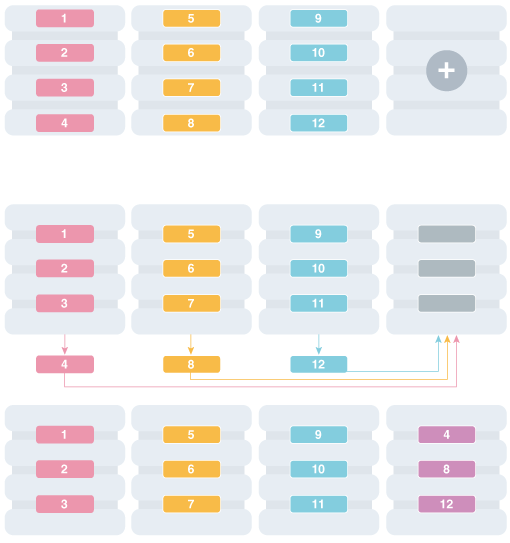

Depending on the sharding method, resharding happens automatically (system-managed) or is directed by the user (composite). The following figure shows the stages of automatic resharding when a shard is added to a distributed database with three shards.

Figure 9-7 Resharding a Distributed Database

Description of "Figure 9-7 Resharding a Distributed Database"

A particular chunk can also be moved from one shard to another, when data or workload skew occurs, without any change in the number of shards. In this case, chunk migration can be initiated by the database administrator to eliminate the hot spot.

RMAN Incremental Backup, Transportable Tablespace, and Oracle Notification Service technologies are used to minimize impact of chunk migration on application availability. A chunk is kept online during chunk migration. There is a short period of time (a few seconds) when data stored in the chunk is available for read-only access only.

FAN-enabled clients receive a notification when a chunk is about to become read-only in the source shard, and again when the chunk is fully available in the destination shard on completion of chunk migration. When clients receive the chunk read-only event, they can either repeat connection attempts until the chunk migration is completed, or access the read-only chunk in the source chunk. In the latter case, an attempt to write to the chunk will result in a run-time error.

Note:

Running multi-shard queries while a distributed database is resharding can result in errors, so it is recommended that you do not deploy new shards during multi-shard workloads.

Moving Chunks

Sometimes it becomes necessary to move a chunk from one shard to another. To maintain scalability of the distributed database environment, it is important to attempt to maintain an equal distribution of the load and activity across all shards.

As the environment matures in a composite distributed database, some shards may become more active and have more data than other shards. In order to keep a balance within the environment you must move chunks from more active servers to less active servers. There are other reasons for moving chunks:

-

When a shard becomes more active than other shards, you can move a chunk to a less active shard to help redistribute the load evenly across the environment.

-

When using range, list, or composite sharding, and you are adding a shard to a shardgroup.

-

When using range, list, or composite sharding, and you a removing a shard from a shardgroup.

-

After splitting a chunk it is often advisable to move one of the resulting chunks to a new shard.

When moving shards to maintain scalability, the ideal targets of the chunks are shards that are less active, or have a smaller portion of data. Oracle Enterprise Manager and AWR reports can help you identify the distribution of activity across the shards, and help identify shards that are good candidates for chunk movement.

Note:

Any time a chunk is moved from one shard to another, you should make a full backup of the databases involved in the operation (both the source of the chunk move, and the target of the chunk move.)

You can manage chunks using GDSCTL or Oracle Enterprise Manager Cloud Control:

-

Oracle Database Global Data Services Concepts and Administration Guide for information about using the

GDSCTL MOVE CHUNKcommand - Moving Chunks

Updating an In-Process Chunk Move Operation

While a MOVE CHUNK operation is in process, you can use the

GDSCTL ALTER MOVE command to suspend, resume, or cancel any or all

chunks scheduled to be moved (where the move is not yet started) in the

operation.

There are three variations on this command: -SUSPEND is used to

postpone chunk migration operation, -RESUME is used to restart the

move process, and -CANCEL cancels chunk migration.

In addition, the -CHUNK and -SHARD options are used

to filter the list of scheduled chunk moves. You can use the CONFIG CHUNKS

-SHOW_RESHARD command to get a list of scheduled chunk moves.

Suspending Chunk Moves

ALTER MOVE -SUSPEND postpones chunk migration for a

specified scope until you wish resume or cancel the operation. The shards on which

to suspend operation must be specified, and you can list source and target shards.

You can also specify a list of specific chunks to suspend.

If any chunk in the defined scope is already being moved (any state other than "scheduled"), that chunk will not be suspended.

For example, the following command suspends all scheduled chunk moves to or from shard1.

GDSCTL> alter move -suspend -shard shard1Restarting Chunk Moves

ALTER MOVE -RESUME resets any "move failed" flags on

specified shards, and restarts any stalled or suspended chunk moves.

You can optionally provide a list of source and target shards that will have their "move failed" flags reset before the moves restart. If no shards are specified, the suspended moves are restarted once any moves in process are complete.

For example, the following command restarts chunk moves on any suspended or "failed" chunk moves scheduled to or from shard1.

GDSCTL> alter move -resume -shard shard1Canceling Chunk Moves

ALTER MOVE -CANCEL removes specified chunks from the move chunk

schedule.

The -CHUNK option specifies that all listed chunks will be removed

from the schedule, and -SHARD specifies that all chunk moves

to/from this database will be removed from the schedule. If no chunks or shards are

specified, then all chunk moves not already in process are canceled.

If any chunk in the defined scope is currently being moved (any state other than "scheduled"), that chunk move will not be canceled.

Chunks that are canceled cannot be resumed/restarted. You must issue a new

MOVE CHUNK command to move these chunks.

For example, the following command removes chunks 1, 2, and 3 from the chunk move schedule, if they are not already being moved.

GDSCTL> alter move -cancel -chunk 1,2,3Splitting Chunks

Splitting a chunk in a distributed database is required when chunks become too big, or only part of a chunk must be migrated to another shard.

Oracle Globally Distributed Database supports the online split of a chunk. Theoretically it is possible to have a single chunk for each shard and split it every time data migration is required. However, even though a chunk split does not affect data availability, the split is a time-consuming and CPU-intensive operation because it scans all of the rows of the partition being split, and then inserts them one by one into the new partitions. For composite sharding, it is time consuming and may require downtime to redefine new values for the shard key or super shard key.

Therefore, it is recommended that you pre-create multiple chunks on each shard and split them either when the number of chunks is not big enough for balanced redistribution of data during re-sharding, or a particular chunk has become a hot spot.

Even with system-managed sharding, a single chunk may grow larger than other chunks or may become more active. In this case, splitting that chunk and allowing automatic re-sharding to move one of the resulting chunks to another shard maintains a more equal balanced distribution of data and activity across the environment.

Oracle Enterprise Manager heat maps show which chunks are more active than other chunks. Using this feature will help identify which chunks could be split, and one of the resulting chunks could then be moved to another shard to help rebalance the environment.

You can manage chunks using GDSCTL or Oracle Enterprise Manager Cloud Control:

-

Oracle Database Global Data Services Concepts and Administration Guide for information about using the

GDSCTL SPLIT CHUNKcommand - Splitting Chunks with Oracle Enterprise Manager Cloud Control

Splitting Chunks into Shardspaces Based on Super Key

In a distributed database using the composite sharding data distribution method, the data can be organized into different shardspaces based on super shard key column values. You can split the existing data chunks per super shard key values into new shardspaces.

Splitting chunks by super sharding key is a unique operation of partition/chunk split which also requires data reorganization and movement. Many internal steps during the course of this operation make use of online DDL support so that your application can stay online during a reshard operation.

Use Case

For example, you have a distributed database with tables Customers, Orders, and Lineitems, which are related to each other

through the customer_id as reference key and sharding key. The distributed database uses the composite sharding data distribution method with Class for customers as

the super shard key.

You want to arrange the location of the data by customer Class so that in the future, the data can be rearranged to provide different levels of service or resources to premium Class customers.

Due to business needs, a requirement arises for a new shardspace where data for only Gold and Silver Class customers reside in the future, though the data is not currently distributed that way. For example, there could be additional reporting or business services or data security services offered only to premium customers for additional costs, and segregating these customers in a different shardspace hosted in a different availability domain or region in the cloud makes it more convenient. Or, there is more efficient, but expensive hardware which can host only few shards and not all of the shards, so you want to allot those to the premium classes.

Splitting the Data into Shardspaces Based on Super Key

A PARTITIONSET operator, SPLIT, is introduced to

support splitting chunks by super sharding key, as shown in this ALTER

TABLE syntax.

ALTER TABLE tablename

SPLIT PARTITIONSET partitionset_name

INTO

(partitionset partitionset_name

values [(list of values)] | [LESS THAN (value)]

[lob_column1 store as (tablespace set_name1),

lob_column2 store as (tablespace set_name2) … ]

[[SUBPARTITIONS store in (<tablespace set_name1,

tablespace set_name2, …]|[tablespace_set

tablespaceset_name]],

partitionset partitionset_name

[lob_column1 store as (tablespace set_name1),

lob_column2 store as (tablespace set_name2) … ]

[[SUBPARTITIONS store in (<tablespace set_name1,

Tablespace set_name2, …]|[tablespace_set

tablespaceset_name]]) ;

For example, to split a customers partitionset into Gold customers and non-Gold customers:

ALTER TABLE customers

SPLIT PARTITIONSET all_customers

INTO (PARTITIONSET gold_customers

VALUES (‘gold’)

TABLESPACE SET ts2,

PARTITIONSET non_gold_customers)

As shown above, the command resembles ALTER TABLE SPLIT

PARTITION; however, there are certain rules for this syntax in the

SPLIT PARTITIONSET case as follows.

-

The number of shards in the target shardspace must be the same as the source shardspace.

-

The target shardspace must be clean and not have any chunks.

-

The number of chunks in the target shardspace must be the same as the source shardspace.

-

Only two resulting partitionsets are allowed from one source partitionset. So the command can have at most 3 partitionsets specified.

-

You can specify the

STORAGEclauseorTABLESPACE SETclause with only one resulting partitionset and not both. -

Whichever partitionset has a

TABLESPACE SETorSTORAGEclause is considered the target partitionset; that is, the new partitionset on a different shardspace. All of the tablespace sets specified in the clause need to be on the target, or new shardspace. -

PARTITIONSETwithoutTABLESPACE SETclause is considered local; that is, it will be on the same shardspace the as existing source shardspace. -

The

VALUESclause must always be specified with the firstPARTITIONSETin the list. This enables either the upper or lower side of the range to be moved to the target shardspace in case of range partitioning for partitionsets. This clause can’t be specified or is implicit for the second resulting partitionset. -

You cannot use the

DEPENDENT TABLESclause to set specific properties for dependent tables when you issueSPLIT PARTITIONSET. -

Resulting partitionsets must have distinct names.

Note that in case of range partitioning for partitionset keys, the command can retain the upper range or lower range in the original partitionset, by indicating the storage clause with the intended target partitionset. For example,

ALTER TABLE employees SPLIT PARTITIONSET P1

into (PARTITIONSET junior_employees values less than 10,

PARTITIONSET senior_employees TABLESPACE SET ts4)The above command has ‘senior_employees’ as the new, or target, partitionset. Rows of partitionset employees having partitionset key column values less than 10 remain in the origin partitionset, and this partitionset is renamed ‘junior_employees’ at the end of the operation.

Rows of partitionset employees having partitionset key column values equal to or greater than 10 are moved to a new partitionset ‘senior_employees’ with a shardspace different than that of partitionset ‘employees’. Tablespace set clause associated with partitionset ‘senior_employees’ and tablespace set ‘ts4’ being in the target shardspace indicates that this partitionset will be in the new target shardspace.

Managing Chunks with Oracle Enterprise Manager Cloud Control

You can manage Globally Distributed Database chunks using Oracle Enterprise Manager Cloud Control.

To manage chunks using Cloud Control, the shard databases on which they reside must first be discovered. Because each database shard is a database itself, you can use standard Cloud Control database discovery procedures.

The following topics describe chunk management using Oracle Enterprise Manager Cloud Control: