Machine Learning Techniques and Algorithms

Machine learning problems are categorized into mining techniques. Each machine learning function specifies a class of problems that can be modeled and solved. An algorithm is a mathematical procedure for solving a specific kind of problem.

Machine Learning Techniques

Machine learning techniques define classes of problems for modeling and solving. Understand supervised and unsupervised methods in Oracle Machine Learning.

A basic understanding of machine learning techniques and algorithms is required for using Oracle Machine Learning.

Each machine learning technique specifies a class of problems that can be modeled and solved. Machine learning techniques fall generally into two categories: supervised and unsupervised. Notions of supervised and unsupervised learning are derived from the science of machine learning, which has been called a sub-area of artificial intelligence.

Artificial intelligence refers to the implementation and study of systems that exhibit autonomous intelligence or behavior of their own. Machine learning deals with techniques that enable devices to learn from their own performance and modify their own functioning.

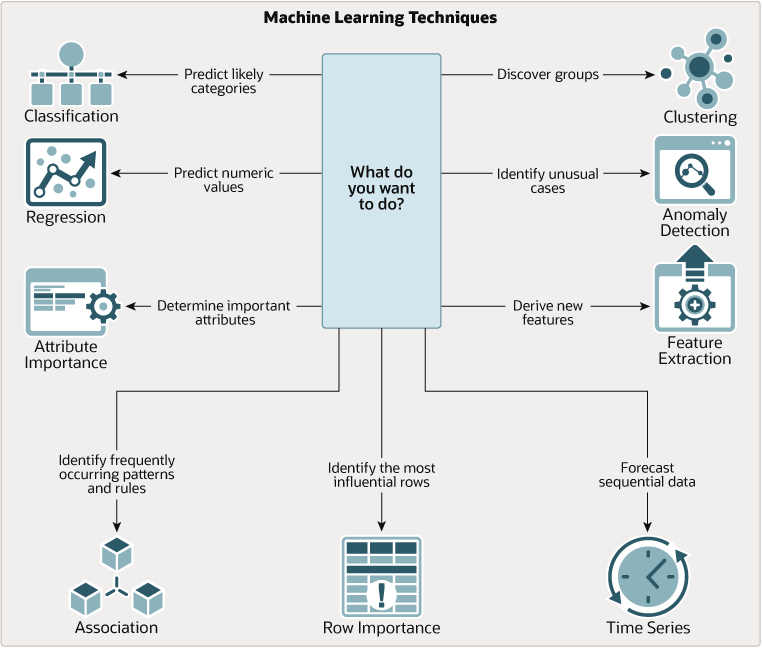

Figure 1-2 How to Use Machine Learning techniques

Description of "Figure 1-2 How to Use Machine Learning techniques"

Related Topics

Supervised Learning

Supervised learning is also known as directed learning. The learning process is directed by a previously known dependent attribute or target.

Supervised machine learning attempts to explain the behavior of the target as a function of a set of independent attributes or predictors. Supervised learning generally results in predictive models.

The building of a supervised model involves training, a process whereby the software analyzes many cases where the target value is already known. In the training process, the model "learns" the patterns in the data that enable making predictions. For example, a model that seeks to identify the customers who are likely to respond to a promotion must be trained by analyzing the characteristics of many customers who are known to have responded or not responded to a promotion in the past.

Oracle Machine Learning supports the following supervised machine learning functions:

Table 1-1 Supervised Machine Learning Functions

| Function | Description | Sample Problem | Supported Algorithms |

|---|---|---|---|

| Feature Selection or Attribute Importance | Identifies the attributes that are most important in predicting a target attribute | Given customer response to an affinity card program, find the most significant predictors | |

| Classification | Assigns items to discrete classes and predicts the class to which an item belongs | Given demographic data about a set of customers, predict customer response to an affinity card program | |

| Regression | Approximates and forecasts continuous values | Given demographic and purchasing data about a set of customers, predict customers' age | |

| Ranking | Predicts the probability of one item over other items | Recommend products to online customers based on their browsing history | XGBoost |

| Time Series | Forecasts target value based on known history of target values taken at equally spaced points in time | Predict the length of the ocean waves, address tactical issues such as projecting costs, inventory requirements and customer satisfaction, and so on. | Exponential Smoothing |

Unsupervised Learning

Unsupervised learning is non-directed. There is no distinction between dependent and independent attributes. There is no previously-known result to guide the algorithm in building the model.

Unsupervised learning can be used for descriptive purposes. In unsupervised learning, the goal is pattern detection. It can also be used to make predictions.

Oracle Machine Learning supports the following unsupervised machine learning functions:

Table 1-2 Unsupervised Machine Learning Functions

| Function | Description | Sample Problem | Supported Algorithms |

|---|---|---|---|

| Anomaly Detection | Identifies rows (cases, examples) that do not satisfy the characteristics of "normal" data | Given demographic data about a set of customers, identify which customer purchasing behaviors are unusual in the dataset, which may be indicative of fraud. | |

| Association | Finds items that tend to co-occur in the data and specifies the rules that govern their co-occurrence | Find the items that tend to be purchased together and specify their relationship | Apriori |

| Clustering | Finds natural groupings in the data | Segment demographic data into clusters and rank the probability that an individual belongs to a given cluster | |

| Feature Extraction | Creates new attributes (features) using linear combinations of the original attributes | Given demographic data about a set of customers, transform the original attributes into fewer new attributes. | |

| Row Importance | Row importance technique is used in dimensionality reduction of large data sets. Row importance identifies the most influential rows of the data set. | Given a data set, select rows that meet a minimum importance value prior to model building. | cur Matrix Decomposition |

What is a Machine Learning Algorithm

An algorithm is a mathematical procedure for solving a specific kind of problem. For some machine learning techniques, you can choose among several algorithms.

Each algorithm produces a specific type of model, with different characteristics. Some machine learning problems can best be solved by using more than one algorithm in combination. For example, you might first use a feature extraction model to create an optimized set of predictors, then a classification model to make a prediction on the results.

About Unstructured Text

Unstructured text may contain important information that is critical to the success of a business.

Machine learning algorithms act on data that is numerical or categorical. Numerical data is ordered. It is stored in columns that have a numeric data type, such as NUMBER or FLOAT. Categorical data is identified by category or classification. It is stored in columns that have a character data type, such as VARCHAR2 or CHAR.

Unstructured text data is neither numerical nor categorical. Unstructured text includes items such as web pages, document libraries, Power Point presentations, product specifications, emails, comment fields in reports, and call center notes. It has been said that unstructured text accounts for more than three quarters of all enterprise data. Extracting meaningful information from unstructured text can be critical to the success of a business.

About Machine Learning and Oracle Text

Understand machine learning operations on text and Oracle Text.

Machine learning operations on text is the process of applying machine learning techniques to text terms, also called text features or tokens. Text terms are words or groups of words that have been extracted from text documents and assigned numeric weights. Text terms are the fundamental unit of text that can be manipulated and analyzed.

Oracle Text is an Oracle AI Database technology that provides term extraction, word and theme searching, and other utilities for querying text. When columns of text are present in the training data, Oracle Machine Learning for SQL uses Oracle Text utilities and term weighting strategies to transform the text for machine learning operations. OML4SQL passes configuration information supplied by you to Oracle Text and uses the results in the model creation process.

Related Topics

About Partitioned Models

Introduces partitioned models to organize and represent multiple models.

When you build a model on your data set and apply it to new data, sometimes the

prediction may be generic that performs badly when run on new and evolving data. To

overcome this, the data set can be divided into different parts based on some

characteristics. Oracle Machine Learning for SQL supports

partitioned model. Partitioned models allow users to build a type of ensemble model for

each data partition. The top-level model has sub models that are automatically produced.

The sub models are based on the attribute options. For example, if your data set has an

attribute called REGION with four values and you have defined it as the

partitioned attribute. Then, four sub models are created for this attribute. The sub

models are automatically managed and used as a single model. The partitioned model

automates a typical machine learning task and can potentially

achieve better accuracy through multiple targeted models.

The partitioned model and its sub models reside as first class, persistent database objects. Persistent means that the partitioned model has an on-disk representation. In a partition model, the performance of partitioned models with a large number of partitions is enhanced, and dropping a single model within a partition model is also improved.

To create a partitioned model, include the ODMS_PARTITION_COLUMNS setting. To define the number of partitions, include the ODMS_MAX_PARTITIONS setting. When you are making predictions, you must use the top-level model. The correct sub model is selected automatically based on the attribute, the attribute options, and the partition setting. You must include the partition columns as part of the USING clause when scoring. The GROUPING hint is an optional hint that applies to machine learning scoring functions when scoring partitioned models.

The partition names, key values, and the structure of the partitioned model are available in the ALL_MINING_MODEL_PARTITIONS view.

Related Topics

See Also:

Oracle AI Database SQL Language Reference on how to useGROUPING hint.

Oracle Machine Learning for SQL User’s Guide to understand more about partitioned models.

Partitioned Model Build Process

To build a partitioned model, Oracle Machine Learning for SQL requires a partitioning key specified in a settings table.

The partitioning key is a comma-separated list of one or more columns (up to 16) from the input data set. The partitioning key horizontally slices the input data based on discrete values of the partitioning key. That is, partitioning is performed as list values as opposed to range partitioning against a continuous value. The partitioning key supports only columns of the data type NUMBER and VARCHAR2.

During the build process the input data set is partitioned based on the distinct values of the specified key. Each data slice (unique key value) results in its own model partition. The resultant model partition is not separate and is not visible to you as a standalone model. The default value of the maximum number of partitions for partitioned models is 1000 partitions. You can also set a different maximum partitions value. If the number of partitions in the input data set exceeds the defined maximum, Oracle Machine Learning for SQL throws an exception.

The partitioned model organizes features common to all partitions and the partition specific features. The common features consist of the following metadata:

-

The model name

-

The machine learning function

-

The machine learning algorithm

-

A super set of all machine learning model attributes referenced by all partitions (signature)

-

A common set of user-defined column transformations

-

Any user-specified or default build settings that are interpreted as global; for example, the Auto Data Preparation (ADP) setting

DDL in Partitioned model

Learn about maintenance of partitioned models thorough DDL operations.

Partitioned models are maintained through the following DDL operations:

Add Partition

Oracle Machine Learning for SQL supports adding a single partition or multiple partitions to an existing partitioned model.

The addition occurs based on the input data set and the name of the existing partitioned model. The operation takes the input data set and the existing partitioned model as parameters. The partition keys are extracted from the input data set and the model partitions are built against the input data set. These partitions are added to the partitioned model.

If the input data set contains multiple keys, then the operation may create

one or more partitions. If the total number of partitions in the model increases to more

than the user-defined maximum specified when the model was created, then you get an

error. The default threshold value for the number of partitions is 1000. See

ODMS_MAX_PARTITIONS in DBMS_DATA_MINING - Global Settings for more details.

Tip:

In the case where partition keys for new partitions conflict with the existing partitions in the model, you can select from the following three approaches to resolve the conflicts:-

ERROR: Terminates the ADD operation without adding any partitions. -

REPLACE: Replaces the existing partition for which the conflicting keys are found. -

IGNORE: Eliminates the rows having the conflicting keys.

The ODMS_PARTITIONED_COLUMNS parameter is used for selecting

the column on which partition is applied. The following example shows selecting the

column for partition:

%script

BEGIN DBMS_DATA_MINING.DROP_MODEL('SVM_MOD_PARTITIONED');

EXCEPTION WHEN OTHERS THEN NULL; END;

/

DECLARE

v_setlst DBMS_DATA_MINING.SETTING_LIST;

BEGIN

v_setlst('ALGO_NAME') := 'ALGO_SUPPORT_VECTOR_MACHINES'; -- SVM algorithm

v_setlst('SVMS_KERNEL_FUNCTION') := 'SVMS_LINEAR'; -- choose linear kernel, which provides coefficients

v_setlst('ODMS_PARTITION_COLUMNS') := 'CUST_GENDER'; -- choose column on which to partition the data

DBMS_DATA_MINING.CREATE_MODEL2(

MODEL_NAME => 'SVM_MOD_PARTITIONED',

MINING_FUNCTION => 'REGRESSION',

DATA_QUERY => 'SELECT * FROM CUSTOMERS_DEMO',

SET_LIST => v_setlst,

CASE_ID_COLUMN_NAME => 'CUST_ID',

TARGET_COLUMN_NAME => 'YRS_RESIDENCE');

END;

You can also define ODMS_PARTITION_BUILD_TYPE to control the

parallel build of partitioned models.

ERROR as an

optional value for ADD_OPTIONS which terminates the add operation

without adding any

partitions.BEGIN

DBMS_DATA_MINING.ADD_PARTITION(

model_name => 'SVM_MOD_PARTITIONED',

data_query =>'SELECT * FROM CUSTOMERS_DEMO',

add_options = >'ERROR'

);

END;

/See Also:

DBMS_DATA_MINING - Global Settings and ADD_PARTITION Procedure to specify the settings.

Drop Partition

Oracle Machine Learning for SQL supports dropping a single model partition for a given partition name.

Drop a partition when a particular segment of data is no longer relevant or has become obsolete.

Note:

If only one partition exists, you cannot directly drop it. Instead, add more partitions before dropping it or drop the entire model.CUST_GENDER

column on which partition was

applied.--For dropping a partition with the value 'M'

BEGIN

DBMS_DATA_MINING.DROP_PARTITION(

model_name => 'SVM_MOD_PARTITIONED',

partition_name =>'M'

);

END;

/

--For dropping a partition with the value 'F'

BEGIN

DBMS_DATA_MINING.DROP_PARTITION(

model_name => 'SVM_MOD_PARTITIONED',

partition_name =>'F'

);

END;

/

Replace Partition

Oracle Machine Learning for SQL supports replacing an existing partition for which conflicting keys are found.

You can use REPLACE as an optional parameter to define how

add operation handles when rows in the input data set conflict with existing partitions

in the model.

The following example shows how you can use REPLACE as an

optional value for ADD_OPTIONS.

BEGIN

DBMS_DATA_MINING.ADD_PARTITION(

model_name => 'SVM_MOD_PARTITIONED',

data_query =>'SELECT * FROM CUSTOMERS_DEMO',

add_options = >'REPLACE'

);

END;

/See Also:

ADD_PARTITION ProcedureDrop Partitioned Model

OML4SQL supports dropping a partitioned model. This is used when the full model is obsolete or no longer required.

BEGIN DBMS_DATA_MINING.DROP_MODEL('SVM_MOD_PARTITIONED');

EXCEPTION WHEN OTHERS THEN NULL;

END;

/See Also:

DROP_MODEL ProcedurePartitioned Model Scoring

The scoring of the partitioned model is the same as that of the non-partitioned model.

The syntax of the machine learning function remains the same but is extended to provide an optional hint.

For scoring a partitioned model, the signature columns used during the build for the partitioning key must be present in the scoring data set. These columns are combined to form a unique partition key. The unique key is then mapped to a specific underlying model partition, and the identified model partition is used to score that row.

The partitioned objects that are necessary for scoring are loaded on demand during the query execution and are aged out depending on the System Global Area (SGA) memory.

CREATE_MODEL2 procedure is used for creating

the model. The partition attribute is CUST_GENDER. This attribute

has two options M and F.%script

BEGIN DBMS_DATA_MINING.DROP_MODEL('SVM_MOD_PARTITIONED');

EXCEPTION WHEN OTHERS THEN NULL; END;

/

DECLARE

v_setlst DBMS_DATA_MINING.SETTING_LIST;

BEGIN

v_setlst('ALGO_NAME'):= 'ALGO_SUPPORT_VECTOR_MACHINES';

v_setlst('SVMS_KERNEL_FUNCTION') :='SVMS_LINEAR';

v_setlst('ODMS_PARTITION_COLUMNS'):='CUST_GENDER';

DBMS_DATA_MINING.CREATE_MODEL2(

MODEL_NAME => 'SVM_MOD_PARTITIONED',

MINING_FUNCTION => 'REGRESSION',

DATA_QUERY => 'SELECT * FROM CUSTOMERS_DEMO',

SET_LIST => v_setlst,

CASE_ID_COLUMN_NAME => 'CUST_ID',

TARGET_COLUMN_NAME => 'YRS_RESIDENCE');

END;

PL/SQL procedure successfully completed.

---------------------------

PL/SQL procedure successfully completed.

%script

SELECT cust_id, YRS_RESIDENCE,

ROUND(PREDICTION(SVM_MOD_PARTITIONED USING *),2) pred_YRS_RESIDENCE

FROM CUSTOMERS_DEMO;

CUST_ID YRS_RESIDENCE PRED_YRS_RESIDENCE

100100 4 4.71

100200 2 1.62

100300 4 4.66

100400 6 5.9

100500 2 2.07

100600 3 2.74

100700 6 5.78

100800 5 7.22

100900 4 4.88

101000 7 6.49

101100 4 3.54

101200 1 1.46

101300 4 4.34

101400 4 4.34 ...SVM_MOD_PARTITIONED, for the partition where

CUST_GENDER =

'M'.SELECT target_value class, attribute_name aname, attribute_value aval, coefficient coeff

FROM (SELECT target_value, attribute_name, attribute_value, coefficient

FROM DM$VLSVM_MOD_PARTITIONED WHERE partition_name =

(SELECT ORA_DM_PARTITION_NAME(SVM_MOD_PARTITIONED using 'M' CUST_GENDER) FROM dual)

ORDER BY coefficient DESC)

WHERE ROWNUM <= 10;Use Optional Hint for Scoring a Partitioned Model

The optional hint can impact the performance of a query which involves scoring a partitioned model. See GROUPING Hint for more details.

The following example retrieves the top 3 customers per gender based on

prediction probability using a partitioned SVM model named

SVM_MOD_PARTITIONED and using a GROUPING

hint.

column gender format a1

column income format a30

column rnk format 9

SELECT cust_id, cust_gender as gender, rnk, pd FROM

( SELECT cust_id, cust_gender,

PREDICTION_DETAILS(/*+ GROUPING */ SVM_MOD_PARTITIONED, 1 USING *) pd,

rank() over (partition by cust_gender order by

PREDICTION_PROBABILITY(SVM_MOD_PARTITIONED, 1 USING *) desc, cust_id) rnk

FROM mining_data_apply_parallel_v)

WHERE rnk <= 3

order by rnk, cust_gender;See the oml4sql-partitioned-models-svm example on GitHub for a complete example.

Related Topics

Automatic Data Preparation

Most algorithms require some form of data transformation. During the model build process, Oracle Machine Learning for SQL can automatically perform the transformations required by the algorithm.

You can choose to supplement the automatic transformations with additional transformations of your own, or you can choose to manage all the transformations yourself.

In calculating automatic transformations, Oracle Machine Learning for SQL uses heuristics that address the common requirements of a given algorithm. This process results in reasonable model quality in most cases.

Binning and normalization are transformations that are commonly needed by machine learning algorithms.

Related Topics

Binning

Binning, also called discretization, is a technique for reducing the cardinality of continuous and discrete data. Binning groups related values together in bins to reduce the number of distinct values.

Binning can improve resource utilization and model build response time dramatically without significant loss in model quality. Binning can improve model quality by strengthening the relationship between attributes.

Supervised binning is a form of intelligent binning in which important characteristics of the data are used to determine the bin boundaries. In supervised binning, the bin boundaries are identified by a single-predictor decision tree that takes into account the joint distribution with the target. Supervised binning can be used for both numerical and categorical attributes.

How ADP Transforms the Data

The following table shows how ADP prepares the data for each algorithm.

Table 1-3 Oracle Machine Learning Algorithms With ADP

See Also:

-

Part III, Algorithms, in Oracle Machine Learning for SQL Concepts for more information about algorithm-specific data preparation

Missing Value Treatment in Oracle Machine Learning for SQL

Summarizes the treatment of missing values in Oracle Machine Learning for SQL.

Missing value treatment depends on the algorithm and on the nature of the data (categorical or numerical, sparse or missing at random). Missing value treatment is summarized in the following table.

Note:

Oracle Machine Learning for SQL performs the same missing value treatment whether or not you are using Automatic Data Preparation (ADP).

Table 1-4 Missing Value Treatment by Algorithm

Data Preparation

Data preparation involves cleaning, transforming, and organizing data for building effective machine learning models. Quality data is essential for accurate model predictions.

The quality of a model depends to a large extent on the quality of the data used to build (train) it. Much of the time spent in any given machine learning project is devoted to data preparation. The data must be carefully inspected, cleansed, and transformed, and algorithm-appropriate data preparation methods must be applied.

The process of data preparation is further complicated by the fact that any data to which a model is applied, whether for testing or for scoring, must undergo the same transformations as the data used to train the model.

Simplify Data Preparation with Oracle Machine Learning for SQL

Oracle Machine Learning for SQL (OML4SQL) provides inbuilt data preparation, automatic data preparation, custom data preparation through the DBMS_DATA_MINING_TRANSFORM PL/SQL package, model details, and employs consistent approach across machine learning algorithms to manage missing and sparse data.

OML4SQL offers several features that significantly simplify the process of data preparation:

-

Embedded data preparation: The transformations used in training the model are embedded in the model and automatically run whenever the model is applied to new data. If you specify transformations for the model, you only have to specify them once.

-

Automatic Data Preparation (ADP): Oracle Machine Learning for SQL supports an automated data preparation mode. When ADP is active, Oracle Machine Learning for SQL automatically performs the data transformations required by the algorithm. The transformation instructions are embedded in the model along with any user-specified transformation instructions.

-

Automatic management of missing values and sparse data: Oracle Machine Learning for SQL uses consistent methodology across machine learning algorithms to handle sparsity and missing values.

-

Transparency: Oracle Machine Learning for SQL provides model details, which are a view of the attributes that are internal to the model. This insight into the inner details of the model is possible because of reverse transformations, which map the transformed attribute values to a form that can be interpreted by a user. Where possible, attribute values are reversed to the original column values. Reverse transformations are also applied to the target of a supervised model, thus the results of scoring are in the same units as the units of the original target.

-

Tools for custom data preparation: Oracle Machine Learning for SQL provides many common transformation routines in the

DBMS_DATA_MINING_TRANSFORMPL/SQL package. You can use these routines, or develop your own routines in SQL, or both. The SQL language is well suited for implementing transformations in the database. You can use custom transformation instructions along with ADP or instead of ADP.

Case Data

Case data organizes information in single-record rows for each case, essential for most machine learning algorithms in Oracle Machine Learning for SQL.

Most machine learning algorithms act on single-record case data, where the information for each case is stored in a separate row. The data attributes for the cases are stored in the columns.

When the data is organized in transactions, the data for one case (one transaction) is stored in many rows. An example of transactional data is market basket data. With the single exception of Association Rules, which can operate on native transactional data, Oracle Machine Learning for SQL algorithms require single-record case organization.

Nested Data

Nested data supports attributes in nested columns, enabling effective mining of complex data structures and multiple sources.

Oracle Machine Learning for SQL supports attributes in nested columns. A transactional table can be cast as a nested column and included in a table of single-record case data. Similarly, star schemas can be cast as nested columns. With nested data transformations, Oracle Machine Learning for SQL can effectively mine data originating from multiple sources and configurations.

Text Data

Text data involves transforming unstructured text into numeric values for analysis, utilizing Oracle Text utilities and configurable transformations.

Oracle Machine Learning for SQL interprets CLOB columns and long VARCHAR2

columns automatically as unstructured text. Additionally, you can specify columns of

short VARCHAR2, CHAR, BLOB, and

BFILE as unstructured text. Unstructured text includes data items

such as web pages, document libraries, Power Point presentations, product

specifications, emails, comment fields in reports, and call center notes.

Oracle Machine Learning for SQL uses Oracle Text utilities and term weighting strategies to transform unstructured text for analysis. In text transformation, text terms are extracted and given numeric values in a text index. The text transformation process is configurable for the model and for individual attributes. Once transformed, the text can by mined with a Oracle Machine Learning for SQL algorithm.

Related Topics

Data Requirements

Understand how data is stored and viewed for Oracle Machine Learning.

Machine learning activities require data that is defined within a single table or view. The information for each record must be stored in a separate row. The data records are commonly called cases. Each case can optionally be identified by a unique case ID. The table or view itself can be referred to as a case table.

The CUSTOMERS table in the

SH schema is an example of a table that

could be used for machine learning. All the

information for each customer is contained in a single row. The

case ID is the CUST_ID column. The rows listed in

the following example are

selected from SH.CUSTOMERS.

Note:

Oracle Machine Learning requires single-record case data for all types of models except association models, which can be built on native transactional data.

Example 1-1 Sample Case Table

select cust_id, cust_gender, cust_year_of_birth,

cust_main_phone_number from sh.customers where cust_id < 11;

The output is as follows:

CUST_ID CUST_GENDER CUST_YEAR_OF_BIRTH CUST_MAIN_PHONE_NUMBER

------- ----------- ---- ------------- -------------------------

1 M 1946 127-379-8954

2 F 1957 680-327-1419

3 M 1939 115-509-3391

4 M 1934 577-104-2792

5 M 1969 563-667-7731

6 F 1925 682-732-7260

7 F 1986 648-272-6181

8 F 1964 234-693-8728

9 F 1936 697-702-2618

10 F 1947 601-207-4099Related Topics

Column Data Types

Understand the different types of column data in a case table.

The columns of the case table hold the attributes that describe each case. In Example 1-1, the attributes are: CUST_GENDER, CUST_YEAR_OF_BIRTH, and CUST_MAIN_PHONE_NUMBER. The attributes are the predictors in a supervised model or the descriptors in an unsupervised model. The case ID, CUST_ID, can be viewed as a special attribute; it is not a predictor or a descriptor.

Oracle Machine Learning for SQL supports standard Oracle data types except DATE, TIMESTAMP, RAW, and LONG. Oracle Machine Learning supports date type (datetime, date, timestamp) for case_id, CLOB/BLOB/FILE that are interpreted as text columns, and the following collection types as well:

DM_NESTED_CATEGORICALSDM_NESTED_NUMERICALSDM_NESTED_BINARY_DOUBLESDM_NESTED_BINARY_FLOATS

Note:

The attributes with the data type BOOLEAN are treated as numeric with the following values: TRUE means 1, FALSE means 0, and NULL is interpreted as an unknown value. The CASE_ID_COLUMN_NAME attribute does not support BOOLEAN data type.

Vector Data Type

You can provide

VECTOR data as input to Oracle Machine Learning

in-database algorithms to complement other structured data or be used alone. The vector data

type is supported for clustering, classification, anomaly detection, and feature extraction.

While dense vectors with arbitrary precision and dimensions are supported, in a flex vector column, precision may differ and dimensions within a single vector column must remain consistent. Errors are raised for mismatched dimensions.

Partitioned models track vector dimensions alongside partition statistics. Different partitions can have different vector dimensions, however, dimensions must remain consistent within a single partition. Errors are raised for mismatched dimensions within a single partition.

The system supports FLOAT32, FLOAT64, and

INT8 as datatypes. Vectors with FLEX dimension and

precision are supported. These features can be used in combination with the other data

types supported by OML (numerical, categorical, nested, and text).

Scoring with Vectors

The system treats each vector dimension as an individual predictor and

provides model details at the vector component level, labeled as

DM$$VECxxx, where xxx represents the

component's position. For example, DM$$VEC1. During scoring, the

system matches vector dimensions between the model and input data at compile time or

runtime, raising errors if mismatches occur. A vector cannot be a target or a

case_id column, errors are raised if you set vector as a target

or case_id.

- analytic scoring with vectors, analytic scoring operators skip the vector inputs without displaying any error.

- sparse vectors, raises an error that the format is not supported if sparse vectors are identified. To learn more about sparse vectors, see Create Tables Using the VECTOR Data Type.

- binary vector precision, raises an error that the format is not supported

OML supports the vector data type for the following algorithms and the scoring operators:

| Technique | Algorithms | Scoring Operator |

|---|---|---|

| Classification or Regression | SVM, Neural Network, GLM | PREDICTION,

PREDICTION_PROBABILITY,

PREDICTION_SET,

PREDICTION_BOUNDS |

| Anomaly Detection | One-class SVM, Expectation Maximization | PREDICTION,

PREDICTION_PROBABILITY,

PREDICTION_SET |

| Clustering | k-Means, Expectation Maximization | CLUSTER_ID,

CLUSTER_PROBABILITY,

CLUSTER_SET,

CLUSTER_DISTANCE |

| Feature Extraction | SVD, PCA | FEATURE_ID,

FEATURE_VALUE, FEATURE_SET,

VECTOR_EMBEDDING |

See Example: Using Vector Data for Dimensionality Reduction and Clustering for more details.

Scoring Requirements

Learn how scoring is done in Oracle Machine Learning for SQL.

Most machine learning models can be applied to separate data in a process known as scoring. Oracle Machine Learning for SQL supports the scoring operation for classification, regression, anomaly detection, clustering, and feature extraction.

The scoring process matches column names in the scoring data with the names of the columns that were used to build the model. The scoring process does not require all the columns to be present in the scoring data. If the data types do not match, Oracle Machine Learning for SQL attempts to perform type coercion. For example, if a column called PRODUCT_RATING is VARCHAR2 in the training data but NUMBER in the scoring data, Oracle Machine Learning for SQL effectively applies a TO_CHAR() function to convert it.

The column in the test or scoring data must undergo the same transformations as the corresponding column in the build data. For example, if the AGE column in the build data was transformed from numbers to the values CHILD, ADULT, and SENIOR, then the AGE column in the scoring data must undergo the same transformation so that the model can properly evaluate it.

Note:

Oracle Machine Learning for SQL can embed user-specified transformation instructions in the model and reapply them whenever the model is applied. When the transformation instructions are embedded in the model, you do not need to specify them for the test or scoring data sets.

Oracle Machine Learning for SQL also supports Automatic Data Preparation (ADP). When ADP is enabled, the transformations required by the algorithm are performed automatically and embedded in the model along with any user-specified transformations.

See Also:

Automatic Data Preparation and Embed Transformations in a Model for more information on automatic and embedded data transformations

About Attributes

Attributes are the items of data that are used in machine learning. Attributes are also referred as variables, fields, or predictors.

In predictive models, attributes are the predictors that affect a given outcome. In descriptive models, attributes are the items of information being analyzed for natural groupings or associations. For example, a table of employee data that contains attributes such as job title, date of hire, salary, age, gender, and so on.

Data Attributes and Model Attributes

Data attributes are columns in the data set used to build, test, or score a model. Model attributes are the data representations used internally by the model.

Data attributes and model attributes can be the same. For example, a column called SIZE, with values S, M, and L, are attributes used by an algorithm to build a model. Internally, the model attribute SIZE is most likely be the same as the data attribute from which it was derived.

On the other hand, a nested column SALES_PROD, containing the sales figures for a group of products, does not correspond to a model attribute. The data attribute can be SALES_PROD, but each product with its corresponding sales figure (each row in the nested column) is a model attribute.

Transformations also cause a discrepancy between data attributes and model attributes. For example, a transformation can apply a calculation to two data attributes and store the result in a new attribute. The new attribute is a model attribute that has no corresponding data attribute. Other transformations such as binning, normalization, and outlier treatment, cause the model's representation of an attribute to be different from the data attribute in the case table.

Related Topics

Target Attribute

Understand what a target means in machine learning and understand the different target data types.

The target of a supervised model is a special kind of attribute. The target column in the training data contains the historical values used to train the model. The target column in the test data contains the historical values to which the predictions are compared. The act of scoring produces a prediction for the target.

Clustering, feature extraction, association, and anomaly detection models do not use a target.

Nested columns and columns of unstructured data (such as BFILE, CLOB, or BLOB) cannot be used as targets.

Table 1-5 Target Data Types

| Machine Learning Function | Target Data Types |

|---|---|

|

Classification |

|

|

Regression |

|

You can query the *_MINING_MODEL_ATTRIBUTES view to find the target for a given model.

Numericals, Categoricals, and Unstructured Text

Explains numeric, categorical, and unstructured text attributes.

Model attributes are numerical, categorical, or unstructured (text). Data attributes, which are columns in a case table, have Oracle data types, as described in "Column Data Types".

Numerical attributes can theoretically have an infinite number of values. The values have an implicit order, and the differences between them are also ordered. Oracle Machine Learning for SQL interprets NUMBER, FLOAT, BINARY_DOUBLE, BINARY_FLOAT, BOOLEAN, DM_NESTED_NUMERICALS, DM_NESTED_BINARY_DOUBLES, and DM_NESTED_BINARY_FLOATS as numerical.

Categorical attributes have values that identify a finite number of discrete categories or classes. There is no implicit order associated with the values. Some categoricals are binary: they have only two possible values, such as yes or no, or male or female. Other categoricals are multi-class: they have more than two values, such as small, medium, and large.

Oracle Machine Learning for SQL interprets CHAR and VARCHAR2 as categorical by default, however these columns may also be identified as columns of unstructured data (text). Oracle Machine Learning for SQL interprets columns of DM_NESTED_CATEGORICALS as categorical. Columns of CLOB, BLOB, and BFILE always contain unstructured data.

The target of a classification model is categorical. (If the target of a classification model is numeric, it is interpreted as categorical.) The target of a regression model is numerical. The target of an attribute importance model is either categorical or numerical.

Related Topics

Model Signature

Learn about model signature and the data types that are considered in the build data.

The model signature is the set of data attributes that are used to build a model. Some or all of the attributes in the signature must be present for scoring. The model accounts for any missing columns on a best-effort basis. If columns with the same names but different data types are present, the model attempts to convert the data type. If extra, unused columns are present, they are disregarded.

The model signature does not necessarily include all the columns in the build data. Algorithm-specific criteria can cause the model to ignore certain columns. Other columns can be eliminated by transformations. Only the data attributes actually used to build the model are included in the signature.

The target and case ID columns are not included in the signature.

Scoping of Model Attribute Name

Learn about model attribute name.

The model attribute name consists of two parts: a column name, and a subcolumn name.

column_name[.subcolumn_name]

The column_name component is the name of the data attribute. It is present in all model attribute names. Nested attributes and text attributes also have a subcolumn_name component as shown in the following example.

Example 1-2 Model Attributes Derived from a Nested Column

The nested column SALESPROD has three rows.

SALESPROD(ATTRIBUTE_NAME, VALUE) -------------------------------- ((PROD1, 300), (PROD2, 245), (PROD3, 679))

The name of the data attribute is SALESPROD. Its associated model attributes are:

SALESPROD.PROD1 SALESPROD.PROD2 SALESPROD.PROD3

Model Details

Model details reveal information about model attributes and their treatment by the algorithm. Oracle recommends that users leverage the model detail views for the respective algorithm.

Transformation and reverse transformation expressions are associated with model attributes. Transformations are applied to the data attributes before the algorithmic processing that creates the model. Reverse transformations are applied to the model attributes after the model has been built, so that the model details are expressed in the form of the original data attributes, or as close to it as possible.

Reverse transformations support model transparency. They provide a view of the data that the algorithm is working with internally but in a format that is meaningful to a user.

Deprecated GET_MODEL_DETAILS

There is a separate GET_MODEL_DETAILS routine for each algorithm. Starting from Oracle Database 12c Release 2, the GET_MODEL_DETAILS are deprecated. Oracle recommends to use Model Detail Views for the respective algorithms.

Related Topics

Use Nested Data

A join between the tables for one-to-many relationship is represented through nested columns.

Oracle Machine Learning for SQL requires a case table in single-record case format, with each record in a separate row. What if some or all of your data is in multi-record case format, with each record in several rows? What if you want one attribute to represent a series or collection of values, such as a student's test scores or the products purchased by a customer?

This kind of one-to-many relationship is usually implemented as a join between tables. For example, you can join your customer table to a sales table and thus associate a list of products purchased with each customer.

Oracle Machine Learning for SQL supports dimensioned data through nested columns. To include dimensioned data in your case table, create a view and cast the joined data to one of the machine learning nested table types. Each row in the nested column consists of an attribute name/value pair. Oracle Machine Learning for SQL internally processes each nested row as a separate attribute.

Note:

O-Cluster is the only algorithm that does not support nested data.

Related Topics

Nested Object Types

Nested tables are object data types that can be used in place of other data types.

Oracle AI Database supports user-defined data types that make it possible to model real-world entities as objects in the database. Collection types are object data types for modeling multi-valued attributes. Nested tables are collection types. Nested tables can be used anywhere that other data types can be used.

OML4SQL supports the following nested object types:

DM_NESTED_BINARY_DOUBLESDM_NESTED_BINARY_FLOATSDM_NESTED_NUMERICALSDM_NESTED_CATEGORICALS

Descriptions of the nested types are provided in this example.

Example 1-3 OML4SQL Nested Data Types

describe dm_nested_binary_double

Name Null? Type

----------------------------------------- -------- ----------------------------

ATTRIBUTE_NAME VARCHAR2(4000)

VALUE BINARY_DOUBLE

describe dm_nested_binary_doubles

DM_NESTED_BINARY_DOUBLES TABLE OF SYS.DM_NESTED_BINARY_DOUBLE

Name Null? Type

------------------------------------------ -------- ---------------------------

ATTRIBUTE_NAME VARCHAR2(4000)

VALUE BINARY_DOUBLE

describe dm_nested_binary_float

Name Null? Type

----------------------------------------- -------- ---------------------------

ATTRIBUTE_NAME VARCHAR2(4000)

VALUE BINARY_FLOAT

describe dm_nested_binary_floats

DM_NESTED_BINARY_FLOATS TABLE OF SYS.DM_NESTED_BINARY_FLOAT

Name Null? Type

----------------------------------------- -------- ----------------------------

ATTRIBUTE_NAME VARCHAR2(4000)

VALUE BINARY_FLOAT

describe dm_nested_numerical

Name Null? Type

----------------------------------------- -------- ----------------------------

ATTRIBUTE_NAME VARCHAR2(4000)

VALUE NUMBER

describe dm_nested_numericals

DM_NESTED_NUMERICALS TABLE OF SYS.DM_NESTED_NUMERICAL

Name Null? Type

----------------------------------------- -------- ----------------------------

ATTRIBUTE_NAME VARCHAR2(4000)

VALUE NUMBER

describe dm_nested_categorical

Name Null? Type

----------------------------------------- -------- ----------------------------

ATTRIBUTE_NAME VARCHAR2(4000)

VALUE VARCHAR2(4000)

describe dm_nested_categoricals

DM_NESTED_CATEGORICALS TABLE OF SYS.DM_NESTED_CATEGORICAL

Name Null? Type

----------------------------------------- -------- ----------------------------

ATTRIBUTE_NAME VARCHAR2(4000)

VALUE VARCHAR2(4000)Related Topics

Example: Transforming Transactional Data for Machine Learning

In this example, a comparison is shown for sale of products in four regions with data before transformation and then after transformation.

Example 1-4 shows data from a view of a sales table. It includes sales for three of the many products sold in four regions. This data is not suitable for machine learning at the product level because sales for each case (product), is stored in several rows.

Example 1-5 shows how this data can be transformed for machine learning. The case ID

column is PRODUCT.

SALES_PER_REGION, a nested column

of type DM_NESTED_NUMERICALS, is a data

attribute. This table is suitable for machine learning at the product case level,

because the information for each case is stored in a

single row.

Note:

The presentation in this example is conceptual only. The data is not actually pivoted before being processed.

Example 1-4 Product Sales per Region in Multi-Record Case Format

PRODUCT REGION SALES

------- -------- ----------

Prod1 NE 556432

Prod2 NE 670155

Prod3 NE 3111

.

.

Prod1 NW 90887

Prod2 NW 100999

Prod3 NW 750437

.

.

Prod1 SE 82153

Prod2 SE 57322

Prod3 SE 28938

.

.

Prod1 SW 3297551

Prod2 SW 4972019

Prod3 SW 884923

.

.

Example 1-5 Product Sales per Region in Single-Record Case Format

PRODUCT SALES_PER_REGION

(ATTRIBUTE_NAME, VALUE)

------ --------------------------

Prod1 ('NE' , 556432)

('NW' , 90887)

('SE' , 82153)

('SW' , 3297551)

Prod2 ('NE' , 670155)

('NW' , 100999)

('SE' , 57322)

('SW' , 4972019)

Prod3 ('NE' , 3111)

('NW' , 750437)

('SE' , 28938)

('SW' , 884923)

.

.

Example 1-6 Model Attributes Derived From SALES_PER_REGION

PRODUCT SALES_PER_REGION.NE SALES_PER_REGION.NW SALES_PER_REGION.SE SALES_PER_REGION.SW

------- ------------------ ------------------- ------------------ -------------------

Prod1 556432 90887 82153 3297551

Prod2 670155 100999 57322 4972019

Prod3 3111 750437 28938 884923

.

.Handle Missing Values

Understand sparse data and missing values.

Oracle Machine Learning for SQL distinguishes between sparse data and data that contains random missing values. The latter means that some attribute values are unknown. Sparse data, on the other hand, contains values that are assumed to be known, although they are not represented in the data.

A typical example of sparse data is market basket data. Out of hundreds or thousands of available items, only a few are present in an individual case (the basket or transaction). All the item values are known, but they are not all included in the basket. Present values have a quantity, while the items that are not represented are sparse (with a known quantity of zero).

Oracle Machine Learning for SQL interprets missing data as follows:

-

Missing at random: Missing values in columns with a simple data type (not nested) are assumed to be missing at random.

-

Sparse: Missing values in nested columns indicate sparsity.

Missing Values or Sparse Data?

Some real life examples are described to interpret missing values and sparse data.

The examples illustrate how Oracle Machine Learning for SQL identifies data as either sparse or missing at random.

Sparsity in a Sales Table

Understand how Oracle Machine Learning for SQL interprets missing data in nested column.

A sales table contains point-of-sale data for a group of products that are sold in several stores to different customers over a period of time. A particular customer buys only a few of the products. The products that the customer does not buy do not appear as rows in the sales table.

If you were to figure out the amount of money a customer has spent for each product, the unpurchased products have an inferred amount of zero. The value is not random or unknown; it is zero, even though no row appears in the table.

Note that the sales data is dimensioned (by product, stores, customers, and time) and are often represented as nested data for machine learning.

Since missing values in a nested column always indicate sparsity, you must ensure that this interpretation is appropriate for the data that you want to mine. For example, when trying to mine a multi-record case data set containing movie ratings from users of a large movie database, the missing ratings are unknown (missing at random), but Oracle Machine Learning for SQL treats the data as sparse and infer a rating of zero for the missing value.

Missing Values in a Table of Customer Data

When the data is not available for some attributes, those missing values are considered to be missing at random.

A table of customer data contains demographic data about customers. The case ID column is the customer ID. The attributes are age, education, profession, gender, house-hold size, and so on. Not all the data is available for each customer. Any missing values are considered to be missing at random. For example, if the age of customer 1 and the profession of customer 2 are not present in the data, that information is unknown. It does not indicate sparsity.

Note that the customer data is not dimensioned. There is a one-to-one mapping between the case and each of its attributes. None of the attributes are nested.

Changing the Missing Value Treatment

Transform the missing data as sparse or missing at random.

If you want Oracle Machine Learning for SQL to treat missing data as sparse instead of missing at random or missing at random instead of sparse, transform it before building the model.

If you want missing values to be treated as sparse, but OML4SQL interprets them as missing at random, you can use a SQL function like NVL to replace the nulls with a value such as "NA". OML4SQL does not perform missing value treatment when there is a specified value.

If you want missing nested attributes to be treated as missing at random, you can transform the nested rows into physical attributes in separate columns — as long as the case table stays within the column limitation imposed by the Database. Fill in all of the possible attribute names, and specify them as null. Alternatively, insert rows in the nested column for all the items that are not present and assign a value such as the mean or mode to each one.

Related Topics

Prepare the Case Table

The first step in preparing data for machine learning is the creation of a case table.

If all the data resides in a single table and all the information for each case (record) is included in a single row (single-record case), this process is already taken care of. If the data resides in several tables, creating the data source involves the creation of a view. For the sake of simplicity, the term "case table" is used here to refer to either a table or a view.

Convert Column Data Types

In OML, string columns are treated as categorical, number columns as numerical, and BOOLEAN columns are treated as numerical. If you have a numeric column that you want to be treated as a categorical, you must convert it to a string. For example, the day number of the week.

For example, zip codes identify different postal zones; they do not imply order. If the zip codes are stored in a numeric column, they are interpreted as a numeric attribute. You must convert the data type so that the column data can be used as a categorical attribute by the model. You can do this using the TO_CHAR function to convert the digits 1-9 and the LPAD function to retain the leading 0, if there is one.

LPAD(TO_CHAR(ZIPCODE),5,'0')

The attributes with the data type BOOLEAN are treated as numeric with the following values: TRUE means 1, FALSE means 0, and NULL is interpreted as an unknown value. The CASE_ID_COLUMN_NAME attribute does not support BOOLEAN data type.

Extract Datetime Column Values

You can extract values from a datatime or interval value using the EXTRACT function.

The EXTRACT function extracts and returns the value of a specified datetime field from a datetime or interval value expression. The values that can be extracted are YEAR, MONTH, DAY, HOUR, MINUTE, SECOND, TIMEZONE_HOUR, TIMEZONE_MINUTE, TIMEZONE_REGION, and TIMEZONE_ABBR.

sales_ts view. The sales_ts view contains CUST_ID and TIME_STAMP columns. select cust_id, time_stamp,

extract(year from time_stamp) year,

extract(month from time_stamp) month,

extract(day from time_stamp) day_of_month,

to_char(time_stamp,'ww') week_of_year,

to_char(time_stamp,'D') day_of_week,

extract(hour from time_stamp) hour,

extract(minute from time_stamp) minute,

extract(second from time_stamp) second

from sales_tsText Transformation

Learn text processing using Oracle Machine Learning for SQL.

You can use Oracle Machine Learning for SQL to process text. Columns of text in the case table can be processed once they have undergone the proper transformation.

The text column must be in a table, not a view. The transformation process uses several features of Oracle Text; it treats the text in each row of the table as a separate document. Each document is transformed to a set of text tokens known as terms, which have a numeric value and a text label. The text column is transformed to a nested column of DM_NESTED_NUMERICALS.

About Business and Domain-Sensitive Transformations

Understand why you need to transform data according to business problems.

Some transformations are dictated by the definition of the business problem. For example, you want to build a model to predict high-revenue customers. Since your revenue data for current customers is in dollars you need to define what "high-revenue" means. Using some formula that you have developed from past experience, you can recode the revenue attribute into ranges Low, Medium, and High before building the model.

Another common business transformation is the conversion of date information into elapsed time. For example, date of birth can be converted to age.

Domain knowledge can be very important in deciding how to prepare the data. For example, some algorithms produce unreliable results if the data contains values that fall far outside of the normal range. In some cases, these values represent errors or unusualities. In others, they provide meaningful information.

Related Topics

Create Nested Columns

In transactional data, the information for each case is contained in multiple rows. When the data source includes transactional data (multi-record case), the transactions must be aggregated to the case level in nested columns.

An example is sales data in a star schema when machine learning at the product level. Sales is stored in many rows for a single product (the case) because the product is sold in many stores to many customers over a period of time.

See Also:

Using Nested Data for information about converting transactional data to nested columns