5.2 Create AutoML UI Experiment

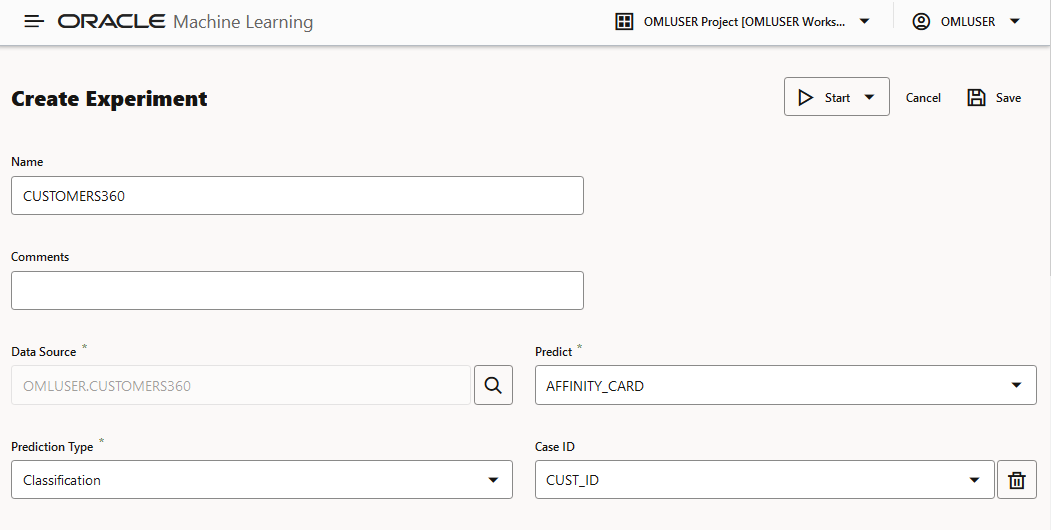

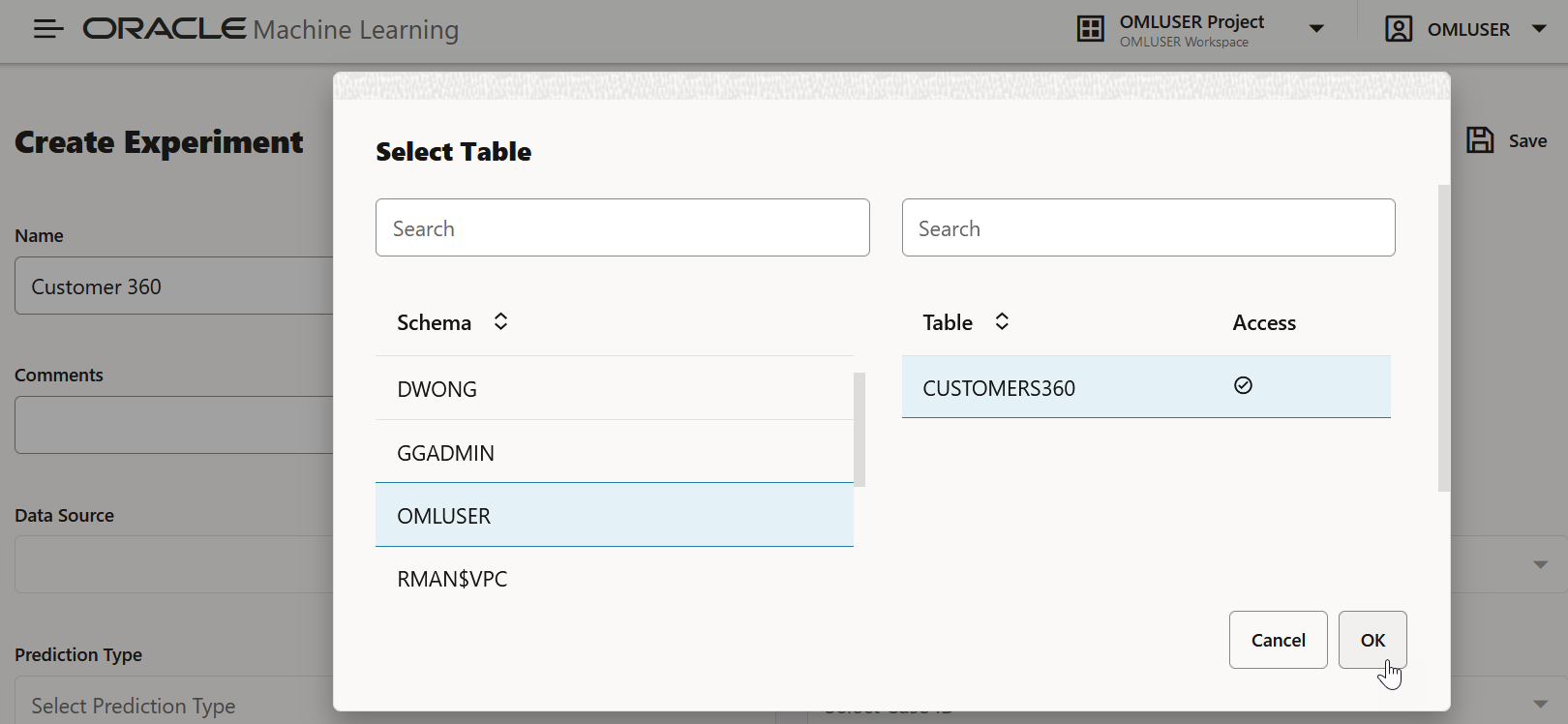

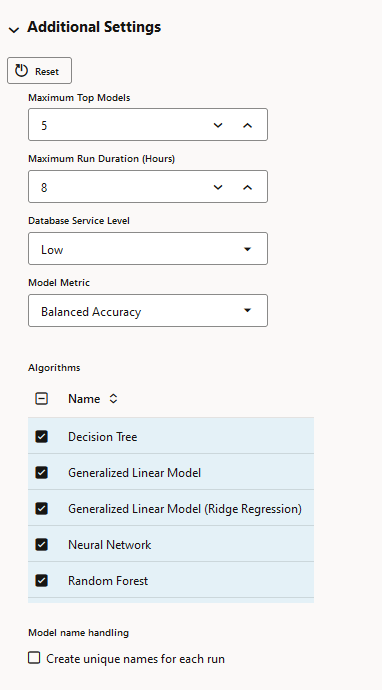

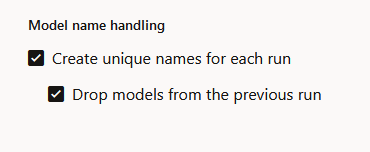

To use the Oracle Machine Learning AutoML UI, you start by creating an experiment. An experiment is a unit of work that, at minimum, specifies the data source, the prediction target, and the prediction type. After an experiment runs successfully, it presents a list of machine learning models ranked by model quality according to the selected metric. You can select any of these models for deployment or to generate a notebook. The generated notebook contains Python code that uses OML4Py, along with the specific settings AutoML used to produce the model.
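As a rough illustration of the idea, the sketch below models an experiment's minimal specification and a quality-ordered model list in plain Python. It is not the AutoML UI or the OML4Py API: the class names, the function, the table and column names, and the scores are all hypothetical placeholders chosen for this example.

```python
from dataclasses import dataclass


# Hypothetical illustration only: none of these names come from
# Oracle Machine Learning or OML4Py.
@dataclass(frozen=True)
class ExperimentSpec:
    data_source: str      # table or view that holds the training data
    predict: str          # prediction target (a column in the data source)
    prediction_type: str  # for example "classification" or "regression"
    metric: str = "accuracy"  # assumed default; the metric is user-selected


@dataclass(frozen=True)
class ModelResult:
    name: str
    score: float  # value of the selected metric for this model


def rank_models(results, higher_is_better=True):
    """Return the candidate models ordered best-first by metric score."""
    return sorted(results, key=lambda r: r.score, reverse=higher_is_better)


# Made-up inputs, purely to show the shape of the data.
spec = ExperimentSpec(
    data_source="CUSTOMERS",
    predict="CHURNED",
    prediction_type="classification",
)
results = [
    ModelResult("model_a", 0.81),
    ModelResult("model_b", 0.88),
    ModelResult("model_c", 0.84),
]

leaderboard = rank_models(results)
for rank, model in enumerate(leaderboard, start=1):
    print(rank, model.name, model.score)
```

The `higher_is_better` flag is an assumption made so the same sketch covers error-style metrics, where a lower score would rank first.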

- Supported Data Types for AutoML UI Experiments



5.2.1 Supported Data Types for AutoML UI Experiments

When creating an AutoML experiment, you must specify the data source and the target of the experiment. This topic lists the data types for Python and SQL that are supported by AutoML experiments.

Table 5-1 Supported Data Types by AutoML Experiments

| Data Types | SQL Data Types | Python Data Types |
|---|---|---|
| Numerical | | |
| Categorical | | |
| Unstructured Text | | |
