D Transaction Log Audit Data Collection for Oracle Database

You can fine-tune audit data collection by setting REDO log parameters for Oracle Database targets.

D.1 Introduction to Transaction Log Audit Trails for Oracle Database Using Oracle GoldenGate

Learn about the recommended collection from REDO logs settings using Oracle GoldenGate.

REDO log files also known as transaction logs are files used by Oracle Database to maintain logs of all the transactions that have occurred in the database. This chapter contains the recommendations for setting initialization parameters to use the TRANSACTION LOG audit trail type to collect audit data from the REDO logs of Oracle Database target.

These log files allow Oracle Database to recover the changes made to the database in case of a failure. For example, if a user updates a salary value in a table that contains employee related data, a REDO record is generated. It contains the value before this change (old value) and the new changed value. REDO records are used to guarantee ACID (Atomicity, Consistency, Isolation, and Durability) properties over crash or hardware failure. In case of a database crash, the system performs redo (re-process) of all the changes on data files that takes the database data back to the state it was when the last REDO record was written.

REDO log records contain Before and After values for every DML (Data Manipulation Language) and DDL (Data Definition Language) operations. Oracle Audit Vault and Database Firewall provides the ability to monitor the changed values from REDO logs using Transaction Log collector.

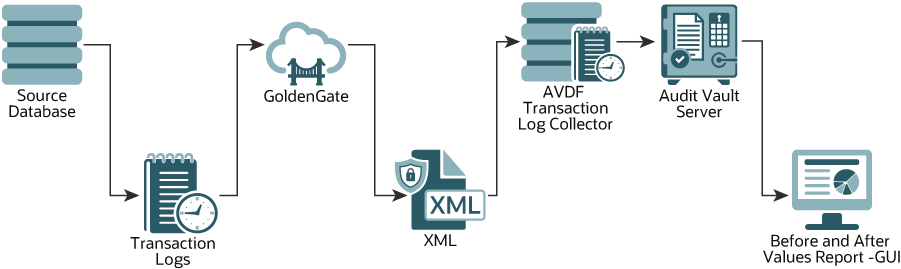

Transaction Log collector takes advantage of Oracle GoldenGate’s Integrated Extract process to move the REDO log data from database to XML files. The extract process is configured to run against the source database or it is configured to run on a Downstream Mining database (Oracle only). It captures DML and DDL operations that are performed on the configured objects. The captured operations from transaction logs are transferred to GoldenGate XML trail files. Oracle AVDF's Transaction Log collector collects transaction log records from generated XML files. These logs are forwarded to the Audit Vault Server to show the before and after values that were changed in the Data Modification Before-After Values report. The DDL changes are available in the All Activity report. The DML changes are available in the Data Modification Before-After Values report.

Starting with Oracle AVDF 20.10, the Data Modification Before-After Values report has additional information about key columns. GoldenGate, by default, uses the primary key columns of the table as key columns. If no primary keys are defined for the table, or if you want to use some other columns as key columns, then GoldenGate provides an option to specify key columns in the parameter file.

Figure D-1 Transaction Log Collection Process

Description of "Figure D-1 Transaction Log Collection Process"

See Also:

Oracle Database Setup Scripts for instructions on setting up privileges in the Oracle Database for collecting audit data from the REDO logs.

D.2 Sizing Guidelines

Learn and follow the sizing guidelines outlined in this topic.

Prerequisites

Adhere to the system and sizing requirements outlined in System Requirements and Operating System Requirements of Oracle GoldenGate documentation.

General sizing guidelines

- General recommendation for memory and CPU is to start with 32G memory and 2 CPUs per Integrated Extract as it is a multi threaded process and uses large memory when processing large transaction. Depending on the transaction volume and transaction pattern, scale up the resources appropriately following the guidelines in Oracle GoldenGate documentation.

- General recommendation for disk space is to start with 2T, and vary based on the volume of data the Integrated Extract captures from the source databases. Extract uses storage for trail files and temporary disk space for cache files in case there is big transaction to buffer for processing.

There are multiple database dictionary views in the computation formulae referred in

the guidelines. They provide information on transaction log size. For example,

v$log gives detailed information of each online log. Similarly

number of log switches per day can be estimated from

v$log_history/gv$log_history.

Temporary disk space requirements on account of large transactions may fill up cache and spill over to the transaction cached data or temporary files. Configure an archive policy and define the retention period of the files, so they can be recycled accordingly.

Maintain enough physical memory to handle large transactions. As per the guidelines,

have at least 32 GB of memory available for Extract to use. For a more accurate

estimation, collect the statistics from the database server history run and check

for the size of the biggest transaction. Oracle GoldenGate provides send

<extract> cachemgr, cachestats command that displays the statistics

of the transaction, that is helpful to determine the base line for estimation.

In general, the sizing, storage, and memory for Oracle GoldenGate Integrated Extract process is highly dependent on the transaction volume and transaction pattern. Collect these statistics from every single database server to estimate as there is no standard value.

The number of databases that can be supported by a single GoldenGate instance or Integrated Extract process, depends on the system resources that support multiple extracts. Ensure to configure one extract for every database.

D.3 Restricted Use License for Oracle GoldenGate

Learn about restricted license of Oracle GoldenGate.

A restricted use license for Oracle GoldenGate is included with Oracle Audit Vault and Database Firewall release 20. This license permits you to install Oracle GoldenGate and use the Integrated Extract process to capture transactional changes in database systems monitored by Oracle AVDF. The extracted data from Oracle GoldenGate is consumed only by Oracle AVDF. Deploy Oracle GoldenGate Microservices Architecture on a separate server other than the server on which the Oracle AVDF appliance is deployed. Later configure the Integrated Extract feature of Oracle GoldenGate. Oracle GoldenGate version 19.1.0.0.4 is the minimum version supported with Oracle Audit Vault and Database Firewall 20.9 and earlier, and Oracle GoldenGate 21.9.0.0.0 is the minimum version supported with Oracle Audit Vault and Database Firewall 20.10 and later. To support Oracle Databases prior to 12.2, Downstream Mining needs to be configured. It requires the deployment of Oracle Database Enterprise Edition and has to be licensed separately.

D.4 Installing Oracle GoldenGate on Oracle Databases

Follow these instructions to install Oracle GoldenGate for Oracle Databases.

Deploy Oracle GoldenGate on a separate server other than the server on which the Oracle AVDF appliance is deployed. Then configure the Oracle GoldenGate Integrated Extract feature.

Oracle AVDF 20.9 and Earlier

Download and install Oracle GoldenGate 19.1.0.0.0 Microservices architecture from Oracle Software Delivery Cloud.

Follow the instructions for Installing Oracle GoldenGate for Oracle Databases in the Oracle GoldenGate 19c documentation. After installing Oracle GoldenGate, apply the Oracle GoldenGate 19.1.0.0.4 Microservices architecture patch from My Oracle Support.

Note:

After installing Oracle GoldenGate, contact Oracle Support to create a Merge Label Request for applying the patch 32063871, 32175609, 33701099, 34014874, and 36684067. This patch needs to be applied on Oracle GoldenGate installation.Oracle AVDF 20.10 and Later

Download and install Oracle GoldenGate 21.9.0.0.0 Microservices architecture from My Oracle Support (patch 34958369 complete install).

Follow the instructions for Installing Oracle GoldenGate in the Oracle GoldenGate Microservices documentation for Oracle GoldenGate 21c.

D.5 Capturing Transaction Log Data from Oracle Database 12.2.0.1 and Later

Learn how to capture Transaction Log data from Oracle Database versions 12.2.0.1 and later.

Oracle GoldenGate Integrated Extract process is supported only for Oracle Database versions 12.2.0.1 and later. In this case Oracle GoldenGate Integrated Extract is configured on the source database. To capture Transaction Log data from Oracle Database 12.2.0.1 or later, run the steps in the following sections and in the same order:

D.6 Downstream Mining to Capture Transaction Log Data from Oracle Database Prior to 12.2.0.1

Learn how to capture Transaction Log data from Oracle Database versions prior to 12.2.0.1.

Oracle GoldenGate Integrated Extract process is supported only for Oracle Database versions 12.2.0.1 and later. In this case Oracle GoldenGate Integrated Extract is configured on the source database.

For capturing Transaction Log data from Oracle Database versions prior to 12.2.0.1, Downstream Mining must be used. In this case there are 2 databases, the source database and the Downstream Mining database. The source database (Oracle Database prior to 12.2.0.1) is configured to ship the online REDO logs to a Downstream database (Oracle Database version 12.2.0.1 or later). Integrated Extract is then configured on the Downstream database.

Note:

-

Before configuring Downstream Mining, execute the steps in sections Create User and Grant Relevant Privileges and Configure Oracle GoldenGate Parameters for Oracle Database on both the source database and the Downstream Mining database.

-

Configure Downstream Mining by referring to section Configure GoldenGate Downstream Mining.

D.7 Migrating Transaction Log Audit Trail from Oracle AVDF 12.2 to 20

Learn how to migrate transaction log audit trail from Oracle AVDF 12.2 to 20.

Transaction log audit trail data can be migrated from Oracle AVDF 12.2 to 20. Follow this procedure before upgrading to Oracle AVDF 20:

- Install and deploy Oracle GoldenGate.

-

Run the below procedure for every transaction log audit trail in Oracle AVDF 12.2:

- Ensure Oracle AVDF 12.2 transaction log audit trail is running on the Oracle source database. Create Oracle Goldengate integrated extract. If Oracle source database is older than 12.2.0.1, then configure Downstream Mining and create Integrated Extract for Downstream Mining database. If Oracle source database is version 12.2.0.1 or later, then create Integrated Extract for the source database.

- Configure Integrated Extract XML file for each source database instance in a unique location.

- Wait for five minutes after creating the Integrated Extract, to ensure it is running successfully. In case the Integrated Extract fails, then check the logs in the Reports tab and fix the issue.

- After confirming that the Integrated Extract is running successfully, wait till DDL/DML statements run. Ensure that the Integrated Extract file contains XML data in it.

- Stop the 12.2 transaction log audit trail. Before Oracle AVDF 12.2 transaction log audit trail is stopped, for a brief duration both the GoldenGate Integrated Extract and Oracle AVDF 12.2 transaction log audit trail are running concurrently. Hence duplicate records are observed only for this brief duration. Safely ignore the duplicate records observed for this short duration.

- Ensure these steps run successfully for all the 12.2 transaction log audit trails.

- If the current version of Oracle AVDF is prior to 12.2.0.9.0, then first upgrade to 12.2.0.9.0 and then upgrade to Oracle AVDF 20.

-

After upgrading to Oracle AVDF 20, perform these steps for each target database which has transaction log audit trail:

- Delete the old transaction log audit trail.

- Create a new transaction log audit trail.

- Make sure the trail location is the full path of the directory containing Integrated Extract XML files.

D.8 Create User and Grant Relevant Privileges

Learn how to create a user and grant the required privileges.

Create a new user depending on the type of the database:

-

In case of standalone database, create a new user and grant relevant privileges to the user. This new user can fetch REDO log data from the Oracle Database using Oracle GoldenGate Integrated Extract.

-

In case of multitenant database, create a new user in the CDB and grant relevant privileges to the user. This new CDB user can fetch REDO log data from individual PDBs in Oracle Database using Oracle GoldenGate Integrated Extract.

Follow this procedure for the standalone database:

- Log in to the database as sysdba.

-

Execute the following command to create an example user avggadmin:

create user avggadmin identified by avggadmin; -

Execute the following commands to grant privileges to the newly created user:

grant create session, resource, alter system to avggadmin;grant unlimited tablespace to avggadmin; -

Execute the following commands to grant GoldenGate admin privilege to the example user avggadmin:

begin DBMS_GOLDENGATE_AUTH.GRANT_ADMIN_PRIVILEGE( 'avggadmin', '*', TRUE, TRUE, NULL, NULL, NULL, 'CURRENT'); end; /

Follow this procedure for multitenant database:

D.9 Configure Oracle GoldenGate Parameters for Oracle Database

Follow this procedure to configure Oracle GoldenGate parameters for Oracle Database.

D.10 Create a New Credential in the GoldenGate Administration Server

Create a new credential for the target database in the Oracle GoldenGate Administration Server.

D.11 Create a New Integrated Extract in Oracle GoldenGate Administration Server

Follow this procedure to create new Integrated Extract in Oracle GoldenGate Administration Server for the target database.

D.12 Periodic Backup of LogMiner Dictionary

Learn when to take backup of the LogMiner dictionary.

Oracle GoldenGate highly recommends periodic backup (preferably every day) of the LogMiner dictionary. It can be performed by extracting the LogMiner dictionary to the redo log files. Database jobs can be created to perform periodic backup.

D.13 Sample Oracle GoldenGate Integrated Extract Parameter Files

Use these Oracle GoldenGate Integrated Extract parameter files as samples.

Audit DML and DDL in the schema excluding some tables

The following parameter file configures Integrated Extract to capture the following:

- DDL operations on all objects, except the objects in

accountsschema - DML operations on all tables in

scottschema, except theemptable in thescottschema

extract <extract_name>

useridalias <credential_userid_alias> domain <credential_domain>

OUTPUTFORMAT XML _AUDIT_VAULT

exttrail <sub_directory>/<trail_name>

SOURCECATALOG cdb1_pdb1

DDL INCLUDE ALL, EXCLUDE OBJNAME accounts.*

TABLE scott.*;

TABLEEXCLUDE scott.emp

Audit all DDL and DML in a schema

The following parameter file configures Integrated Extract to capture the following:

- DDL operations on all objects in the

scottschema - DML operations on all tables in the

scottschema

extract <extract_name>

useridalias <credential_userid_alias> domain <credential_domain>

OUTPUTFORMAT XML _AUDIT_VAULT

exttrail <sub_directory>/<trail_name>

SOURCECATALOG cdb1_pdb1

DDL INCLUDE OBJNAME scott.*

TABLE scott.*;

Audit only DDL in a schema

The following parameter file configures Integrated Extract to capture

DDL operations on all objects in the scott schema.

extract <extract_name>

useridalias <credential_userid_alias> domain <credential_domain>

OUTPUTFORMAT XML _AUDIT_VAULT

exttrail <sub_directory>/<trail_name>

SOURCECATALOG cdb1_pdb1

DDL INCLUDE OBJNAME scott.*

Audit only DML in a schema

The following parameter file configures Integrated Extract to capture

DML operations on all tables in the scott schema.

extract <extract_name>

useridalias <credential_userid_alias> domain <credential_domain>

OUTPUTFORMAT XML _AUDIT_VAULT

exttrail <sub_directory>/<trail_name>

SOURCECATALOG cdb1_pdb1

TABLE scott.*;

Audit all DDL in all schema

The following parameter file configures Integrated Extract to capture DDL operations on all objects.

extract <extract_name>

useridalias <credential_userid_alias> domain <credential_domain>

OUTPUTFORMAT XML _AUDIT_VAULT

exttrail <sub_directory>/<trail_name>

SOURCECATALOG cdb1_pdb1

DDL INCLUDE ALL

Audit DML for a table and set the columns to be used as key columns

The following parameter file configures Integrated Extract to do the following:

- Capture DML operations on the

emptable in thescottschema - Set

empnoandenameas key columns

extract <extract_name>

useridalias <credential_userid_alias> domain <credential_domain>

OUTPUTFORMAT XML _AUDIT_VAULT

exttrail <sub_directory>/<trail_name>

SOURCECATALOG cdb1_pdb1

TABLE scott.emp, KEYCOLS (empno, ename);Audit DML for a table with GETBEFORECOLS and KEYCOLS option

The following parameter file configures Integrated Extract to do the following:

- Capture DML operations on the

emptable in thescottschema - Set

empnoandenameas key columns

The following sample parameter file has GETBEFORECOLS options. The Oracle AVDF is supporting display of key columns from AVDF 20.10.0.0.0. To show key columns in the report for update and delete operations, key column should appear in the before image of audit file generated by the Oracle GoldenGate. If key columns are absent in the before images then, user can use GETBEFORECOLS option.

extract <extract_name>

useridalias <credential_userid_alias> domain <credential_domain>

OUTPUTFORMAT XML _AUDIT_VAULT

exttrail <sub_directory>/<trail_name>

SOURCECATALOG cdb1_pdb1

TABLE scott.emp, KEYCOLS (empno, ename), GETBEFORECOLS (ON UPDATE ALL, ON DELETE ALL);Related Topics

D.14 Audit Trail Creation in Audit Vault Console

Learn how to create a mandatory target attribute before creating audit trail.

Ensure the mandatory target attribute

AV.COLLECTOR.TIMEZONEOFFSET is set on the Oracle Database

target in Audit Vault Server console before audit trail creation. This attribute

must be set to timezone offset of Oracle Database. For example,

+03:00 for positive offset, -03:00 for

negative offset.

Create audit trail by specifying the following details or guidelines:

- Trail Type: TRANSACTION LOG

- Trail Location: Full path of directory containing integrated extract XML files

- Agent should be running on the host machine which has access to the trail location

- Agent user should have read permission on the trail location

D.15 Audit Trail Cleanup

Learn how to delete the files that are read by Audit Vault Agent.

Oracle Golden Gate Collector writes the checkpoint information into the Audit Trail

Cleanup (ATC) file. This file is present in

<Agent_Home>/av/atc directory. The ATC file contains

information of the target type, target name, trail type, trail directory, and the

checkpoint timestamp. The ATC file has extension .atc. All the

records with event timestamp older than the checkpoint timestamp are read by Audit

Vault Agent and written into the event_log table in Audit Vault

Server.

Note:

The timestamp in ATC file is in UTC (Coordinated Universal Time) time zone.Here is an example ATC file:

securedTargetType=Oracle Database

SecuredTargetName=secured_target_oracle_one

TrailType=TRANSACTION LOG

TrailName=/foo/bar/trail_files

2020-06-30 07:11:46.0

For Oracle AVDF 20.3 and Earlier

To delete the files that are read by the Audit Vault Agent, create a script which deletes the files. These are the files where the last modified timestamp is older than the checkpoint timestamp present in ATC file. This script can be scheduled to run periodically.

For Oracle AVDF 20.4 and Later

To delete the files that are read by the Audit Vault Agent use the Oracle GoldenGate Extract Cleanup utility. This is available starting Oracle AVDF 20.4.

D.16 Configure GoldenGate Downstream Mining

Learn how to configure GoldenGate downstream mining.

Oracle GoldenGate Integrated Extract process is supported only for Oracle Database versions 12.2.0.1 and later. In this case Oracle GoldenGate Integrated Extract is configured on the source database. For capturing transaction log data from Oracle Database versions prior to 12.2.0.1, downstream mining is used. In this case there are 2 databases, the source database and the Downstream Mining database. The source database (Oracle Database versions prior to 12.2.0.1) is configured to send the online REDO logs to a Downstream database (Oracle Database version 12.2.0.1 or later). Integrated Extract is then configured on the Downstream database to generate XML files in _AUDIT_VAULT format.

Prerequisite

Execute the steps in Create User and Grant Relevant Privileges and Configure Oracle GoldenGate Parameters for Oracle Database on both the source database and the Downstream Mining database.

Configuring the Password File

-

Execute the follow command on the source database to see database compatibility:

show parameter compatible; -

If the version of the source database is 12.1.0.2.0, then execute below command:

alter system set compatible = '12.1.0.2.0' scope=spfile;shutdown immediatestartup -

Execute the following command and check if the compatibility has changed to 12.1.0.2.0:

show parameter compatible; -

Execute the following query to find the global_name on both the source database and the Downstream Mining database:

select * from global_name; -

Ensure the source database and the Downstream Mining database do not have the same global_name.

-

If a source database has a remote login password file, copy it to the appropriate directory of the mining database system. The password file must be the same as the source database and the mining database. If the source database and the Downstream Mining database do not have the same password file, then execute the following commands in the source database and then copy over the source password file to the Downstream Mining database:

alter system set remote_login_passwordfile = 'shared' scope = spfile;shutdown immediatestartup -

In the source database, the password file is

$ORACLE_HOME/dbs/orapw<$ORACLE_SID>.The example source password file is

/foo/bar/orapwsource. -

Execute the following command on the Downstream Mining database to find the downstream password file:

select file_name from v$passwordfile_info;The example downstream password file is

/foo/bar/orapwdownstream. -

Execute the following command to take backup of the existing downstream password file:

cp /foo/bar/orapwdownstream /foo/bar/orapwdownstream_orig -

Execute the following command to copy the source password file to downstream password file location:

cp /foo/bar/orapwsource /foo/bar/orapwdownstream

Configuring the Source Database

In this example, the database unique name for source database is

source_db_unique_name and for the Downstream Mining database is

downstream_db_unique_name.

Execute the following command to find the database unique name:

select db_unique_name from v$database;Execute the following commands on the source database, to configure the source

database to transmit redo data to the Downstream Mining database. While setting the

LOG_ARCHIVE_DEST_2 parameter, the connection details of the

Downstream Mining database needs to be provided.

ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(source_db_unique_name,downstream_db_unique_name)';ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='SERVICE="(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=foo.bar.com)(PORT=1234))(CONNECT_DATA=(SERVICE_NAME=foo.bar.com)))" ASYNC OPTIONAL NOREGISTER VALID_FOR=(ONLINE_LOGFILE,PRIMARY_ROLE) DB_UNIQUE_NAME=downstream_db_unique_name';ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE;Configuring the Downstream Mining Database

-

Archiving must be enabled in the Downstream Mining database to run Integrated Extract in real time integrated capture mode. Execute the following commands on the Downstream Mining database to archive local redo log files:

ALTER SYSTEM SET LOG_ARCHIVE_DEST_1='LOCATION=/foo/bar/arc_dest/local valid_for=(ONLINE_LOGFILE,PRIMARY_ROLE)';ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_1=ENABLE; -

Downstream Mining database must be configured to archive the standby redo logs that receive redo data from the online redo logs of the source database. The foreign archived logs should not be archived in the recovery area of the Downstream Mining database. Execute the following commands on the Downstream Mining database to archive standby redo logs locally:

ALTER SYSTEM SET LOG_ARCHIVE_CONFIG='DG_CONFIG=(source_db_unique_name,downstream_db_unique_name)';ALTER SYSTEM SET LOG_ARCHIVE_DEST_2='LOCATION=/foo/bar/arc_dest/standbyredo VALID_FOR=(STANDBY_LOGFILE,PRIMARY_ROLE)';ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_2=ENABLE ; -

Execute the following command on the source database and make a note of the results:

select group#, thread#, bytes from v$log; -

Add the standby log file groups to the mining database. The standby log file size must be at least the size of the source log file. The number of standby log file groups must be at least one more than the number of source online log file groups. This applies to each instance (thread) in case of Oracle RAC installation. If you have

nthreads in the source database, each havingmredo log groups, then you should configuren*(m+1)redo log groups in the Downstream Mining database.For example, let us assume the following is the result of the query

select group#, thread#, bytes from v$log;:GROUP# THREAD# BYTES 1 1 26214400 2 1 26214400 Number of threads ( n ) is 1.

Number of groups per thread ( m ) is 2.

Hence

n*(m+1) = 3redo logs groups are required in the Downstream Mining database, where the size of each log group should be at least 26214400 bytes. -

For this example, execute the following query on the Downstream Mining database:

ALTER DATABASE ADD STANDBY LOGFILE GROUP 3 ('/foo/bar/arc_dest/standbyloggroups/slog3a.rdo', '/foo/bar/arc_dest/standbyloggroups/slog3b.rdo') SIZE 500M; ALTER DATABASE ADD STANDBY LOGFILE GROUP 4 ('/foo/bar/arc_dest/standbyloggroups/slog4a.rdo', '/foo/bar/arc_dest/standbyloggroups/slog4b.rdo') SIZE 500M; ALTER DATABASE ADD STANDBY LOGFILE GROUP 5 ('/foo/bar/arc_dest/standbyloggroups/slog5a.rdo', '/foo/bar/arc_dest/standbyloggroups/slog5b.rdo') SIZE 500M; SELECT * FROM V$STANDBY_LOG;

Registering Integrated Extract

-

Create credentials for both the source database and the Downstream Mining database on Oracle GoldenGate administration server by following the steps in Create a New Credential in the GoldenGate Administration Server.

-

Launch the adminclient command line interface:

$GG_HOME/bin/adminclient -

Execute the command to connect to the GoldenGate Service Manager. If SSL is configured, then execute the command:

connect https://<hostname>:<port> as <username> password <password> !If SSL is not configured, then execute the command:

connect http://<hostname>:<port> as <username> password <password> !Example command if SSL is configured:

connect https://localhost:1234 as ggadminuser password ggadminpassword !Example command if SSL is not configured:

connect https://localhost:1234 as ggadminuser password ggadminpassword ! -

Execute the following command to log in to the source database in adminclient:

dblogin useridalias <source db user id> domain <source db domain>For example:

dblogin useridalias avggadmin_remotesourceinst1 domain remotesourceinst1 -

Execute the following command to log in to the Downstream Mining database in adminclient:

miningdblogin useridalias <downstream db user id> domain <downstream db domain>For example:

miningdblogin useridalias avggadmin_remotedowninst1 domain remotedowninst1 -

Execute the following commands to add and register the Integratd Extract. Before executing these steps, manually create the subdirectory, where the Integrated Extract XML files need to be stored.

ADD EXTRACT <extract name> INTEGRATED TRANLOG BEGIN NOWREGISTER EXTRACT <extract name> DATABASEADD EXTTRAIL <subdirectory>/<trail name>, EXTRACT <extract name>After executing this command, you may see the message

OGG-12029 The file with name '<extract name>.prm' does not exist. Ignore this message.For example:

ADD EXTRACT ext1 INTEGRATED TRANLOG BEGIN NOW REGISTER EXTRACT ext1 DATABASE ADD EXTTRAIL e1/e1, EXTRACT ext1 -

Log in to the Oracle GoldenGate administration server.

-

In the Extracts panel, the newly created Integrated Extract is displayed. To update the parameter file of the Integrated Extract follow these steps:

- Click Actions drop down next to the Integrated Extract icon.

- Select Details.

-

In the Parameters tab, enter the below parameters:

extract <extract name> useridalias <source db user id> domain <source db domain> TRANLOGOPTIONS MININGUSERALIAS <downstream db user id> domain <downstream db domain> TRANLOGOPTIONS INTEGRATEDPARAMS (downstream_real_time_mine Y) OUTPUTFORMAT XML _AUDIT_VAULT exttrail <subdirectory>/<trail name> DDL INCLUDE ALL TABLE <schema>.<table>;For example:

extract ext1 useridalias avggadmin_remotesourceinst1 domain remotesourceinst1 TRANLOGOPTIONS MININGUSERALIAS avggadmin_remotedowninst1 domain remotedowninst1 TRANLOGOPTIONS INTEGRATEDPARAMS (downstream_real_time_mine Y) OUTPUTFORMAT XML _AUDIT_VAULT exttrail e1/e1 DDL INCLUDE ALL TABLE scott.*;

-

After updating the parameters, click the Apply button.

-

Click Actions drop down next to the Integrated Extract icon.

-

Click Start button to start the Integrated Extract. Wait for 5 minutes for the Integrated Extract to start successfully and create the background log mining process. Log Mining process runs in the background and is not visible to the user.

-

Execute the following commands on the source database to switch the log files on the source database:

select * from v$log;alter system switch logfile;select * from v$log; -

Wait for 5 minutes after performing the log switch. The Integrated Extract needs few minutes to start creating the XML files.

Checking the status of Downstream Mining

Execute the following commands on both the source database and the Downstream Mining database:

select * from V$archive_dest_status;select * from V$archive_dest;In the row having dest_name column with values

LOG_ARCHIVE_DEST_1 and LOG_ARCHIVE_DEST_2,

ensure the status column has a value VALID and the

gap_status column has a value NO GAP or

null.

If the status column has a value ERROR, then the error column shows

the relevant error message.

Checking the status of Integrated Extract

- Log in to the Oracle GoldenGate administration server.

- View the Extracts panel. The Integrated Extract is displayed.

- Check the status of the Integrated Extract. Click Actions drop down next to the Integrated Extract icon.

- Select Details.

- Click Report tab to view the diagnostic messages. In case the extract process fails, then the relevant errors are displayed in the report.

See Also:

Understand the downstream mining process available in Configuring a Downstream Mining Database and Example Downstream Mining Configuration.