Installation on Linux

Follow the steps below to provision, configure, and run your Agent Factory environment on a Linux machine. You can choose between Production mode and Quick Start mode, depending on your requirements. See Installation Modes to select the installation mode that best suits your needs.

Caution: Run the installation as a non-root user. Podman, a critical component, must be set up and used in rootless mode for Agent Factory installations. Do not perform the installation or run deployment steps as the root user.
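If you want to confirm these conditions before you start, the following shell sketch checks that the current user is not root and that Podman reports rootless mode. The function names are hypothetical and not part of the installation kit. The Podman check assumes that your Podman version exposes the `Host.Security.Rootless` field in `podman info`.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helpers; not part of the installation kit.

# Succeeds if the given UID (default: the current user's UID) is not 0 (root).
is_non_root_uid() {
  local uid="${1:-$(id -u)}"
  [ "$uid" -ne 0 ]
}

# Succeeds if Podman reports rootless mode for the current user.
# Assumes `podman info` exposes the Host.Security.Rootless template field.
podman_is_rootless() {
  [ "$(podman info --format '{{.Host.Security.Rootless}}' 2>/dev/null)" = "true" ]
}

# Runs both checks and prints a short verdict.
preflight_user_check() {
  if ! is_non_root_uid; then
    echo "ERROR: run the installation as a non-root user." >&2
    return 1
  fi
  if ! podman_is_rootless; then
    echo "ERROR: Podman is not in rootless mode for user $(id -un)." >&2
    return 1
  fi
  echo "OK: non-root user with rootless Podman."
}
```

After sourcing these functions into your shell, run `preflight_user_check` as the user who will perform the installation.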

Note: Sections where user input is expected are indicated by angle brackets <>.

VPN On. Production mode

Install the Application on Linux in Production Mode with VPN On

Choose a staging location

The staging location is the directory that stores the build artifacts (such as executables and configuration files) needed to create the application's Podman images. It also contains a Makefile that manages the entire deployment lifecycle. Note that this directory should not be on an NFS mount.
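Because the staging location should not be on an NFS mount, you may want to check the filesystem type after you create the directory. The following sketch uses GNU coreutils `stat -f -c %T`, which prints the filesystem type name (for example `nfs`, `xfs`, or `ext2/ext3`). The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers to keep the staging location off NFS.

# Succeeds if a filesystem type name, as printed by `stat -f -c %T`, is NFS.
is_nfs_fstype() {
  case "$1" in
    nfs|nfs4) return 0 ;;
    *) return 1 ;;
  esac
}

# Fails with a message if the directory is on NFS; prints its type otherwise.
check_staging_location() {
  local dir="$1" fstype
  fstype="$(stat -f -c %T "$dir")" || return 1
  if is_nfs_fstype "$fstype"; then
    echo "ERROR: $dir is on an NFS mount ($fstype); choose another location." >&2
    return 1
  fi
  echo "OK: $dir is on a $fstype filesystem."
}
```

For example, run `check_staging_location <staging_location>` right after `mkdir`.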

Get the installation kit

Go to the official download website and get the installation kit. See Download Installation Kit.

Create the staging location and copy the downloaded kit into it:

```bash
mkdir <staging_location>
cd <staging_location>
cp <path to installation kit> .
```

Uncompress the installation kit in the staging location, using the archive for your machine's architecture.

For ARM 64:

```bash
tar xzf applied_ai_arm64.tar.gz
```

For Linux x86-64:

```bash
tar xzf applied_ai.tar.gz
```
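If you script these steps, you can select the archive from the machine's architecture. This sketch assumes that `uname -m` reports `aarch64` (or `arm64`) on ARM 64 machines and `x86_64` on x86-64 machines. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; assumes `uname -m` prints aarch64/arm64 or x86_64.

# Prints the kit archive name for an architecture (default: this machine's).
kit_for_arch() {
  local arch="${1:-$(uname -m)}"
  case "$arch" in
    aarch64|arm64) echo "applied_ai_arm64.tar.gz" ;;
    x86_64|amd64)  echo "applied_ai.tar.gz" ;;
    *) echo "unsupported architecture: $arch" >&2; return 1 ;;
  esac
}

# Extracts the matching archive in the current (staging) directory.
extract_kit() {
  local kit
  kit="$(kit_for_arch)" || return 1
  if [ ! -f "$kit" ]; then
    echo "ERROR: $kit not found in $(pwd)" >&2
    return 1
  fi
  tar xzf "$kit"
}
```

Run `extract_kit` from inside the staging location after copying the kit there.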

Before you begin the installation, configure any proxies required to connect to resources outside your VPN. Set both the lowercase and uppercase forms of each variable, because some tools read only one of them:

```bash
export http_proxy=<your-http-proxy>
export https_proxy=<your-https-proxy>
export no_proxy=<your-domain>
export HTTP_PROXY=<your-http-proxy>
export HTTPS_PROXY=<your-https-proxy>
export NO_PROXY=<your-domain>
```

Run Interactive Installer

The interactive_install.sh script, included in the installation kit, automates nearly all setup tasks, including environment configuration, dependency installation, and application deployment.

After you extract the kit, the script is in the staging location. Run it from that directory. If Agent Factory was previously installed on this machine, first run the installer with `--reset`:

```bash
# Required only if Agent Factory was previously installed
bash interactive_install.sh --reset

# Run the interactive installation
bash interactive_install.sh
```

When the interactive installer prompts you, choose the options that best suit your environment:

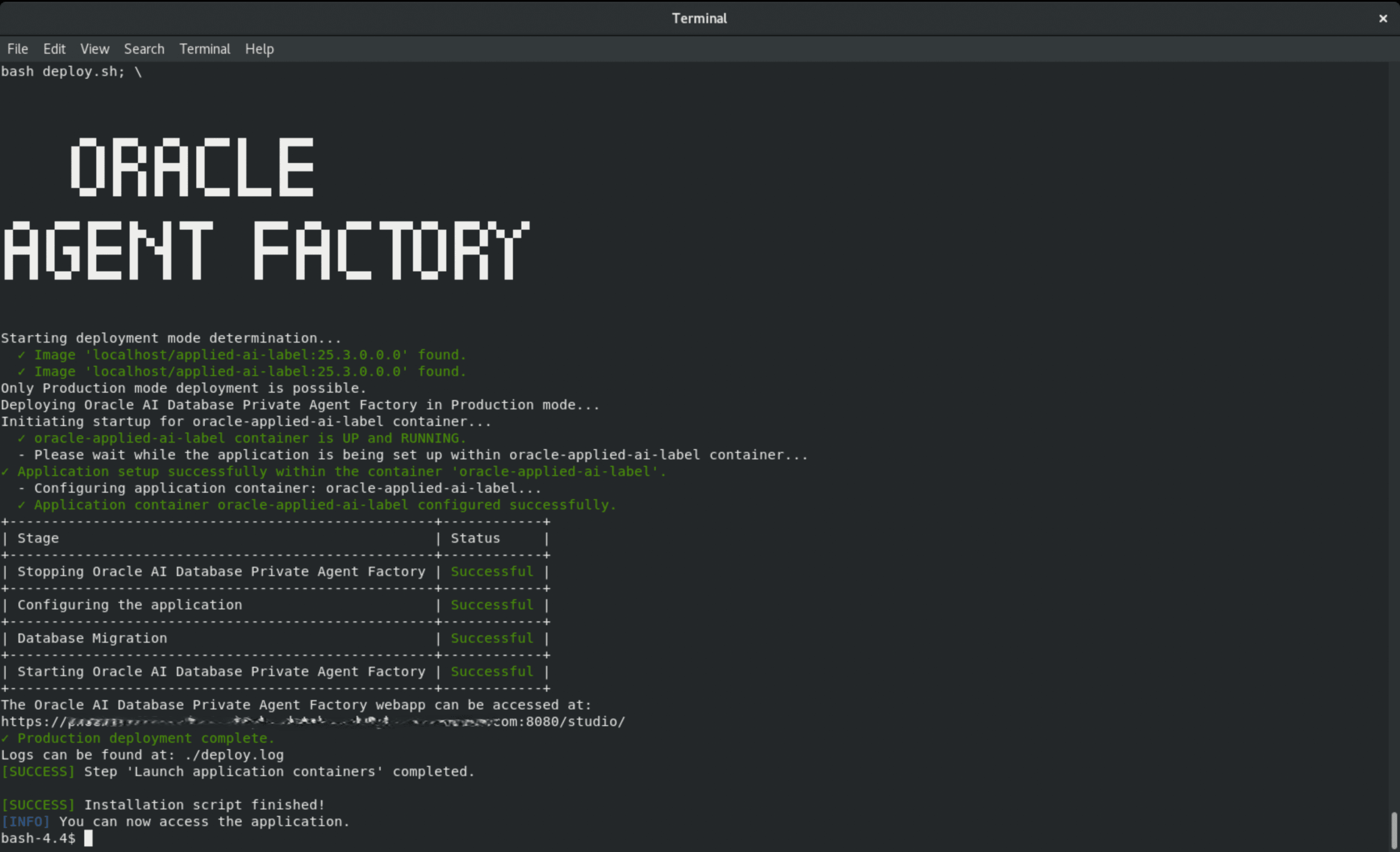

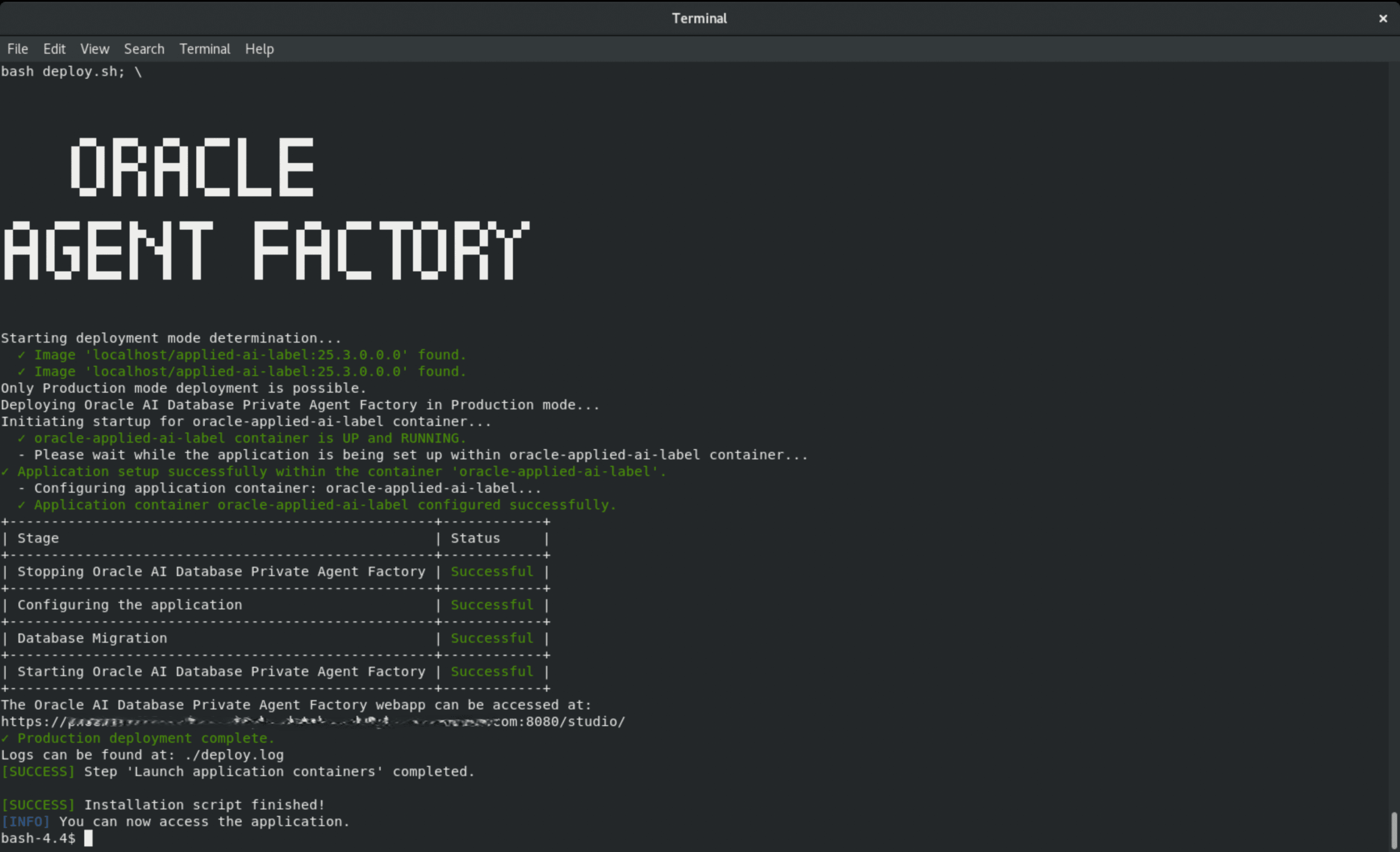

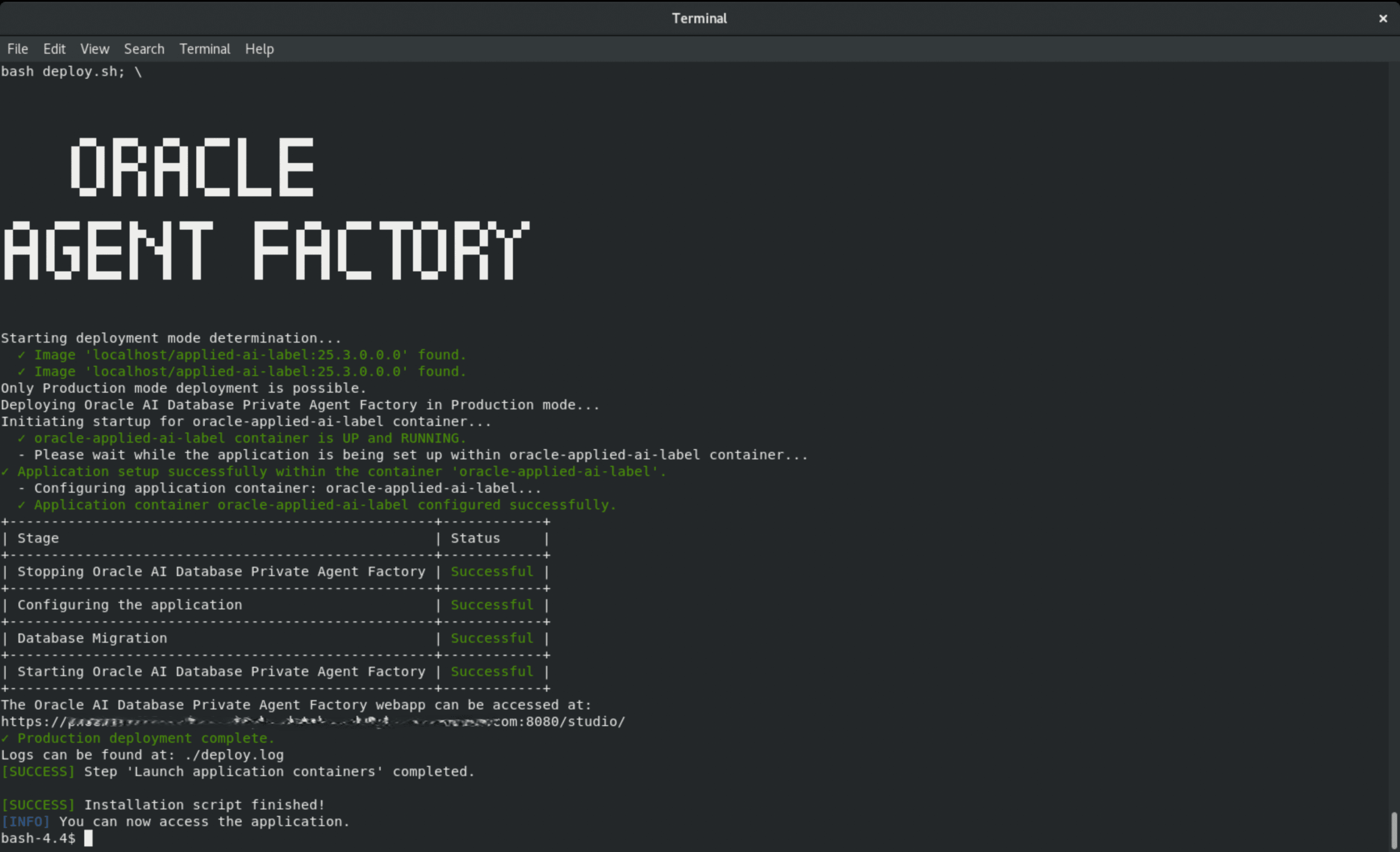

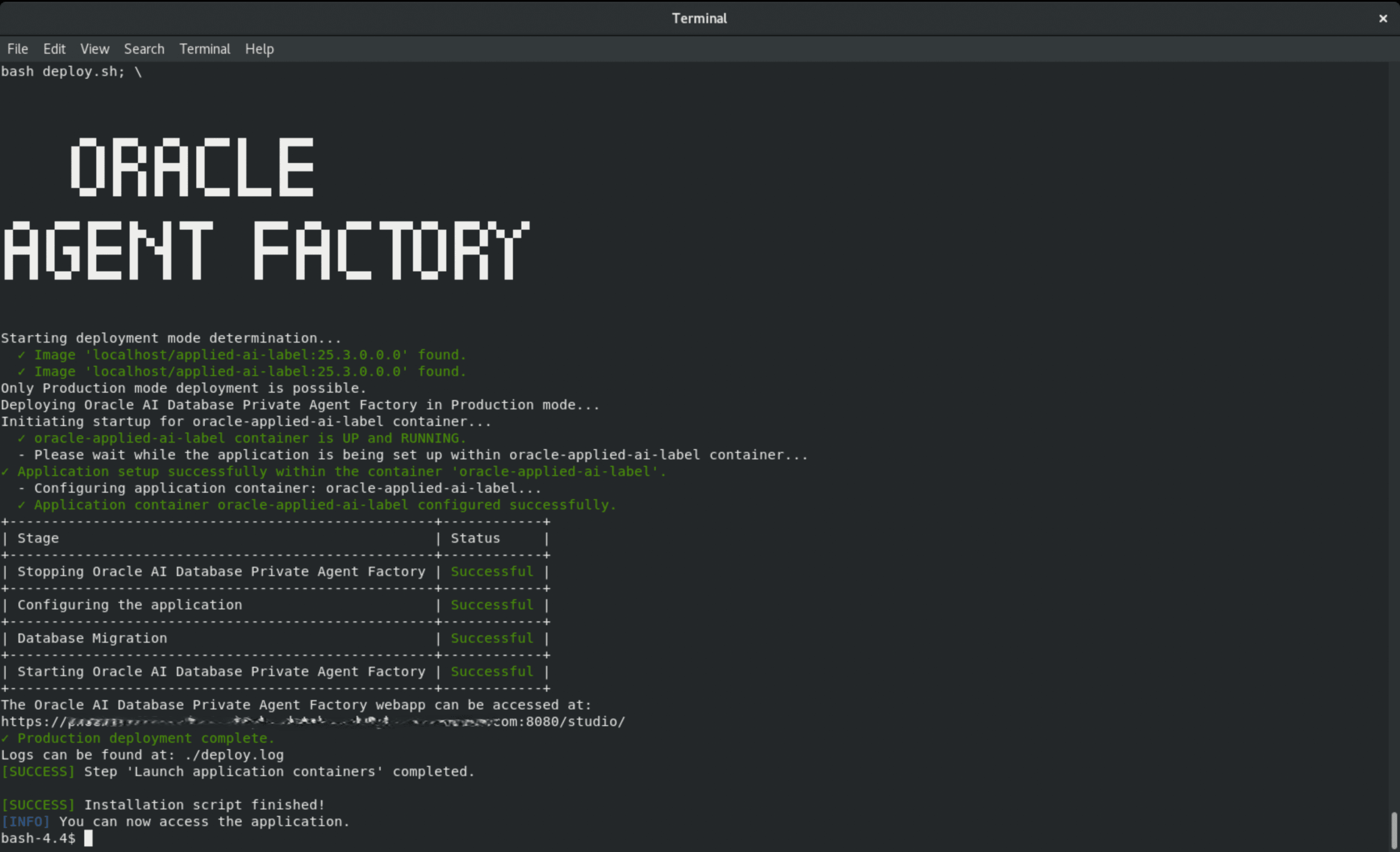

```text
Are you on a corporate network that requires an HTTP/HTTPS proxy? (y/N): y
Enter 1 if you are on a Standard Oracle Linux machine or 2 if you are on OCI: <user_number_choice>
Enter your Linux username: <user_linux_username>
Does your default /tmp directory have insufficient space (< 100GB)? (y/N): y
[INFO] You can get a token from https://container-registry.oracle.com/
Username: <email_registered_on_container_registry>
Password: <token_from_container_registry>
Do you want to proceed with the manual database setup? (y/N): y
[WARNING] Step 1: Create the database user.
Enter the DB username you wish to create: <your_db_user>
Enter the password for the new DB user: <your_db_user_password>
[INFO] Run these SQL commands as a SYSDBA user on your PDB (Pluggable Database):
----------------------------------------------------
CREATE USER <your_db_user> IDENTIFIED BY <your_db_user_password> DEFAULT TABLESPACE USERS QUOTA unlimited ON USERS;
GRANT CONNECT, RESOURCE, CREATE TABLE, CREATE SYNONYM, CREATE DATABASE LINK, CREATE ANY INDEX, INSERT ANY TABLE, CREATE SEQUENCE, CREATE TRIGGER, CREATE USER, DROP USER TO <your_db_user>;
GRANT CREATE SESSION TO <your_db_user> WITH ADMIN OPTION;
GRANT READ, WRITE ON DIRECTORY DATA_PUMP_DIR TO <your_db_user>;
GRANT SELECT ON V_$PARAMETER TO <your_db_user>;
exit;
----------------------------------------------------
Press [Enter] to continue to the next step...
Select installation mode:
1) prod
2) quickstart
Enter choice (1 or 2): 1
You selected Production mode. Confirm? (yes/no): yes
```

The following output indicates that the installation was completed successfully.

After the installation script finishes successfully, it prints the application URL, https://<hostname>:8080/studio/. Open this URL in a web browser to complete the remaining configuration.
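If the page is not available immediately, the following sketch polls the URL with `curl` until it answers. It uses `-k` to skip certificate verification, which corresponds to accepting the certificate warning in the browser; use it only for this local check. The function names, the 30-attempt default, and the 10-second delay are arbitrary assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; not part of the installation kit.

# Prints the Studio URL for a host name (default: this machine's FQDN).
studio_url() {
  printf 'https://%s:8080/studio/\n' "${1:-$(hostname -f)}"
}

# Usage: wait_for_studio [hostname] [attempts] [delay_seconds]
# -k skips certificate verification, like accepting the browser warning.
wait_for_studio() {
  local url attempts="${2:-30}" delay="${3:-10}" code i
  url="$(studio_url "$1")"
  for i in $(seq 1 "$attempts"); do
    code="$(curl -k -s --max-time 10 -o /dev/null -w '%{http_code}' "$url")"
    case "$code" in
      2??|3??) echo "Studio responded at $url (HTTP $code)"; return 0 ;;
    esac
    echo "Attempt $i/$attempts: HTTP ${code:-none} from $url" >&2
    if [ "$i" -lt "$attempts" ]; then sleep "$delay"; fi
  done
  return 1
}
```

For example, `wait_for_studio <hostname>` returns as soon as the server answers with a 2xx or 3xx status.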

Access the Application

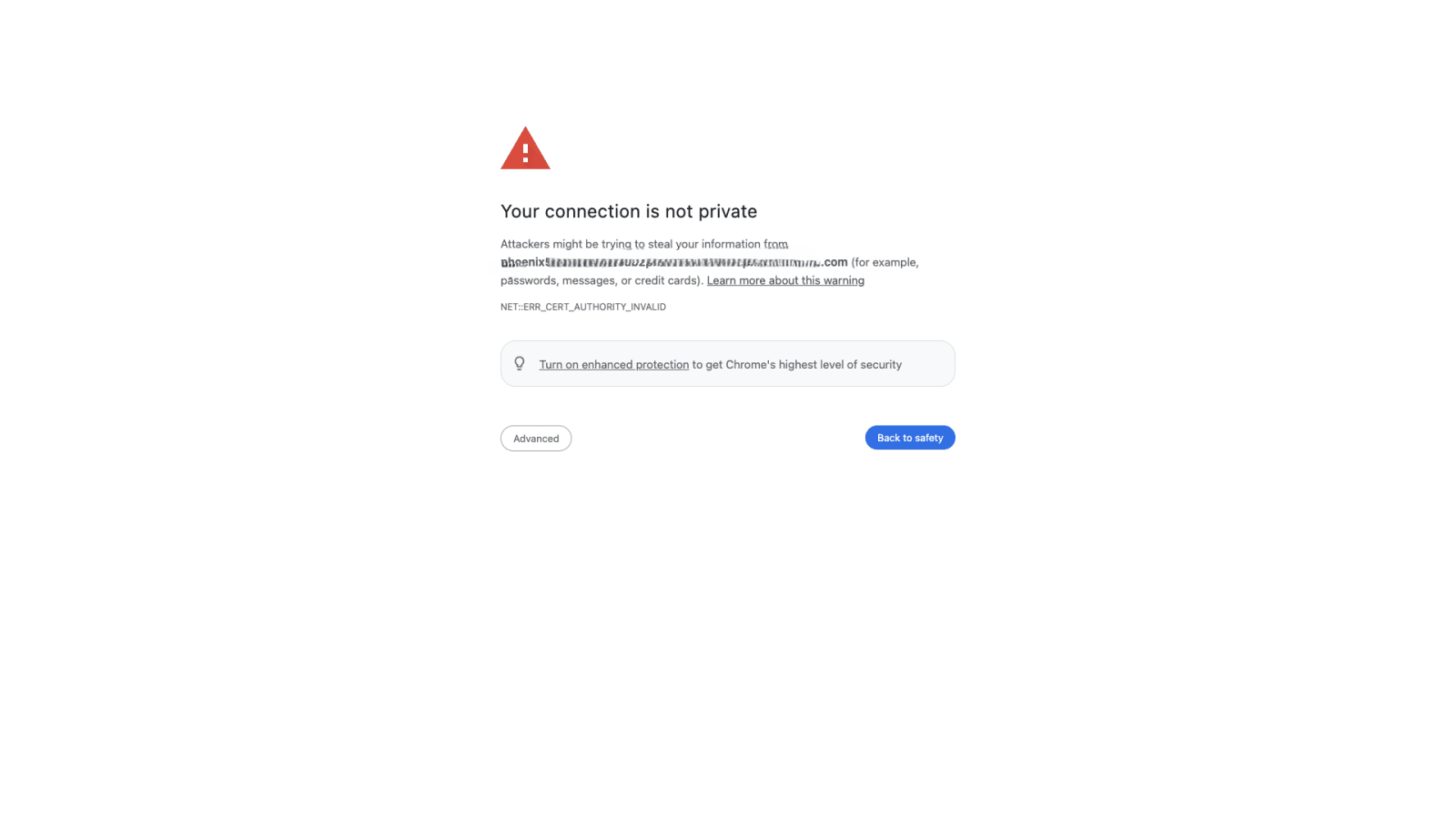

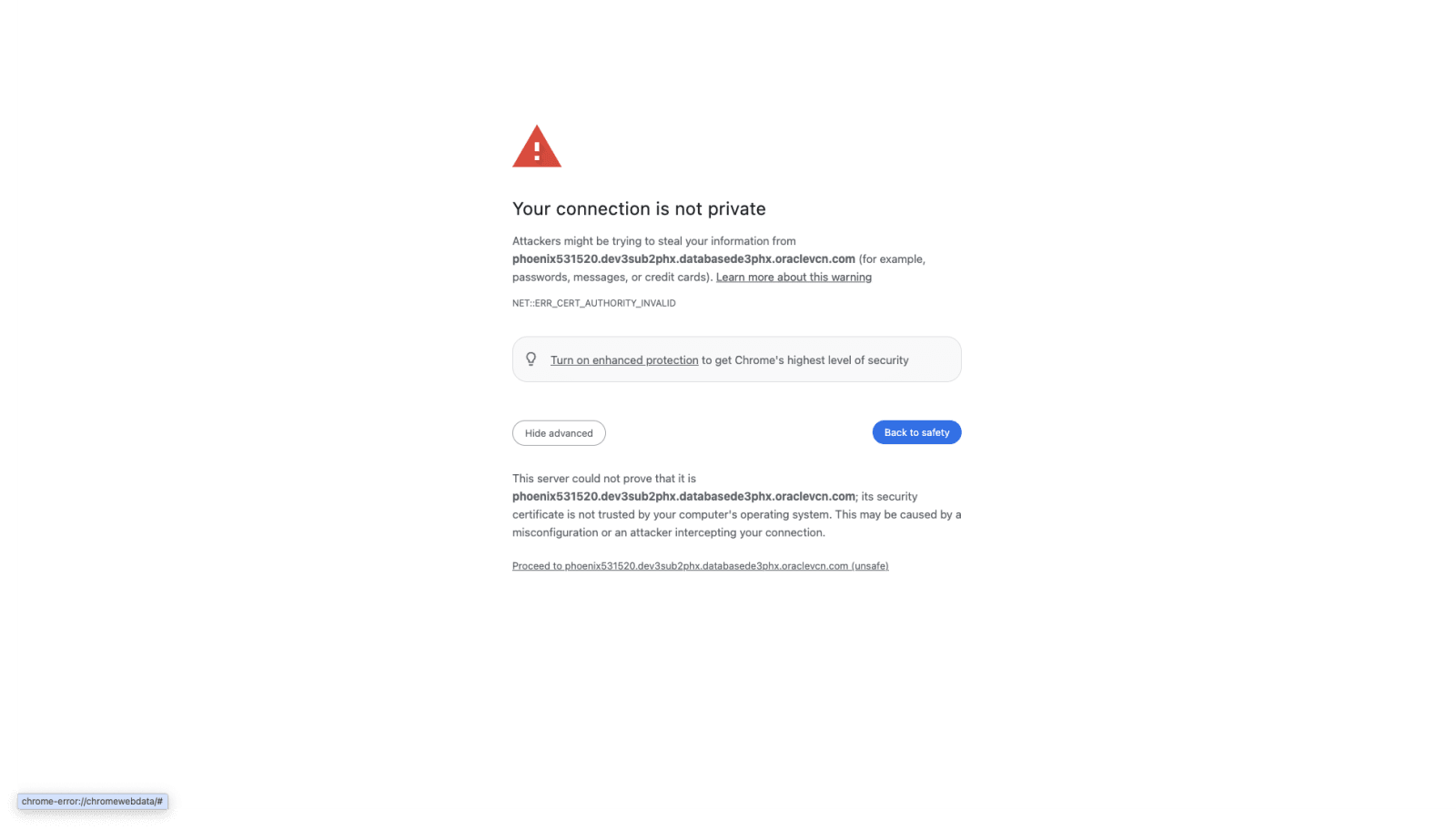

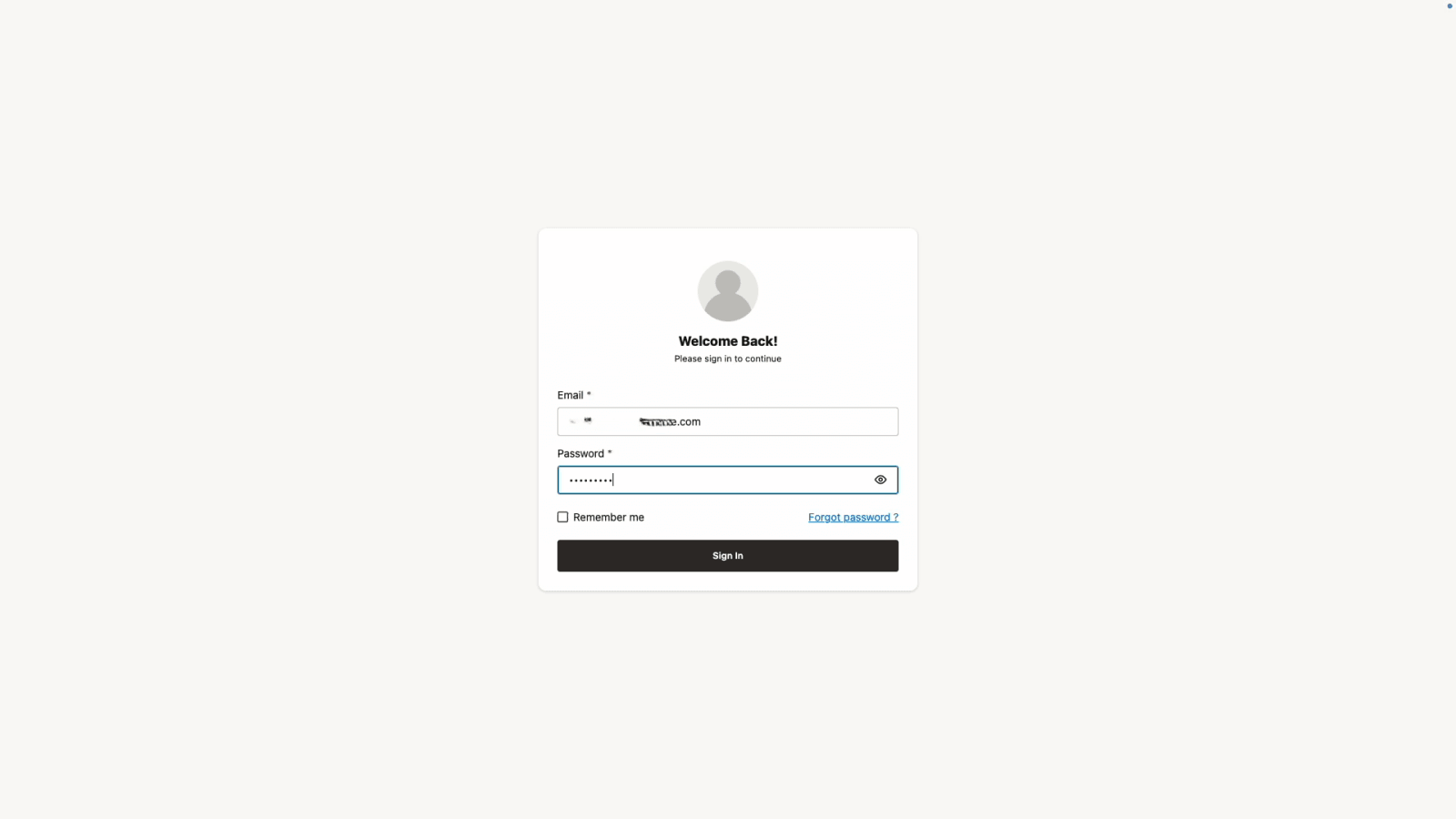

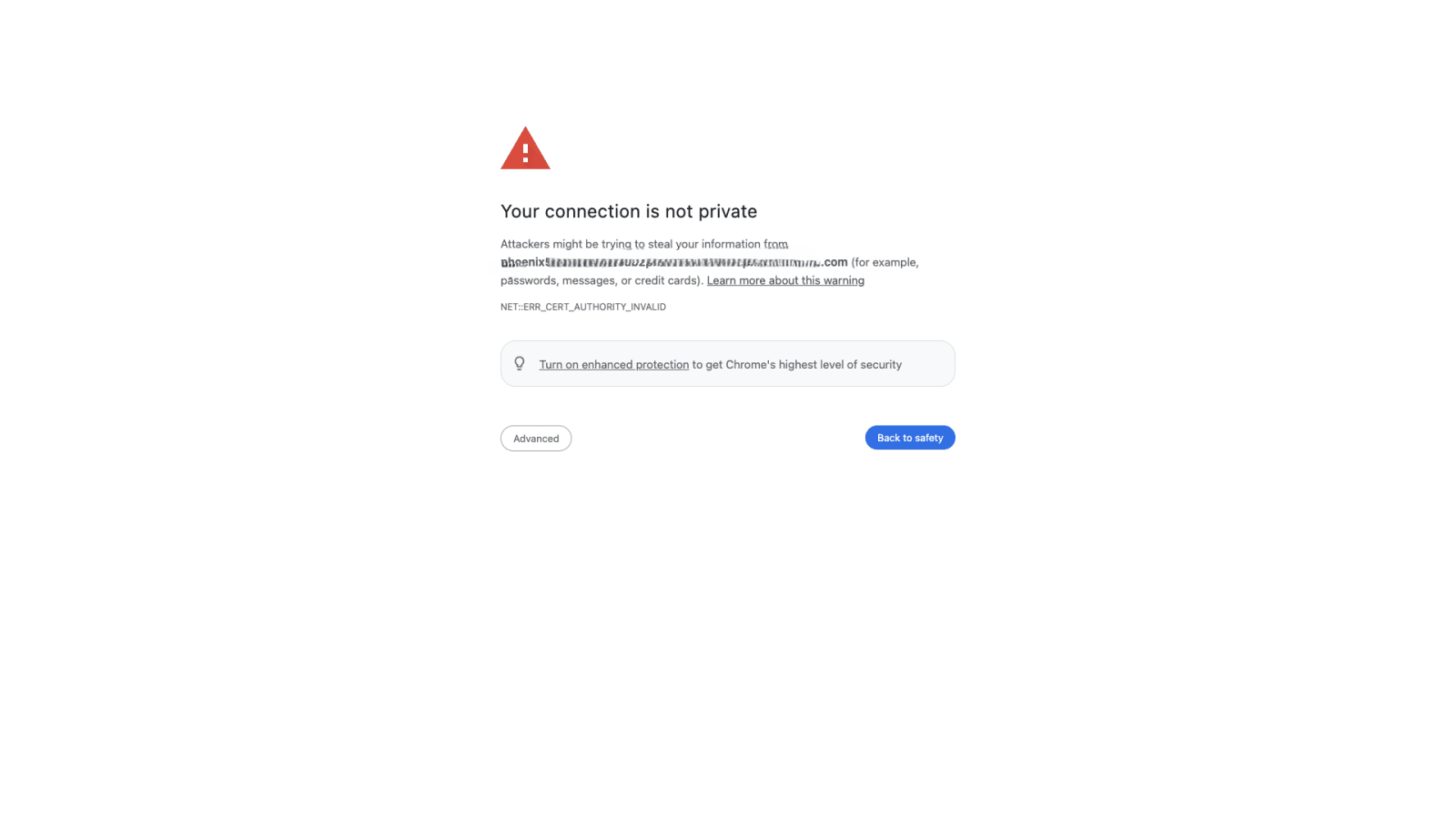

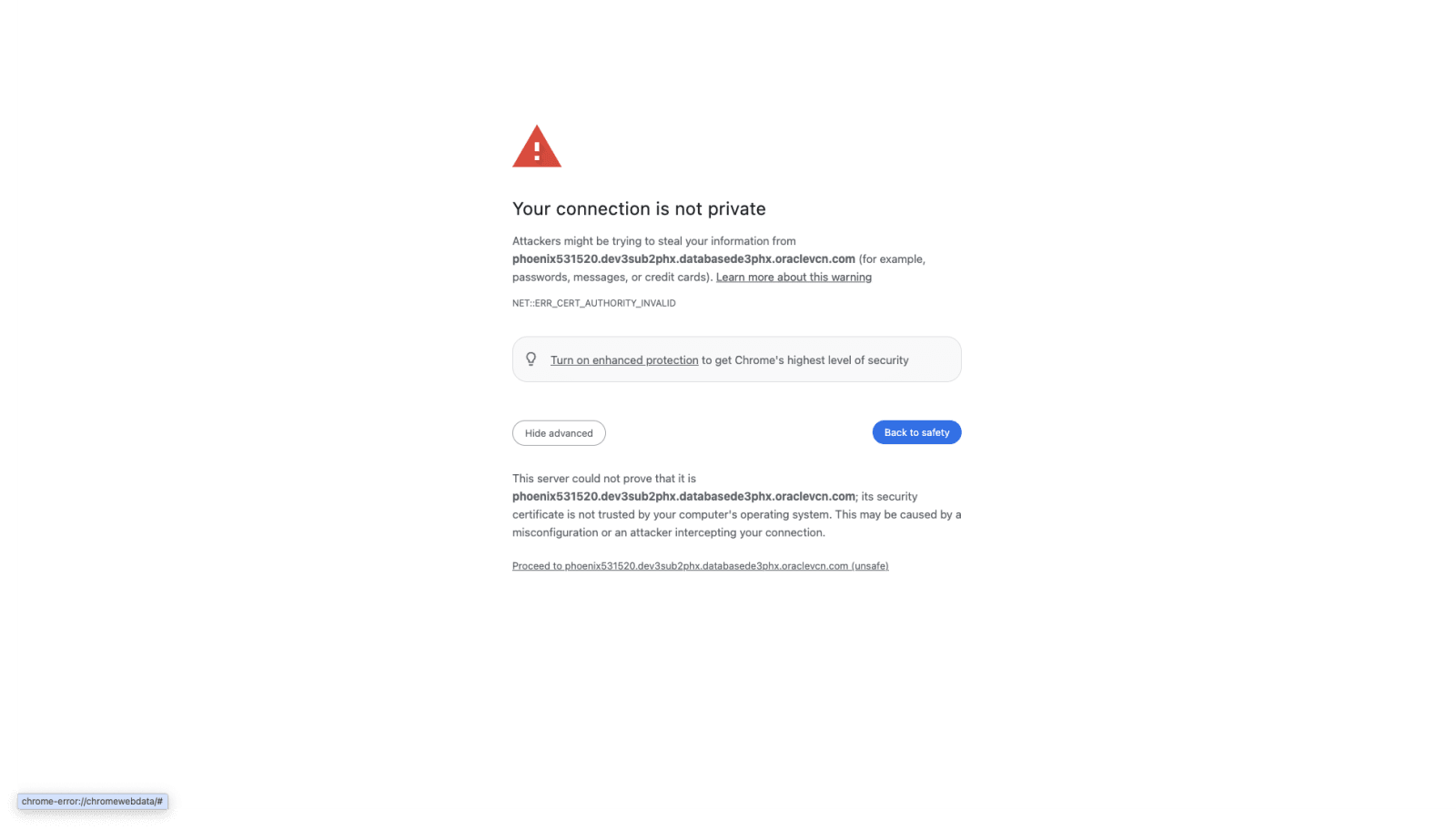

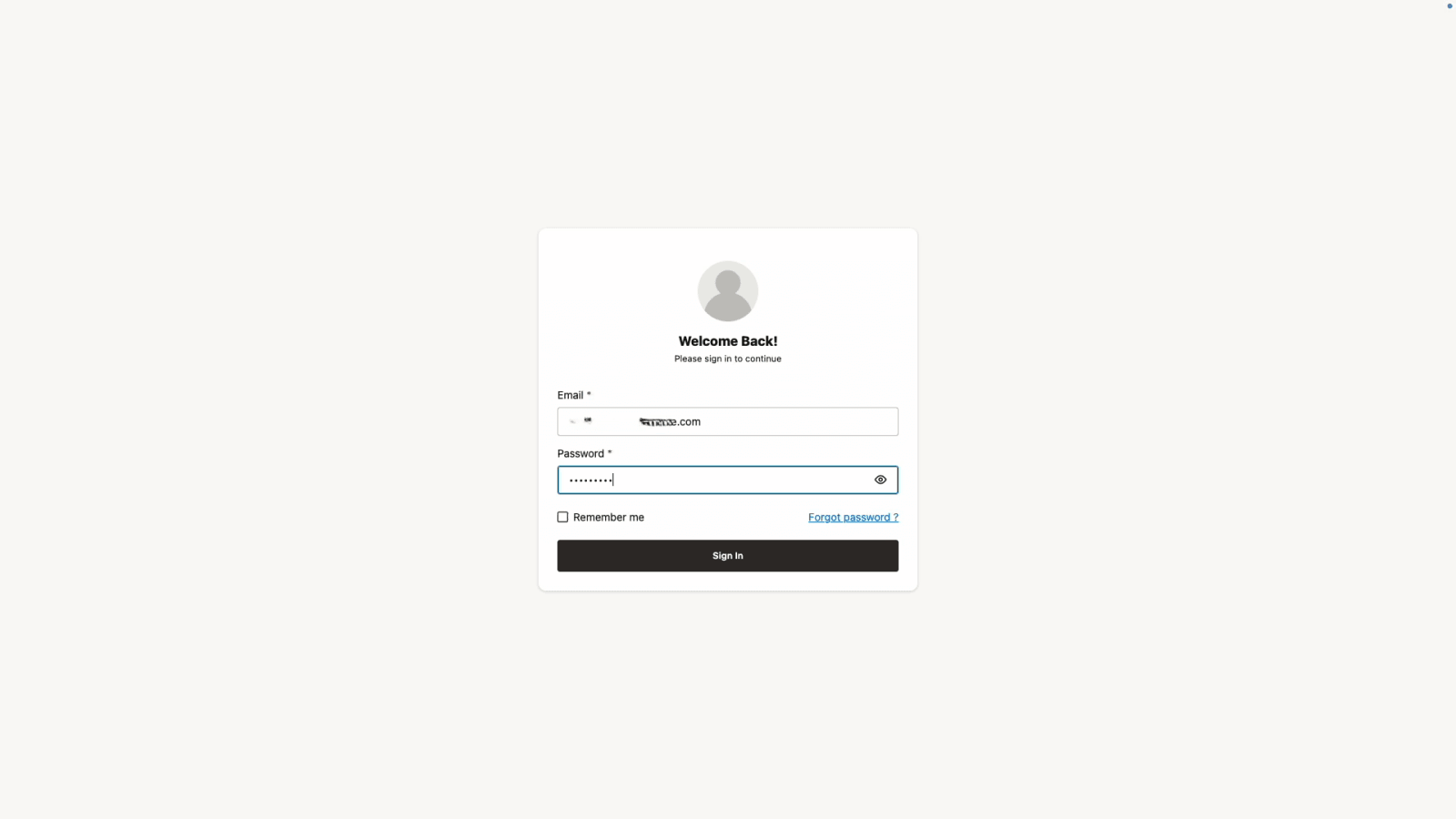

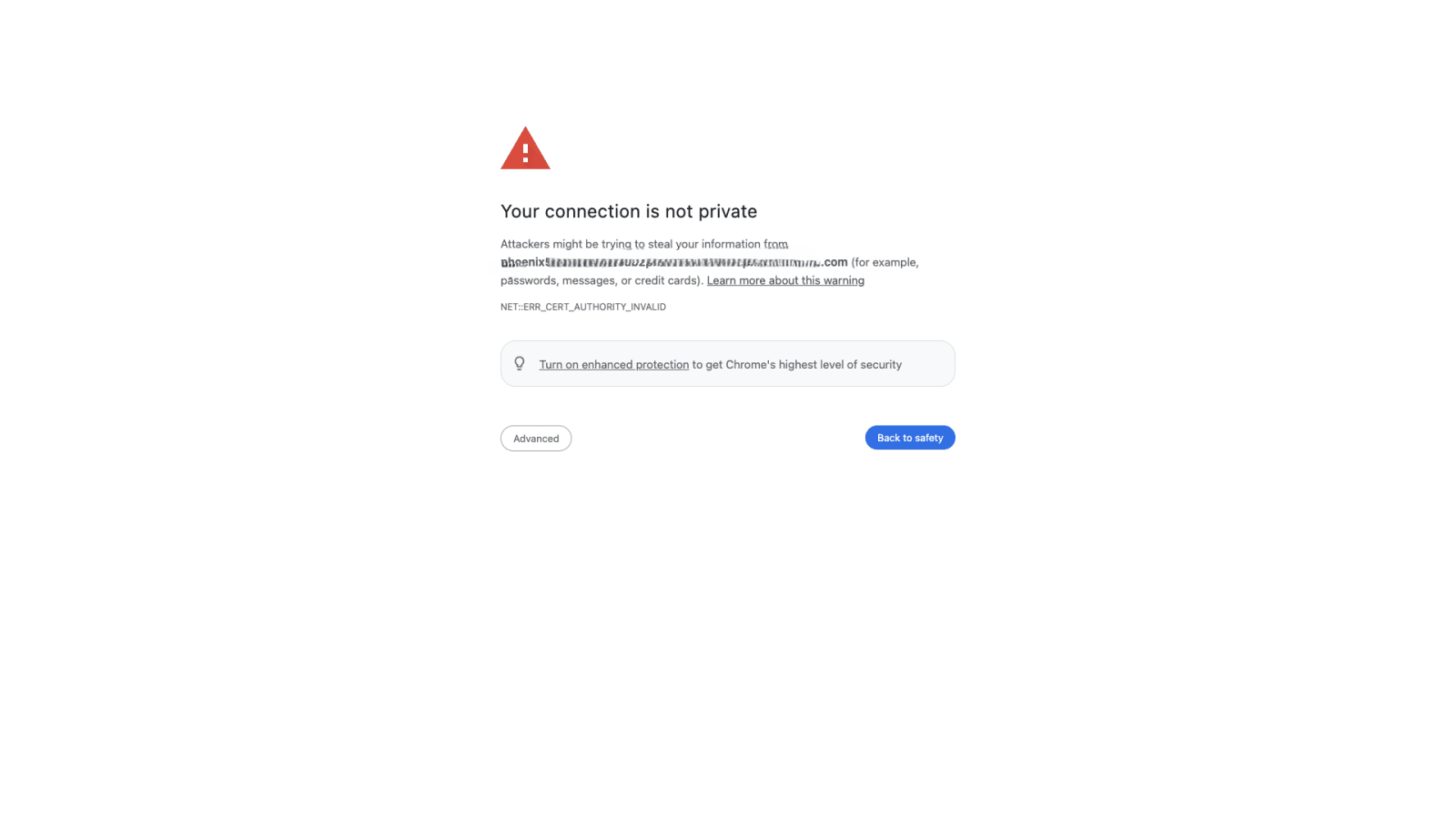

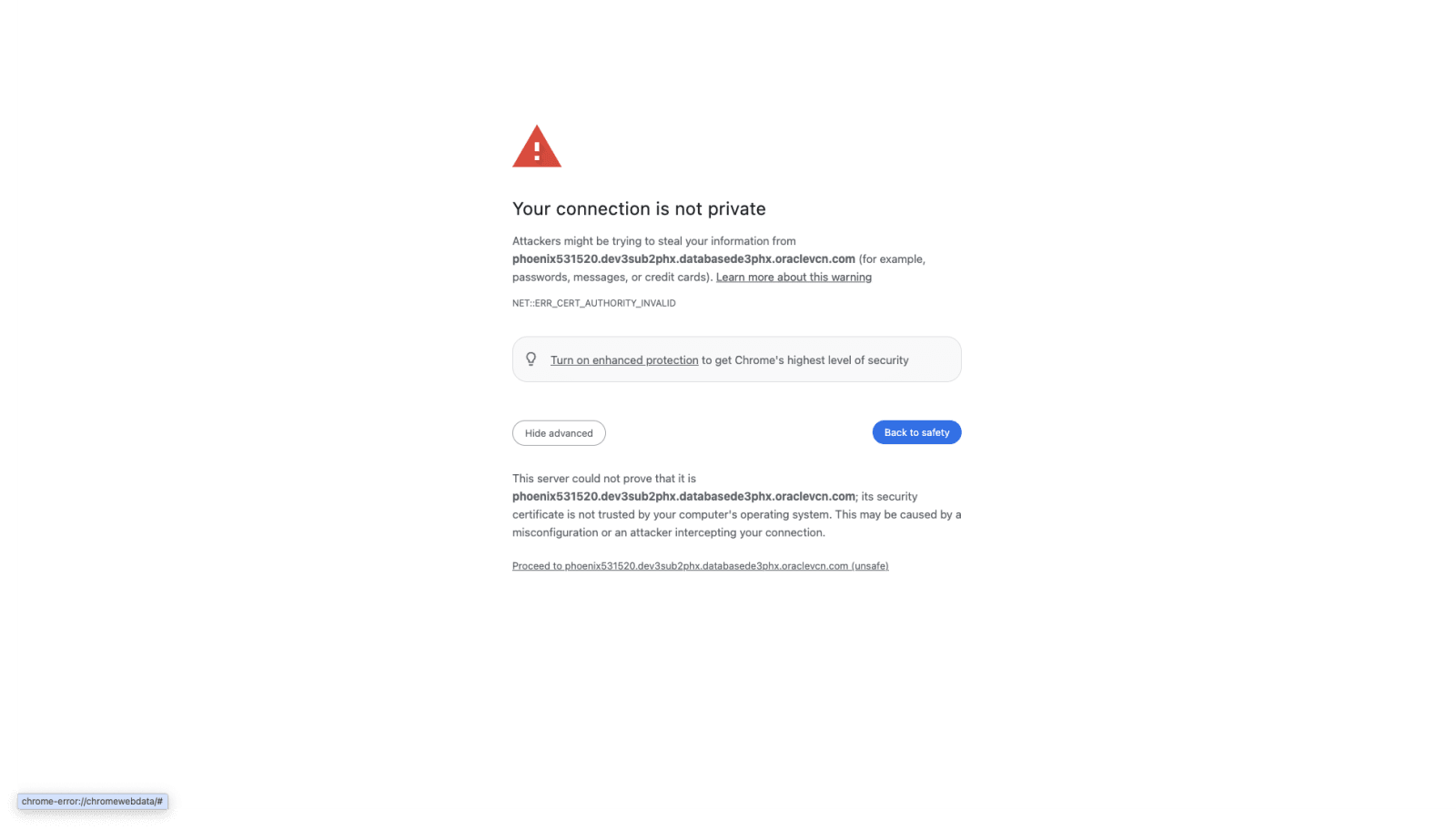

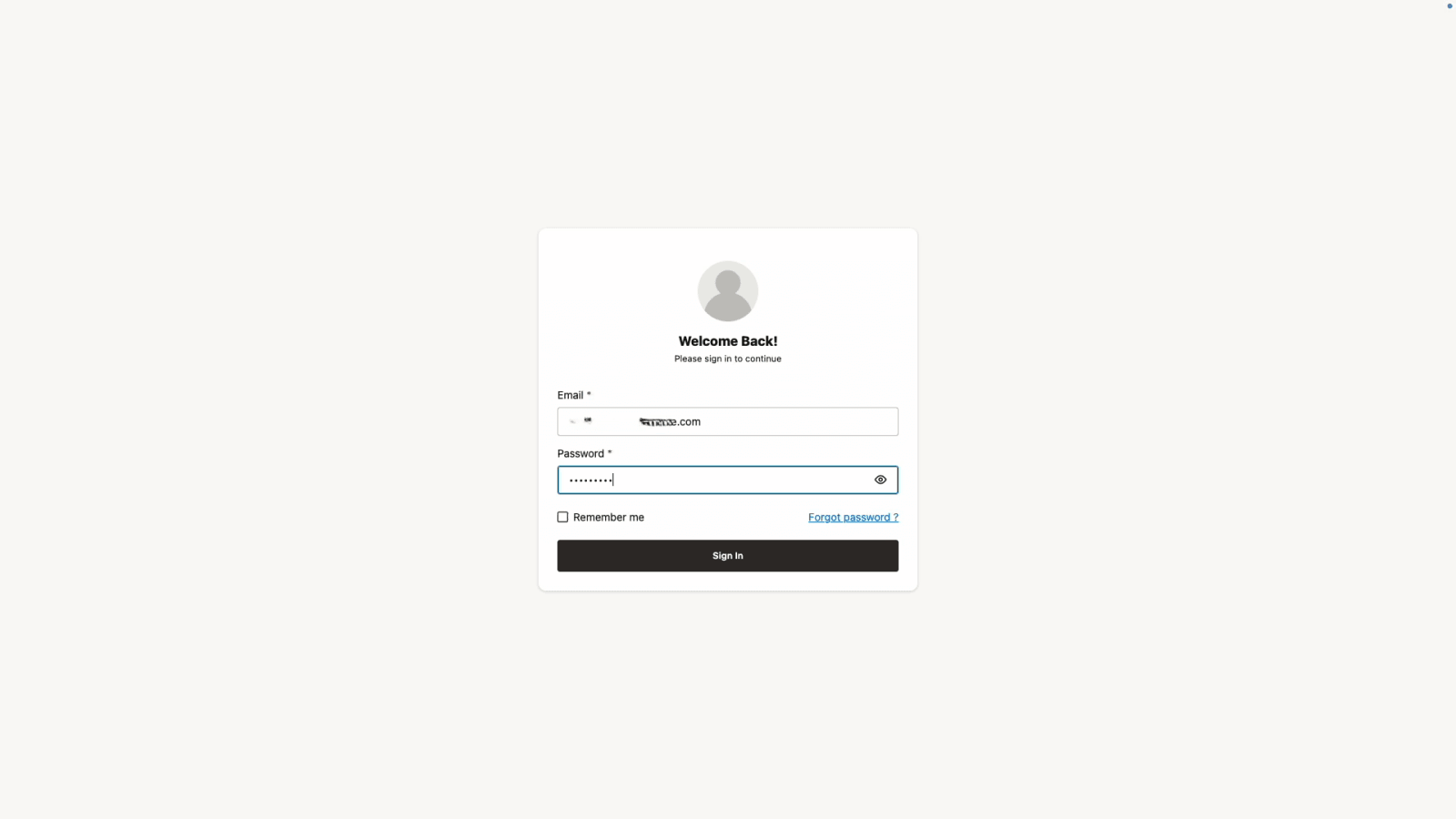

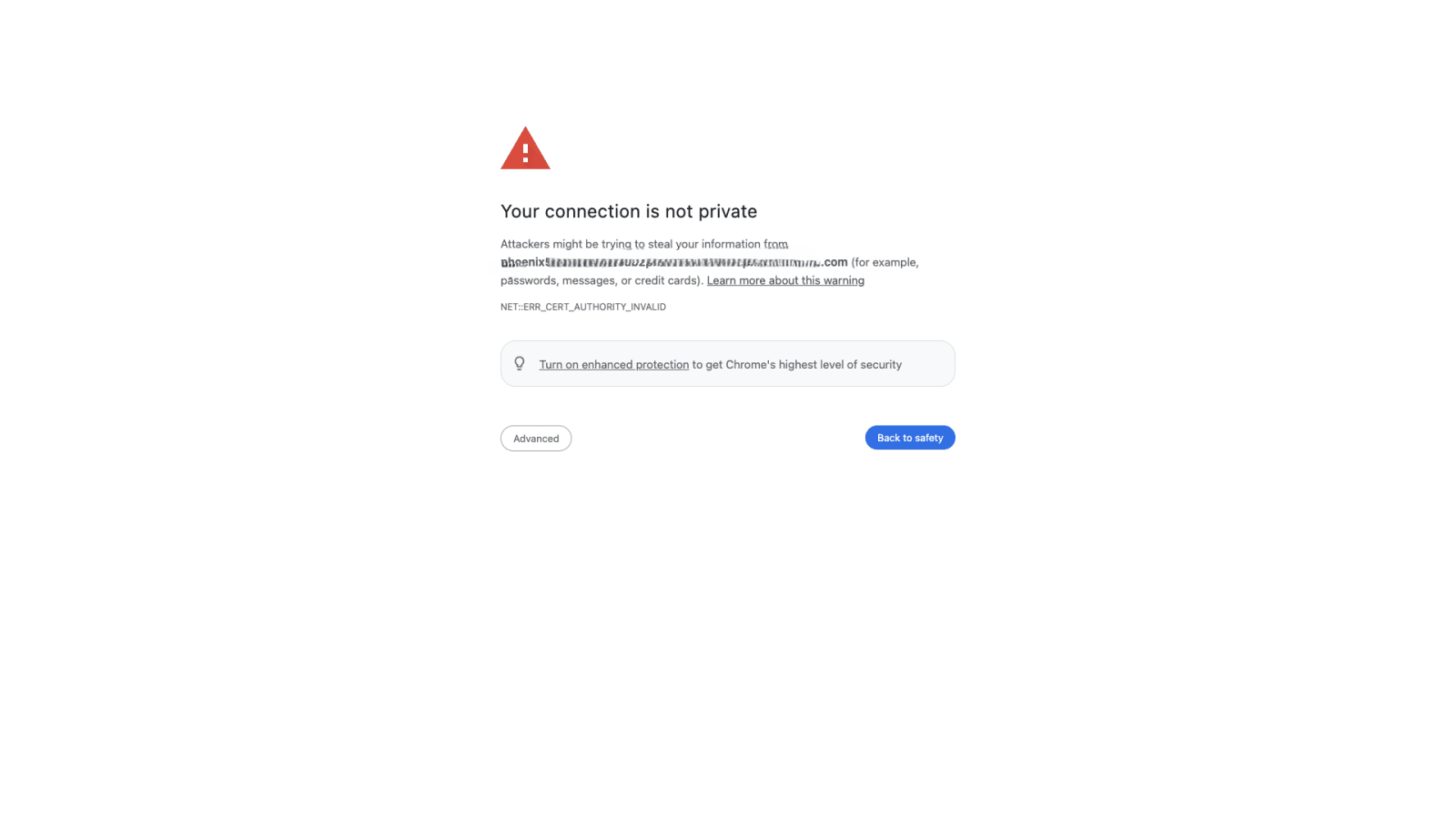

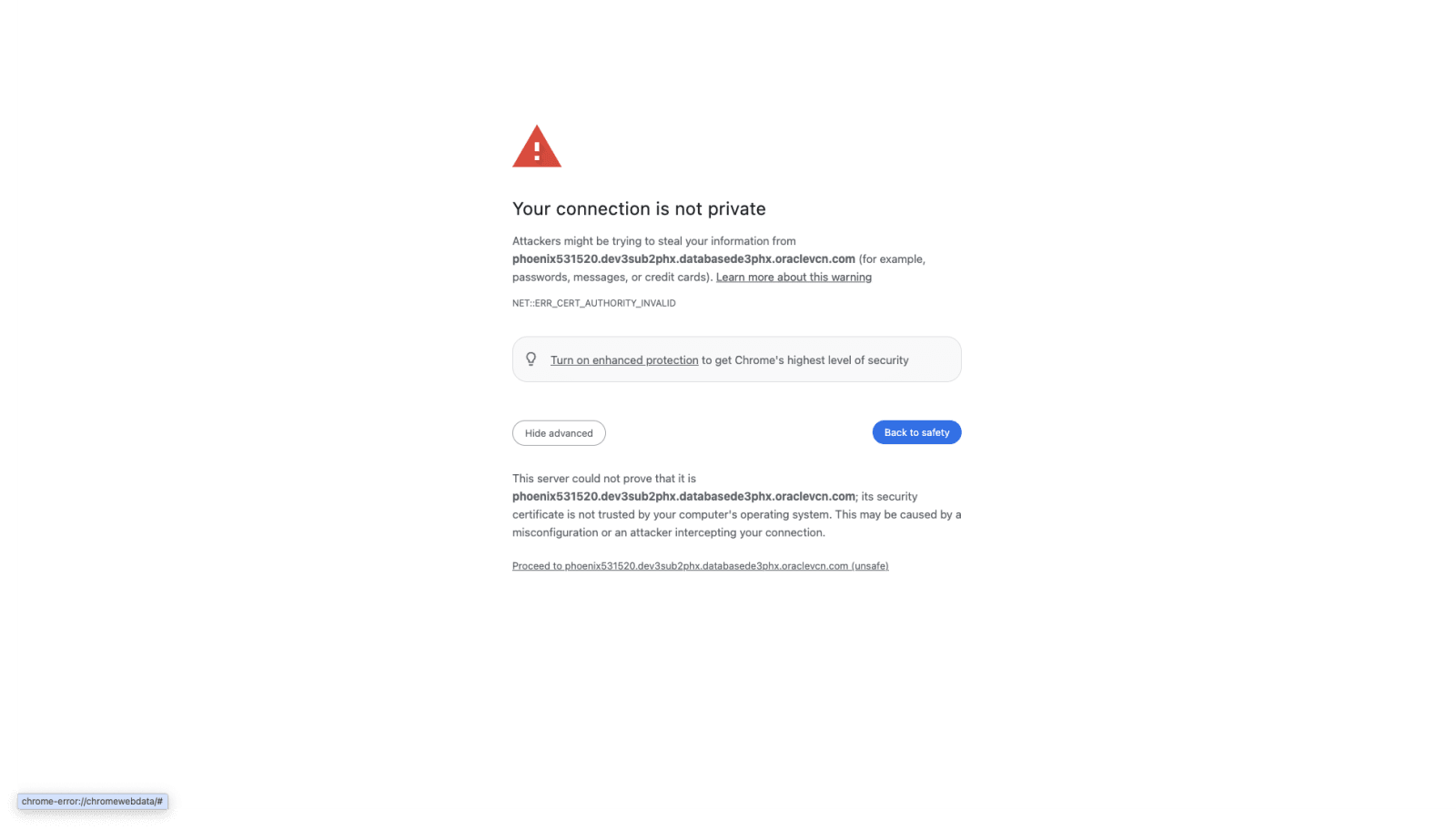

Copy and paste the application URL into a browser. You will see a page like the one below.

Click ‘Advanced’ and then ‘Proceed to host’.

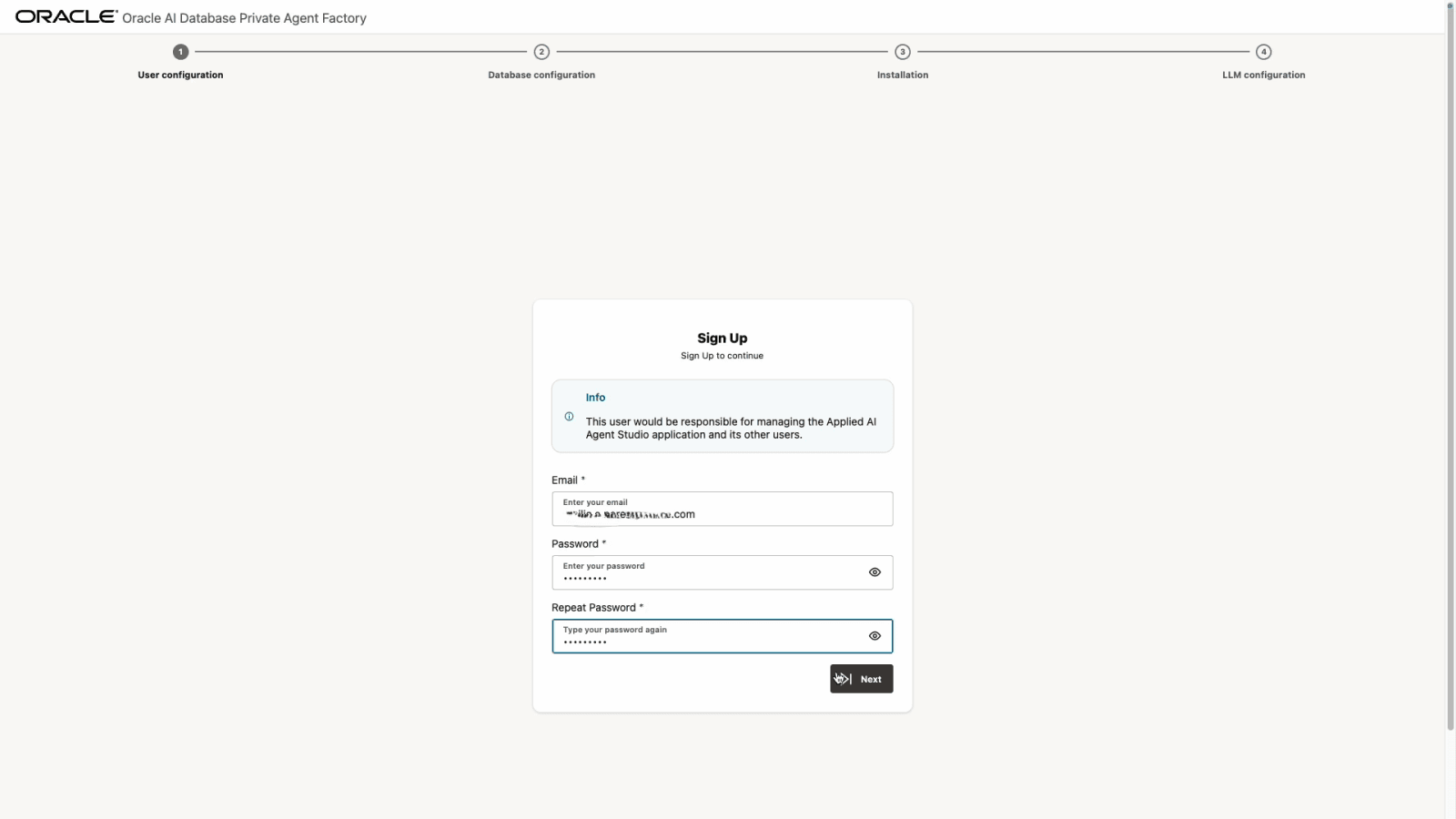

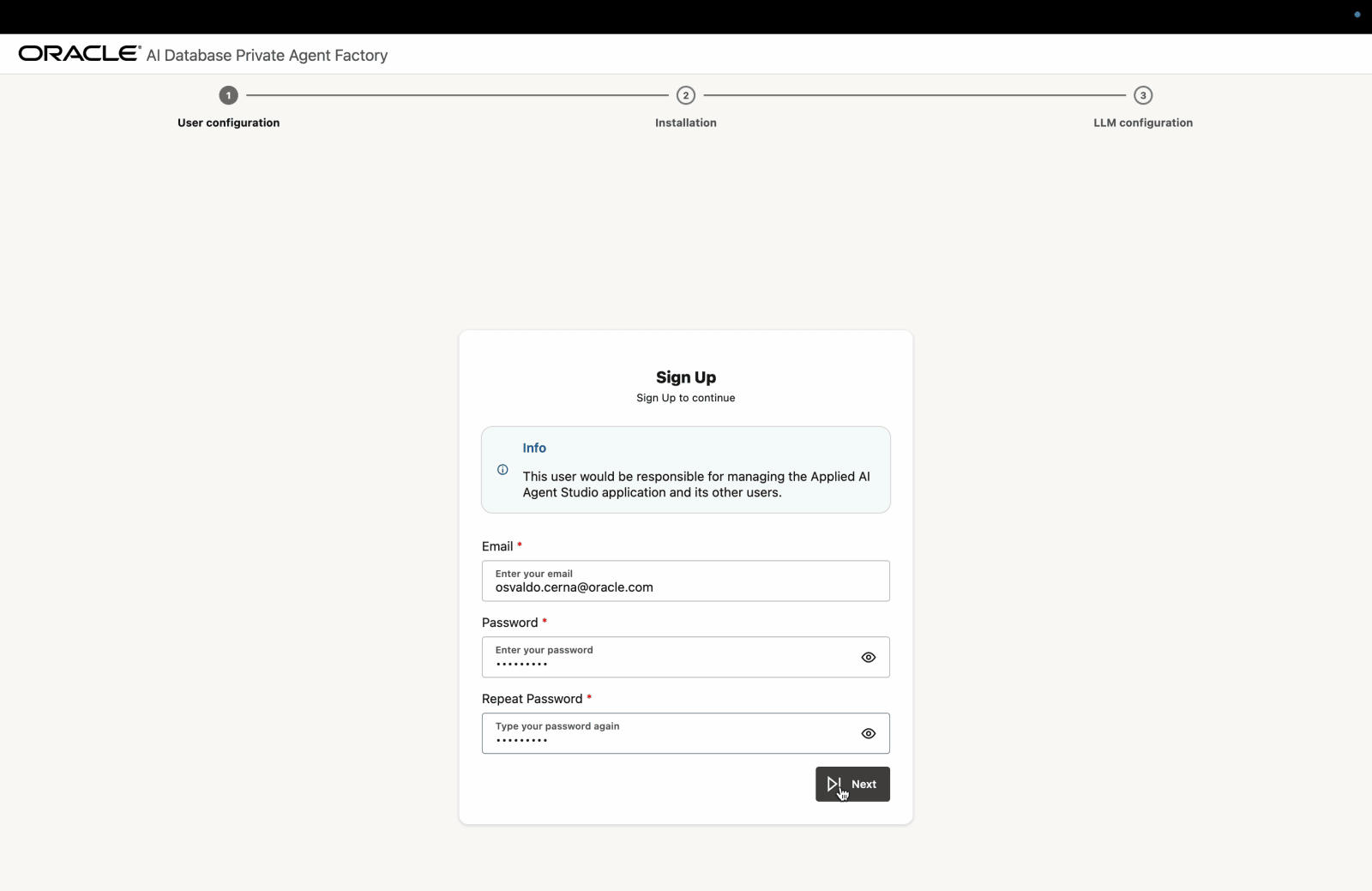

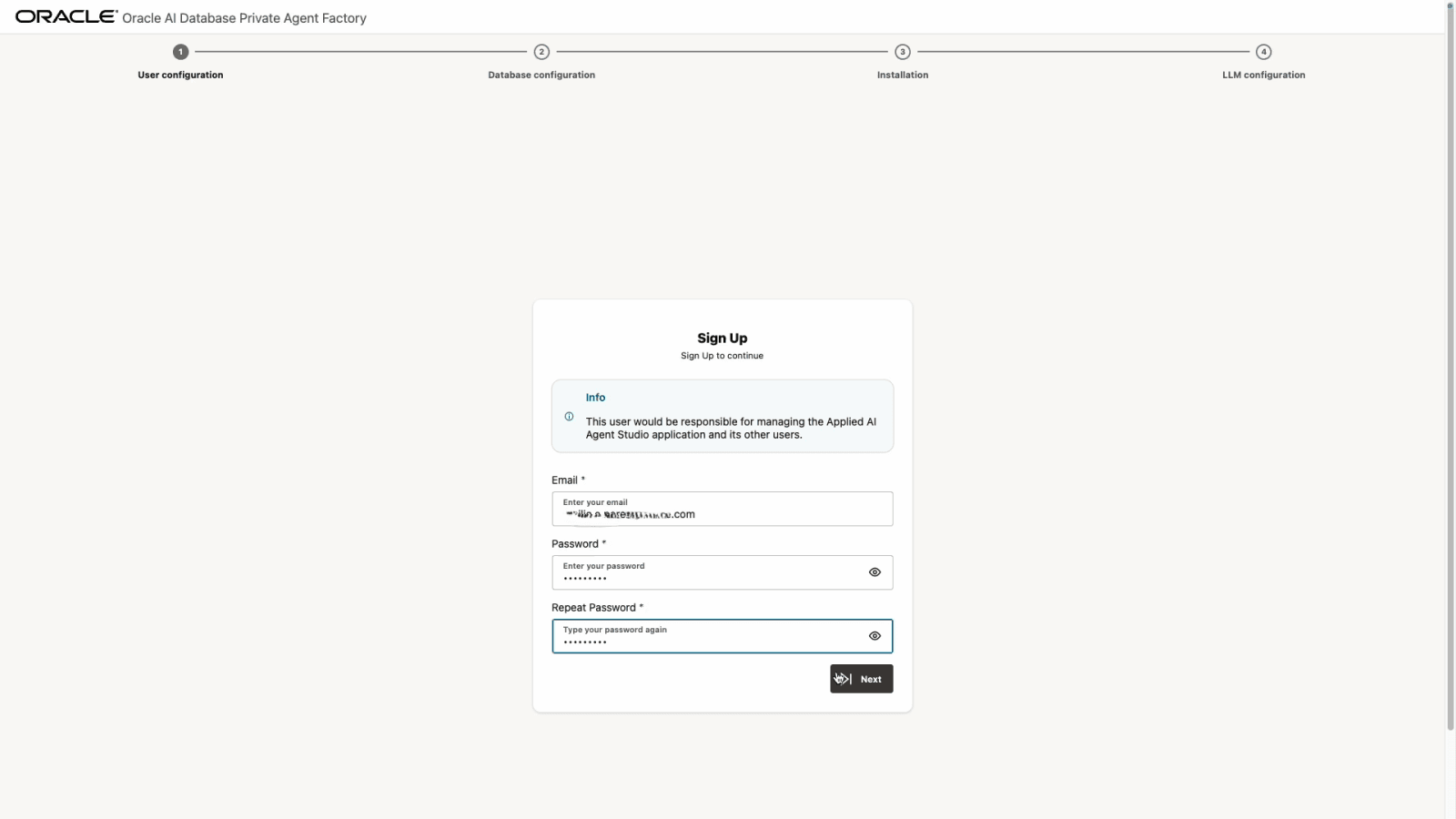

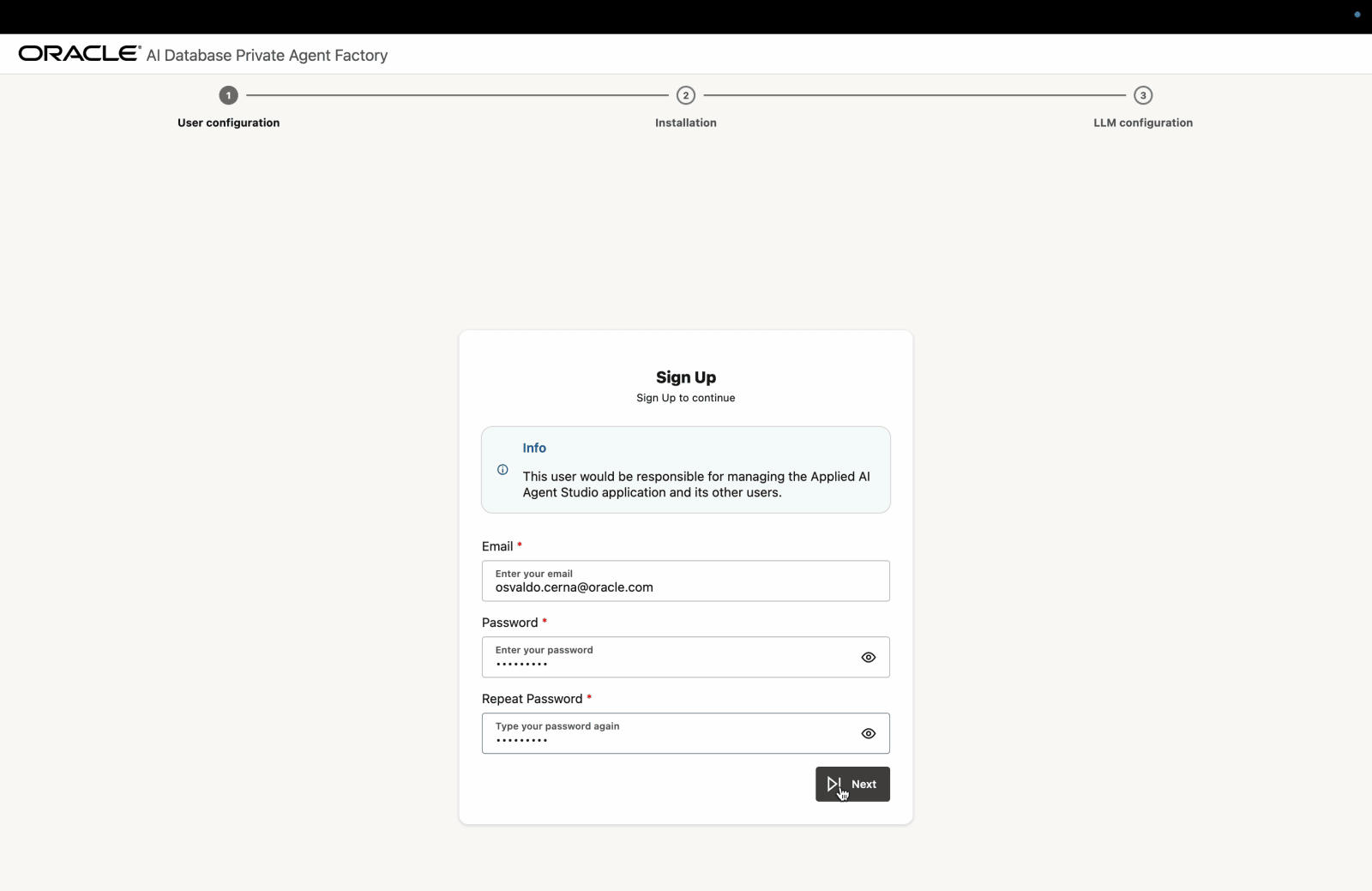

Configure User

Set up an email and a secure password.

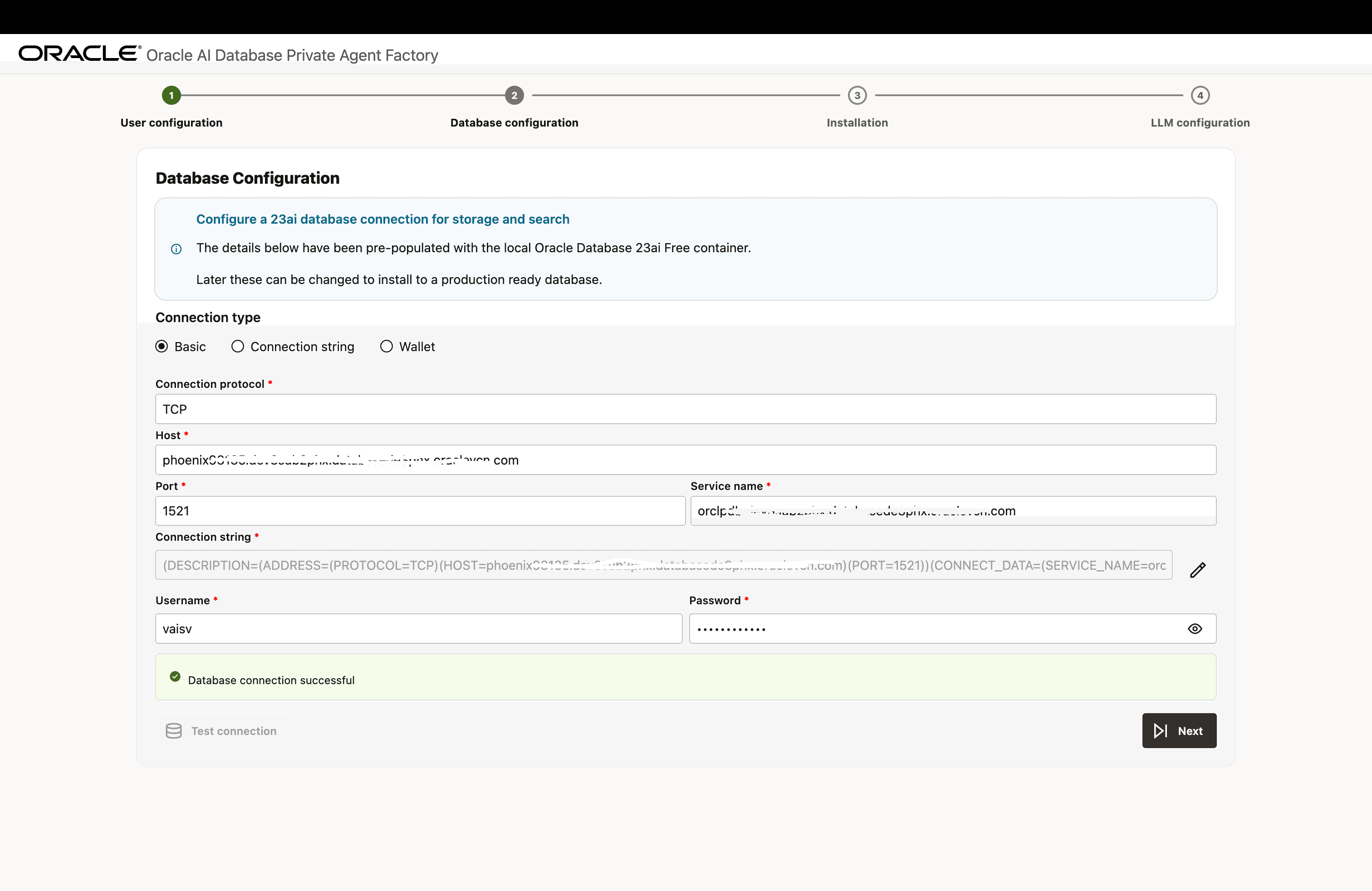

Configure Database Connection


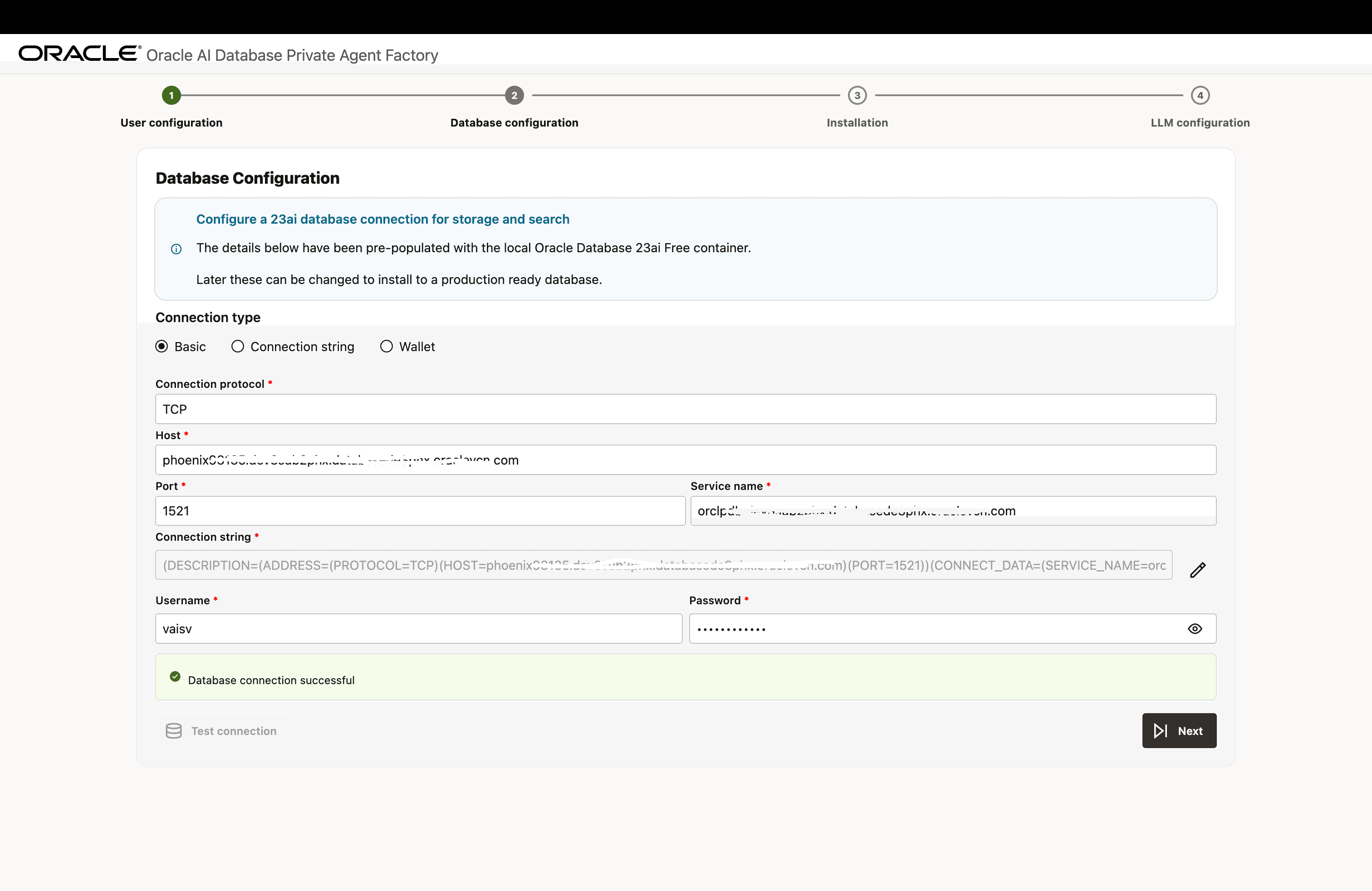

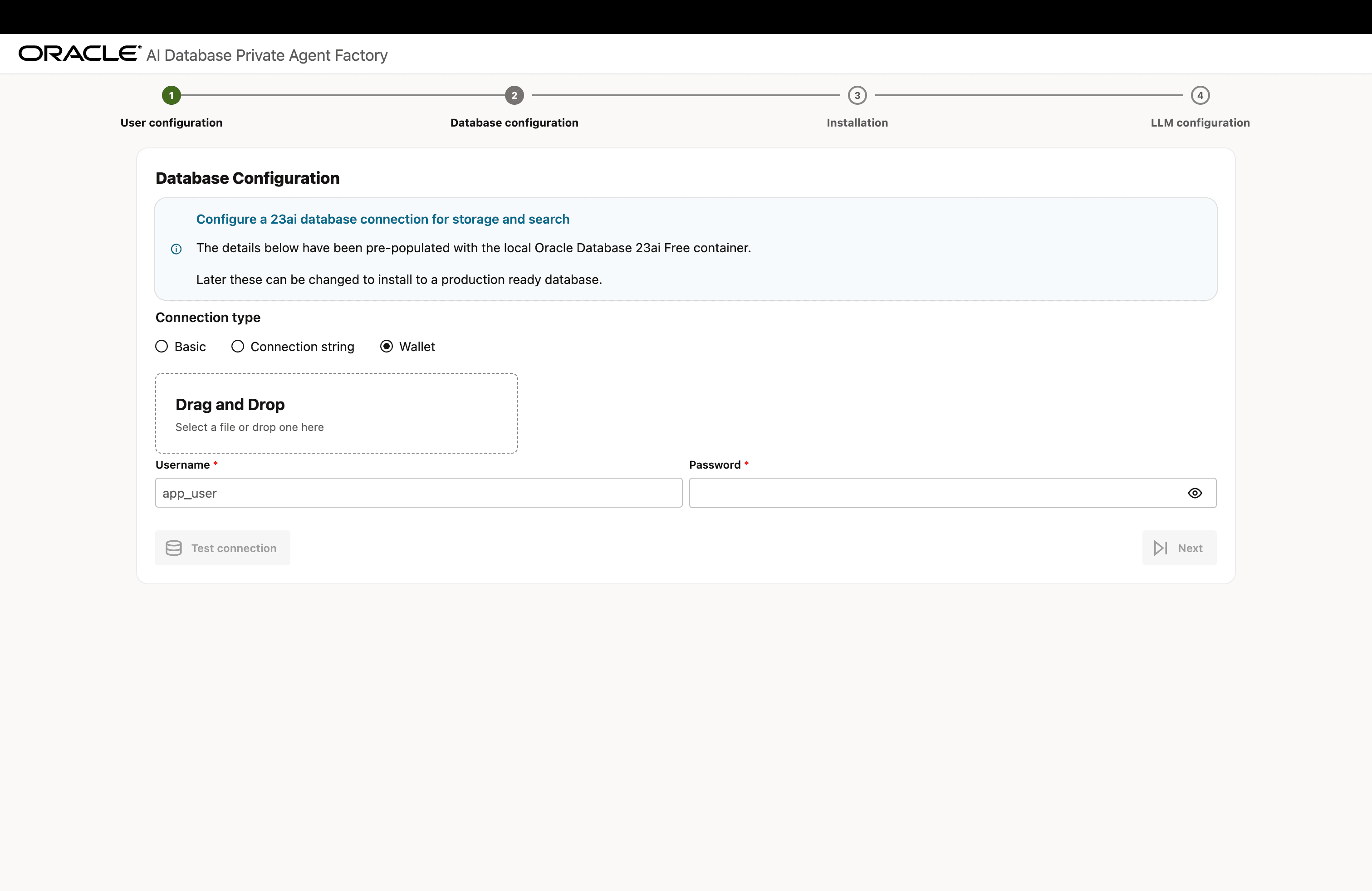

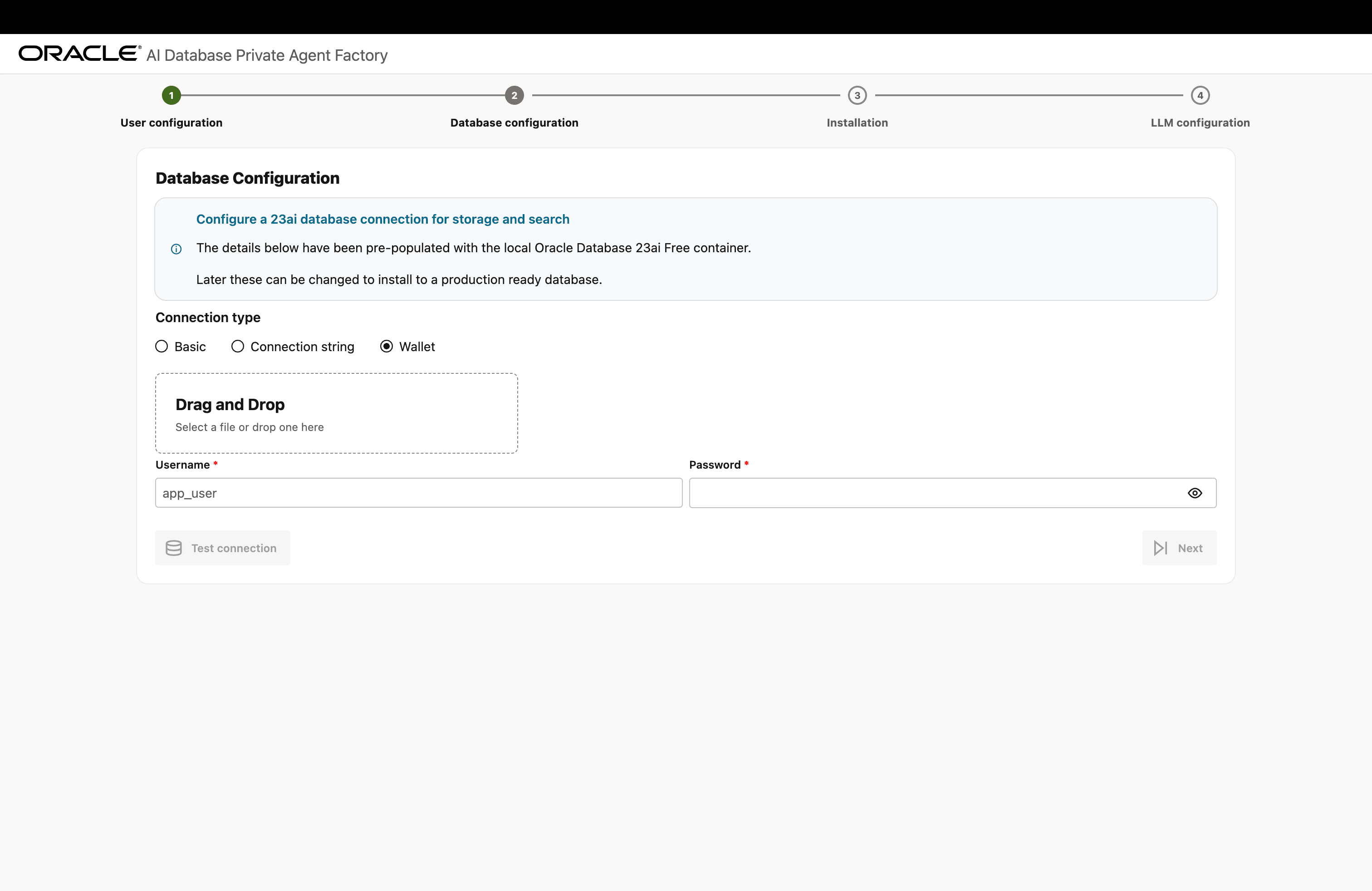

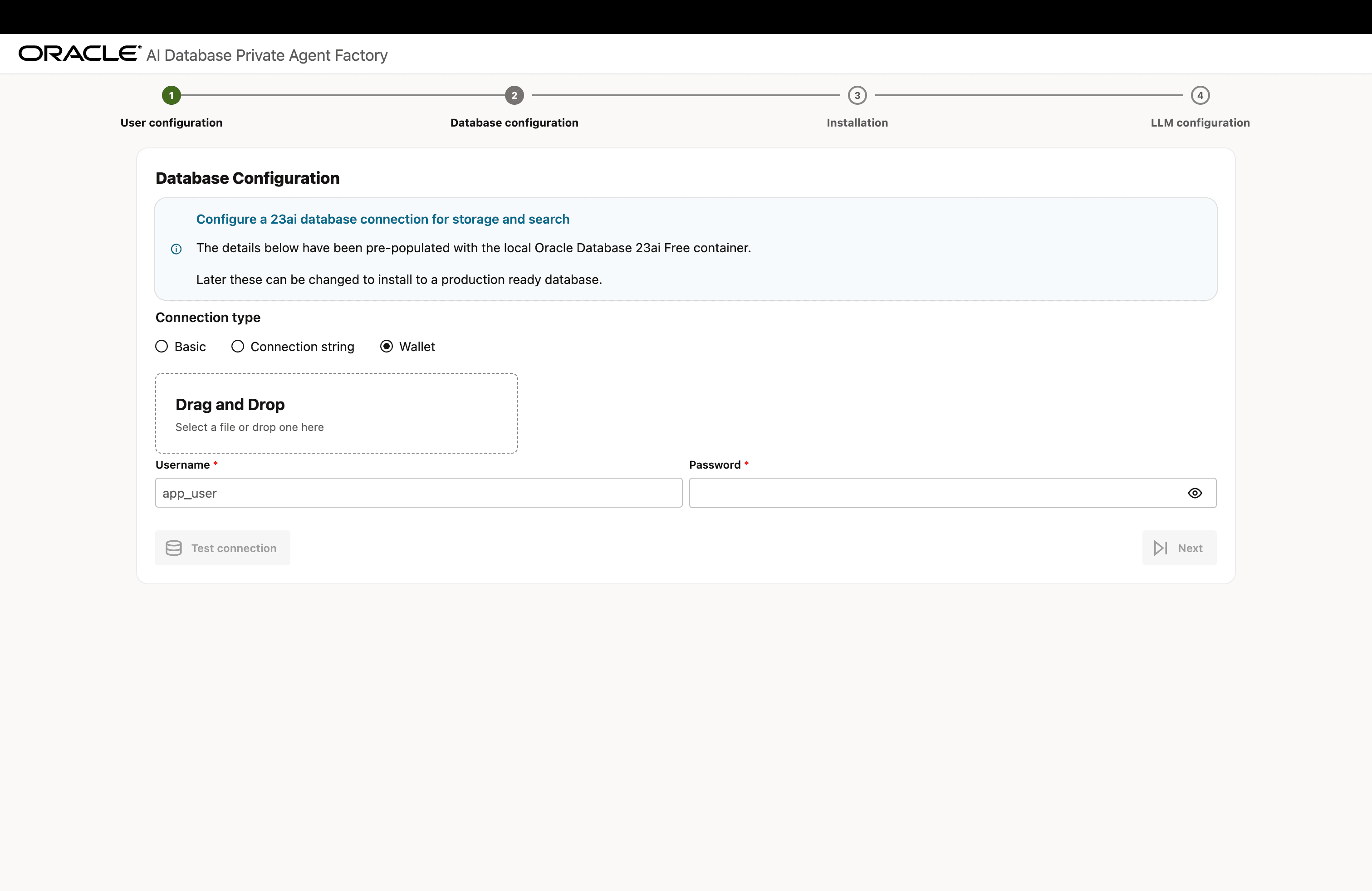

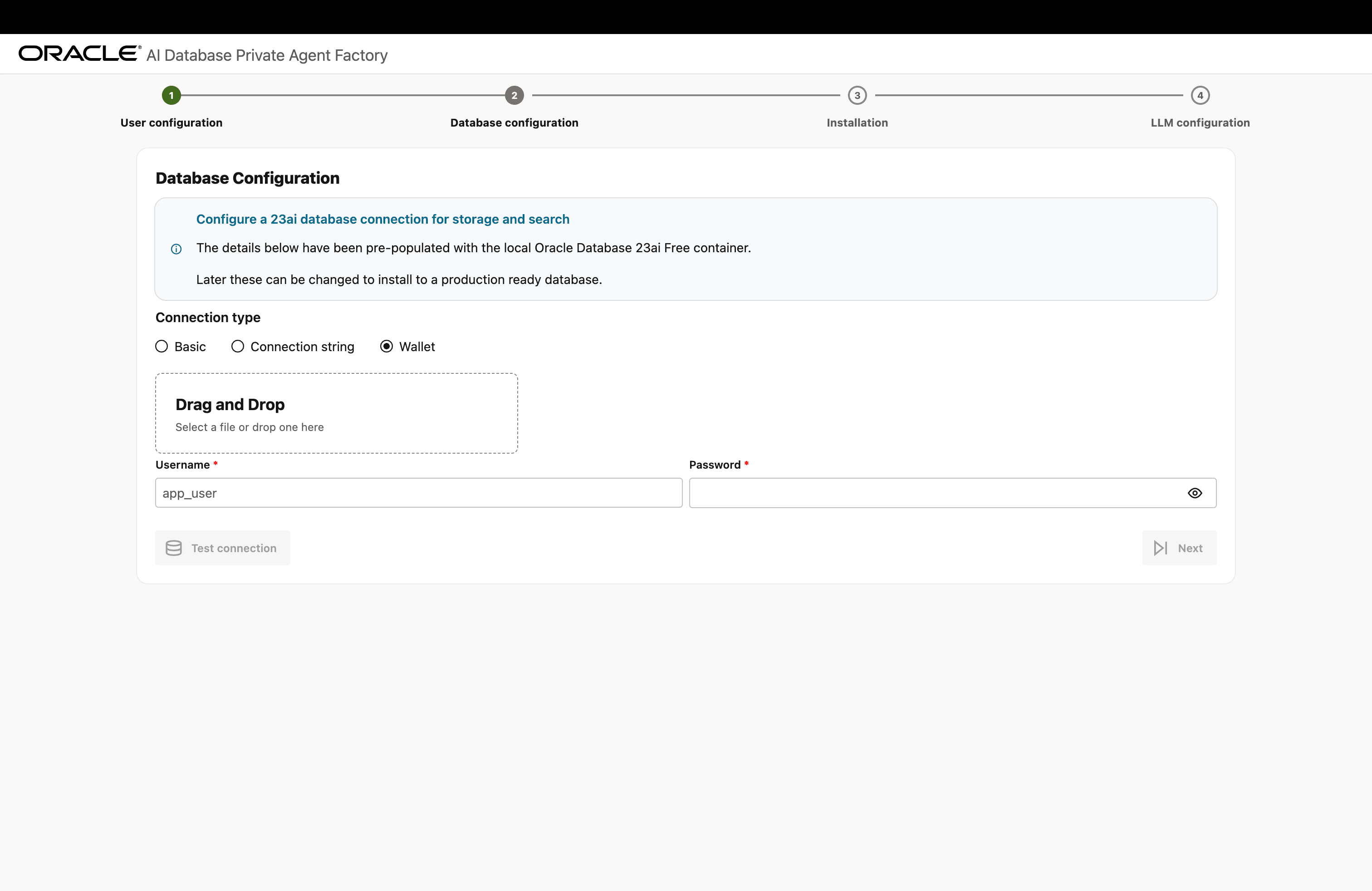

Provide your database connection details. You can enter the Oracle AI Database 26ai connection details directly, provide a connection string, or upload a database wallet file. In each case, also enter a database user and password.

- Basic: Enter all the connection details manually, along with the database user and password that you created earlier.

- Connection String: Enter the database connection string, along with the database user and password that you created earlier.

- Wallet: Upload a database wallet file and enter the database user and password that you created earlier.

To install the Knowledge Agent successfully, including the pre-built Knowledge Assistants, Agent Factory requires the database to access OCI Object Storage using Pre-Authenticated Request (PAR) files. Verify whether `WALLET_LOCATION` or `ENCRYPTION_WALLET_LOCATION` is specified in the `sqlnet.ora` file on the database server. If either is configured, make sure that the required OCI certificates are in the wallet, and add them if they are missing. This enables access to Object Storage files and prevents Knowledge Agent installation failures. If the database does not use a wallet, you can skip this step.

Click here to download the certificates you need to add to the wallet. The following example assumes that the SSL wallet is located at `/u01/app/oracle/dcs/commonstore/wallets/ssl` and that you have unpacked the certificates in `/home/oracle/dbc`:

```bash
#!/bin/bash
WALLET_DIR=/u01/app/oracle/dcs/commonstore/wallets/ssl

# Check whether the certificates are already listed under "Trusted Certificates"
orapki wallet display -wallet "$WALLET_DIR"

# Add the certificates if they are missing
for cert in /home/oracle/dbc/DigiCert*cer; do
  orapki wallet add -wallet "$WALLET_DIR" -trusted_cert -cert "$cert" -pwd <SSL Wallet password>
done
```

See Create SSL Wallet with Certificates for more details.
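To decide from the command line whether this step applies, you can search `sqlnet.ora` for either parameter. This sketch assumes the file is at `$TNS_ADMIN/sqlnet.ora`, falling back to `$ORACLE_HOME/network/admin/sqlnet.ora`; pass the path explicitly if your server keeps it elsewhere. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper; assumes sqlnet.ora lives under $TNS_ADMIN or
# $ORACLE_HOME/network/admin unless a path is passed explicitly.
# Returns 0 if WALLET_LOCATION or ENCRYPTION_WALLET_LOCATION is set,
# 1 if neither is set, and 2 if the file cannot be read.
sqlnet_uses_wallet() {
  local ora="${1:-${TNS_ADMIN:-$ORACLE_HOME/network/admin}/sqlnet.ora}"
  if [ ! -r "$ora" ]; then
    echo "cannot read $ora" >&2
    return 2
  fi
  # Match the parameter name at the start of a line (leading spaces allowed),
  # so commented-out lines starting with '#' are ignored.
  grep -Eqi '^[[:space:]]*(ENCRYPTION_)?WALLET_LOCATION[[:space:]]*=' "$ora"
}
```

For example, `if sqlnet_uses_wallet; then echo "Check the wallet certificates"; fi`.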


Click Test Connection to verify that the information entered is correct. Upon a successful connection, you will see a ‘Database connection successful’ notification. Click Next.

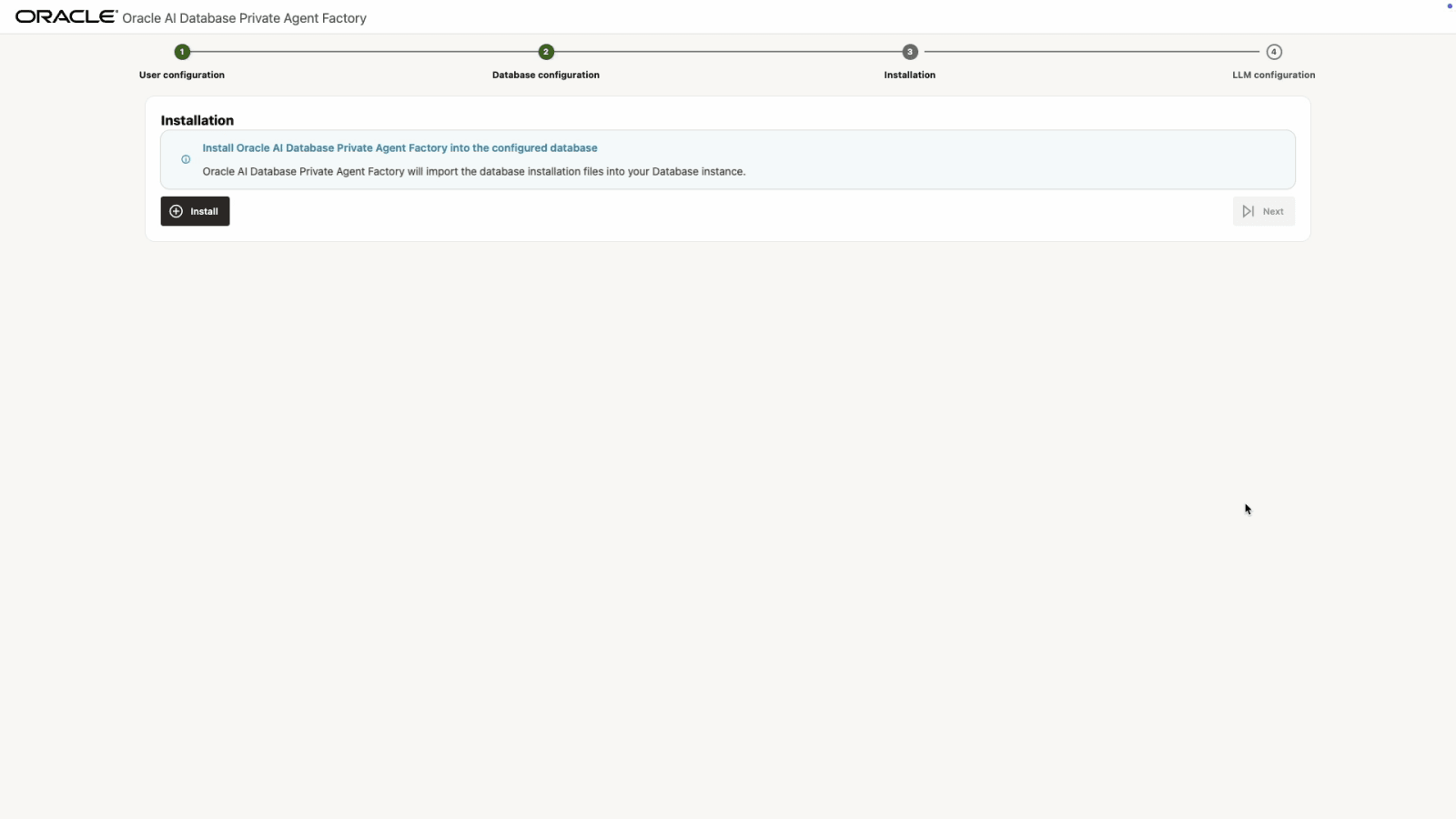

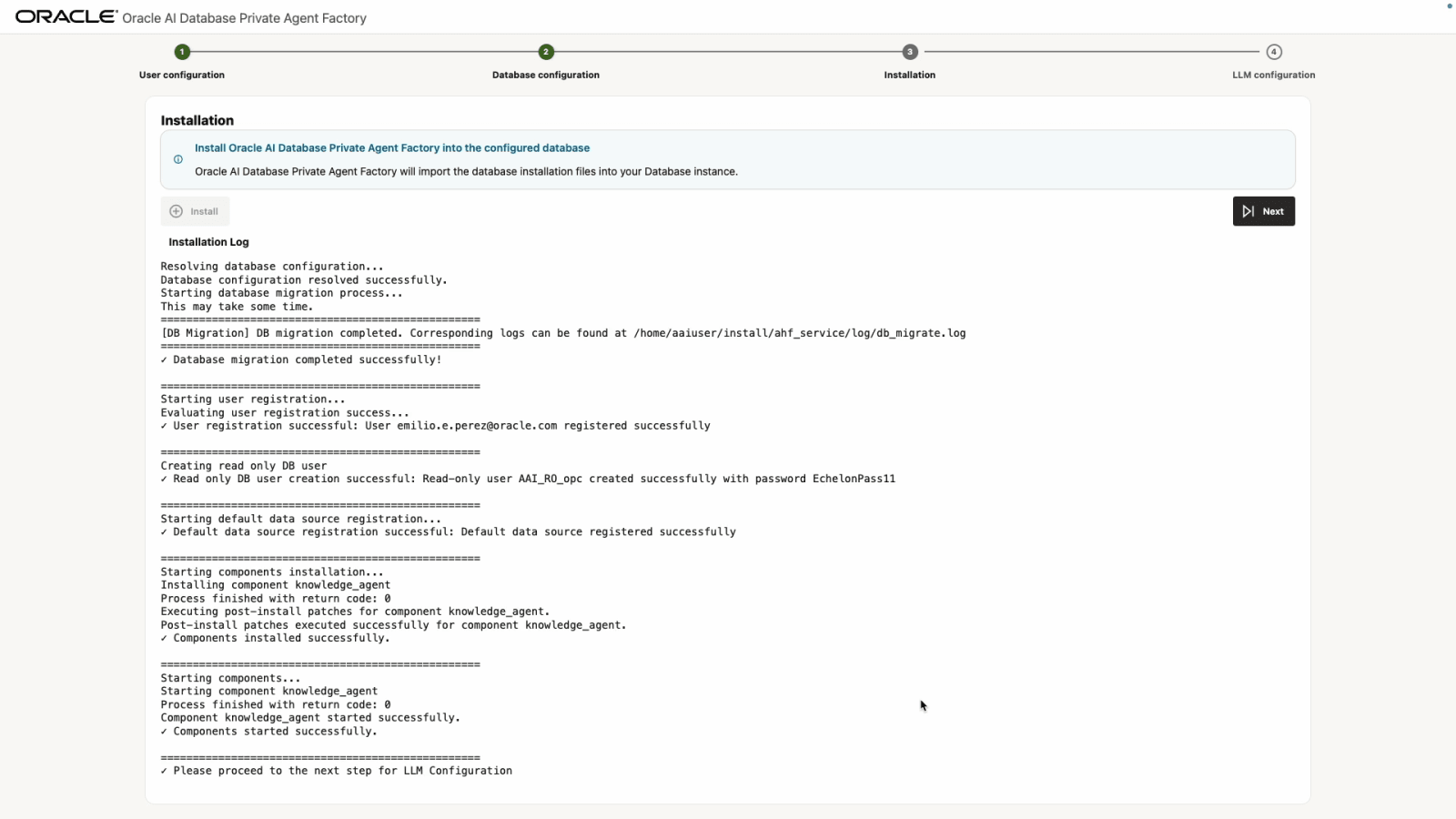

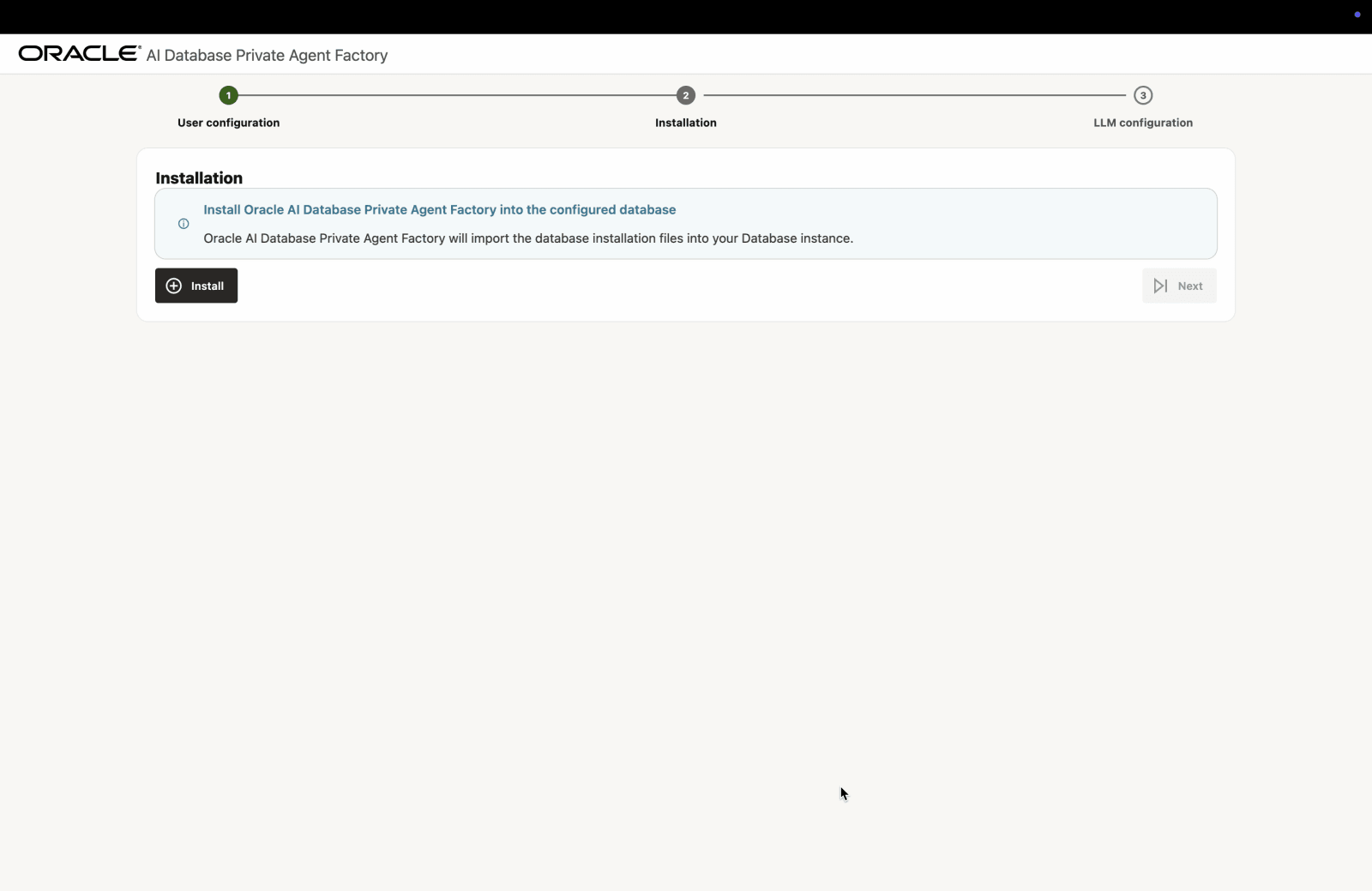

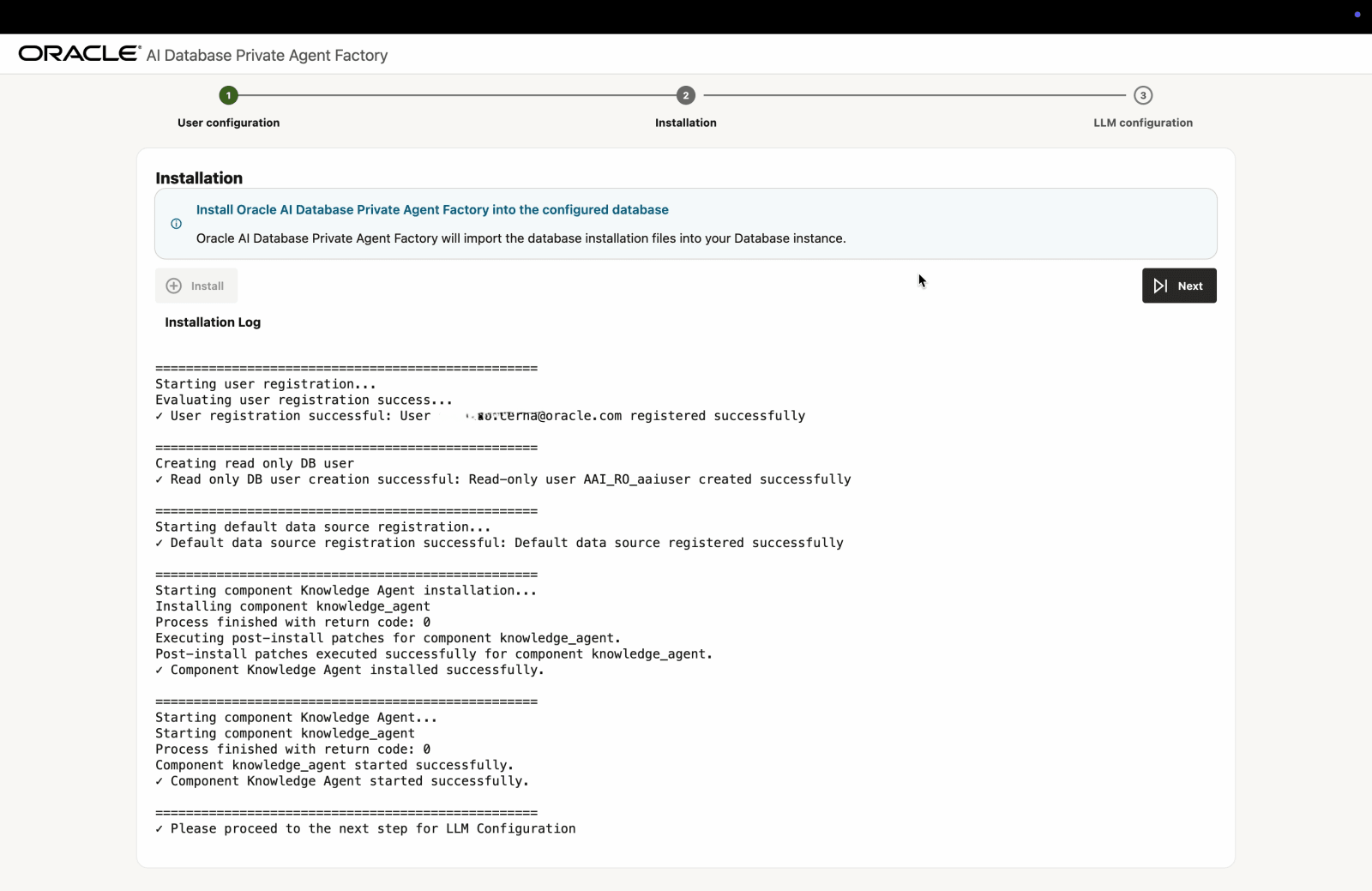

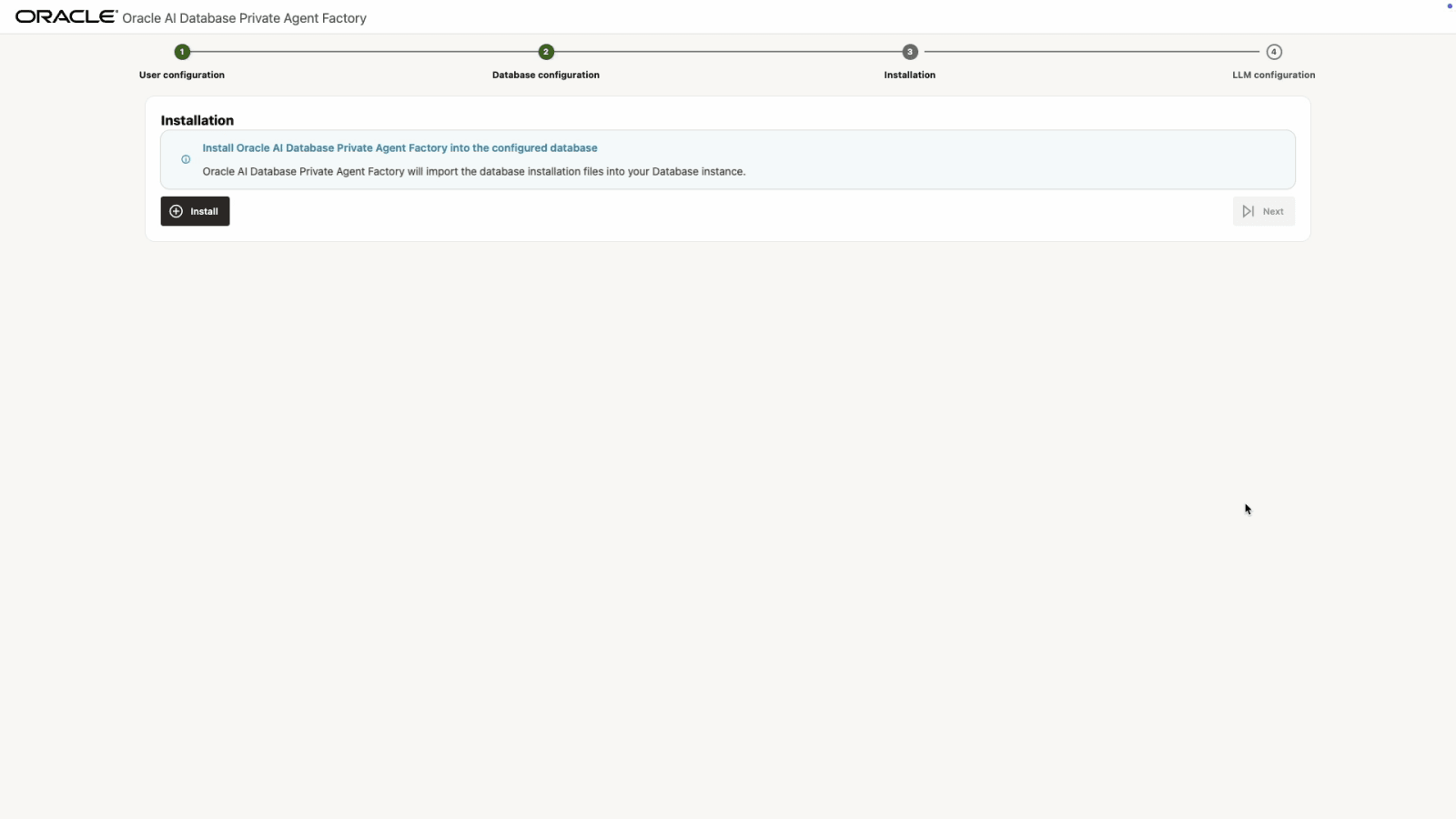

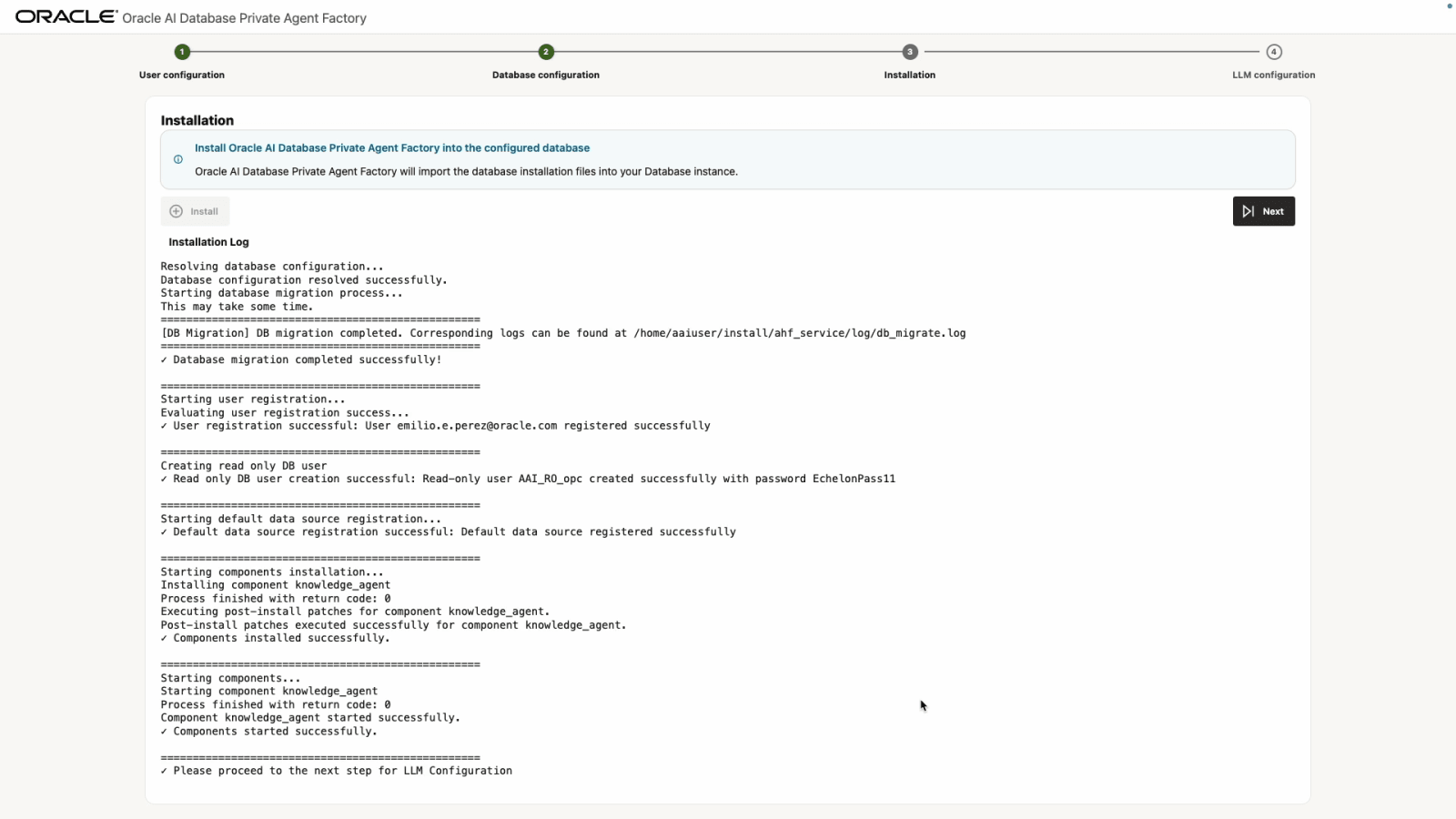

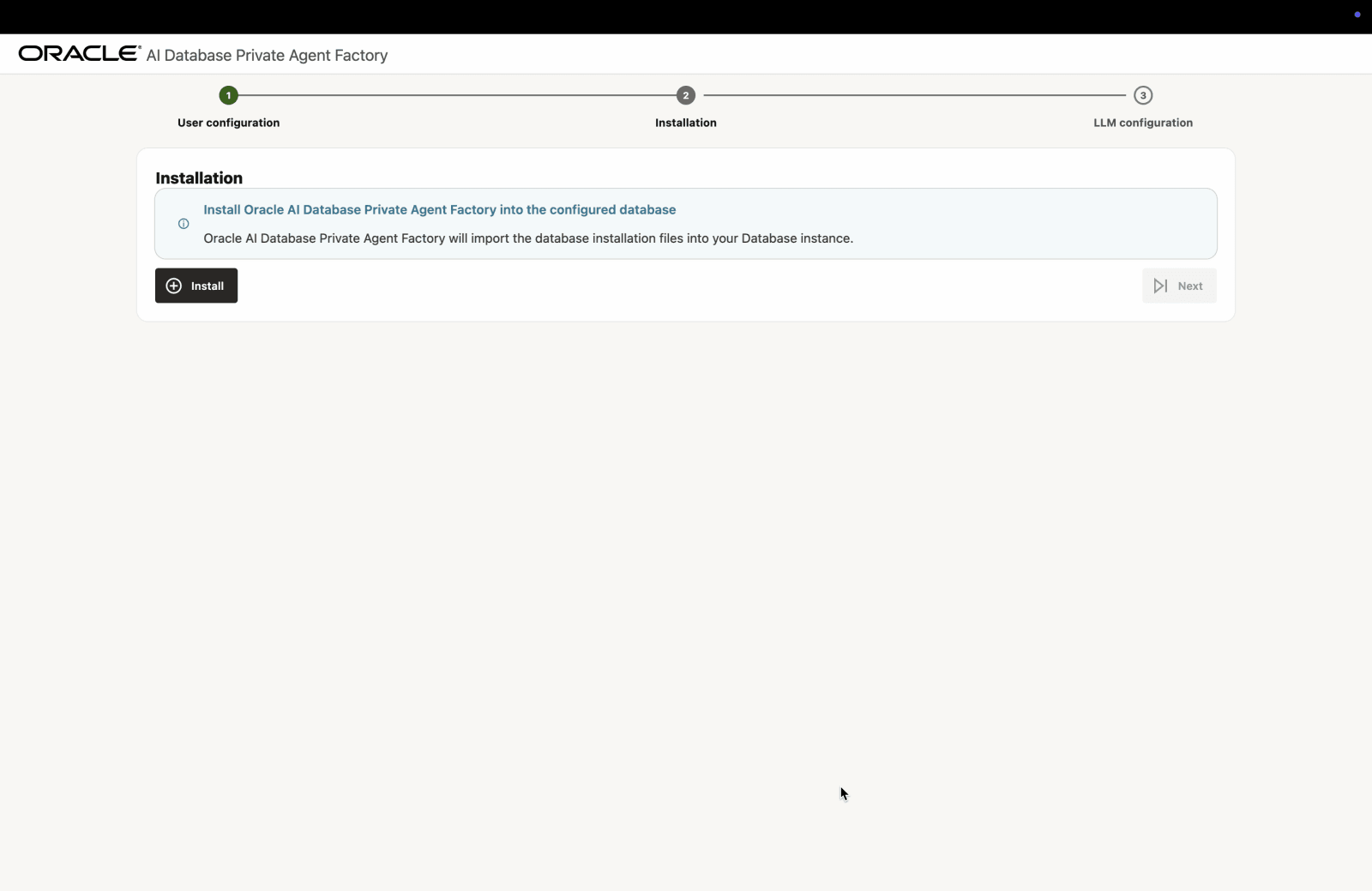

Install Components

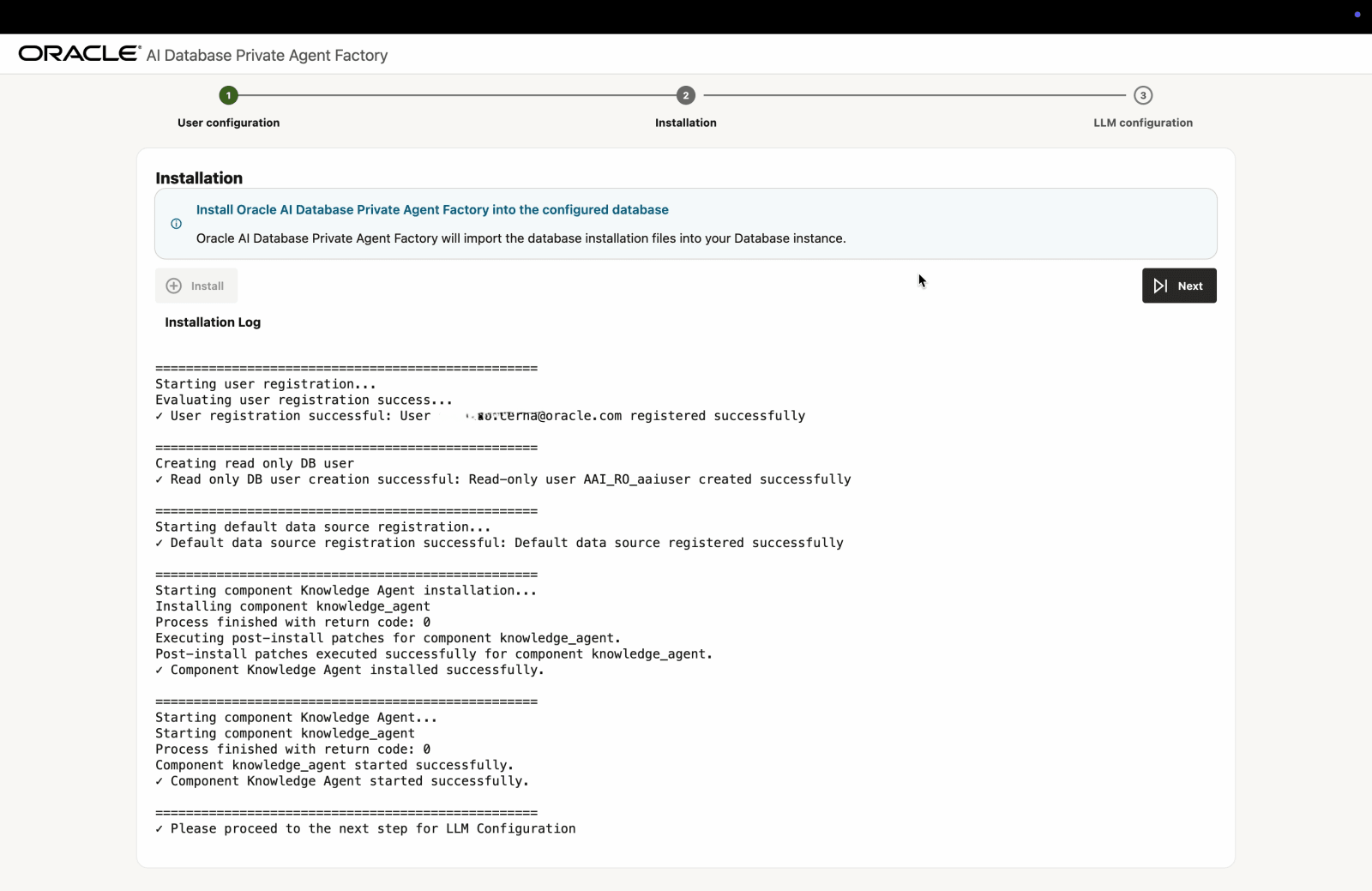

Click Install and wait a few minutes for the setup to complete.

When the setup finishes, you will see the message "✓ Please proceed to the next step for LLM Configuration." Click Next.

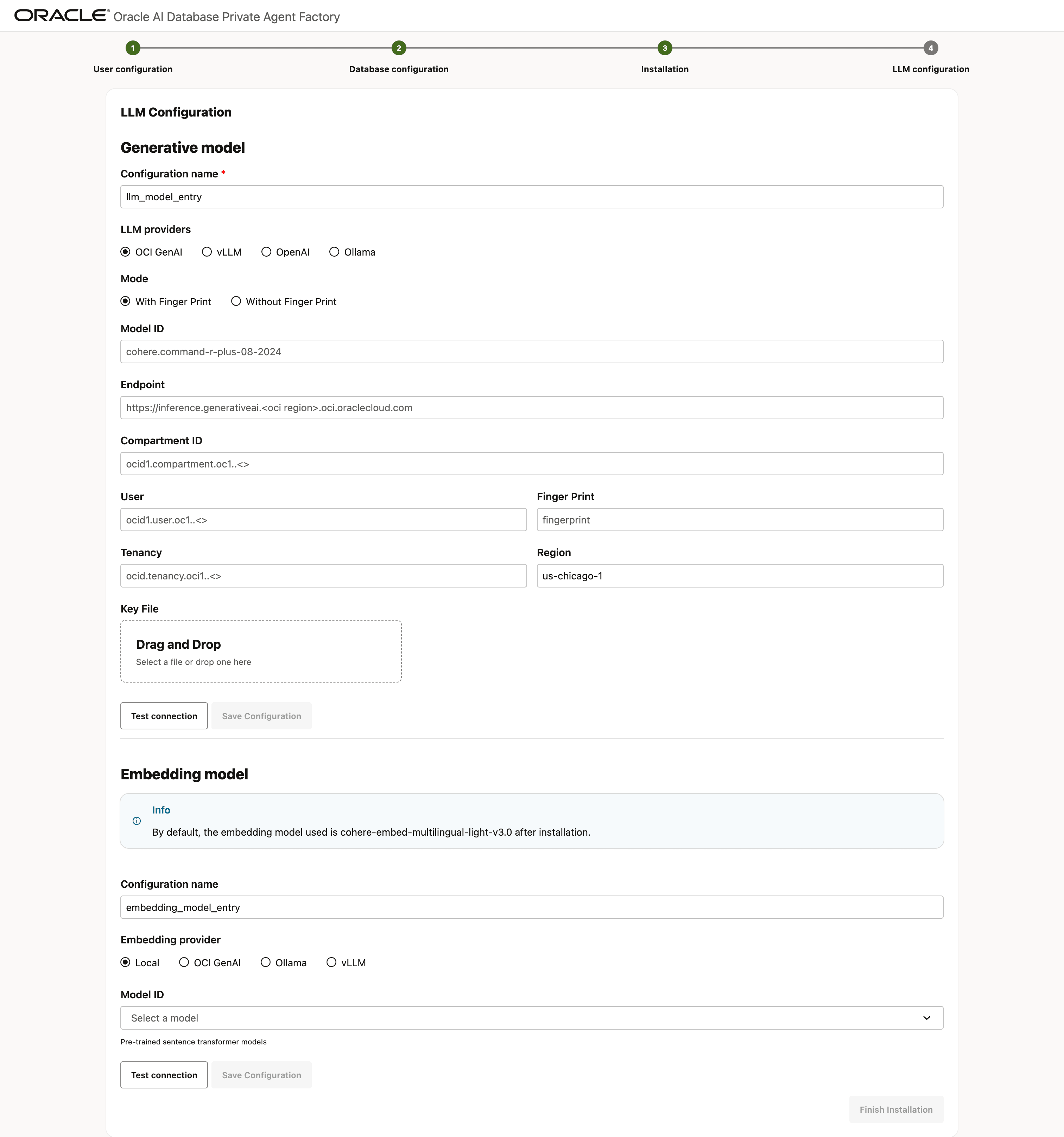

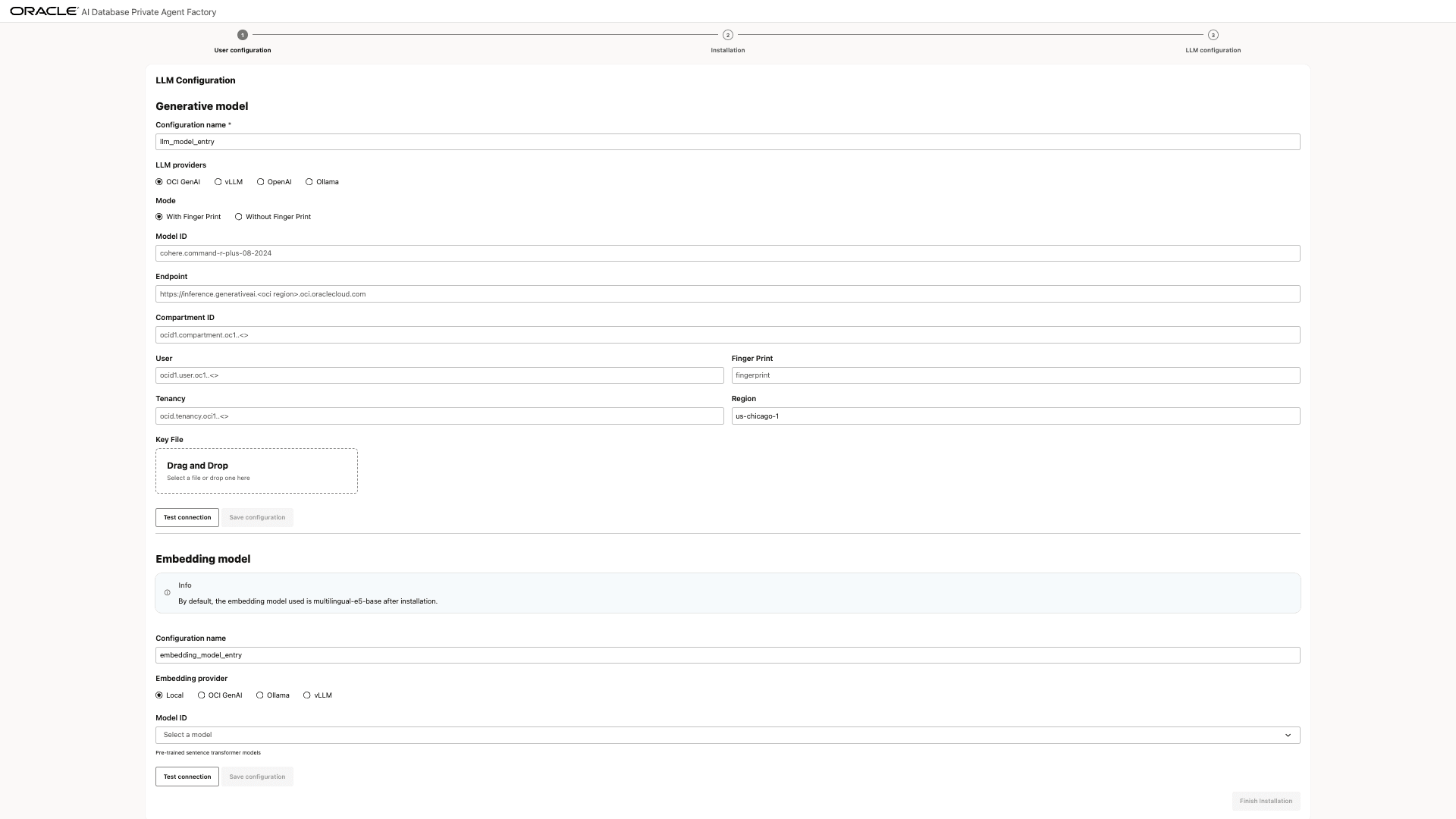

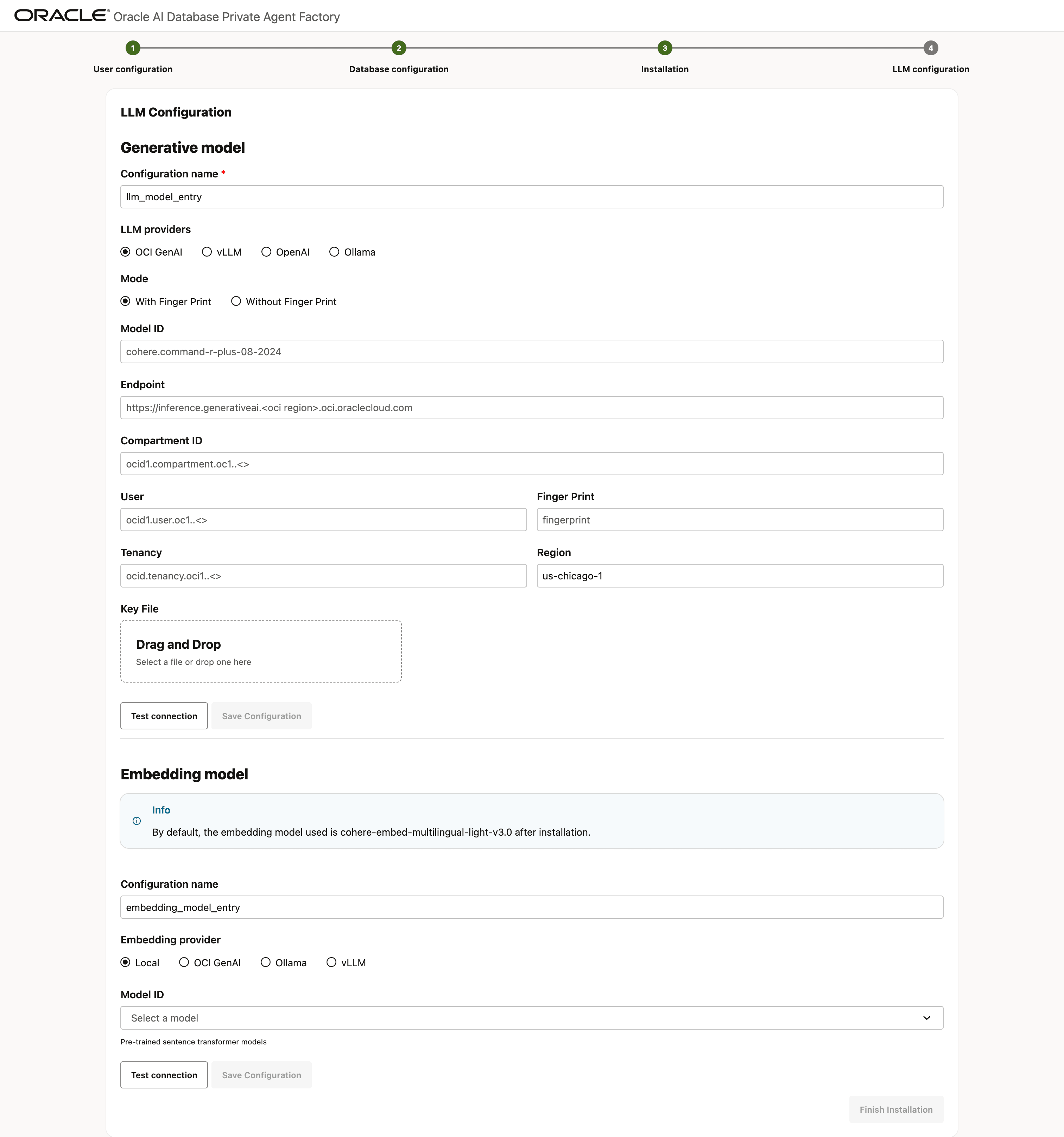

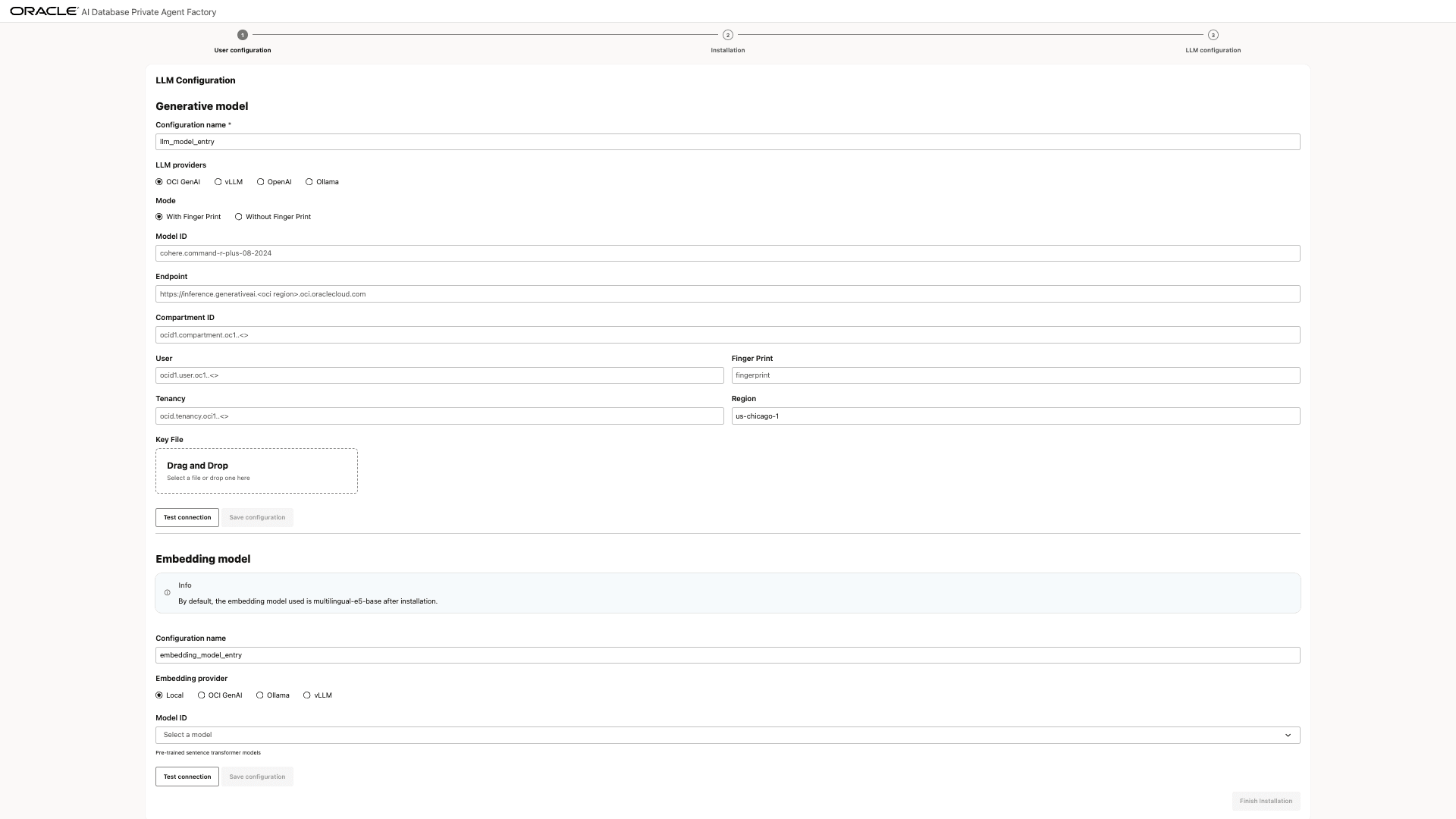

Configure LLM

Before you continue, see Manage LLM to review the supported models and LLM providers, and how to set them up.

Select an LLM configuration based on your LLM provider. The application supports integration with Ollama, vLLM, OpenAI, and the full suite of OCI GenAI offerings. Depending on your selected provider, the required connection credentials may differ.


Enter the required details in the selected LLM configuration and select Test Connection. Once you see the Connection successful message, select Save Configuration.
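For providers that expose an OpenAI-compatible API, such as OpenAI or a vLLM server, you can run a similar check from the command line before you enter the details in the UI. This sketch assumes the provider answers `GET <base>/v1/models` with HTTP 200 and, for OpenAI, accepts a bearer token. It does not cover OCI Generative AI, which uses a different API. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for OpenAI-compatible endpoints (e.g. OpenAI, vLLM).
# Assumes GET <base>/v1/models returns HTTP 200; not applicable to OCI GenAI.

# Normalizes a base URL given with or without a trailing "/" or "/v1".
models_url() {
  local base="${1%/}"
  base="${base%/v1}"
  printf '%s/v1/models\n' "$base"
}

# Usage: check_llm_endpoint <base_url> [api_key]
check_llm_endpoint() {
  local url code
  url="$(models_url "$1")"
  if [ -n "${2:-}" ]; then
    code="$(curl -s --max-time 10 -o /dev/null -w '%{http_code}' \
      -H "Authorization: Bearer $2" "$url")"
  else
    code="$(curl -s --max-time 10 -o /dev/null -w '%{http_code}' "$url")"
  fi
  if [ "$code" = "200" ]; then
    echo "OK: $url"
    return 0
  fi
  echo "FAILED: $url returned HTTP ${code:-none}" >&2
  return 1
}
```

A failure here usually points to a wrong base URL, port, or key rather than a problem in Agent Factory itself.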

Optionally, configure an embedding model to use for data ingestion and retrieval. By default, embeddings are generated with multilingual-e5-base, the local embedding model included with the service.

Pre-built Knowledge Assistants currently support only the following embedding models: the default multilingual-e5-base model and Cohere embedding models from the OCI Generative AI Service. To ensure that the pre-built Knowledge Assistants work properly, select one of these models:

- multilingual-e5-base

- cohere.embed-v4.0

- cohere.embed-multilingual-light-v3.0

- cohere.embed-english-v3.0

- cohere.embed-multilingual-v3.0

Finally, select Finish Installation to complete the setup and be redirected to the login page.

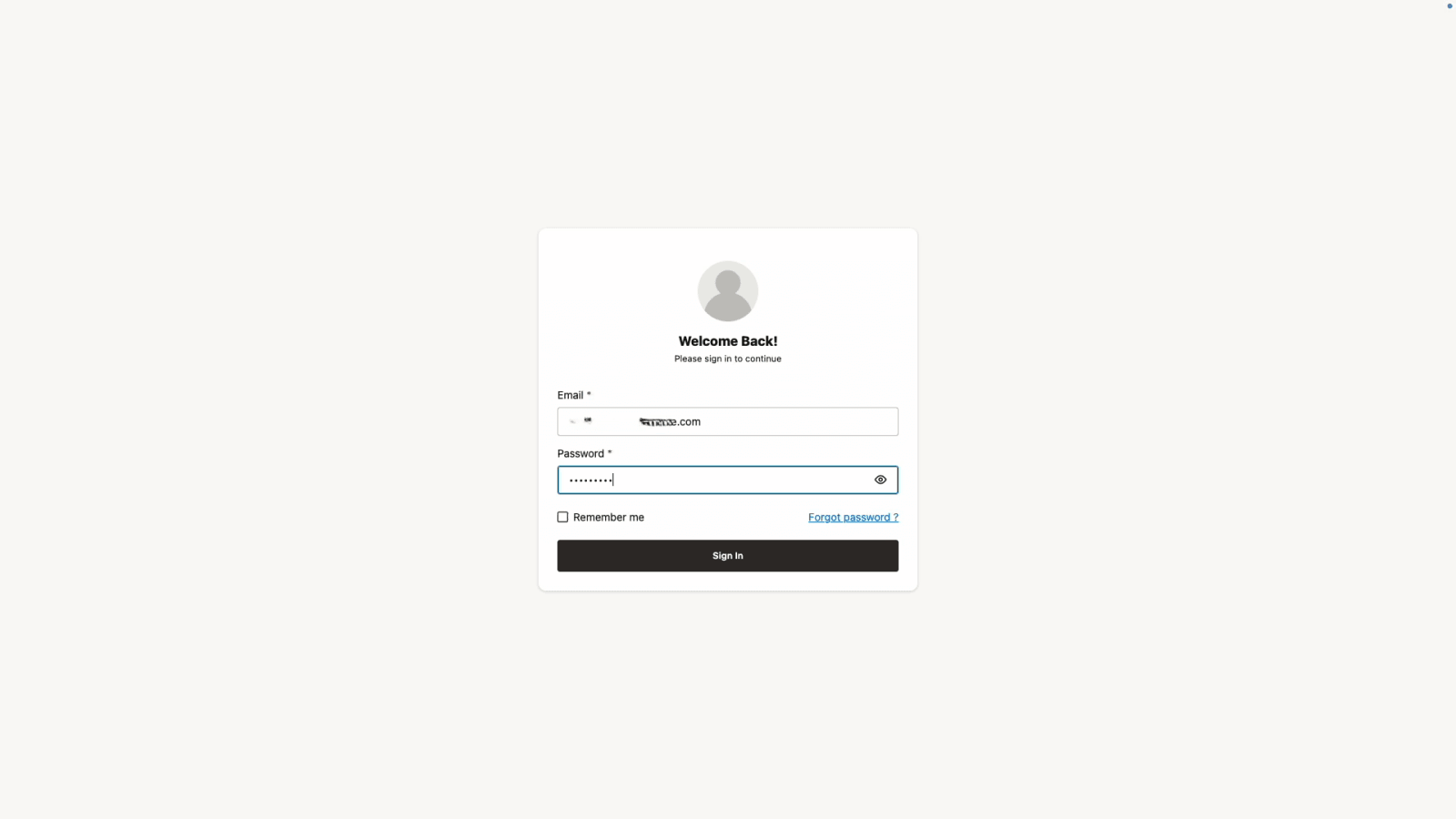

Log In to the Application

Log in with the email and password created previously. After logging in, you will be greeted by the Get Started screen.

VPN On. Quickstart mode

Install the Application in Quickstart Mode with VPN On

Choose a staging location

The staging location is the directory that stores the build artifacts (such as executables and configuration files) needed to create the application's Podman images. It also contains a Makefile that manages the entire deployment lifecycle. Note that this directory should not be on an NFS mount.

Get the installation kit

Go to the official download website and get the installation kit. See Download Installation Kit.

Create the staging location and copy the downloaded kit into it:

```bash
mkdir <staging_location>
cd <staging_location>
cp <path to installation kit> .
```

Uncompress the installation kit in the staging location, using the archive for your machine's architecture.

For ARM 64:

```bash
tar xzf applied_ai_arm64.tar.gz
```

For Linux x86-64:

```bash
tar xzf applied_ai.tar.gz
```

Before you begin the installation, configure any proxies required to connect to resources outside your VPN. Set both the lowercase and uppercase forms of each variable, because some tools read only one of them:

```bash
export http_proxy=<your-http-proxy>
export https_proxy=<your-https-proxy>
export no_proxy=<your-domain>
export HTTP_PROXY=<your-http-proxy>
export HTTPS_PROXY=<your-https-proxy>
export NO_PROXY=<your-domain>
```
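Because the installer and the tools it runs inherit these variables from your shell, it can help to confirm that each variable is exported and that the lowercase and uppercase forms match. The following sketch is a hypothetical helper, not part of the installation kit. It uses `printenv`, which sees only exported variables.

```bash
#!/usr/bin/env bash
# Hypothetical helper; not part of the installation kit.
# Reports proxy variables that are not exported, or whose lowercase and
# uppercase forms differ. Returns 0 only if all three pairs are consistent.
check_proxy_env() {
  local status=0 lower upper lval uval
  for lower in http_proxy https_proxy no_proxy; do
    upper="$(printf '%s' "$lower" | tr '[:lower:]' '[:upper:]')"
    # printenv sees only exported variables, which child processes inherit.
    lval="$(printenv "$lower")"
    uval="$(printenv "$upper")"
    if [ -z "$lval" ] || [ -z "$uval" ]; then
      echo "MISSING: $lower and/or $upper is not exported" >&2
      status=1
    elif [ "$lval" != "$uval" ]; then
      echo "MISMATCH: $lower='$lval' but $upper='$uval'" >&2
      status=1
    fi
  done
  return "$status"
}
```

Run `check_proxy_env` in the same shell from which you will start the installer.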

Run Interactive Installer

The interactive_install.sh script, included in the installation kit, automates nearly all setup tasks, including environment configuration, dependency installation, and application deployment.

After you extract the kit, the script is in the staging location. Run it from that directory. If Agent Factory was previously installed on this machine, first run the installer with `--reset`:

```bash
# Required only if Agent Factory was previously installed
bash interactive_install.sh --reset

# Run the interactive installation
bash interactive_install.sh
```
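The installer asks whether your default /tmp directory has insufficient space (less than 100GB). To check before you answer, you can compare the available space reported by `df -Pk` with that threshold. This sketch treats 100GB as 100 GiB (104857600 KiB), which is an assumption. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the installer's /tmp space prompt.
# Assumes "100GB" means 100 GiB, i.e. 100 * 1024 * 1024 KiB.
THRESHOLD_KIB=$((100 * 1024 * 1024))

# Succeeds if an available-space figure in KiB is below the threshold.
is_below_threshold_kib() {
  [ "$1" -lt "$THRESHOLD_KIB" ]
}

# Prints the available space of a directory in KiB (POSIX `df -P` format).
available_kib() {
  df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Prints the suggested answer: y if space is insufficient, N otherwise.
suggested_tmp_answer() {
  if is_below_threshold_kib "$(available_kib "${1:-/tmp}")"; then
    echo y
  else
    echo N
  fi
}
```

Run `suggested_tmp_answer` with no argument to check `/tmp`.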

When the interactive installer prompts you, choose the options that best suit your environment:

```text
Are you on a corporate network that requires an HTTP/HTTPS proxy? (y/N): y
Enter 1 if you are on a Standard Oracle Linux machine or 2 if you are on OCI: <user_number_choice>
Enter your Linux username: <user_linux_username>
Does your default /tmp directory have insufficient space (< 100GB)? (y/N): y
[INFO] You can get a token from https://container-registry.oracle.com/
Username: <email_registered_on_container_registry>
Password: <token_from_container_registry>
Do you want to proceed with the manual database setup? (y/N): n
Select installation mode:
1) prod
2) quickstart
Enter choice (1 or 2): 2
You selected Quickstart mode. Confirm? (yes/no): yes
```

The following output indicates that the installation was completed successfully.

After the installation script finishes successfully, it prints the application URL, https://<hostname>:8080/studio/. Open this URL in a web browser to complete the remaining configuration.

Access the Application

Copy and paste the application URL into a browser. If the browser displays a certificate warning page, click ‘Advanced’ and then ‘Proceed to host’.

Configure User

Set up an email and a secure password.

Install Components

Click Install and wait a few minutes for the setup to complete.

When the setup finishes, you will see the message "✓ Please proceed to the next step for LLM Configuration." Click Next.

Configure LLM

Before you continue, see Manage LLM to review the supported models and LLM providers, and how to set them up.

Select an LLM configuration based on your LLM provider. The application supports integration with Ollama, vLLM, OpenAI, and the full suite of OCI GenAI offerings. Depending on your selected provider, the required connection credentials may differ.

Enter the required details in the selected LLM configuration and select Test Connection. Once you see the Connection successful message, select Save Configuration.

Optionally, configure an embedding model to use for data ingestion and retrieval. By default, embeddings are generated with multilingual-e5-base, the local embedding model included with the service.

Pre-built Knowledge Assistants currently support only the following embedding models: the default multilingual-e5-base model and Cohere embedding models from the OCI Generative AI Service. To ensure that the pre-built Knowledge Assistants work properly, select one of these models:

- multilingual-e5-base

- cohere.embed-v4.0

- cohere.embed-multilingual-light-v3.0

- cohere.embed-english-v3.0

- cohere.embed-multilingual-v3.0

Finally, select Finish Installation to complete the setup and be redirected to the login page.

Log In to the Application

Log in with the email and password created previously. After logging in, you will be greeted by the Get Started screen.

Enable Ollama After QuickStart Installation

Note

When installing Agent Factory using QuickStart mode, the Ollama service is not created or started automatically. This behavior is intentional.

Running Ollama inside a container using CPU-only resources resulted in suboptimal performance. To provide a better experience, Ollama is expected to be run directly on the host machine, where it can leverage available GPU resources. This setup ensures optimal performance and proper connectivity.

As a result, you will not see an Ollama container or pod created during QuickStart installation.

To use Ollama with Agent Factory:

- Install and run Ollama directly on the host machine (outside of containers)

- Configure the Ollama endpoint using LLM Management in the application

- Verify the connection to the Ollama model before using it in agents
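To check that Ollama is running on the host and has models available before you configure it, you can query its REST API. This sketch assumes Ollama's default port 11434 and its `GET /api/tags` endpoint, which lists the locally available models. The function names are hypothetical. Because Agent Factory runs in Podman containers, the endpoint you enter in LLM Management may need a host address that the containers can reach (for example Podman's `host.containers.internal` alias) rather than `localhost`, and Ollama may need `OLLAMA_HOST` set so that it listens beyond the loopback interface; verify this for your Podman and Ollama versions.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; assumes Ollama's default port 11434 and GET /api/tags.

# Prints the Ollama base URL for a host and port (defaults: localhost, 11434).
ollama_base_url() {
  printf 'http://%s:%s\n' "${1:-localhost}" "${2:-11434}"
}

# Usage: check_ollama [host] [port]
check_ollama() {
  local base body
  base="$(ollama_base_url "$@")"
  if ! body="$(curl -fs --max-time 5 "$base/api/tags")"; then
    echo "Ollama is not reachable at $base; is it running on the host?" >&2
    return 1
  fi
  # /api/tags returns JSON such as {"models":[{"name":"<model>", ...}]}.
  if printf '%s' "$body" | grep -q '"name"'; then
    echo "Ollama is up at $base and lists at least one model."
  else
    echo "Ollama is up at $base but lists no models; pull one first." >&2
    return 1
  fi
}
```

Run `check_ollama` on the host itself; a success there does not by itself prove that the containers can reach the same endpoint.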

No VPN. Production mode

Install the Application on Linux in Production Mode with VPN Off

Choose a staging location

The staging location is the directory that stores the build artifacts (such as executables and configuration files) needed to create the application's Podman images. It also contains a Makefile that manages the entire deployment lifecycle. Note that this directory should not be on an NFS mount.

Get the installation kit

Go to the official download website and get the installation kit. See Download Installation Kit.

Create the staging location and copy the downloaded kit into it:

```bash
mkdir <staging_location>
cd <staging_location>
cp <path to installation kit> .
```

Uncompress the installation kit in the staging location, using the archive for your machine's architecture.

For ARM 64:

```bash
tar xzf applied_ai_arm64.tar.gz
```

For Linux x86-64:

```bash
tar xzf applied_ai.tar.gz
```

Run Interactive Installer

The interactive_install.sh script, included in the installation kit, automates nearly all setup tasks, including environment configuration, dependency installation, and application deployment.

After you extract the kit, the script is in the staging location. Run it from that directory. If Agent Factory was previously installed on this machine, first run the installer with `--reset`:

```bash
# Required only if Agent Factory was previously installed
bash interactive_install.sh --reset

# Run the interactive installation
bash interactive_install.sh
```
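If you plan to answer y to the manual database setup prompt, you may want to prepare the SQL in advance so that a DBA can review it. The following sketch writes the same statements that the installer prints in the sample session below to a file. The function name, its arguments, and the user-name check (a conservative subset of valid Oracle identifiers) are assumptions. The file contains the password in plain text, so the sketch creates it under `umask 077` (owner-only permissions for a new file); delete it after use.

```bash
#!/usr/bin/env bash
# Hypothetical helper; reproduces the SQL that the installer prints.
# Usage: write_db_user_sql <db_user> <db_password> <output_file>
write_db_user_sql() {
  local user="$1" pass="$2" out="$3"
  # Conservative user-name check: a letter first, then letters, digits, or _.
  case "$user" in
    [A-Za-z]*) ;;
    *) echo "invalid DB user name: $user" >&2; return 1 ;;
  esac
  case "$user" in
    *[!A-Za-z0-9_]*) echo "invalid DB user name: $user" >&2; return 1 ;;
  esac
  # umask 077 in a subshell: a newly created file is readable only by its owner.
  (
    umask 077
    cat > "$out" <<EOF
CREATE USER ${user} IDENTIFIED BY ${pass} DEFAULT TABLESPACE USERS QUOTA unlimited ON USERS;
GRANT CONNECT, RESOURCE, CREATE TABLE, CREATE SYNONYM, CREATE DATABASE LINK, CREATE ANY INDEX, INSERT ANY TABLE, CREATE SEQUENCE, CREATE TRIGGER, CREATE USER, DROP USER TO ${user};
GRANT CREATE SESSION TO ${user} WITH ADMIN OPTION;
GRANT READ, WRITE ON DIRECTORY DATA_PUMP_DIR TO ${user};
GRANT SELECT ON V_\$PARAMETER TO ${user};
exit;
EOF
  )
}
```

For example, `write_db_user_sql <your_db_user> '<your_db_user_password>' create_db_user.sql`, using the same user name and password that you enter at the installer prompts. A SYSDBA can then run the file on the PDB, for example with the SQL*Plus `@create_db_user.sql` command.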

When the interactive installer prompts you, choose the options that best suit your environment:

```text
Are you on a corporate network that requires an HTTP/HTTPS proxy? (y/N): n
Enter 1 if you are on a Standard Oracle Linux machine or 2 if you are on OCI: <user_number_choice>
Enter your Linux username: <user_linux_username>
Does your default /tmp directory have insufficient space (< 100GB)? (y/N): y
[INFO] You can get a token from https://container-registry.oracle.com/
Username: <email_registered_on_container_registry>
Password: <token_from_container_registry>
Do you want to proceed with the manual database setup? (y/N): y
[WARNING] Step 1: Create the database user.
Enter the DB username you wish to create: <your_db_user>
Enter the password for the new DB user: <your_db_user_password>
[INFO] Run these SQL commands as a SYSDBA user on your PDB (Pluggable Database):
----------------------------------------------------
CREATE USER <your_db_user> IDENTIFIED BY <your_db_user_password> DEFAULT TABLESPACE USERS QUOTA unlimited ON USERS;
GRANT CONNECT, RESOURCE, CREATE TABLE, CREATE SYNONYM, CREATE DATABASE LINK, CREATE ANY INDEX, INSERT ANY TABLE, CREATE SEQUENCE, CREATE TRIGGER, CREATE USER, DROP USER TO <your_db_user>;
GRANT CREATE SESSION TO <your_db_user> WITH ADMIN OPTION;
GRANT READ, WRITE ON DIRECTORY DATA_PUMP_DIR TO <your_db_user>;
GRANT SELECT ON V_$PARAMETER TO <your_db_user>;
exit;
----------------------------------------------------
Press [Enter] to continue to the next step...
Select installation mode:
1) prod
2) quickstart
Enter choice (1 or 2): 1
You selected Production mode. Confirm? (yes/no): yes
```

The following output indicates that the installation was completed successfully.

After the installation script finishes successfully, it prints the application URL, https://<hostname>:8080/studio/. Open this URL in a web browser to complete the remaining configuration.

Access the Application

Copy and paste the application URL into a browser. If the browser displays a certificate warning page, click ‘Advanced’ and then ‘Proceed to host’.

Configure User

Set up an email and a secure password.

Configure Database Connection


Provide your database connection details. You can enter the Oracle AI Database 26ai connection details directly, provide a connection string, or upload a database wallet file. In each case, also enter a database user and password.

- Basic: Enter all the connection details manually, along with the database user and password that you created earlier.

- Connection String: Enter the database connection string, along with the database user and password that you created earlier.

- Wallet: Upload a database wallet file and enter the database user and password that you created earlier.

To install the Knowledge Agent successfully, including the pre-built Knowledge Assistants, Agent Factory requires the database to access OCI Object Storage using Pre-Authenticated Request (PAR) files. Verify whether `WALLET_LOCATION` or `ENCRYPTION_WALLET_LOCATION` is specified in the `sqlnet.ora` file on the database server. If either is configured, make sure that the required OCI certificates are in the wallet, and add them if they are missing. This enables access to Object Storage files and prevents Knowledge Agent installation failures. If the database does not use a wallet, you can skip this step.

Click here to download the certificates you need to add to the wallet. The following example assumes that the SSL wallet is located at `/u01/app/oracle/dcs/commonstore/wallets/ssl` and that you have unpacked the certificates in `/home/oracle/dbc`:

```bash
#!/bin/bash
WALLET_DIR=/u01/app/oracle/dcs/commonstore/wallets/ssl

# Check whether the certificates are already listed under "Trusted Certificates"
orapki wallet display -wallet "$WALLET_DIR"

# Add the certificates if they are missing
for cert in /home/oracle/dbc/DigiCert*cer; do
  orapki wallet add -wallet "$WALLET_DIR" -trusted_cert -cert "$cert" -pwd <SSL Wallet password>
done
```

See Create SSL Wallet with Certificates for more details.


Click Test Connection to verify that the information entered is correct. Upon a successful connection, you will see a ‘Database connection successful’ notification. Click Next.

Install Components

Click Install and wait a few minutes for the setup to complete.

When the setup finishes, you will see the message "✓ Please proceed to the next step for LLM Configuration." Click Next.

Configure LLM

Before you continue, see Manage LLM to review the supported models and LLM providers, and how to set them up.

Select an LLM configuration based on your LLM provider. The application supports integration with Ollama, vLLM, OpenAI, and the full suite of OCI GenAI offerings. Depending on your selected provider, the required connection credentials may differ.


Enter the required details in the selected LLM configuration and select Test Connection. Once you see the Connection successful message, select Save Configuration.

Optionally, configure an embedding model to use for data ingestion and retrieval. By default, embeddings are generated with multilingual-e5-base, the local embedding model included with the service.

Pre-built Knowledge Assistants currently support only the following embedding models: the default multilingual-e5-base model and Cohere embedding models from the OCI Generative AI Service. To ensure that the pre-built Knowledge Assistants work properly, select one of these models:

- multilingual-e5-base

- cohere.embed-v4.0

- cohere.embed-multilingual-light-v3.0

- cohere.embed-english-v3.0

- cohere.embed-multilingual-v3.0

Finally, select Finish Installation to complete the setup and be redirected to the login page.

Log In to the Application

Log in with the email and password created previously. After logging in, you will be greeted by the Get Started screen.

No VPN. Quickstart mode

Install the Application in Quickstart Mode with VPN Off

Choose a staging location

The staging location is the directory that stores the build artifacts (such as executables and configuration files) needed to create the application's Podman images. It also contains a Makefile that manages the entire deployment lifecycle. Note that this directory should not be on an NFS mount.

Get the installation kit

Go to the official download website and get the installation kit. See Download Installation Kit.

Create the staging location and copy the downloaded kit into it:

```bash
mkdir <staging_location>
cd <staging_location>
cp <path to installation kit> .
```

Uncompress the installation kit in the staging location, using the archive for your machine's architecture.

For ARM 64:

```bash
tar xzf applied_ai_arm64.tar.gz
```

For Linux x86-64:

```bash
tar xzf applied_ai.tar.gz
```


Run Interactive Installer

The interactive_install.sh script, included in the installation kit, automates nearly all setup tasks, including environment configuration, dependency installation, and application deployment.

After you extract the kit, the script is in the staging location. Run it from that directory. If Agent Factory was previously installed on this machine, first run the installer with `--reset`:

```bash
# Required only if Agent Factory was previously installed
bash interactive_install.sh --reset

# Run the interactive installation
bash interactive_install.sh
```

When the interactive installer prompts you, choose the options that best suit your environment:

```text
Are you on a corporate network that requires an HTTP/HTTPS proxy? (y/N): N
Enter 1 if you are on a Standard Oracle Linux machine or 2 if you are on OCI: <user_number_choice>
Enter your Linux username: <user_linux_username>
Does your default /tmp directory have insufficient space (< 100GB)? (y/N): y
[INFO] You can get a token from https://container-registry.oracle.com/
Username: <email_registered_on_container_registry>
Password: <token_from_container_registry>
Do you want to proceed with the manual database setup? (y/N): n
Select installation mode:
1) prod
2) quickstart
Enter choice (1 or 2): 2
You selected Quickstart mode. Confirm? (yes/no): yes
```

The following output indicates that the installation was completed successfully.

After the installation script finishes successfully, it prints the application URL, https://<hostname>:8080/studio/. Open this URL in a web browser to complete the remaining configuration.

Access the Application

Copy and paste the application URL into a browser. If the browser displays a certificate warning page, click ‘Advanced’ and then ‘Proceed to host’.

Configure User

Set up an email and a secure password.

Install Components

Click Install and wait a few minutes for the setup to complete.

When the setup finishes, you will see the message "✓ Please proceed to the next step for LLM Configuration." Click Next.

Configure LLM

Before you continue, see Manage LLM to review the supported models and LLM providers, and how to set them up.

Select an LLM configuration based on your LLM provider. The application supports integration with Ollama, vLLM, OpenAI, and the full suite of OCI GenAI offerings. Depending on your selected provider, the required connection credentials may differ.

Enter the required details in the selected LLM configuration and select Test Connection. Once you see the Connection successful message, select Save Configuration.

Optionally, configure an embedding model to use for data ingestion and retrieval. By default, embeddings are generated with multilingual-e5-base, the local embedding model included with the service.

Pre-built Knowledge Assistants currently support only the following embedding models: the default multilingual-e5-base model and Cohere embedding models from the OCI Generative AI Service. To ensure that the pre-built Knowledge Assistants work properly, select one of these models:

- multilingual-e5-base

- cohere.embed-v4.0

- cohere.embed-multilingual-light-v3.0

- cohere.embed-english-v3.0

- cohere.embed-multilingual-v3.0
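If you record the chosen embedding model in a script or configuration review, you can check the name against this list. The following sketch copies the model names from the list above. The variable and function names are hypothetical, and the list must be updated by hand if the supported models change.

```bash
#!/usr/bin/env bash
# Hypothetical helper; the names below are copied from the supported list.
SUPPORTED_EMBEDDING_MODELS="multilingual-e5-base
cohere.embed-v4.0
cohere.embed-multilingual-light-v3.0
cohere.embed-english-v3.0
cohere.embed-multilingual-v3.0"

# Succeeds only for an exact, whole-line match (-F fixed string, -x whole line).
is_supported_embedding_model() {
  printf '%s\n' "$SUPPORTED_EMBEDDING_MODELS" | grep -Fqx -- "$1"
}
```

Exact matching avoids accepting a near miss such as `cohere.embed-v4` for `cohere.embed-v4.0`.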

Finally, select Finish Installation to complete the setup and be redirected to the login page.

Log In to the Application

Log in with the email and password created previously. After logging in, you will be greeted by the Get Started screen.

Enable Ollama After QuickStart Installation

Note

When installing Agent Factory using QuickStart mode, the Ollama service is not created or started automatically. This behavior is intentional.

Running Ollama inside a container using CPU-only resources resulted in suboptimal performance. To provide a better experience, Ollama is expected to be run directly on the host machine, where it can leverage available GPU resources. This setup ensures optimal performance and proper connectivity.

As a result, you will not see an Ollama container or pod created during QuickStart installation.

To use Ollama with Agent Factory:

- Install and run Ollama directly on the host machine (outside of containers)

- Configure the Ollama endpoint using LLM Management in the application

- Verify the connection to the Ollama model before using it in agents