3 Installing or Upgrading the Oracle Database Side of Oracle Big Data SQL

Oracle Big Data SQL must be installed on both the Hadoop cluster management server and the Oracle Database system. This section describes the installation of Oracle Big Data SQL on both single-instance and RAC systems.

- Before You Start the Database-Side Installation

- About the Database-Side Installation Directory

- Steps for Installing on Oracle Database Nodes

- Granting User Access to Oracle Big Data SQL

- Enabling Object Store Access

- Enabling Hive Transactional Node Tables

- Installing and Configuring Oracle SQL Access to Kafka

3.1 Before You Start the Database-Side Installation

Review the points in this section before starting the installation.

If the current version of Oracle Big Data SQL is already installed on the Oracle Database system and you are making changes to the existing configuration, then you do not need to repeat the entire installation process. See Reconfiguring an Existing Oracle Big Data SQL Installation.

Important:

- If you intend to log in as the database owner user with an anonymous connection to SQL*Plus, first be sure that the ORACLE_HOME and ORACLE_SID environment variables are set properly. GI_HOME should also be set if Grid Infrastructure is running on the system and its location is not relative to ORACLE_HOME.

- For multi-node databases (such as Oracle RAC systems), you must repeat this installation on every compute node of the database. If this is not done, you will see RPC connection errors when the Oracle Big Data SQL service is started.

- It is recommended that you set up passwordless SSH for root on the database nodes where Grid is running. Otherwise, you will need to supply the credentials during the installation on each node.

- If the diskmon process is not already running prior to the installation, a Grid Infrastructure restart will be required in order to complete the installation.

- If you set up Oracle Big Data SQL connections from more than one Hadoop cluster to the same database, be sure that the configurations for each connection are the same. Do not set up one connection to use InfiniBand and another to use Ethernet. Likewise, if you enable database authentication in one configuration, then this feature must be enabled in all Oracle Big Data SQL connections between different Hadoop clusters and the same database.

- If the database system is Kerberos-secured, then note that authentication for the database owner principal can be performed by only one KDC. Oracle Big Data SQL currently does not support multiple Kerberos tickets. If two or more Hadoop cluster connections are installed by the same database owner, all must use the same KDC.

The KRB5_CONF environment variable must point to only one configuration file. The configuration file must exist, and the variable itself must be set for the database owner account at system startup. Be sure that you have installed a Kerberos client on each of the database compute nodes.

Also, if the principal used on the Hadoop side is not the same as the principal of the database owner, then before running the database-side installation, you must copy the correct principal from the KDC to the Oracle Database side. Then, when you run the installer, include the --alternate-principal parameter in the command line in order to direct the installer to the correct keytab. (See Command Line Parameter Reference for bds-database-install.sh.)

- For databases running under Oracle Grid Infrastructure, if the system has more than one network interface of the same type (two or more Ethernet, two or more InfiniBand, or two or more Ethernet-over-InfiniBand interfaces), then the installation always selects the one whose name is first in alphabetical order.

See Also:

Details are provided in Special Considerations When a System Under Grid Infrastructure has Multiple Network Interfaces of the Same Type.
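The environment-related points above can be turned into a quick pre-flight check before you start. The following is a minimal sketch, not part of the Oracle Big Data SQL bundle: the function name check_bds_env is hypothetical, and it only tests what this section states (ORACLE_HOME and ORACLE_SID set, GI_HOME pointing to a directory if it is set, and KRB5_CONF naming exactly one existing file if it is set). Treating a colon in KRB5_CONF as a second file name is an assumption of the sketch.

```shell
#!/bin/sh
# Hypothetical pre-flight check (not shipped with Oracle Big Data SQL).
# Prints each problem found to stderr and returns non-zero if any were found.
check_bds_env() {
  local rc=0

  # ORACLE_HOME must be set and must be a directory.
  if [ -z "${ORACLE_HOME:-}" ]; then
    echo "ORACLE_HOME is not set" >&2; rc=1
  elif [ ! -d "$ORACLE_HOME" ]; then
    echo "ORACLE_HOME is not a directory: $ORACLE_HOME" >&2; rc=1
  fi

  # ORACLE_SID must be set.
  if [ -z "${ORACLE_SID:-}" ]; then
    echo "ORACLE_SID is not set" >&2; rc=1
  fi

  # GI_HOME is optional, but if it is set it must be a directory.
  if [ -n "${GI_HOME:-}" ] && [ ! -d "$GI_HOME" ]; then
    echo "GI_HOME is not a directory: $GI_HOME" >&2; rc=1
  fi

  # If KRB5_CONF is set, it must name exactly one existing file.
  # (Treating ':' as a list separator is an assumption of this sketch.)
  if [ -n "${KRB5_CONF:-}" ]; then
    case $KRB5_CONF in
      *:*) echo "KRB5_CONF must name a single file: $KRB5_CONF" >&2; rc=1 ;;
      *) if [ ! -f "$KRB5_CONF" ]; then
           echo "KRB5_CONF file not found: $KRB5_CONF" >&2; rc=1
         fi ;;
    esac
  fi

  return "$rc"
}
```

Run it in the database owner's shell before starting the installer; a non-zero status means at least one item needs attention.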

3.1.1 Check for Required Grid Patches

Make sure all patches to the Oracle Grid Infrastructure required by Oracle Big Data SQL have been installed.

Check the Oracle Big Data SQL Master Compatibility Matrix (Doc ID 2119369.1 in My Oracle Support) to find up-to-date information on patch requirements.

3.1.2 Potential Requirement to Restart Grid Infrastructure

In certain database environments, bds-database-install.sh needs to create cellinit.ora and/or celliniteth.ora. In these cases, the script attempts to propagate similar changes across all nodes in the Grid Infrastructure. To do this, the script expects passwordless SSH to be set up between oracle and root; otherwise, it prompts for the root password for each node during execution. If the nature of the changes requires a restart of the Grid Infrastructure, the script also displays messages indicating that Grid Infrastructure needs to be restarted manually. Because the installation cannot complete without Grid credentials if a restart is necessary, be sure that you have the Grid password at hand.

3.1.2.1 Understanding When Grid or Database Restart is Required

On the database side of Oracle Big Data SQL, the diskmon process is the agent in charge of communications with the Hadoop cluster. This is similar to its function on the Oracle Exadata Database Machine, where it manages communications between compute nodes and storage nodes.

In Grid environments, diskmon is owned by the Grid user. In a non-Grid environment, it is owned by the Oracle Database owner.

In Oracle Big Data SQL, diskmon settings are stored in the cellinit.ora and celliniteth.ora files in the /etc/oracle/cell/network-config/ directory. The installer updates these files in accordance with the cluster connection requirements.

This is how the installer determines when Grid or Oracle Database needs to be restarted:

- If the installer detects that no previous cellinit.ora or celliniteth.ora file exists, this means that no diskmon process is running. In this case, if the environment includes Oracle Grid, then you must restart Grid. If the environment does not include Grid, then you must restart Oracle Database.

- If a previous cellinit.ora and/or celliniteth.ora file exists, this indicates that the diskmon process is running. In this case, if the installer needs to make a change to these files, then only the database needs to be restarted.

- In multi-node Grid environments, diskmon works on all nodes as a single component, and cellinit.ora and celliniteth.ora must be synchronized on all nodes. This task is done through SSH. If passwordless SSH is set up on the cluster, no user interaction is required. If passwordless SSH is not set up, then the script pauses for you to input the root credentials for all nodes. When the cellinit.ora and celliniteth.ora files are synchronized across all nodes, the script continues and then finishes. In this case, you must restart Grid Infrastructure.
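The first two rules above can be expressed as a small decision function, which may help when you script around the installer. This is a sketch under stated assumptions: restart_scope is a hypothetical name, the caller supplies whether Grid is present and whether the installer needs to change the cell files, and the multi-node synchronization case in the third rule is deliberately not modeled.

```shell
#!/bin/sh
# Hypothetical helper modeling the first two rules above.
# Usage: restart_scope CELL_DIR HAS_GRID NEEDS_CHANGE
#   CELL_DIR      directory holding cellinit.ora / celliniteth.ora
#                 (normally /etc/oracle/cell/network-config)
#   HAS_GRID      yes if the environment includes Oracle Grid, otherwise no
#   NEEDS_CHANGE  yes if the installer must change the cell files, otherwise no
# Prints grid, database, or none.
restart_scope() {
  local cell_dir="$1" has_grid="$2" needs_change="$3"

  if [ ! -f "$cell_dir/cellinit.ora" ] && [ ! -f "$cell_dir/celliniteth.ora" ]; then
    # No previous cell file: diskmon is not running yet.
    if [ "$has_grid" = yes ]; then echo grid; else echo database; fi
  elif [ "$needs_change" = yes ]; then
    # diskmon is already running: only the database needs a restart.
    echo database
  else
    echo none
  fi
}
```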

3.1.3 Special Considerations When a System Under Grid Infrastructure has Multiple Network Interfaces of the Same Type

The Oracle Big Data SQL installation or SmartScan operation may sometimes fail in these environments because the wrong IP address is selected for communication with the cells.

When installing Oracle Big Data SQL within Oracle Grid Infrastructure, you cannot provide the installer with a specific IP address to select for communication with the Oracle Big Data SQL cells on the nodes. Network interface selection is automatically determined in this environment. This determination is not always correct, and in some instances the database-side installation may fail or you may later discover that SmartScan is not working.

You can manually correct this problem, but first it is helpful to understand how the installation decides which network interfaces to select.

How the Installation Selects From Among Multiple Interfaces of the Same Type on a System Under Grid Infrastructure

- The diskmon process is controlled by Oracle Grid Infrastructure and not by the database. Grid manages communications with the Oracle Big Data SQL cells.

- In these cases, the Oracle Big Data SQL installer does not create cellinit.ora and celliniteth.ora, nor does it update the cell settings stored in these files. In these environments, the task is handled by Grid, because it is a cluster-wide task that must be synchronized across all nodes.

- If there are multiple network interfaces, the Grid-managed update to the cells automatically selects the first network interface of the appropriate type on each node: the interface whose name is first in alphabetical order.

For example, here is a system that is under Grid Infrastructure. It has multiple InfiniBand, Ethernet, and Ethernet over InfiniBand (bondeth*) network interfaces. This is the list of interfaces:

[root@mynode ~]# ip -o -f inet addr show
1: lo inet 127.0.0.1/8
2: eth0 inet 12.17.207.156/21
3: eth1 inet 16.10.145.12/21
19: bondeth0 inet 12.17.128.15/20
20: bondeth2 inet 16.10.230.160/20
21: bondeth4 inet 192.168.1.45/20
30: bondib0 inet 192.168.31.178/21
31: bondib1 inet 192.168.129.2/21
32: bondib2 inet 192.168.199.205/21
33: bondib3 inet 192.168.216.31/21
34: bondib4 inet 192.168.249.129/21

When the Oracle Big Data SQL installer runs on this system, the following interfaces are selected.

- 192.168.31.178/21 is selected for the InfiniBand connection configured in cellinit.ora. Among the InfiniBand interfaces on this system, the interface name bondib0 is first in an ascending alphabetical sort.

- 12.17.128.15/20 is selected for an Ethernet-over-InfiniBand connection configured in celliniteth.ora.

Note:

This example demonstrates an additional selection factor: Ethernet over InfiniBand takes precedence over standard Ethernet. The bondeth0 interface name is first in this case.
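The selection in this example can be reproduced from the ip listing, which lets you predict which addresses will land in cellinit.ora and celliniteth.ora before you install. This sketch assumes interfaces are recognized by name only (bondib* for InfiniBand, bondeth* for Ethernet over InfiniBand, eth* for standard Ethernet), which matches the example but is not necessarily how Grid classifies interfaces; pick_cell_interfaces and first_addr are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical sketch of the Grid-managed interface selection described above.
# Assumes interfaces are classified by name: bondib* = InfiniBand,
# bondeth* = Ethernet over InfiniBand, eth* = standard Ethernet.

# first_addr PREFIX: reads "NAME ADDRESS" lines on stdin and prints the address
# of the alphabetically first interface whose name starts with PREFIX.
first_addr() {
  awk -v p="$1" 'index($1, p) == 1' | LC_ALL=C sort -k1,1 | awk 'NR == 1 { print $2 }'
}

# pick_cell_interfaces: reads `ip -o -f inet addr show` output on stdin.
pick_cell_interfaces() {
  local listing ib eth
  # Keep only the interface name (field 2) and the address/prefix (field 4).
  listing=$(awk '{ print $2, $4 }')
  ib=$(printf '%s\n' "$listing" | first_addr bondib)
  # Ethernet over InfiniBand takes precedence over standard Ethernet.
  eth=$(printf '%s\n' "$listing" | first_addr bondeth)
  if [ -z "$eth" ]; then
    eth=$(printf '%s\n' "$listing" | first_addr eth)
  fi
  echo "cellinit.ora: ${ib:-none}"
  echo "celliniteth.ora: ${eth:-none}"
}
```

On a live node you would pipe the real listing into it (ip -o -f inet addr show | pick_cell_interfaces). Sorting with LC_ALL=C gives a plain byte-order sort, so bondib10 sorts before bondib2.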

How the Installation (or SmartScan) may Fail Under These Conditions

It is possible that diskmon cannot connect to the Oracle Big Data SQL cells via the network interface selected according to the logic described above. The correct subnet (one that diskmon can reach) may not appear first in an alphabetical sort.

How to Fix the Problem

You can manually change the IP addresses in the cellinit.ora and celliniteth.ora files. These files are in /etc/oracle/cell/network-config on each node.

- Stop the CRS process. (Be sure to do this before you edit the cell files. If you do not, diskmon may hang.)

- Edit cellinit.ora and/or celliniteth.ora. Change the IP addresses as needed.

- Restart CRS.
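The edit in the second step can be scripted so that a backup is kept. This is a minimal sketch: replace_cell_ip is a hypothetical helper that performs a plain text substitution without any knowledge of the file's key names, so pass the complete address/prefix strings exactly as they appear in the file (a shorter address could otherwise match inside a longer one). Run it as root while CRS is stopped (for example, between crsctl stop crs and crsctl start crs), and repeat it on each node.

```shell
#!/bin/sh
# Hypothetical helper: replace one IP address/prefix with another in a cell
# file, keeping the previous version as FILE.bak. Run only while CRS is stopped.
# Usage: replace_cell_ip FILE OLD_ADDR/PREFIX NEW_ADDR/PREFIX
replace_cell_ip() {
  local file="$1" old="$2" new="$3" old_re
  if [ ! -f "$file" ]; then
    echo "no such file: $file" >&2
    return 1
  fi
  if ! grep -qF -- "$old" "$file"; then
    echo "$old not found in $file" >&2
    return 1
  fi
  # Escape the dots so sed matches them literally instead of "any character".
  old_re=$(printf '%s' "$old" | sed 's/[.]/\\./g')
  # '#' is used as the sed delimiter because the values contain '/'.
  sed -i.bak "s#${old_re}#${new}#g" "$file"
}
```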

3.2 About the Database-Side Installation Directory

You start the database side of the installation by unpacking the database-side installation bundle and executing the run file it contains. The run file creates an installation directory under $(orabasehome)/BDSJaguar-4.1.2. For example:

$(orabasehome)/BDSJaguar-4.1.2/cdh510-6-node1.mycluster.<domain>.com

The installation of Oracle Big Data SQL is not finished when this directory is created. The directory is a staging area that contains all of the files needed to complete the installation on the database node.

There can be Oracle Big Data SQL connections between the database and multiple Hadoop clusters. Each connection is established through a separate database-side installation and therefore creates a separate installation directory. The segments in the name of the installation directory enable you to identify the Hadoop cluster in this specific connection:

<Hadoop cluster name>-<number of nodes in the cluster>-<FQDN of the cluster management server node>

You should keep this directory. It captures the latest state of the installation, and you can use it to regenerate the installation if necessary. Furthermore, if in the future you need to adjust the database side of the installation to Hadoop cluster changes, then the updates generated by the Jaguar reconfigure command are applied to this directory.
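Because each cluster connection gets its own directory, it can be handy to decode a directory name back into its segments, for example when several connections exist. A minimal sketch, assuming the Hadoop cluster name itself contains no hyphen (otherwise the split is ambiguous); parse_install_dir is a hypothetical name, and example.com stands in for your domain.

```shell
#!/bin/sh
# Hypothetical helper: split an installation directory name of the form
# <cluster name>-<number of nodes>-<FQDN of the cluster management server node>.
# Assumes the cluster name contains no '-'.
parse_install_dir() {
  local name rest cluster nodes manager
  name=$(basename "$1")
  cluster=${name%%-*}    # text before the first '-'
  rest=${name#*-}        # text after the first '-'
  nodes=${rest%%-*}      # text before the second '-'
  manager=${rest#*-}     # everything after the second '-'

  # The node count must be a non-empty run of digits.
  case $nodes in
    ''|*[!0-9]*) echo "unexpected directory name: $name" >&2; return 1 ;;
  esac
  # Both separators must be present and no segment may be empty.
  if [ -z "$cluster" ] || [ "$rest" = "$name" ] || [ "$manager" = "$rest" ] || [ -z "$manager" ]; then
    echo "unexpected directory name: $name" >&2
    return 1
  fi
  printf 'cluster=%s\nnodes=%s\nmanager=%s\n' "$cluster" "$nodes" "$manager"
}
```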

Consider applying permissions that would prevent the installation directory from being modified or deleted by any user other than oracle (or another database owner).
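One way to apply such permissions, as a sketch only: run the hypothetical helper below as the database owner against $(orabasehome)/BDSJaguar-4.1.2, so that each cluster directory is also protected by its parent. Deleting an entry requires write permission on the directory that contains it, so the BDSJaguar-4.1.2 directory itself is only as safe as the permissions on $(orabasehome). The owner's own write permission is left in place.

```shell
#!/bin/sh
# Hypothetical helper: remove group and other write permission from a
# Big Data SQL installation tree. Run it as the database owner.
lock_install_dir() {
  if [ ! -d "$1" ]; then
    echo "not a directory: $1" >&2
    return 1
  fi
  # Without write permission on a directory, other users can neither create,
  # rename, nor delete entries in it; files lose group/other write as well.
  chmod -R go-w "$1"
}
```

For example: lock_install_dir "$(orabasehome)/BDSJaguar-4.1.2".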

3.3 Steps for Installing on Oracle Database Nodes

To install the database side of Oracle Big Data SQL, copy over the zip file containing the database-side installation bundle that was created on the Hadoop cluster management server, unzip it, execute the run file it contains, then run the installer.

Perform the procedure in this section as the database owner (oracle or another user). You stage the bundle in a temporary directory, but when you unpack the bundle and execute the run file it contains, the installation package is installed in a subdirectory under $(orabasehome). For example:

$(orabasehome)/BDSJaguar-4.1.2/cdh510-6-node1.mycluster.<domain>.com

Before starting, check that ORACLE_HOME and ORACLE_SID are set correctly. You should also review the script parameters in Command Line Parameter Reference for bds-database-install.sh so that you know which ones to include in the install command for a given environment.

How Many Times Do I Run the Installation?

You must perform the installation for each instance of each database. For example, if you have a non-CDB database and a CDB database (DBA and DBB respectively, in the example below) on a single two-node RAC, then you would install Oracle Big Data SQL on both nodes as follows:

- On node 1:

./bds-database-install.sh --db-resource=DBA1 --cdb=false
./bds-database-install.sh --db-resource=DBB1 --cdb=true

- On node 2:

./bds-database-install.sh --db-resource=DBA2 --cdb=false
./bds-database-install.sh --db-resource=DBB2 --cdb=true
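If you script these per-node runs, a loop such as the following keeps the instance names consistent. It is a sketch that assumes, as in the example above, that each instance name is the database name followed by the node number; the DATABASES variable and the install_on_node function are hypothetical, and setting DRY_RUN=1 prints the commands instead of running them.

```shell
#!/bin/sh
# Hypothetical: one "name:cdb-flag" entry per database, as in the example above.
DATABASES="DBA:false DBB:true"

# Run (or, with DRY_RUN=1, print) the installer once per database instance on
# this node. Assumes instance names are the database name plus the node number.
install_on_node() {
  local node="$1" entry db cdb
  for entry in $DATABASES; do
    db=${entry%%:*}    # part before ':'
    cdb=${entry#*:}    # part after ':'
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "./bds-database-install.sh --db-resource=${db}${node} --cdb=${cdb}"
    else
      ./bds-database-install.sh "--db-resource=${db}${node}" "--cdb=${cdb}" || return 1
    fi
  done
}
```

On node 2, for example, you could review the commands with DRY_RUN=1 install_on_node 2 from the installation directory before running install_on_node 2.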

Copy Over the Components and Prepare for the Installation

Copy over and install the software on each database node in sequence from node 1 to node n.

- If you have not already done so, use your preferred method to copy over the database-side installation bundle from the Hadoop cluster management server to the Oracle Database server. If there are multiple bundles, be sure to select the correct bundle for the cluster that you want to connect to Oracle Database. Copy the bundle to any secure directory that you would like to use as a temporary staging area.

In this example, the bundle is copied over to /opt/tmp:

scp root@<hadoop node name>:/opt/oracle/BDSJaguar/db-bundles/bds-4.1.2-db-<Hadoop cluster>-200421.0517.zip /opt/tmp

- If you generated a database request key, then also copy that key over to the Oracle Database server.

# scp root@<hadoop node name>:/opt/oracle/BDSJaguar/dbkeys/<database name or other name>.reqkey oracle@<database_node>:/opt/tmp

- Then, cd to the directory where you copied the file (or files).

- Unzip the bundle. You will see that it contains a single, compressed run file.

- Check that the prerequisite environment variables, $ORACLE_HOME and $ORACLE_SID, are set.

- Run the file in order to unpack the bundle into $(orabasehome). For example:

$ ./bds-4.1.2-db-cdh510-170309.1918.run

Because you can set up independent Oracle Big Data SQL connections between an Oracle Database instance and multiple Hadoop clusters, the run command unpacks the bundle to a cluster-specific directory under $(orabasehome)/BDSJaguar-4.1.2. For example:

$ ls $(orabasehome)/BDSJaguar-4.1.2
cdh510-6-node1.mycluster.<domain>.com  test1-3-node1.myothercluster.<domain>.com

If the BDSJaguar-4.1.2 directory does not already exist, it is created as well.

- If you generated a database request key, then copy it into the newly created installation directory. For example:

$ cp /opt/tmp/mydb.reqkey $(orabasehome)/BDSJaguar-4.1.2/cdh510-6-node1.mycluster.<domain>.com

Tip:

You also have the option to leave the key file in the temporary location and use the --reqkey parameter in the installation command to tell the script where the key file is located. This parameter lets you specify a non-default request key filename and/or path. However, the install script only detects the key in the installation directory when the key filename is the same as the database name. Otherwise, even if the key is local, if you gave it a different name, then you must still use --reqkey to identify it to the install script.

- If you plan to authenticate with a Kerberos principal that is not the same as the Kerberos principal used on the Hadoop side, then copy the keytab for that principal into the installation directory.

Skip this step if you plan to use the same principal on both sides of the installation. A copy of the keytab that was used for authentication in the Hadoop cluster is already in the installation directory.
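The rule in the Tip above (the key is detected automatically only when it is named after the database) can be checked before you run the installer. A minimal sketch: reqkey_arg is a hypothetical helper, and it assumes the parameter takes its value in the --reqkey=<path> form, by analogy with the other parameters shown in this chapter.

```shell
#!/bin/sh
# Hypothetical helper: decide whether the installer needs a --reqkey argument.
# Usage: reqkey_arg INSTALL_DIR DB_NAME [KEY_PATH]
# Prints nothing if <DB_NAME>.reqkey is already in INSTALL_DIR (auto-detected),
# prints --reqkey=KEY_PATH if KEY_PATH exists, and fails otherwise.
# The --reqkey=<path> form is an assumption based on the other parameters.
reqkey_arg() {
  local dir="$1" db="$2" key="${3:-}"
  if [ -f "$dir/$db.reqkey" ]; then
    return 0
  fi
  if [ -n "$key" ] && [ -f "$key" ]; then
    printf '%s\n' "--reqkey=$key"
    return 0
  fi
  echo "no request key found for $db" >&2
  return 1
}
```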

Now that the installation directory for the cluster is in place, you are ready to install the database side of Oracle Big Data SQL.

Install Oracle Big Data SQL on the Oracle Database Node

Important:

The last part of the installation may require a single restart of Oracle Database under either or both of these conditions:

- If the database does not run under Oracle Grid Infrastructure. In this case, the installation script makes a change to the pfile or spfile configuration file in order to support standalone operation of diskmon.

- If there are changes to the IP address or the communication protocol recorded in cellinit.ora. These parameters define the connection to the cells on the Hadoop cluster. For example, if this is a re-installation and the IP address for the Hadoop/Oracle Database connection changes from an Ethernet address to an InfiniBand address and/or the protocol changes (between TCP and UDP), then a database restart is required.

- Start the database if it is not running.

- Log on to the Oracle Database node as the database owner and change directories to the cluster-specific installation directory under $(orabasehome)/BDSJaguar-4.1.2. For example:

$ cd $(orabasehome)/BDSJaguar-4.1.2/cdh510-6-node1.my.<domain>.com

- Run bds-database-install.sh, the database-side Oracle Big Data SQL installer. You may need to include some optional parameters.

$ ./bds-database-install.sh [options]

- Restart the database if either of the conditions listed above applies.

See Also:

The bds-database-install.sh installer command supports parameters that are ordinarily optional, but may be required for some configurations. See the Command Line Parameter Reference for bds-database-install.sh.

Extra Step If You Enabled Database Authentication or Hadoop Secure Impersonation

- If database_auth_enabled or impersonation_enabled was set to “true” in the configuration file used to create this installation bundle, copy the ZIP file generated by the database-side installer back to the Hadoop cluster management server and run the Jaguar “Database Acknowledge” operation. This completes the setup of login authentication between the Hadoop cluster and Oracle Database.

Find the ZIP file that contains the GUID-key pair in the installation directory. The file is named according to this format:

<name of the Hadoop cluster>-<number of nodes in the cluster>-<FQDN of the node where the cluster management server is running>-<FQDN of this database node>.zip

For example:

$ ls $(orabasehome)/BDSJaguar-4.1.2/cdh510-6-node1.my.<domain>.com/*.zip
mycluster1-18-mycluster1node03.<domain>.com-myoradb1.<domain>.com.zip

- Copy the ZIP file back to /opt/oracle/DM/databases/conf on the Hadoop cluster management server.

- Log on to the cluster management server as root, cd to BDSJaguar under the directory where Oracle Big Data SQL was installed, and run databaseack (the Jaguar “database acknowledge” routine). Pass in the configuration file that was used to generate the installation bundle (bds-config.json or other).

# cd <Big Data SQL Install Directory>/BDSJaguar
# ./jaguar databaseack bds-config.json
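Given the naming format above, the ZIP file to copy back can be located by name instead of with a wildcard, which avoids picking up the wrong file when several exist. This sketch uses a hypothetical ack_zip_path helper; the host names in the comment are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: print the path of the Database Acknowledge ZIP file.
# Usage: ack_zip_path INSTALL_DIR CLUSTER NODES MANAGER_FQDN DB_FQDN
ack_zip_path() {
  local zip="$1/$2-$3-$4-$5.zip"
  if [ ! -f "$zip" ]; then
    echo "not found: $zip" >&2
    return 1
  fi
  printf '%s\n' "$zip"
}

# Example (placeholders in angle brackets), run from the installation directory:
#   scp "$(ack_zip_path . mycluster1 18 <manager FQDN> <database FQDN>)" \
#       root@<cluster management server>:/opt/oracle/DM/databases/conf/
```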

3.3.1 Command Line Parameter Reference for bds-database-install.sh

The bds-database-install.sh script accepts a number of command line parameters. Each parameter is optional, but the script requires at least one.

Table 3-1 Parameters for bds-database-install.sh

| Parameter | Function |
|---|---|
| --install | Install the Oracle Big Data SQL connection to this cluster. Note: In mid-operation, this script will pause and prompt you to run a second script as root. As root, open a session in a second terminal and run the script there. When that script is complete, return to the original terminal and press Enter to resume the … Because Oracle Big Data SQL is installed on the database side as a regular user (not a superuser), tasks that must be done as root and/or the Grid user require the installer to spawn shells to run other scripts under those accounts while … In some earlier releases, the … |
| --version | Show the bds-database-install.sh script version. |
| --info | Show information about the cluster, such as the cluster name, cluster management server host, and the web server. |
| --grid-home | Specifies the Oracle Grid home directory. |
| --crs | Use … The installer always checks to verify that Grid is running. If Grid is not running, then the installer assumes that Grid is not installed and that the database is single-instance. It then automatically sets the … If … |
| --alternate-principal | This optional parameter enables you to specify a Kerberos principal other than the database owner. It can be used in conjunction with the --install, --reconfigure, and --uninstall parameters. See About Using an Alternate Kerberos Principal on the Database Side below for details. |
| --alternate-repo | CDH 6 uses yum for the client installations. The … For CDH 6.x prior to 6.3.3, --alternate-repo is optional. If Internet access is open, then by default the Hadoop-side Jaguar installer should be able to download the clients from the public Cloudera repository and integrate them into the database-side installation bundle that it generates. If the Internet is not accessible, or if you want to download the clients from a different local or remote repository, use --alternate-repo. To enable the installer to download directly from the Cloudera repository (or elsewhere), pass the URL, as in this example for CDH 6.2.1: … For CDH 6.3.3 and later, --alternate-repo is required, because you must provide Cloudera login credentials in order to download files for these releases from Cloudera. The Big Data SQL installer cannot automatically download CDH 6.3.3 and later files from the public repository. For these later releases, include your Cloudera user account and password, as in this example: … Note: You can set up a local yum repository that includes the correct version of the HDFS, Hive, and HBase clients and then point to that local repo instead of the Cloudera public repository: … There are several options if HTTP is not available on the database system: … The --alternate-repo parameter is required for CDH 6.3.3 and later, optional for CDH 6.x prior to 6.3.3, and unavailable for CDH 5 as well as for HDP systems. |
| --cdb | Create database objects on all PDBs for CDB databases. |
| --reqkey | This parameter tells the installer the name and location of the request key file. Database Authentication is enabled by default in the configuration. Unless you do not intend to enable this feature, then as one of the steps to complete the configuration, you must use … If the request key filename is provided without a path, then the key file is presumed to be in the installation directory. The installer will find the key if the filename is the same as the database name. For example, in this case the request key file is in the install directory and the name is the same as the database name: … This file is consumed only once on the database side in order to connect the first Hadoop cluster to the database. Subsequent cluster installations to connect to the same database use the configured key. You do not need to resubmit the … |
| --uninstall | Uninstall the Oracle Big Data SQL connection to this cluster. If you installed using the --alternate-principal parameter, then also include this parameter when you uninstall. See --alternate-principal in this table for more detail. |
| --reconfigure | Reconfigures bd_cell network parameters, Hadoop configuration files, and Big Data SQL parameters. The Oracle Big Data SQL installation to connect to this cluster must already exist. Run reconfigure when a change has occurred on the Hadoop cluster side, such as a change in the DataNode inventory. |
| --databaseack-only | Create the Database Acknowledge zip file. (You must then copy the zip file back to … |
| --mta-restart | MTA extproc restart. (Oracle Big Data SQL must already be installed on the database server.) |
| --mta-setup | Set up MTA extproc with no other changes. (Oracle Big Data SQL must already be installed on the database server.) |
| --mta-destroy | Destroy MTA extproc and make no other changes. (Oracle Big Data SQL must already be installed on the database server.) |
| --aux-run-mode | Because Oracle Big Data SQL is installed on the database side as a regular user (not a superuser), tasks that must be done as … The Mode options are: … |
| --root-script | Enables or disables the startup root script execution. |
| --no-root-script | Skip root script creation and execution. |
| --root-script-name | Set a name for the root script (the default name is based on the PID). |
| --pdb-list-to-install | For container-type databases, Oracle Big Data SQL is by default set up on all open PDBs. This parameter limits the setup to the specified list of PDBs. |
| --restart-db | If a database restart is required, then by default the install script prompts the user to choose between doing the restart now or later. Setting --restart-db=yes tells the script in advance to proceed with any needed restart without prompting the user, which is useful for unattended executions. If --restart-db=no, then the prompt is displayed and the installation waits for a response. |
| --skip-db-patches-check | To skip the patch validation, add this parameter when you run the installer: … By default, database patch requirements are checked when you run … |
| --set-default-cluster | To set the default cluster to the current cluster in a multi-cluster installation, add this parameter when you run the installer: … In a multi-cluster installation, the first cluster installed is considered the default cluster. If more clusters are installed, all queries use the Java clients provided by the default cluster regardless of the version of the actual cluster receiving the query. In some cases you may want to change the default, for example, to use newer Java clients. If you pass this parameter, the current cluster is set as the default. The required database links and file system links are regenerated accordingly, and the existing multi-threading agents are restarted. |
| --force-incompatible | To allow different versions of Hadoop, set this parameter when you run the installer: … Without this parameter, the installer prevents the installation of clusters with different major or minor Hadoop versions. For example, if a Hadoop 2.6 cluster is installed and then a Hadoop 3.0 cluster is installed, the script does not allow the second install. This behavior avoids possible Hadoop version incompatibilities. However, this parameter can be used to override this behavior. When the --force-incompatible parameter is used and two clusters of different Hadoop versions are detected, the user simply gets a warning. |

The --root-script-only parameter from previous releases is obsolete.

3.3.2 About Using an Alternate Kerberos Principal on the Database Side

The Kerberos principal that represents the Oracle Database owner is the default, but you can also choose a different principal for Oracle Big Data SQL authentication on Oracle Database.

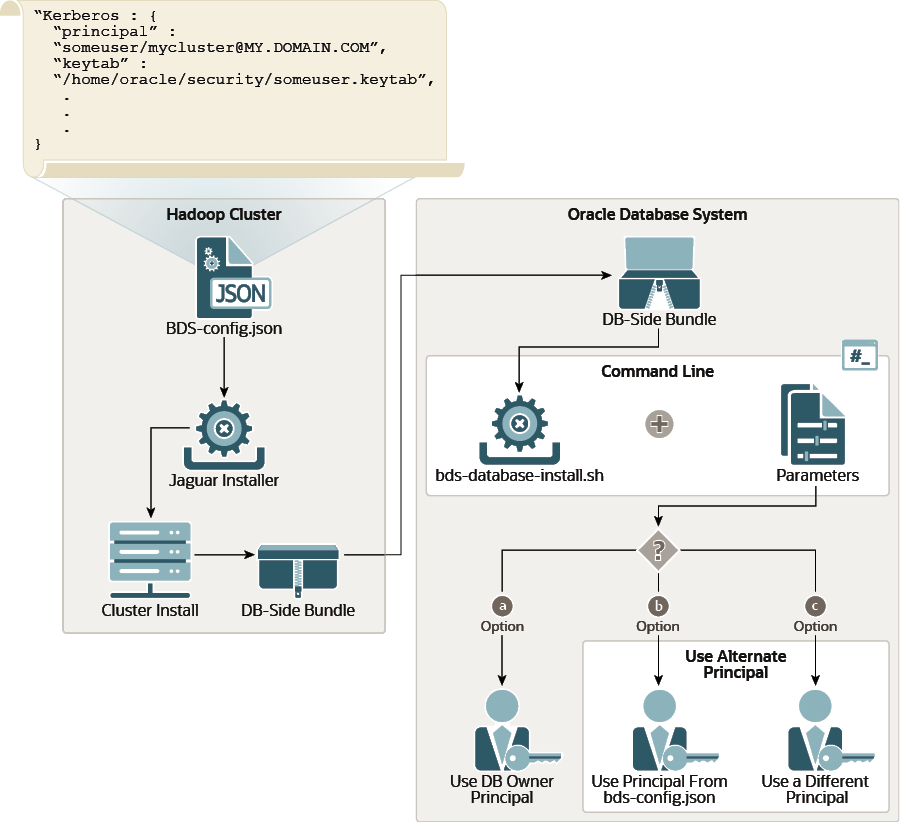

The figure below shows how alternate principals are handled in the installation. On the Hadoop side of the install, use the Kerberos principal and keytab specified in the bds-config.json file to set up Kerberos authentication for Big Data SQL on the Hadoop cluster. The same keytab is included in the installation bundle that you unzip on the database side. When you unzip the bundle and run the installer on the database side, you have three choices for setting up Kerberos authentication:

a. Ignore the principal and keytab provided with the installation bundle and use the database owner principal.

b. Use the principal and keytab provided with the bundle.

c. Specify a different principal.

Figure 3-1 Using the --alternate-principal Parameter with bds-database-install.sh


This is the explanation of options a, b, and c in the figure above.

Option a: Use the database owner principal for Kerberos authentication.

If the keytab of the database owner principal is present in the staging directory and you want to use that principal, then do not include the --alternate-principal parameter when you invoke the installer. By default, the installer uses the database owner principal.

$ ./bds-database-install.sh --install <other parameters, except --alternate-principal>

Option b: Use the principal included with the installation bundle for authentication.

Include --alternate-principal in the install command, but do not supply a value for this parameter. This tells the installer to use the principal that was specified in the Jaguar bds-config.json configuration file on the Hadoop side of the installation. This principal is included in the database-side installation bundle and already exists in the staging directory, which is the directory that the installation run file generated. It includes the installation files for connection to a specific Hadoop cluster and node. For example, $(orabasehome)/BDSJaguar-4.1.2/cdh510-6-node1.mycluster.mydomain.com.

$ ./bds-database-install.sh --install --alternate-principal <other parameters>

Option c: Use a different principal for authentication.

Include --alternate-principal on the command line and pass the principal as the value.

… bds-database-install.sh. When you ran the run file that unpacked the database-side install bundle, it created a database-side installation directory. Copy the alternate keytab file into this directory. Then, rename the file by prefixing "bds_" to the principal. The prefix tells the installer to look for the principal in this keytab file. You can then run the command as follows:

./bds-database-install.sh --reconfigure --alternate-principal=<primary>/<instance>@<REALM>

Note:

Any bds-database-install.sh operation that includes the --alternate-principal parameter generates a script that must be run as root. You will be prompted to run this script and then to press Enter to continue and complete the operation. For example:

bds-database-install: root shell script : /home/oracle/db_home2/install/bds-database-install-28651-root-script-scaj42.sh
please run as root:
/home/oracle/db_home2/install/bds-database-install-28651-root-script-<cluster name>.sh
waiting for root script to complete, press <enter> to continue checking.. q<enter> to quit

Changing the Kerberos Principal Post Release

You can use the reconfigure operation to switch to a different principal.

./bds-database-install.sh --reconfigure --alternate-principal="<other_primary>/<instance>@<REALM>"

Important:

If the installer finds the keytab of the database owner principal in the staging directory (the directory where the run file unpacked the installer files), then the database owner principal is used and the --alternate-principal parameter is ignored.

Removing a Kerberos Principal and Keytab When You Uninstall Big Data SQL

If you specified an alternate principal when you installed Big Data SQL, then if you uninstall Big Data SQL later, include the same --alternate-principal parameter and value, so that the uninstall operation removes the principal and keytab from the system.

./bds-database-install.sh --uninstall --alternate-principal="bds_<primary>/<instance>@<REALM>"

3.4 Granting User Access to Oracle Big Data SQL

In Oracle Big Data SQL releases prior to 3.1, access is granted to the PUBLIC user group. In the current release, you must do the following for each user who needs access to Oracle Big Data SQL:

- Grant the BDSQL_USER role.

- Grant read privileges on the BigDataSQL configuration directory object.

- Grant read privileges on the Multi-User Authorization security table to enable impersonation.

For example, to grant access to user1:

SQL> grant BDSQL_USER to user1;

SQL> grant read on directory ORACLE_BIGDATA_CONFIG to user1;

SQL> grant read on BDSQL_USER_MAP to user1;

See Also:

"Use the DBMS_BDSQL PL/SQL Package" in the Oracle Big Data SQL User's Guide explains how to provide users indirect access to Hadoop data. The Multi-User Authorization feature that this package implements uses Hadoop Secure Impersonation to enable the oracle account to execute tasks on behalf of other designated users.
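When several users need access, the three GRANT statements above can be generated per user rather than typed by hand. The following is a minimal bash sketch, not part of the Oracle Big Data SQL kit: the function name bdsql_grant_sql is hypothetical, and it only prints SQL so that you can review the statements before running them in SQL*Plus as a user who is allowed to issue these grants.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with Oracle Big Data SQL): prints the three
# GRANT statements from this section for each user name given as an argument.
bdsql_grant_sql() {
  # Deliberately strict: plain unquoted identifiers only, so nothing odd
  # (quotes, semicolons) can be spliced into the generated SQL.
  local re='^[A-Za-z][A-Za-z0-9_]*$'
  local user
  for user in "$@"; do
    if [[ ! $user =~ $re ]]; then
      echo "invalid user name: $user" >&2
      return 1
    fi
    printf 'grant BDSQL_USER to %s;\n' "$user"
    printf 'grant read on directory ORACLE_BIGDATA_CONFIG to %s;\n' "$user"
    printf 'grant read on BDSQL_USER_MAP to %s;\n' "$user"
  done
}

# Example: bdsql_grant_sql user1 user2 > bdsql_grants.sql
# Review bdsql_grants.sql, then run it from SQL*Plus.
```

Writing to a file first, instead of piping straight into SQL*Plus, keeps a reviewable record of exactly which privileges were granted.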

3.5 Enabling Object Store Access

If you want to use Oracle Big Data SQL to query object stores, certain database properties and Network ACL values must be set on the Oracle Database side of the installation. The installation provides two SQL scripts you can use to do this.

The ORACLE_BIGDATA driver enables you to create external tables over data in cloud object stores. Currently, Oracle Object Store, Microsoft Azure, and Amazon S3 are supported. You can create external tables over Parquet, Avro, and text files in these stores. The first step is to set up access to the object stores as follows.

Run set_parameters_cdb.sql and allow_proxy_pdb.sql to Enable Object Store Access

- After you run bds-database-install.sh to execute the database-side installation, find these two SQL script files under $(orabasehome), in the cluster subdirectory under the BDSJaguar directory:

set_parameters_cdb.sql
allow_proxy_pdb.sql

- Open and read each of these files. Confirm that the configuration is correct.

Important:

Because there are security implications, carefully check that the HTTP server setting and other settings are correct.

- In the CDB root (CDB$ROOT), run set_parameters_cdb.sql.

- In each PDB that needs access to object stores, run allow_proxy_pdb.sql.

In a RAC database, you only need to run these scripts on one instance of the database.
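The order of the two scripts (root first, then each PDB) can be captured in a single SQL*Plus script. The following bash sketch is hypothetical: the function name bds_object_store_sql is illustrative, the script directory must be supplied by you because its exact location under $(orabasehome) and BDSJaguar depends on your installation, and it assumes both scripts take no arguments, do not exit SQL*Plus themselves, and can be run after ALTER SESSION SET CONTAINER from a common user such as SYS. Read both scripts first, as the Important note above requires.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a SQL*Plus script that runs set_parameters_cdb.sql
# in CDB$ROOT, then allow_proxy_pdb.sql in each PDB named on the command line.
# $1 = directory holding both scripts (verify the real path on your system).
bds_object_store_sql() {
  if [[ $# -lt 2 ]]; then
    echo "usage: bds_object_store_sql <script_dir> <pdb> [<pdb> ...]" >&2
    return 1
  fi
  local dir="$1"
  shift
  # Stop at the first SQL error instead of continuing into the PDBs.
  echo 'whenever sqlerror exit failure'
  # Single quotes keep CDB$ROOT literal (no shell expansion of $ROOT).
  echo 'alter session set container = CDB$ROOT;'
  echo "@${dir}/set_parameters_cdb.sql"
  local pdb
  for pdb in "$@"; do
    echo "alter session set container = ${pdb};"
    echo "@${dir}/allow_proxy_pdb.sql"
  done
}

# Example: bds_object_store_sql /path/to/scripts PDB1 PDB2 > enable_object_store.sql
```

On a RAC database, run the generated script on one instance only, as noted above.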

3.6 Enabling Hive Transactional Node Tables

To enable transactional node table support in Hive, you must manually add parameters inside the <configuration> XML tag of the Cloudera configuration file.

Add the following entries to the $ORACLE_HOME/bigdatasql/clusters/<your cluster name>/config/hive-site.xml file:

<property>
  <name>hive.exec.dynamic.partition.mode</name>
  <value>nonstrict</value>
</property>
<property>
  <name>hive.txn.manager</name>
  <value>org.apache.hadoop.hive.ql.lockmgr.DbTxnManager</value>
</property>

3.7 Installing and Configuring Oracle SQL Access to Kafka

If you work with Apache Kafka clusters as well as Oracle Database, Oracle SQL Access to Kafka (OSAK) can give you access to Kafka brokers through Oracle SQL. You can then query data in the Kafka records and also join the Kafka data with data from Oracle Database tables.

After completing the Oracle Database side of the Oracle Big Data SQL installation, you can configure OSAK connections to your Kafka cluster.

Installation Requirements

- A cluster running Apache Kafka. Kafka version 2.3.0 is supported, as are all versions of Kafka that run on Oracle Big Data Appliance.

- A version of Oracle Database from 12.2 to 19c.

Note:

Big Data SQL supports Oracle Database 12.1, but OSAK does not.

Steps Performed by the Oracle Big Data SQL Installer

OSAK is included in the database-side installation bundle. As part of the installation, the bds-database-install.sh installer described in the previous section of this guide does the following:

- Copies the OSAK kit into the Big Data SQL directory ($(orabasehome)/bigdatasql/), unzips it, and verifies the contents of the kit.

- Creates the symlink $(orabasehome)/bigdatasql/orakafka, which links to the extracted OSAK directory.

- Sets JAVA_HOME for OSAK to $(orabasehome)/bigdatasql/jdk.

The following excerpt from the bds-database-install.sh output shows these steps. This example is from an Oracle Database 19c system. The output is the same on earlier database releases except for the shiphome path.

...
bds-database-setup: installing orakafka kit
Step1: Check for valid JAVA_HOME
--------------------------------------------------------------
Found /u03/app/oracle/19.1.0/dbhome_orcl/shiphome/database/bigdatasql/jdk/bin/java, \
JAVA_HOME path is valid.
Step1 succeeded.
Step2: JAVA version check
--------------------------------------------------------------
java version "1.8.0_171"
Java(TM) SE Runtime Environment (build 1.8.0_171-b11)
Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)
Java version >= 1.8
Step2 succeeded.
Step3: Creating configure_java.sh script
--------------------------------------------------------------
Wrote to /u03/app/oracle/19.1.0/dbhome_orcl/shiphome/database/bigdatasql/orakafka-1.1.0\
/bin/scripts/configure_java.sh
Step3 succeeded.
Successfully configured JAVA_HOME in /u03/app/oracle/19.1.0/dbhome_orcl/shiphome/database\
/bigdatasql/orakafka-1.1.0/bin/scripts/configure_java.sh
The above information is written to /u03/app/oracle/19.1.0/dbhome_orcl/shiphome/database\
/bigdatasql/orakafka-1.1.0/logs/set_java_home.log.2020.02.20-17.40.23
...
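To confirm afterwards that the installer left the OSAK pieces where the steps above describe, you can check for the orakafka symlink, the bundled Java binary, and the generated configure_java.sh. This bash function is a hypothetical sketch (check_osak_install is not an Oracle tool); it only tests for files at the locations named in this section and does not validate their contents.

```shell
#!/usr/bin/env bash
# Hypothetical post-install check (not part of the installer): confirms the OSAK
# artifacts described in this section exist under a base directory.
# On a real system, pass "$(orabasehome)" as $1.
check_osak_install() {
  local base="$1/bigdatasql"
  local status=0
  # The installer creates orakafka as a symlink to the extracted OSAK directory.
  if [[ -L "$base/orakafka" && -d "$base/orakafka" ]]; then
    echo "OK: $base/orakafka -> $(readlink "$base/orakafka")"
  else
    echo "MISSING: symlink $base/orakafka" >&2
    status=1
  fi
  # JAVA_HOME for OSAK is set to $base/jdk.
  if [[ -x "$base/jdk/bin/java" ]]; then
    echo "OK: $base/jdk/bin/java"
  else
    echo "MISSING: $base/jdk/bin/java" >&2
    status=1
  fi
  # Step3 of the installer output writes configure_java.sh here.
  if [[ -f "$base/orakafka/bin/scripts/configure_java.sh" ]]; then
    echo "OK: $base/orakafka/bin/scripts/configure_java.sh"
  else
    echo "MISSING: $base/orakafka/bin/scripts/configure_java.sh" >&2
    status=1
  fi
  return "$status"
}

# Example: check_osak_install "$(orabasehome)"
```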

Steps You Must Perform to Complete the OSAK Installation and Configuration

To complete the installation and configuration, refer to the instructions in the Oracle SQL Access to Kafka README_INSTALL file at $(orabasehome)/bigdatasql/orakafka/doc. The README_INSTALL file tells you how to use orakafka.sh. This script can help you perform the remaining configuration steps:

- Add a new Kafka cluster configuration directory under $(orabasehome)/bigdatasql/orakafka/clusters/.

- Allow a database user to access the Kafka cluster.

- Install the ORA_KAFKA PL/SQL packages in a user schema and create the required database directories.

Important:

If you are setting up access to a secured Kafka cluster, then after generating the cluster directory, you must also set the appropriate properties in this properties file:

$(orabasehome)/bigdatasql/orakafka/clusters/<cluster_name>/conf/orakafka.properties

The properties file includes some tested subsets of possible security configurations. For general information about Kafka security, refer to the Apache Kafka documentation at https://kafka.apache.org/documentation/#security

Working with Oracle Cloud Infrastructure Streaming Service

To work with Oracle Cloud Infrastructure Streaming Service, the following properties must be uncommented and set in the cluster configuration properties file:

<app_data_home>/clusters/<cluster_name>/conf/orakafka.properties

security.protocol=SASL_SSL
sasl.mechanism=PLAIN
#Parameters for JAAS config (Authentication using SASL/PLAIN)
sasl.plain.username=<tenancyName>/<username>/<streamPoolId>
sasl.plain.password=<user auth_token>
#Uncomment the following for SASL/PLAIN authentication
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username=\"${sasl.plain.username}\" password=\"${sasl.plain.password}\";

For more information on Kafka compatibility with Oracle Cloud Infrastructure Streaming Service, refer to Using Streaming with Apache Kafka.
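Because a commented-out line is easy to miss, a quick check that each required key is actually active can save a failed connection attempt. The bash function below is a hypothetical sketch (check_oci_streaming_props is not an OSAK tool); it assumes simple key=value lines and only reports whether each of the five keys listed above appears on a non-comment line. It does not check that placeholder values such as <user auth_token> were replaced.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verifies that the OCI Streaming properties listed above
# are present and uncommented in an orakafka.properties file.
check_oci_streaming_props() {
  local file="$1" key missing=0
  [[ -r "$file" ]] || { echo "cannot read $file" >&2; return 2; }
  for key in security.protocol sasl.mechanism sasl.plain.username \
             sasl.plain.password sasl.jaas.config; do
    # A key counts as set only on a line of the form "key=..." after leading
    # whitespace; lines starting with "#" never match.
    if ! awk -v k="$key" '
        { sub(/^[ \t]+/, "") }
        substr($0, 1, length(k)) == k && substr($0, length(k) + 1) ~ /^[ \t]*=/ { found = 1 }
        END { exit !found }' "$file"; then
      echo "not set (missing or commented out): $key" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example: check_oci_streaming_props <app_data_home>/clusters/<cluster_name>/conf/orakafka.properties
```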

When you have completed the configuration, the next step is to register your Kafka cluster. After registering the cluster, you can start using Oracle SQL Access to Kafka to create views over Kafka topics and query the data.

See Also:

Oracle SQL Access to Kafka in the Oracle Big Data SQL User's Guide shows you how to register your Kafka cluster and start working with OSAK.