2 Using Big Data Spatial and Graph with Spatial Data

This chapter provides conceptual and usage information about loading, storing, accessing, and working with spatial data in a Big Data environment.

- About Big Data Spatial and Graph Support for Spatial Data

Oracle Big Data Spatial and Graph features enable spatial data to be stored, accessed, and analyzed quickly and efficiently for location-based decision making.

- Oracle Big Data Vector and Raster Data Processing

Oracle Big Data Spatial and Graph supports the storage and processing of both vector and raster spatial data.

- Oracle Big Data Spatial Hadoop Image Processing Framework for Raster Data Processing

Oracle Spatial Hadoop Image Processing Framework allows the creation of new combined images resulting from a series of processing phases in parallel.

- Loading an Image to Hadoop Using the Image Loader

The first step to process images using the Oracle Spatial and Graph Hadoop Image Processing Framework is to actually have the images in HDFS, followed by having the images separated into smart tiles.

- Processing an Image Using the Oracle Spatial Hadoop Image Processor

Once the images are loaded into HDFS, they can be processed in parallel using Oracle Spatial Hadoop Image Processing Framework.

- Loading and Processing an Image Using the Oracle Spatial Hadoop Raster Processing API

The framework provides a raster processing API that lets you load and process rasters without creating XML but instead using a Java application. The application can be executed inside the cluster or on a remote node.

- Using the Oracle Spatial Hadoop Raster Simulator Framework to Test Raster Processing

When you create custom processing classes, you can use the Oracle Spatial Hadoop Raster Simulator Framework to test them by "pretending" to plug them into the Oracle Raster Processing Framework.

- Oracle Big Data Spatial Raster Processing for Spark

Oracle Big Data Spatial Raster Processing for Apache Spark is a spatial raster processing API for Java.

- Spatial Raster Processing Support in Big Data Cloud Service

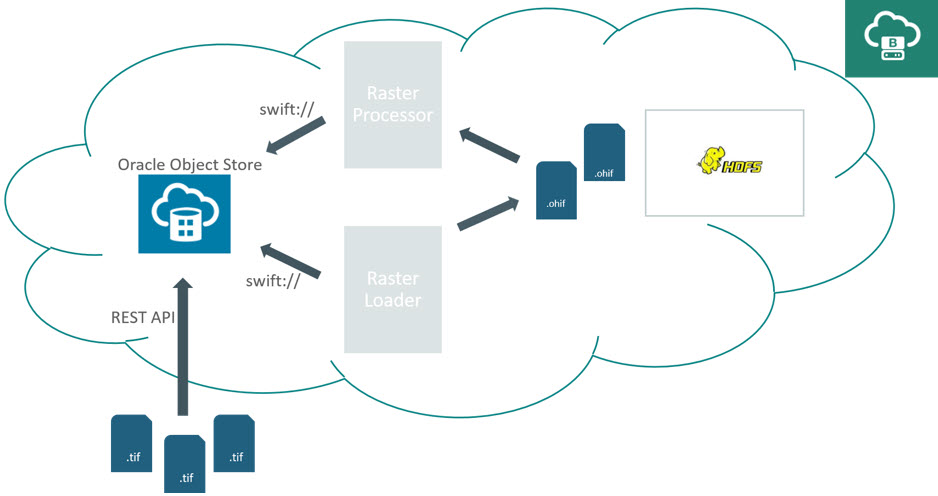

Oracle Big Data Spatial Raster Processing is supported in Big Data Cloud Service (BDCS) by making use of the Oracle Object Storage platform.

- Oracle Big Data Spatial Vector Analysis

Oracle Big Data Spatial Vector Analysis is a Spatial Vector Analysis API, which runs as a Hadoop job and provides MapReduce components for spatial processing of data stored in HDFS.

- Oracle Big Data Spatial Vector Analysis for Spark

Oracle Big Data Spatial Vector Analysis for Apache Spark is a spatial vector analysis API for Java and Scala that provides spatially-enabled RDDs (Resilient Distributed Datasets) that support spatial transformations and actions, spatial partitioning, and indexing.

- Oracle Big Data Spatial Vector Hive Analysis

Oracle Big Data Spatial Vector Hive Analysis provides spatial functions to analyze the data using Hive.

- Using the Oracle Big Data SpatialViewer Web Application

You can use the Oracle Big Data SpatialViewer Web Application (SpatialViewer) to perform a variety of tasks.

2.1 About Big Data Spatial and Graph Support for Spatial Data

Oracle Big Data Spatial and Graph features enable spatial data to be stored, accessed, and analyzed quickly and efficiently for location-based decision making.

Spatial data represents the location characteristics of real or conceptual objects in relation to the real or conceptual space on a Geographic Information System (GIS) or other location-based application.

The spatial features are used to geotag, enrich, visualize, transform, load, and process location-specific two- and three-dimensional geographical images, and to manipulate geometrical shapes for GIS functions.

- What is Big Data Spatial and Graph on Apache Hadoop?

- Advantages of Oracle Big Data Spatial and Graph

- Oracle Big Data Spatial Features and Functions

- Oracle Big Data Spatial Files, Formats, and Software Requirements


2.1.1 What is Big Data Spatial and Graph on Apache Hadoop?

Oracle Big Data Spatial and Graph on Apache Hadoop is a framework that uses MapReduce programs and the analytic capabilities of a Hadoop cluster to store, access, and analyze spatial data. The spatial features provide a schema and functions that facilitate the storage, retrieval, update, and query of collections of spatial data. Big Data Spatial and Graph on Hadoop supports storing and processing spatial images, which can be geometric shapes, raster images, or vector images, stored in any of the several hundred supported formats.

Note:

See Oracle Spatial and Graph Developer's Guide for an introduction to spatial concepts, data, and operations.

2.1.2 Advantages of Oracle Big Data Spatial and Graph

The advantages of using Oracle Big Data Spatial and Graph include the following:

- Unlike some GIS-centric spatial processing systems and engines, Oracle Big Data Spatial and Graph can process both structured and unstructured spatial information.

- Customers are not restricted to storing only one particular form of data in their environment. They can store their data as spatial or nonspatial business data and still use Oracle Big Data to do their spatial processing.

- Because this is a framework, customers can use the available APIs to custom-build their applications or operations.

- Oracle Big Data Spatial can process both vector and raster types of information and images.

2.1.3 Oracle Big Data Spatial Features and Functions

The spatial data is loaded for query and analysis by the Spatial Server and the images are stored and processed by an Image Processing Framework. You can use the Oracle Big Data Spatial and Graph server on Hadoop for:

- Cataloguing the geospatial information, such as geographical map-based footprints, availability of resources in a geography, and so on.

- Topological processing to calculate distance operations, such as nearest neighbor in a map location.

- Categorization to build hierarchical maps of geographies and enrich the map by creating demographic associations within the map elements.

The following functions are built into Oracle Big Data Spatial and Graph:

- Indexing function for faster retrieval of the spatial data.

- Map function to display map-based footprints.

- Zoom function to zoom in and out of specific geographical regions.

- Mosaic and Group function to group a set of image files for processing to create a mosaic or subset operations.

- Cartesian and geodetic coordinate functions to represent the spatial data in one of these coordinate systems.

- Hierarchical function that builds and relates geometric hierarchy, such as country, state, city, postal code, and so on. This function can process the input data in the form of documents or latitude/longitude coordinates.

2.1.4 Oracle Big Data Spatial Files, Formats, and Software Requirements

The stored spatial data or images can be in one of these supported formats:

- GeoJSON files

- Shapefiles

- Both Geodetic and Cartesian data

- Other GDAL supported formats

You must have the following software to store and process the spatial data:

- Java runtime

- GCC compiler (required only when GDAL-supported formats are used)

2.2 Oracle Big Data Vector and Raster Data Processing

Oracle Big Data Spatial and Graph supports the storage and processing of both vector and raster spatial data.


2.2.1 Oracle Big Data Spatial Raster Data Processing

To process raster data, the GDAL loader loads the raster spatial data or images into an HDFS environment. The following basic operations can be performed on raster spatial data:

- Mosaic: Combine multiple raster images to create a single mosaic image.

- Subset: Perform subset operations on individual images.

- Raster algebra operations: Perform algebra operations on every pixel in the rasters (for example, add, divide, multiply, log, pow, sine, sinh, and acos).

- User-specified processing: Raster processing is based on the classes that the user sets to be executed in the mapping and reducing phases.

This feature supports a MapReduce framework for raster analysis operations. Users can custom-build their own raster operations, such as applying an algebraic function to raster data. For example, you can calculate the slope at each point of a digital elevation model or of a 3D representation of a spatial surface, such as a terrain. For details, see Oracle Big Data Spatial Hadoop Image Processing Framework for Raster Data Processing.
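As a rough illustration of what a per-pixel raster algebra operation means, the following Python sketch applies a function to every pixel of a small in-memory raster and combines two rasters pixel by pixel. It is not the framework's implementation: the helper names `local_apply` and `local_combine` are hypothetical, and the raster is modeled as a plain list of rows rather than a GDAL data set.

```python
import math

def local_apply(raster, func):
    """Apply func to every pixel of a raster given as a list of rows."""
    return [[func(pixel) for pixel in row] for row in raster]

def local_combine(a, b, func):
    """Combine two rasters of identical dimensions pixel by pixel."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("rasters must have the same dimensions")
    return [[func(pa, pb) for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]

# A tiny single-band raster used only for illustration.
band = [[1, 4], [9, 16]]

added = local_apply(band, lambda p: p + 5)               # add a constant
roots = local_apply(band, math.sqrt)                     # square root per pixel
summed = local_combine(band, band, lambda p, q: p + q)   # raster + raster

print(added)
print(roots)
print(summed)
```

In the framework itself, the equivalent work is distributed across mappers, each handling one tile; the sketch only shows the per-pixel semantics.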


2.2.2 Oracle Big Data Spatial Vector Data Processing

This feature supports the processing of spatial vector data that is:

- Loaded and stored in a Hadoop HDFS environment

- Stored either as Cartesian or geodetic data

The stored spatial vector data can be used for performing the following query operations and more:

- Point-in-polygon

- Distance calculation

- Anyinteract

- Buffer creation

Several data service operations are supported for the spatial vector data:

- Data enrichment

- Data categorization

- Spatial join

In addition, limited Map Visualization API support is available, for the HTML5 format only. You can access these APIs to create custom operations. For details, see "Oracle Big Data Spatial Vector Analysis."
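To illustrate the point-in-polygon query listed above, the following Python sketch uses the classic ray-casting test on Cartesian coordinates. It is a generic textbook technique shown for orientation only, not the Vector Analysis API; the function name `point_in_polygon` is hypothetical, and geodetic data would need a different treatment.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: count how many polygon edges a horizontal ray
    starting at (x, y) and heading toward +x crosses. An odd count means
    the point is inside. `polygon` is a list of (x, y) vertices."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the ray's y coordinate can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

square = [(0, 0), (4, 0), (4, 4), (0, 4)]
print(point_in_polygon(2, 2, square))
print(point_in_polygon(5, 2, square))
```

Points that fall exactly on an edge are a boundary case that this sketch does not treat specially.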


2.3 Oracle Big Data Spatial Hadoop Image Processing Framework for Raster Data Processing

Oracle Spatial Hadoop Image Processing Framework allows the creation of new combined images resulting from a series of processing phases in parallel.

It includes the following features:

- HDFS image storage, where every block size split is stored as a separate tile, ready for future independent processing

- Subset, user-defined, and map algebra operations processed in parallel using the MapReduce framework

- Ability to add custom processing classes to be executed in the mapping or reducing phases in parallel in a transparent way

- Fast processing of georeferenced images

- Support for GDAL formats, multiple-band images, DEMs (digital elevation models), multiple pixel depths, and SRIDs

- Java API providing access to framework operations; useful for web services or standalone Java applications

- Framework for testing and debugging user processing classes in the local environment

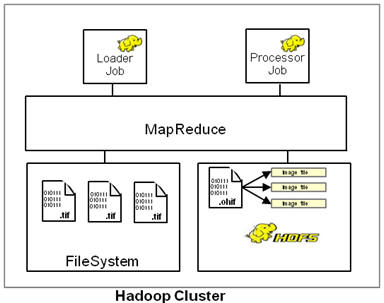

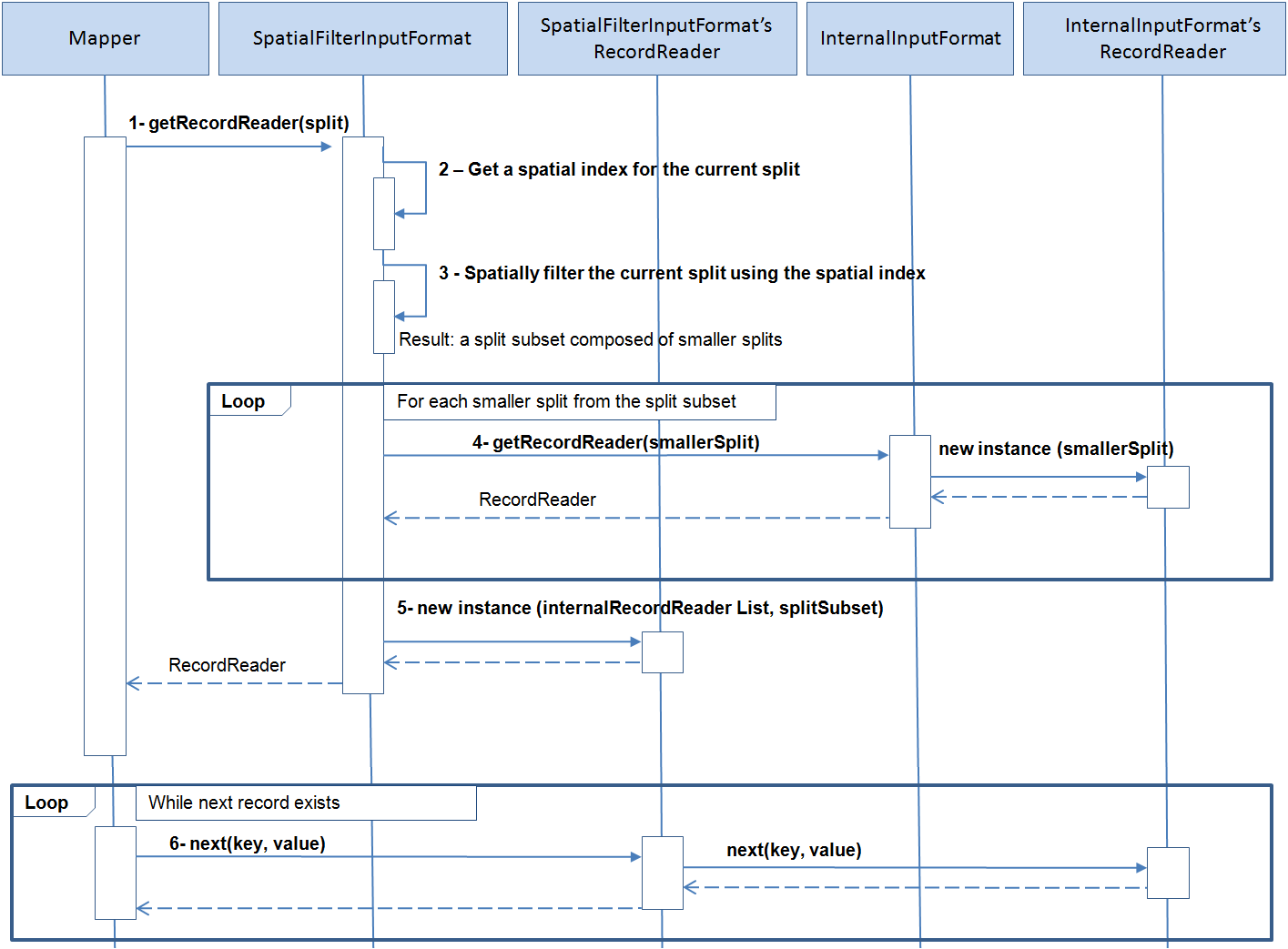

The Oracle Spatial Hadoop Image Processing Framework consists of two modules, a Loader and a Processor, each represented by a Hadoop job running at a different stage in a Hadoop cluster, as represented in the following diagram. You can also load and process the images using the SpatialViewer web application, and you can use the Java API to expose the framework’s capabilities.

For installation and configuration information, see:

2.3.1 Image Loader

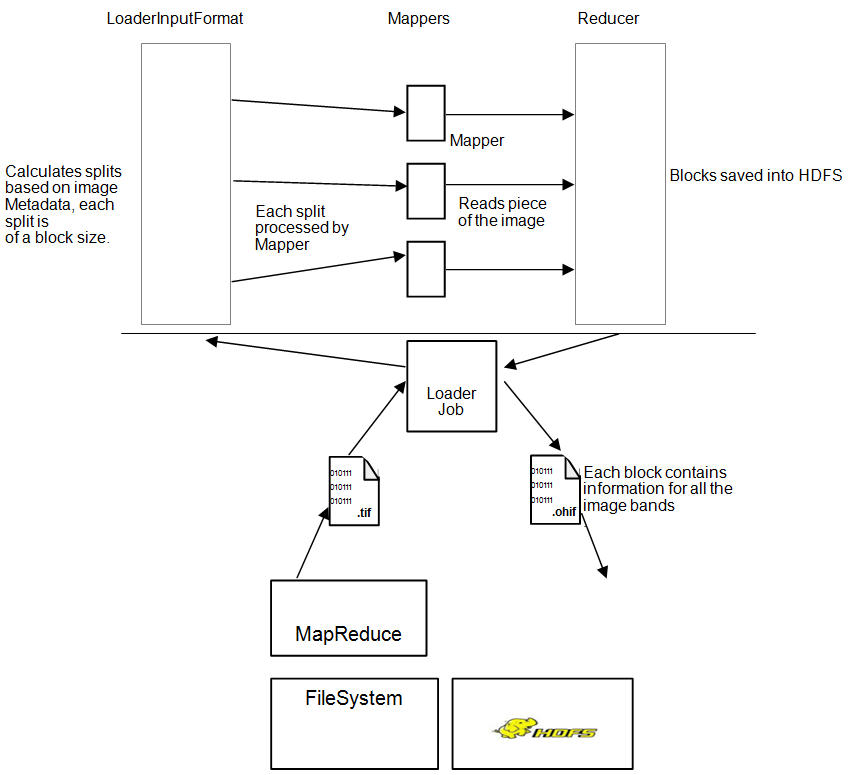

The Image Loader is a Hadoop job that loads a specific image or a group of images into HDFS.

- While importing, the image is tiled, and each tile is stored as an HDFS block.

- GDAL is used to tile the image.

- Each tile is loaded by a different mapper, so reading is parallel and faster.

- Each tile includes a certain number of overlapping bytes (user input), so that the tiles cover area from the adjacent tiles.

- A MapReduce job uses a mapper to load the information for each tile. There are n mappers, depending on the number of tiles, the image resolution, and the block size.

- A single reduce phase per image puts together all the information loaded by the mappers and stores the image in a special .ohif format, which contains the resolution, bands, offsets, and image data. This way, the file offset containing each tile and the node location are known.

- Each tile contains information for every band. This is helpful when only a few tiles need to be processed, because only the corresponding blocks are loaded.

The following diagram represents an Image Loader process:

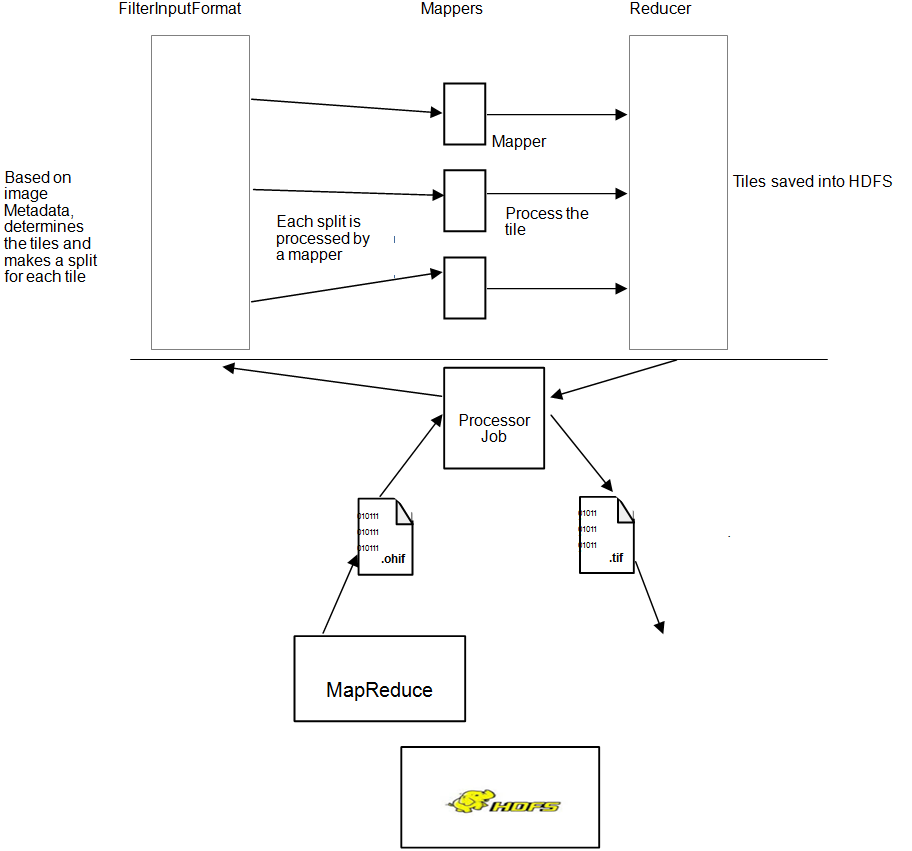

2.3.2 Image Processor

The Image Processor is a Hadoop job that filters tiles to be processed based on the user input and performs processing in parallel to create a new image.

- Processes specific tiles of the image identified by the user. You can identify zero, one, or multiple processing classes. These classes are executed in the mapping or reducing phase, depending on your configuration. In the mapping phase, after the processing classes are executed, a mosaic operation is performed to adapt the pixels to the final output format requested by the user. If no mosaic operation was requested, the input raster is sent to the reduce phase as is. In the reduce phase, all the tiles are put together into a GDAL data set that is the input for the user reduce processing class, where the final output can be changed or analyzed according to user needs.

- A mapper loads the data corresponding to one tile, conserving data locality.

- Once the data is loaded, the mapper filters the bands requested by the user.

- Filtered information is processed and sent to the reduce phase, where the bytes are put together and the final processed image is stored in HDFS or a regular file system, depending on the user request.

The following diagram represents an Image Processor job:

2.4 Loading an Image to Hadoop Using the Image Loader

The first step to process images using the Oracle Spatial and Graph Hadoop Image Processing Framework is to actually have the images in HDFS, followed by having the images separated into smart tiles.

This allows the processing job to work on each tile independently. The Image Loader lets you import a single image or a collection of images into HDFS in parallel, which decreases the load time.

The Image Loader imports images from a file system into HDFS, where each block contains data for all the bands of the image, so that if further processing is required on specific positions, the information can be processed on a single node.


2.4.1 Image Loading Job

The image loading job has a custom input format that splits the image into related image splits. The splits are calculated by an algorithm that reads square blocks of the image covering a defined area, which is determined by:

area = ((blockSize - metadata bytes) / number of bands) / bytes per pixel

For pieces that do not use the complete block size, the remaining bytes are filled with zeros.
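The area formula can be worked through numerically. The following Python sketch evaluates it for assumed values (a 128 MB block, 1 KB of metadata, three bands, one byte per pixel); these numbers and the helper names are illustrative assumptions, not values taken from the framework. The side length is derived on the assumption that each split is the largest square that fits in the computed area.

```python
import math

def tile_area_pixels(block_size, metadata_bytes, bands, bytes_per_pixel):
    """area = ((blockSize - metadata bytes) / number of bands) / bytes per pixel,
    using integer division because a tile holds a whole number of pixels."""
    return (block_size - metadata_bytes) // bands // bytes_per_pixel

def tile_side_pixels(area):
    """Side length, in pixels, of the largest square that fits in `area`."""
    return math.isqrt(area)

# Assumed values for illustration only.
block_size = 128 * 1024 * 1024   # 128 MB HDFS block
metadata_bytes = 1024            # hypothetical metadata overhead
area = tile_area_pixels(block_size, metadata_bytes, bands=3, bytes_per_pixel=1)
side = tile_side_pixels(area)
print(area, side)
```

With more bands or a larger pixel depth, the same block holds fewer pixels, so each tile covers a smaller area of the image.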

Splits are assigned to different mappers, where every assigned tile is read using GDAL based on the ImageSplit information. As a result, an ImageDataWritable instance is created and saved in the context.

The metadata set in the ImageDataWritable instance is used by the processing classes to set up the tiled image in order to manipulate and process it. Because the source images are read from multiple mappers, the load is performed in parallel and is therefore faster.

After the mappers finish reading, the reducer picks up the tiles from the context and puts them together to save the file into HDFS. A special reading process is required to read the image back.


2.4.2 Input Parameters

The following input parameters are supplied to the Hadoop command:

hadoop jar /opt/oracle/oracle-spatial-graph/spatial/raster/jlib/hadoop-imageloader.jar -files <SOURCE_IMGS_PATH> -out <HDFS_OUTPUT_FOLDER> -gdal <GDAL_LIB_PATH> -gdalData <GDAL_DATA_PATH> [-overlap <OVERLAPPING_PIXELS>] [-thumbnail <THUMBNAIL_PATH>] [-expand <false|true>] [-extractLogs <false|true>] [-logFilter <LINES_TO_INCLUDE_IN_LOG>] [-pyramid <OUTPUT_DIRECTORY, LEVEL, [RESAMPLING]>]

Where:

- SOURCE_IMGS_PATH is a path to the source image(s) or folder(s). For multiple inputs, use a comma separator. This path must be accessible via NFS to all nodes in the cluster.

- HDFS_OUTPUT_FOLDER is the HDFS output folder where the loaded images are stored.

- OVERLAPPING_PIXELS is an optional number of overlapping pixels on the borders of each tile. If this parameter is not specified, a default of two overlapping pixels is used.

- GDAL_LIB_PATH is the path where GDAL libraries are located.

- GDAL_DATA_PATH is the path where the GDAL data folder is located. This path must be accessible through NFS to all nodes in the cluster.

- THUMBNAIL_PATH is an optional path to store a thumbnail of the loaded image(s). This path must be accessible through NFS to all nodes in the cluster and must have write access permission for yarn users.

- -expand controls whether the HDFS path of the loaded raster expands the source path, including all directories. If you set this to false, the .ohif file is stored directly in the output directory (specified using the -out option) without including that directory’s path in the raster.

- -extractLogs controls whether the logs of the executed application should be extracted to the system temporary directory. By default, it is not enabled. The extraction does not include logs that are not part of Oracle Framework classes.

- -logFilter <LINES_TO_INCLUDE_IN_LOG> is a comma-separated String that lists all the patterns to include in the extracted logs, for example, to include custom processing classes packages.

- -pyramid <OUTPUT_DIRECTORY, LEVEL, [RESAMPLING]> allows the creation of pyramids while making the initial raster load. An OUTPUT_DIRECTORY must be provided to store the local pyramids before uploading to HDFS; pyramids are loaded in the same HDFS directory requested for load. A pyramid LEVEL must be provided to indicate how many pyramids are required for each raster. A RESAMPLING algorithm is optional to specify the method used to execute the resampling; if none is set, then BILINEAR is used.

For example, the following command loads the georeferenced image hawaii.tif from the images folder and adds an overlap of 10 pixels on every border possible. The HDFS output folder is ohiftest, and a thumbnail of the loaded image is stored in the processtest folder.

hadoop jar /opt/oracle/oracle-spatial-graph/spatial/raster/jlib/hadoop-imageloader.jar -files /opt/shareddir/spatial/demo/imageserver/images/hawaii.tif -out ohiftest -overlap 10 -thumbnail /opt/shareddir/spatial/processtest -gdal /opt/oracle/oracle-spatial-graph/spatial/raster/gdal/lib -gdalData /opt/shareddir/data
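If the loader is launched from a script rather than typed by hand, assembling the argument list programmatically avoids copy-and-paste problems such as typographic dashes replacing the plain hyphen in front of a flag. The following Python sketch builds such a list using only the flags documented above; `build_loader_command` is a hypothetical helper, and the sketch does not execute the command.

```python
LOADER_JAR = "/opt/oracle/oracle-spatial-graph/spatial/raster/jlib/hadoop-imageloader.jar"

def build_loader_command(files, out, gdal, gdal_data, overlap=None, thumbnail=None):
    """Return the hadoop command as an argument list.
    Multiple source paths are joined with commas, as the -files flag expects."""
    cmd = ["hadoop", "jar", LOADER_JAR,
           "-files", ",".join(files),
           "-out", out,
           "-gdal", gdal,
           "-gdalData", gdal_data]
    if overlap is not None:
        cmd += ["-overlap", str(overlap)]
    if thumbnail is not None:
        cmd += ["-thumbnail", thumbnail]
    return cmd

cmd = build_loader_command(
    files=["/opt/shareddir/spatial/demo/imageserver/images/hawaii.tif"],
    out="ohiftest",
    gdal="/opt/oracle/oracle-spatial-graph/spatial/raster/gdal/lib",
    gdal_data="/opt/shareddir/data",
    overlap=10,
    thumbnail="/opt/shareddir/spatial/processtest",
)
print(" ".join(cmd))
```

The resulting list could be passed to `subprocess.run(cmd, check=True)` on a node where the hadoop client is installed.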

By default, the mappers and reducers are configured with 2 GB of JVM memory, but you can override this setting or any other job configuration property by adding an imagejob.prop properties file in the folder from which the command is executed. This properties file may list all the configuration properties that you want to override. For example:

mapreduce.map.memory.mb=2560
mapreduce.reduce.memory.mb=2560
mapreduce.reduce.java.opts=-Xmx2684354560
mapreduce.map.java.opts=-Xmx2684354560

Java heap memory (java.opts properties) must be equal to or less than the total memory assigned to mappers and reducers (mapreduce.map.memory.mb and mapreduce.reduce.memory.mb). Thus, if you increase Java heap memory, you might also need to increase the memory for mappers and reducers.
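The heap-versus-container rule can be checked before submitting a job. The following Python sketch parses properties in the simple key=value form shown above and verifies that each -Xmx value does not exceed the corresponding memory.mb setting. The helper names are hypothetical, and the parser handles only plain key=value lines, not the full Java properties syntax.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props

_UNITS = {"k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}

def heap_bytes(java_opts):
    """Return the -Xmx value in bytes; accepts plain bytes or a k/m/g suffix."""
    for token in java_opts.split():
        if token.startswith("-Xmx"):
            size = token[4:].lower()
            if size[-1] in _UNITS:
                return int(size[:-1]) * _UNITS[size[-1]]
            return int(size)
    raise ValueError("no -Xmx option found")

def heap_fits(props, phase):
    """True if the heap for `phase` ('map' or 'reduce') fits in its container."""
    container = int(props["mapreduce.%s.memory.mb" % phase]) * 1024 ** 2
    return heap_bytes(props["mapreduce.%s.java.opts" % phase]) <= container

EXAMPLE = """\
mapreduce.map.memory.mb=2560
mapreduce.reduce.memory.mb=2560
mapreduce.reduce.java.opts=-Xmx2684354560
mapreduce.map.java.opts=-Xmx2684354560
"""

props = parse_properties(EXAMPLE)
for phase in ("map", "reduce"):
    print(phase, heap_fits(props, phase))
```

In the example properties the heap (2684354560 bytes) equals the container size (2560 MB), which satisfies the "equal to or less than" rule.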

For GDAL to work properly, the libraries must be available using $LD_LIBRARY_PATH. Make sure that the shared libraries path is set properly in your shell window before executing a job. For example:

export LD_LIBRARY_PATH=$ALLACCESSDIR/gdal/native


2.4.3 Output Parameters

The reducer generates two output files per input image. The first is the .ohif file, which contains all the tiles for the source image; each tile can be processed as a separate instance by a processing mapper. Internally, each tile is stored as an HDFS block. Blocks are located in several nodes, and one node may contain one or more blocks of a specific .ohif file. The .ohif file is stored in the folder that the user specifies with the -out flag, under /user/<USER_EXECUTING_JOB>/OUT_FOLDER/<PARENT_DIRECTORIES_OF_SOURCE_RASTER> if the -expand flag was not used. Otherwise, the .ohif file is located at /user/<USER_EXECUTING_JOB>/OUT_FOLDER/, and the file can be identified as original_filename.ohif.
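The path layout described above can be expressed as a small function. The following Python sketch is an interpretation of that description, not code from the framework: `ohif_hdfs_path` is a hypothetical helper, and it assumes that the source directories are appended without their leading slash and that the output name is the source file name with .ohif appended, which matches the file entry in the sample metadata shown later in this section.

```python
import posixpath

def ohif_hdfs_path(user, out_folder, source_path, expand=True):
    """Return the expected HDFS location of the .ohif file.
    expand=True models the behavior when the path is expanded with the
    parent directories of the source raster; expand=False stores the
    file directly in the output folder."""
    filename = posixpath.basename(source_path) + ".ohif"
    base = posixpath.join("/user", user, out_folder)
    if expand:
        parents = posixpath.dirname(source_path).lstrip("/")
        return posixpath.join(base, parents, filename)
    return posixpath.join(base, filename)

source = "/opt/shareddir/spatial/data/rasters/hawaii.tif"
print(ohif_hdfs_path("hdfs", "ohiftest", source))
print(ohif_hdfs_path("hdfs", "ohiftest", source, expand=False))
```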

The second output is a related metadata file that lists all the pieces of the image and the coordinates that each one covers. The file is located in HDFS under the metadata location, and its name is a hash generated from the name of the .ohif file. This file is for Oracle internal use only, and lists important metadata of the source raster. Some example lines from a metadata file:

srid:26904
datatype:1
resolution:27.90809458890406,-27.90809458890406
file:/user/hdfs/ohiftest/opt/shareddir/spatial/data/rasters/hawaii.tif.ohif
bands:3
mbr:532488.7648166901,4303164.583549625,582723.3350767174,4269619.053853762
0,532488.7648166901,4303164.583549625,582723.3350767174,4269619.053853762
thumbnailpath:/opt/shareddir/spatial/thumb/

If the -thumbnail flag was specified, a thumbnail of the source image is stored in the related folder. This is a way to visualize a translation of the .ohif file. Job execution logs can be accessed using the command yarn logs -applicationId <applicationId>.


2.5 Processing an Image Using the Oracle Spatial Hadoop Image Processor

Once the images are loaded into HDFS, they can be processed in parallel using Oracle Spatial Hadoop Image Processing Framework.

You specify an output, and the framework filters the tiles to fit into that output, processes them, and puts them all together to store them into a single file. Map algebra operations are also available and, if set, will be the first part of the processing phase. You can specify additional processing classes to be executed before the final output is created by the framework.

The image processor loads specific blocks of data, based on the input (mosaic description or a single raster), and selects only the bands and pixels that fit into the final output. All the specified processing classes are executed and the final output is stored into HDFS or the file system depending on the user request.

- Image Processing Job

- Input Parameters

- Job Execution

- Processing Classes and ImageBandWritable

- Map Algebra Operations

- Multiple Raster Algebra Operations

- Pyramids

- Output


2.5.1 Image Processing Job

The image processing job has different flows depending on the type of processing requested by the user.

- Default Image Processing Job Flow: executed for processing that includes a mosaic operation, single raster operation, or basic multiple raster operation.

- Multiple Raster Image Processing Job Flow: executed for processing that includes complex multiple raster algebra operations.

2.5.1.1 Default Image Processing Job Flow

The default image processing job flow is executed when any of the following processing is requested:

- Mosaic operation

- Single raster operation

- Basic multiple raster algebra operation

The flow has its own custom FilterInputFormat, which determines the tiles to be processed, based on the SRID and coordinates. Only images with the same data type (pixel depth) as the mosaic input data type (pixel depth) are considered. Only the tiles that intersect with the coordinates specified by the user for the mosaic output are included. For processing of a single raster or a basic multiple raster algebra operation (excluding mosaic), the filter includes all the tiles of the input rasters, because the processing is executed on the complete images. Once the tiles are selected, a custom ImageProcessSplit is created for each image.
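The selection rule for a mosaic (same pixel type, and tile extent intersecting the requested output extent) can be sketched as follows. This Python example is an illustration of the rule only, not the FilterInputFormat implementation: the dictionary layout, the `(min_x, min_y, max_x, max_y)` rectangle convention, and the function names are assumptions, and all coordinates are assumed to be already expressed in the mosaic SRID.

```python
def intersects(a, b):
    """True if rectangles a and b, each (min_x, min_y, max_x, max_y), overlap or touch."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

def filter_tiles(tiles, mosaic_mbr, mosaic_pixel_type):
    """Keep tiles whose pixel type matches the mosaic and whose extent
    intersects the mosaic output extent."""
    return [t for t in tiles
            if t["pixel_type"] == mosaic_pixel_type
            and intersects(t["mbr"], mosaic_mbr)]

tiles = [
    {"name": "a", "pixel_type": 1, "mbr": (0, 0, 10, 10)},    # overlaps, same type
    {"name": "b", "pixel_type": 1, "mbr": (20, 20, 30, 30)},  # outside the output
    {"name": "c", "pixel_type": 2, "mbr": (5, 5, 15, 15)},    # different pixel type
]
selected = filter_tiles(tiles, mosaic_mbr=(8, 8, 12, 12), mosaic_pixel_type=1)
print([t["name"] for t in selected])
```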

When a mapper receives the ImageProcessSplit, it reads the information based on what the ImageSplit specifies, filters it to select only the bands indicated by the user, and executes the list of map algebra operations and processing classes defined in the request, if any.

Each mapper process runs in the node where the data is located. After the map algebra operations and processing classes are executed, a validation checks whether the user is requesting a mosaic operation or whether the analysis includes the complete image. If a mosaic operation is requested, the final process executes the operation. The mosaic operation selects from every tile only the pixels that fit into the output and makes the necessary resolution changes to add them to the mosaic output. The single process operation just copies the previous raster tile bytes as they are. The resulting bytes are stored in NFS to be recovered by the reducer.

A single reducer picks the tiles and puts them together. If you specified any basic multiple raster algebra operation, then it is executed at the same time the tiles are merged into the final output. This operation affects only the intersecting pixels in the mosaic output, or in every pixel if no mosaic operation was requested. If you specified a reducer processing class, the GDAL data set with the output raster is sent to this class for analysis and processing. If you selected HDFS output, the ImageLoader is called to store the result into HDFS. Otherwise, by default the image is prepared using GDAL and is stored in the file system (NFS).


2.5.1.2 Multiple Raster Image Processing Job Flow

The multiple raster image processing job flow is executed when a complex multiple raster algebra operation is requested. It applies to rasters that have the same MBR, pixel type, pixel size, and SRID, since these operations are applied pixel by pixel in the corresponding cell, where every pixel represents the same coordinates.

The flow has its own custom MultipleRasterInputFormat, which determines the tiles to be processed, based on the SRID and coordinates. Only images with the same MBR, pixel type, pixel size, and SRID are considered. Only the rasters that match the coordinates specified by the first raster in the catalog are included. All the tiles of the input rasters are considered, because the processing is executed on the complete images.
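The compatibility rule can be sketched as a comparison against the first raster in the catalog. The following Python example is illustrative only and is not the MultipleRasterInputFormat implementation; the dictionary keys and the `select_matching_rasters` helper are hypothetical.

```python
KEYS = ("mbr", "pixel_type", "pixel_size", "srid")

def select_matching_rasters(rasters):
    """Keep the rasters whose MBR, pixel type, pixel size, and SRID
    all match those of the first raster in the list."""
    if not rasters:
        return []
    reference = tuple(rasters[0][k] for k in KEYS)
    return [r for r in rasters if tuple(r[k] for k in KEYS) == reference]

catalog = [
    {"name": "r1", "mbr": (0, 0, 10, 10), "pixel_type": 1, "pixel_size": 30.0, "srid": 26904},
    {"name": "r2", "mbr": (0, 0, 10, 10), "pixel_type": 1, "pixel_size": 30.0, "srid": 26904},
    {"name": "r3", "mbr": (0, 0, 10, 10), "pixel_type": 1, "pixel_size": 15.0, "srid": 26904},
]
matching = select_matching_rasters(catalog)
print([r["name"] for r in matching])
```

Because the operation is applied pixel by pixel, a raster with a different pixel size (r3 here) cannot be aligned cell for cell with the others and is left out.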

Once the tiles are selected, a custom MultipleRasterSplit is created. This split contains a small area of every original tile, depending on the block size, because now all the rasters must be included in a split, even if it is only a small area. Each of these is called an IndividualRasterSplit, and they are contained in a parent MultipleRasterSplit.

When a mapper receives the MultipleRasterSplit, it reads the information of all the rasters' tiles that are included in the parent split, filters it to select only the bands indicated by the user and only the small corresponding area to process in this specific mapper, and then executes the complex multiple raster algebra operation.

Data locality may be lost in this part of the process, because multiple rasters are included for a single mapper that may not be in the same node. The resulting bytes for every pixel are put in the context to be recovered by the reducer.

A single reducer picks pixel values and puts them together. If you specified a reducer processing class, the GDAL data set with the output raster is sent to this class for analysis and processing. The list of tiles that this class receives is null for this scenario, and the class can only work with the output data set. If you selected HDFS output, the ImageLoader is called to store the result into HDFS. Otherwise, by default the image is prepared using GDAL and is stored in the file system (NFS).


2.5.2 Input Parameters

The following input parameters can be supplied to the hadoop command:

hadoop jar /opt/oracle/oracle-spatial-graph/spatial/raster/jlib/hadoop-imageprocessor.jar -config <MOSAIC_CONFIG_PATH> -gdal <GDAL_LIBRARIES_PATH> -gdalData <GDAL_DATA_PATH> [-catalog <IMAGE_CATALOG_PATH>] [-usrlib <USER_PROCESS_JAR_PATH>] [-thumbnail <THUMBNAIL_PATH>] [-nativepath <USER_NATIVE_LIBRARIES_PATH>] [-params <USER_PARAMETERS>] [-file <SINGLE_RASTER_PATH>]

Where:

- MOSAIC_CONFIG_PATH is the path to the mosaic configuration XML, which defines the features of the output.

- GDAL_LIBRARIES_PATH is the path where GDAL libraries are located.

- GDAL_DATA_PATH is the path where the GDAL data folder is located. This path must be accessible via NFS to all nodes in the cluster.

- IMAGE_CATALOG_PATH is the path to the catalog XML that lists the HDFS image(s) to be processed. This is optional because you can also specify a single raster to process using the -file flag.

- USER_PROCESS_JAR_PATH is an optional user-defined jar file or comma-separated list of jar files, each of which contains additional processing classes to be applied to the source images.

- THUMBNAIL_PATH is an optional flag to activate the thumbnail creation of the loaded image(s). This path must be accessible via NFS to all nodes in the cluster and is valid only for an HDFS output.

- USER_NATIVE_LIBRARIES_PATH is an optional comma-separated list of additional native libraries to use in the analysis. It can also be a directory containing all the native libraries to load in the application.

- USER_PARAMETERS is an optional key/value list used to define input data for user processing classes. Use a semicolon to separate parameters. For example: azimuth=315;altitude=45

- SINGLE_RASTER_PATH is an optional path to the .ohif file that will be processed by the job. If this is set, you do not need to set a catalog.
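The USER_PARAMETERS format (key=value pairs separated by semicolons) is simple to validate before submitting a job. The following Python sketch splits such a string into a dictionary; `parse_user_params` is a hypothetical helper for illustration and says nothing about how the framework itself parses the value.

```python
def parse_user_params(params):
    """Split 'key=value;key=value' into a dict of strings.
    Raises ValueError for a pair that has no '=' separator."""
    result = {}
    for pair in params.split(";"):
        if not pair.strip():
            continue  # tolerate a trailing semicolon
        key, sep, value = pair.partition("=")
        if not sep:
            raise ValueError("expected key=value, got %r" % pair)
        result[key.strip()] = value.strip()
    return result

params = parse_user_params("azimuth=315;altitude=45")
print(params)
```

Values are kept as strings; a processing class would convert them to numbers as needed.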

For example, the following command processes all the files listed in the catalog file input.xml, using the mosaic output definition set in the testFS.xml file.

hadoop jar /opt/oracle/oracle-spatial-graph/spatial/raster/jlib/hadoop-imageprocessor.jar -catalog /opt/shareddir/spatial/demo/imageserver/images/input.xml -config /opt/shareddir/spatial/demo/imageserver/images/testFS.xml -thumbnail /opt/shareddir/spatial/processtest -gdal /opt/oracle/oracle-spatial-graph/spatial/raster/gdal/lib -gdalData /opt/shareddir/data

By default, the mappers and reducers are configured with 2 GB of JVM memory, but you can override this setting or any other job configuration property by adding an imagejob.prop properties file in the folder from which the command is executed.

For GDAL to work properly, the libraries must be available using $LD_LIBRARY_PATH. Make sure that the shared libraries path is set properly in your shell window before executing a job. For example:

export LD_LIBRARY_PATH=$ALLACCESSDIR/gdal/native

2.5.2.1 Catalog XML Structure

The following is an example of an input catalog XML file, which lists every source image considered for the mosaic operation performed by the image processing job.

<catalog>
  <image>
    <raster>/user/hdfs/ohiftest/opt/shareddir/spatial/data/rasters/maui.tif.ohif</raster>
    <bands datatype='1' config='1,2,3'>3</bands>
  </image>
</catalog>

A <catalog> element contains the list of <image> elements to process.

Each <image> element defines a source image or a source folder within the <raster> element. All the images within the folder are processed.

The <bands> element specifies the number of bands of the image. The datatype attribute specifies the raster data type, and the config attribute specifies which source band appears in each position of the mosaic output band order. For example, 3,1,2 specifies that mosaic output band number 1 will have band number 3 of this raster, mosaic band number 2 will have source band 1, and mosaic band number 3 will have source band 2. This order may change from raster to raster.
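To make the band ordering concrete, the following Python sketch parses a catalog with the structure shown above and derives the output-to-source band mapping from the config attribute. It uses the standard `xml.etree.ElementTree` module; `band_mapping` is a hypothetical helper, not part of the framework, and the catalog here uses the config value 3,1,2 from the explanation rather than the 1,2,3 of the sample catalog.

```python
import xml.etree.ElementTree as ET

CATALOG = """<catalog>
  <image>
    <raster>/user/hdfs/ohiftest/opt/shareddir/spatial/data/rasters/maui.tif.ohif</raster>
    <bands datatype='1' config='3,1,2'>3</bands>
  </image>
</catalog>"""

def band_mapping(catalog_xml):
    """Return {raster path: {mosaic output band: source band}} for each image."""
    root = ET.fromstring(catalog_xml)
    mapping = {}
    for image in root.findall("image"):
        raster = image.findtext("raster").strip()
        order = [int(b) for b in image.find("bands").get("config").split(",")]
        # Position i (1-based) in config names the source band used for output band i.
        mapping[raster] = {out: src for out, src in enumerate(order, start=1)}
    return mapping

result = band_mapping(CATALOG)
for raster, bands in result.items():
    print(raster, bands)
```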


2.5.2.2 Mosaic Definition XML Structure

The following is an example of a mosaic configuration XML used to define the features of the output generated by the image processing job.

-<mosaic exec="false">

-<output>

<SRID>26904</SRID>

<directory type="FS">/opt/shareddir/spatial/processOutput</directory>

<!--directory type="HDFS">newData</directory-->

<tempFSFolder>/opt/shareddir/spatial/tempOutput</tempFSFolder>

<filename>littlemap</filename>

<format>GTIFF</format>

<width>1600</width>

<height>986</height>

<algorithm order="0">2</algorithm>

<bands layers="3" config="3,1,2"/>

<nodata>#000000</nodata>

<pixelType>1</pixelType>

</output>

<crop>

<transform>356958.985610072,280.38843650364862,0,2458324.0825054757,0,-280.38843650364862</transform>

</crop>

<process><classMapper params="threshold=454,2954">oracle.spatial.hadoop.twc.FarmTransformer</classMapper><classReducer params="plot_size=100400">oracle.spatial.hadoop.twc.FarmAlignment</classReducer></process>

<operations>

<localif operator="<" operand="3" newvalue="6"/>

<localadd arg="5"/>

<localsqrt/>

<localround/>

</operations>

</mosaic>

The <mosaic> element defines the specifications of the processing output. The exec attribute specifies if the processing will include mosaic operation or not. If set to “false”, a mosaic operation is not executed and a single raster is processed; if set to “true” or not set, a mosaic operation is performed. Some of the following elements are required only for mosaic operations and ignored for single raster processing.

The <output> element defines the features, such as <SRID>, considered for the output. Images in a different SRID are converted to the mosaic SRID in order to decide whether any of their tiles fit into the mosaic. The <SRID> element is not required for single raster processing, because the output raster has the same SRID as the input.

The <directory> element defines where the output is located. It can be in HDFS or in the regular file system (FS), as specified by the type attribute.

The <tempFSFolder> element sets the path to store the mosaic output temporarily. The attribute delete="false" can be specified to keep the output of the process even if the loader was executed to store it in HDFS.

The <filename> and <format> elements specify the output filename. <filename> is not required for single raster process; and if it is not specified, the name of the input file (determined by the -file attribute during the job call) is used for the output file. <format> is not required for single raster processing, because the output raster has the same format as the input.

The <width> and <height> elements set the mosaic output resolution. They are not required for single raster processing, because the output raster has the same resolution as the input.

The <algorithm> element sets the ordering algorithm for the images. A value of 1 orders the images by source last modified date, and a value of 2 orders them by image size. The order attribute selects ascending or descending mode. (These properties are for mosaic operations where multiple rasters may overlap.)

The <bands> element specifies the number of bands in the output mosaic. Images with fewer bands than this number are discarded. The config attribute can be used for single raster processing to set the band configuration for output, because there is no catalog.

The <nodata> element specifies the color in the first three bands for all the pixels in the mosaic output that have no value.

The <pixelType> element sets the pixel type of the mosaic output. Source images that do not have the same pixel size are discarded for processing. This element is not required for single raster processing: if not specified, the pixel type will be the same as for the input.

The <crop> element defines the coordinates included in the mosaic output in the following order: startcoordinateX, pixelXWidth, RotationX, startcoordinateY, RotationY, and pixelheightY. This element is not required for single raster processing: if not specified, the complete image is considered for analysis.
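The six <transform> values can be read as an affine geotransform. The following minimal sketch assumes they follow the GDAL geotransform convention (the listed order matches it, but the source does not state this explicitly) and shows how a pixel position maps to coordinates and how the area covered by the output follows from <width> and <height>. The CropTransformSketch class and its methods are hypothetical names.

```java
/** Illustrative affine geotransform helper; hypothetical, not an Oracle class. */
final class CropTransformSketch {

    /**
     * Maps a pixel position to map coordinates.
     * t = {startX, pixelXWidth, rotationX, startY, rotationY, pixelHeightY},
     * assumed to follow the GDAL geotransform convention.
     */
    static double[] pixelToMap(double[] t, double col, double row) {
        double x = t[0] + col * t[1] + row * t[2];
        double y = t[3] + col * t[4] + row * t[5];
        return new double[] {x, y};
    }

    /** Bounding rectangle {minX, minY, maxX, maxY} of a width x height output. */
    static double[] outputMbr(double[] t, int width, int height) {
        double[][] corners = {
            pixelToMap(t, 0, 0), pixelToMap(t, width, 0),
            pixelToMap(t, 0, height), pixelToMap(t, width, height)
        };
        double minX = Double.POSITIVE_INFINITY;
        double minY = Double.POSITIVE_INFINITY;
        double maxX = Double.NEGATIVE_INFINITY;
        double maxY = Double.NEGATIVE_INFINITY;
        // Checking all four corners keeps the result valid when rotation is non-zero.
        for (double[] c : corners) {
            minX = Math.min(minX, c[0]);
            minY = Math.min(minY, c[1]);
            maxX = Math.max(maxX, c[0]);
            maxY = Math.max(maxY, c[1]);
        }
        return new double[] {minX, minY, maxX, maxY};
    }
}
```

A negative pixelHeightY, as in the example configuration, means that row numbers increase as the Y coordinate decreases, so the start coordinates describe the upper-left corner of the output.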

The <process> element lists all the classes to execute before the mosaic operation.

The <classMapper> element is used for classes that will be executed during mapping phase, and the <classReducer> element is used for classes that will be executed during reduce phase. Both elements have the params attribute, where you can send input parameters to processing classes according to your needs.

The <operations> element lists all the map algebra operations that will be processed for this request. This element can also include a request for pyramid operations; for example:

<operations>

<pyramid resampling="NEAREST_NEIGHBOR" redLevel="6"/>

</operations>


2.5.3 Job Execution

The first step of the job is to filter the tiles that would fit into the output. As a start, the location files that hold tile metadata are sent to the InputFormat.

By extracting the pixelType, the filter decides whether the related source image is valid for processing. Based on the user definition made in the catalog XML, one of the following happens:

- If the image is valid for processing, then the SRID is evaluated next.

- If the SRID is different from the user definition, then the MBR coordinates of every tile are converted into the user SRID and evaluated.

This way, every tile is evaluated for intersection with the output definition.

- For a mosaic processing request, only the intersecting tiles are selected, and a split is created for each one of them.

- For a single raster processing request, all the tiles are selected, and a split is created for each one of them.

- For a complex multiple raster algebra processing request, all the tiles are selected if the MBR and pixel size are the same. Depending on the number of rasters selected and the block size, a specific area of every raster's tile (which does not always include the complete original raster tile) is included in a single parent split.

A mapper processes each split in the node where it is stored. (For complex multiple raster algebra operations, data locality may be lost, because a split contains data from several rasters.) The mapper executes the sequence of map algebra operations and processing classes defined by the user, and then the mosaic process is executed if requested. A single reducer puts together the result of the mappers and, for user-specified reducing processing classes, sets the output data set to these classes for analysis or process. Finally, the process stores the image into FS or HDFS upon user request. If the user requested to store the output into HDFS, then the ImageLoader job is invoked to store the image as an .ohif file.
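The tile selection for a mosaic request, described earlier in this section, can be sketched as a pixel type check followed by a rectangle intersection test. This is a simplified illustration under stated assumptions: the tile MBR is taken to be already converted to the output SRID, rectangles that only touch at an edge are treated as not intersecting, and the TileFilterSketch and Mbr names are hypothetical.

```java
/** Illustrative tile filter for mosaic requests; hypothetical, not an Oracle class. */
final class TileFilterSketch {

    /** Axis-aligned minimum bounding rectangle, expressed in the output SRID. */
    static final class Mbr {
        final double minX;
        final double minY;
        final double maxX;
        final double maxY;

        Mbr(double minX, double minY, double maxX, double maxY) {
            this.minX = minX;
            this.minY = minY;
            this.maxX = maxX;
            this.maxY = maxY;
        }

        /** True when the two rectangles share some interior area. */
        boolean intersects(Mbr other) {
            return minX < other.maxX && other.minX < maxX
                    && minY < other.maxY && other.minY < maxY;
        }
    }

    /** A tile is kept only if its pixel type matches and its MBR overlaps the output. */
    static boolean selectForMosaic(int tilePixelType, int outputPixelType,
                                   Mbr tileMbr, Mbr outputMbr) {
        if (tilePixelType != outputPixelType) {
            return false; // the source image is not valid for this output
        }
        return tileMbr.intersects(outputMbr);
    }
}
```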

By default, the mappers and reducers are configured to get 1 GB of JVM memory, but you can override this setting or any other job configuration property by adding an imagejob.prop properties file in the folder from which the command is executed.

2.5.4 Processing Classes and ImageBandWritable

The processing classes specified in the mosaic configuration XML must follow a set of rules to be correctly processed by the job. All the processing classes in the mapping phase must implement the ImageProcessorInterface interface. For the reducer phase, they must implement the ImageProcessorReduceInterface interface.

When implementing a processing class, you can manipulate the raster using its object representation, ImageBandWritable. An example of a processing class is provided with the framework to calculate the slope on DEMs. You can create mapping operations, for example, to transform the pixel values to another value by a function.

The ImageBandWritable instance defines the content of a tile, such as resolution, size, and pixels. These values must be reflected in the properties that create the definition of the tile. The integrity of the output depends on the correct manipulation of these properties.

Table 2-1 ImageBandWritable Properties

| Type - Property | Description |
|---|---|
| IntWritable dstWidthSize | Width size of the tile |
| IntWritable dstHeightSize | Height size of the tile |
| IntWritable bands | Number of bands in the tile |
| IntWritable dType | Data type of the tile |
| IntWritable offX | Starting X pixel, in relation to the source image |
| IntWritable offY | Starting Y pixel, in relation to the source image |
| IntWritable totalWidth | Width size of the source image |
| IntWritable totalHeight | Height size of the source image |
| IntWritable bytesNumber | Number of bytes containing the pixels of the tile and stored into baseArray |
| BytesWritable[] baseArray | Array containing the bytes representing the tile pixels; each cell represents a band |
| IntWritable[][] basePaletteArray | Array containing the int values representing the tile palette; each array represents a band. Each integer represents an entry for each color in the color table; there are four entries per color |
| IntWritable[] baseColorArray | Array containing the int values representing the color interpretation; each cell represents a band |
| DoubleWritable[] noDataArray | Array containing the NODATA values for the image; each cell contains the value for the related band |
| ByteWritable isProjection | Specifies if the tile has projection information with Byte.MAX_VALUE |
| ByteWritable isTransform | Specifies if the tile has the geo transform array information with Byte.MAX_VALUE |
| ByteWritable isMetadata | Specifies if the tile has metadata information with Byte.MAX_VALUE |
| IntWritable projectionLength | Specifies the projection information length |
| BytesWritable projectionRef | Specifies the projection information in bytes |
| DoubleWritable[] geoTransform | Contains the geo transform array |
| IntWritable metadataSize | Number of metadata values in the tile |
| IntWritable[] metadataLength | Array specifying the length of each metadataValue |
| BytesWritable[] metadata | Array of metadata of the tile |
| GeneralInfoWritable mosaicInfo | The user-defined information in the mosaic XML. Do not modify the mosaic output features. Modify the original XML file under a new name and run the process using the new XML |
| MapWritable extraFields | Map that lists key/value pairs of parameters specific to every tile to be passed to the reducer phase for analysis |

Processing Classes and Methods

When modifying the pixels of the tile, first get the band information into an array using the following method:

byte[] bandData1 = (byte[]) img.getBand(0);

The bytes representing the tile pixels of band 1 are now in the bandData1 array. The base index is zero.

The getBand(int bandId) method will get the band of the raster in the specified bandId position. You can cast the object retrieved to the type of array of the raster; it could be byte, short (unsigned int 16 bits, int 16 bits), int (unsigned int 32 bits, int 32 bits), float (float 32 bits), or double (float 64 bits).

With the array of pixels available, it is possible now to transform them upon a user request.

After processing the pixels, if the same instance of ImageBandWritable must be used, then execute the following method:

img.removeBands();

This removes the content of previous bands, and you can start adding the new bands. To add a new band use the following method:

img.addBand(Object band);

Otherwise, you may want to replace a specific band by using the following method:

img.replaceBand(Object band, int bandId)

In the preceding methods, band is an array containing the pixel information, and bandId is the identifier of the band to be replaced. Do not forget to update the instance size, data type, bytesNumber, and any other property that might be affected as a result of the processing operation. Setters are available for each property.
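The pixel manipulation step between reading a band and writing it back can be sketched on a plain byte array. In this minimal example the array stands in for what img.getBand(0) returns for a byte raster, and the result is what would be passed to img.replaceBand(band, 0). The BandEditSketch class and the thresholdBand method are hypothetical, and the example assumes that byte pixels are meant to be read as unsigned values from 0 through 255.

```java
/** Illustrative pixel edit on one band; hypothetical, not an Oracle class. */
final class BandEditSketch {

    /** Returns a new band in which every pixel below threshold is set to newValue. */
    static byte[] thresholdBand(byte[] band, int threshold, int newValue) {
        byte[] out = new byte[band.length];
        for (int i = 0; i < band.length; i++) {
            // Java bytes are signed, so mask to read the pixel as 0..255.
            int pixel = band[i] & 0xFF;
            out[i] = (byte) (pixel < threshold ? newValue : pixel);
        }
        return out;
    }
}
```

Because this operation keeps the number of pixels and the data type unchanged, properties such as bytesNumber would not need updating; an operation that changes the tile size or data type would.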

2.5.4.1 Location of the Classes and Jar Files

All the processing classes must be contained in a single jar file if you are using the Oracle SpatialViewer web application. The processing classes might be placed in different jar files if you are using the command line option.

When new classes are visible in the classpath, they must be added to the mosaic XML in the <process><classMapper> or <process><classReducer> section. Every <class> element added is executed in order of appearance: for mappers, just before the final mosaic operation is performed; and for reducers, just after all the processed tiles are put together in a single data set.

Parent topic: Processing Classes and ImageBandWritable

2.5.5 Map Algebra Operations

You can process local map algebra operations on the input rasters, where pixels are altered depending on the operation. The order of operations in the configuration XML determines the order in which the operations are processed. After all the map algebra operations are processed, the processing classes are run, and finally the mosaic operation is performed.

The following map algebra operations can be added in the <operations> element in the mosaic configuration XML, with the operation name serving as an element name. (The data types for which each operation is supported are listed in parentheses.)

- localnot: Gets the negation of every pixel by inverting the bit pattern. If the result is a negative value and the data type is unsigned, then the NODATA value is set. If the raster does not have a specified NODATA value, then the original pixel is set. (Byte, Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits)

- locallog: Returns the natural logarithm (base e) of a pixel. If the result is NaN, then the original pixel value is set; if the result is Infinite, then the NODATA value is set. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- locallog10: Returns the base 10 logarithm of a pixel. If the result is NaN, then the original pixel value is set; if the result is Infinite, then the NODATA value is set. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localadd: Adds the value specified as argument to the pixel. Example: <localadd arg="5"/>. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localdivide: Divides the value of each pixel by the value specified as argument. Example: <localdivide arg="5"/>. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localif: Modifies the value of each pixel based on the condition and value specified as argument. Valid operators: =, <, >, >=, <=, !=. Example: <localif operator="<" operand="3" newvalue="6"/>, which modifies all the pixels whose value is less than 3, setting the new value to 6. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localmultiply: Multiplies the value of each pixel by the value specified as argument. Example: <localmultiply arg="5"/>. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localpow: Raises the value of each pixel to the power of the value specified as argument. Example: <localpow arg="5"/>. If the result is infinite, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localsqrt: Returns the correctly rounded positive square root of every pixel. If the result is infinite or NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localsubstract: Subtracts the value specified as argument from every pixel value. Example: <localsubstract arg="5"/>. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localacos: Calculates the arc cosine of a pixel. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localasin: Calculates the arc sine of a pixel. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localatan: Calculates the arc tangent of a pixel. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localcos: Calculates the cosine of a pixel. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localcosh: Calculates the hyperbolic cosine of a pixel. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localsin: Calculates the sine of a pixel. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localtan: Calculates the tangent of a pixel. The pixel is not modified if the cosine of this pixel is 0. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localsinh: Calculates the hyperbolic sine of a pixel. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localtanh: Calculates the hyperbolic tangent of a pixel. If the result is NaN, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localdefined: Maps an integer typed pixel to 1 if the cell value is not NODATA; otherwise, 0. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits, Float 32 bits)

- localundefined: Maps an integer typed pixel to 0 if the cell value is not NODATA; otherwise, 1. (Unsigned int 16 bits, Unsigned int 32 bits, Int 16 bits, Int 32 bits)

- localabs: Returns the absolute value of a signed pixel. If the result is Infinite, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localnegate: Multiplies the value of each pixel by -1. (Int 16 bits, Int 32 bits, Float 32 bits, Float 64 bits)

- localceil: Returns the smallest value that is greater than or equal to the pixel value and is equal to a mathematical integer. If the result is Infinite, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Float 32 bits, Float 64 bits)

- localfloor: Returns the largest value that is less than or equal to the pixel value and is equal to a mathematical integer. If the result is Infinite, the NODATA value is set to this pixel. If the raster does not have a specified NODATA value, then the original pixel is set. (Float 32 bits, Float 64 bits)

- localround: Returns the closest integer value to every pixel. (Float 32 bits, Float 64 bits)
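The way a sequence of local operations is applied in configuration order, together with the NODATA fallback rule, can be illustrated with a few plain Java functions. This is a minimal sketch of the semantics described in the list, not the framework's implementation: the LocalOpsSketch class and its methods are hypothetical, pixels are modeled as double values, and only the "<" operator of localif is shown.

```java
import java.util.function.DoubleUnaryOperator;

/** Illustrative local map algebra pipeline; hypothetical, not an Oracle class. */
final class LocalOpsSketch {

    /** localif with operator "<": pixels below operand become newValue. */
    static DoubleUnaryOperator localIfLess(double operand, double newValue) {
        return p -> p < operand ? newValue : p;
    }

    /** localadd: adds arg to every pixel. */
    static DoubleUnaryOperator localAdd(double arg) {
        return p -> p + arg;
    }

    /**
     * localsqrt: a NaN or infinite result is replaced by NODATA, or by the
     * original pixel when the raster has no NODATA value (noData == null).
     */
    static DoubleUnaryOperator localSqrt(Double noData) {
        return p -> {
            double r = Math.sqrt(p);
            if (Double.isNaN(r) || Double.isInfinite(r)) {
                return noData != null ? noData : p;
            }
            return r;
        };
    }

    /** localround: closest integer value. */
    static DoubleUnaryOperator localRound() {
        return p -> (double) Math.round(p);
    }

    /** Applies the operations to every pixel in the order given. */
    static double[] apply(double[] pixels, DoubleUnaryOperator... ops) {
        double[] out = pixels.clone();
        for (DoubleUnaryOperator op : ops) {
            for (int i = 0; i < out.length; i++) {
                out[i] = op.applyAsDouble(out[i]);
            }
        }
        return out;
    }
}
```

Calling apply with localIfLess(3, 6), localAdd(5), localSqrt(null), and localRound() mirrors the <operations> example in the mosaic configuration XML: a pixel value of 1 becomes 6, then 11, then approximately 3.32, and finally 3.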

2.5.6 Multiple Raster Algebra Operations

You can process raster algebra operations that involve more than one raster, where pixels are altered depending on the operation and taking in consideration the pixels from all the involved rasters in the same cell.

Only one operation can be processed at a time and it is defined in the configuration XML using the <multipleops> element. Its value is the operation to process.

There are two types of operations:

- Basic Multiple Raster Algebra Operations are executed in the reduce phase right before the Reduce User Processing classes.

- Complex Multiple Raster Algebra Operations are processed in the mapping phase.

2.5.6.1 Basic Multiple Raster Algebra Operations

Basic multiple raster algebra operations are executed in the reducing phase of the job.

They can be requested along with a mosaic operation or just a process request. If requested along with a mosaic operation, the input rasters must have the same MBR, pixel size, SRID and data type.

When a mosaic operation is performed, only the intersecting pixels (pixels that are identical in both rasters) are affected.

The operation is processed when the mapping tiles are put together in the output data set. The pixel values that intersect (if a mosaic operation was requested) or all the pixels (when a mosaic operation is not requested) are altered according to the requested operation.

The order in which rasters are added to the data set is the mosaic operation order if it was requested; otherwise, it is the order of appearance in the catalog.

The following basic multiple raster algebra operations are available:

- add: Adds every pixel in the same cell for the raster sequence.

- substract: Subtracts every pixel in the same cell for the raster sequence.

- divide: Divides every pixel in the same cell for the raster sequence.

- multiply: Multiplies every pixel in the same cell for the raster sequence.

- min: Assigns the minimum value of the pixels in the same cell for the raster sequence.

- max: Assigns the maximum value of the pixels in the same cell for the raster sequence.

- mean: Calculates the mean value for every pixel in the same cell for the raster sequence.

- and: Processes a binary "and" operation on every pixel in the same cell for the raster sequence. The "and" operation copies a bit to the result if it exists in both operands.

- or: Processes a binary "or" operation on every pixel in the same cell for the raster sequence. The "or" operation copies a bit if it exists in either operand.

- xor: Processes a binary "xor" operation on every pixel in the same cell for the raster sequence. The "xor" operation copies the bit if it is set in one operand but not both.
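The cell-by-cell behavior of the basic operations listed above can be sketched by folding the raster sequence from left to right. This is an illustration only: the CellwiseSketch class is hypothetical, each raster is modeled as a flat int array of equal length (reflecting the requirement that rasters share the same MBR and pixel size), and overflow and NODATA handling are left out.

```java
import java.util.function.IntBinaryOperator;

/** Illustrative cell-wise raster operations; hypothetical, not an Oracle class. */
final class CellwiseSketch {

    /** Folds the rasters left to right, cell by cell, with the given operation. */
    static int[] combine(int[][] rasters, IntBinaryOperator op) {
        int[] out = rasters[0].clone();
        for (int r = 1; r < rasters.length; r++) {
            for (int c = 0; c < out.length; c++) {
                out[c] = op.applyAsInt(out[c], rasters[r][c]);
            }
        }
        return out;
    }

    static int[] add(int[][] rasters) {
        return combine(rasters, Integer::sum);
    }

    static int[] min(int[][] rasters) {
        return combine(rasters, Math::min);
    }

    static int[] max(int[][] rasters) {
        return combine(rasters, Math::max);
    }

    static int[] xor(int[][] rasters) {
        return combine(rasters, (a, b) -> a ^ b);
    }

    /** mean: the cell sum divided by the number of rasters in the sequence. */
    static double[] mean(int[][] rasters) {
        int[] sum = add(rasters);
        double[] out = new double[sum.length];
        for (int c = 0; c < sum.length; c++) {
            out[c] = (double) sum[c] / rasters.length;
        }
        return out;
    }
}
```

Because the fold runs from left to right, the order of the rasters matters for non-commutative operations such as substract and divide, which is why the order in which rasters are added to the data set is significant.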


2.5.6.2 Complex Multiple Raster Algebra Operations

Complex multiple raster algebra operations are executed in the mapping phase of the job, and a job can only process this operation; any request for resizing, changing the SRID, or custom mapping must have been previously executed. The input for this job is a series of rasters with the same MBR, SRID, data type, and pixel size.

The tiles for this job include a piece of all the rasters in the catalog. Thus, every mapper has access to an area of cells in all the rasters, and the operation is processed there. The resulting pixel for every cell is written in the context, so that reducer can put results in the output data set before processing the reducer processing classes.

The order in which rasters are considered to evaluate the operation is the order of appearance in the catalog.

The following complex multiple raster algebra operations are available:

- combine: Assigns a unique output value to each unique combination of input values in the same cell for the raster sequence.

- majority: Assigns the value within the same cells of the raster sequence that is the most numerous. If there is a tie between values, the one on the right is selected.

- minority: Assigns the value within the same cells of the raster sequence that is the least numerous. If there is a tie between values, the one on the right is selected.

- variety: Assigns the count of unique values at each same cell in the sequence of rasters.

- mask: Generates a raster with the values from the first raster, but only includes pixels in which the corresponding pixel in the rest of the rasters of the sequence is set to the specified mask values. Otherwise, 0 is set.

- inversemask: Generates a raster with the values from the first raster, but only includes pixels in which the corresponding pixel in the rest of the rasters of the sequence is not set to the specified mask values. Otherwise, 0 is set.

- equals: Creates a raster with data type byte, where cell values equal 1 if the corresponding cells for all input rasters have the same value. Otherwise, 0 is set.

- unequal: Creates a raster with data type byte, where cell values equal 1 if the corresponding cells for all input rasters have a different value. Otherwise, 0 is set.

- greater: Creates a raster with data type byte, where cell values equal 1 if the cell value in the first raster is greater than the rest of the corresponding cells for all input rasters. Otherwise, 0 is set.

- greaterorequal: Creates a raster with data type byte, where cell values equal 1 if the cell value in the first raster is greater than or equal to the rest of the corresponding cells for all input rasters. Otherwise, 0 is set.

- less: Creates a raster with data type byte, where cell values equal 1 if the cell value in the first raster is less than the rest of the corresponding cells for all input rasters. Otherwise, 0 is set.

- lessorequal: Creates a raster with data type byte, where cell values equal 1 if the cell value in the first raster is less than or equal to the rest of the corresponding cells for all input rasters. Otherwise, 0 is set.
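A few of the operations listed above can be illustrated for a single cell, given the values that each raster in the sequence holds at that cell. This is a minimal sketch with hypothetical names (ComplexOpsSketch and its methods). In particular, the tie rule "the one on the right is selected" is interpreted here as favoring the tied value that appears latest in the raster sequence, which is an assumption rather than documented behavior.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** Illustrative per-cell complex operations; hypothetical, not an Oracle class. */
final class ComplexOpsSketch {

    /** variety: number of distinct values in one cell across the raster sequence. */
    static int variety(int[] cellValues) {
        Set<Integer> distinct = new HashSet<>();
        for (int v : cellValues) {
            distinct.add(v);
        }
        return distinct.size();
    }

    /**
     * majority: the most frequent value in the cell. On a tie, the value seen
     * later in the sequence wins (assumed reading of "the one on the right").
     */
    static int majority(int[] cellValues) {
        Map<Integer, Integer> counts = new HashMap<>();
        for (int v : cellValues) {
            counts.merge(v, 1, Integer::sum);
        }
        int best = cellValues[0];
        int bestCount = 0;
        for (int v : cellValues) {
            int count = counts.get(v);
            // ">=" lets a later value replace an earlier one with the same count.
            if (count >= bestCount) {
                best = v;
                bestCount = count;
            }
        }
        return best;
    }

    /** equals: 1 when every raster holds the same value in the cell, otherwise 0. */
    static byte allEqual(int[] cellValues) {
        return (byte) (variety(cellValues) == 1 ? 1 : 0);
    }
}
```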


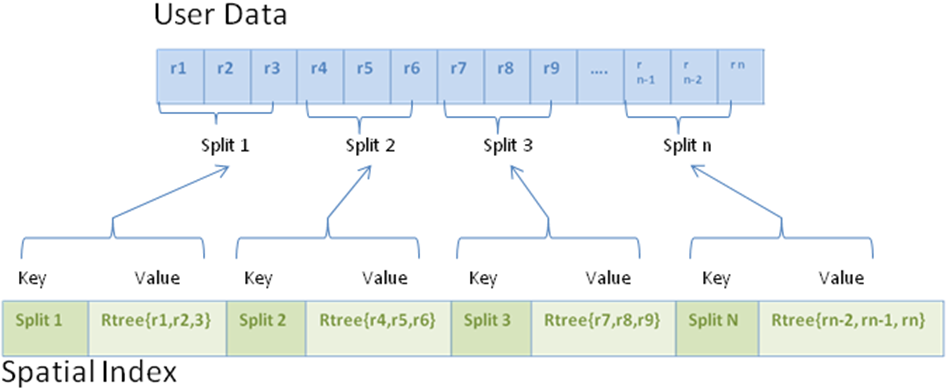

2.5.7 Pyramids

Pyramids are subobjects of a raster object that represent the raster image or raster data at differing sizes and degrees of resolution.

The size is usually related to the amount of time that an application needs to retrieve and display an image, particularly over the web. That is, the smaller the image size, the faster it can be displayed; and as long as detailed resolution is not needed (for example, if the user has "zoomed out" considerably), the display quality for the smaller image is adequate.

Pyramid levels represent reduced or increased resolution images that require less or more storage space, respectively. (Big Data Spatial and Graph supports only reduced resolution pyramids.) A pyramid level of 0 indicates the original raster data; that is, there is no reduction in the image resolution and no change in the storage space required. Values greater than 0 (zero) indicate increasingly reduced levels of image resolution and reduced storage space requirements.

A single raster is processed for each pyramid request, and the following parameters apply:

- Pyramid level (required): the maximum reduction level; that is, the number of pyramid levels to create at a size smaller than the original object. For example, redLevel="6" causes pyramid levels to be created for levels 0 through 5.

  The dimension sizes at each lower level are r(n) = r(n - 1)/2 and c(n) = c(n - 1)/2, where r(n) and c(n) are the row and column sizes for a pyramid at level n.

  The smaller of the row and column dimension sizes of the top-level overview is between 64 and 128 (maximum reduced-resolution level): (int)(log2(a/64)), where a is the smaller of the original row or column dimension size.

  If a redLevel value greater than the maximum reduced-resolution level is specified, the redLevel value is set to the maximum reduced-resolution level.

- Resampling algorithm: the resampling method to use. Must be one of the following: NEAREST_NEIGHBOR, BILINEAR, AVERAGE4, AVERAGE16. (BILINEAR and AVERAGE4 have the same effect.) If no resampling algorithm is specified, BILINEAR is used by default.
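The level arithmetic in the pyramid level parameter can be worked through in a few lines of Java. This is a minimal sketch with hypothetical names (PyramidSketch and its methods). It assumes integer division when halving a dimension, because the text does not say how odd sizes are rounded, and it returns level 0 for rasters smaller than 64 pixels on their shorter side.

```java
/** Illustrative pyramid level arithmetic; hypothetical, not an Oracle class. */
final class PyramidSketch {

    /** Maximum reduced-resolution level: (int)(log2(a / 64)), a = smaller dimension. */
    static int maxLevel(int rows, int cols) {
        int a = Math.min(rows, cols);
        if (a < 64) {
            return 0; // assumption: no reduced level for very small rasters
        }
        // For a >= 64, floor(log2(a / 64)) is the index of the highest set bit of a / 64.
        return 31 - Integer.numberOfLeadingZeros(a / 64);
    }

    /** Clamps a requested level to the maximum reduced-resolution level. */
    static int effectiveLevel(int requested, int rows, int cols) {
        return Math.min(requested, maxLevel(rows, cols));
    }

    /** {rows, cols} at the given level, halving once per level: r(n) = r(n - 1)/2. */
    static int[] sizeAt(int level, int rows, int cols) {
        int r = rows;
        int c = cols;
        for (int n = 1; n <= level; n++) {
            r /= 2;
            c /= 2;
        }
        return new int[] {r, c};
    }
}
```

For a raster of 986 rows by 1600 columns, a is 986, so the maximum level is 3. At that level the raster measures 123 by 200 under these assumptions, and its smaller dimension falls between 64 and 128 as the text describes. A requested level of 6 would be reduced to 3.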

Pyramids can be created while loading multiple rasters or processing a single raster:

- While loading the rasters in HDFS, by adding the -pyramid parameter to the loader command line call or by using the API loader.addPyramid()

- For processing a single raster, by adding the operation in the user request XML or by using the API processor.addPyramid()

2.5.8 Output

When you specify an HDFS directory in the configuration XML, the output generated is an .ohif file, as in the case of an ImageLoader job.

When you specify an FS directory in the configuration XML, the output generated is an image with the filename and type specified, stored in the regular file system.

In both the scenarios, the output must comply with the specifications set in the configuration XML. The job execution logs can be accessed using the command yarn logs -applicationId <applicationId>.

2.6 Loading and Processing an Image Using the Oracle Spatial Hadoop Raster Processing API

The framework provides a raster processing API that lets you load and process rasters without creating XML but instead using a Java application. The application can be executed inside the cluster or on a remote node.

The API provides access to the framework operations, and is useful for web service or standalone Java applications.

To execute any of the jobs, a HadoopConfiguration object must be created. This object is used to set the necessary configuration information (such as the jar file name and the GDAL paths) to create the job, manipulate rasters, and execute the job. The basic logic is as follows:

//Creates Hadoop Configuration

HadoopConfiguration hadoopConf = new HadoopConfiguration();

//Assigns GDAL_DATA location based on specified SHAREDDIR, this data folder is required by gdal to look for data tables that allow SRID conversions

String gdalData = sharedDir + ProcessConstants.DIRECTORY_SEPARATOR + "data";

hadoopConf.setGdalDataPath(gdalData);

//Sets jar name for processor

hadoopConf.setMapreduceJobJar("hadoop-imageprocessor.jar");

//Creates the job

RasterProcessorJob processor = (RasterProcessorJob) hadoopConf.createRasterProcessorJob();

If the API is used on a remote node, you can set properties in the Hadoop Configuration object to connect to the cluster. For example:

//Following config settings are required for standalone execution. (REMOTE ACCESS)

hadoopConf.setUser("hdfs");

hadoopConf.setHdfsPathPrefix("hdfs://sys3.example.com:8020");

hadoopConf.setResourceManagerScheduler("sys3.example.com:8030");

hadoopConf.setResourceManagerAddress("sys3.example.com:8032");

hadoopConf.setYarnApplicationClasspath("/etc/hadoop/conf/,/usr/lib/hadoop/*,/usr/lib/hadoop/lib/*," +

"/usr/lib/hadoop-hdfs/*,/usr/lib/hadoop-hdfs/lib/*,/usr/lib/hadoop-yarn/*," +

"/usr/lib/hadoop-yarn/lib/*,/usr/lib/hadoop-mapreduce/*,/usr/lib/hadoop-mapreduce/lib/* ");

After the job is created, the properties for its execution must be set depending on the job type. There are two job classes: RasterLoaderJob to load the rasters into HDFS, and RasterProcessorJob to process them.

The following example loads a Hawaii raster into the APICALL directory in HDFS. It creates a thumbnail in a shared folder, and specifies an overlap of 10 pixels on each edge of the tiles.

private static void executeLoader(HadoopConfiguration hadoopConf){
    hadoopConf.setMapreduceJobJar("hadoop-imageloader.jar");
    RasterLoaderJob loader = (RasterLoaderJob) hadoopConf.createRasterLoaderJob();
    // Raster to load, tile overlap in pixels, and output folder for the loaded raster
    loader.setFilesToLoad("/net/den00btb/scratch/zherena/hawaii/hawaii.tif");
    loader.setTileOverlap("10");
    loader.setOutputFolder("APICALL");
    // Shared folder where the thumbnail is created
    loader.setRasterThumbnailFolder("/net/den00btb/scratch/zherena/processOutput");
    try{
        loader.setGdalPath("/net/den00btb/scratch/zherena/gdal/lib");
        boolean loaderSuccess = loader.execute();
        if(loaderSuccess){
            System.out.println("Successfully executed loader job");
        }
        else{
            System.out.println("Failed to execute loader job");
        }
    }catch(Exception e ){
        System.out.println("Problem when trying to execute raster loader " + e.getMessage());
    }
}
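The tile overlap set in the loader example can be pictured as each tile reading a few extra pixels past its own edges. The following is a minimal sketch of that idea along one axis, in plain Java with no framework classes. The class name TileOverlapSketch, the method window, and the 500-pixel tile size are hypothetical and chosen only for illustration; how the framework applies the overlap internally is not described here, so treat the clamping at the raster border as an assumption.

```java
public class TileOverlapSketch {

    // Returns {start, end} (end exclusive) of a tile along one axis,
    // widened by `overlap` pixels on each side and clamped to the raster.
    static int[] window(int tileIndex, int tileSize, int overlap, int rasterSize) {
        int start = Math.max(0, tileIndex * tileSize - overlap);
        int end = Math.min(rasterSize, (tileIndex + 1) * tileSize + overlap);
        return new int[] {start, end};
    }

    public static void main(String[] args) {
        int rasterWidth = 1300; // illustrative raster width in pixels
        int tileSize = 500;     // hypothetical tile width, not a framework default
        int overlap = 10;       // same value as setTileOverlap("10")
        for (int i = 0; i * tileSize < rasterWidth; i++) {
            int[] w = window(i, tileSize, overlap, rasterWidth);
            System.out.println("tile " + i + ": [" + w[0] + ", " + w[1] + ")");
        }
    }
}
```

With these illustrative numbers, neighbouring tiles share a 20-pixel strip (10 pixels contributed by each side), which is what lets operations near a tile edge see the pixels just across the boundary.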

The following example processes the loaded raster.

private static void executeProcessor(HadoopConfiguration hadoopConf){
    hadoopConf.setMapreduceJobJar("hadoop-imageprocessor.jar");
    RasterProcessorJob processor = (RasterProcessorJob) hadoopConf.createRasterProcessorJob();
    try{
        processor.setGdalPath("/net/den00btb/scratch/zherena/gdal/lib");

        // Describes the output mosaic
        MosaicConfiguration mosaic = new MosaicConfiguration();
        mosaic.setBands(3);
        mosaic.setDirectory("/net/den00btb/scratch/zherena/processOutput");
        mosaic.setFileName("APIMosaic");
        mosaic.setFileSystem(RasterProcessorJob.FS);
        mosaic.setFormat("GTIFF");
        mosaic.setHeight(3192);
        mosaic.setNoData("#FFFFFF");
        mosaic.setOrderAlgorithm(ProcessConstants.ALGORITMH_FILE_LENGTH);
        mosaic.setOrder("1");
        mosaic.setPixelType("1");
        mosaic.setPixelXWidth(67.457513);
        mosaic.setPixelYWidth(-67.457513);
        mosaic.setSrid("26904");
        mosaic.setUpperLeftX(830763.281336);
        mosaic.setUpperLeftY(2259894.481403);
        mosaic.setWidth(1300);
        processor.setMosaicConfigurationObject(mosaic.getCompactMosaic());

        // Describes the input raster (the OHIF file produced by the loader)
        RasterCatalog catalog = new RasterCatalog();
        Raster raster = new Raster();
        raster.setBands(3);
        raster.setBandsOrder("1,2,3");
        raster.setDataType(1);
        raster.setRasterLocation("/user/hdfs/APICALL/net/den00btb/scratch/zherena/hawaii/hawaii.tif.ohif");
        catalog.addRasterToCatalog(raster);
        processor.setCatalogObject(catalog.getCompactCatalog());

        boolean processorSuccess = processor.execute();
        if(processorSuccess){
            System.out.println("Successfully executed processor job");
        }
        else{
            System.out.println("Failed to execute processor job");
        }
    }catch(Exception e ){
        System.out.println("Problem when trying to execute raster processor " + e.getMessage());
    }

}

In the preceding example, the thumbnail is optional if the mosaic results will be stored in HDFS. If a processing jar file is specified (used when additional user processing classes are specified), the location of the jar file containing these classes must be specified. The other parameters are required for the mosaic to be generated successfully.
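The mosaic parameters in the preceding example together fix the area that the output covers: the upper-left corner, the pixel size on each axis, and the width and height in pixels. As a minimal sketch (plain Java, no framework classes; the class MosaicExtentSketch and the method farEdge are hypothetical names used only for illustration), the lower-right corner follows from origin + pixel count × pixel size:

```java
import java.util.Locale;

public class MosaicExtentSketch {

    // Coordinate of the far edge along one axis: origin + pixelCount * pixelSize.
    static double farEdge(double origin, int pixelCount, double pixelSize) {
        return origin + pixelCount * pixelSize;
    }

    public static void main(String[] args) {
        // Values copied from the mosaic configuration in the example above.
        double upperLeftX = 830763.281336;
        double upperLeftY = 2259894.481403;
        double pixelXWidth = 67.457513;
        double pixelYWidth = -67.457513; // negative: Y decreases from top to bottom
        int width = 1300;
        int height = 3192;

        double lowerRightX = farEdge(upperLeftX, width, pixelXWidth);
        double lowerRightY = farEdge(upperLeftY, height, pixelYWidth);
        System.out.printf(Locale.ROOT, "Lower-right corner: (%.3f, %.3f)%n",
                lowerRightX, lowerRightY);
    }
}
```

For these values the lower-right corner comes out at roughly (918458.05, 2044570.10) in the coordinate units of the mosaic SRID. A quick check like this can help confirm that the requested extent actually intersects the rasters listed in the catalog.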

Several examples of using the processing API are provided in /opt/oracle/oracle-spatial-graph/spatial/raster/examples/java/src. Review the Java classes to understand their purpose. You can execute them using the scripts provided for each example, located under /opt/oracle/oracle-spatial-graph/spatial/raster/examples/java/cmd.

After you have executed the scripts and validated the results, you can modify the Java source files to experiment on them and compile them using the provided script /opt/oracle/oracle-spatial-graph/spatial/raster/examples/java/build.xml. Ensure that you have write access on the /opt/oracle/oracle-spatial-graph/spatial/raster/jlib directory.


2.7 Using the Oracle Spatial Hadoop Raster Simulator Framework to Test Raster Processing

When you create custom processing classes, you can use the Oracle Spatial Hadoop Raster Simulator Framework to do the following by "pretending" to plug them into the Oracle Raster Processing Framework:

- Develop user processing classes on a local computer
- Avoid the need to deploy user processing classes in a cluster or in Big Data Lite to verify their correct functioning
- Debug user processing classes
- Use small local data sets
- Create local debug outputs
- Automate unit tests

The Simulator framework emulates the loading and processing processes in your local environment, as if they were being executed in a cluster. You only need to create a JUnit test case that loads one or more rasters and processes them according to your specification in XML or a configuration object.

Tiles are generated according to the specified block size, so you must set a block size. The number of mappers and reducers to execute depends on the number of tiles, just as in regular cluster execution. OHIF files generated during the loading process are stored in a local directory, because no HDFS is required.
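To get a feel for how the block size drives the number of tiles (and therefore the number of mappers), the following minimal sketch estimates a tile count by ceiling division. It is plain Java with no framework classes; the class TileCountSketch and the method tileCount are hypothetical, and the assumption that a raster splits into ceil(rasterBytes / blockBytes) tiles is a simplification that ignores overlap pixels and file headers.

```java
public class TileCountSketch {

    // Ceiling division: number of blocks of blockSizeMb needed to hold rasterBytes.
    static long tileCount(long rasterBytes, int blockSizeMb) {
        long blockBytes = blockSizeMb * 1024L * 1024L;
        return (rasterBytes + blockBytes - 1) / blockBytes;
    }

    public static void main(String[] args) {
        // Hypothetical raster: 1300 x 3192 pixels, 3 bands, 1 byte per sample.
        long rasterBytes = 1300L * 3192L * 3L;
        // With an 8 MB block size this raster needs 2 blocks.
        System.out.println(tileCount(rasterBytes, 8));
    }
}
```

Under this simplification, a smaller block size yields more tiles and more simulated map tasks for the same raster.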

- Simulator (“Mock”) Objects
- User Local Environment Requirements
- Sample Test Cases to Load and Process Rasters

Simulator (“Mock”) Objects

To load rasters and convert them into .OHIF files that can be processed, a RasterLoaderJobMock must be executed. This class constructor receives the HadoopConfiguration that must include the block size, the directory or rasters to load, the output directory to store the OHIF files, and the gdal directory. The parameters representing the input files and the user configuration vary in terms of how you specify them:

- Location Strings for catalog and user configuration XML file
- Catalog object (CatalogMock)
- Configuration objects (MosaicProcessConfigurationMock or SingleProcessConfigurationMock)
- Location for a single raster processing and a user configuration (MosaicProcessConfigurationMock or SingleProcessConfigurationMock)

User Local Environment Requirements

Before you create test cases, you need to configure your local environment.

1. Ensure that a directory has the native gdal libraries, gdal-data and libproj.

   For Linux:

   - Follow the steps in Getting and Compiling the Cartographic Projections Library to obtain libproj.so.
   - Get the gdal distribution from the Spatial installation on your cluster or BigDataLite VM at /opt/oracle/oracle-spatial-graph/spatial/raster/gdal.
   - Move libproj.so to your local gdal directory under gdal/lib with the rest of the native gdal libraries.

   For Windows:

   - Get the gdal distribution from the Spatial installation on your cluster or BigDataLite VM at /opt/oracle/oracle-spatial-graph/spatial/raster/examples/java/mock/lib/gdal_windows.x64.zip.
   - Be sure that Visual Studio is installed. When you install it, make sure you select the Common Tools for Visual C++.
   - Download the PROJ 4 source code, version branch 4.9, from https://trac.osgeo.org/proj4j.
   - Open the Visual Studio Development Command Prompt and type:

         cd PROJ4/src_dir
         nmake /f makefile.vc

   - Move proj.dll to your local gdal directory under gdal/bin with the rest of the native gdal libraries.

2. Add the GDAL native libraries to the system path.

   For Linux: Export LD_LIBRARY_PATH with the corresponding native gdal libraries directory.

   For Windows: Add the native gdal libraries directory to the Path environment variable.

3. Ensure that the Java project has the JUnit libraries.

4. Ensure that the Java project has the following Hadoop jar and Oracle Image Processing Framework files in the classpath. You can get them from the Oracle BigDataLite VM or from your cluster. The Hadoop jars are all included in the Hadoop distribution; the framework-specific jars are in /opt/oracle/oracle-spatial-graph/spatial/raster/jlib.

   (In the following list, VERSION_INCLUDED refers to the version number from the Hadoop installation containing the files; it can be a BDA cluster or a BigDataLite VM.)

       commons-collections-VERSION_INCLUDED.jar
       commons-configuration-VERSION_INCLUDED.jar
       commons-lang-VERSION_INCLUDED.jar
       commons-logging-VERSION_INCLUDED.jar
       commons-math3-VERSION_INCLUDED.jar
       gdal.jar
       guava-VERSION_INCLUDED.jar
       hadoop-auth-VERSION_INCLUDED-cdhVERSION_INCLUDED.jar
       hadoop-common-VERSION_INCLUDED-cdhVERSION_INCLUDED.jar
       hadoop-imageloader.jar
       hadoop-imagemocking-fwk.jar
       hadoop-imageprocessor.jar
       hadoop-mapreduce-client-core-VERSION_INCLUDED-cdhVERSION_INCLUDED.jar
       hadoop-raster-fwk-api.jar
       jackson-core-asl-VERSION_INCLUDED.jar
       jackson-mapper-asl-VERSION_INCLUDED.jar
       log4j-VERSION_INCLUDED.jar
       slf4j-api-VERSION_INCLUDED.jar
       slf4j-log4j12-VERSION_INCLUDED.jar
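Before running any test case, it can help to confirm that the native gdal libraries directory really is on the library search path described in the requirements above. The following is a minimal pre-flight sketch in plain Java. The class NativePathCheck and the method containsDir are hypothetical helpers, not part of the framework, and the default directory is only a placeholder to replace with your own location.

```java
import java.io.File;
import java.util.Arrays;
import java.util.regex.Pattern;

public class NativePathCheck {

    // True if `dir` appears as an exact entry of the separator-delimited `pathValue`.
    static boolean containsDir(String pathValue, String dir, String separator) {
        if (pathValue == null || pathValue.isEmpty()) {
            return false;
        }
        return Arrays.asList(pathValue.split(Pattern.quote(separator))).contains(dir);
    }

    public static void main(String[] args) {
        boolean windows = System.getProperty("os.name").toLowerCase().contains("win");
        // Path on Windows, LD_LIBRARY_PATH on Linux, as in the requirements above.
        String varName = windows ? "Path" : "LD_LIBRARY_PATH";
        // Placeholder directory; pass your own gdal library directory as the first argument.
        String gdalLibDir = args.length > 0 ? args[0] : "/path/to/gdal/lib";
        boolean ok = containsDir(System.getenv(varName), gdalLibDir, File.pathSeparator);
        System.out.println(varName + (ok ? " contains " : " does not contain ") + gdalLibDir);
    }
}
```

The comparison is an exact string match, so a trailing separator or a differently cased path is reported as missing; that is a deliberate simplification for this sketch.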

Sample Test Cases to Load and Process Rasters

After your Java project is prepared for your test cases, you can test the loading and processing of rasters.

The following example creates a class with a setUp method to configure the directories for gdal, the rasters to load, your configuration XML files, the output thumbnails, OHIF files, and process results. It also configures the block size (8 MB). (A small block size is recommended for single computers.)

/**
 * Set the basic directories before starting the test execution
 */
@Before
public void setUp(){
    String sharedDir = "C:\\Users\\zherena\\Oracle Stuff\\Hadoop\\Release 4\\MockTest";
    String allAccessDir = sharedDir + "/out/";
    gdalDir = sharedDir + "/gdal";
    directoryToLoad = allAccessDir + "rasters";
    xmlDir = sharedDir + "/xmls/";
    outputDir = allAccessDir;
    blockSize = 8;
}
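The setUp method above builds its directories by string concatenation and mixes Windows and forward-slash separators. If the same tests need to run on both Linux and Windows, one option is to build the paths with java.nio.file instead. The following is a minimal sketch of that idea only; the class TestDirsSketch, the method under, and the default base directory "MockTest" are hypothetical and not part of the framework.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

public class TestDirsSketch {

    // Builds a path under `base` using the platform's own separator.
    static Path under(String base, String... parts) {
        return Paths.get(base, parts);
    }

    public static void main(String[] args) {
        // Hypothetical base directory; pass your own as the first argument.
        String sharedDir = args.length > 0 ? args[0] : "MockTest";
        Path gdalDir = under(sharedDir, "gdal");
        Path outputDir = under(sharedDir, "out");
        Path directoryToLoad = under(sharedDir, "out", "rasters");
        Path xmlDir = under(sharedDir, "xmls");

        System.out.println("gdal:    " + gdalDir);
        System.out.println("output:  " + outputDir);
        System.out.println("rasters: " + directoryToLoad);
        // resolve() appends a file name without relying on a trailing separator.
        System.out.println("catalog: " + xmlDir.resolve("catalog.xml"));
    }
}
```

Because the mock classes shown in this section take String arguments, each Path would be converted with toString() before being passed on.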

The following example creates a RasterLoaderJobMock object, and sets the rasters to load and the output path for OHIF files:

/**
 * Loads a directory of rasters, and generates ohif files and thumbnails
 * for all of them
 * @throws Exception if there is a problem during load process
 */
@Test
public void basicLoad() throws Exception {
    System.out.println("***LOAD OF DIRECTORY WITHOUT EXPANSION***");
    HadoopConfiguration conf = new HadoopConfiguration();
    conf.setBlockSize(blockSize);
    System.out.println("Set block size of: " +
        conf.getProperty("dfs.blocksize"));
    RasterLoaderJobMock loader = new RasterLoaderJobMock(conf,
        outputDir, directoryToLoad, gdalDir);
    //Puts the ohif file directly in the specified output directory
    loader.dontExpandOutputDir();
    System.out.println("Starting execution");
    System.out.println("------------------------------------------------------------------------------------------------------------");
    loader.waitForCompletion();
    System.out.println("Finished loader");
    System.out.println("LOAD OF DIRECTORY WITHOUT EXPANSION ENDED");
    System.out.println();
    System.out.println();
}

The following example specifies catalog and user configuration XML files to the RasterProcessorJobMock object. Make sure your catalog XML points to the correct location of your local OHIF files.

/**
 * Creates a mosaic raster by using configuration and catalog xmls.
 * Only two bands are selected per raster.
 * @throws Exception if there is a problem during mosaic process.
 */
@Test
public void mosaicUsingXmls() throws Exception {
    System.out.println("***MOSAIC PROCESS USING XMLS***");
    HadoopConfiguration conf = new HadoopConfiguration();
    conf.setBlockSize(blockSize);
    System.out.println("Set block size of: " +
        conf.getProperty("dfs.blocksize"));
    String catalogXml = xmlDir + "catalog.xml";
    String configXml = xmlDir + "config.xml";
    RasterProcessorJobMock processor = new RasterProcessorJobMock(conf, configXml, catalogXml, gdalDir);
    System.out.println("Starting execution");
    System.out.println("------------------------------------------------------------------------------------------------------------");
    processor.waitForCompletion();
    System.out.println("Finished processor");
    System.out.println("***********************************************MOSAIC PROCESS USING XMLS ENDED***********************************************");
    System.out.println();
    System.out.println();
}

Additional examples using the different supported configurations for RasterProcessorJobMock are provided in /opt/oracle/oracle-spatial-graph/spatial/raster/examples/java/mock/src. They include an example using an external processing class, which is also included and can be debugged.


2.8 Oracle Big Data Spatial Raster Processing for Spark

Oracle Big Data Spatial Raster Processing for Apache Spark is a spatial raster processing API for Java.

This API allows the creation of new combined images resulting from a series of user-defined processing phases, with the following features:

- HDFS image storage, where every block-size split is stored as a separate tile, ready for future independent processing
- Subset, mosaic, and raster algebra operations processed in parallel, using Spark to divide the processing
- Support for GDAL formats, multiband images, DEMs (digital elevation models), multiple pixel depths, and SRIDs

Currently the API supports Spark 1.6 and Spark 2.2. The only visible change in the API is the substitution of DataFrame with Dataset<Row> in the results of Spark 2.2 SQL queries.