5 Using Oracle XQuery for Apache Hadoop

This chapter explains how to use Oracle XQuery for Apache Hadoop (Oracle XQuery for Hadoop) to extract and transform large volumes of semistructured data.

What Is Oracle XQuery for Hadoop?

Oracle XQuery for Hadoop is a transformation engine for semistructured big data. Oracle XQuery for Hadoop runs transformations expressed in the XQuery language by translating them into a series of MapReduce jobs, which are executed in parallel on an Apache Hadoop cluster. You can focus on data movement and transformation logic, instead of the complexities of Java and MapReduce, without sacrificing scalability or performance.

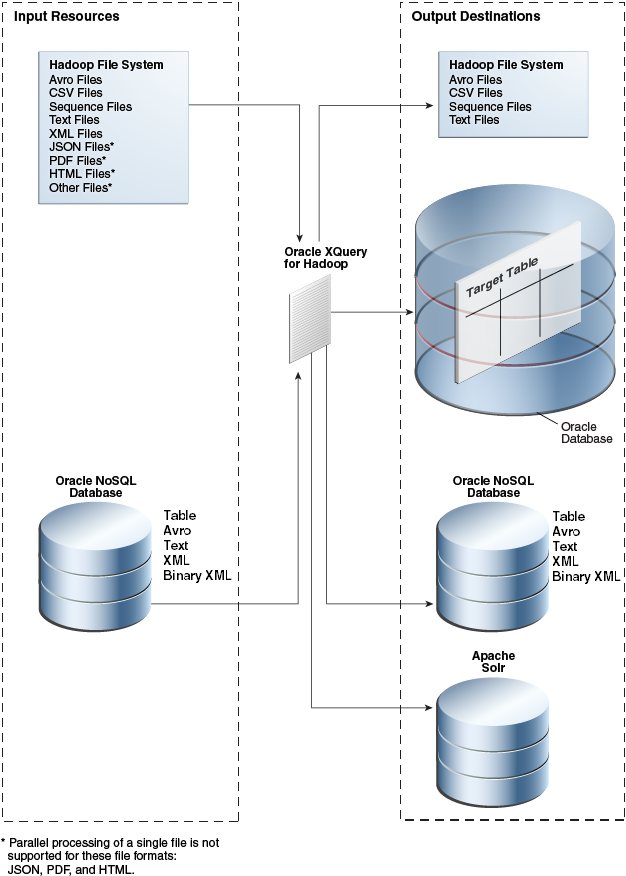

The input data can be located in a file system accessible through the Hadoop File System API, such as the Hadoop Distributed File System (HDFS), or stored in Oracle NoSQL Database. Oracle XQuery for Hadoop can write the transformation results to Hadoop files, Oracle NoSQL Database, or Oracle Database.

Oracle XQuery for Hadoop also provides extensions to Apache Hive to support massive XML files.

Oracle XQuery for Hadoop is based on mature industry standards including XPath, XQuery, and XQuery Update Facility. It is fully integrated with other Oracle products, which enables Oracle XQuery for Hadoop to:

- Load data efficiently into Oracle Database using Oracle Loader for Hadoop.

- Provide read and write support to Oracle NoSQL Database.

The following figure provides an overview of the data flow using Oracle XQuery for Hadoop.

Figure 5-1 Oracle XQuery for Hadoop Data Flow


Get Started With Oracle XQuery for Hadoop

Oracle XQuery for Hadoop is designed for use by XQuery developers. If you are already familiar with XQuery, then you are ready to begin. However, if you are new to XQuery, then you must first acquire the basics of the language. This guide does not attempt to cover this information.

Example: Hello World!

Follow these steps to create and run a simple query using Oracle XQuery for Hadoop:

- Create a text file named hello.txt in the current directory that contains the line Hello.

  $ echo "Hello" > hello.txt

- Copy the file to HDFS:

  $ hdfs dfs -copyFromLocal hello.txt

- Create a query file named hello.xq in the current directory with the following content:

  import module "oxh:text";

  for $line in text:collection("hello.txt")

  return text:put($line || " World!")

- Run the query:

  $ hadoop jar $OXH_HOME/lib/oxh.jar hello.xq -output ./myout -print

  13/11/21 02:41:57 INFO hadoop.xquery: OXH: Oracle XQuery for Hadoop 4.2.0 ((build 4.2.0-cdh5.0.0-mr1 @mr2). Copyright (c) 2014, Oracle. All rights reserved.

  13/11/21 02:42:01 INFO hadoop.xquery: Submitting map-reduce job "oxh:hello.xq#0" id="3593921f-c50c-4bb8-88c0-6b63b439572b.0", inputs=[hdfs://bigdatalite.localdomain:8020/user/oracle/hello.txt], output=myout

  . . .

- Check the output file:

  $ hdfs dfs -cat ./myout/part-m-00000

  Hello World!

About the Oracle XQuery for Hadoop Functions

Oracle XQuery for Hadoop reads from and writes to big data sets using collection and put functions:

- A collection function reads data from Hadoop files or Oracle NoSQL Database as a collection of items. A Hadoop file is one that is accessible through the Hadoop File System API. On Oracle Big Data Appliance and most Hadoop clusters, this file system is Hadoop Distributed File System (HDFS).

- A put function adds a single item to a data set stored in Oracle Database, Oracle NoSQL Database, or a Hadoop file.

The following is a simple example of an Oracle XQuery for Hadoop query that reads items from one source and writes to another:

for $x in collection(...) return put($x)

Oracle XQuery for Hadoop comes with a set of adapters that you can use to define put and collection functions for specific formats and sources. Each adapter has two components:

- A set of built-in put and collection functions that are predefined for your convenience.

- A set of XQuery function annotations that you can use to define custom put and collection functions.

Other commonly used functions are also included in Oracle XQuery for Hadoop.

About the Adapters

Following are brief descriptions of the Oracle XQuery for Hadoop adapters.

- Avro File Adapter

  The Avro file adapter provides access to Avro container files stored in HDFS. It includes collection and put functions for reading from and writing to Avro container files.

- JSON File Adapter

  The JSON file adapter provides access to JSON files stored in HDFS. It contains a collection function for reading JSON files, and a group of helper functions for parsing JSON data directly. You must use another adapter to write the output.

- Oracle Database Adapter

  The Oracle Database adapter loads data into Oracle Database. This adapter supports a custom put function for direct output to a table in an Oracle database using JDBC or OCI. If a live connection to the database is not available, the adapter also supports output to Data Pump or delimited text files in HDFS; the files can be loaded into the Oracle database with a different utility, such as SQL*Loader, or using external tables. This adapter does not move data out of the database, and therefore does not have collection or get functions.

  See "Software Requirements" for the supported versions of Oracle Database.

- Oracle NoSQL Database Adapter

  The Oracle NoSQL Database adapter provides access to data stored in Oracle NoSQL Database. The data can be read from or written as Table, Avro, XML, binary XML, or text. This adapter includes collection, get, and put functions.

- Sequence File Adapter

  The sequence file adapter provides access to Hadoop sequence files. A sequence file is a Hadoop format composed of key-value pairs.

  This adapter includes collection and put functions for reading from and writing to HDFS sequence files that contain text, XML, or binary XML.

- Solr Adapter

  The Solr adapter provides functions to create full-text indexes and load them into Apache Solr servers.

- Text File Adapter

  The text file adapter provides access to text files, such as CSV files. It contains collection and put functions for reading from and writing to text files.

  The JSON file adapter extends the support for JSON objects stored in text files.

- XML File Adapter

  The XML file adapter provides access to XML files stored in HDFS. It contains collection functions for reading large XML files. You must use another adapter to write the output.

About Other Modules for Use With Oracle XQuery for Hadoop

You can use functions from these additional modules in your queries:

- Standard XQuery Functions

  The standard XQuery math functions are available.

- Hadoop Functions

  The Hadoop module is a group of functions that are specific to Hadoop.

- Duration, Date, and Time Functions

  This group of functions parses duration, date, and time values.

- String-Processing Functions

  These functions add and remove white space that surrounds data values.

Create an XQuery Transformation

This section describes how to create XQuery transformations using Oracle XQuery for Hadoop.

XQuery Transformation Requirements

You create a transformation for Oracle XQuery for Hadoop the same way as any other XQuery transformation, except that you must comply with these additional requirements:

- The main XQuery expression (the query body) must be in one of the following forms:

  FLWOR1

  or

  (FLWOR1, FLWOR2,... , FLWORN)

  In this syntax, FLWOR is a top-level XQuery FLWOR ("For, Let, Where, Order by, Return") expression.

- Each top-level FLWOR expression must have a for clause that iterates over an Oracle XQuery for Hadoop collection function. This for clause cannot have a positional variable.

  See Oracle XQuery for Apache Hadoop Reference for the collection functions.

- Each top-level FLWOR expression can have optional let, where, and group by clauses. Other types of clauses are invalid, such as order by, count, and window clauses.

- Each top-level FLWOR expression must return one or more results from calling an Oracle XQuery for Hadoop put function. See Oracle XQuery for Apache Hadoop Reference for the put functions.

- The query body must be an updating expression. Because all put functions are classified as updating functions, all Oracle XQuery for Hadoop queries are updating queries.

  In Oracle XQuery for Hadoop, a %*:put annotation indicates that the function is updating. The %updating annotation or updating keyword is not required with it.

See Also:

- "FLWOR Expressions" in XQuery 3.1: An XML Query Language

- For a description of updating expressions, "Extensions to XQuery 1.0" in W3C XQuery Update Facility 1.0

About XQuery Language Support

Oracle XQuery for Hadoop supports W3C XQuery 3.1, except for the following:

- FLWOR window clause

- FLWOR count clause

- namespace constructors

- fn:parse-ietf-date

- fn:transform

- higher order XQuery functions

For the language, see W3C XQuery 3.1: An XML Query Language.

For the functions, see W3C XPath and XQuery Functions and Operators.

Accessing Data in the Hadoop Distributed Cache

You can use the Hadoop distributed cache facility to access auxiliary job data. This mechanism can be useful in a join query when one side is a relatively small file. The query might execute faster if the smaller file is accessed from the distributed cache.

To place a file into the distributed cache, use the -files Hadoop command line option when calling Oracle XQuery for Hadoop. For a query to read a file from the distributed cache, it must call the fn:doc function for XML, and either fn:unparsed-text or fn:unparsed-text-lines for text files. See Example 5-7.

Call Custom Java Functions from XQuery

Oracle XQuery for Hadoop is extensible with custom external functions implemented in the Java language. A Java implementation must be a static method with the parameter and return types as defined by the XQuery API for Java (XQJ) specification.

A custom Java function binding is defined in Oracle XQuery for Hadoop by annotating an external function definition with the %ora-java:binding annotation. This annotation has the following syntax:

%ora-java:binding("java.class.name[#method]")

- java.class.name

  The fully qualified name of a Java class that contains the implementation method.

- method

  A Java method name. It defaults to the XQuery function name. Optional.

See Example 5-8 for an example of %ora-java:binding.

All JAR files that contain custom Java functions must be listed in the -libjars command line option. For example:

hadoop jar $OXH_HOME/lib/oxh.jar -libjars myfunctions.jar query.xq

Access User-Defined XQuery Library Modules and XML Schemas

Oracle XQuery for Hadoop supports user-defined XQuery library modules and XML schemas when you comply with these criteria:

- Locate the library module or XML schema file in the same directory where the main query resides on the client calling Oracle XQuery for Hadoop.

- Import the library module or XML schema from the main query using the location URI parameter of the import module or import schema statement.

- Specify the library module or XML schema file in the -files command line option when calling Oracle XQuery for Hadoop.

For an example of using user-defined XQuery library modules and XML schemas, see Example 5-9.

See Also:

"Location URIs" in XQuery 3.1: An XML Query Language

XQuery Transformation Examples

For these examples, the following text files are in HDFS. The files contain a log of visits to different web pages. Each line represents a visit to a web page and contains the time, user name, page visited, and the status code.

mydata/visits1.log

2013-10-28T06:00:00, john, index.html, 200

2013-10-28T08:30:02, kelly, index.html, 200

2013-10-28T08:32:50, kelly, about.html, 200

2013-10-30T10:00:10, mike, index.html, 401

mydata/visits2.log

2013-10-30T10:00:01, john, index.html, 200

2013-10-30T10:05:20, john, about.html, 200

2013-11-01T08:00:08, laura, index.html, 200

2013-11-04T06:12:51, kelly, index.html, 200

2013-11-04T06:12:40, kelly, contact.html, 200

Example 5-1 Basic Filtering

This query selects the page visits made by user kelly and writes those lines to a text file:

import module "oxh:text";

for $line in text:collection("mydata/visits*.log")

let $split := fn:tokenize($line, "\s*,\s*")

where $split[2] eq "kelly"

return text:put($line)

The query creates text files in the output directory that contain the following lines:

2013-11-04T06:12:51, kelly, index.html, 200

2013-11-04T06:12:40, kelly, contact.html, 200

2013-10-28T08:30:02, kelly, index.html, 200

2013-10-28T08:32:50, kelly, about.html, 200
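The expected output of this filter can be cross-checked locally with a few lines of plain Python. This is only a sketch of the query's logic, not Oracle XQuery for Hadoop code: the function name `visits_by_user` is hypothetical, and the sample log lines are inlined rather than read from HDFS.

```python
import re

# Sample log lines copied from mydata/visits1.log and mydata/visits2.log.
VISITS = [
    "2013-10-28T06:00:00, john, index.html, 200",
    "2013-10-28T08:30:02, kelly, index.html, 200",
    "2013-10-28T08:32:50, kelly, about.html, 200",
    "2013-10-30T10:00:10, mike, index.html, 401",
    "2013-10-30T10:00:01, john, index.html, 200",
    "2013-10-30T10:05:20, john, about.html, 200",
    "2013-11-01T08:00:08, laura, index.html, 200",
    "2013-11-04T06:12:51, kelly, index.html, 200",
    "2013-11-04T06:12:40, kelly, contact.html, 200",
]


def visits_by_user(lines, user):
    """Return the lines whose second comma-separated field equals `user`."""
    # Same split pattern as fn:tokenize($line, "\s*,\s*") in the query.
    return [ln for ln in lines if re.split(r"\s*,\s*", ln)[1] == user]


for line in visits_by_user(VISITS, "kelly"):
    print(line)
```

The sketch prints the same four lines as the query, though in input order; the order of lines in the query's output files depends on how the work is distributed among tasks.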

Example 5-2 Group By and Aggregation

The next query computes the number of page visits per day:

import module "oxh:text";

for $line in text:collection("mydata/visits*.log")

let $split := fn:tokenize($line, "\s*,\s*")

let $time := xs:dateTime($split[1])

let $day := xs:date($time)

group by $day

return text:put($day || " => " || fn:count($line))

The query creates text files that contain the following lines:

2013-10-28 => 3

2013-10-30 => 3

2013-11-01 => 1

2013-11-04 => 2
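The per-day counts above can be reproduced with a small Python sketch of the same group-by logic. It is an illustration only, with the sample data inlined; `visits_per_day` is a hypothetical name and nothing here is part of the Oracle XQuery for Hadoop API.

```python
import re
from collections import Counter
from datetime import datetime

# Sample log lines copied from mydata/visits1.log and mydata/visits2.log.
VISITS = [
    "2013-10-28T06:00:00, john, index.html, 200",
    "2013-10-28T08:30:02, kelly, index.html, 200",
    "2013-10-28T08:32:50, kelly, about.html, 200",
    "2013-10-30T10:00:10, mike, index.html, 401",
    "2013-10-30T10:00:01, john, index.html, 200",
    "2013-10-30T10:05:20, john, about.html, 200",
    "2013-11-01T08:00:08, laura, index.html, 200",
    "2013-11-04T06:12:51, kelly, index.html, 200",
    "2013-11-04T06:12:40, kelly, contact.html, 200",
]


def visits_per_day(lines):
    """Count log lines per calendar day, keyed by ISO date string."""
    counts = Counter()
    for ln in lines:
        timestamp = re.split(r"\s*,\s*", ln)[0]
        # Equivalent of xs:date(xs:dateTime(...)): drop the time part.
        day = datetime.fromisoformat(timestamp).date().isoformat()
        counts[day] += 1
    return dict(counts)


for day, n in sorted(visits_per_day(VISITS).items()):
    print(f"{day} => {n}")
```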

Example 5-3 Inner Joins

This example queries the following text file in HDFS, in addition to the other files. The file contains user profile information such as user ID, full name, and age, separated by colons (:).

mydata/users.txt

john:John Doe:45

kelly:Kelly Johnson:32

laura:Laura Smith:

phil:Phil Johnson:27

The following query performs a join between users.txt and the log files. It computes how many times users older than 30 visited each page.

import module "oxh:text";

for $userLine in text:collection("mydata/users.txt")

let $userSplit := fn:tokenize($userLine, "\s*:\s*")

let $userId := $userSplit[1]

let $userAge := xs:integer($userSplit[3][. castable as xs:integer])

for $visitLine in text:collection("mydata/visits*.log")

let $visitSplit := fn:tokenize($visitLine, "\s*,\s*")

let $visitUserId := $visitSplit[2]

where $userId eq $visitUserId and $userAge gt 30

group by $page := $visitSplit[3]

return text:put($page || " " || fn:count($userLine))

The query creates text files that contain the following lines:

about.html 2

contact.html 1

index.html 4
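To see why these counts come out as they do, the join can be sketched in plain Python. The sketch assumes the sample files shown earlier, inlined as lists; `page_visits_by_older_users` is a hypothetical helper, and the `isdigit` check only approximates the query's `castable as xs:integer` guard. Laura has no age, so she never satisfies the age condition, and mike has no profile row, so his visit is not joined.

```python
import re
from collections import Counter

# Sample rows copied from mydata/users.txt and the visits log files.
USERS = [
    "john:John Doe:45",
    "kelly:Kelly Johnson:32",
    "laura:Laura Smith:",
    "phil:Phil Johnson:27",
]
VISITS = [
    "2013-10-28T06:00:00, john, index.html, 200",
    "2013-10-28T08:30:02, kelly, index.html, 200",
    "2013-10-28T08:32:50, kelly, about.html, 200",
    "2013-10-30T10:00:10, mike, index.html, 401",
    "2013-10-30T10:00:01, john, index.html, 200",
    "2013-10-30T10:05:20, john, about.html, 200",
    "2013-11-01T08:00:08, laura, index.html, 200",
    "2013-11-04T06:12:51, kelly, index.html, 200",
    "2013-11-04T06:12:40, kelly, contact.html, 200",
]


def page_visits_by_older_users(users, visits, min_age=30):
    """Count visits per page, restricted to users older than `min_age`."""
    # User ID -> age, skipping rows whose age field is not an integer.
    ages = {}
    for ln in users:
        user_id, _name, age = re.split(r"\s*:\s*", ln)
        if age.isdigit():
            ages[user_id] = int(age)

    pages = Counter()
    for ln in visits:
        _time, user_id, page, _code = re.split(r"\s*,\s*", ln)
        # Equijoin on user ID plus the age filter from the where clause.
        if user_id in ages and ages[user_id] > min_age:
            pages[page] += 1
    return dict(pages)


for page, n in sorted(page_visits_by_older_users(USERS, VISITS).items()):
    print(page, n)
```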

The next query computes the number of visits for each user who visited any page; it omits users who never visited any page.

import module "oxh:text";

for $userLine in text:collection("mydata/users.txt")

let $userSplit := fn:tokenize($userLine, "\s*:\s*")

let $userId := $userSplit[1]

for $visitLine in text:collection("mydata/visits*.log")

[$userId eq fn:tokenize(., "\s*,\s*")[2]]

group by $userId

return text:put($userId || " " || fn:count($visitLine))

The query creates text files that contain the following lines:

john 3

kelly 4

laura 1

Note:

When the results of two collection functions are joined, only equijoins are supported. If one or both sources are not from a collection function, then any join condition is allowed.

Example 5-4 Left Outer Joins

This example is similar to the second query in Example 5-3, but also counts users who did not visit any page.

import module "oxh:text";

for $userLine in text:collection("mydata/users.txt")

let $userSplit := fn:tokenize($userLine, "\s*:\s*")

let $userId := $userSplit[1]

for $visitLine allowing empty in text:collection("mydata/visits*.log")

[$userId eq fn:tokenize(., "\s*,\s*")[2]]

group by $userId

return text:put($userId || " " || fn:count($visitLine))

The query creates text files that contain the following lines:

john 3

kelly 4

laura 1

phil 0
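The difference between the inner join in Example 5-3 and this left outer join can be sketched in plain Python. This is an illustration under the assumption that the sample files are inlined as lists; `visit_counts` and its `keep_unmatched` flag are hypothetical names standing in for the presence or absence of `allowing empty`.

```python
import re
from collections import Counter

# Sample rows copied from mydata/users.txt and the visits log files.
USERS = [
    "john:John Doe:45",
    "kelly:Kelly Johnson:32",
    "laura:Laura Smith:",
    "phil:Phil Johnson:27",
]
VISITS = [
    "2013-10-28T06:00:00, john, index.html, 200",
    "2013-10-28T08:30:02, kelly, index.html, 200",
    "2013-10-28T08:32:50, kelly, about.html, 200",
    "2013-10-30T10:00:10, mike, index.html, 401",
    "2013-10-30T10:00:01, john, index.html, 200",
    "2013-10-30T10:05:20, john, about.html, 200",
    "2013-11-01T08:00:08, laura, index.html, 200",
    "2013-11-04T06:12:51, kelly, index.html, 200",
    "2013-11-04T06:12:40, kelly, contact.html, 200",
]


def visit_counts(users, visits, keep_unmatched):
    """Count visits per known user; optionally keep users with no visits."""
    per_user = Counter(re.split(r"\s*,\s*", ln)[1] for ln in visits)
    result = {}
    for ln in users:
        user_id = re.split(r"\s*:\s*", ln)[0]
        # A Counter returns 0 for missing keys, which models the empty
        # $visitLine sequence that "allowing empty" lets through.
        if per_user[user_id] > 0 or keep_unmatched:
            result[user_id] = per_user[user_id]
    return result


print(visit_counts(USERS, VISITS, keep_unmatched=False))  # inner join
print(visit_counts(USERS, VISITS, keep_unmatched=True))   # left outer join
```

In both cases the visit by mike is dropped, because the join is driven by the rows of users.txt.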

Example 5-5 Semijoins

The next query finds users who have ever visited a page:

import module "oxh:text";

for $userLine in text:collection("mydata/users.txt")

let $userId := fn:tokenize($userLine, "\s*:\s*")[1]

where some $visitLine in text:collection("mydata/visits*.log")

satisfies $userId eq fn:tokenize($visitLine, "\s*,\s*")[2]

return text:put($userId)

The query creates text files that contain the following lines:

john

kelly

laura

Example 5-6 Multiple Outputs

The next query finds web page visits with a 401 code and writes them to trace* files using the XQuery text:trace() function. It writes the remaining visit records into the default output files.

import module "oxh:text";

for $visitLine in text:collection("mydata/visits*.log")

let $visitCode := xs:integer(fn:tokenize($visitLine, "\s*,\s*")[4])

return if ($visitCode eq 401) then text:trace($visitLine) else text:put($visitLine)

The query generates a trace* text file that contains the following line:

2013-10-30T10:00:10, mike, index.html, 401

The query also generates default output files that contain the following lines:

2013-10-30T10:00:01, john, index.html, 200

2013-10-30T10:05:20, john, about.html, 200

2013-11-01T08:00:08, laura, index.html, 200

2013-11-04T06:12:51, kelly, index.html, 200

2013-11-04T06:12:40, kelly, contact.html, 200

2013-10-28T06:00:00, john, index.html, 200

2013-10-28T08:30:02, kelly, index.html, 200

2013-10-28T08:32:50, kelly, about.html, 200
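The routing of records to two outputs can be sketched in plain Python as a simple partition on the status code. This is only an illustration with the sample data inlined; `route_visits` is a hypothetical name, and the two lists stand in for the trace* files and the default output files.

```python
import re

# Sample log lines copied from mydata/visits1.log and mydata/visits2.log.
VISITS = [
    "2013-10-28T06:00:00, john, index.html, 200",
    "2013-10-28T08:30:02, kelly, index.html, 200",
    "2013-10-28T08:32:50, kelly, about.html, 200",
    "2013-10-30T10:00:10, mike, index.html, 401",
    "2013-10-30T10:00:01, john, index.html, 200",
    "2013-10-30T10:05:20, john, about.html, 200",
    "2013-11-01T08:00:08, laura, index.html, 200",
    "2013-11-04T06:12:51, kelly, index.html, 200",
    "2013-11-04T06:12:40, kelly, contact.html, 200",
]


def route_visits(lines, trace_code=401):
    """Split lines into (trace, default) lists based on the status code."""
    trace, default = [], []
    for ln in lines:
        code = int(re.split(r"\s*,\s*", ln)[3])
        (trace if code == trace_code else default).append(ln)
    return trace, default


trace_lines, default_lines = route_visits(VISITS)
print("trace:", trace_lines)
print("default:", len(default_lines), "lines")
```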

Example 5-7 Accessing Auxiliary Input Data

The next query is an alternative version of the second query in Example 5-3, but it uses the fn:unparsed-text-lines function to access a file in the Hadoop distributed cache:

import module "oxh:text";

for $visitLine in text:collection("mydata/visits*.log")

let $visitUserId := fn:tokenize($visitLine, "\s*,\s*")[2]

for $userLine in fn:unparsed-text-lines("users.txt")

let $userSplit := fn:tokenize($userLine, "\s*:\s*")

let $userId := $userSplit[1]

where $userId eq $visitUserId

group by $userId

return text:put($userId || " " || fn:count($visitLine))

The hadoop command to run the query must use the Hadoop -files option. See "Accessing Data in the Hadoop Distributed Cache."

hadoop jar $OXH_HOME/lib/oxh.jar -files users.txt query.xq

The query creates text files that contain the following lines:

john 3

kelly 4

laura 1

Example 5-8 Calling a Custom Java Function from XQuery

The next query formats input data using the java.lang.String#format method.

import module "oxh:text";

declare %ora-java:binding("java.lang.String#format")

function local:string-format($pattern as xs:string, $data as xs:anyAtomicType*) as xs:string external;

for $line in text:collection("mydata/users*.txt")

let $split := fn:tokenize($line, "\s*:\s*")

return text:put(local:string-format("%s,%s,%s", $split))

The query creates text files that contain the following lines:

john,John Doe,45

kelly,Kelly Johnson,32

laura,Laura Smith,

phil,Phil Johnson,27
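The effect of the format pattern on each tokenized line can be previewed in plain Python, whose `%s` formatting behaves like the Java pattern used here. The sketch is an illustration only: `format_user` is a hypothetical name and the rows of users.txt are inlined. Note that the row for laura ends with a colon, so the split yields an empty third field and the output line ends with a comma.

```python
import re

# Sample rows copied from mydata/users.txt.
USERS = [
    "john:John Doe:45",
    "kelly:Kelly Johnson:32",
    "laura:Laura Smith:",
    "phil:Phil Johnson:27",
]


def format_user(line):
    """Split a colon-separated profile row and join its three fields with commas."""
    fields = re.split(r"\s*:\s*", line)
    # Expects exactly three fields, matching the three %s placeholders.
    return "%s,%s,%s" % tuple(fields)


for row in USERS:
    print(format_user(row))
```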

See Also:

Java Platform Standard Edition 7 API Specification for Class String.

Example 5-9 Using User-Defined XQuery Library Modules and XML Schemas

This example uses a library module named mytools.xq:

module namespace mytools = "urn:mytools";

declare %ora-java:binding("java.lang.String#format")

function mytools:string-format($pattern as xs:string, $data as xs:anyAtomicType*) as xs:string external;

The next query is equivalent to the previous one, but it calls a string-format function from the mytools.xq library module:

import module namespace mytools = "urn:mytools" at "mytools.xq";

import module "oxh:text";

for $line in text:collection("mydata/users*.txt")

let $split := fn:tokenize($line, "\s*:\s*")

return text:put(mytools:string-format("%s,%s,%s", $split))

The query creates text files that contain the following lines:

john,John Doe,45

kelly,Kelly Johnson,32

laura,Laura Smith,

phil,Phil Johnson,27

Example 5-10 Filtering Dirty Data Using a Try/Catch Expression

The XQuery try/catch expression can be used to broadly handle cases where input data is in an unexpected form, corrupted, or missing. The next query reads an input file, ages.txt, that contains a user name followed by the user’s age.

USER AGE

------------------

john 45

kelly

laura 36

phil OLD!

Notice that the first two lines of this file contain header text and that the entries for Kelly and Phil have missing and dirty age values. For each user in this file, the query writes out the user name and whether the user is over 40 or not.

import module "oxh:text";

for $line in text:collection("ages.txt")

let $split := fn:tokenize($line, "\s+")

return

try {

let $user := $split[1]

let $age := $split[2] cast as xs:integer

return

if ($age gt 40) then

text:put($user || " is over 40")

else

text:put($user || " is not over 40")

} catch * {

text:trace($err:code || " : " || $line)

}

The query generates an output text file that contains the following lines:

john is over 40

laura is not over 40

The query also generates a trace* file that contains the following lines:

err:FORG0001 : USER AGE

err:XPTY0004 : ------------------

err:XPTY0004 : kelly

err:FORG0001 : phil OLD!
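The same clean-or-trace pattern can be sketched in plain Python to show which rows fail and why. This is an analogy, not Oracle XQuery for Hadoop code: `classify_ages` is a hypothetical name, a Python `IndexError` stands in for the missing-value case (reported above as err:XPTY0004), and a `ValueError` stands in for the invalid-cast case (err:FORG0001).

```python
# Contents of ages.txt from the example, including the two header lines.
AGES = [
    "USER AGE",
    "------------------",
    "john 45",
    "kelly",
    "laura 36",
    "phil OLD!",
]


def classify_ages(lines, threshold=40):
    """Return (output_lines, trace_lines) for whitespace-separated 'user age' rows."""
    output, trace = [], []
    for ln in lines:
        fields = ln.split()
        try:
            user = fields[0]
            age = int(fields[1])  # IndexError if missing, ValueError if not a number
        except IndexError:
            trace.append(f"missing age : {ln}")
            continue
        except ValueError:
            trace.append(f"invalid age : {ln}")
            continue
        verdict = "is over" if age > threshold else "is not over"
        output.append(f"{user} {verdict} {threshold}")
    return output, trace


good, bad = classify_ages(AGES)
print(good)
print(bad)
```

As in the query, the header lines and the two dirty rows end up in the trace list, and only john and laura produce regular output.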

Run Queries

To run a query, call the oxh utility using the hadoop jar command. The following is the basic syntax:

hadoop jar $OXH_HOME/lib/oxh.jar [generic options] query.xq -output directory [-clean] [-ls] [-print] [-sharelib hdfs_dir] [-skiperrors] [-version]

Oracle XQuery for Hadoop Options

- query.xq

  Identifies the XQuery file. See "Create an XQuery Transformation."

- -clean

  Deletes all files from the output directory before running the query. If you use the default directory, Oracle XQuery for Hadoop always cleans the directory, even when this option is omitted.

- -exportliboozie directory

  Copies Oracle XQuery for Hadoop dependencies to the specified directory. Use this option to add Oracle XQuery for Hadoop to the Hadoop distributed cache and the Oozie shared library. External dependencies are also copied, so ensure that environment variables such as KVHOME, OLH_HOME, and OXH_SOLR_MR_HOME are set for use by the related adapters (Oracle NoSQL Database, Oracle Database, and Solr).

- -ls

  Lists the contents of the output directory after the query executes.

- -output directory

  Specifies the output directory of the query. The put functions of the file adapters create files in this directory. Written values are spread across one or more files. The number of files created depends on how the query is distributed among tasks. The default output directory is /tmp/oxh-user_name/output.

  See "About the Oracle XQuery for Hadoop Functions" for a description of put functions.

- -print

  Prints the contents of all files in the output directory to the standard output (your screen). When printing Avro files, each record prints as JSON text.

- -sharelib hdfs_dir

  Specifies the HDFS folder location containing Oracle XQuery for Hadoop and third-party libraries.

- -skiperrors

  Turns on error recovery, so that an error does not halt processing.

  All errors that occur during query processing are counted, and the total is logged at the end of the query. The error messages of the first 20 errors per task are also logged. See the oracle.hadoop.xquery.skiperrors properties in "Oracle XQuery for Hadoop Configuration Properties."

- -version

  Displays the Oracle XQuery for Hadoop version and exits without running a query.
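The error-recovery behavior described for -skiperrors (keep processing, count every error, log only the first 20 per task) can be pictured with a short Python sketch. This illustrates the described semantics only and says nothing about how Oracle XQuery for Hadoop implements them; `run_with_skiperrors`, `transform`, and `log_max` are hypothetical names.

```python
def run_with_skiperrors(records, transform, log_max=20):
    """Apply `transform` to each record, skipping failures.

    Returns (results, error_count, logged), where `logged` holds at most
    `log_max` error messages and `error_count` counts every failure.
    """
    results, logged, error_count = [], [], 0
    for rec in records:
        try:
            results.append(transform(rec))
        except Exception as exc:  # recover from any per-record failure
            error_count += 1
            if len(logged) < log_max:
                logged.append(f"{type(exc).__name__}: {rec!r}")
    return results, error_count, logged


# Two good records and 26 bad ones: all errors are counted, 20 are logged.
records = ["1", "x", "3"] + ["bad"] * 25
results, error_count, logged = run_with_skiperrors(records, int)
print(results, error_count, len(logged))
```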

Generic Options

You can include any generic hadoop command-line option. Oracle XQuery for Hadoop implements the org.apache.hadoop.util.Tool interface and follows the standard Hadoop methods for building MapReduce applications.

The following generic options are commonly used with Oracle XQuery for Hadoop:

- -conf job_config.xml

  Identifies the job configuration file. See "Oracle XQuery for Hadoop Configuration Properties."

  When you work with the Oracle Database or Oracle NoSQL Database adapters, you can set various job properties in this file. See "Oracle Loader for Hadoop Configuration Properties and Corresponding %oracle-property Annotations" and "Oracle NoSQL Database Adapter Configuration Properties".

- -D property=value

  Identifies a configuration property. See "Oracle XQuery for Hadoop Configuration Properties."

- -files

  Specifies a comma-delimited list of files that are added to the distributed cache. See "Accessing Data in the Hadoop Distributed Cache."

See Also:

For full descriptions of the generic options, see the Apache Hadoop commands documentation.
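When the command is assembled by a script, the ordering in the basic syntax matters: generic Hadoop options come before the query file, and the Oracle XQuery for Hadoop options come after it. The following Python sketch builds such an argument list. The helper name `oxh_command` and its parameters are hypothetical; only the options themselves come from this section.

```python
def oxh_command(query, output, generic_options=(), oxh_options=(),
                oxh_home="$OXH_HOME"):
    """Assemble the argument list for running a query.

    Generic Hadoop options precede the query file; Oracle XQuery for
    Hadoop options follow -output.
    """
    return [
        "hadoop", "jar", f"{oxh_home}/lib/oxh.jar",
        *generic_options,
        query,
        "-output", output,
        *oxh_options,
    ]


cmd = oxh_command(
    "query.xq",
    "./myout",
    generic_options=["-files", "users.txt"],
    oxh_options=["-print"],
)
print(" ".join(cmd))
```

The default `oxh_home` value is left as the literal text `$OXH_HOME` so that the printed command can be pasted into a shell; a script that runs the command directly would need to pass the real installation path instead.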

About Running Queries Locally

When developing queries, you can run them locally before submitting them to the cluster. A local run enables you to see how the query behaves on small data sets and diagnose potential problems quickly.

In local mode, relative URIs resolve against the local file system instead of HDFS, and the query runs in a single process.

To run a query in local mode:

- Set the Hadoop -jt and -fs generic arguments to local. This example runs the query described in "Example: Hello World!" in local mode:

  $ hadoop jar $OXH_HOME/lib/oxh.jar -jt local -fs local ./hello.xq -output ./myoutput -print

- Check the result file in the local output directory of the query, as shown in this example:

  $ cat ./myoutput/part-m-00000

  Hello World!

Run Queries from Apache Oozie

Apache Oozie is a workflow tool that enables you to run multiple MapReduce jobs in a specified order and, optionally, at a scheduled time. Oracle XQuery for Hadoop provides an Oozie action node that you can use to run Oracle XQuery for Hadoop queries from an Oozie workflow.

Use Oozie with Oracle XQuery for Hadoop Action

Follow these steps to execute your queries in an Oozie workflow:

Supported XML Elements

The Oracle XQuery for Hadoop action extends Oozie's Java action. It supports the following optional child XML elements with the same syntax and semantics as the Java action:

- archive

- configuration

- file

- job-tracker

- job-xml

- name-node

- prepare

See Also:

The Java action description in the Oozie Specification at

https://oozie.apache.org/docs/4.0.0/WorkflowFunctionalSpec.html#a3.2.7_Java_Action

In addition, the Oracle XQuery for Hadoop action supports the following elements:

- script: The location of the Oracle XQuery for Hadoop query file. Required.

  The query file must be in the workflow application directory. A relative path is resolved against the application directory.

  Example:

  <script>myquery.xq</script>

- output: The output directory of the query. Required.

  The output element has an optional clean attribute. Set this attribute to true to delete the output directory before the query is run. If the output directory already exists and the clean attribute is either not set or set to false, an error occurs. The output directory cannot exist when the job runs.

  Example:

  <output clean="true">/user/jdoe/myoutput</output>

Any error raised while running the query causes Oozie to perform the error transition for the action.

Example: Hello World

This example uses the following files:

- workflow.xml: Describes an Oozie action that sets two configuration values for the query in hello.xq: an HDFS file and the string World!

  The HDFS input file is /user/jdoe/data/hello.txt and contains this string:

  Hello

  See Example 5-11.

- hello.xq: Runs a query using Oracle XQuery for Hadoop.

  See Example 5-12.

- job.properties: Lists the job properties for Oozie. See Example 5-13.

To run the example, use this command:

oozie job -oozie http://example.com:11000/oozie -config job.properties -run

After the job runs, the /user/jdoe/myoutput output directory contains a file with the text "Hello World!"

Example 5-11 The workflow.xml File for Hello World

This file is named /user/jdoe/hello-oozie-oxh/workflow.xml. It uses variables that are defined in the job.properties file.

<workflow-app xmlns="uri:oozie:workflow:0.4" name="oxh-helloworld-wf">

<start to="hello-node"/>

<action name="hello-node">

<oxh xmlns="oxh:oozie-action:v1">

<job-tracker>${jobTracker}</job-tracker>

<name-node>${nameNode}</name-node>

<!--

The configuration can be used to parameterize the query.

-->

<configuration>

<property>

<name>myinput</name>

<value>${nameNode}/user/jdoe/data/src.txt</value>

</property>

<property>

<name>mysuffix</name>

<value> World!</value>

</property>

</configuration>

<script>hello.xq</script>

<output clean="true">${nameNode}/user/jdoe/myoutput</output>

</oxh>

<ok to="end"/>

<error to="fail"/>

</action>

<kill name="fail">

<message>OXH failed: [${wf:errorMessage(wf:lastErrorNode())}]</message>

</kill>

<end name="end"/>

</workflow-app>

Example 5-12 The hello.xq File for Hello World

This file is named /user/jdoe/hello-oozie-oxh/hello.xq.

import module "oxh:text";

declare variable $input := oxh:property("myinput");

declare variable $suffix := oxh:property("mysuffix");

for $line in text:collection($input)

return

text:put($line || $suffix)

Example 5-13 The job.properties File for Hello World

oozie.wf.application.path=hdfs://example.com:8020/user/jdoe/hello-oozie-oxh

nameNode=hdfs://example.com:8020

jobTracker=hdfs://example.com:8032

oozie.use.system.libpath=true

Oracle XQuery for Hadoop Configuration Properties

Oracle XQuery for Hadoop uses the generic methods of specifying configuration properties in the hadoop command. You can use the -conf option to identify configuration files, and the -D option to specify individual properties. See "Run Queries."

See Also:

The Apache Hadoop documentation for job configuration files.

| Property | Description |
|---|---|
| oracle.hadoop.xquery.lib.share | Type: String Default Value: Not defined. Description: Identifies an HDFS directory that contains the libraries for Oracle XQuery for Hadoop and third-party software. For example: http://path/to/shared/folder All HDFS files must be in the same directory. Alternatively, use the Pattern Matching: You can use pattern matching characters in a directory name. If multiple directories match the pattern, then the directory with the most recent modification timestamp is used. To specify a directory name, use alphanumeric characters and, optionally, any of the following special, pattern matching characters: Oozie libraries: The value |
| oracle.hadoop.xquery.output | Type: String Default Value: Description: Sets the output directory for the query. This property is equivalent to the |
| oracle.hadoop.xquery.scratch | Type: String Default Value: Description: Sets the HDFS temp directory for Oracle XQuery for Hadoop to store temporary files. |
| oracle.hadoop.xquery.timezone | Type: String Default Value: Client system time zone Description: The XQuery implicit time zone, which is used in a comparison or arithmetic operation when a date, time, or datetime value does not have a time zone. The value must be in the format described by the Java |
| oracle.hadoop.xquery.skiperrors | Type: Boolean Default Value: Description: Set to |
| oracle.hadoop.xquery.skiperrors.counters | Type: Boolean Default Value: Description: Set to |
| oracle.hadoop.xquery.skiperrors.max | Type: Integer Default Value: Unlimited Description: Sets the maximum number of errors that a single MapReduce task can recover from. |
| oracle.hadoop.xquery.skiperrors.log.max | Type: Integer Default Value: 20 Description: Sets the maximum number of errors that a single MapReduce task logs. |
| log4j.logger.oracle.hadoop.xquery | Type: String Default Value: Not defined Description: Configures the |