2 Planning the Installation

This chapter provides information about planning your installation.

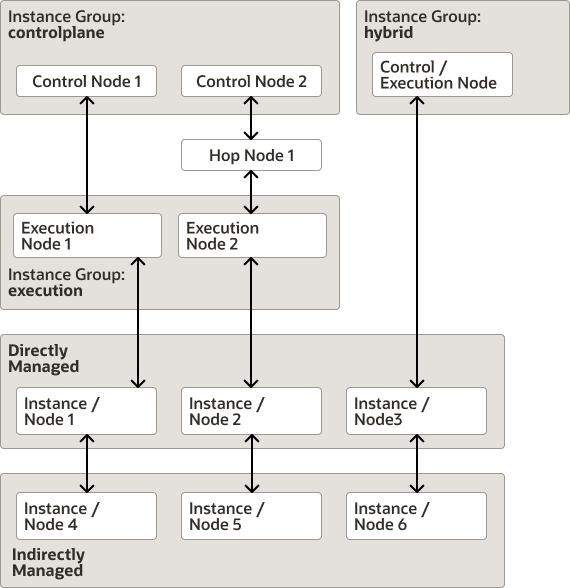

Oracle Linux Automation Manager Node Architecture

- Control Nodes: These nodes provide management functions such as launching system jobs, inventory updates, and project synchronizations. Control nodes use ansible-runner, which in turn uses Podman to run jobs within the Control Plane Execution Environment. The Control Plane Execution Environment references the olam-ee container image found on the Oracle Linux Container Registry. Control nodes do not run standard jobs.

- Execution Nodes: These nodes run standard jobs using ansible-runner, which in turn uses Podman to run playbooks within OLAM EE execution environments. The OLAM EE execution environment references the olam-ee container image found on the Oracle Linux Container Registry. Execution nodes do not run management jobs. Execution nodes can also run custom execution environments that you can create using the Builder utility. For more information about custom execution environments, see Oracle Linux Automation Manager 2.1: Private Automation Hub Installation Guide. For more information about using Podman and the Oracle Linux Container Registry, see Oracle Linux: Podman User's Guide.

- Hybrid Nodes: Hybrid nodes combine the functions of both control nodes and execution nodes into one node. Hybrid nodes are supported in single host Oracle Linux Automation Manager deployments, but not in clustered multi-host deployments.

- Hop Nodes: You can use hop nodes to connect control nodes to execution nodes within a cluster. Hop nodes cannot run playbooks and do not appear in instance groups. However, they do appear as part of the service mesh.

- Directly Managed Instances: Directly managed instances (nodes) are any virtual, physical, or software instances that Oracle Linux Automation Manager manages over an ssh connection.

- Indirectly Managed Instances: Indirectly managed instances (nodes) include any identifiable instance not directly connected to Oracle Linux Automation Manager, but managed by a device that is directly connected to Oracle Linux Automation Manager.

For example, the following image illustrates all node and instance types and some of the ways that they can be related to one another.
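The division of work between node types can be summarized in a small lookup table. The following Python sketch is illustrative only: the `NodeRole` class, the `ROLES` table, and the `can_run` helper are hypothetical names and are not part of Oracle Linux Automation Manager. The values restate what the node descriptions above say about each type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeRole:
    """Capabilities of a node type, as described in the list above."""
    runs_management_jobs: bool   # system jobs, inventory updates, project syncs
    runs_standard_jobs: bool     # playbooks run in an execution environment
    supported_in_cluster: bool   # allowed in clustered multi-host deployments


# Hypothetical lookup table; the values restate the node descriptions.
ROLES = {
    "control": NodeRole(True, False, True),
    "execution": NodeRole(False, True, True),
    "hybrid": NodeRole(True, True, False),   # single host deployments only
    "hop": NodeRole(False, False, True),     # relays traffic, runs no playbooks
}


def can_run(node_type: str, job_kind: str) -> bool:
    """Return True if the node type runs the given job kind.

    job_kind is either "management" or "standard".
    """
    role = ROLES[node_type]
    if job_kind == "management":
        return role.runs_management_jobs
    if job_kind == "standard":
        return role.runs_standard_jobs
    raise ValueError(f"unknown job kind: {job_kind}")


for name in ROLES:
    print(name, can_run(name, "management"), can_run(name, "standard"))
```

A table like this can be useful when reviewing a planned topology, because it makes it obvious that hop nodes add connectivity but no job capacity.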

Installation Options

- Standalone Installation: All components are on the same host, including the database.

Figure 2-1 Standalone Installation with Local Database

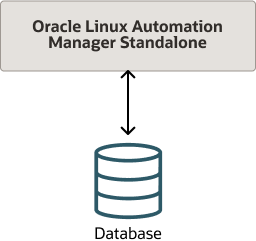

- Standalone Installation with Remote Database: All components are on the same host, with the exception of the database, which is on a remote host.

Figure 2-2 Standalone Installation with Remote Database

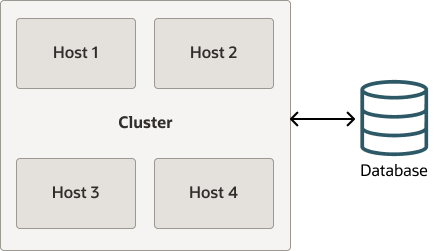

- Clustered Installation with Remote Database: A clustered installation can contain up to 20 nodes with one or more control nodes, one or more execution nodes, and one or more hop nodes, all connected to one database. For example, the following shows a cluster with two control plane nodes and two execution plane nodes, each on separate hosts, and all of them connected to a remote database.

Figure 2-3 Clustered Installation with Remote Database
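Before provisioning hosts, it can help to check a planned cluster against the constraints stated in this chapter. The following Python sketch is a planning aid under stated assumptions, not an Oracle tool: `check_cluster_plan` and `MAX_CLUSTER_NODES` are hypothetical names, and treating hop nodes as optional is an assumption based on the example cluster above, which contains none.

```python
from collections import Counter

MAX_CLUSTER_NODES = 20  # limit stated for clustered installations


def check_cluster_plan(node_types):
    """Return a list of problems found in a clustered installation plan.

    node_types is a list such as ["control", "execution", "hop"].
    The database host is not counted as a node.
    """
    counts = Counter(node_types)
    problems = []
    if len(node_types) > MAX_CLUSTER_NODES:
        problems.append(f"{len(node_types)} nodes exceeds the limit of {MAX_CLUSTER_NODES}")
    if counts["control"] < 1:
        problems.append("at least one control node is required")
    if counts["execution"] < 1:
        problems.append("at least one execution node is required")
    if counts["hybrid"] > 0:
        # Hybrid nodes are supported only in single host deployments.
        problems.append("hybrid nodes are not supported in clustered deployments")
    return problems


# The example cluster: two control plane and two execution plane nodes.
plan = ["control", "control", "execution", "execution"]
print(check_cluster_plan(plan) or "plan looks consistent")
```

An empty result means only that the plan satisfies the checks coded here. It does not validate network connectivity or database sizing.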

Service Mesh Topology Examples

There are a variety of ways that you can configure the Oracle Linux Automation Manager Service Mesh topology.

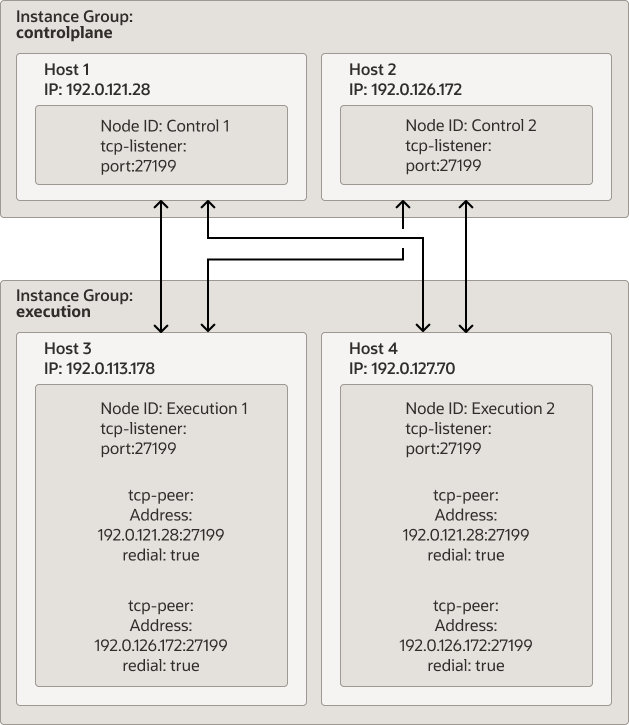

Example 1: Design the Service Mesh such that you have at least one backup control plane node and one backup execution plane node, for example, two control nodes and two execution nodes. Each execution plane node communicates with both control plane nodes in case one of the control plane nodes fails. If the first execution plane node fails, the control plane node switches to the second execution plane node.

Figure 2-4 Clustered Installation with Remote Database

- Configure the /etc/receptor/receptor.conf file with the Node ID, tcp-listener, and tcp-peer addresses as required for each node. For more information about this task, see Configuring and Starting the Control Plane Service Mesh and Configuring and Starting the Execution Plane Service Mesh.

- From a control plane node, log in as the awx user, and run the awx-manage command to do the following:

  - Provision each host's IP address or host name, and designate it as a control plane or execution plane node type. For example, the following commands provision two control plane and two execution plane nodes as illustrated in the figure above:

        awx-manage provision_instance --hostname=192.0.121.28 --node_type=control
        awx-manage provision_instance --hostname=192.0.126.72 --node_type=control
        awx-manage provision_instance --hostname=192.0.113.178 --node_type=execution
        awx-manage provision_instance --hostname=192.0.127.70 --node_type=execution

  - Register each node to either the controlplane or the execution instance group, based on the type of node you designated for each node. The awx-manage command refers to instance groups as queuenames. For example, the following commands create the controlplane and execution instance groups and associate the two control plane and two execution plane nodes with each instance group as illustrated in the figure above:

        awx-manage register_queue --queuename=controlplane --hostnames=192.0.121.28
        awx-manage register_queue --queuename=controlplane --hostnames=192.0.126.72
        awx-manage register_queue --queuename=execution --hostnames=192.0.113.178
        awx-manage register_queue --queuename=execution --hostnames=192.0.127.70

  - Register the peer relationship between each node. Note that when you register a peer relationship from a source IP address to a target IP address, the peer relationship establishes bidirectional communication. For example, the following commands register the host IP address of each execution node as the source and the tcp-peer connections, which are the control plane nodes, as the targets:

        awx-manage register_peers 192.0.113.178 --peers 192.0.121.28
        awx-manage register_peers 192.0.113.178 --peers 192.0.126.72
        awx-manage register_peers 192.0.127.70 --peers 192.0.121.28
        awx-manage register_peers 192.0.127.70 --peers 192.0.126.72

    The command must be run for each link you want to establish between nodes.

  - Register each instance group as the default queuename for either the control plane or the execution plane. This ensures that only control type jobs go to the control plane instance group and only Oracle Linux Automation Engine jobs go to the execution plane instance group. To do this, you must edit the /etc/tower/settings.py file with the DEFAULT_EXECUTION_QUEUE_NAME and the DEFAULT_CONTROL_PLANE_QUEUE_NAME parameters.

For more information about these steps, see Configuring the Control, Execution, and Hop Nodes.
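Because one command is needed per node and per link, the command list grows quickly with the size of the mesh. The following Python sketch shows one way to generate the commands for review before running them. It only builds strings and does not call awx-manage. The helper names `mesh_commands` and `queue_settings` are hypothetical. The command forms are copied from the examples above, and writing the two settings.py parameters as quoted strings is an assumption.

```python
def mesh_commands(nodes, peers):
    """Build awx-manage command lines for a planned mesh.

    nodes maps each host name or IP address to (node_type, queuename).
    peers is a list of (source, target) pairs, one per link.
    """
    cmds = []
    for host, (node_type, _queue) in nodes.items():
        cmds.append(f"awx-manage provision_instance --hostname={host} --node_type={node_type}")
    for host, (_node_type, queue) in nodes.items():
        cmds.append(f"awx-manage register_queue --queuename={queue} --hostnames={host}")
    for source, target in peers:
        cmds.append(f"awx-manage register_peers {source} --peers {target}")
    return cmds


def queue_settings(control_queue, execution_queue):
    """Build the two default queue lines for /etc/tower/settings.py.

    Assumption: both parameters take a quoted string value.
    """
    return [
        f"DEFAULT_EXECUTION_QUEUE_NAME = {execution_queue!r}",
        f"DEFAULT_CONTROL_PLANE_QUEUE_NAME = {control_queue!r}",
    ]


# The four nodes from Example 1.
nodes = {
    "192.0.121.28": ("control", "controlplane"),
    "192.0.126.72": ("control", "controlplane"),
    "192.0.113.178": ("execution", "execution"),
    "192.0.127.70": ("execution", "execution"),
}
control = [h for h, (t, _) in nodes.items() if t == "control"]
execution = [h for h, (t, _) in nodes.items() if t == "execution"]
# Each execution node is the source; each control node is a target.
peers = [(e, c) for e in execution for c in control]

cmds = mesh_commands(nodes, peers)
for line in cmds + queue_settings("controlplane", "execution"):
    print(line)
```

Deriving the peer list from the node table keeps the addresses used in register_peers consistent with the addresses that were provisioned.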

- In some cases you may have an execution node that cannot be directly connected to a control plane node. In such cases you can connect the execution node to another execution node that is connected to the control node. This introduces a risk: if the intermediate execution node fails, the connected execution node becomes inaccessible to the control node.

- In some cases you may have an execution node that cannot be directly connected to a control plane node. In such cases you can connect the execution node to a hop node that is connected to the control node. This introduces a risk: if the intermediate hop node fails, the connected execution node becomes inaccessible to the control node.

- You can establish a peer relationship between control plane nodes. This ensures that control plane nodes are always directly accessible to one another. If no such relationship is established, then control plane nodes are aware of each other only through connected execution plane nodes. For example, control A connects to control B through execution A, which is connected to both.
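The failure risks described in these topology examples can be explored with a simple connectivity model. The following Python sketch treats the mesh as an undirected graph, because peer relationships are bidirectional, and uses a breadth-first search to find which nodes remain reachable when a node fails. The node names and the `reachable` function are hypothetical, and the model says nothing about how the service mesh actually routes traffic.

```python
from collections import deque


def reachable(links, start, failed=frozenset()):
    """Return the set of nodes reachable from start over peer links.

    links is a list of (node_a, node_b) pairs, treated as bidirectional.
    Nodes listed in failed are skipped.
    """
    if start in failed:
        return set()
    neighbours = {}
    for a, b in links:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in neighbours.get(node, ()):
            if nxt not in seen and nxt not in failed:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# An execution node that is reachable only through a hop node.
hop_links = [("control-a", "hop-1"), ("hop-1", "execution-b")]
print("execution-b" in reachable(hop_links, "control-a"))             # hop node up
print("execution-b" in reachable(hop_links, "control-a", {"hop-1"}))  # hop node failed

# Control nodes that know each other only through a shared execution node.
indirect = [("control-a", "execution-a"), ("control-b", "execution-a")]
direct = indirect + [("control-a", "control-b")]
print("control-b" in reachable(indirect, "control-a", {"execution-a"}))
print("control-b" in reachable(direct, "control-a", {"execution-a"}))
```

Running the same check with each node in turn marked as failed is a quick way to spot single points of failure in a planned topology.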

Tuning Instances for Playbook Duration

Oracle Linux Automation Manager monitors jobs for status changes. Job statuses include Running, Successful, Failed, and Waiting, among others. Normally, the playbook being run triggers status changes as it makes progress. However, in some cases, a playbook gets stuck in the Running or Waiting state. When this happens, a reaper process automatically changes the state of the job from Running or Waiting to Failed. By default, the reaper changes the status of a stuck job to Failed after 60 seconds.

If you have jobs that are designed to run longer than 60 seconds, then modify the REAPER_TIMEOUT_SEC parameter in the /etc/tower/settings.py file.

Specify a time in seconds that is longer than the expected duration of your longest running playbook. This avoids scenarios where the reaper mistakenly sets a long running playbook to the Failed state because the REAPER_TIMEOUT_SEC value has expired.

A long timeout value has a cost if you run many short and long duration playbooks together. If one or more of the short duration playbooks run for longer than expected (for example, because a network outage makes it impossible for these playbooks to complete), the reaper continues to track the status of the stuck playbooks until they either get unstuck and transition to the Successful state or until the reaper timeout value is reached. This should cause no performance difficulties if only a few such failures occur. However, if hundreds of such failures occur at the same time, Oracle Linux Automation Manager wastes resources tracking these stuck jobs, which could degrade the performance of the host processing the jobs.

For more information about setting the REAPER_TIMEOUT_SEC parameter, see Setting up Hosts.
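As a rough illustration of how a timeout value might be chosen, the following Python sketch takes the expected durations of your playbooks and returns a value somewhat longer than the longest one. The function name `suggest_reaper_timeout`, the 25 percent margin, and the choice never to go below the 60 second default are all assumptions made for this example. Only the REAPER_TIMEOUT_SEC parameter name and the default value come from this chapter.

```python
import math

DEFAULT_REAPER_TIMEOUT_SEC = 60  # default stated in this chapter


def suggest_reaper_timeout(expected_durations_sec, margin=1.25):
    """Suggest a REAPER_TIMEOUT_SEC value for a set of playbooks.

    expected_durations_sec is a non-empty list of expected playbook
    run times in seconds. margin is a hypothetical safety factor so
    that the timeout is longer than the longest expected run.
    """
    longest = max(expected_durations_sec)
    suggested = math.ceil(longest * margin)
    # Assumption: never suggest less than the default value.
    return max(DEFAULT_REAPER_TIMEOUT_SEC, suggested)


# Hypothetical playbook durations: 45 seconds, 10 minutes, and 1 hour.
timeout = suggest_reaper_timeout([45, 600, 3600])
print(f"REAPER_TIMEOUT_SEC = {timeout}")
```

Keeping the margin modest reflects the trade-off described above: a larger value protects long running playbooks, but it also lengthens the time that stuck short duration playbooks are tracked.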