Oracle Linux Virtualization Manager Provider

Use the olvm provider to create Kubernetes clusters on Oracle Linux Virtualization Manager.

Kubernetes clusters are deployed to Oracle Linux Virtualization Manager using the olvm provider. The olvm provider is an implementation of the Kubernetes Cluster API. For information about the Kubernetes Cluster API, see the upstream documentation. The olvm provider uses the oVirt REST API to communicate with Oracle Linux Virtualization Manager. For information on the oVirt REST API, see the oVirt REST API upstream documentation.

Creating a cluster on Oracle Linux Virtualization Manager requires you to provide the OAuth 2.0 credentials for an existing instance of Oracle Linux Virtualization Manager. For information on OAuth 2.0, see the oVirt upstream documentation.

The olvm provider implements an infrastructure Cluster controller (OLVMCluster) Custom Resource Definition (CRD), and an infrastructure Machine controller (OLVMMachine) CRD, as Cluster API controllers. Each cluster needs one OLVMCluster Custom Resource (CR) and two OLVMMachine CRs: one for control plane nodes and one for worker nodes. The configuration to create these CRs is set in a cluster configuration file.
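As a rough illustration of the resource count per cluster, the following Python sketch lists the infrastructure CRs a single cluster needs. The InfraResource class, the expected_infra_resources function, and the role labels are hypothetical names used only for this example. They aren't part of the olvm provider or the Cluster API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class InfraResource:
    kind: str  # CR kind named in this document
    role: str  # hypothetical label, used only for illustration


def expected_infra_resources() -> List[InfraResource]:
    """List the infrastructure CRs needed for one cluster."""
    return [
        InfraResource("OLVMCluster", "cluster"),
        InfraResource("OLVMMachine", "control-plane"),
        InfraResource("OLVMMachine", "worker"),
    ]


if __name__ == "__main__":
    for resource in expected_infra_resources():
        print(f"{resource.kind}: {resource.role}")
```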

The controllers that implement the Kubernetes Cluster API run inside a Kubernetes cluster. These clusters are known as management clusters. Management clusters control the life cycle of other clusters, known as workload clusters.

Using the Kubernetes Cluster API to deploy a cluster on Oracle Linux Virtualization Manager requires that a Kubernetes cluster is available to use as the management cluster. Any running cluster can be used. To set the management cluster, set the KUBECONFIG environment variable, or use the --kubeconfig option of ocne commands. You can also set the management cluster in a configuration file. If no management cluster is available, a cluster is created automatically using the libvirt provider, with the default configuration.
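The selection of a management cluster can be sketched in Python as follows. This is a minimal illustration, not the ocne implementation. The function name resolve_management_cluster is hypothetical, the configuration file option is left out, and the order of precedence (an explicit --kubeconfig value before the KUBECONFIG environment variable) is an assumption that this document doesn't state.

```python
import os
from typing import Mapping, Optional


def resolve_management_cluster(
    kubeconfig_option: Optional[str] = None,
    env: Optional[Mapping[str, str]] = None,
) -> Optional[str]:
    """Return the kubeconfig path for the management cluster, or None.

    None stands for "no management cluster is available", the case in
    which a cluster is created automatically using the libvirt provider.
    """
    if env is None:
        env = os.environ
    # Assumption: an explicit --kubeconfig value wins over the environment.
    if kubeconfig_option:
        return kubeconfig_option
    # An unset or empty KUBECONFIG is treated as "not provided".
    return env.get("KUBECONFIG") or None


if __name__ == "__main__":
    path = resolve_management_cluster()
    if path is None:
        print("No management cluster set")
    else:
        print(f"Management cluster kubeconfig: {path}")
```

Passing the environment as a parameter keeps the function easy to exercise without changing the real process environment.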

When a workload cluster has been deployed, it's managed using the Cluster API resources in the management cluster.

A workload cluster can be its own management cluster. This is known as a self-managed cluster. In a self-managed cluster, when the workload cluster has been deployed, the Cluster API resources are migrated into the workload cluster.

The olvm provider can be deployed using IPv4 IP addresses, or as a dual stack configuration using both IPv4 and IPv6 IP addresses. IPv6 on its own can't be used.
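The address family rule can be checked before a cluster is created. The following Python sketch uses the standard ipaddress module to classify a set of addresses. The function name check_ip_families and the return labels are hypothetical, and the provider itself might validate addresses differently.

```python
import ipaddress
from typing import Iterable


def check_ip_families(addresses: Iterable[str]) -> str:
    """Return "ipv4" or "dual-stack"; raise ValueError otherwise."""
    # Collect the IP versions (4 or 6) present in the input.
    versions = {ipaddress.ip_address(a).version for a in addresses}
    if versions == {4}:
        return "ipv4"
    if versions == {4, 6}:
        return "dual-stack"
    if versions == {6}:
        raise ValueError("IPv6 on its own can't be used")
    raise ValueError("no IP addresses provided")


if __name__ == "__main__":
    # Addresses from the documentation ranges (RFC 5737 and RFC 3849).
    print(check_ip_families(["192.0.2.10", "2001:db8::10"]))
```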

Note:

Dynamic Host Configuration Protocol (DHCP) can't be used to assign IP addresses to the VMs used for Kubernetes nodes. Instead, provide a range of IP addresses for VMs to use as Kubernetes nodes, and set an IP address for the built-in Keepalived and NGINX load balancer for control plane nodes (the virtual IP).
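Because addresses are assigned statically, it can help to check the planned range before use. The following Python sketch expands an inclusive range of node addresses and tests whether a virtual IP falls inside it. The function names are hypothetical, the addresses are placeholders, and the idea that the virtual IP must sit outside the node range is an assumption made for this example, not a requirement stated in this document.

```python
import ipaddress
from typing import List, Union

IPAddress = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]


def node_addresses(first: str, last: str) -> List[IPAddress]:
    """Expand an inclusive address range into individual addresses."""
    start = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    if start.version != end.version or start > end:
        raise ValueError("invalid address range")
    # Build each address with the same class (IPv4 or IPv6) as the start.
    return [type(start)(n) for n in range(int(start), int(end) + 1)]


def vip_conflicts_with_range(virtual_ip: str, first: str, last: str) -> bool:
    """Return True if the virtual IP lies inside the node address range."""
    vip = ipaddress.ip_address(virtual_ip)
    start = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    # Addresses of different IP versions can never conflict.
    return vip.version == start.version == end.version and start <= vip <= end


if __name__ == "__main__":
    for address in node_addresses("192.0.2.10", "192.0.2.12"):
        print(address)
    print(vip_conflicts_with_range("192.0.2.20", "192.0.2.10", "192.0.2.12"))
```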

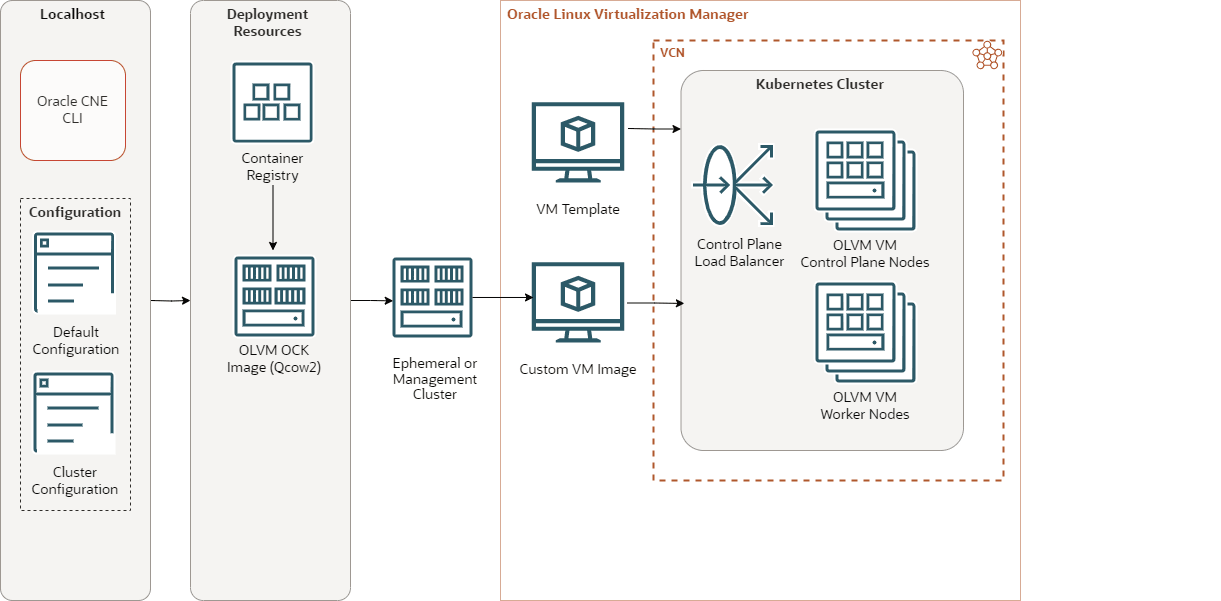

Figure 3-3 Oracle Linux Virtualization Manager Cluster

The Oracle Linux Virtualization Manager cluster architecture has the following components:

- CLI: The CLI used to create and manage Kubernetes clusters. The ocne command.
- Default configuration: A YAML file that contains configuration for all ocne commands.
- Cluster configuration: A YAML file that contains configuration for a specific Kubernetes cluster.
- Container registry: A container registry used to pull the images used to create nodes in a Kubernetes cluster. The default is the Oracle Container Registry.
- Oracle Linux Virtualization Manager OCK image: The CLI is used to create this image, based on the OCK image, pulled from the container registry. The CLI is then used to upload this image to Oracle Linux Virtualization Manager.
- Ephemeral or management cluster: A Kubernetes cluster used to perform a CLI command. This cluster might also be used to start the cluster services, or to manage the cluster.
- VM template: An Oracle Linux Virtualization Manager VM template, used to create VMs in the Kubernetes cluster.
- Custom VM image: The OCK image is loaded into Oracle Linux Virtualization Manager as a custom VM image.
- Control plane load balancer: A network load balancer used for High Availability (HA) of the control plane nodes.
- Control plane nodes: VMs running control plane nodes in a Kubernetes cluster.
- Worker nodes: VMs running worker nodes in a Kubernetes cluster.