7 OCI Provider

Learn about the oci provider used to create Kubernetes clusters on Oracle Cloud Infrastructure (OCI).

Kubernetes clusters are deployed to OCI using the oci provider. The oci provider uses the Kubernetes Cluster API Provider for OCI to perform the deployment. This provider is an implementation of the Kubernetes Cluster API. The Kubernetes Cluster API is implemented as Kubernetes Custom Resources (CRs) that are serviced by applications running in a Kubernetes cluster. The Kubernetes Cluster API has a large interface and is explained in the upstream documentation. For information on the Kubernetes Cluster API, see the Kubernetes Cluster API documentation. For information on the Cluster API implementation for OCI, see the Kubernetes Cluster API Provider for OCI documentation.

Creating a cluster on OCI requires you to provide the credentials to an existing tenancy. The required privileges depend on the configuration of the cluster that's created. For some deployments, it might be enough to have the privileges to create and destroy compute instances. For other deployments, more privilege might be required.

Clusters are deployed into specific compartments. The oci provider requires that a compartment is available. A compartment can be specified either by its Oracle Cloud Identifier (OCID) or by its path in the compartment hierarchy, for example, parentcompartment/mycompartment.
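The two ways of naming a compartment can be told apart mechanically. The following is a minimal sketch, not the oci provider's own logic: the `parse_compartment` function and `CompartmentRef` type are hypothetical names, and the rule that any value starting with `ocid1.` is an OCID is an assumption based on the general OCID format. The OCID shown in the usage lines is a placeholder, not a real identifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CompartmentRef:
    """Hypothetical holder for a compartment reference."""
    kind: str             # "ocid" or "path"
    value: str            # the reference as given, with whitespace stripped
    segments: tuple = ()  # path components, outermost first; empty for OCIDs


def parse_compartment(ref: str) -> CompartmentRef:
    """Classify a compartment reference as an OCID or a hierarchy path."""
    ref = ref.strip()
    if not ref:
        raise ValueError("compartment reference is empty")
    # Assumption: every OCID starts with the "ocid1." version prefix.
    if ref.startswith("ocid1."):
        return CompartmentRef("ocid", ref)
    # Otherwise treat the value as a slash-separated path in the hierarchy.
    segments = tuple(part for part in ref.split("/") if part)
    if not segments:
        raise ValueError(f"not a usable compartment path: {ref!r}")
    return CompartmentRef("path", ref, segments)


by_path = parse_compartment("parentcompartment/mycompartment")
by_ocid = parse_compartment("ocid1.compartment.oc1..exampleuniqueID")
print(by_path.kind, by_path.segments)
print(by_ocid.kind)
```

A path must still be resolved against the tenancy to find the matching compartment, which this sketch does not attempt.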

The controllers that implement the Kubernetes Cluster API run inside a Kubernetes cluster. These clusters are known as management clusters. Management clusters control the life cycle of other clusters, known as workload clusters.

Using the Kubernetes Cluster API to deploy a cluster on OCI requires that a Kubernetes cluster is available. Any running cluster can be used. To set the cluster to use, set the KUBECONFIG environment variable, or use the --kubeconfig option of ocne commands. You can also set this cluster using a configuration file. If no cluster is available, a cluster is created automatically using the libvirt provider, with the default configuration. This cluster is known as a bootstrap cluster, or an ephemeral cluster, depending on the context.
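The selection of the cluster that runs the Cluster API controllers can be sketched as a simple fallback chain. This is an illustration only: the `select_cluster` function is hypothetical, and the precedence shown (command-line option, then environment variable, then configuration file) is an assumption, because the text above lists the three sources without ranking them. Only the final fallback to a bootstrap cluster comes directly from the description.

```python
import os
from typing import Mapping, Optional, Tuple


def select_cluster(
    cli_kubeconfig: Optional[str] = None,
    environ: Optional[Mapping[str, str]] = None,
    config_file_kubeconfig: Optional[str] = None,
) -> Tuple[str, Optional[str]]:
    """Return (source, kubeconfig path) for the cluster to use.

    The precedence below is an assumed order, not documented behavior.
    """
    env = os.environ if environ is None else environ
    if cli_kubeconfig:                  # value of the --kubeconfig option
        return ("option", cli_kubeconfig)
    if env.get("KUBECONFIG"):           # KUBECONFIG environment variable
        return ("environment", env["KUBECONFIG"])
    if config_file_kubeconfig:          # value read from a configuration file
        return ("config-file", config_file_kubeconfig)
    # No cluster was named: a bootstrap (ephemeral) cluster is created
    # with the libvirt provider, so there is no kubeconfig path yet.
    return ("bootstrap", None)


print(select_cluster(environ={"KUBECONFIG": "/home/user/.kube/config"}))
print(select_cluster(environ={}))
```

Passing `environ` explicitly keeps the function easy to test without changing the real process environment.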

When a cluster has been deployed, it's managed using the Kubernetes Cluster API resources in the management cluster.

A workload cluster can be its own management cluster. This is known as a self-managed cluster. When the cluster has been deployed by a bootstrap cluster, the Kubernetes Cluster API resources are migrated from the bootstrap cluster into the new cluster.
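The migration into a self-managed cluster can be pictured with a toy model. The sketch below is purely conceptual and calls no Kubernetes API: the `Cluster` class and the `migrate` and `is_self_managed` functions are hypothetical names, and the Cluster API resources are reduced to a set of cluster names.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    name: str
    # Names of the clusters whose Cluster API resources are stored here.
    manages: set = field(default_factory=set)


def migrate(bootstrap: Cluster, workload: Cluster) -> None:
    """Move the workload cluster's resources from the bootstrap cluster into itself."""
    if workload.name not in bootstrap.manages:
        raise ValueError(f"{bootstrap.name} does not manage {workload.name}")
    bootstrap.manages.remove(workload.name)
    workload.manages.add(workload.name)


def is_self_managed(cluster: Cluster) -> bool:
    """A cluster is self-managed when it holds its own Cluster API resources."""
    return cluster.name in cluster.manages


# The bootstrap cluster deploys the workload cluster and manages it at first.
bootstrap = Cluster("bootstrap", {"mycluster"})
workload = Cluster("mycluster")

migrate(bootstrap, workload)
print(is_self_managed(workload), bootstrap.manages)
```

After the migration the bootstrap cluster holds no resources for the workload cluster, which is why it can be treated as ephemeral.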

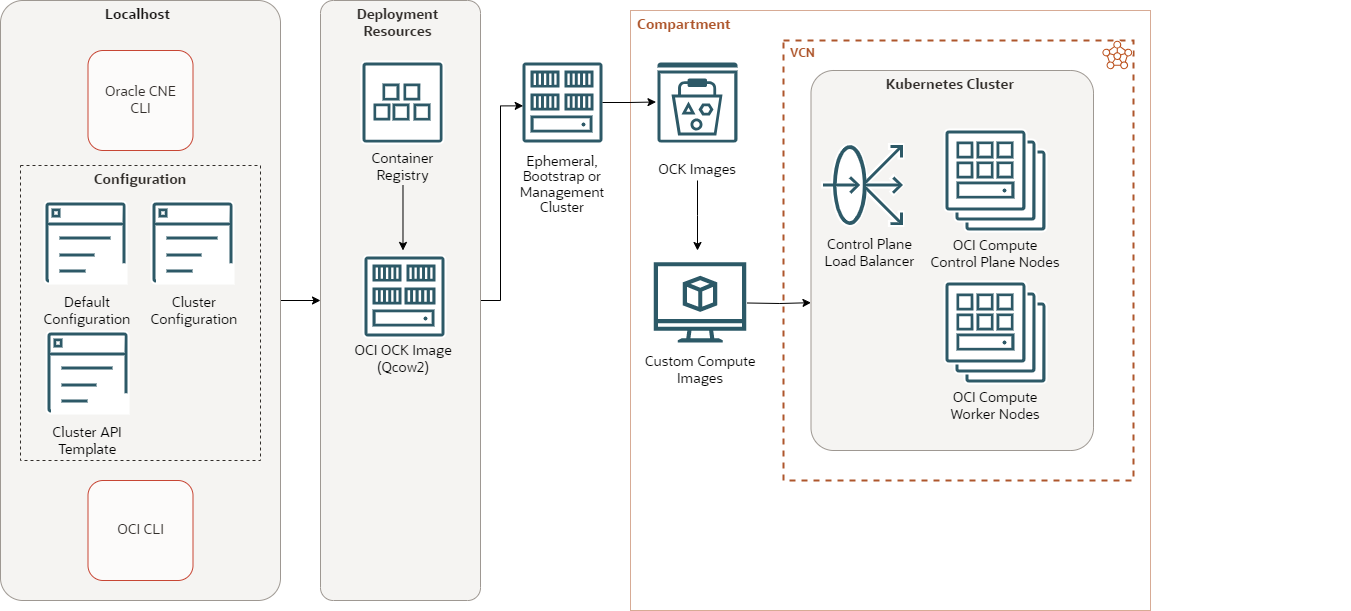

Figure 7-1 OCI Cluster

The OCI cluster architecture has the following components:

- CLI: The ocne command, which is used to create and manage Kubernetes clusters.
- Default configuration: A YAML file that contains configuration for all ocne commands.
- Cluster configuration: A YAML file that contains configuration for a specific Kubernetes cluster.
- Cluster API template: A YAML file that contains Cluster Resources for the Kubernetes Cluster API to create a cluster.
- OCI CLI: The OCI CLI is installed on the localhost, including the configuration to read and write to the tenancy and compartment.
- Container registry: A container registry used to pull the images used to create nodes in a Kubernetes cluster. The default is the Oracle Container Registry.
- OCI OCK image: The CLI is used to create this image, based on the OCK image, pulled from the container registry. The CLI is then used to upload this image to OCI.
- Ephemeral, bootstrap, or management cluster: A Kubernetes cluster used to perform a CLI command. This cluster might also be used to bootstrap the cluster services, or to manage the cluster.
- Compartment: An OCI compartment in which the cluster is created.
- OCK images: The OCK image is loaded into an Object Storage bucket. When the upload is complete, a custom compute image is created from the OCK image.
- Custom compute images: The OCK image is available as a custom compute image and can be used to create compute nodes in a Kubernetes cluster.
- Control plane load balancer: A network load balancer used for High Availability (HA) of the control plane nodes.
- Control plane nodes: Compute instances running control plane nodes in a Kubernetes cluster.
- Worker nodes: Compute instances running worker nodes in a Kubernetes cluster.