6 libvirt Provider

Learn about the libvirt provider used to create KVM-based Kubernetes clusters with libvirt.

The libvirt provider is the default cluster provider, and can be used to provision Kubernetes clusters using Kernel-based Virtual Machines (KVM). The default KVM stack includes libvirt, and is included, by default, with Oracle Linux.

Note:

We recommend the Oracle KVM stack as this KVM version offers many more features for Oracle Linux systems. For information on the Oracle KVM stack and libvirt, see the Oracle Linux: KVM User's Guide.

The system used to create libvirt clusters must be a 64-bit x86 or 64-bit ARM system running Oracle Linux 8 or 9, and include the Unbreakable Enterprise Kernel Release 7 (UEK R7).
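The host requirements above can be checked from a shell before creating a cluster. The following is a minimal sketch, not an Oracle-supplied tool: the helper names `is_supported_arch` and `is_supported_release` are hypothetical, and the script assumes that `uname -m` reports `x86_64` or `aarch64` on supported hardware and that `/etc/os-release` uses `ID=ol` on Oracle Linux.

```shell
# Hypothetical helpers for checking the documented host requirements.

# Succeeds if the architecture string is 64-bit x86 or 64-bit ARM.
is_supported_arch() {
  case "$1" in
    x86_64|aarch64) return 0 ;;
    *) return 1 ;;
  esac
}

# Succeeds if the release string is an Oracle Linux 8 or 9 version.
is_supported_release() {
  case "$1" in
    8|8.*|9|9.*) return 0 ;;
    *) return 1 ;;
  esac
}

arch="$(uname -m)"
if is_supported_arch "$arch"; then
  echo "architecture OK: $arch"
else
  echo "unsupported architecture: $arch"
fi

# Read the OS identity in a subshell so its variables do not leak.
if [ -r /etc/os-release ]; then
  (
    . /etc/os-release
    if [ "${ID:-}" = "ol" ] && is_supported_release "${VERSION_ID:-}"; then
      echo "OS OK: Oracle Linux ${VERSION_ID}"
    else
      echo "OS is not Oracle Linux 8 or 9: ${ID:-unknown} ${VERSION_ID:-}"
    fi
  )
fi

# Print the running kernel so you can confirm it is a UEK R7 kernel.
uname -r
```

The script only reports what it finds. Confirming that the printed kernel version is a UEK R7 kernel is left to the reader.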

The libvirt provider provisions Kubernetes clusters using libvirt on a single host, and is useful for creating and destroying Kubernetes clusters for testing and development. Although the libvirt provider is intended for test and development clusters, the cluster configuration it deploys is production worthy.

Important:

As all libvirt cluster nodes are running on a single host, be aware that if the host running the cluster goes down, so do all the cluster nodes.

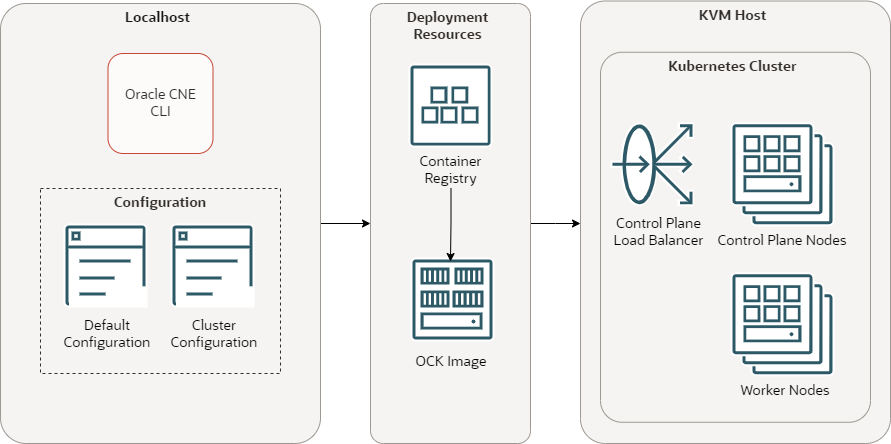

Figure 6-1 libvirt Cluster Architecture

The libvirt cluster architecture has the following components:

- CLI: The CLI used to create and manage Kubernetes clusters. The ocne command.
- Default configuration: A YAML file that contains configuration for all ocne commands.
- Cluster configuration: A YAML file that contains configuration for a specific Kubernetes cluster.
- Container registry: A container registry used to pull the images used to create nodes in a Kubernetes cluster. The default is the Oracle Container Registry.
- OCK image: The OCK image pulled from the container registry, which is used to create Kubernetes nodes.
- Control plane load balancer: A load balancer used for High Availability (HA) of the control plane nodes.
- Control plane nodes: Control plane nodes in a Kubernetes cluster.
- Worker nodes: Worker nodes in a Kubernetes cluster.

The libvirt provider is also used to provision Kubernetes clusters when using some CLI commands. This cluster type is often referred to as an ephemeral cluster. An ephemeral cluster is a single node cluster that lives for a short time and is created and destroyed as needed by the CLI. An existing cluster can also be used as an ephemeral cluster by including the location of a kubeconfig file as an option with CLI commands.

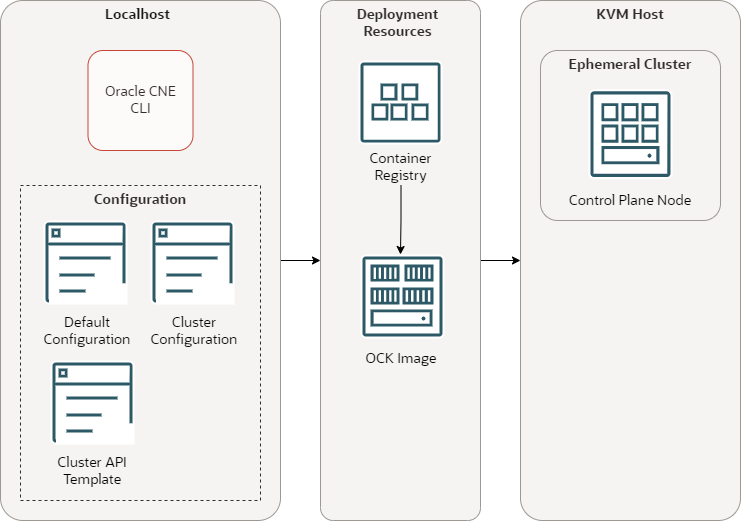

Figure 6-2 libvirt Ephemeral Cluster

The ephemeral cluster architecture has the following components:

- CLI: The CLI used to create and manage Kubernetes clusters. The ocne command.
- Default configuration: A YAML file that contains configuration for all ocne commands.
- Cluster configuration: A YAML file that contains configuration for a specific Kubernetes cluster.
- Cluster API template: A YAML file that contains Cluster Resources for the Kubernetes Cluster API to create a cluster.
- Container registry: A container registry used to pull the images used to create nodes in a Kubernetes cluster. The default is the Oracle Container Registry.
- OCK image: The OCK image pulled from the container registry, which is used to create Kubernetes nodes.
- Ephemeral cluster: A temporary Kubernetes cluster used to perform a CLI command. The default for this is a single node cluster created with the libvirt provider on the localhost. This might also be an external cluster.

Single and multi node clusters can be created on Oracle Linux 8 and 9, on both 64-bit x86 and 64-bit ARM systems. Because all cluster nodes run on a single host, it's not possible to create hybrid clusters. However, it's possible to use an ARM system to create a remote cluster on x86 hardware and, conversely, x86 hardware can be used to create a remote cluster on ARM.

The libvirt provider requires the target system to be running libvirt and requires that the user be configured to have access to libvirt. Oracle CNE implements a libvirt connection using the legacy single-socket client. If local libvirt clusters are created, the UNIX domain socket is used.

To create Kubernetes clusters on a remote system, enable a remote transport mechanism for libvirt. We recommend you set up SSH key-based authentication to the remote system as a normal user, and that you configure the user with the privilege to run libvirt. You can, however, use any of the libvirt remote transport options. For more information on libvirt remote transports, see the upstream libvirt documentation.

Most remote cluster deployments leverage the qemu+ssh transport, which uses SSH to tunnel the UNIX domain socket back to the CLI. Oracle CNE doesn't configure the libvirt transports or system services. This must be set up correctly, according to the documentation for the OS.
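To make the local and remote connection cases concrete, the following sketch builds the corresponding libvirt connection URIs. The helper names `libvirt_uri` and `check_libvirt_access`, the user name `myuser`, and the host `myhost.example.com` are placeholders introduced for illustration. The URI forms `qemu:///system` and `qemu+ssh://user@host/system` follow the upstream libvirt URI conventions for a privileged instance.

```shell
# Hypothetical helper: build a libvirt connection URI.
#   libvirt_uri            -> local privileged instance over the UNIX domain socket
#   libvirt_uri USER HOST  -> remote privileged instance tunneled over SSH
libvirt_uri() {
  if [ "$#" -eq 0 ]; then
    printf 'qemu:///system\n'
  else
    printf 'qemu+ssh://%s@%s/system\n' "$1" "$2"
  fi
}

# Hypothetical helper: confirm the current user can reach libvirt at a URI.
# Defined here but not called, because it needs virsh and a running libvirt.
check_libvirt_access() {
  virsh -c "$1" list --all
}

local_uri="$(libvirt_uri)"
remote_uri="$(libvirt_uri myuser myhost.example.com)"

echo "local:  $local_uri"
echo "remote: $remote_uri"
```

Running `check_libvirt_access "$remote_uri"` on a configured system is a quick way to verify SSH key-based authentication and libvirt privileges before creating a cluster. The Oracle CNE CLI is understood to accept such a URI through a session option on `ocne cluster start`; confirm the exact option name in Oracle Cloud Native Environment: CLI.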

Clusters created with the libvirt provider create a tunnel so the cluster can be accessed through a port on the host where the cluster is deployed. The port range starts at 6443 and increments from there. As clusters are deleted, the ports are freed. If a cluster is created on a remote system, ensure that a range of ports, starting at 6443, is accessible through the system firewall.
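As a rough illustration of the port arithmetic, the sketch below computes the range needed for a given number of concurrent clusters and prints matching firewalld commands without running them. It assumes one port per cluster, allocated sequentially from 6443, and a host that uses firewalld; the helper names `cluster_port` and `port_range` and the cluster count of 5 are hypothetical.

```shell
BASE_PORT=6443

# Port for the Nth cluster on the host (0-based), assuming sequential allocation.
cluster_port() {
  echo $(( BASE_PORT + $1 ))
}

# firewalld port specification covering COUNT clusters, for example 6443-6447/tcp.
port_range() {
  echo "${BASE_PORT}-$(( BASE_PORT + $1 - 1 ))/tcp"
}

# Print, rather than run, the commands that would open ports for 5 clusters.
echo "sudo firewall-cmd --permanent --add-port=$(port_range 5)"
echo "sudo firewall-cmd --reload"
```

Because ports are freed when clusters are deleted, size the range for the largest number of clusters expected to exist on the host at the same time.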

Important:

You can disable the firewall in a testing environment; however, we don't recommend this for production systems.

Use the ocne cluster start command to create a Kubernetes cluster using the libvirt provider. As this provider is the default, you don't need to specify the provider type. For example:

    ocne cluster start

This command creates a single node cluster using all the default options, and installs the UI and application catalog.

You can add extra command line options to the ocne cluster start command to set up the cluster with non-default settings, such as the number of control plane and worker nodes. For information on these command options, see Oracle Cloud Native Environment: CLI.
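The following dry-run sketch shows what such a command line might look like. It only prints the command. The option names `--cluster-name`, `--control-plane-nodes`, and `--worker-nodes` are assumptions based on the CLI reference and should be confirmed with `ocne cluster start --help`; the helper `start_cmd` and the cluster name `mycluster` are hypothetical.

```shell
# Hypothetical helper: compose an "ocne cluster start" command line.
#   $1 = cluster name, $2 = control plane node count, $3 = worker node count
# The option names are assumptions; verify them against the CLI help output.
start_cmd() {
  printf 'ocne cluster start --cluster-name %s --control-plane-nodes %s --worker-nodes %s\n' \
    "$1" "$2" "$3"
}

# Print the command for a cluster with 3 control plane nodes and 2 worker nodes.
start_cmd mycluster 3 2
```

Printing the command first makes it easy to review the node counts before any virtual machines are created on the host.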

You can also customize the default settings by adding options to the default configuration file, or a configuration file specific to the cluster you want to create. For information on these configuration files, see Cluster Configuration Files and Oracle Cloud Native Environment: CLI.

For clusters started on systems with access to privileged libvirt instances, two kubeconfig files are created when you create a cluster, one for access to the local cluster, and one that can be used on the remote cluster host.