Abstract

This guide describes how to provision and manage Oracle WebCenter Portal instances on Kubernetes.

Preface

This guide outlines the process for provisioning and managing Oracle WebCenter Portal instances within a Kubernetes environment. It serves as a resource for users seeking to effectively create and manage these instances on Kubernetes.

Audience

This guide is intended for users who want to create and manage Oracle WebCenter Portal instances on Kubernetes.

Oracle WebCenter Portal on Kubernetes

The WebLogic Kubernetes Operator, which supports running WebLogic Server and Fusion Middleware Infrastructure domains on Kubernetes, facilitates the deployment of Oracle WebCenter Portal in a Kubernetes environment.

In this release, the Oracle WebCenter Portal domain is structured around the domain on a persistent volume model only, where the domain home is stored in a persistent volume.

This release includes support for the Portlet Managed Server, enabling the deployment and management of portlet applications within the Oracle WebCenter Portal environment.

The operator provides several key features to assist in deploying and managing the Oracle WebCenter Portal domain in Kubernetes. These features enable you to:

- Create Oracle WebCenter Portal instances in a Kubernetes persistent volume (PV), which can be hosted on a Network File System (NFS) or other types of Kubernetes volumes.

- Start servers based on declarative startup parameters and desired states.

- Expose Oracle WebCenter Portal services for external access.

- Scale the Oracle WebCenter Portal domain by starting and stopping Managed Servers on demand or through integration with a REST API.

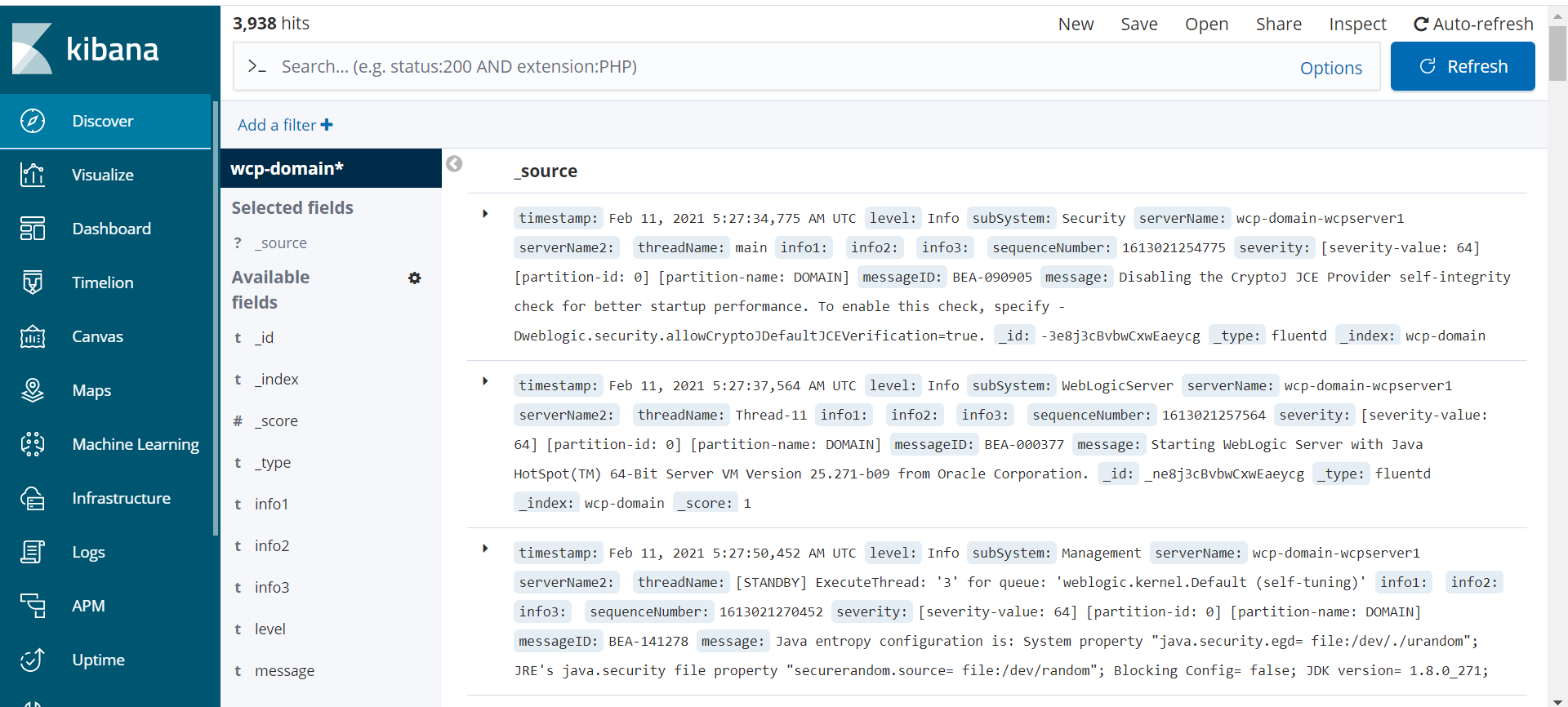

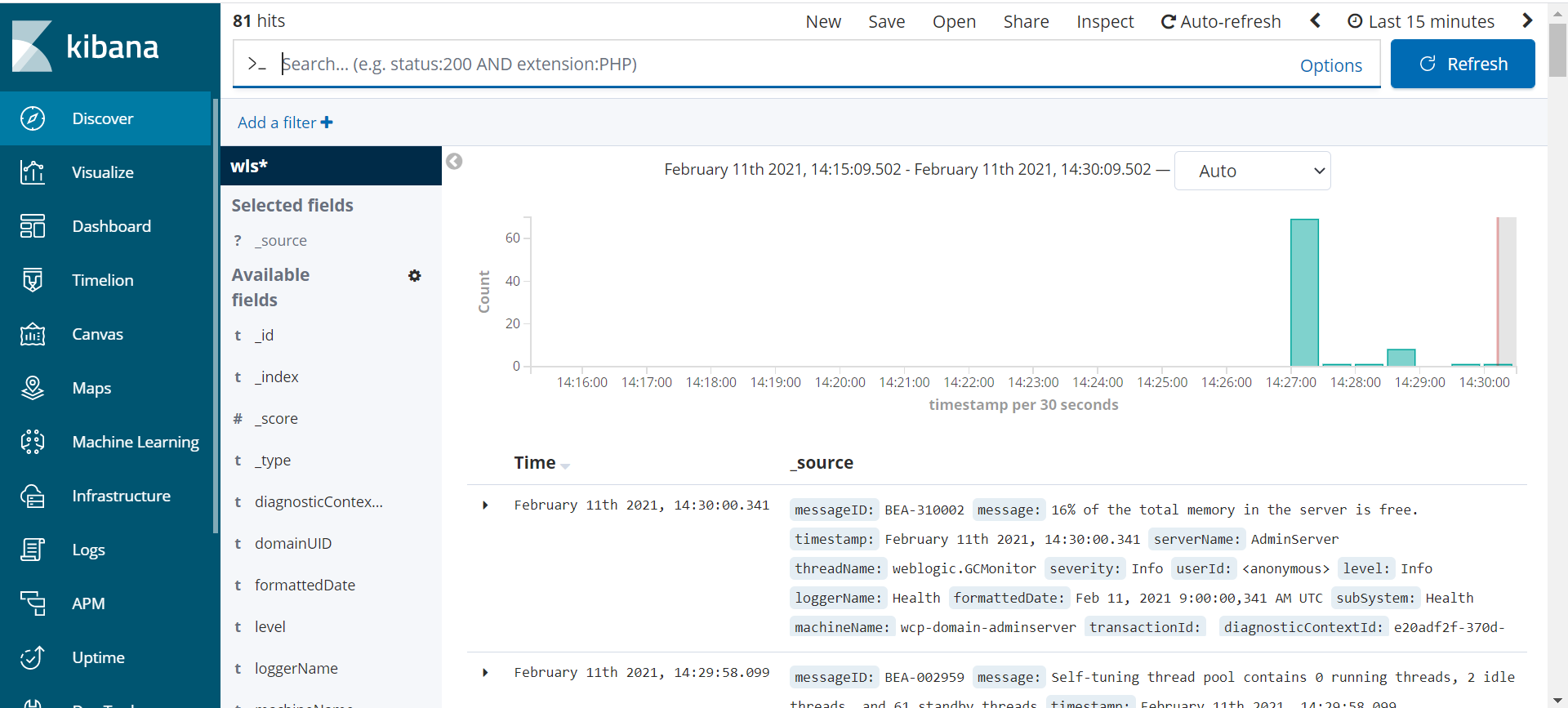

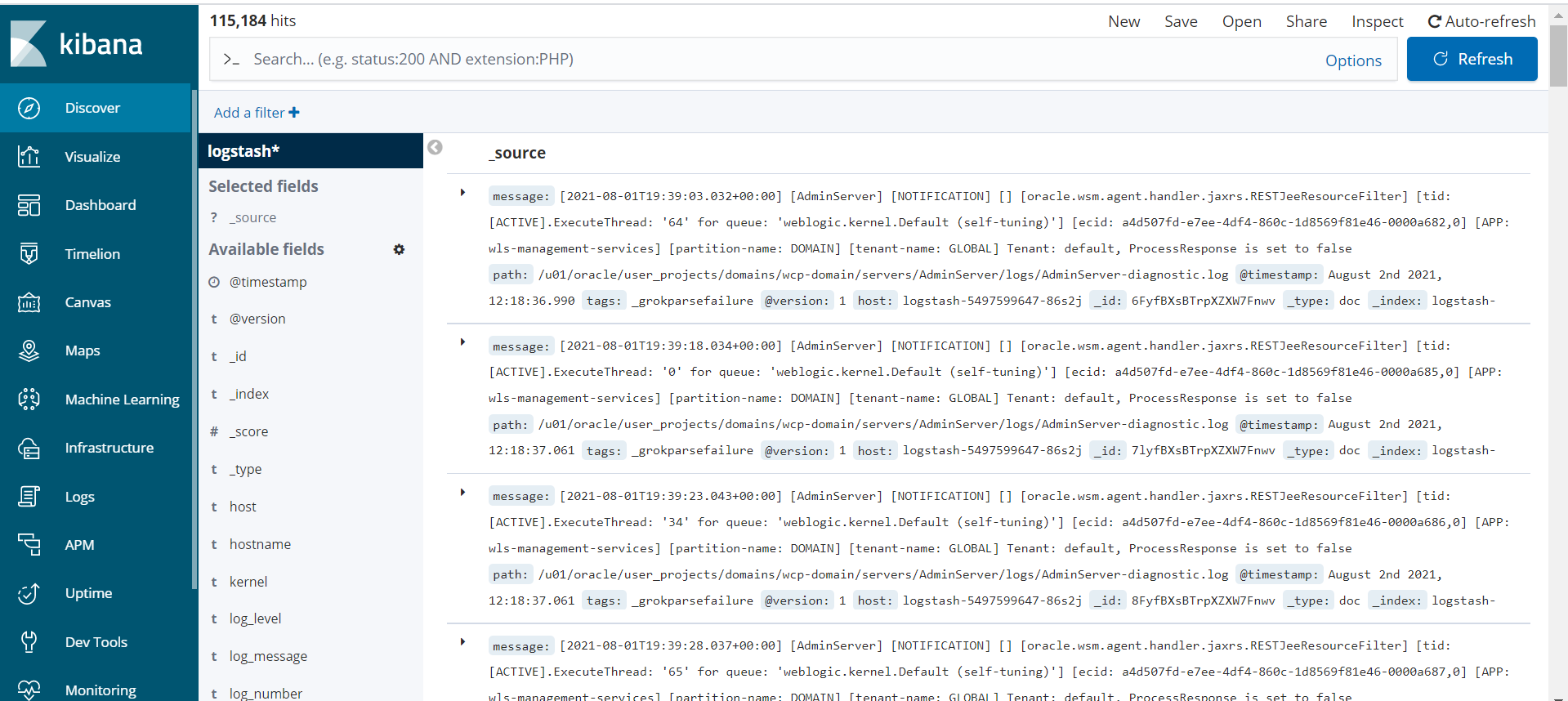

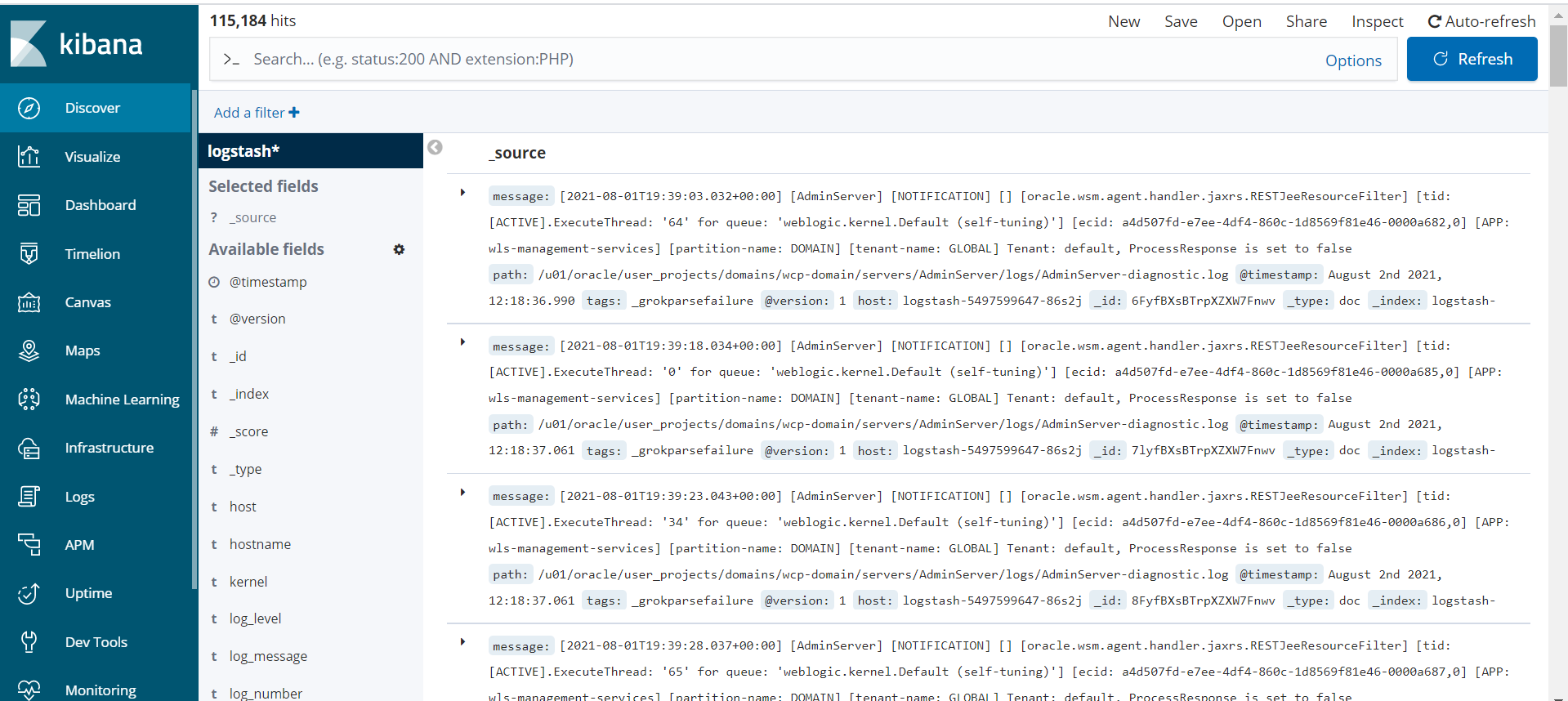

- Publish logs from both the operator and WebLogic Server to Elasticsearch for interaction via Kibana.

- Monitor the Oracle WebCenter Portal instance using Prometheus and Grafana.

Current release

The current release for the Oracle WebCenter Portal domain deployment on Kubernetes is 24.4.3. This release uses the WebLogic Kubernetes Operator version 4.2.9.

Recent changes and known issues

See the Release Notes for recent changes and known issues with the Oracle WebCenter Portal domain deployment on Kubernetes.

About this documentation

This documentation includes sections targeted to different audiences. To help you find what you are looking for more easily, please use this table of contents:

Quick Start explains how to quickly get an Oracle WebCenter Portal domain instance running with the default settings. It is intended for development and test purposes only.

Install Guide and Administration Guide provide detailed information about all aspects of using the Kubernetes operator including:

- Installing and configuring the operator

- Using the operator to create and manage an Oracle WebCenter Portal domain

- Configuring WebCenter Portal for search functionality

- Setting up Kubernetes load balancers

- Configuring Prometheus and Grafana for monitoring WebCenter Portal

- Setting up logging with Elasticsearch

Release Notes

Recent changes

Review the release notes for Oracle WebCenter Portal on Kubernetes.

| Date | Version | Change |
|---|---|---|
| December 2024 | 14.1.2.0.0 GitHub release version 24.4.3 | First release of Oracle WebCenter Portal on Kubernetes 14.1.2.0.0. |

Install Guide

Install the WebLogic Kubernetes operator and prepare and deploy the Oracle WebCenter Portal domain.

Requirements and limitations

Understand the system requirements and limitations for deploying and running Oracle WebCenter Portal with the WebLogic Kubernetes operator.

Introduction

This document outlines the specific considerations for deploying and running a WebCenter Portal domain using the WebLogic Kubernetes Operator. Apart from the considerations mentioned here, the WebCenter Portal domain operates similarly to Fusion Middleware Infrastructure and WebLogic Server domains.

In this release, the WebCenter Portal domain is based on the domain on a persistent volume model, where the domain resides in a persistent volume (PV).

System Requirements

Release 24.4.3 has the following system requirements:

- Kubernetes: Versions 1.24.0+, 1.25.0+, 1.26.2+, 1.27.2+, 1.28.2+ and 1.29.1+ (check with kubectl version).

- Networking: Flannel v0.13.0-amd64 or later (verify with docker images | grep flannel), or Calico v3.16.1+.

- Helm: Version 3.10.2+ (verify with helm version --client --short).

- Container Runtime: Docker 19.03.11+ (check with docker version) or CRI-O 1.20.2+ (check with crictl version | grep RuntimeVersion).

- WebLogic Kubernetes Operator: Version 4.2.9 (see the operator release notes).

- Oracle WebCenter Portal: Version 14.1.2.0 image.
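The minimum versions listed above can be compared against what a host reports with a small helper. The following is a minimal sketch and is not part of the Oracle deployment scripts: the function name version_ge is hypothetical, and it assumes GNU sort with the -V (version sort) option is available.

```shell
#!/bin/sh
# Hypothetical helper (not part of the Oracle deployment scripts).
# Succeeds when version $1 is greater than or equal to version $2.
# Assumes GNU sort, which provides -V for version-aware ordering.
version_ge() {
  have="${1#v}"   # drop a leading "v", as in v3.10.2
  need="${2#v}"
  # The lower of the two versions sorts first; if that is the required
  # minimum, the reported version is at least as new.
  lowest="$(printf '%s\n%s\n' "$need" "$have" | sort -V | head -n 1)"
  [ "$lowest" = "$need" ]
}

# Example with literal values: a reported Helm version against the minimum.
if version_ge "v3.12.0" "3.10.2"; then
  echo "Helm version is sufficient"
fi
```

The version strings passed in would come from the verification commands listed with each requirement. Extracting a bare version number from their output is left out here because the output format varies by tool and release.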

Proxy Setup: The following proxy configurations are used to pull required binaries and source code from the respective repositories:

export NO_PROXY="localhost,127.0.0.0/8,$(hostname -i),.your-company.com,/var/run/docker.sock"

export no_proxy="localhost,127.0.0.0/8,$(hostname -i),.your-company.com,/var/run/docker.sock"

export http_proxy=http://www-proxy-your-company.com:80

export https_proxy=http://www-proxy-your-company.com:80

export HTTP_PROXY=http://www-proxy-your-company.com:80

export HTTPS_PROXY=http://www-proxy-your-company.com:80

Limitations

Compared to running a WebLogic Server domain in Kubernetes using the operator, the following limitations currently exist for a WebCenter Portal domain:

- The Domain in image model is not supported in this version of the operator. Additionally, WebLogic Deploy Tooling (WDT) based deployments are currently not supported.

- Only configured clusters are supported; dynamic clusters are not supported on WebCenter Portal domains. Note that you can still utilize all scaling features; you just need to define the maximum size of your cluster at the time of creating a domain.

- At present, WebCenter Portal does not run on non-Linux containers.

- Deploying and running a WebCenter Portal domain is supported only in operator version 4.2.9.

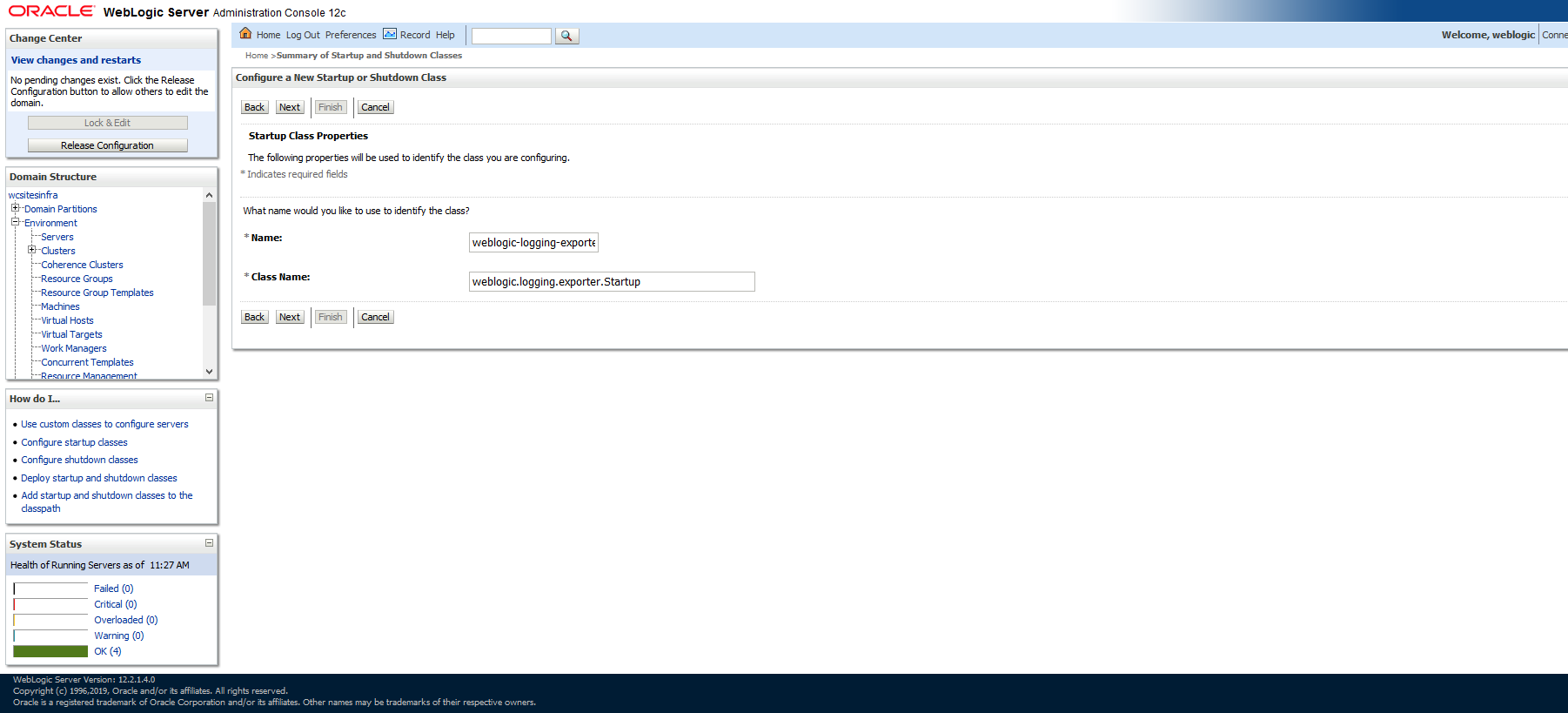

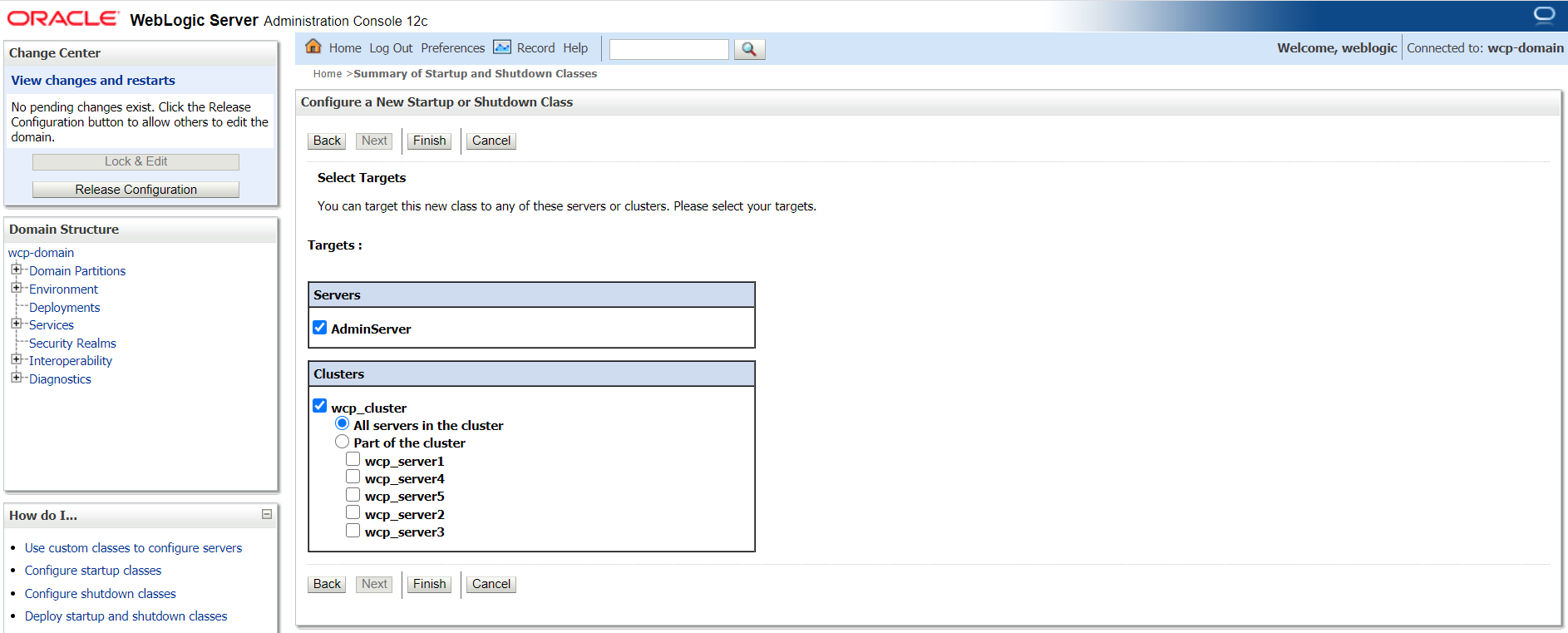

- The WebLogic Logging Exporter project has been archived. Users are encouraged to use Fluentd or Logstash.

- The WebLogic Monitoring Exporter currently supports only the WebLogic MBean trees. Support for JRF MBeans has not yet been added.

Prepare Your Environment

Prepare for creating the Oracle WebCenter Portal domain. This preparation includes, but is not limited to, creating the required secrets, persistent volume, volume claim, and database schema.

Set up the environment, including establishing a Kubernetes cluster and the WebLogic Kubernetes Operator.

Set Up the Code Repository to Deploy Oracle WebCenter Portal Domain

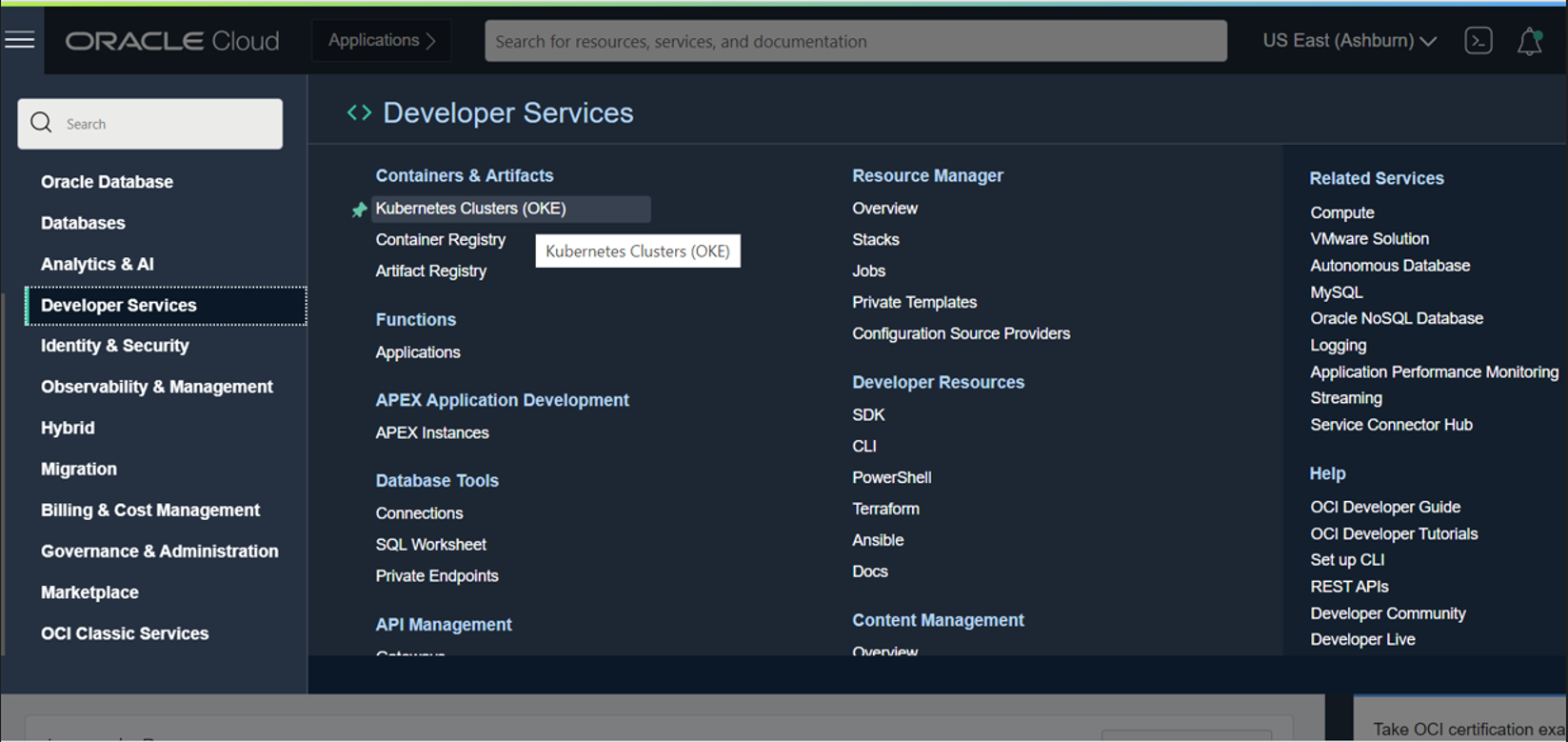

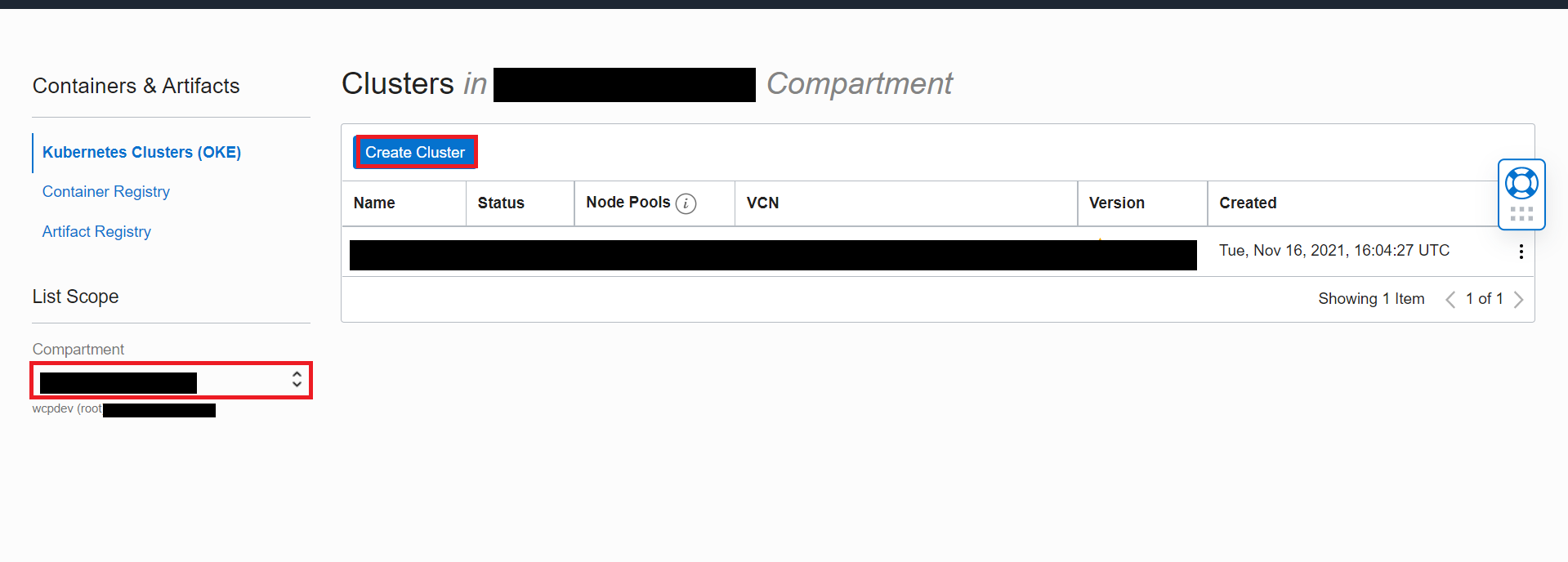

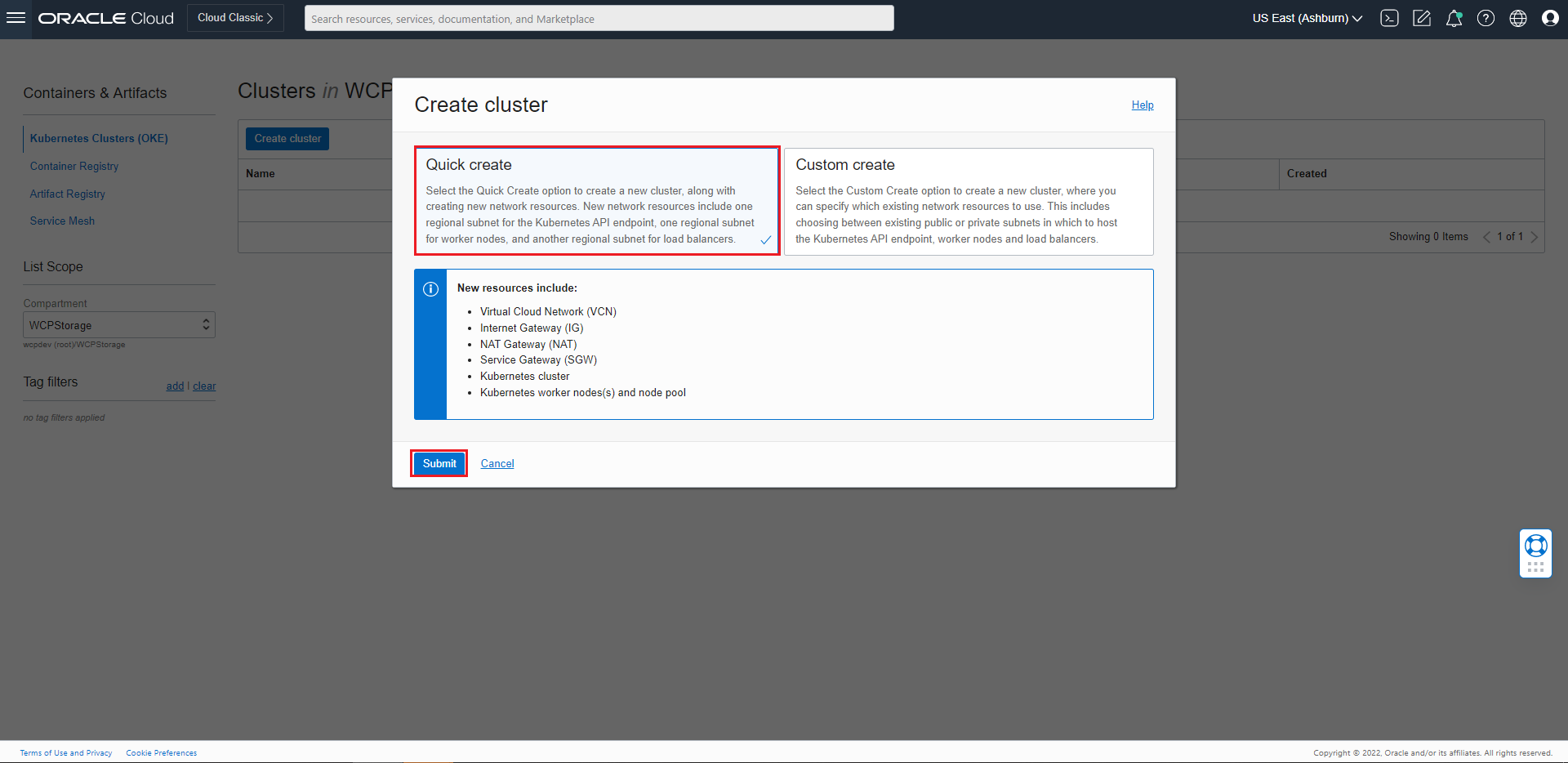

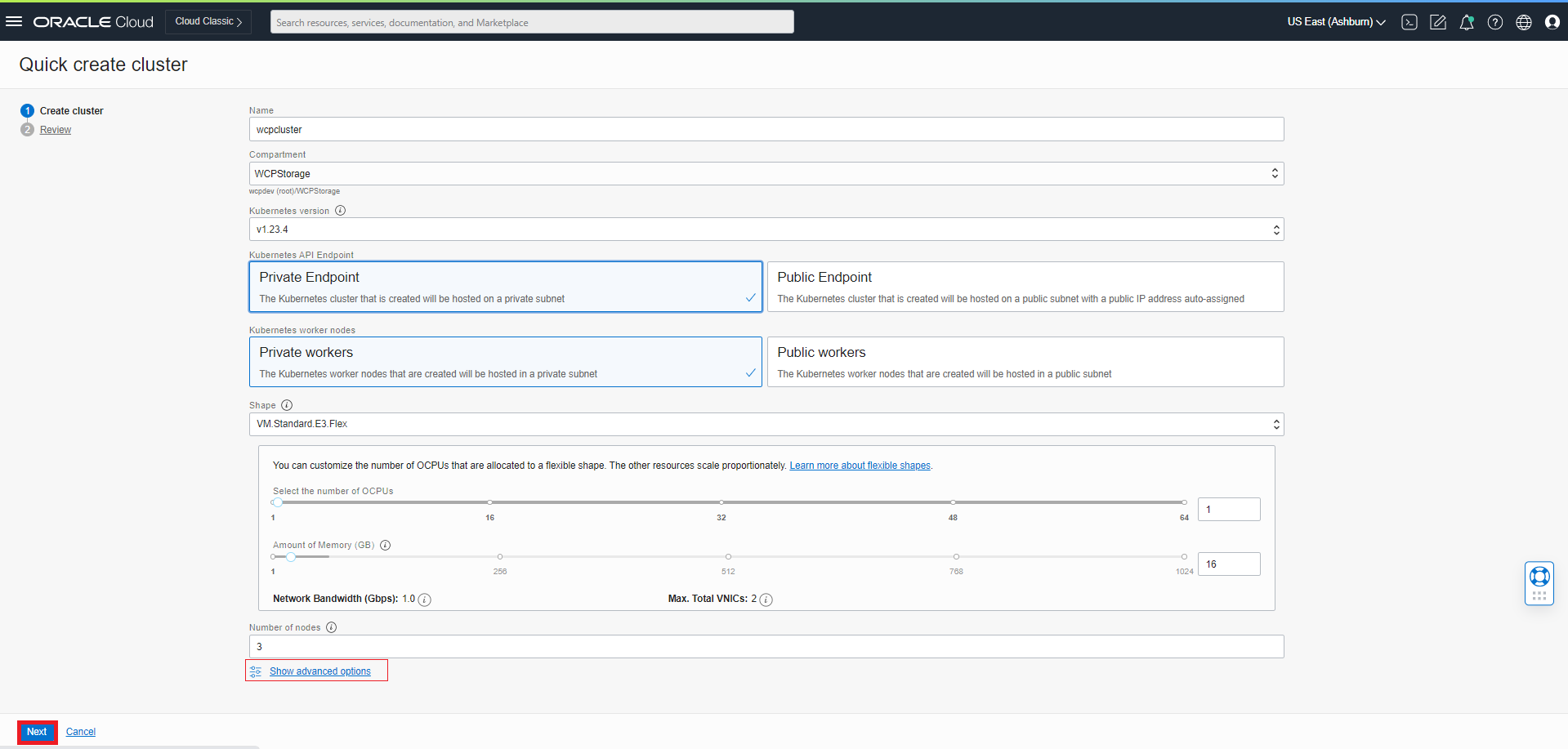

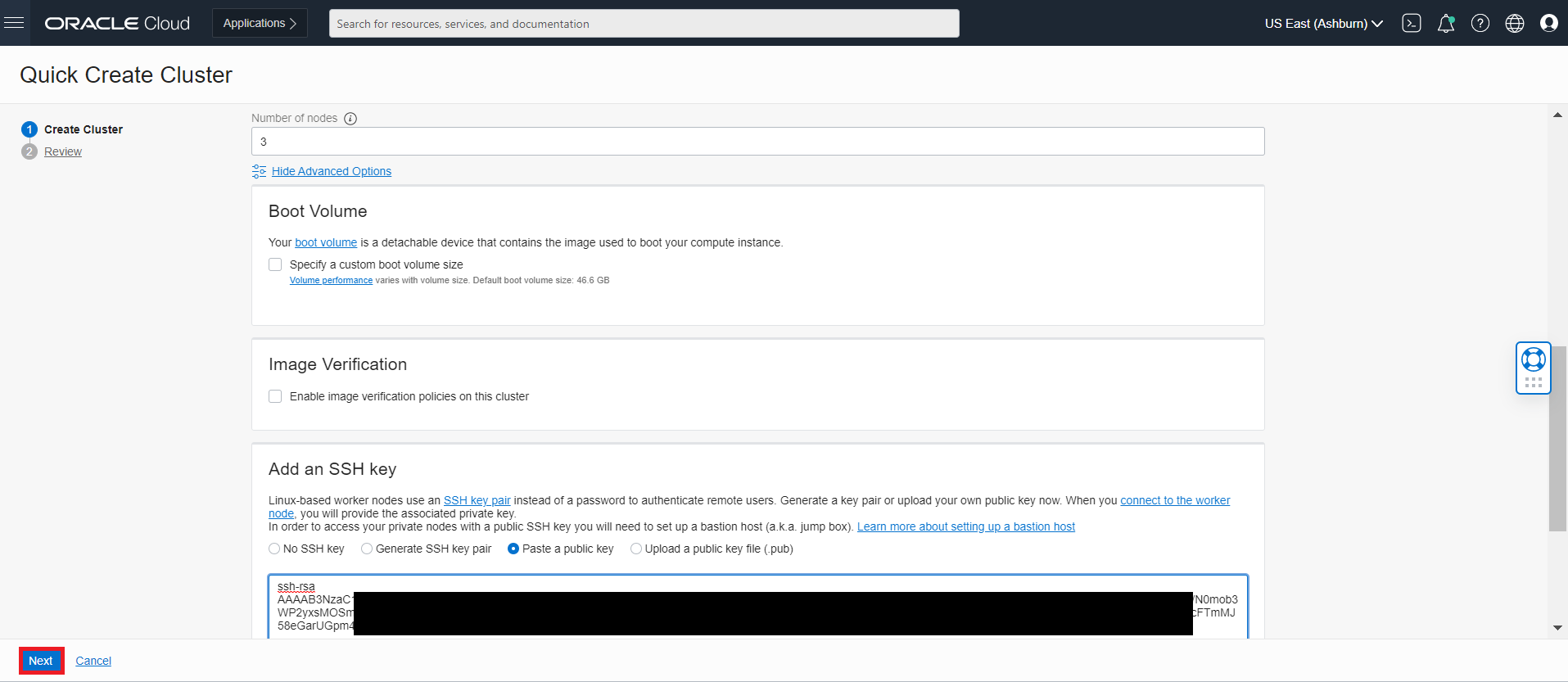

Set Up Your Kubernetes Cluster

Refer to the official Kubernetes setup documentation to establish a production-grade Kubernetes cluster.

After creating Kubernetes clusters, you can optionally:

- Create load balancers to direct traffic to the backend domain.

- Configure Kibana and Elasticsearch for your operator logs.

Install Helm

The operator uses Helm to create and deploy the necessary resources and then run the operator in a Kubernetes cluster. For Helm installation and usage information, see the Helm documentation.

Pull Other Dependent Images

Dependent images include WebLogic Kubernetes Operator, database, and Traefik. Pull these images and add them to your local registry:

Pull these docker images and re-tag them as shown:

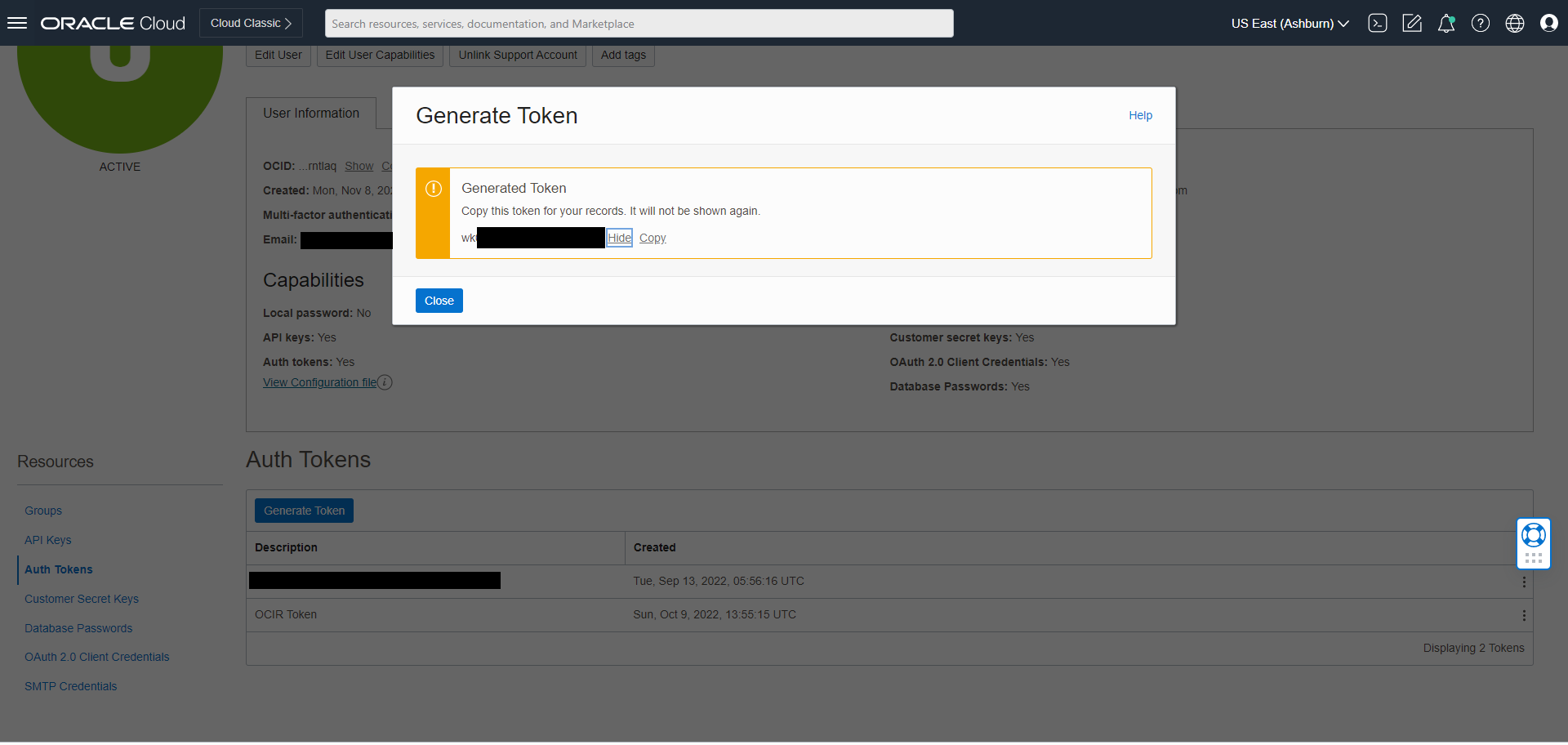

To pull an image from the Oracle Container Registry, in a web browser, navigate to https://container-registry.oracle.com and log in using the Oracle Single Sign-On authentication service. If you do not already have SSO credentials, at the top of the page, click the Sign In link to create them.

Use the web interface to accept the Oracle Standard Terms and Restrictions for the Oracle software images that you intend to deploy. Your acceptance of these terms is stored in a database that links the software images to your Oracle Single Sign-On login credentials.

Then, pull these docker images:

# This step is required once at every node to get access to the Oracle Container Registry.

docker login https://container-registry.oracle.com

(Enter your Oracle email ID and password.)

WebLogic Kubernetes Operator image:

docker pull ghcr.io/oracle/weblogic-kubernetes-operator:4.2.9

Copy all the built and pulled images to all the nodes in your cluster or add to a Docker registry that your cluster can access.

Note: If you’re not running Kubernetes on your development machine, you’ll need to make the Docker image available to a registry visible to your Kubernetes cluster.

Upload your image to a machine running Docker and Kubernetes as follows:

# on your build machine

docker save Image_Name:Tag > Image_Name-Tag.tar

scp Image_Name-Tag.tar YOUR_USER@YOUR_SERVER:/some/path/Image_Name-Tag.tar

# on the Kubernetes server

docker load < /some/path/Image_Name-Tag.tar

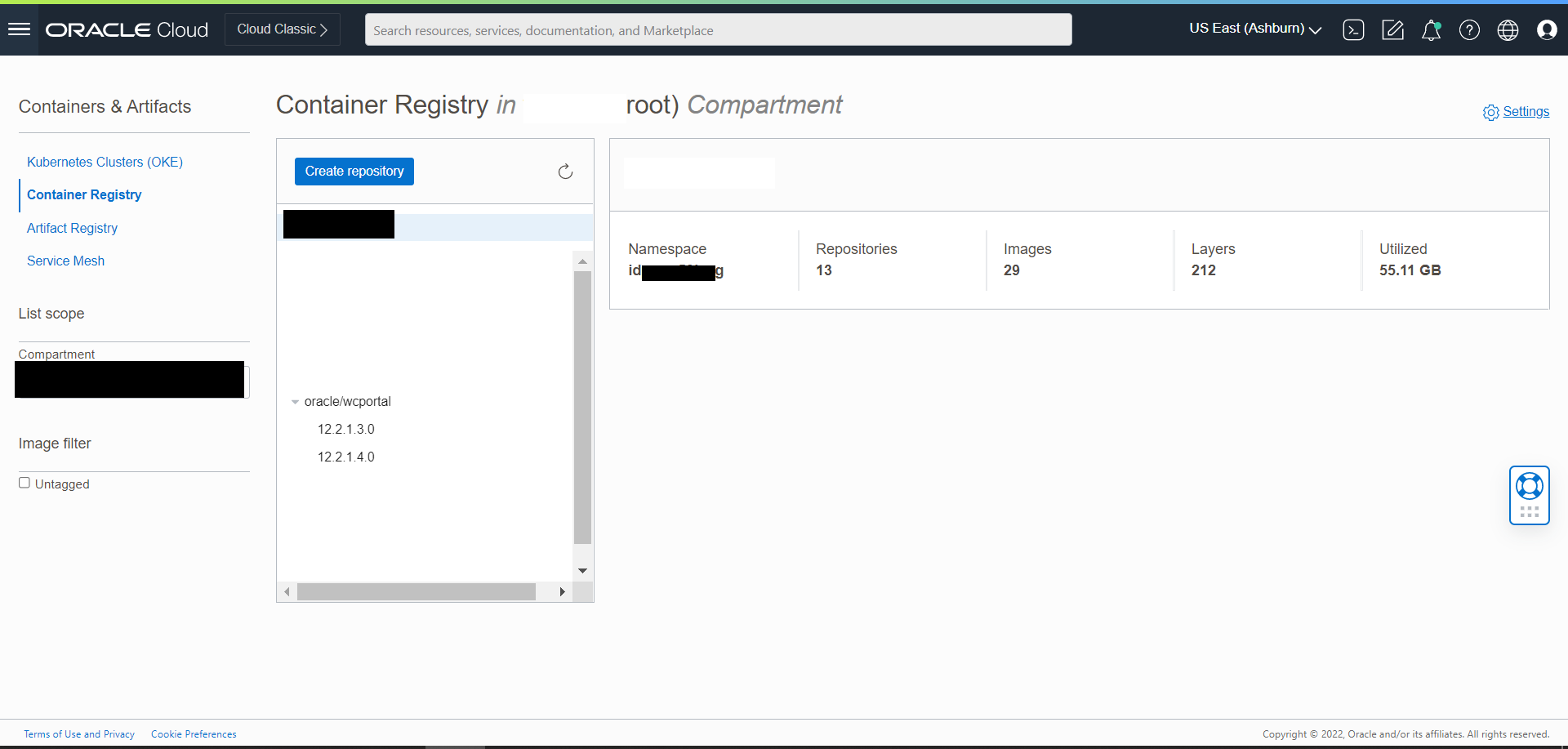

Obtain the Oracle WebCenter Portal Docker Image

Get the Oracle WebCenter Portal Image from the Oracle Container Registry (OCR)

For first-time users, follow these steps to pull the image from the Oracle Container Registry:

Navigate to Oracle Container Registry and log in using the Oracle Single Sign-On (SSO) authentication service.

Note: If you do not already have SSO credentials, you can create an Oracle Account here.

Use the web interface to accept the Oracle Standard Terms and Restrictions for the Oracle software images you intend to deploy.

Note: Your acceptance of these terms is stored in a database linked to your Oracle Single Sign-On credentials.

Log in to the Oracle Container Registry using the following command:

docker login container-registry.oracle.com

Find and pull the prebuilt Oracle WebCenter Portal image by running the following command:

docker pull container-registry.oracle.com/middleware/webcenter-portal_cpu:14.1.2.0-<TAG>

Build Oracle WebCenter Portal Container Image

Alternatively, if you prefer to build and use the Oracle WebCenter Portal container image with the WebLogic Image Tool, including any additional bundle or interim patches, follow these steps to create the image.

Note:

- The default Oracle WebCenter Portal image name used for Oracle WebCenter Portal domain deployment is oracle/wcportal:14.1.2.0.

- The image created must be tagged as oracle/wcportal:14.1.2.0 using the docker tag command.

- If a different name is chosen for the image, the new tag name must be updated in the create-domain-inputs.yaml file and in all other instances where the oracle/wcportal:14.1.2.0 image name is referenced.

Set Up the Code Repository to Deploy Oracle WebCenter Portal Domain

Oracle WebCenter Portal domain deployment on Kubernetes leverages the WebLogic Kubernetes Operator infrastructure. For deploying the Oracle WebCenter Portal domain, you need to set up the deployment scripts as below:

Create a working directory to set up the source code.

mkdir $HOME/wcp_14.1.2.0

cd $HOME/wcp_14.1.2.0

Download the Oracle WebCenter Portal Kubernetes deployment scripts from the GitHub repository. Required artifacts are available at FMW-DockerImages/OracleWebCenterPortal/kubernetes.

git clone https://github.com/oracle/fmw-kubernetes.git

export WORKDIR=$HOME/wcp_14.1.2.0/fmw-kubernetes/OracleWebCenterPortal/kubernetes/

You can now use the deployment scripts from <$WORKDIR> to set up the WebCenter Portal domain as described later in this document.

Grant Roles and Clear Stale Resources

To confirm if there is already a WebLogic custom resource definition, execute the following command:

kubectl get crd

Sample Output:

NAME CREATED AT

domains.weblogic.oracle 2020-03-14T12:10:21Z

Delete the WebLogic custom resource definition, if you find any, by executing the following command:

kubectl delete crd domains.weblogic.oracle

Sample Output:

customresourcedefinition.apiextensions.k8s.io "domains.weblogic.oracle" deleted
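The check-then-delete sequence above can be scripted so that the delete runs only when the definition is actually present. This is a sketch under assumptions: the helper name has_weblogic_crd is hypothetical, and it only inspects text in the format shown in the sample output.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get crd` output on stdin and succeeds
# when a row for domains.weblogic.oracle is present.
has_weblogic_crd() {
  grep -q '^domains\.weblogic\.oracle[[:space:]]'
}

# Intended use on a cluster (left commented out because it needs kubectl access):
#   if kubectl get crd | has_weblogic_crd; then
#     kubectl delete crd domains.weblogic.oracle
#   fi
```

Matching on the full definition name at the start of the line avoids deleting on a partial match against an unrelated custom resource definition.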

Install the WebLogic Kubernetes Operator

The WebLogic Kubernetes Operator supports the deployment of Oracle WebCenter Portal domains in the Kubernetes environment.

Follow the steps in this document to install the operator.

Optionally, you can follow these steps to send the contents of the operator’s logs to Elasticsearch.

In the following example commands to install the WebLogic Kubernetes Operator, operator-ns is the namespace and operator-sa is the service account created for the operator:

kubectl create namespace operator-ns

kubectl create serviceaccount -n operator-ns operator-sa

helm repo add weblogic-operator https://oracle.github.io/weblogic-kubernetes-operator/charts --force-update

helm install weblogic-kubernetes-operator weblogic-operator/weblogic-operator --version 4.2.9 --namespace operator-ns --set serviceAccount=operator-sa --set "javaLoggingLevel=FINE" --wait

Note: In this procedure, the namespace is referred to as operator-ns, but any name can be used.

The following values can be used:

- Domain UID/Domain name: wcp-domain

- Domain namespace: wcpns

- Operator namespace: operator-ns

- Traefik namespace: traefik

Prepare the Environment for the WebCenter Portal Domain

Create a namespace for an Oracle WebCenter Portal domain

Create a Kubernetes namespace (for example, wcpns) for the domain unless you intend to use the default namespace. For details, see Prepare to run a domain.

kubectl create namespace wcpns

Sample Output:

namespace/wcpns created

To manage domains in this namespace, configure the operator using Helm:

helm upgrade --reuse-values --set "domainNamespaces={wcpns}" \

--wait weblogic-kubernetes-operator charts/weblogic-operator --namespace operator-ns

Sample Output:

NAME: weblogic-kubernetes-operator

LAST DEPLOYED: Wed Jan 6 01:52:58 2021

NAMESPACE: operator-ns

STATUS: deployed

REVISION: 2

Create a Kubernetes secret with domain credentials

Create a Kubernetes secret containing the user name and password of the administrative account in the same Kubernetes namespace as the domain:

cd ${WORKDIR}/create-weblogic-domain-credentials

./create-weblogic-credentials.sh -u weblogic -p welcome1 -n wcpns -d wcp-domain -s wcp-domain-domain-credentials

Sample Output:

secret/wcp-domain-domain-credentials created

secret/wcp-domain-domain-credentials labeled

The secret wcp-domain-domain-credentials has been successfully created in the wcpns namespace.

Where:

- -u user name, must be specified.

- -p password, must be provided using the -p argument or user will be prompted to enter a value.

- -n namespace. Example: wcpns

- -d domainUID. Example: wcp-domain

- -s secretName. Example: wcp-domain-domain-credentials

Note: You can inspect the credentials as follows:

kubectl get secret wcp-domain-domain-credentials -o yaml -n wcpns

For more details, see this document.

Create a Kubernetes secret with the RCU credentials

Create a Kubernetes secret for the Repository Creation Utility (user name and password) using the create-rcu-credentials.sh script in the same Kubernetes namespace as the domain:

cd ${WORKDIR}/create-rcu-credentials

sh create-rcu-credentials.sh \

-u username \

-p password \

-a sys_username \

-q sys_password \

-d domainUID \

-n namespace \

-s secretName

Sample Output:

secret/wcp-domain-rcu-credentials created

secret/wcp-domain-rcu-credentials labeled

The secret wcp-domain-rcu-credentials has been successfully created in the wcpns namespace.

The parameters are as follows:

-u username for schema owner (regular user), must be specified.

-p password for schema owner (regular user), must be provided using the -p argument or user will be prompted to enter a value.

-a username for SYSDBA user, must be specified.

-q password for SYSDBA user, must be provided using the -q argument or user will be prompted to enter a value.

-d domainUID, optional. The default value is wcp-domain. If specified, the secret will be labeled with the domainUID unless the given value is an empty string.

-n namespace, optional. Use the wcpns namespace if not specified.

-s secretName, optional. If not specified, the secret name will be determined based on the domainUID value.

Note: You can inspect the credentials as follows:

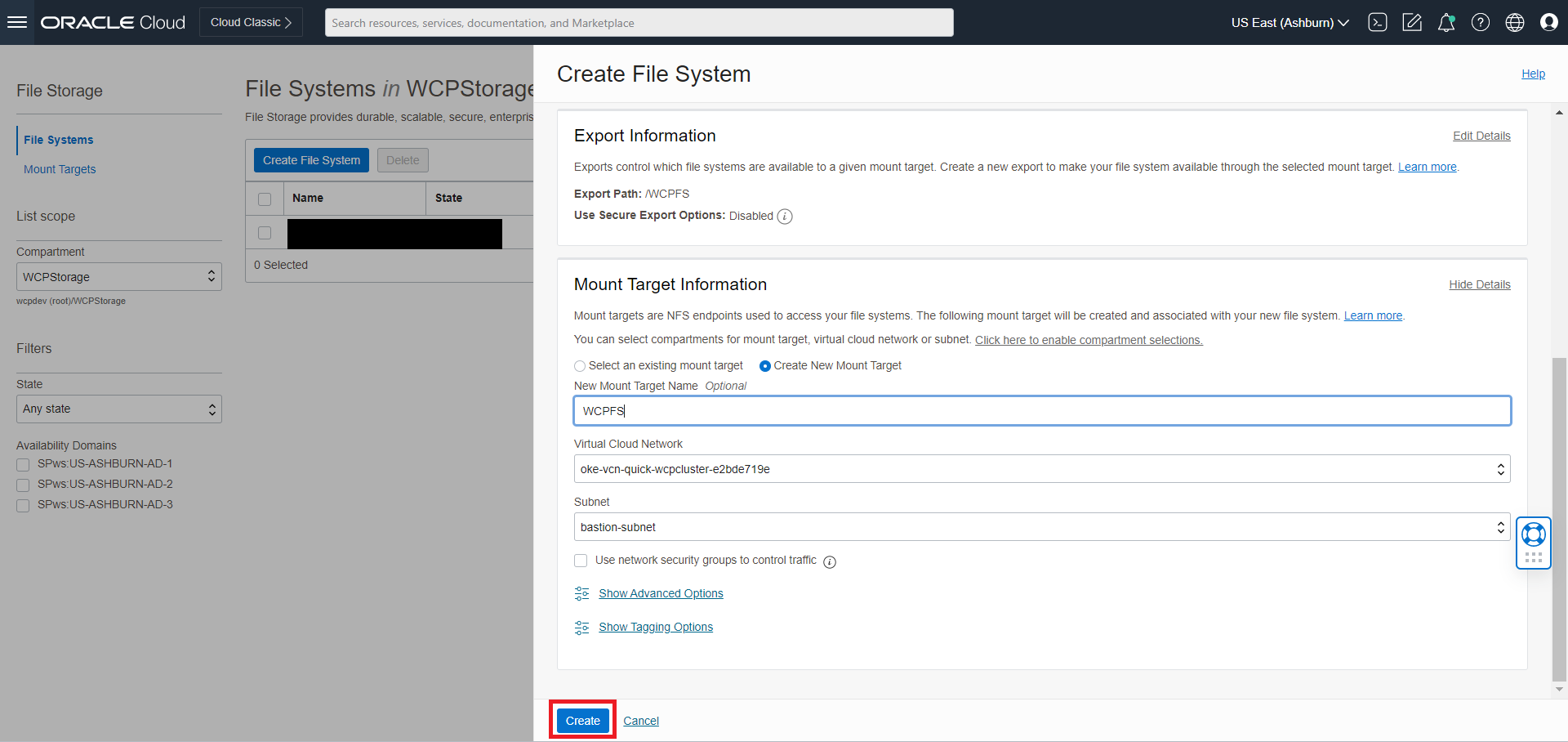

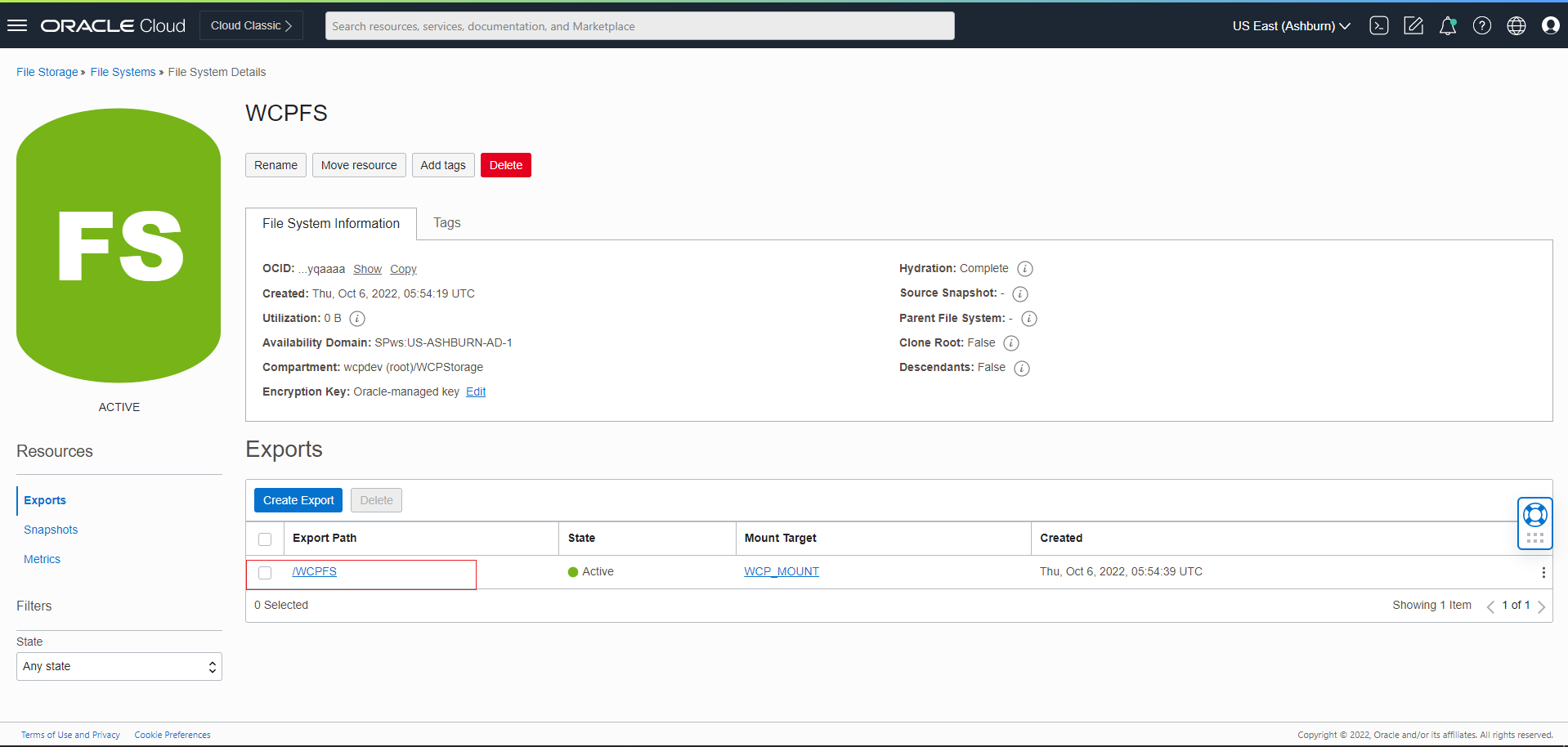

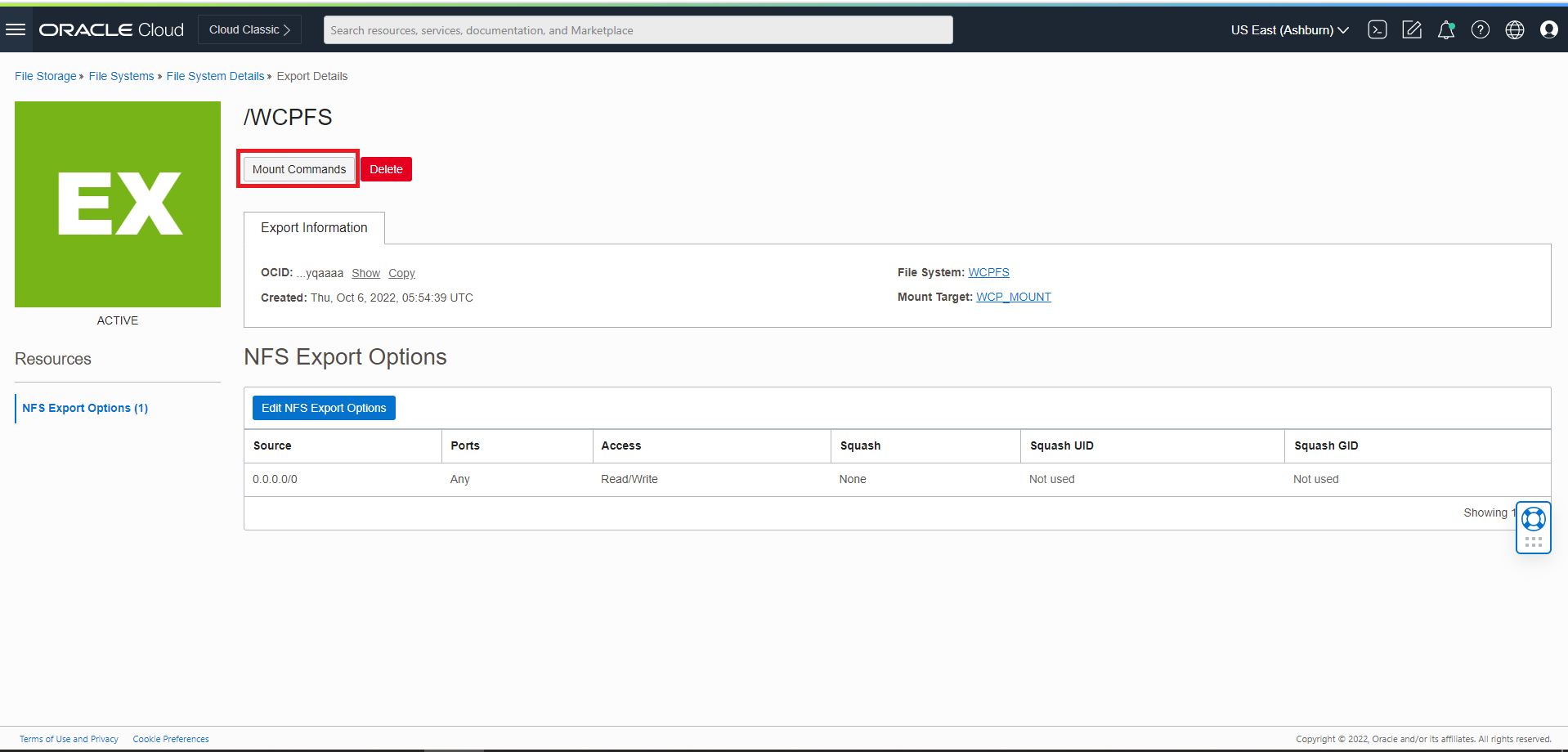

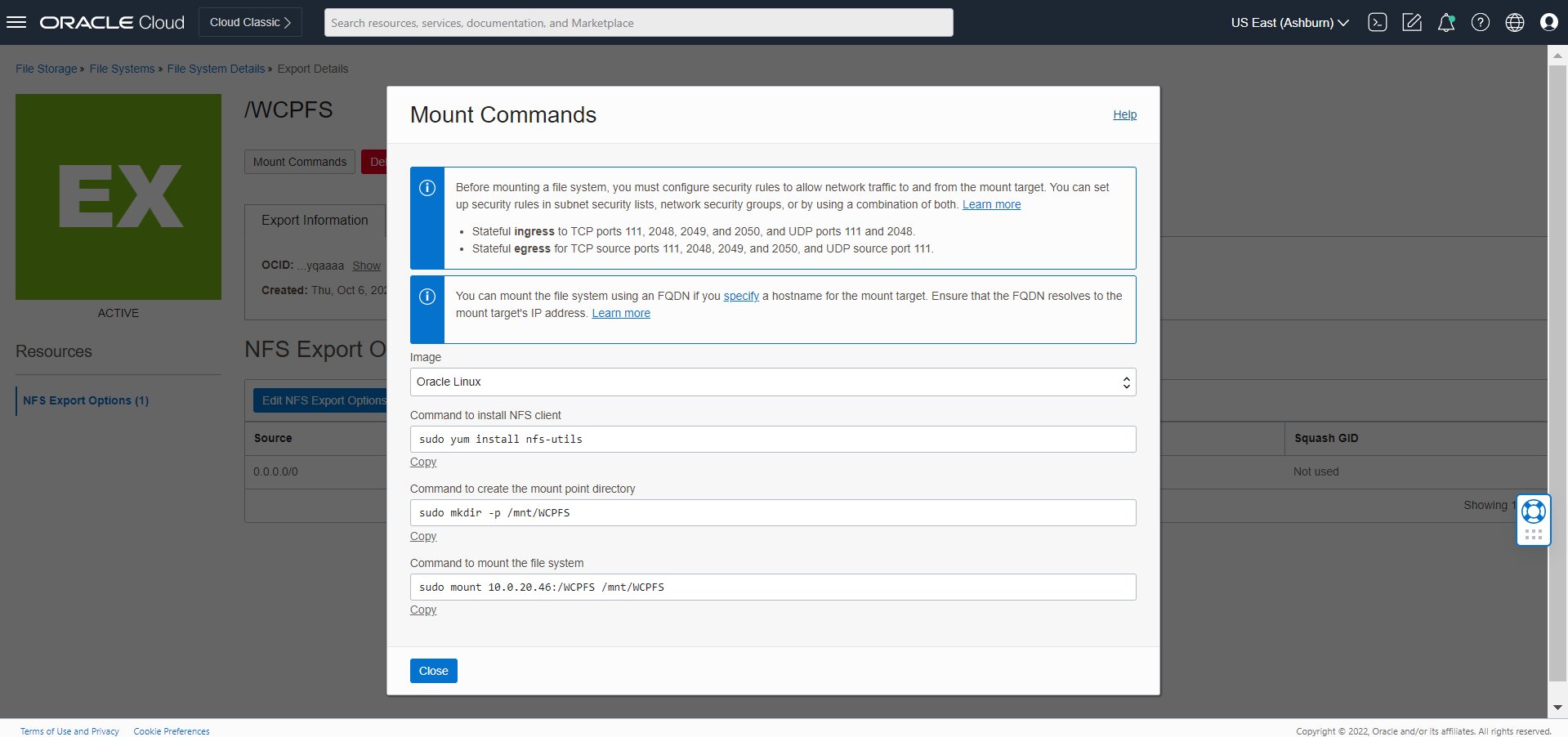

kubectl get secret wcp-domain-rcu-credentials -o yaml -n wcpns

Create persistent storage for an Oracle WebCenter Portal domain

Create a Kubernetes PV and PVC (Persistent Volume and Persistent Volume Claim):

In the Kubernetes namespace you created, create the PV and PVC for the domain by running the create-pv-pvc.sh script. Follow the instructions for using the script to create a dedicated PV and PVC for the Oracle WebCenter Portal domain.

Review the configuration parameters for PV creation. Based on your requirements, update the values in the create-pv-pvc-inputs.yaml file located at ${WORKDIR}/create-weblogic-domain-pv-pvc/. Sample configuration parameter values for an Oracle WebCenter Portal domain are:

- baseName: domain

- domainUID: wcp-domain

- namespace: wcpns

- weblogicDomainStorageType: HOST_PATH

- weblogicDomainStoragePath: /scratch/kubevolume

Ensure that the path for the weblogicDomainStoragePath property exists (create one if it doesn’t exist), that it has full access permissions, and that the folder is empty.

Run the create-pv-pvc.sh script:

cd ${WORKDIR}/create-weblogic-domain-pv-pvc

./create-pv-pvc.sh -i create-pv-pvc-inputs.yaml -o output

Sample Output:

Input parameters being used

export version="create-weblogic-sample-domain-pv-pvc-inputs-v1"

export baseName="domain"

export domainUID="wcp-domain"

export namespace="wcpns"

export weblogicDomainStorageType="HOST_PATH"

export weblogicDomainStoragePath="/scratch/kubevolume"

export weblogicDomainStorageReclaimPolicy="Retain"

export weblogicDomainStorageSize="10Gi"

Generating output/pv-pvcs/wcp-domain-domain-pv.yaml

Generating output/pv-pvcs/wcp-domain-domain-pvc.yaml

The following files were generated:

output/pv-pvcs/wcp-domain-domain-pv.yaml

output/pv-pvcs/wcp-domain-domain-pvc.yaml

The create-pv-pvc.sh script creates a subdirectory pv-pvcs under the given /path/to/output-directory directory and creates two YAML configuration files for PV and PVC. Apply these two YAML files to create the PV and PVC Kubernetes resources using the kubectl create -f command:

kubectl create -f output/pv-pvcs/wcp-domain-domain-pv.yaml

kubectl create -f output/pv-pvcs/wcp-domain-domain-pvc.yaml
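The three conditions on the weblogicDomainStoragePath directory (it exists, it has full access permissions, and it is empty) can be checked before running create-pv-pvc.sh. The following is a sketch only: the helper name prepare_storage_path is hypothetical, and interpreting "full access permissions" as mode 777 is an assumption that may be broader than your security policy allows.

```shell
#!/bin/sh
# Hypothetical helper (not part of the Oracle scripts): create the storage
# directory if needed, open its permissions, and refuse to continue if it
# already contains files.
prepare_storage_path() {
  dir="$1"
  mkdir -p "$dir" || return 1
  # Assumption: "full access permissions" is read as mode 777.
  chmod 777 "$dir" || return 1
  # ls -A lists every entry except . and .., so any output means "not empty".
  if [ -n "$(ls -A "$dir")" ]; then
    echo "ERROR: $dir is not empty" >&2
    return 1
  fi
}

# Intended use with the sample value from create-pv-pvc-inputs.yaml
# (commented out because it modifies the host file system):
#   prepare_storage_path /scratch/kubevolume
```

With the HOST_PATH storage type, the directory has to exist on the node where the pods are scheduled, so a check like this would be run on that host.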

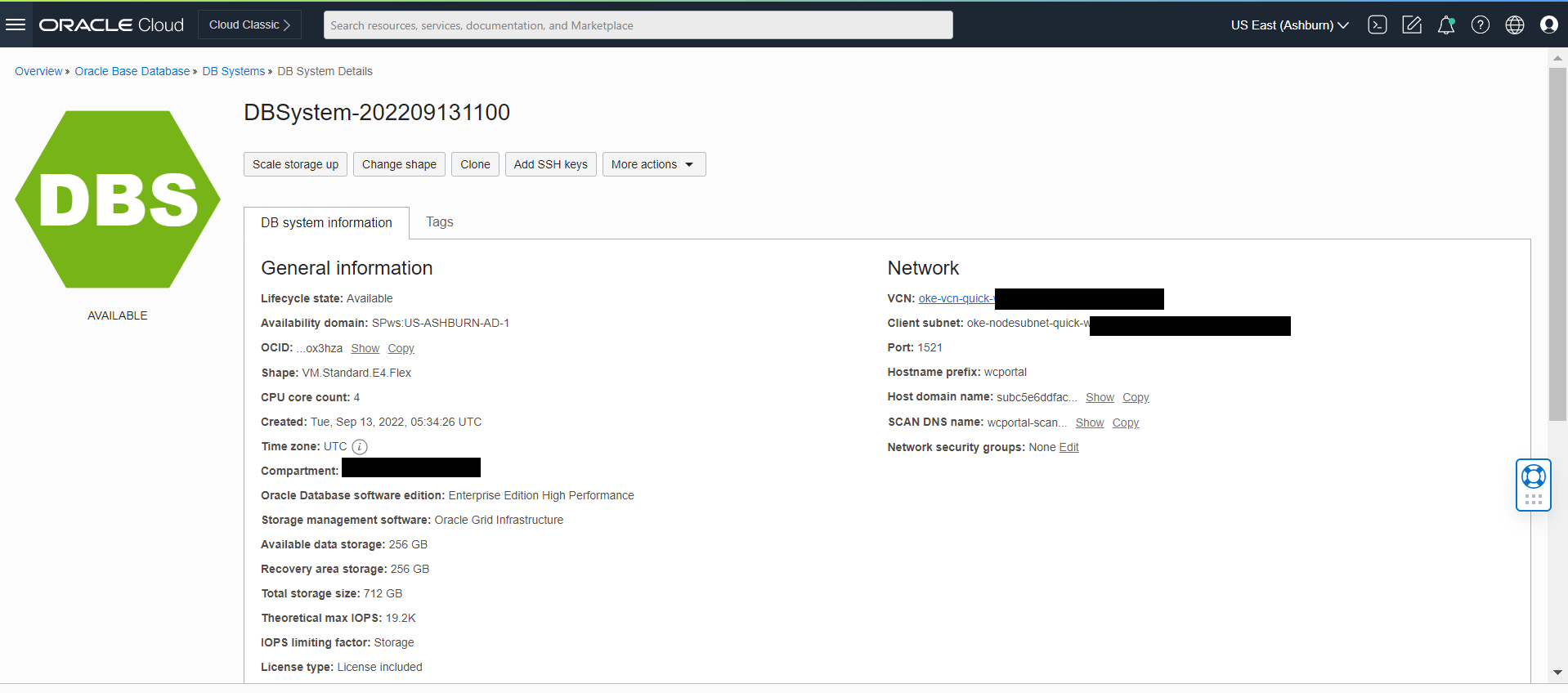

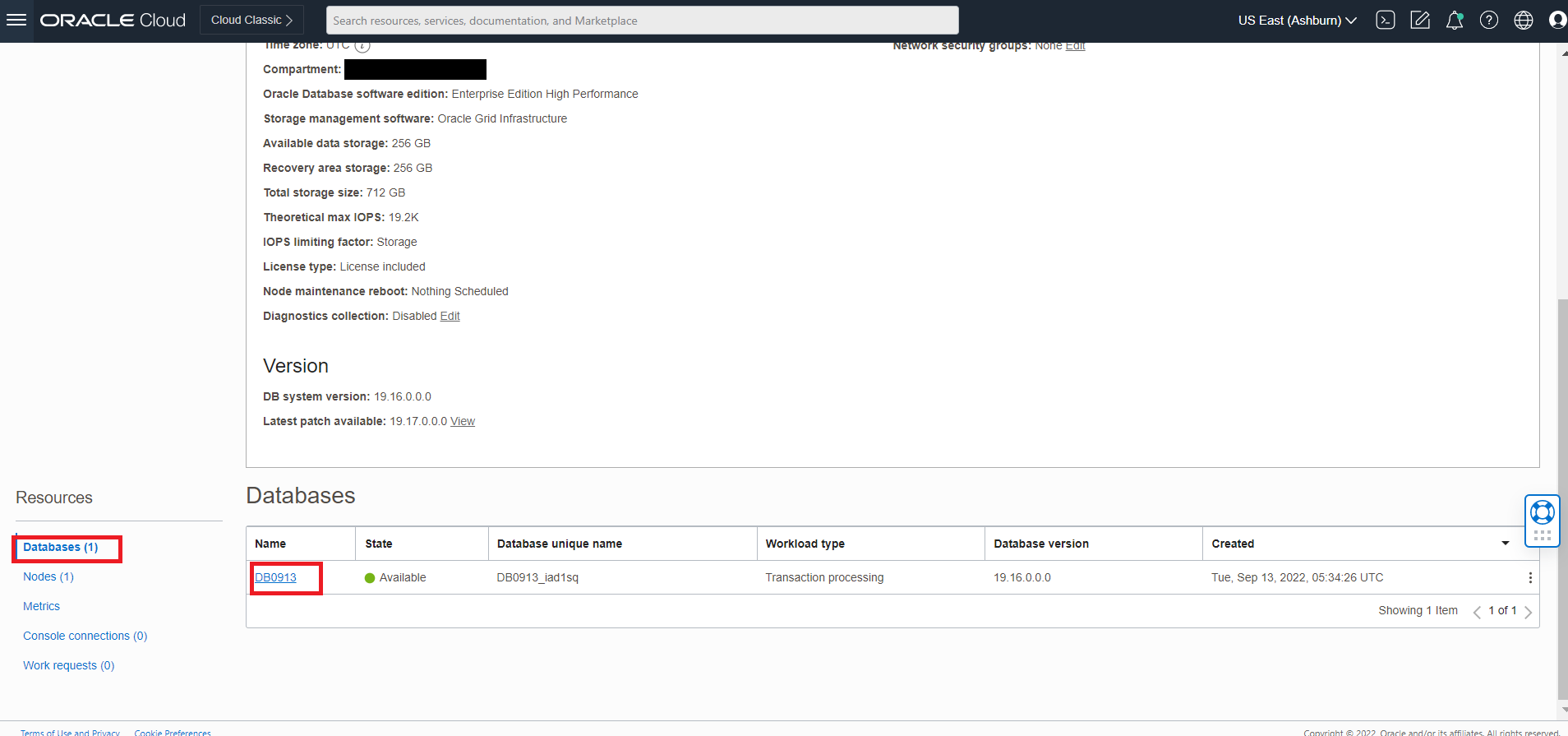

Configure Database Access

The Oracle WebCenter Portal domain requires a database configured with specific schemas, which can be created using the Repository Creation Utility (RCU). It is essential to set up the database before creating the domain.

For production environments, it is recommended to use a standalone (non-containerized) database running outside of Kubernetes.

Ensure the required schemas are set up in your database before proceeding with domain creation.

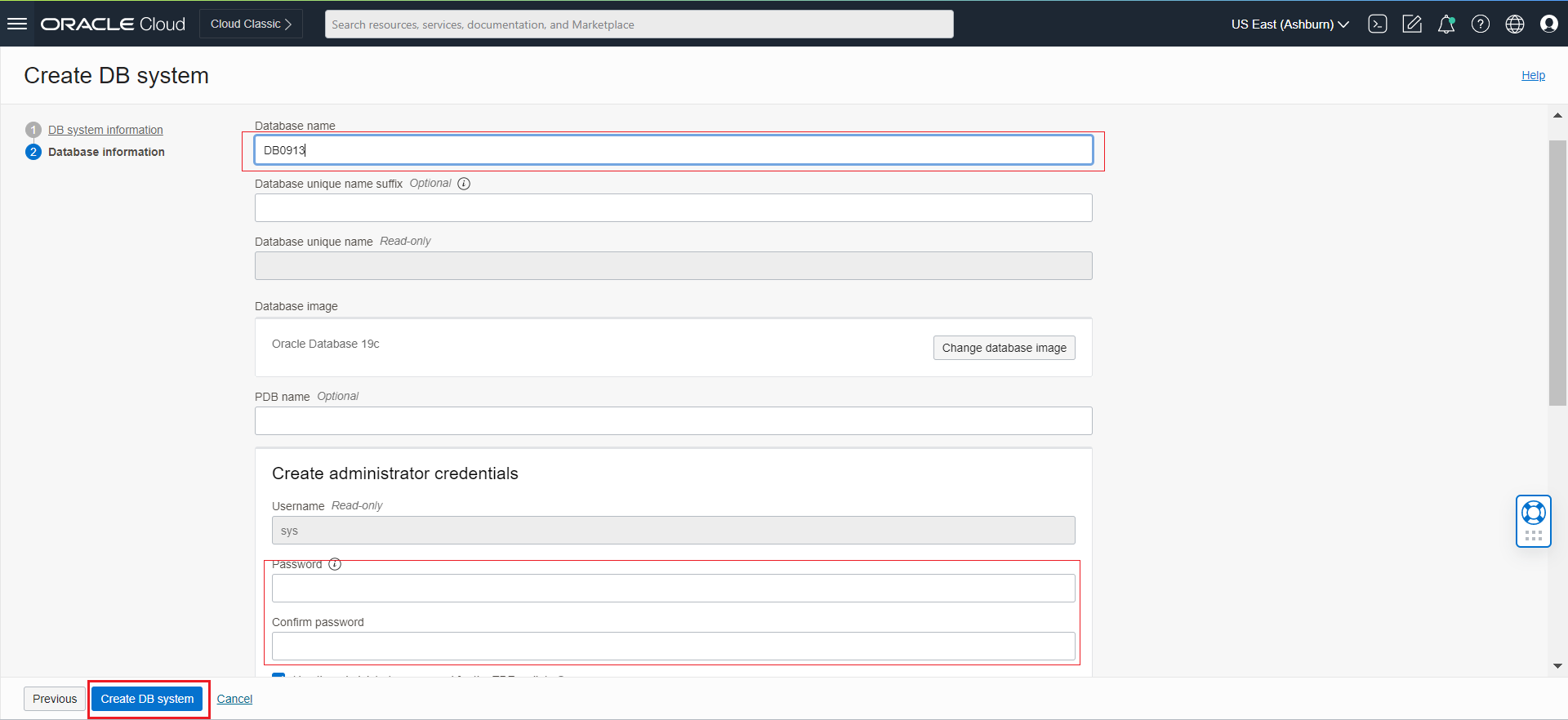

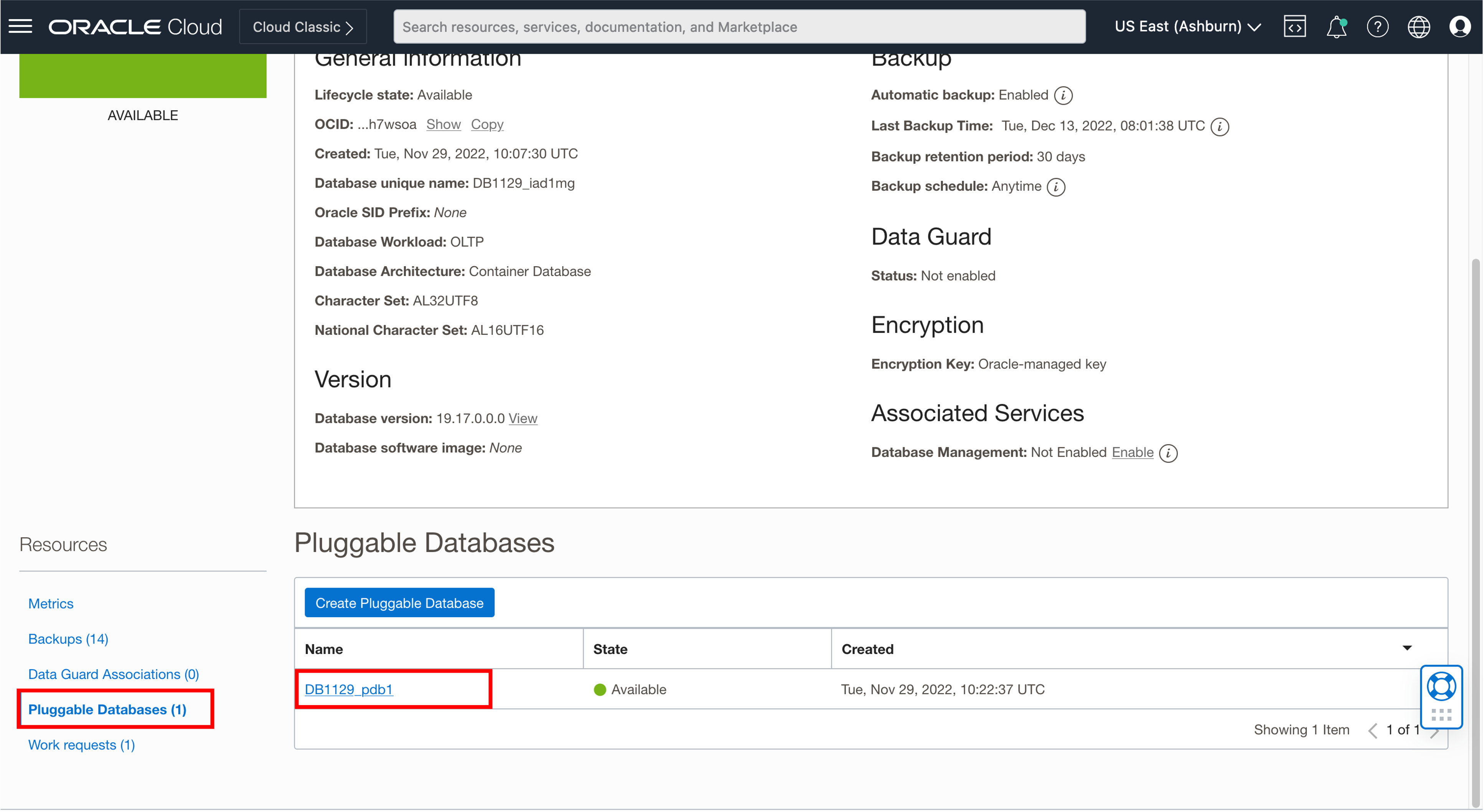

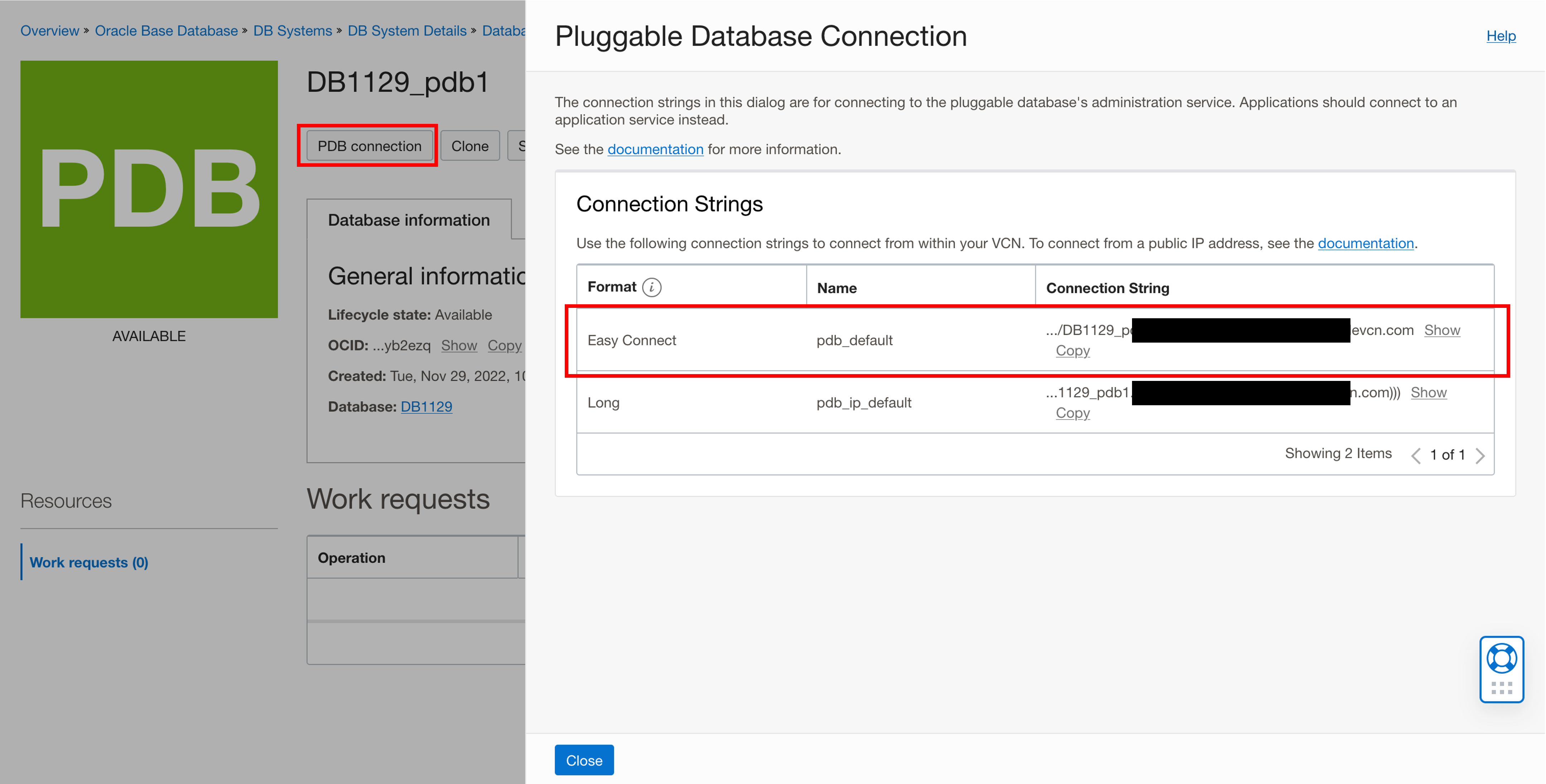

Run the Repository Creation Utility to set up your database schemas

To create the database schemas for Oracle WebCenter Portal domain, run the create-rcu-schema.sh script.

cd ${WORKDIR}/create-rcu-schema

./create-rcu-schema.sh \

-s WCP1 \

-t wcp \

-d xxx.oraclevcn.com:1521/DB1129_pdb1.xxx.wcpcluster.oraclevcn.com \

-i iad.ocir.io/xxxxxxxx/oracle/wcportal:14.1.2.0 \

-n wcpns \

-c wcp-domain-rcu-credentials \

-r ANALYTICS_WITH_PARTITIONING=N

Usage:

./create-rcu-schema.sh -s <schemaPrefix> [-t <schemaType>] [-d <dburl>] [-n <namespace>] [-c <credentialsSecretName>] [-p <docker-store>] [-i <image>] [-u <imagePullPolicy>] [-o <rcuOutputDir>] [-r <customVariables>] [-l <timeoutLimit>] [-e <edition>] [-h]

-s RCU Schema Prefix (required)

-t RCU Schema Type (optional)

(supported values: wcp,wcpp)

-d RCU Oracle Database URL (optional)

(default: oracle-db.default.svc.cluster.local:1521/devpdb.k8s)

-n Namespace for RCU pod (optional)

(default: default)

-c Name of credentials secret (optional).

(default: oracle-rcu-secret)

Must contain SYSDBA username at key 'sys_username',

SYSDBA password at key 'sys_password',

and RCU schema owner password at key 'password'.

-p OracleWebCenterPortal ImagePullSecret (optional)

(default: none)

-i OracleWebCenterPortal Image (optional)

(default: oracle/wcportal:release-version)

-u OracleWebCenterPortal ImagePullPolicy (optional)

(default: IfNotPresent)

-o Output directory for the generated YAML file. (optional)

(default: rcuoutput)

-r Comma-separated custom variables in the format variablename=value. (optional).

(default: none)

-l Timeout limit in seconds. (optional).

(default: 300)

-e The edition name. This parameter is only valid if you specify databaseType=EBR. (optional).

(default: 'ORA$BASE')

-h Help

Note: The c, p, i, u, and o arguments are ignored if an rcu pod is already running in the namespace.

Notes:

- The RCU schema type wcp generates WebCenter Portal related schemas, and wcpp generates WebCenter Portal plus portlet schemas.

- To enable or disable database partitioning for Analytics installation in Oracle WebCenter Portal, use the -r flag. Enter Y to enable database partitioning or N to disable it. For example: -r ANALYTICS_WITH_PARTITIONING=N. Supported values for ANALYTICS_WITH_PARTITIONING are Y and N.
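Because the schema type and the partitioning flag each accept only two values, a wrapper script can reject a mistyped value before an RCU pod is started. This is a minimal sketch: the function name validate_rcu_options is hypothetical and is not part of create-rcu-schema.sh.

```shell
#!/bin/sh
# Hypothetical pre-check for the -t and -r ANALYTICS_WITH_PARTITIONING values
# described above. $1 is the schema type, $2 is the partitioning flag.
validate_rcu_options() {
  schema_type="$1"
  partitioning="$2"
  case "$schema_type" in
    wcp|wcpp) ;;
    *) echo "ERROR: schema type must be wcp or wcpp" >&2; return 1 ;;
  esac
  case "$partitioning" in
    Y|N) ;;
    *) echo "ERROR: ANALYTICS_WITH_PARTITIONING must be Y or N" >&2; return 1 ;;
  esac
}

# Example with the values used in the sample command.
if validate_rcu_options "wcp" "N"; then
  echo "RCU options look valid"
fi
```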

Create WebCenter Portal domain

Create an Oracle WebCenter Portal domain home on an existing PV or PVC, and create the domain resource YAML file for deploying the generated Oracle WebCenter Portal domain.

- Introduction

- Prerequisites

- Prepare the WebCenter Portal Domain Creation Input File

- Create the WebCenter Portal Domain

- Initialize the WebCenter Portal Domain

- Verify the WebCenter Portal Domain

- Managing WebCenter Portal

Introduction

You can use the sample scripts to create a WebCenter Portal domain home on an existing Kubernetes persistent volume (PV) and persistent volume claim (PVC). The scripts also generate the domain YAML file, which helps you start the Kubernetes artifacts of the corresponding domain.

Prerequisites

- Ensure that you have completed all of the steps under Prepare Your Environment.

- Ensure that the database and the WebLogic Kubernetes Operator are up and running.

Prepare the WebCenter Portal Domain Creation Input File

If required, you can customize the parameters used for creating a domain in the create-domain-inputs.yaml file.

Please note that the sample scripts for the WebCenter Portal domain deployment are available from the previously downloaded repository at ${WORKDIR}/create-wcp-domain/domain-home-on-pv/.

Make a copy of the create-domain-inputs.yaml file before updating the default values.
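Keeping a copy and then changing individual values can be scripted. The sketch below assumes that the inputs file stores each parameter as a single top-level "key: value" line. The helper name set_input_value and the .orig suffix are hypothetical, and the helper does not handle values containing the characters | or &.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the value of a top-level "key: value" line.
# Assumes one-line entries and that neither key nor value contains "|" or "&",
# which have special meaning in the sed expression below.
set_input_value() {
  file="$1"
  key="$2"
  value="$3"
  sed "s|^${key}:.*|${key}: ${value}|" "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Intended use (commented out; run from the directory that holds the file):
#   cp create-domain-inputs.yaml create-domain-inputs.yaml.orig
#   set_input_value create-domain-inputs.yaml configurePortletServer true
#   set_input_value create-domain-inputs.yaml initialManagedServerReplicas 3
```

Writing to a temporary file and moving it into place avoids relying on sed -i, whose syntax differs between GNU and BSD implementations.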

The default domain created by the script has the following characteristics:

- An Administration Server named AdminServer listening on port 7001.

- A configured cluster named wcp-cluster of size 5.

- Managed Server, named wcpserver, listening on port 8888.

- If configurePortletServer is set to true, a configured cluster named wcportlet-cluster of size 5 and a Managed Server, named wcportletserver, listening on port 8889.

- Log files that are located in /shared/logs/<domainUID>.

Configuration parameters

The following parameters can be provided in the inputs file:

| Parameter | Definition | Default |

|---|---|---|

sslEnabled |

SSL mode enabling flag | false |

configurePortletServer |

Configure portlet server cluster | false |

adminPort |

Port number for the Administration Server inside the Kubernetes cluster. | 7001 |

adminServerSSLPort |

SSL Port number for the Administration Server inside the Kubernetes cluster. | 7002 |

adminAdministrationPort |

Administration Port number for the Administration Server inside the Kubernetes cluster. | 9002 |

adminServerName |

Name of the Administration Server. | AdminServer |

domainUID |

Unique ID that identifies this particular domain. Used as the name of the generated WebLogic domain as well as the name of the Kubernetes domain resource. This ID must be unique across all domains in a Kubernetes cluster. This ID cannot contain any character that is not valid in a Kubernetes service name. | wcp-domain |

domainHome |

Home directory of the WebCenter Portal domain. Note: This field cannot be modified. | /u01/oracle/user_projects/domains/wcp-domain |

serverStartPolicy |

Determines which WebLogic Server instances are to be started by the WebLogic Kubernetes Operator. Valid values: Never, IfNeeded, AdminOnly. Never means no servers will be started, IfNeeded will start both Administration and Managed Servers as required, and AdminOnly will only start the Administration Server. |

IfNeeded |

clusterName |

Name of the WebLogic cluster instance to generate for the domain. By default the cluster name is wcp-cluster for the WebCenter Portal domain. |

wcp-cluster |

configuredManagedServerCount |

Number of Managed Server instances for the domain. | 5 |

initialManagedServerReplicas |

Number of Managed Servers to initially start for the domain. | 2 |

managedServerNameBase |

Base string used to generate Managed Server names. | wcpserver |

managedServerPort |

Port number for each Managed Server. By default, the managedServerPort is 8888 for the wcpserver and managedServerPort is 8889 for the wcportletserver. |

8888 |

managedServerSSLPort |

SSL port number for each Managed Server. By default, the managedServerPort is 8788 for the wcpserver and managedServerPort is 8789 for the wcportletserver. |

8788 |

managedAdministrationPort |

Administration port number for each Managed Server. This port is used for administrative communications with the Managed Servers. | 9008 |

portletClusterName |

Name of the Portlet cluster instance to generate for the domain. By default, the cluster name is wcportlet-cluster for the Portlet. |

wcportlet-cluster |

portletServerNameBase |

Base string used to generate Portlet Server names. | wcportletserver |

portletServerPort |

Port number for each Portlet Server. By default, the portletServerPort is 8889 for the wcportletserver. |

8889 |

portletServerSSLPort |

SSL port number for each Portlet Server. By default, the portletServerSSLPort is 8789 for the wcportletserver. |

8789 |

portletAdministrationPort |

Administration port number for each Portlet Server. This port is used for administrative communications with the Portlet Servers. | 9009 |

image |

WebCenter Portal Docker image. The WebLogic Kubernetes Operator requires WebCenter Portal release 14.1.2.0. Refer to WebCenter Portal Docker Image for details on how to obtain or create the image. | oracle/wcportal:14.1.2.0 |

imagePullPolicy |

Defines when the WebLogic Docker image is pulled from the repository. Valid values: IfNotPresent (default, pulls the image only if it is not present on the node), Always (pulls the image on every deployment), and Never (never pulls the image, it must already be available locally). |

IfNotPresent |

productionModeEnabled |

Boolean flag that indicates whether the domain is running in production mode. In production mode, WebLogic Server enforces stricter security and resource management settings. | true |

secureEnabled |

Boolean indicating whether secure mode is enabled for the domain. When set to true, WebLogic enables additional security settings such as SSL configuration, enforcing stricter authentication and encryption protocols. This is relevant in production environments where security is critical. It has significance when running WebLogic in production mode (productionModeEnabled is true). When set to false, the domain operates without these additional security measures. |

false |

weblogicCredentialsSecretName |

Name of the Kubernetes secret for the Administration Server’s user name and password. If not specified, then the value is derived from the domainUID as <domainUID>-weblogic-credentials. |

wcp-domain-domain-credentials |

includeServerOutInPodLog |

Boolean indicating whether to include the server.out logs in the pod’s stdout stream. When set to true, the WebLogic Server’s standard output is redirected to the pod’s log output, making it easier to access logs via Kubernetes log management tools. |

true |

logHome |

The in-pod location for the domain log, server logs, server out, and Node Manager log files. Note: This field cannot be modified. | /u01/oracle/user_projects/logs/wcp-domain |

httpAccessLogInLogHome |

Boolean indicating where HTTP access log files are written. If set to true, logs are written to the logHome directory; if set to false, they are written to the WebLogic domain home directory. |

true |

t3ChannelPort |

Port for the T3 channel of the NetworkAccessPoint. | 30012 |

exposeAdminT3Channel |

Boolean indicating whether the T3 channel for the Admin Server should be exposed as a service. If set to false, the T3 channel remains internal to the cluster. |

false |

adminNodePort |

Port number of the Administration Server outside the Kubernetes cluster, allowing external access to the WebLogic Admin Server. | 30701 |

exposeAdminNodePort |

Boolean indicating if the Administration Server is exposed outside of the Kubernetes cluster. | false |

namespace |

Kubernetes namespace in which to create the WebLogic domain, isolating resources and facilitating management within the cluster. | wcpns |

javaOptions |

Java options for starting the Administration Server and Managed Servers. A Java option can include references to one or more of the following pre-defined variables to obtain WebLogic domain information: $(DOMAIN_NAME), $(DOMAIN_HOME), $(ADMIN_NAME), $(ADMIN_PORT), and $(SERVER_NAME). |

-Dweblogic.StdoutDebugEnabled=false |

persistentVolumeClaimName |

Name of the persistent volume claim created to host the domain home. If not specified, the value is derived from the domainUID as <domainUID>-weblogic-sample-pvc. |

wcp-domain-domain-pvc |

domainPVMountPath |

Mount path of the domain persistent volume. Note: This field cannot be modified. | /u01/oracle/user_projects/domains |

createDomainScriptsMountPath |

Mount path where the create domain scripts are located inside a pod. The create-domain.sh script creates a Kubernetes job to run the script (specified in the createDomainScriptName property) in a Kubernetes pod that creates a domain home. Files in the createDomainFilesDir directory are mounted to this location in the pod, so that the Kubernetes pod can use the scripts and supporting files to create a domain home. |

/u01/weblogic |

createDomainScriptName |

Script that the create domain script uses to create a WebLogic domain. The create-domain.sh script creates a Kubernetes job to run this script that creates a domain home. The script is located in the in-pod directory that is specified in the createDomainScriptsMountPath property. If you need to provide your own scripts to create the domain home, instead of using the built-in scripts, you must use this property to set the name of the script to that which you want the create domain job to run. |

create-domain-job.sh |

createDomainFilesDir |

Directory on the host machine that contains all the files you need to create a WebLogic domain, including the script that is specified in the createDomainScriptName property. By default, this directory is set to the relative path wlst, and the create script uses the built-in WLST offline scripts in the wlst directory to create the WebLogic domain. An absolute path is also supported to point to an arbitrary directory in the file system. The built-in scripts can be replaced by user-provided scripts or model files as long as those files are in the specified directory. Files in this directory are put into a Kubernetes config map, which in turn is mounted to createDomainScriptsMountPath, so that the Kubernetes pod can use the scripts and supporting files to create a domain home. | wlst |

rcuSchemaPrefix |

The schema prefix to use in the database, for example WCP1. You may want to make this the same as the domainUID to simplify matching domains to their RCU schemas. | WCP1 |

rcuDatabaseURL |

The database URL. | dbhostname:dbport/servicename |

rcuCredentialsSecret |

The Kubernetes secret containing the database credentials. | wcp-domain-rcu-credentials |

loadBalancerHostName |

Host name for the final URL accessible outside the Kubernetes environment. | abc.def.com |

loadBalancerPortNumber |

Port for the final URL accessible outside the Kubernetes environment. | 30305 |

loadBalancerProtocol |

Protocol for the final URL accessible outside the Kubernetes environment. | http |

loadBalancerType |

Load balancer name. Example: Traefik or “” | traefik |

unicastPort |

Starting range of unicast port that the application will use. | 50000 |

The names of the Kubernetes resources in the generated YAML files are formed from the values of these properties specified in the create-domain-inputs.yaml file: adminServerName, clusterName, and managedServerNameBase. Characters that are invalid in a Kubernetes service name are converted to valid values in the generated YAML files. For example, an uppercase letter is converted to a lowercase letter, and an underscore ("_") is converted to a hyphen ("-").
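The conversion described above can be approximated in a few lines of shell. This is a minimal sketch, not the logic the sample scripts actually use: the function name to_k8s_name is hypothetical, and it covers only the two cases mentioned in the text (uppercase letters and underscores).

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the sample scripts): approximates how a
# property value becomes a valid Kubernetes resource name.
to_k8s_name() {
  # Lowercase the value, then replace underscores with hyphens.
  printf '%s\n' "$1" | tr '[:upper:]' '[:lower:]' | tr '_' '-'
}

# Example: combine the domainUID "wcp-domain" with adminServerName "AdminServer".
echo "wcp-domain-$(to_k8s_name "AdminServer")"
```

With the default inputs, the result matches the Administration Server pod name, wcp-domain-adminserver, that appears in the sample output later in this section.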

The sample demonstrates how to create a WebCenter Portal domain home and associated Kubernetes resources for a domain that has one cluster only. In addition, the sample provides users with the capability to supply their own scripts to create the domain home for other use cases. You can modify the generated domain YAML file to include more use cases.

Create the WebCenter Portal Domain

Run the create domain script, specifying your inputs file and an output directory to store the generated artifacts:

./create-domain.sh \
-i create-domain-inputs.yaml \
-o /<path to output-directory>

The script will perform the following steps:

- Create a directory for the generated Kubernetes YAML files for this domain if it does not already exist. The path name is <path to output-directory>/weblogic-domains/<domainUID>. If the directory already exists, its contents must be removed before using this script.

- Create a Kubernetes job that will start up a utility Oracle WebCenter Portal container and run offline WLST scripts to create the domain on the shared storage.

- Run and wait for the job to finish.

- Create a Kubernetes domain YAML file, domain.yaml, in the “output” directory that was created above. This YAML file can be used to create the Kubernetes resource using the kubectl create -f or kubectl apply -f command:

kubectl apply -f ../<path to output-directory>/weblogic-domains/<domainUID>/domain.yaml

- Create a convenient utility script, delete-domain-job.yaml, to clean up the domain home created by the create script.
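Because the script expects the per-domain output directory to be absent or empty, a small pre-flight check can catch leftovers from an earlier run. The following is a sketch under stated assumptions: output_dir_is_clean is a hypothetical helper, not part of create-domain.sh, and the path uses the output directory and domainUID from this guide's sample values.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of create-domain.sh).
# Succeeds when the directory is absent or empty; fails when it has contents.
output_dir_is_clean() {
  local dir="$1"
  [ ! -d "$dir" ] && return 0
  # ls -A lists every entry except . and ..; empty output means an empty directory.
  [ -z "$(ls -A "$dir")" ]
}

# Example usage with assumed values for the output directory and domainUID.
target="output/weblogic-domains/wcp-domain"
if output_dir_is_clean "$target"; then
  echo "OK: $target is absent or empty"
else
  echo "Remove the contents of $target before running create-domain.sh"
fi
```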

Run the create-domain.sh sample script, pointing it at the create-domain-inputs.yaml inputs file and an output directory, as shown below:

cd ${WORKDIR}/create-wcp-domain/
sh create-domain.sh -i create-domain-inputs.yaml -o output

Sample Output:

Input parameters being used
export version="create-weblogic-sample-domain-inputs-v1"
export sslEnabled="false"
export adminPort="7001"
export adminServerSSLPort="7002"
export adminServerName="AdminServer"
export domainUID="wcp-domain"
export domainHome="/u01/oracle/user_projects/domains/$domainUID"
export serverStartPolicy="IF_NEEDED"
export clusterName="wcp-cluster"
export configuredManagedServerCount="5"
export initialManagedServerReplicas="2"
export managedServerNameBase="wcpserver"
export managedServerPort="8888"
export managedServerSSLPort="8788"
export portletServerPort="8889"
export portletServerSSLPort="8789"
export image="oracle/wcportal:14.1.2.0"
export imagePullPolicy="IfNotPresent"
export productionModeEnabled="true"
export weblogicCredentialsSecretName="wcp-domain-domain-credentials"
export includeServerOutInPodLog="true"
export logHome="/u01/oracle/user_projects/domains/logs/$domainUID"
export httpAccessLogInLogHome="true"
export t3ChannelPort="30012"
export exposeAdminT3Channel="false"
export adminNodePort="30701"
export exposeAdminNodePort="false"
export namespace="wcpns"
javaOptions=-Dweblogic.StdoutDebugEnabled=false
export persistentVolumeClaimName="wcp-domain-domain-pvc"
export domainPVMountPath="/u01/oracle/user_projects/domains"
export createDomainScriptsMountPath="/u01/weblogic"
export createDomainScriptName="create-domain-job.sh"
export createDomainFilesDir="wlst"
export rcuSchemaPrefix="WCP1"
export rcuDatabaseURL="oracle-db.wcpns.svc.cluster.local:1521/devpdb.k8s"
export rcuCredentialsSecret="wcp-domain-rcu-credentials"
export loadBalancerHostName="abc.def.com"
export loadBalancerPortNumber="30305"
export loadBalancerProtocol="http"
export loadBalancerType="traefik"
export unicastPort="50000"
Generating output/weblogic-domains/wcp-domain/create-domain-job.yaml
Generating output/weblogic-domains/wcp-domain/delete-domain-job.yaml
Generating output/weblogic-domains/wcp-domain/domain.yaml
Checking to see if the secret wcp-domain-domain-credentials exists in namespace wcpns
configmap/wcp-domain-create-wcp-infra-sample-domain-job-cm created
Checking the configmap wcp-domain-create-wcp-infra-sample-domain-job-cm was created
configmap/wcp-domain-create-wcp-infra-sample-domain-job-cm labeled
Checking if object type job with name wcp-domain-create-wcp-infra-sample-domain-job exists
Deleting wcp-domain-create-wcp-infra-sample-domain-job using output/weblogic-domains/wcp-domain/create-domain-job.yaml
job.batch "wcp-domain-create-wcp-infra-sample-domain-job" deleted
$loadBalancerType is NOT empty
Creating the domain by creating the job output/weblogic-domains/wcp-domain/create-domain-job.yaml
job.batch/wcp-domain-create-wcp-infra-sample-domain-job created
Waiting for the job to complete...
status on iteration 1 of 20
pod wcp-domain-create-wcp-infra-sample-domain-job-b5l6c status is Running
status on iteration 2 of 20
pod wcp-domain-create-wcp-infra-sample-domain-job-b5l6c status is Running
status on iteration 3 of 20
pod wcp-domain-create-wcp-infra-sample-domain-job-b5l6c status is Running
status on iteration 4 of 20
pod wcp-domain-create-wcp-infra-sample-domain-job-b5l6c status is Running
status on iteration 5 of 20
pod wcp-domain-create-wcp-infra-sample-domain-job-b5l6c status is Running
status on iteration 6 of 20
pod wcp-domain-create-wcp-infra-sample-domain-job-b5l6c status is Running
status on iteration 7 of 20
pod wcp-domain-create-wcp-infra-sample-domain-job-b5l6c status is Completed

Domain wcp-domain was created and will be started by the WebLogic Kubernetes Operator

The following files were generated:
output/weblogic-domains/wcp-domain/create-domain-inputs.yaml
output/weblogic-domains/wcp-domain/create-domain-job.yaml
output/weblogic-domains/wcp-domain/domain.yaml

Completed

To monitor the above domain creation logs:

$ kubectl get pods -n wcpns | grep wcp-domain-create

Sample Output:

wcp-domain-create-fmw-infra-sample-domain-job-8jr6k 1/1 Running 0 6s

$ kubectl get pods -n wcpns | grep wcp-domain-create | awk '{print $1}' | xargs kubectl -n wcpns logs -f

Sample Output:

The domain will be created using the script /u01/weblogic/create-domain-script.sh
Initializing WebLogic Scripting Tool (WLST) ...
Welcome to WebLogic Server Administration Scripting Shell
Type help() for help on available commands
=================================================================
WebCenter Portal Weblogic Operator Domain Creation Script 14.1.2.0
=================================================================
Creating Base Domain...
Creating Admin Server...
Creating cluster...
managed server name is wcpserver1
managed server name is wcpserver2
managed server name is wcpserver3
managed server name is wcpserver4
managed server name is wcpserver5
['wcpserver1', 'wcpserver2', 'wcpserver3', 'wcpserver4', 'wcpserver5']
Creating porlet cluster...
managed server name is wcportletserver1
managed server name is wcportletserver2
managed server name is wcportletserver3
['wcportletserver1', 'wcportletserver2', 'wcportletserver3', 'wcportletserver4', 'wcportletserver5']
Managed servers created...
Creating Node Manager...
Will create Base domain at /u01/oracle/user_projects/domains/wcp-domain
Writing base domain...
Base domain created at /u01/oracle/user_projects/domains/wcp-domain
Extending Domain...
Extending domain at /u01/oracle/user_projects/domains/wcp-domain
Database oracle-db.wcpns.svc.cluster.local:1521/devpdb.k8s
ExposeAdminT3Channel false with 100.111.157.155:30012
Applying JRF templates...
Applying WCPortal templates...
Extension Templates added...
WC_Portal Managed server deleted...
Configuring the Service Table DataSource...
fmwDatabase jdbc:oracle:thin:@oracle-db.wcpns.svc.cluster.local:1521/devpdb.k8s
Getting Database Defaults...
Targeting Server Groups...
Set CoherenceClusterSystemResource to defaultCoherenceCluster for server:wcpserver1
Set CoherenceClusterSystemResource to defaultCoherenceCluster for server:wcpserver2
Set CoherenceClusterSystemResource to defaultCoherenceCluster for server:wcpserver3
Set CoherenceClusterSystemResource to defaultCoherenceCluster for server:wcpserver4
Set CoherenceClusterSystemResource to defaultCoherenceCluster for server:wcpserver5
Set CoherenceClusterSystemResource to defaultCoherenceCluster for server:wcportletserver1
Set CoherenceClusterSystemResource to defaultCoherenceCluster for server:wcportletserver2
Set CoherenceClusterSystemResource to defaultCoherenceCluster for server:wcportletserver3
Targeting Cluster ...
Set CoherenceClusterSystemResource to defaultCoherenceCluster for cluster:wcp-cluster
Set WLS clusters as target of defaultCoherenceCluster:wcp-cluster
Set CoherenceClusterSystemResource to defaultCoherenceCluster for cluster:wcportlet-cluster
Set WLS clusters as target of defaultCoherenceCluster:wcportlet-cluster
Preparing to update domain...
Jan 12, 2021 10:30:09 AM oracle.security.jps.az.internal.runtime.policy.AbstractPolicyImpl initializeReadStore
INFO: Property for read store in parallel: oracle.security.jps.az.runtime.readstore.threads = null
Domain updated successfully
Domain Creation is done...
Successfully Completed
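When you check on the job pod repeatedly, it can help to reduce the kubectl get pods listing to just the pod status. The sketch below assumes the default column layout (NAME, READY, STATUS, RESTARTS, AGE) shown in the sample output; job_pod_status is a hypothetical helper name, not part of the sample scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get pods` output on stdin and prints the
# STATUS column of the first pod whose name starts with the given prefix.
job_pod_status() {
  awk -v prefix="$1" 'index($1, prefix) == 1 { print $3; exit }'
}

# Example usage against a live cluster (namespace and prefix as used in this guide):
# kubectl get pods -n wcpns | job_pod_status wcp-domain-create
```

A status of Completed indicates that the domain creation job has finished; any other value means the job is still running or has failed and its logs should be inspected.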

Initialize the WebCenter Portal Domain

To start the domain, apply the domain.yaml file generated above:

kubectl apply -f output/weblogic-domains/wcp-domain/domain.yaml

Sample Output:

domain.weblogic.oracle/wcp-domain created

Verify the WebCenter Portal Domain

Verify that the domain, server pods, and services are created and in the READY state:

kubectl get pods -n wcpns -w

Sample Output:

NAME READY STATUS RESTARTS AGE

wcp-domain-create-fmw-infra-sample-domain-job-8jr6k 0/1 Completed 0 15m

wcp-domain-adminserver 1/1 Running 0 8m9s

wcp-domain-create-fmw-infra-sample-domain-job-8jr6k 0/1 Completed 0 3h6m

wcp-domain-wcp-server1 0/1 Running 0 6m5s

wcp-domain-wcp-server2 0/1 Running 0 6m4s

wcp-domain-wcp-server2 1/1 Running 0 6m18s

wcp-domain-wcp-server1 1/1 Running 0 6m54s

kubectl get all -n wcpns

Sample Output:

NAME READY STATUS RESTARTS AGE

pod/wcp-domain-adminserver 1/1 Running 0 13m

pod/wcp-domain-create-fmw-infra-sample-domain-job-8jr6k 0/1 Completed 0 3h12m

pod/wcp-domain-wcp-server1 1/1 Running 0 11m

pod/wcp-domain-wcp-server2 1/1 Running 0 11m

pod/wcp-domain-wcportletserver1 1/1 Running 1 21h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/wcp-domain-adminserver ClusterIP None <none> 7001/TCP 13m

service/wcp-domain-cluster-wcp-cluster ClusterIP 10.98.145.173 <none> 8888/TCP 11m

service/wcp-domain-wcp-server1 ClusterIP None <none> 8888/TCP 11m

service/wcp-domain-wcp-server2 ClusterIP None <none> 8888/TCP 11m

service/wcp-domain-cluster-wcportlet-cluster ClusterIP 10.98.145.173 <none> 8889/TCP 11m

service/wcp-domain-wcportletserver1 ClusterIP None <none> 8889/TCP 11m

NAME COMPLETIONS DURATION AGE

job.batch/wcp-domain-create-fmw-infra-sample-domain-job 1/1 16m 3h12m

To see the Administration Server and Managed Server logs, check the pod logs:

$ kubectl logs -f wcp-domain-adminserver -n wcpns

$ kubectl logs -f wcp-domain-wcp-server1 -n wcpns

Verify the Pods

Use the following command to see the pods running the servers:

$ kubectl get pods -n NAMESPACE

Here is an example of the output of this command:

kubectl get pods -n wcpns

Sample Output:

NAME READY STATUS RESTARTS AGE

rcu 1/1 Running 1 14d

wcp-domain-adminserver 1/1 Running 0 16m

wcp-domain-create-fmw-infra-sample-domain-job-8jr6k 0/1 Completed 0 3h14m

wcp-domain-wcp-server1 1/1 Running 0 14m

wcp-domain-wcp-server2 1/1 Running 0 14m

wcp-domain-wcportletserver1 1/1 Running 1 14m

Verify the Services

Use the following command to see the services for the domain:

$ kubectl get services -n NAMESPACE

Here is an example of the output of this command:

kubectl get services -n wcpns

Sample Output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

wcp-domain-adminserver ClusterIP None <none> 7001/TCP 17m

wcp-domain-cluster-wcp-cluster ClusterIP 10.98.145.173 <none> 8888/TCP 14m

wcp-domain-wcp-server1 ClusterIP None <none> 8888/TCP 14m

wcp-domain-wcp-server2 ClusterIP None <none> 8888/TCP 14m

wcp-domain-cluster-wcportlet-cluster ClusterIP 10.98.145.173 <none> 8889/TCP 14m

wcp-domain-wcportletserver1 ClusterIP None <none> 8889/TCP 14m

Managing WebCenter Portal

To stop Managed Servers:

kubectl patch domain wcp-domain -n wcpns --type='json' -p='[{"op": "replace", "path": "/spec/clusters/0/replicas", "value": 0 }]'

To start all configured Managed Servers:

kubectl patch domain wcp-domain -n wcpns --type='json' -p='[{"op": "replace", "path": "/spec/clusters/0/replicas", "value": 3 }]'

kubectl get pods -n wcpns -w

Sample Output:

NAME READY STATUS RESTARTS AGE

wcp-domain-create-fmw-infra-sample-domain-job-8jr6k 0/1 Completed 0 15m

wcp-domain-adminserver 1/1 Running 0 8m9s

wcp-domain-create-fmw-infra-sample-domain-job-8jr6k 0/1 Completed 0 3h6m

wcp-domain-wcp-server1 0/1 Running 0 6m5s

wcp-domain-wcp-server2 0/1 Running 0 6m4s

wcp-domain-wcp-server2 1/1 Running 0 6m18s

wcp-domain-wcp-server1 1/1 Running 0 6m54s

Administration Guide

Explains how to utilize various utility tools and configurations to manage the WebCenter Portal domain.

Administer the Oracle WebCenter Portal domain in a Kubernetes environment.

Setting Up a Load Balancer

Set up various load balancers for the Oracle WebCenter Portal domain.

The WebLogic Kubernetes Operator supports ingress-based load balancers like Traefik and NGINX (kubernetes/ingress-nginx). It also works with the Apache web tier load balancer.

Traefik

Set up the Traefik ingress-based load balancer for the Oracle WebCenter Portal domain.

To load balance Oracle WebCenter Portal domain clusters, install the ingress-based Traefik load balancer (version 2.6.0 or later for production environments) and configure it for non-SSL, SSL termination, and end-to-end SSL access for the application URL. Follow these steps to configure Traefik as a load balancer for an Oracle WebCenter Portal domain in a Kubernetes cluster:

Non-SSL and SSL termination

Install the Traefik (ingress-based) load balancer

Use Helm to install the Traefik (ingress-based) load balancer. You can use the following values.yaml sample file and set kubernetes.namespaces as required.

cd ${WORKDIR}
kubectl create namespace traefik
helm repo add traefik https://helm.traefik.io/traefik --force-update

Sample output:

"traefik" has been added to your repositories

Install Traefik:

helm install traefik traefik/traefik \
--namespace traefik \
--values charts/traefik/values.yaml \
--set "kubernetes.namespaces={traefik}" \
--set "service.type=NodePort" --wait

Sample output:

LAST DEPLOYED: Sun Sep 13 21:32:00 2020
NAMESPACE: traefik
STATUS: deployed
REVISION: 1
TEST SUITE: None

Here is a sample values.yaml for deploying Traefik:

image:
  name: traefik
  pullPolicy: IfNotPresent
ingressRoute:
  dashboard:
    enabled: true
    # Additional ingressRoute annotations (e.g. for kubernetes.io/ingress.class)
    annotations: {}
    # Additional ingressRoute labels (e.g. for filtering IngressRoute by custom labels)
    labels: {}
providers:
  kubernetesCRD:
    enabled: true
  kubernetesIngress:
    enabled: true
    # IP used for Kubernetes Ingress endpoints
ports:
  traefik:
    port: 9000
    # The exposed port for this service
    exposedPort: 9000
    # The port protocol (TCP/UDP)
    protocol: TCP
  web:
    port: 8000
    exposedPort: 30305
    nodePort: 30305
    # The port protocol (TCP/UDP)
    protocol: TCP
    # Use nodeport if set. This is useful if you have configured Traefik in a
    # LoadBalancer
    # nodePort: 32080
    # Port Redirections
    # Added in 2.2, you can make permanent redirects via entrypoints.
    # https://docs.traefik.io/routing/entrypoints/#redirection
    # redirectTo: websecure
  websecure:
    port: 8443
    exposedPort: 30443
    # The port protocol (TCP/UDP)
    protocol: TCP
    nodePort: 30443

Verify the Traefik status and find the port number of the SSL and non-SSL services:

kubectl get all -n traefik

Sample output:

NAME READY STATUS RESTARTS AGE
pod/traefik-f9cf58697-29dlx 1/1 Running 0 35s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/traefik NodePort 10.100.113.37 <none> 9000:30070/TCP,30305:30305/TCP,30443:30443/TCP 35s

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/traefik 1/1 1 1 36s

NAME DESIRED CURRENT READY AGE
replicaset.apps/traefik-f9cf58697 1 1 1 36s

Access the Traefik dashboard through the URL http://$(hostname -f):30070, with the HTTP host traefik.example.com:

curl -H "host: $(hostname -f)" http://$(hostname -f):30070/dashboard/

Note: Make sure that you specify a fully qualified node name for $(hostname -f).
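The dashboard node port (30070 in the sample output) is not fixed by the sample values.yaml, so it can differ between installations. The following sketch extracts the node port for a given service port from the PORT(S) column of a NodePort service. The helper name node_port_for is hypothetical, and the input string is copied from the sample output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given the PORT(S) column of a NodePort service and a
# service port, print the node port mapped to that service port.
node_port_for() {
  local ports="$1" want="$2"
  # Split "9000:30070/TCP,30305:30305/TCP" into one mapping per line, then
  # split each mapping on ":" and "/" and match on the service port.
  printf '%s\n' "$ports" | tr ',' '\n' |
    awk -F'[:/]' -v p="$want" '$1 == p { print $2; exit }'
}

# Example with the PORT(S) value from the sample output: prints 30070.
node_port_for "9000:30070/TCP,30305:30305/TCP,30443:30443/TCP" 9000
```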

Configure Traefik to manage ingresses

Configure Traefik to manage ingresses created in this namespace. In the following sample, traefik is the Traefik namespace and wcpns is the namespace of the domain:

helm upgrade traefik traefik/traefik \
--reuse-values \
--namespace traefik \
--set "kubernetes.namespaces={traefik,wcpns}" \
--wait

Sample output:

Release "traefik" has been upgraded. Happy Helming!

NAME: traefik

LAST DEPLOYED: Tue Jan 12 04:33:15 2021

NAMESPACE: traefik

STATUS: deployed

REVISION: 2

TEST SUITE: None

Creating an Ingress for the Domain

To create an ingress for the domain within the domain namespace, use the sample Helm chart, which implements path-based routing for ingress. Sample values for the default configuration can be found in the file ${WORKDIR}/charts/ingress-per-domain/values.yaml.

By default, the type is set to TRAEFIK, and tls is configured as Non-SSL. You can override these values either by passing them through the command line or by editing the sample values.yaml file according to your configuration type (non-SSL or SSL).

NOTE: This is not an exhaustive list of rules. You can modify it to include any application URLs that need external access.

If necessary, you can update the ingress YAML file to define additional path rules in the spec.rules.host.http.paths section based on the domain application URLs that require external access. The template YAML file for the Traefik (ingress-based) load balancer is located at ${WORKDIR}/charts/ingress-per-domain/templates/traefik-ingress.yaml. You can add new path rules as demonstrated below.

- path: /NewPathRule
  backend:
    serviceName: 'Backend Service Name'
    servicePort: 'Backend Service Port'

Install ingress-per-domain using Helm for non-SSL configuration:

cd ${WORKDIR}
helm install wcp-traefik-ingress \
charts/ingress-per-domain \
--namespace wcpns \
--values charts/ingress-per-domain/values.yaml \
--set "traefik.hostname=$(hostname -f)"

Sample output:

NAME: wcp-traefik-ingress
LAST DEPLOYED: Mon Jul 20 11:44:13 2020
NAMESPACE: wcpns
STATUS: deployed
REVISION: 1
TEST SUITE: None

For secured access (SSL) to the Oracle WebCenter Portal application, create a certificate and generate a Kubernetes secret:

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /tmp/tls1.key -out /tmp/tls1.crt -subj "/CN=*"
kubectl -n wcpns create secret tls wcp-domain-tls-cert --key /tmp/tls1.key --cert /tmp/tls1.crt

Note: The value of CN is the host on which this ingress is to be deployed.

Create the Traefik TLSStore custom resource.

In case of SSL termination, Traefik should be configured to use the user-defined SSL certificate. If the user-defined SSL certificate is not configured, Traefik creates a default SSL certificate. To configure a user-defined SSL certificate for Traefik, use the TLSStore custom resource. The Kubernetes secret created with the SSL certificate should be referenced in the TLSStore object. Run the following command to create the TLSStore:

cat <<EOF | kubectl apply -f -
apiVersion: traefik.containo.us/v1alpha1
kind: TLSStore
metadata:
  name: default
  namespace: wcpns
spec:
  defaultCertificate:
    secretName: wcp-domain-tls-cert
EOF

Install ingress-per-domain using Helm for SSL configuration.

The Kubernetes secret name should be updated in the template file.

The template file also contains the following annotations:

traefik.ingress.kubernetes.io/router.entrypoints: websecure
traefik.ingress.kubernetes.io/router.tls: "true"
traefik.ingress.kubernetes.io/router.middlewares: wcpns-wls-proxy-ssl@kubernetescrd

The entry point for SSL access and the Middleware name should be updated in the annotation. The Middleware name should be in the form <namespace>-<middleware name>@kubernetescrd.

cd ${WORKDIR}
helm install wcp-traefik-ingress \
charts/ingress-per-domain \
--namespace wcpns \
--values charts/ingress-per-domain/values.yaml \
--set "traefik.hostname=$(hostname -f)" \
--set sslType=SSL

Sample output:

NAME: wcp-traefik-ingress
LAST DEPLOYED: Mon Jul 20 11:44:13 2020
NAMESPACE: wcpns
STATUS: deployed
REVISION: 1
TEST SUITE: None

For non-SSL access to the Oracle WebCenter Portal application, get the details of the services by the ingress:

kubectl describe ingress wcp-domain-traefik -n wcpns

Sample services supported by the above deployed ingress:

Name: wcp-domain-traefik
Namespace: wcpns
Address:
Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)
Rules:
  Host Path Backends
  ---- ---- --------
  www.example.com
    /webcenter wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
    /console wcp-domain-adminserver:7001 (10.244.0.51:7001)
    /rsscrawl wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
    /rest wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
    /webcenterhelp wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
    /em wcp-domain-adminserver:7001 (10.244.0.51:7001)
    /wsrp-tools wcp-domain-cluster-wcportlet-cluster:8889 (10.244.0.52:8889,10.244.0.53:8889)
Annotations:
  kubernetes.io/ingress.class: traefik
  meta.helm.sh/release-name: wcp-traefik-ingress
  meta.helm.sh/release-namespace: wcpns
Events: <none>

For SSL access to the Oracle WebCenter Portal application, get the details of the services by the above deployed ingress:

kubectl describe ingress wcp-domain-traefik -n wcpns

Sample services supported by the above deployed ingress:

Name: wcp-domain-traefik
Namespace: wcpns
Address:
Default backend: default-http-backend:80 (<error: endpoints "default-http-backend" not found>)
TLS:
  wcp-domain-tls-cert terminates www.example.com
Rules:
  Host Path Backends
  ---- ---- --------
  www.example.com
    /webcenter wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
    /console wcp-domain-adminserver:7001 (10.244.0.51:7001)
    /rsscrawl wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
    /rest wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
    /webcenterhelp wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
    /em wcp-domain-adminserver:7001 (10.244.0.51:7001)
    /wsrp-tools wcp-domain-cluster-wcportlet-cluster:8889 (10.244.0.52:8889,10.244.0.53:8889)
Annotations:
  kubernetes.io/ingress.class: traefik
  meta.helm.sh/release-name: wcp-traefik-ingress
  meta.helm.sh/release-namespace: wcpns
  traefik.ingress.kubernetes.io/router.entrypoints: websecure
  traefik.ingress.kubernetes.io/router.middlewares: wcpns-wls-proxy-ssl@kubernetescrd
  traefik.ingress.kubernetes.io/router.tls: true
Events: <none>

To confirm that the load balancer noticed the new ingress and is successfully routing to the domain server pods, you can send a request to the URL for the WebLogic ReadyApp framework, which should return an HTTP 200 status code, as follows:

curl -v http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER_PORT}/weblogic/ready

Sample output:

* Trying 149.87.129.203...
> GET http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER_PORT}/weblogic/ready HTTP/1.1
> User-Agent: curl/7.29.0
> Accept: */*
> Proxy-Connection: Keep-Alive
> host: $(hostname -f)
>
< HTTP/1.1 200 OK
< Date: Sat, 14 Mar 2020 08:35:03 GMT
< Vary: Accept-Encoding
< Content-Length: 0
< Proxy-Connection: Keep-Alive
<
* Connection #0 to host localhost left intact
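For scripted checks, the same readiness request can be reduced to a pass or fail result. The following is a minimal sketch, assuming plain HTTP access through the load balancer; ready_url and is_ready are hypothetical helper names, and the 10-second timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking the ReadyApp endpoint described above.

# Build the readiness URL from a host name and port.
ready_url() {
  printf 'http://%s:%s/weblogic/ready\n' "$1" "$2"
}

# Return success only when the endpoint answers with HTTP 200.
# curl's --write-out '%{http_code}' prints the response status code.
is_ready() {
  local code
  code="$(curl --silent --output /dev/null --write-out '%{http_code}' \
    --max-time 10 "$(ready_url "$1" "$2")")"
  [ "$code" = "200" ]
}

# Example usage (set LOADBALANCER_HOSTNAME and LOADBALANCER_PORT for your environment):
# is_ready "${LOADBALANCER_HOSTNAME}" "${LOADBALANCER_PORT}" && echo "ready"
```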

Verify domain application URL access

For non-SSL configuration

After setting up the Traefik (ingress-based) load balancer, verify that the domain application URLs are accessible through the non-SSL load balancer port 30305 for HTTP access. The sample URLs for Oracle WebCenter Portal domain are:

http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-Non-SSLPORT}/webcenter

http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-Non-SSLPORT}/console

http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-Non-SSLPORT}/em

http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-Non-SSLPORT}/rsscrawl

http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-Non-SSLPORT}/rest

http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-Non-SSLPORT}/webcenterhelp

http://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-Non-SSLPORT}/wsrp-tools

For SSL configuration

After setting up the Traefik (ingress-based) load balancer, verify that the domain applications are accessible through the SSL load balancer port 30443 for HTTPS access. The sample URLs for Oracle WebCenter Portal domain are:

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/webcenter

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/console

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/em

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/rsscrawl

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/rest

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/webcenterhelp

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/wsrp-tools

Uninstall the Traefik ingress

Uninstall and delete the ingress deployment:

helm delete wcp-traefik-ingress -n wcpns

End-to-end SSL configuration

Install the Traefik load balancer for end-to-end SSL

Use Helm to install the Traefik (ingress-based) load balancer. You can use the values.yaml sample file and set kubernetes.namespaces as required.

cd ${WORKDIR}
kubectl create namespace traefik
helm repo add traefik https://containous.github.io/traefik-helm-chart

Sample output:

"traefik" has been added to your repositories

Install Traefik:

helm install traefik traefik/traefik \
--namespace traefik \
--values charts/traefik/values.yaml \
--set "kubernetes.namespaces={traefik}" \
--set "service.type=NodePort" --wait

Sample output:

LAST DEPLOYED: Sun Sep 13 21:32:00 2020
NAMESPACE: traefik
STATUS: deployed
REVISION: 1
TEST SUITE: None

Verify the Traefik operator status and find the port number of the SSL and non-SSL services:

kubectl get all -n traefik

Sample output:

NAME READY STATUS RESTARTS AGE
pod/traefik-845f5d6dbb-swb96 1/1 Running 0 32s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/traefik NodePort 10.99.52.249 <none> 9000:31288/TCP,30305:30305/TCP,30443:30443/TCP 32s

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/traefik 1/1 1 1 33s

NAME DESIRED CURRENT READY AGE
replicaset.apps/traefik-845f5d6dbb 1 1 1 33s

Access the Traefik dashboard through the URL http://$(hostname -f):31288, with the HTTP host traefik.example.com:

curl -H "host: $(hostname -f)" http://$(hostname -f):31288/dashboard/

Note: Make sure that you specify a fully qualified node name for $(hostname -f).

Configure Traefik to manage the domain

Configure Traefik to manage the domain application service created in this namespace. In the following sample, traefik is the Traefik namespace and wcpns is the namespace of the domain:

helm upgrade traefik traefik/traefik --namespace traefik --reuse-values \
--set "kubernetes.namespaces={traefik,wcpns}"

Sample output:

Release "traefik" has been upgraded. Happy Helming!

NAME: traefik

LAST DEPLOYED: Sun Sep 13 21:32:12 2020

NAMESPACE: traefik

STATUS: deployed

REVISION: 2

TEST SUITE: None

Create IngressRouteTCP

For each backend service, create a separate ingress, because Traefik does not support multiple paths or rules with the ssl-passthrough annotation. For example, wcp-domain-adminserver and wcp-domain-cluster-wcp-cluster each need their own ingress.

To enable SSL passthrough in Traefik, you can configure a TCP router. A sample YAML for IngressRouteTCP is available at ${WORKDIR}/charts/ingress-per-domain/tls/traefik-tls.yaml. The following should be updated in traefik-tls.yaml:

- The service name and the SSL port should be updated in the services.
- The load balancer host name should be updated in the HostSNI rule.

Sample traefik-tls.yaml:

apiVersion: traefik.containo.us/v1alpha1
kind: IngressRouteTCP
metadata:
  name: wcp-domain-cluster-routetcp
  namespace: wcpns
spec:
  entryPoints:
    - websecure
  routes:
  - match: HostSNI(`${LOADBALANCER_HOSTNAME}`)
    services:
    - name: wcp-domain-cluster-wcp-cluster
      port: 8888
      weight: 3
      TerminationDelay: 400
  tls:
    passthrough: true

Create the IngressRouteTCP:

kubectl apply -f traefik-tls.yaml
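Because each backend service needs its own route, it may be convenient to generate one manifest per service instead of editing traefik-tls.yaml by hand. The sketch below is an illustration only: render_route_tcp is a hypothetical helper, it emits a subset of the fields in the sample traefik-tls.yaml, and the route name, host name, and port in the usage example are assumed values that you must replace with your own.

```shell
#!/usr/bin/env bash
# Hypothetical generator: prints one IngressRouteTCP manifest per call, using a
# subset of the fields from the sample traefik-tls.yaml.
# Arguments: route name, backend service name, backend SSL port, host name.
render_route_tcp() {
  local route_name="$1" service="$2" port="$3" host="$4"
  # Backticks are escaped so the heredoc emits them literally.
  cat <<EOF
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRouteTCP
metadata:
  name: ${route_name}
  namespace: wcpns
spec:
  entryPoints:
    - websecure
  routes:
  - match: HostSNI(\`${host}\`)
    services:
    - name: ${service}
      port: ${port}
  tls:
    passthrough: true
EOF
}

# Example usage (assumed values; 7002 is the adminServerSSLPort from the sample inputs):
# render_route_tcp wcp-domain-admin-routetcp wcp-domain-adminserver 7002 admin.example.com | kubectl apply -f -
```

With SSL passthrough, Traefik selects a route from the SNI host name alone, so each generated route would likely need a distinct host name in its HostSNI rule.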

Verify end-to-end SSL access

Verify the access to application URLs exposed through the configured service. The configured WCP cluster service enables you to access the following WCP domain URLs:

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/webcenter

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/rsscrawl

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/rest

https://${LOADBALANCER_HOSTNAME}:${LOADBALANCER-SSLPORT}/webcenterhelp

Uninstall Traefik

helm delete traefik -n traefik

cd ${WORKDIR}/charts/ingress-per-domain/tls

kubectl delete -f traefik-tls.yaml

NGINX

Configure the ingress-based NGINX load balancer for an Oracle WebCenter Portal domain.

To load balance Oracle WebCenter Portal domain clusters, you can install the ingress-based NGINX load balancer and configure NGINX for non-SSL, SSL termination, and end-to-end SSL access of the application URL. Follow these steps to set up NGINX as a load balancer for an Oracle WebCenter Portal domain in a Kubernetes cluster:

See the official installation document for prerequisites.

Non-SSL and SSL termination

To get repository information, enter the following Helm commands:

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

Install the NGINX load balancer

Deploy the ingress-nginx controller by using Helm on the domain namespace:

helm install nginx-ingress ingress-nginx/ingress-nginx -n wcpns \
--set controller.service.type=NodePort \
--set controller.admissionWebhooks.enabled=false

Sample Output

NAME: nginx-ingress

LAST DEPLOYED: Tue Jan 12 21:13:54 2021

NAMESPACE: wcpns

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

Get the application URL by running these commands:

export HTTP_NODE_PORT=30305

export HTTPS_NODE_PORT=$(kubectl --namespace wcpns get services -o jsonpath="{.spec.ports[1].nodePort}" nginx-ingress-ingress-nginx-controller)

export NODE_IP=$(kubectl --namespace wcpns get nodes -o jsonpath="{.items[0].status.addresses[1].address}")

echo "Visit http://$NODE_IP:$HTTP_NODE_PORT to access your application via HTTP."

echo "Visit https://$NODE_IP:$HTTPS_NODE_PORT to access your application via HTTPS."

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: example
  namespace: foo
spec:
  rules:
    - host: www.example.com
      http:
        paths:
          - backend:
              serviceName: exampleService
              servicePort: 80
            path: /
  # This section is only required if TLS is to be enabled for the Ingress
  tls:
    - hosts:
        - www.example.com
      secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tlsCheck the status of the deployed ingress controller:

kubectl --namespace wcpns get services | grep ingress-nginx-controller

Sample output:

nginx-ingress-ingress-nginx-controller NodePort 10.101.123.106 <none> 80:30305/TCP,443:31856/TCP 2m12s
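
The PORT(S) column in this listing pairs each service port with its NodePort (80:30305 for HTTP and 443:31856 for HTTPS in the sample). As a minimal sketch, assuming the column layout shown above, a small shell helper can pull out the NodePort for a given service port. The function name nodeport_for is hypothetical and is not part of the sample scripts.

```shell
#!/bin/sh
# nodeport_for PORT: read `kubectl get services` lines on stdin and print the
# NodePort mapped to the given service port. Hypothetical helper.
nodeport_for() {
  awk -v port="$1" '{
    # Field 5 is PORT(S), for example 80:30305/TCP,443:31856/TCP
    n = split($5, maps, ",")
    for (i = 1; i <= n; i++) {
      split(maps[i], parts, "[:/]")   # service port, node port, protocol
      if (parts[1] == port) print parts[2]
    }
  }'
}

# Sample line copied from the output above.
line='nginx-ingress-ingress-nginx-controller NodePort 10.101.123.106 <none> 80:30305/TCP,443:31856/TCP 2m12s'
printf '%s\n' "$line" | nodeport_for 80    # prints 30305
printf '%s\n' "$line" | nodeport_for 443   # prints 31856

# Against a running cluster, the same helper could be fed directly:
#   kubectl --namespace wcpns get services | grep ingress-nginx-controller | nodeport_for 443
```

The printed value is what the later verification steps refer to as LOADBALANCER-NODEPORT (HTTP) or LOADBALANCER-SSLPORT (HTTPS).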

Configure NGINX to manage ingresses

- Create an ingress for the domain in the domain namespace by using the sample Helm chart. Path-based routing is used for the ingress. Sample values for the default configuration are shown in the file ${WORKDIR}/charts/ingress-per-domain/values.yaml. By default, type is TRAEFIK and tls is Non-SSL. You can override these values by passing them on the command line or by editing them in the sample values.yaml file.

Note: This is not an exhaustive list of rules. You can enhance it based on the application URLs that need to be accessed externally.

If needed, you can update the ingress YAML file to define more path rules (in the section spec.rules.host.http.paths) based on the domain application URLs that need to be accessed. The template YAML file for the NGINX load balancer is located at ${WORKDIR}/charts/ingress-per-domain/templates/nginx-ingress.yaml.

You can add new path rules as shown below:

- path: /NewPathRule
  backend:
    serviceName: 'Backend Service Name'
    servicePort: 'Backend Service Port'

Install ingress-per-domain using Helm for non-SSL configuration:

cd ${WORKDIR}
helm install wcp-domain-nginx charts/ingress-per-domain \
  --namespace wcpns \
  --values charts/ingress-per-domain/values.yaml \
  --set "nginx.hostname=$(hostname -f)" \
  --set type=NGINX

Sample output:

NAME: wcp-domain-nginx

LAST DEPLOYED: Fri Jul 24 09:34:03 2020

NAMESPACE: wcpns

STATUS: deployed

REVISION: 1

TEST SUITE: None

For secured access (SSL) to the Oracle WebCenter Portal application, create a certificate and generate a Kubernetes secret:

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /tmp/tls1.key -out /tmp/tls1.crt -subj "/CN=*"
kubectl -n wcpns create secret tls wcp-domain-tls-cert --key /tmp/tls1.key --cert /tmp/tls1.crt

Install ingress-per-domain using Helm for SSL configuration:

cd ${WORKDIR}
helm install wcp-domain-nginx charts/ingress-per-domain \
  --namespace wcpns \
  --values charts/ingress-per-domain/values.yaml \
  --set "nginx.hostname=$(hostname -f)" \
  --set type=NGINX --set sslType=SSL

For non-SSL access to the Oracle WebCenter Portal application, get the details of the services exposed by the ingress:

kubectl describe ingress wcp-domain-nginx -n wcpns

Sample output:

Name:             wcp-domain-nginx
Namespace:        wcpns
Address:          10.101.123.106
Default backend:  default-http-backend:80 (<error: endpoints "default-http-backend" not found>)
Rules:
  Host        Path  Backends
  ----        ----  --------
  *
              /webcenter       wcp-domain-cluster-wcp-cluster:8888 (10.244.0.52:8888,10.244.0.53:8888)
              /console         wcp-domain-adminserver:7001 (10.244.0.51:7001)
              /rsscrawl        wcp-domain-cluster-wcp-cluster:8888 (10.244.0.53:8888)
              /rest            wcp-domain-cluster-wcp-cluster:8888 (10.244.0.53:8888)
              /webcenterhelp   wcp-domain-cluster-wcp-cluster:8888 (10.244.0.53:8888)
              /wsrp-tools      wcp-domain-cluster-wcportlet-cluster:8889 (10.244.0.53:8889)
              /em              wcp-domain-adminserver:7001 (10.244.0.51:7001)
Annotations:  meta.helm.sh/release-name: wcp-domain-nginx
              meta.helm.sh/release-namespace: wcpns
              nginx.com/sticky-cookie-services: serviceName=wcp-domain-cluster-wcp-cluster srv_id expires=1h path=/;
              nginx.ingress.kubernetes.io/proxy-connect-timeout: 1800
              nginx.ingress.kubernetes.io/proxy-read-timeout: 1800
              nginx.ingress.kubernetes.io/proxy-send-timeout: 1800
Events:
  Type    Reason  Age                From                      Message
  ----    ------  ----               ----                      -------
  Normal  Sync    48m (x2 over 48m)  nginx-ingress-controller  Scheduled for sync

For SSL access to the Oracle WebCenter Portal application, get the details of the services exposed by the ingress deployed above:

kubectl describe ingress wcp-domain-nginx -n wcpns

Sample output:

Name:             wcp-domain-nginx
Namespace:        wcpns
Address:          10.106.220.140
Default backend:  default-http-backend:80 (<error: endpoints "default-http-backend" not found>)
TLS:
  wcp-domain-tls-cert terminates mydomain.com
Rules:
  Host        Path  Backends
  ----        ----  --------
  *
              /webcenter       wcp-domain-cluster-wcp-cluster:8888 (10.244.0.43:8888,10.244.0.44:8888)
              /console         wcp-domain-adminserver:7001 (10.244.0.42:7001)
              /rsscrawl        wcp-domain-cluster-wcp-cluster:8888 (10.244.0.43:8888,10.244.0.44:8888)
              /webcenterhelp   wcp-domain-cluster-wcp-cluster:8888 (10.244.0.43:8888,10.244.0.44:8888)
              /rest            wcp-domain-cluster-wcp-cluster:8888 (10.244.0.43:8888,10.244.0.44:8888)
              /em              wcp-domain-adminserver:7001 (10.244.0.42:7001)
              /wsrp-tools      wcp-domain-cluster-wcportlet-cluster:8889 (10.244.0.43:8889,10.244.0.44:8889)
Annotations:  kubernetes.io/ingress.class: nginx
              meta.helm.sh/release-name: wcp-domain-nginx
              meta.helm.sh/release-namespace: wcpns
              nginx.ingress.kubernetes.io/affinity: cookie
              nginx.ingress.kubernetes.io/affinity-mode: persistent
              nginx.ingress.kubernetes.io/configuration-snippet:
                more_set_input_headers "X-Forwarded-Proto: https";
                more_set_input_headers "WL-Proxy-SSL: true";
              nginx.ingress.kubernetes.io/ingress.allow-http: false
              nginx.ingress.kubernetes.io/proxy-connect-timeout: 1800
              nginx.ingress.kubernetes.io/proxy-read-timeout: 1800
              nginx.ingress.kubernetes.io/proxy-send-timeout: 1800
              nginx.ingress.kubernetes.io/session-cookie-expires: 172800
              nginx.ingress.kubernetes.io/session-cookie-max-age: 172800
              nginx.ingress.kubernetes.io/session-cookie-name: stickyid
              nginx.ingress.kubernetes.io/ssl-redirect: false
Events:       <none>
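
In both listings, each path rule is followed by its backend service and, in parentheses, the pod endpoints behind that service. A path whose backend shows no pod addresses is unlikely to serve traffic. The following is a rough sketch of how that check could be scripted, assuming the `kubectl describe ingress` layout shown above (the layout can vary between kubectl versions). The function name paths_without_endpoints is hypothetical.

```shell
#!/bin/sh
# paths_without_endpoints: read `kubectl describe ingress` output on stdin and
# print every path whose backend lists no pod addresses. Hypothetical helper.
paths_without_endpoints() {
  awk '{
    for (i = 1; i < NF; i++) {
      # A rule is a path starting with a slash, followed by service:port.
      if ($i ~ /^\// && $(i + 1) ~ /^[A-Za-z0-9.-]+:[0-9]+$/) {
        # Healthy backends list pod addresses such as (10.244.0.43:8888,...).
        if ($(i + 2) !~ /^\([0-9]/) print $i
      }
    }
  }'
}

# Illustrative input: the second backend is made up to show a failing case.
sample='  *
     /webcenter   wcp-domain-cluster-wcp-cluster:8888 (10.244.0.43:8888,10.244.0.44:8888)
     /em          wcp-domain-adminserver:7001 (<none>)'
printf '%s\n' "$sample" | paths_without_endpoints   # prints /em

# Against a running cluster:
#   kubectl describe ingress wcp-domain-nginx -n wcpns | paths_without_endpoints
```

An empty result means every listed path has at least one endpoint behind it.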

Verify non-SSL and SSL termination access

Verify that the Oracle WebCenter Portal domain application URLs are accessible through the NGINX NodePort (LOADBALANCER-NODEPORT), which is 30305 in the sample output above:

http://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-NODEPORT}/console

http://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-NODEPORT}/em

http://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-NODEPORT}/webcenter

http://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-NODEPORT}/rsscrawl

http://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-NODEPORT}/rest

http://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-NODEPORT}/webcenterhelp

http://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-NODEPORT}/wsrp-tools

Uninstall the ingress

Uninstall and delete the ingress-nginx deployment:

helm delete wcp-domain-nginx -n wcpns

helm delete nginx-ingress -n wcpns

End-to-end SSL configuration

Install the NGINX load balancer for end-to-end SSL

For secured access (SSL) to the Oracle WebCenter Portal application, create a certificate and generate secrets:

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout /tmp/tls1.key -out /tmp/tls1.crt -subj "/CN=domain1.org"
kubectl -n wcpns create secret tls wcp-domain-tls-cert --key /tmp/tls1.key --cert /tmp/tls1.crt

Note: The value of CN is the host on which this ingress is to be deployed.

Deploy the ingress-nginx controller by using Helm on the domain namespace:

helm install nginx-ingress -n wcpns \
  --set controller.extraArgs.default-ssl-certificate=wcpns/wcp-domain-tls-cert \
  --set controller.service.type=NodePort \
  --set controller.admissionWebhooks.enabled=false \
  --set controller.extraArgs.enable-ssl-passthrough=true \
  ingress-nginx/ingress-nginx

Sample output:

NAME: nginx-ingress
LAST DEPLOYED: Tue Sep 15 08:40:47 2020
NAMESPACE: wcpns
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
The ingress-nginx controller has been installed.
Get the application URL by running these commands:
  export HTTP_NODE_PORT=$(kubectl --namespace wcpns get services -o jsonpath="{.spec.ports[0].nodePort}" nginx-ingress-ingress-nginx-controller)
  export HTTPS_NODE_PORT=$(kubectl --namespace wcpns get services -o jsonpath="{.spec.ports[1].nodePort}" nginx-ingress-ingress-nginx-controller)
  export NODE_IP=$(kubectl --namespace wcpns get nodes -o jsonpath="{.items[0].status.addresses[1].address}")

  echo "Visit http://$NODE_IP:$HTTP_NODE_PORT to access your application via HTTP."
  echo "Visit https://$NODE_IP:$HTTPS_NODE_PORT to access your application via HTTPS."

An example Ingress that makes use of the controller:

  apiVersion: networking.k8s.io/v1beta1
  kind: Ingress
  metadata:
    annotations:
      kubernetes.io/ingress.class: nginx
    name: example
    namespace: foo
  spec:
    rules:
      - host: www.example.com
        http:
          paths:
            - backend:
                serviceName: exampleService
                servicePort: 80
              path: /
    # This section is only required if TLS is to be enabled for the Ingress
    tls:
      - hosts:
          - www.example.com
        secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

  apiVersion: v1
  kind: Secret
  metadata:
    name: example-tls
    namespace: foo
  data:
    tls.crt: <base64 encoded cert>
    tls.key: <base64 encoded key>
  type: kubernetes.io/tls

Check the status of the deployed ingress controller:

kubectl --namespace wcpns get services | grep ingress-nginx-controller

Sample output:

nginx-ingress-ingress-nginx-controller NodePort 10.96.177.215 <none> 80:32748/TCP,443:31940/TCP 23s

Deploy tls to access services

Deploy tls to securely access the services. Only one application can be configured with ssl-passthrough. A sample tls file for NGINX is shown below for the service wcp-domain-cluster-wcp-cluster and port 8889. All the applications running on port 8889 can be securely accessed through this ingress.

For each backend service, create different ingresses, as NGINX does not support multiple paths or rules with the annotation ssl-passthrough. For example, for wcp-domain-adminserver and wcp-domain-cluster-wcp-cluster, different ingresses must be created.

As ssl-passthrough in NGINX works on the clusterIP of the backing service instead of individual endpoints, you must expose wcp-domain-cluster-wcp-cluster created by the operator with clusterIP.

For example:

Get the name of the wcp-domain cluster service:

kubectl get svc -n wcpns | grep wcp-domain-cluster-wcp-cluster

Sample output:

wcp-domain-cluster-wcp-cluster ClusterIP 10.102.128.124 <none> 8888/TCP,8889/TCP 62m
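
The TYPE and CLUSTER-IP columns of this output show whether the service meets the clusterIP requirement described above. A headless service would show None in the CLUSTER-IP column. As a minimal sketch, assuming the standard `kubectl get svc` column order, the check could be scripted as follows. The function name has_cluster_ip is hypothetical.

```shell
#!/bin/sh
# has_cluster_ip LINE: succeed when a `kubectl get svc` line describes a
# ClusterIP service with an assigned address. Hypothetical helper.
has_cluster_ip() {
  printf '%s\n' "$1" |
    awk '{ ok = ($2 == "ClusterIP" && $3 != "None") } END { exit !ok }'
}

# Sample line copied from the output above.
line='wcp-domain-cluster-wcp-cluster ClusterIP 10.102.128.124 <none> 8888/TCP,8889/TCP 62m'
if has_cluster_ip "$line"; then
  echo "service has a clusterIP"
else
  echo "service has no clusterIP"
fi
```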

Deploy the secured ingress:

cd ${WORKDIR}/charts/ingress-per-domain/tls
kubectl create -f nginx-tls.yaml

Note: The default nginx-tls.yaml contains the backend for the WebCenter Portal service with domainUID wcp-domain. You need to create similar tls configuration YAML files separately for each backend service.

Content of the file nginx-tls.yaml:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: wcpns-ingress
  namespace: wcpns
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/ssl-passthrough: "true"
spec:
  tls:
  - hosts:
    - domain1.org
    secretName: wcp-domain-tls-cert
  rules:
  - host: domain1.org
    http:
      paths:
      - path:
        backend:
          serviceName: wcp-domain-cluster-wcp-cluster
          servicePort: 8889

Note: Host is the server on which this ingress is deployed.
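
Because a separate tls ingress is needed for each backend service, generating the files from one template can reduce copy-and-paste errors. The following is only a sketch that mirrors the structure of the sample nginx-tls.yaml. The function render_tls_ingress, the ingress name wcpns-admin-ingress, the host admin.domain1.org, and the port value are illustrative assumptions, not part of the sample charts.

```shell
#!/bin/sh
# render_tls_ingress NAME SERVICE PORT HOST
# Print an ssl-passthrough Ingress modeled on the sample nginx-tls.yaml.
# Hypothetical helper; namespace and secret name are copied from the sample.
render_tls_ingress() {
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: $1
  namespace: wcpns
  annotations:
    kubernetes.io/ingress.class: nginx
    nginx.ingress.kubernetes.io/ssl-passthrough: "true"
spec:
  tls:
  - hosts:
    - $4
    secretName: wcp-domain-tls-cert
  rules:
  - host: $4
    http:
      paths:
      - path:
        backend:
          serviceName: $2
          servicePort: $3
EOF
}

# Assumption: 7002 is WebLogic's conventional default SSL port; use the SSL
# port actually configured for the Administration Server in your domain.
ADMIN_SSL_PORT="${ADMIN_SSL_PORT:-7002}"

# Print a second ingress for the Administration Server (names are illustrative).
render_tls_ingress wcpns-admin-ingress wcp-domain-adminserver "$ADMIN_SSL_PORT" admin.domain1.org

# To apply it directly instead of printing it:
#   render_tls_ingress ... | kubectl create -f -
```

Two caveats apply to this sketch. Passthrough routing is decided from the TLS host name rather than the URL path, so each generated ingress most likely needs its own host. Also, the extensions/v1beta1 Ingress API used by the sample is not served by Kubernetes 1.22 and later, where networking.k8s.io/v1 uses a different backend schema (service.name and service.port.number), so the template may need adapting to your cluster version.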

Check the services supported by the ingress:

kubectl describe ingress wcpns-ingress -n wcpns
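
Once the ingress is deployed, the application paths can be checked in a loop rather than one at a time in a browser. The following is a minimal sketch, assuming curl is available on the client. The function names wcp_urls and probe_url, and the host name lb.example.com, are placeholders introduced for illustration.

```shell
#!/bin/sh
# wcp_urls HOST PORT: print one HTTPS URL per application path.
wcp_urls() {
  for path in webcenter rsscrawl webcenterhelp rest wsrp-tools; do
    printf 'https://%s:%s/%s\n' "$1" "$2" "$path"
  done
}

# probe_url URL: print the HTTP status code returned for URL.
# -k skips certificate verification, which suits the self-signed certificate
# created earlier but should not be relied on in production.
probe_url() {
  curl -k -s -o /dev/null -w '%{http_code}' "$1"
}

# List the URLs; the host name and port are placeholders.
wcp_urls lb.example.com 30233

# To probe them against a running cluster:
#   wcp_urls "$HOST" "$SSLPORT" | while read -r url; do
#     echo "$(probe_url "$url") $url"
#   done
```

A redirect or 200 status for each URL suggests the route is working; the exact codes depend on the application and its login flow.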

Verify end-to-end SSL access

Verify that the Oracle WebCenter Portal domain application URLs are accessible through the NGINX HTTPS NodePort (LOADBALANCER-SSLPORT), for example 30233:

https://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-SSLPORT}/webcenter

https://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-SSLPORT}/rsscrawl

https://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-SSLPORT}/webcenterhelp

https://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-SSLPORT}/rest

https://${LOADBALANCER-HOSTNAME}:${LOADBALANCER-SSLPORT}/wsrp-tools

Uninstall ingress-nginx tls

cd ${WORKDIR}/charts/ingress-per-domain/tls

kubectl delete -f nginx-tls.yaml

helm delete nginx-ingress -n wcpns

Apache Webtier

Configure the Apache webtier load balancer for an Oracle WebCenter Portal domain.

To load balance Oracle WebCenter Portal domain clusters, you can install Apache webtier and configure it for both non-SSL and SSL termination access for the application URLs. Follow these steps to set up Apache webtier as a load balancer for an Oracle WebCenter Portal domain in a Kubernetes cluster:

- Build the Apache webtier image

- Create the Apache plugin configuration file

- Prepare the certificate and private key

- Install the Apache webtier Helm chart

- Verify domain application URL access

- Uninstall Apache webtier

Build the Apache webtier image

To build the Apache webtier Docker image, refer to the sample.

Create the Apache plugin configuration file

The configuration file named custom_mod_wl_apache.conf should have all the URL routing rules for the Oracle WebCenter Portal applications deployed in the domain that need to be accessible externally. Update this file with values based on your environment. The file content is similar to the sample below.

Sample content of the configuration file custom_mod_wl_apache.conf for the wcp-domain domain:

cat ${WORKDIR}/charts/apache-samples/custom-sample/custom_mod_wl_apache.conf

Sample output: