18 Apache Kafka Adapter

The Apache Kafka Adapter enables you to create an integration that connects to the Apache Kafka distributed publish-subscribe messaging system, so that you can publish messages to and consume messages from a Kafka topic.

Introduction to Apache Kafka Adapter

The Apache Kafka Adapter provides the following benefits:

- Establishes a connection to the Apache Kafka messaging system to enable messages to be published and consumed.

- Consumes messages from a Kafka topic and produces messages to a Kafka topic in the invoke (outbound) direction.

- Enables you to browse the available metadata using the Adapter Endpoint Configuration Wizard (that is, the topics and partitions to which messages are published and consumed).

- Supports a consumer group.

- Supports headers.

- Supports the following message structures:

  - Avro schema

  - Sample JSON

  - XML schema (XSD)

  - Sample XML

- Supports the following security policies:

  - Simple Authentication and Security Layer (SASL) Plain

  - SASL Plain over SSL

  - TLS

  - Mutual TLS

- Supports direct connectivity to an Apache Kafka messaging system over SSL.

- Supports optionally configuring the Kafka producer to be transactional. This enables an application to send messages to multiple partitions atomically.

See https://kafka.apache.org/.
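The transactional producer option in the list above makes a group of writes to several partitions all-or-nothing: consumers reading with `isolation.level=read_committed` see either every record of a committed transaction or none of an aborted one. In the Kafka Java client this requires a `transactional.id` on the producer and the `beginTransaction`, `commitTransaction`, and `abortTransaction` methods of `KafkaProducer`. The sketch below is a hypothetical in-memory model of that visibility rule, not the adapter's or Kafka's API; the class name and records are illustrative, and it simplifies Kafka, which actually writes aborted records to the log and filters them out for `read_committed` readers.

```python
from collections import defaultdict


class ToyTransactionalProducer:
    """Hypothetical in-memory model of a transactional Kafka producer.

    Records sent inside a transaction stay invisible to read_committed readers
    until commit; an abort discards them from every partition at once.
    """

    def __init__(self):
        self.committed = defaultdict(list)  # partition -> visible records
        self._pending = None                # (partition, record) pairs while open

    def _require_open(self):
        if self._pending is None:
            raise RuntimeError("no transaction in progress")

    def begin_transaction(self):  # cf. KafkaProducer.beginTransaction()
        if self._pending is not None:
            raise RuntimeError("transaction already in progress")
        self._pending = []

    def send(self, partition, record):  # cf. KafkaProducer.send(...)
        self._require_open()
        self._pending.append((partition, record))

    def commit_transaction(self):  # cf. KafkaProducer.commitTransaction()
        self._require_open()
        # Every pending record becomes visible together, on all partitions.
        for partition, record in self._pending:
            self.committed[partition].append(record)
        self._pending = None

    def abort_transaction(self):  # cf. KafkaProducer.abortTransaction()
        self._require_open()
        self._pending = None  # nothing from this transaction becomes visible

    def read_committed(self, partition):
        return list(self.committed[partition])


producer = ToyTransactionalProducer()
producer.begin_transaction()
producer.send(0, "order-1")
producer.send(1, "invoice-1")
producer.abort_transaction()      # simulate a failure: neither record is kept
producer.begin_transaction()
producer.send(0, "order-2")
producer.send(1, "invoice-2")
producer.commit_transaction()
print(producer.read_committed(0), producer.read_committed(1))  # ['order-2'] ['invoice-2']
```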

The Apache Kafka Adapter is one of many predefined adapters included with the Oracle SOA Suite.

Workflow to Configure the Apache Kafka Adapter

Follow this workflow to configure the Apache Kafka Adapter.

This table lists the workflow steps for both adapter tasks and overall SOA composite tasks and provides links to instructions for each step.

| Step | Description | More Information |
|---|---|---|
| 1 | Create a SOA application in Oracle JDeveloper with a BPEL process service component. | See Getting Started with Developing SOA Composite Applications and Getting Started with Oracle BPEL Process Manager. |
| 2 | Add and configure the Apache Kafka Adapter. | Drag and drop the Apache Kafka Adapter from the Components window of Oracle JDeveloper. The Apache Kafka Adapter Welcome Screen appears. See Configure the Apache Kafka Adapter for more information. The Kafka server must be configured and running. |
| 3 | Connect services, service components, and references. | See Adding Wires in Getting Started with Developing SOA Composite Applications. |
| 4 | Deploy the SOA composite application. | See Deploying a SOA Composite Application in Getting Started with Developing SOA Composite Applications. |
| 5 | Manage and test the SOA composite application. | See Managing and Testing a SOA Composite Application in Getting Started with Developing SOA Composite Applications. |

Prerequisites

You must satisfy the following prerequisites to configure and add the Apache Kafka Adapter in your SOA composite application.

Know the Host and Port of the Bootstrap Server

Know the host and port of the bootstrap server to use to connect to a list of Kafka brokers.
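Kafka clients take this information as the `bootstrap.servers` property: a comma-separated list of `host:port` pairs. The client contacts one of the listed brokers to discover the rest of the cluster. A minimal sketch that builds and sanity-checks such a value; the function name and broker host names are hypothetical, and 9092 is only Kafka's conventional default port.

```python
def build_bootstrap_servers(brokers):
    """Join (host, port) pairs into a Kafka bootstrap.servers value."""
    if not brokers:
        raise ValueError("at least one bootstrap broker is required")
    entries = []
    for host, port in brokers:
        port = int(port)
        if not host:
            raise ValueError("empty host name")
        if not 1 <= port <= 65535:
            raise ValueError(f"invalid port for {host}: {port}")
        entries.append(f"{host}:{port}")
    return ",".join(entries)


# Hypothetical broker host names; listing more than one helps if a broker is down.
servers = build_bootstrap_servers([("broker1.example.com", 9092), ("broker2.example.com", 9092)])
print(servers)  # broker1.example.com:9092,broker2.example.com:9092
```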

Obtain Security Policy Details

Obtain the following security policy details for the Apache Kafka Adapter.

- If using the Simple Authentication and Security Layer (SASL) Plain over SSL or SASL Plain security policy, know the SASL username and password.

- To use SASL Plain over SSL, TLS, or Mutual TLS policies, have the required certificates.
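The adapter manages the connection settings itself. Still, it can help to know which standard Apache Kafka client properties each policy corresponds to when you collect details from your Kafka administrator. In the sketch below the function name, the policy labels, and the file paths are hypothetical; the property keys (`security.protocol`, `sasl.mechanism`, `sasl.jaas.config`, `ssl.truststore.*`, `ssl.keystore.*`) are standard Kafka client configuration names. The sketch requires an explicit truststore for every SSL-based policy, which is a simplification.

```python
def client_properties(policy, username=None, password=None,
                      truststore=None, truststore_password=None,
                      keystore=None, keystore_password=None):
    """Map a security policy label (hypothetical) to standard Kafka client properties."""
    props = {}
    if policy in ("SASL_PLAIN", "SASL_PLAIN_OVER_SSL"):
        if not (username and password):
            raise ValueError("SASL PLAIN needs a username and password")
        props["sasl.mechanism"] = "PLAIN"
        # Note: quotes inside the username or password are not escaped here.
        props["sasl.jaas.config"] = (
            "org.apache.kafka.common.security.plain.PlainLoginModule required "
            f'username="{username}" password="{password}";'
        )
    if policy == "SASL_PLAIN":
        props["security.protocol"] = "SASL_PLAINTEXT"  # no encryption in transit
        return props
    if policy == "SASL_PLAIN_OVER_SSL":
        props["security.protocol"] = "SASL_SSL"
    elif policy in ("TLS", "MUTUAL_TLS"):
        props["security.protocol"] = "SSL"
    else:
        raise ValueError(f"unknown policy: {policy}")
    # The truststore holds the certificates used to verify the brokers.
    if not truststore:
        raise ValueError(f"{policy} needs a truststore in this sketch")
    props["ssl.truststore.location"] = truststore
    if truststore_password:
        props["ssl.truststore.password"] = truststore_password
    if policy == "MUTUAL_TLS":
        # Mutual TLS also presents a client certificate from a keystore.
        if not keystore:
            raise ValueError("MUTUAL_TLS needs a client keystore")
        props["ssl.keystore.location"] = keystore
        if keystore_password:
            props["ssl.keystore.password"] = keystore_password
    return props


props = client_properties("SASL_PLAIN_OVER_SSL", username="alice", password="alice-secret",
                          truststore="/path/to/truststore.jks")
print(props["security.protocol"])  # SASL_SSL
```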

Note the following restrictions:

- The Apache Kafka Adapter supports Apache Kafka serializers/deserializers (String/ByteArray). It doesn't support Confluent or any other serializers/deserializers.

- Supports only the SASL PLAIN over SSL security policy.

- Supports only the XML, JSON, and Avro message structures. Other structures and formats are not supported.

- The schema registry is not supported with the Apache Kafka Adapter.
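Because the adapter works with plain String/ByteArray payloads and no schema registry, a message written by a Confluent serializer carries extra framing: Confluent's wire format prepends a zero magic byte and a 4-byte big-endian schema ID to the serialized data. The heuristic below is a troubleshooting sketch, not part of the adapter; the function names are hypothetical. It relies on the fact that a plain JSON or XML payload normally starts with a printable character such as `{` or `<`, so it can flag such records but cannot prove a payload's origin.

```python
import struct


def confluent_schema_id(payload: bytes):
    """Return the schema ID if payload looks Confluent-framed, else None.

    Heuristic only: the Confluent wire format is a 0x00 magic byte, then a
    4-byte big-endian schema ID, then the serialized record.
    """
    if len(payload) >= 5 and payload[0] == 0:
        (schema_id,) = struct.unpack(">I", payload[1:5])
        return schema_id
    return None


def decode_string_payload(payload: bytes) -> str:
    """Decode a value written by Kafka's StringSerializer (UTF-8 by default)."""
    schema_id = confluent_schema_id(payload)
    if schema_id is not None:
        raise ValueError(f"payload looks Confluent-framed (schema ID {schema_id}); "
                         "the adapter does not support this format")
    return payload.decode("utf-8")


print(decode_string_payload(b'{"orderId": "42"}'))  # {"orderId": "42"}
```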

Configure the Apache Kafka Adapter

When you drag the Apache Kafka Adapter into the External References swim lane, the Adapter Endpoint Configuration Wizard is invoked. This wizard guides you through configuration of the Apache Kafka Adapter endpoint properties.

The following sections describe the wizard pages that guide you through configuration of the Apache Kafka Adapter as a trigger and an invoke in a SOA composite application.

Basic Info Page

You can enter a name and description on the Basic Info page of each adapter in your application.

| Element | Description |
|---|---|
| What do you want to call your endpoint? | Provide a meaningful name so that others can understand the responsibilities of this adapter. You can include English alphabetic characters, numbers, underscores, and hyphens in the name. You can't include the following characters: |
| What does this endpoint do? | Enter an optional description of the adapter’s responsibilities. For example: This adapter receives an inbound request to synchronize account information with the cloud application. |
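To check candidate endpoint names before you type them into the wizard, you can test them against the allowed characters listed above. This is a minimal sketch that assumes those characters (English letters, digits, underscores, and hyphens) are the only ones accepted; the function name and sample names are hypothetical. The explicit character class avoids `\w`, which in Python also matches non-English letters.

```python
import re

# Assumption: only English letters, digits, underscores, and hyphens are allowed.
_ENDPOINT_NAME = re.compile(r"[A-Za-z0-9_-]+")


def is_valid_endpoint_name(name: str) -> bool:
    return _ENDPOINT_NAME.fullmatch(name) is not None


for candidate in ["publish-orders_v2", "publish orders", "orders#1"]:
    print(candidate, is_valid_endpoint_name(candidate))
# publish-orders_v2 True
# publish orders False
# orders#1 False
```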

Connection Info Page

Specify the connectivity details for your Apache Kafka Adapter.

| Element | Description |
|---|---|
| Connection URL | Enter the URL of your Apache Kafka instance. |
| Security Policy | Select the security policy appropriate to your environment (for example, USERNAME_PASSWORD_TOKEN). |
| Authentication Key | Select the CSF authentication key. |
| Test | Click to validate the authentication key. |

Operations Page

Select the operation to perform.

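| Element | Description |
|---|---|
| What operation do you want to perform on a Kafka topic? | Select the operation, for example, Publish records to a Kafka topic, Consume records from a Kafka topic, or Consume records from a Kafka topic by specifying offset. |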

Topic & Partition Page

Select the operation and topic on which to perform the operation and optionally specify the message structure.

Configure the Apache Kafka Adapter as an Invoke Connection

| Element | Description |
|---|---|
| Select a Topic | Select the topic on which to perform the operation. You can also enter the beginning letters of the topic to filter the display of topics. A topic is a category in which applications can add, process, and reprocess messages. You subscribe to messages in topics. |
| Specify the Partition (This field is only displayed if you select Publish records to a Kafka topic or Consume records from a Kafka topic.) | Specify the partition of the selected topic to which to publish messages or from which to consume them. Kafka topics are divided into partitions that enable you to split data across multiple brokers. If you do not select a specific partition and use the Default selection, Kafka considers all available partitions and decides which one to use. |
| Consumer Group (This field is only displayed if you select Consume records from a Kafka topic.) | Specify the consumer group to attach. Consumers join a group by using the same group ID. Kafka assigns the partitions of a topic to the consumers in a group. |
| Specify the option for consuming messages (This field is only displayed if you select Consume records from a Kafka topic.) | When you configure the adapter to read messages from the beginning and edit it again, the following options are displayed: Note: When you select the Read from beginning or Read latest option, not all messages are guaranteed to be consumed in a single run. However, any remaining messages are consumed in subsequent runs. |
| Maximum Number of Records to be fetched (This field is only displayed if you select Consume records from a Kafka topic or Consume records from a Kafka topic by specifying offset.) | Specify the maximum number of messages to read. The threshold for the complete message payload is 10 MB. Note: This field specifies the upper limit of records to fetch. It does not guarantee that the specified number of records is retrieved from the stream in a single run. Remaining messages are fetched in subsequent runs. |
| Do you want to specify the message structure? | Select Yes if you want to define the message structure to use on the Message Structure page of the wizard. Otherwise, select No. |
| Do you want to specify the headers for the message? | Select Yes if you want to define the message headers to use on the Headers page of the wizard. Otherwise, select No. |
| Review and update advanced configurations | Click Edit to open the Advanced Options section to enable or disable the transactional producer. |
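With the Default partition selection, the Kafka producer chooses the partition. For records that carry a key, Apache Kafka's default partitioner hashes the serialized key with murmur2 and takes the result modulo the number of partitions, so records with the same key land in the same partition as long as the partition count does not change. Records without a key are spread by a different (sticky) strategy that this sketch does not model. The port below follows the Java client's `Utils.murmur2` and `Utils.toPositive`; whether the adapter sets a record key is an assumption outside this sketch, and the key value is hypothetical.

```python
def murmur2(data: bytes) -> int:
    """Port of Kafka's Java Utils.murmur2, returning a signed 32-bit int."""
    length = len(data)
    seed = 0x9747B28C
    m = 0x5BD1E995
    r = 24
    mask = 0xFFFFFFFF

    h = (seed ^ length) & mask
    for i in range(length // 4):
        i4 = i * 4
        k = data[i4] | (data[i4 + 1] << 8) | (data[i4 + 2] << 16) | (data[i4 + 3] << 24)
        k = (k * m) & mask
        k ^= k >> r              # unsigned shift, like Java's >>>
        k = (k * m) & mask
        h = (h * m) & mask
        h ^= k

    # Mix the remaining 1-3 bytes (Java uses switch fall-through here).
    tail = length & ~3
    remaining = length % 4
    if remaining == 3:
        h ^= data[tail + 2] << 16
    if remaining >= 2:
        h ^= data[tail + 1] << 8
    if remaining >= 1:
        h ^= data[tail]
        h = (h * m) & mask

    h ^= h >> 13
    h = (h * m) & mask
    h ^= h >> 15
    # Reinterpret as Java's signed int.
    return h - (1 << 32) if h & 0x80000000 else h


def default_partition(key: bytes, num_partitions: int) -> int:
    """Keyed default: toPositive(murmur2(key)) % numPartitions."""
    return (murmur2(key) & 0x7FFFFFFF) % num_partitions


key = "customer-42".encode("utf-8")  # StringSerializer encodes keys as UTF-8
print(default_partition(key, 6))     # same key, same partition, on every send
```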

Configure the Apache Kafka Adapter as a Trigger Connection

| Element | Description |
|---|---|
| Select a Topic | Select the topic on which to perform the operation. You can also enter the beginning letters of the topic to filter the display of topics. A topic is a category in which applications can add, process, and reprocess messages. You subscribe to messages in topics. |
| Specify the Partition | Specify the partition of the selected topic from which to consume messages. Kafka topics are divided into partitions that enable you to split data across multiple brokers. If you do not select a specific partition and use the Default selection, Kafka considers all available partitions and decides which one to use. |
| Consumer Group | Specify the consumer group to attach. Consumers join a group by using the same group ID. Kafka assigns the partitions of a topic to the consumers in a group. |
| Polling Frequency (Sec) | Specify how often, in seconds, to fetch records. |
| Maximum Number of Records to be fetched | Specify the maximum number of messages to read. The threshold for the complete message payload is 10 MB. Note: This field specifies the upper limit of records to fetch. It does not guarantee that the specified number of records is retrieved from the stream in a single run. Remaining messages are fetched in subsequent runs. |
| Do you want to specify the message structure? | Select Yes if you want to define the message structure to use on the Message Structure page of the wizard. Otherwise, select No. |
| Do you want to specify the headers for the message? | Select Yes if you want to define the message headers to use on the Headers page of the wizard. Otherwise, select No. |
| Review and update advanced configurations | Click Edit to open the Advanced Options section to enable or disable the transactional producer. |
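Within a consumer group, each partition of a subscribed topic is assigned to exactly one consumer, so consumers beyond the partition count sit idle. The sketch below reproduces the arithmetic of Kafka's RangeAssignor for a single topic: sort the consumers, give each a contiguous range, and hand the remainder to the first consumers. RangeAssignor is one of several assignment strategies Kafka offers, and which one the adapter's consumers use is an assumption here; the function name and member IDs are hypothetical.

```python
def range_assign(partitions, consumer_ids):
    """Assign one topic's partitions to consumers, RangeAssignor-style."""
    consumers = sorted(consumer_ids)
    parts = sorted(partitions)
    if not consumers:
        return {}
    per_consumer, extra = divmod(len(parts), len(consumers))
    assignment = {}
    for i, member in enumerate(consumers):
        # The first `extra` consumers each take one additional partition.
        start = i * per_consumer + min(i, extra)
        count = per_consumer + (1 if i < extra else 0)
        assignment[member] = parts[start:start + count]
    return assignment


print(range_assign(range(5), ["consumer-b", "consumer-a"]))
# {'consumer-a': [0, 1, 2], 'consumer-b': [3, 4]}
```

Adding a third consumer to a two-partition topic leaves that consumer with an empty list, which is why adding consumers beyond the partition count does not increase throughput.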

Message Structure Page

Select the message structure to use. This page is displayed if you selected Yes for the Do you want to specify the message structure? field on the Topic & Partition page.

| Element | Description |
|---|---|
| How would you like to specify the message structure? | Select one of the supported message structures: Avro schema, sample JSON, XML schema (XSD), or sample XML. |
| Select File | Click Browse to select the file. The selected file name is displayed in the File Name field. |
| Element | Select the element if you specified an XSD or Avro file. |
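An Avro schema is itself a JSON document. As a sketch, the snippet below builds a minimal record schema, following the Avro specification's `record` type, as text you could save to an `.avsc` file and then choose with Select File. It also includes a rough field check for sample records. The record name, namespace, fields, and the `matches` helper are hypothetical, and the check handles only the primitive types used here; it is not a full Avro validator.

```python
import json

# Hypothetical record schema following the Avro specification's "record" type.
order_schema = {
    "type": "record",
    "name": "Order",
    "namespace": "com.example.orders",
    "fields": [
        {"name": "orderId", "type": "string"},
        {"name": "amount", "type": "double"},
        {"name": "note", "type": ["null", "string"], "default": None},
    ],
}

# Python types accepted for each Avro primitive used above.
AVRO_JSON_TYPES = {"string": str, "double": (int, float), "null": type(None)}


def matches(record: dict, schema: dict) -> bool:
    """Rough check that a record has every field with a compatible type."""
    for field in schema["fields"]:
        types = field["type"] if isinstance(field["type"], list) else [field["type"]]
        if field["name"] not in record:
            if "default" in field:
                continue  # a missing field with a default is acceptable
            return False
        value = record[field["name"]]
        if not any(isinstance(value, AVRO_JSON_TYPES[t]) for t in types):
            return False
    return True


schema_text = json.dumps(order_schema, indent=2)  # save this text as order.avsc
print(matches({"orderId": "42", "amount": 19.99}, order_schema))  # True
```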

Headers Page

Define the structure of the headers to attach to the message. This page is displayed if you selected Yes for the Do you want to specify the headers for the message? field on the Topic & Partition page.

| Element | Description |
|---|---|
| Specify Message Headers | Specify headers and optional descriptions. |
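Kafka record headers are an ordered list of key/value pairs in which each key is a string and each value is a byte array. A key may appear more than once, and the Java client's `Headers.lastHeader(key)` returns the most recently added value for it. The sketch models that structure with plain tuples; the function names and header names are hypothetical, and how the adapter maps the headers you define on this page onto record headers is an assumption.

```python
from typing import List, Optional, Tuple

Header = Tuple[str, bytes]  # (key, value), as in a Kafka record header


def add_header(headers: List[Header], key: str, value: str) -> None:
    """Append a header; string values are stored as UTF-8 bytes."""
    headers.append((key, value.encode("utf-8")))


def last_header(headers: List[Header], key: str) -> Optional[bytes]:
    """Return the last value for key, mirroring Headers.lastHeader in the Java client."""
    for k, v in reversed(headers):
        if k == key:
            return v
    return None


headers: List[Header] = []
add_header(headers, "correlationId", "abc-123")  # hypothetical header names
add_header(headers, "source", "ftp")
add_header(headers, "source", "stage-file")      # duplicate keys are allowed
print(last_header(headers, "source"))  # b'stage-file'
```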

Summary Page

You can review the specified adapter configuration values on the Summary page.

| Element | Description |
|---|---|
| Summary | Displays a summary of the configuration values you defined on previous pages of the wizard. The information that is displayed can vary by adapter. For some adapters, the selected business objects and operation name are displayed. For adapters for which a generated XSD file is provided, click the XSD link to view a read-only version of the file. To return to a previous page to update any values, click the appropriate tab in the left panel or click Back. To cancel your configuration details, click Cancel. |

Implement Common Patterns Using the Apache Kafka Adapter

You can use the Apache Kafka Adapter to implement the following common patterns.

Produce Messages to an Apache Kafka Topic

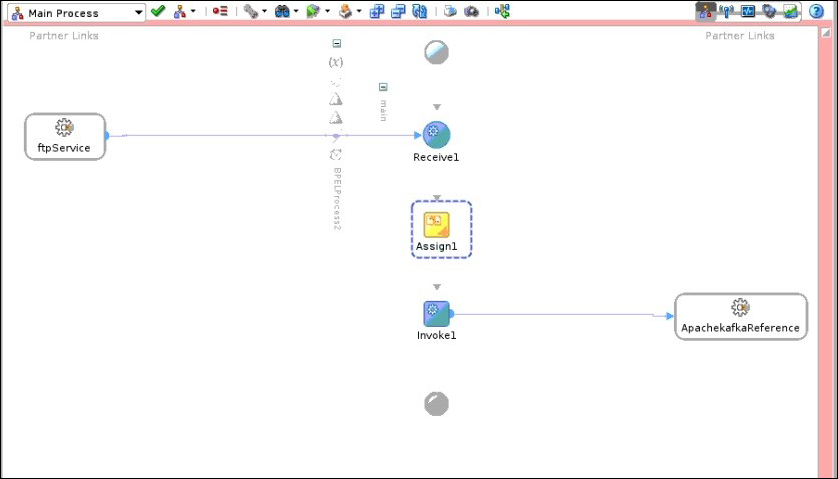

You can configure a SOA composite application to read records by using the FTP Adapter and a stage file read action, and then publish them to an Apache Kafka topic by using the produce operation of the Apache Kafka Adapter.

The following integration provides one example of how to implement this pattern:

- A SOA composite application with a project.

- A BPEL process to perform appropriate source-to-target mappings between the SOA composite and the FTP Adapter.

- An FTP Adapter to fetch files (records) from an input directory and put them in a download directory.

- A stage file action configured to:

  - Perform a Read File in Segments operation on each file (record) in the download directory.

  - Specify the structure for the contents of the message to use (for this example, an XML schema (XSD) document).

  - Perform appropriate source-to-target mappings between the stage file action and an Apache Kafka Adapter.

- An Apache Kafka Adapter configured to:

  - Publish records to a Kafka topic.

  - Specify the message structure to use (for this example, an XML schema (XSD) document) and the headers to use for the message.

- A BPEL process to perform appropriate source-to-target mappings between the Apache Kafka Adapter and FTP Adapter.

- An FTP Adapter to delete files from the download directory when processing is complete.
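The produce pattern above reads each staged file in segments and publishes its records to the topic. The sketch models that loop: it splits a file's records into fixed-size segments and hands each record to a publish callback. The function names, segment size, sample records, and the callback are hypothetical stand-ins for the stage file action and the adapter's produce operation.

```python
from typing import Callable, Iterable, Iterator, List


def read_in_segments(lines: Iterable[str], segment_size: int) -> Iterator[List[str]]:
    """Yield consecutive chunks of at most segment_size records."""
    if segment_size < 1:
        raise ValueError("segment_size must be positive")
    segment: List[str] = []
    for line in lines:
        segment.append(line)
        if len(segment) == segment_size:
            yield segment
            segment = []
    if segment:
        yield segment  # final, possibly shorter, segment


def publish_file(lines: Iterable[str], segment_size: int,
                 publish: Callable[[str], None]) -> int:
    """Publish every record segment by segment; return the record count."""
    count = 0
    for segment in read_in_segments(lines, segment_size):
        for record in segment:
            publish(record)  # stand-in for the adapter's produce operation
            count += 1
    return count


print(list(read_in_segments(["r1", "r2", "r3"], 2)))  # [['r1', 'r2'], ['r3']]
sent: List[str] = []
n = publish_file(["<order id='1'/>", "<order id='2'/>", "<order id='3'/>"], 2, sent.append)
```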

The completed SOA composite application looks as follows:

Consume Messages from an Apache Kafka Topic

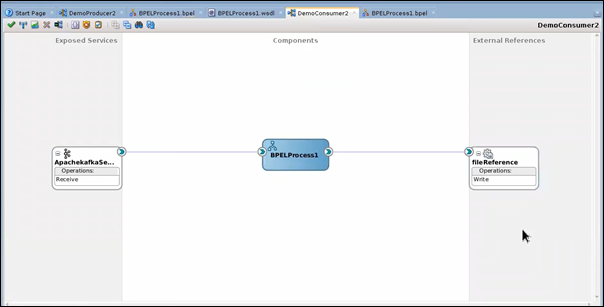

You can configure a SOA composite application to use the Apache Kafka Adapter to consume messages from an Apache Kafka topic.

The following use case provides one example of how to implement this pattern:

- A SOA composite application with a project.

- A BPEL process to perform appropriate source-to-target mappings between the SOA composite and the FTP Adapter.

- An Apache Kafka Adapter configured to:

  - Consume records from a Kafka topic.

  - Specify the consumer group to attach. Kafka assigns the partitions of a topic to the consumers in a group.

  - Specify Read latest as the option for consuming messages. The latest messages are read starting at the time at which the SOA composite is deployed.

  - Specify the message structure to use (for this example, an XML schema (XSD) document) and the headers to use for the message.
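Read latest and Read from beginning decide where consumption starts when the consumer group has no usable committed position, which is the choice Kafka's `auto.offset.reset` consumer setting (`latest` or `earliest`) makes. Mapping the adapter's options onto that setting is an assumption. In the sketch, the function name and the offsets are hypothetical values for one partition at deployment time. Once the group has committed an in-range offset, consumption resumes from it regardless of the option; an out-of-range commit falls back to the reset policy, as in Kafka.

```python
from typing import Optional


def starting_offset(option: str, log_start: int, log_end: int,
                    committed: Optional[int] = None) -> int:
    """Offset a consumer group begins reading from in one partition.

    Mirrors auto.offset.reset semantics (an assumed mapping for the adapter):
    a valid committed offset wins; otherwise "earliest" starts at the oldest
    retained record and "latest" at the next record to be written.
    """
    if committed is not None and log_start <= committed <= log_end:
        return committed
    if option == "earliest":   # Read from beginning
        return log_start
    if option == "latest":     # Read latest
        return log_end
    raise ValueError(f"unsupported option: {option}")


# Hypothetical partition holding offsets 100..149 when the composite is deployed.
print(starting_offset("latest", 100, 150))       # 150: only new messages
print(starting_offset("earliest", 100, 150))     # 100: all retained messages
print(starting_offset("latest", 100, 150, 120))  # 120: resume after a commit
```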

The completed setup looks as follows: