7 Plato Orchestration Services

This topic describes the Plato Orchestration Services.

Migration Endpoint

Note:

This topic is applicable only to existing customers. The task blob is no longer used by the GET endpoints in plato-orch-service that serve the task list screens; the HTASK_ADDN_DTLS table now contains the task-related details. A migration endpoint must be executed to populate this table with data for completed tasks. For in-progress tasks, the data is populated automatically by the poller. This improves the performance of the Free Tasks, My Tasks, Completed Tasks, and Supervisor Tasks inquiries.

To populate the HTASK_ADDN_DTLS table with previously COMPLETED tasks (tasks that are not present in the task_in_progress table), execute the migration API.

GET Request:

Endpoint: http://<host>:<port>/plato-orch-service/api/v1/extn/migrate

Headers (sample values):

appId: platoorch

branchCode: 000

Content-Type: application/json

entityId: DEFAULTENTITY
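As a minimal sketch, the migration call can be built with Python's standard `urllib.request` module using the sample headers listed above. The helper names, host, and port are hypothetical. Note that `urllib` normalizes header-name capitalization (for example, `appId` is sent as `Appid`); HTTP header names are case-insensitive by specification.

```python
import urllib.request

MIGRATE_PATH = "/plato-orch-service/api/v1/extn/migrate"


def build_migrate_request(host: str, port: int) -> urllib.request.Request:
    """Build (without sending) the GET request for the migration endpoint."""
    headers = {
        # Sample header values from this section; adjust for your setup.
        "appId": "platoorch",
        "branchCode": "000",
        "Content-Type": "application/json",
        "entityId": "DEFAULTENTITY",
    }
    url = f"http://{host}:{port}{MIGRATE_PATH}"
    return urllib.request.Request(url, headers=headers, method="GET")


def send_request(req: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

For example, `send_request(build_migrate_request("localhost", 8080))` would trigger the migration against a hypothetical local instance. Re-running the count query that follows is one way to see how many completed WAIT tasks remain unmigrated.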

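To check how many completed WAIT tasks are not yet present in HTASK_ADDN_DTLS, run the following query:

SELECT COUNT(*) FROM TASK t
WHERE JSON_VALUE(json_data, '$.status') = 'COMPLETED'
AND JSON_VALUE(json_data, '$.taskType') = 'WAIT'
AND TASK_ID NOT IN (SELECT TASK_ID FROM HTASK_ADDN_DTLS);

Note: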

For future tasks and previously non-completed tasks present in the task_in_progress table, the poller continuously checks the task_in_progress table and populates the HTASK_ADDN_DTLS table.

Archival Framework

The Archival Framework archives continuously growing data into history tables based on the frequency and retention period configured by the user. The framework is specific to the conductor schema.

The framework supports two levels of archival. Level 1 archives data from the main conductor tables to the corresponding history_1 tables, and level 2 archives data from the history_1 tables to the history_2 tables. Maintain the frequency and retention period for both levels, and ensure that level 2 archival runs less frequently than level 1 archival.

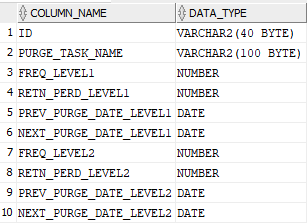

Table 7-1 Configuration - Field Description

| Field | Description |
|---|---|
| PURGE_TASK_NAME | Name of the purge configuration to execute. It is passed in the purge request to the controller and is used to identify the rest of the purge configuration. |
| FREQ_LEVEL1/2 | Archival frequency for level 1 or level 2: daily, weekly, monthly, or annually. |
| RETN_PERD_LEVEL1/2 | Retention period: the number of days for which data is retained. For example, if the frequency is daily and the retention period is 60 days, the purge runs daily and keeps the most recent 60 days of data. |
| PREV_PURGE_DATE_LEVEL1/2 | Updated as soon as the user triggers a purge. |
| NEXT_PURGE_DATE_LEVEL1/2 | Updated automatically based on the frequency. |
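The retention rule in RETN_PERD_LEVEL1/2 amounts to a date cutoff. The sketch below assumes that records dated strictly before `purge_date - retention_days` are eligible for archival and that everything on or after that date is kept; the function names and the exact boundary are assumptions for illustration, not the framework's documented behavior.

```python
from datetime import date, timedelta


def retention_cutoff(purge_date: date, retention_days: int) -> date:
    """Earliest date whose data is kept (hypothetical boundary rule)."""
    if retention_days < 0:
        raise ValueError("retention period must be non-negative")
    return purge_date - timedelta(days=retention_days)


def is_archivable(record_date: date, purge_date: date, retention_days: int) -> bool:
    """True if the record falls outside the retention window."""
    return record_date < retention_cutoff(purge_date, retention_days)


# Worked example: daily frequency, 60-day retention, purge on 2024-03-31.
print(retention_cutoff(date(2024, 3, 31), 60))  # 2024-01-31
```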

| Frequency code | Description |
|---|---|
| 1 | Daily |
| 2 | Weekly |
| 3 | Monthly |
| 4 | Annually |
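A minimal sketch of how the frequency codes could drive NEXT_PURGE_DATE_LEVEL1/2 and enforce the rule that level 2 runs less often than level 1. The `Frequency` enum, the helper names, and the choice to compute the next date from the last purge date (clamping month-end dates, so 31 January 2024 plus one month gives 29 February 2024) are assumptions for illustration.

```python
import calendar
from datetime import date, timedelta
from enum import IntEnum


class Frequency(IntEnum):
    """Frequency codes from the table above (hypothetical enum)."""
    DAILY = 1
    WEEKLY = 2
    MONTHLY = 3
    ANNUALLY = 4


def add_months(d: date, months: int) -> date:
    """Add months, clamping the day to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def next_purge_date(prev_purge: date, freq: Frequency) -> date:
    """Next purge date computed from the previous purge date (assumption)."""
    if freq is Frequency.DAILY:
        return prev_purge + timedelta(days=1)
    if freq is Frequency.WEEKLY:
        return prev_purge + timedelta(weeks=1)
    if freq is Frequency.MONTHLY:
        return add_months(prev_purge, 1)
    return add_months(prev_purge, 12)


def validate_levels(level1: Frequency, level2: Frequency) -> None:
    """A higher code means less frequent; level 2 must be strictly slower."""
    if level2 <= level1:
        raise ValueError("level 2 frequency must be slower than level 1")
```

A numeric value read from FREQ_LEVEL1 or FREQ_LEVEL2 maps to the enum with `Frequency(code)`, for example `Frequency(3)` is `Frequency.MONTHLY`.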

- Currently, the user must call the controller manually to trigger archival. For example, if the next purge date is today but the user triggers archival three days later, the purge is performed based on the frequency and the next purge date in the configuration table.

- The tables to be archived are not configurable. The framework determines them because most of the tables depend on each other, and missing a table during archival would cause data inconsistency.

- After archival, the user might still see data from the archived date range in the base tables. This happens when a task of a workflow is still pending and the workflow is not complete, because only completed workflows are archived.

- Branch-wise archival is currently not supported. All data that is eligible under the retention period and frequency is purged, provided the workflows are completed.
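The eligibility rules in the notes above (only completed workflows are archived, and there is no branch filtering) can be sketched as a simple filter. The `Workflow` record, its fields, the status string `COMPLETED`, and the strict `<` cutoff are hypothetical stand-ins for the conductor schema, not its actual structure.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Iterable, List, Optional


@dataclass
class Workflow:
    """Hypothetical view of a workflow row; not the conductor schema."""
    workflow_id: str
    status: str               # assumed values such as "COMPLETED", "RUNNING"
    end_date: Optional[date]  # None while the workflow is still running
    branch_code: str


def archivable_ids(workflows: Iterable[Workflow], purge_date: date,
                   retention_days: int) -> List[str]:
    """IDs of completed workflows that ended before the retention cutoff."""
    cutoff = purge_date - timedelta(days=retention_days)
    return [
        wf.workflow_id
        for wf in workflows
        # branch_code is deliberately ignored: archival is not branch-wise.
        if wf.status == "COMPLETED"
        and wf.end_date is not None
        and wf.end_date < cutoff
    ]
```

Under this sketch, a workflow with a pending task is never selected, which is why its older rows can still appear in the base tables after archival.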

Endpoint: /api/v1/extn/purgeRequest

Type: POST

Request Body:

{
  "purge_config_name": "<PURGE_TASK_NAME from the configuration table>"
}

Headers: All standard headers
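A minimal sketch of building the purge request with Python's standard library. The base URL and the configuration name `ARCHIVE_DAILY` are hypothetical, and because this section does not list the "standard headers", the caller is assumed to supply them (for example, the sample headers from the migration request).

```python
import json
import urllib.request

PURGE_PATH = "/api/v1/extn/purgeRequest"


def build_purge_request(base_url: str, purge_config_name: str,
                        headers: dict) -> urllib.request.Request:
    """Build (without sending) the POST request that triggers archival."""
    body = json.dumps({"purge_config_name": purge_config_name}).encode("utf-8")
    # Start from a JSON content type; caller-supplied headers take precedence.
    all_headers = {"Content-Type": "application/json", **headers}
    url = base_url.rstrip("/") + PURGE_PATH
    return urllib.request.Request(url, data=body, headers=all_headers,
                                  method="POST")


def send_request(req: urllib.request.Request, timeout: float = 300.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

Because archival is currently triggered manually, a scheduler such as cron could call `send_request` on each configured frequency; that scheduling is an assumption, not something the framework provides.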