Optimizing KVM for VT800

With higher-level hypervisors such as ESXi, most of the details and tuning of the hypervisor are handled for you, because the operating system is built to be a hypervisor. KVM is only one component of the broader Linux kernel and shares the host with non-KVM workloads, so tuning the OS for KVM is left to the administrator. Some KVM deployments are not performance critical and need no tuning. These recommendations assume the VT800 is mission critical and may be the only application running on the host.

These strategies apply to both the VT800 and the VT800_128. They are not limited to release 9.1 and may also be used with prior releases.

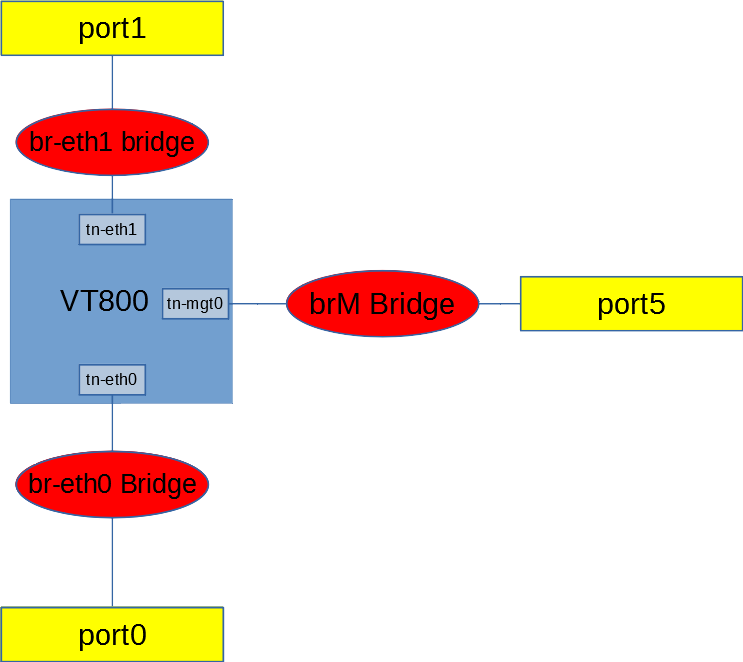

The deployment assumes the VT800 network interfaces use the virtio device model and connect to the device ports via bridges.

The VT800 supports up to 12 data ports. This example demonstrates two data ports and one management port.

The optimizations below assume the VT800 is already running and able to pass traffic.

Interface optimizations

For each physical data port in use on the system (port0 and port1, above), set txqueuelen to 10000 and the MTU to 2004. This example assumes port0 and port1 are named eth0 and eth1.

sudo ifconfig eth0 txqueuelen 10000 mtu 2004

sudo ifconfig eth1 txqueuelen 10000 mtu 2004

Apply the same settings to the guest's vnet interfaces:

sudo ifconfig vnet0 txqueuelen 10000 mtu 2004

sudo ifconfig vnet1 txqueuelen 10000 mtu 2004

sudo ifconfig vnet2 txqueuelen 10000 mtu 2004

Apply the same settings to the bridges:

sudo ifconfig br-eth0 txqueuelen 10000 mtu 2004

sudo ifconfig br-eth1 txqueuelen 10000 mtu 2004

These settings will need to be reset after each guest start or restart.
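Because the interface settings are lost whenever the guest starts or restarts, it may be convenient to collect them in a small script. The following is a minimal sketch, not a supported tool. It assumes the interface names used in this example (eth0, eth1, vnet0 to vnet2, and bridges named br-eth0 and br-eth1). The function name apply_interface_settings and the RUN variable are hypothetical names introduced here for illustration.

```shell
#!/bin/sh
# Sketch: reapply txqueuelen and MTU to every interface in the data path.
# Interface names are assumptions taken from this example; adjust for your host.
TXQUEUELEN=10000
MTU=2004
INTERFACES="eth0 eth1 vnet0 vnet1 vnet2 br-eth0 br-eth1"

# Hypothetical dry-run switch: set RUN=echo to print the commands
# instead of executing them. Leave it empty to run them.
RUN="${RUN:-}"

apply_interface_settings() {
    for ifname in $INTERFACES; do
        # Same command as shown above, once per interface.
        $RUN sudo ifconfig "$ifname" txqueuelen "$TXQUEUELEN" mtu "$MTU"
    done
}
```

After sourcing the script, call apply_interface_settings once the guest is up. Running it first with RUN=echo prints the commands so they can be reviewed before anything is changed.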

CPU threading optimization

Inside the VT800 (or any appliance), threads are carefully pinned to the available CPUs. By default, however, the guest's virtual CPUs are not tied to specific physical CPUs, and the host scheduler is free to move them. The VT800 vCPUs must therefore be pinned to physical CPUs. This can be as simple as a one-to-one mapping.

virsh vcpupin <domain> <guest cpu#> <host cpu#>

sudo virsh vcpupin ol7.9 0 0

sudo virsh vcpupin ol7.9 1 1

sudo virsh vcpupin ol7.9 2 2

sudo virsh vcpupin ol7.9 3 3

These mappings will need to be reset after each guest start or restart.
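The one-to-one mapping above can also be expressed as a loop, which is easier to rerun after each guest start. This is a minimal sketch under the assumptions of this example: a domain named ol7.9 with four vCPUs, pinned to host CPUs 0 through 3. The function name pin_vcpus and the RUN variable are hypothetical names introduced here for illustration.

```shell
#!/bin/sh
# Sketch: pin each guest vCPU to the host CPU with the same index.
DOMAIN="ol7.9"   # domain name from the example above
VCPUS=4          # number of guest vCPUs in the example above

# Hypothetical dry-run switch: set RUN=echo to print the commands
# instead of executing them. Leave it empty to run them.
RUN="${RUN:-}"

pin_vcpus() {
    cpu=0
    while [ "$cpu" -lt "$VCPUS" ]; do
        # virsh vcpupin <domain> <guest cpu#> <host cpu#>
        $RUN sudo virsh vcpupin "$DOMAIN" "$cpu" "$cpu"
        cpu=$((cpu + 1))
    done
}
```

After sourcing the script, call pin_vcpus once the guest is running. Running sudo virsh vcpupin ol7.9 with no further arguments should list the current pinning, which is a quick way to confirm the result.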