1 Cloud Deployment

The cloud-native Oracle Communications Converged Application Server is deployed on a Kubernetes cluster using Docker images from the Oracle Container Registry. Use the Docker images to deploy the Administration Server and Managed Servers as Kubernetes pods.

The high-level steps to deploy the Converged Application Server include:

- Install the dependencies.

- Install Docker.

- Log in to the Oracle Container Registry.

- Pull the image from the Oracle Container Registry.

- Install Kubernetes.

- Install the Kubernetes WebLogic Operator.

- Create a Persistent Volume.

- Deploy the domain.

Optional steps include:

- Bring up another managed server.

- Install the EFK stack (Elasticsearch, FluentD, Kibana).

- Install Prometheus and Grafana.

- Apply a patch.

Install Dependencies

The java-devel and iproute-tc packages are required dependencies for Converged Application Server. The git package is required to clone the Kubernetes WebLogic Operator.
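The following is a minimal sketch for installing these packages. It assumes an Oracle Linux 8 host that uses dnf and a user with sudo rights; this guide states neither. The `run` helper is hypothetical. When `DRY_RUN` is set, it prints each command instead of running it, so you can review the commands first.

```shell
#!/bin/sh
# Sketch only: assumes Oracle Linux 8 (dnf) and sudo access.
REQUIRED_PACKAGES="java-devel iproute-tc git"

# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

install_dependencies() {
  # $REQUIRED_PACKAGES is unquoted on purpose so that each package name
  # becomes a separate argument to dnf.
  run sudo dnf install -y $REQUIRED_PACKAGES
}
```

Run `DRY_RUN=1 install_dependencies` to preview the command, then run `install_dependencies` to install the packages.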

Install Docker

Docker is a popular container platform that lets you create and distribute applications across your Oracle Linux servers. You need Docker to pull and manage the Converged Application Server images published on the Oracle Container Registry.
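One common way to install Docker on Oracle Linux 8 is from Docker's own CentOS repository. This sketch assumes that route, but this guide does not specify it, so check the Oracle Linux documentation for the repository supported on your release. The `run` helper is a hypothetical dry-run wrapper that prints commands when `DRY_RUN` is set.

```shell
#!/bin/sh
# Sketch only: assumes Oracle Linux 8 and Docker CE from download.docker.com.

# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

install_docker() {
  # "dnf config-manager" is provided by the dnf-plugins-core package.
  run sudo dnf install -y dnf-plugins-core
  run sudo dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
  run sudo dnf install -y docker-ce docker-ce-cli containerd.io
  # Start Docker now and at every boot.
  run sudo systemctl enable --now docker
  # Let the current user run docker without sudo (takes effect at next login).
  run sudo usermod -aG docker "${USER:-$(id -un)}"
}
```

After installation, `docker info` confirms that the daemon is running.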

Log in to Oracle Container Registry

Before deploying Converged Application Server, you must log in to the Oracle Container Registry and accept the Java License Agreement for the JDK and the Middleware License Agreement for Converged Application Server.
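You accept the license agreements on the registry website. The login itself can be done with the Docker CLI. This is a minimal sketch; `run` is a hypothetical dry-run wrapper that prints commands when `DRY_RUN` is set.

```shell
#!/bin/sh
OCR_HOST=container-registry.oracle.com

# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

ocr_login() {
  # docker login prompts for the user name and password of your Oracle account.
  run docker login "$OCR_HOST"
}
```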

Download the Docker Files

In addition to downloading the occas_generic.jar file, you need to download the Docker image files and scripts.

Pull the Image

The Converged Application Server Docker image is hosted on the Oracle Container Registry.
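Here is a sketch of the pull, assuming you have already logged in. This section does not give the exact repository path and tag, so the function takes them as an argument. Copy them from the image's page on the registry. `run` is a hypothetical dry-run wrapper.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# pull_occas_image IMAGE: IMAGE is the full registry/repository:tag string
# shown on the registry page (not reproduced here).
pull_occas_image() {
  image="${1:?usage: pull_occas_image <registry/repository:tag>}"
  run docker pull "$image"
}
```

After the pull, `docker images` lists the image locally.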

Install Kubernetes

Install the Kubernetes WebLogic Operator

The WebLogic Kubernetes Operator supports running your WebLogic Server domains on Kubernetes, an industry-standard, cloud-neutral deployment platform. It lets you encapsulate your entire WebLogic Server installation and layered applications into a portable set of cloud-neutral images and simple resource description files. You can run them on any on-premises or public cloud that supports Kubernetes and where you have deployed the operator.
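The following sketches an installation with Helm, modelled on the operator's quick start. Check every detail against the operator release you actually use:

- It assumes Helm 3 and kubectl are configured for your cluster.
- It clones the default branch of the operator repository. Check out the release that your Converged Application Server version supports.
- The release name and service account name are hypothetical.
- The chart values serviceAccount, elkIntegrationEnabled, and domainNamespaces should be checked against the chart in the release you use.
- The `run` helper prints commands instead of running them when `DRY_RUN` is set.

```shell
#!/bin/sh
# Sketch only. The two namespaces appear in this guide; the other names
# are hypothetical.
OPERATOR_NS=sample-weblogic-operator-ns
DOMAIN_NS=sample-domain1-ns
OPERATOR_SA=sample-weblogic-operator-sa   # hypothetical
RELEASE=sample-weblogic-operator          # hypothetical
CHART=weblogic-kubernetes-operator/kubernetes/charts/weblogic-operator

# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# install_operator ELK: pass "true" only if you will install the EFK stack.
install_operator() {
  local elk="${1:-false}"
  run git clone https://github.com/oracle/weblogic-kubernetes-operator
  run kubectl create namespace "$OPERATOR_NS"
  run kubectl create namespace "$DOMAIN_NS"
  run kubectl create serviceaccount -n "$OPERATOR_NS" "$OPERATOR_SA"
  run helm install "$RELEASE" "$CHART" \
    --namespace "$OPERATOR_NS" \
    --set serviceAccount="$OPERATOR_SA" \
    --set elkIntegrationEnabled="$elk" \
    --set "domainNamespaces={$DOMAIN_NS}" \
    --wait
}
```

`DRY_RUN=1 install_operator false` previews the commands. The ELK argument determines how many containers the operator pod reports as ready.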

Verify that the operator pod is running:

```shell
kubectl get pods -n sample-weblogic-operator-ns
```

Note:

If you are installing the EFK stack and set elkIntegrationEnabled to true, the READY column displays 2/2 when both containers are ready. If you are not installing the EFK stack and left elkIntegrationEnabled as false, the READY column displays 1/1 when the container is ready.

For example:

```
[weblogic-kubernetes-operator]$ kubectl get pods -n sample-weblogic-operator-ns
NAME                                 READY   STATUS    RESTARTS   AGE
weblogic-operator-7885684685-4jz5l   2/2     Running   0          2m32s
```
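To script this check instead of reading the table, you can parse the kubectl output with awk. This sketch assumes the operator pod name starts with weblogic-operator-, as in the sample output. It derives the expected READY value from elkIntegrationEnabled as the note describes. The function names are illustrative.

```shell
#!/bin/sh
# expected_ready ELK: READY value to expect for the operator pod.
# Pass "true" if elkIntegrationEnabled is true (two containers), else one.
expected_ready() {
  if [ "${1:-false}" = "true" ]; then echo "2/2"; else echo "1/1"; fi
}

# operator_ready ELK: read `kubectl get pods` output on stdin. Succeeds only
# if at least one weblogic-operator-* pod is listed and every such pod is
# Running with the expected READY value.
operator_ready() {
  local want
  want="$(expected_ready "${1:-false}")"
  awk -v want="$want" '
    $1 ~ /^weblogic-operator-/ {
      seen = 1
      if ($2 != want || $3 != "Running") bad = 1
    }
    END { if (seen && !bad) exit 0; exit 1 }
  '
}
```

For example: `kubectl get pods -n sample-weblogic-operator-ns | operator_ready true && echo "operator is ready"`.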

Create the Persistent Volume

Converged Application Server uses a Persistent Volume (PV) to store logs that can be viewed with Kibana. The PV can be either a local path on the master node or an NFS location.
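This minimal sketch prints a PersistentVolume manifest for either option, which you can then pipe to `kubectl apply -f -`. These choices are illustrative, not values from this guide:

- the PV name
- the 10Gi capacity
- the ReadWriteMany access mode
- the Retain reclaim policy

A matching PersistentVolumeClaim (not shown) is still needed before a pod can mount the volume.

```shell
#!/bin/sh
# pv_manifest NAME hostpath PATH         -> PV backed by a path on the node
# pv_manifest NAME nfs SERVER EXPORT     -> PV backed by an NFS export
pv_manifest() {
  local name="$1" kind="$2" source
  case "$kind" in
    hostpath)
      source="  hostPath:
    path: $3" ;;
    nfs)
      source="  nfs:
    server: $3
    path: $4" ;;
    *)
      echo "unknown volume type: $kind (use hostpath or nfs)" >&2
      return 1 ;;
  esac
  # Capacity, access mode, and reclaim policy are illustrative values.
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $name
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
$source
EOF
}
```

For example: `pv_manifest occas-logs-pv hostpath /scratch/occas-logs | kubectl apply -f -`. The name and path are hypothetical.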

Deploy the Replicated Domain

A Converged Application Server replicated domain is a logically related group of resources. A domain includes the Administration Server and all Managed Servers. Converged Application Server domains extend Oracle WebLogic Server domains to support SIP and other telecommunication protocols.

For information on building a custom application router, see Configuring a Custom Application Router in the Developer Guide.

Modify the Domain File

For quick, on-demand changes to your domain, modify the domain.yaml file directly and use kubectl to apply your changes. By modifying the domain file, you can scale the number of Managed Servers up or down, change JVM options, or update the mountPath for your PV.

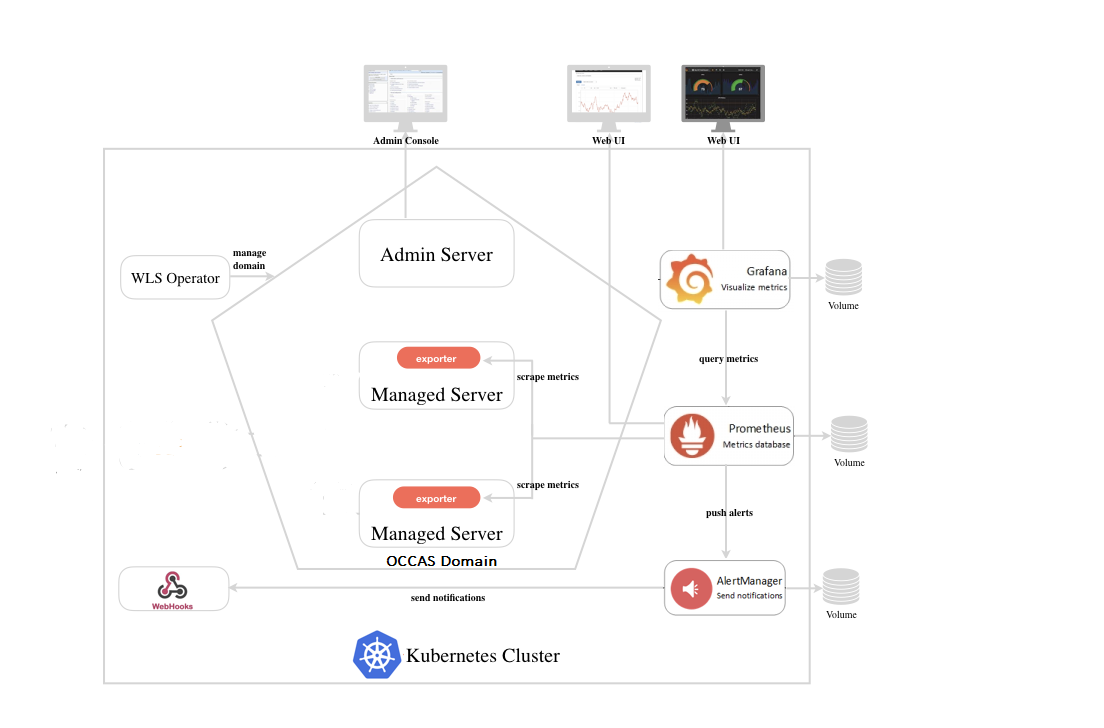
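This sketch covers the scale-up case. It assumes, hypothetically, that domain.yaml sets the Managed Server count with a `replicas:` field and that every such field should get the same value. JVM options and the PV mountPath are edited by hand in the same way. The namespace sample-domain1-ns is the domain namespace used elsewhere in this guide. The function names and the `run` dry-run helper are illustrative.

```shell
#!/bin/sh
DOMAIN_NS=sample-domain1-ns

# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# set_replicas FILE COUNT: rewrite every "replicas:" value in FILE to COUNT,
# keeping its indentation; the original file is saved as FILE.bak.
set_replicas() {
  local file="$1" count="$2"
  sed -i.bak -E "s/^([[:space:]]*replicas:)[[:space:]]*[0-9]+/\1 $count/" "$file"
}

# apply_domain [FILE]: apply the edited domain file, then list the domain pods.
apply_domain() {
  run kubectl apply -f "${1:-domain.yaml}"
  run kubectl get pods -n "$DOMAIN_NS"
}
```

For example, `set_replicas domain.yaml 3 && apply_domain domain.yaml` requests three Managed Servers.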

Monitoring Tools

Converged Application Server can integrate with several third-party monitoring tools.

- The EFK stack: Elasticsearch, FluentD, and Kibana work together to centralize log files for easy monitoring. FluentD gathers log files from multiple nodes and sends them to Elasticsearch, which stores and indexes them, while Kibana provides the front-end web interface.

- Prometheus and Grafana: Converged Application Server can use the WebLogic Monitoring Exporter to export Prometheus-compatible metrics that are then exposed to Grafana, which visualizes the metrics over time to provide further insights into the data.

Deploy the EFK Stack

Customers may deploy the EFK stack to increase visibility into the state of their Converged Application Server instances. Once the EFK stack is deployed, the Kibana web interface displays multiple Converged Application Server metrics.

Note:

Converged Application Server does not require the EFK stack; installing it is optional.

Note:

The FluentD pod runs in the sample-domain1-ns namespace, and the Elasticsearch and Kibana pods run in the sample-weblogic-operator-ns namespace.

Deploy Prometheus and Grafana

Prometheus and Grafana are optional third-party monitoring tools that you can install to gather metrics on your Converged Application Server installation. When both are installed, Converged Application Server uses the WebLogic Monitoring Exporter to export Prometheus-compatible metrics that are then exposed to Grafana, which visualizes the metrics over time to provide further insights into the data.

Figure 1-1 Cluster Architecture with Monitoring Tools

Refer to the Prometheus documentation and the Grafana documentation for how to configure and use those products.
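Before building Grafana dashboards, you can read the exporter's output directly to see which metrics it publishes. This sketch assumes the WebLogic Monitoring Exporter serves Prometheus text format at the /wls-exporter/metrics path on each Managed Server. The function only lists distinct metric names, skipping the `# HELP` and `# TYPE` comment lines.

```shell
#!/bin/sh
# metric_names: read Prometheus text-format metrics on stdin and print each
# distinct metric name once, skipping "#" comment lines and blank lines.
metric_names() {
  # Drop any {label="..."} part so only the metric name remains.
  awk '!/^#/ && NF { name = $1; sub(/[{].*/, "", name); print name }' | sort -u
}
```

For example: `curl -s -u "$WLS_USER:$WLS_PASSWORD" "http://MANAGED_SERVER_HOST:PORT/wls-exporter/metrics" | metric_names`. The host, port, and credential variables are placeholders.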

Apply a Patch

The Dockerfile in the occas8/dockerfiles/8.0.0.0.0/patch-opatch-update directory extends the Converged Application Server image by updating OPatch and applying a patch. If an update is released for Converged Application Server or one of its components (such as WebLogic Server or Coherence), use OPatch to patch your system.
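This sketch builds the patched image. It assumes:

- you run it from the directory that contains occas8/,
- the patch files the Dockerfile expects are already in place,
- the base image it extends is available locally.

The image tag is a hypothetical example, and `run` is a hypothetical dry-run wrapper that prints commands when `DRY_RUN` is set.

```shell
#!/bin/sh
PATCH_DIR=occas8/dockerfiles/8.0.0.0.0/patch-opatch-update

# Hypothetical helper: print the command when DRY_RUN is set, else run it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# build_patched_image TAG: build the Dockerfile in PATCH_DIR as TAG.
build_patched_image() {
  tag="${1:?usage: build_patched_image <tag>}"
  run docker build -t "$tag" "$PATCH_DIR"
}
```

For example: `build_patched_image occas:patched`. Then use the new tag wherever your domain configuration references the image.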