Example Distributed Database Deployment

This example explains how to deploy a typical system-managed Oracle Globally Distributed Database with multiple replicas, using Oracle Data Guard for high availability.

To deploy a system-managed distributed database, you create shardgroups and shards, create and configure the databases to be used as shards, run the DEPLOY command, and create role-based global services.

You are not required to map data to shards in the system-managed data distribution method, because the data is automatically distributed across shards using partitioning by consistent hash. The partitioning algorithm evenly and randomly distributes data across shards. For more conceptual information about the system-managed distribution method, see System-Managed Data Distribution.
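The following Python sketch illustrates the general idea behind consistent-hash distribution. It is not Oracle's implementation. The SHA-256-based stand-in hash, the 32-bit hash range, the chunk count, the round-robin chunk placement, and the names `hash_key`, `chunk_for`, `assign_chunks`, and `shard_for` are all assumptions made for illustration. Keys are hashed into a fixed range, the range is split into equal-sized chunks, and chunks (rather than individual keys) are assigned to shards.

```python
import hashlib
from collections import Counter

HASH_SPACE = 2**32  # size of the 32-bit hash range assumed in this sketch


def hash_key(key: str) -> int:
    """Map a sharding key to an integer in [0, 2**32).

    Stand-in hash (first 4 bytes of SHA-256); Oracle uses its own hash function.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def chunk_for(key: str, num_chunks: int) -> int:
    """Return the chunk whose equal-sized slice of the hash range contains the key."""
    return hash_key(key) * num_chunks // HASH_SPACE


def assign_chunks(num_chunks: int, shards: list[str]) -> dict[int, str]:
    """Place chunks on shards round-robin so each shard owns the same number."""
    return {chunk: shards[chunk % len(shards)] for chunk in range(num_chunks)}


def shard_for(key: str, chunk_map: dict[int, str]) -> str:
    """Route a key: key -> hash value -> chunk -> shard."""
    return chunk_map[chunk_for(key, len(chunk_map))]


if __name__ == "__main__":
    shards = ["shard1", "shard2", "shard3"]  # hypothetical shard names
    chunk_map = assign_chunks(12, shards)    # hypothetical chunk count
    counts = Counter(shard_for(f"customer-{i}", chunk_map) for i in range(30_000))
    print(counts)  # each shard receives roughly a third of the keys
```

Because placement is decided per chunk, a scheme like this can rebalance after a shard is added by relocating whole chunks instead of rehashing every key.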

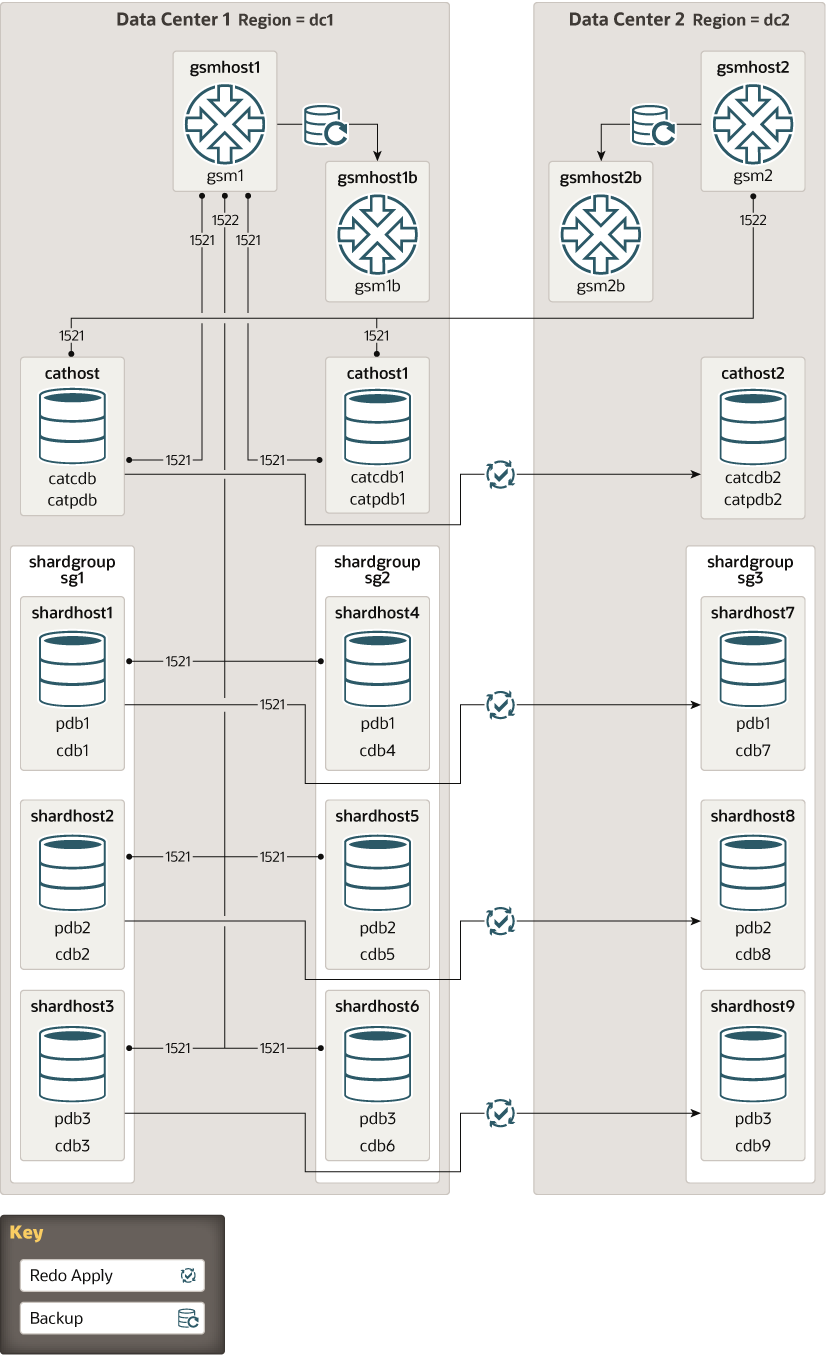

Example Oracle Globally Distributed Database Topology

Consider the following system-managed Oracle Globally Distributed Database configuration, in which shardgroup sg1 contains the primary shards and shardgroups sg2 and sg3 contain standby replicas.

In addition, let’s assume that the replicas in shardgroup sg2 are Oracle Active Data Guard standbys (that is, databases open for read-only access), while the replicas in shardgroup sg3 are mounted databases that have not been opened.
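A minimal Python sketch of this topology makes the consequence of these assumptions concrete: only sg1 accepts writes, sg2 can additionally handle read-only queries, and sg3 handles neither until its databases are opened or promoted. The `Shardgroup` fields and the `can_serve` and `eligible_shardgroups` helpers are hypothetical, and this capability model is separate from where Oracle actually places global services, which is configured with role-based services.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shardgroup:
    name: str
    role: str       # "primary" or "standby"
    open_mode: str  # "read_write", "read_only", or "mounted"


# The three shardgroups described in the example topology.
TOPOLOGY = [
    Shardgroup("sg1", "primary", "read_write"),
    Shardgroup("sg2", "standby", "read_only"),  # Active Data Guard standbys
    Shardgroup("sg3", "standby", "mounted"),    # mounted, not opened
]


def can_serve(sg: Shardgroup, workload: str) -> bool:
    """Return True if databases in this shardgroup are able to run the workload."""
    if workload == "read_write":
        return sg.open_mode == "read_write"
    if workload == "read_only":
        return sg.open_mode in ("read_write", "read_only")
    raise ValueError(f"unknown workload: {workload!r}")


def eligible_shardgroups(workload: str) -> list[str]:
    """List the shardgroups capable of handling the workload."""
    return [sg.name for sg in TOPOLOGY if can_serve(sg, workload)]


print(eligible_shardgroups("read_write"))  # ['sg1']
print(eligible_shardgroups("read_only"))   # ['sg1', 'sg2']
```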

Table 3-1 Example System-Managed Topology Host Names

| Topology Object | Description |
|---|---|
| Shard Catalog Database | Every distributed database topology requires a shard catalog. In our example, the shard catalog database has two standbys, one in each data center. Databases: Primary, Active Standby, Standby |
| Regions | Because this configuration involves two data centers, two corresponding regions are created in the shard catalog database. Regions: Data center 1, Data center 2 |
| Shard Directors (global service managers) | Each region requires a shard director running on a host within that data center. Shard directors: Data center 1, Data center 2 |
| Shardgroups | Data center 1, Data center 2 |
| Shards | |
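The region and shard director rules in the table can be expressed as a simple consistency check. This is a minimal sketch assuming one region per data center; the identifiers `region_dc1`, `region_dc2`, `director_dc1`, `director_dc2`, and the helper `regions_without_local_director` are hypothetical placeholders, not the example's actual names.

```python
# Hypothetical identifiers: placeholders, not the example's real region or director names.
REGIONS: dict[str, str] = {
    "region_dc1": "data center 1",
    "region_dc2": "data center 2",
}

SHARD_DIRECTORS: list[dict[str, str]] = [
    {"name": "director_dc1", "region": "region_dc1", "data_center": "data center 1"},
    {"name": "director_dc2", "region": "region_dc2", "data_center": "data center 2"},
]


def regions_without_local_director(
    regions: dict[str, str], directors: list[dict[str, str]]
) -> list[str]:
    """Return regions lacking a shard director hosted in the region's own data center."""
    covered = {
        d["region"]
        for d in directors
        if regions.get(d["region"]) == d["data_center"]
    }
    return sorted(r for r in regions if r not in covered)


print(regions_without_local_director(REGIONS, SHARD_DIRECTORS))  # []
```

A director registered to a region but running in a different data center is reported the same way as a missing director, which mirrors the requirement that each shard director run on a host within its region's data center.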