3 Setting Up the Membership Service

This chapter discusses how to set up your membership service.

Overview of the TimesTen Scaleout membership service

The TimesTen Scaleout membership service enables a grid to operate in a consistent manner, even if it encounters a network failure between instances that interrupts communication and cooperation between the instances.

The TimesTen Scaleout membership service performs the following:

- Tracking the instance status. This helps instances maintain communication with each other.

- Recovering from a network partition error, once the communications fault is fixed.

Tracking the instance status

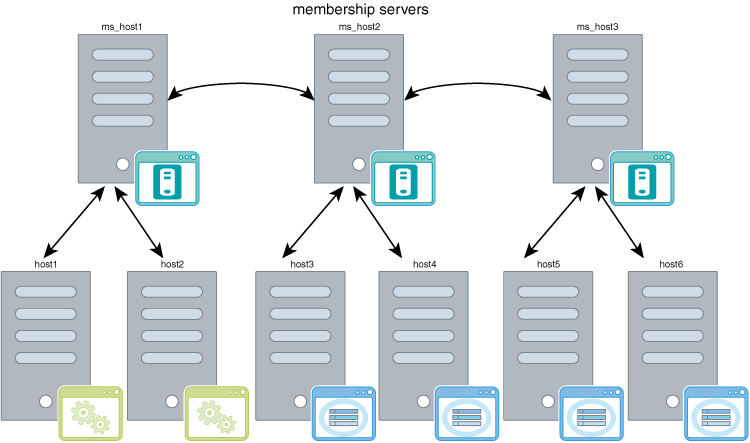

A grid is a collection of instances that reside on multiple hosts that communicate over a single private network. The membership service knows which instances are active. When each instance starts, it connects to a membership server within the membership service to register itself, as shown in Figure 3-1. If one of the membership servers fails, the instances that were connected to the failed membership server transparently reconnect to one of the available membership servers.

Figure 3-1 Instances register with the membership servers


Each instance maintains a persistent connection to one of the membership servers, so that it can query the active instance list. If the network between the membership servers and the instances is down, the instances refuse to execute any transactions until the network is fixed and communication is restored with the membership servers.

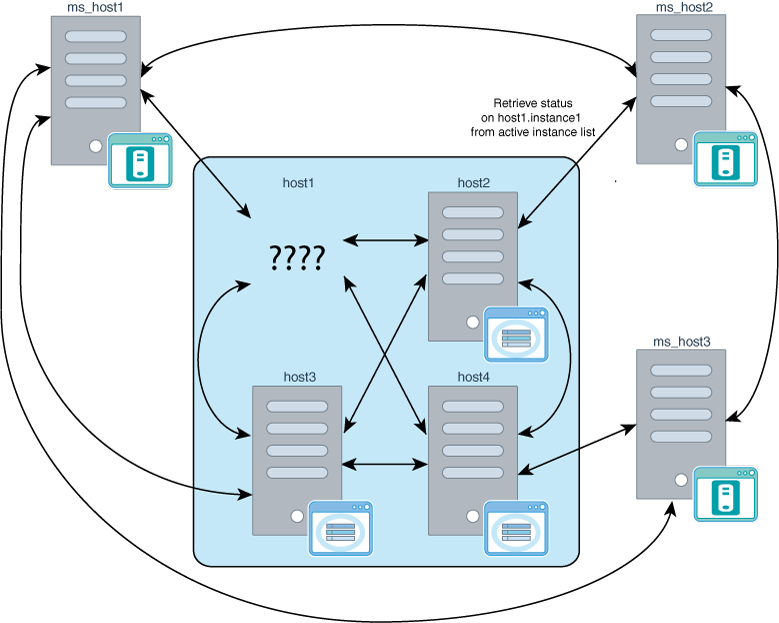

Figure 3-2 demonstrates how data instances in a grid connect to each other, where each data instance connects to every other data instance in a grid. It also shows how each data instance in this example maintains a persistent connection with one of the membership servers.

Figure 3-2 Data instances communicating with each other


If a data instance loses a connection to another instance, it queries the active instance list on its membership server to verify if the "lost" instance is up. If the "lost" instance is up, then the data instance makes an effort to re-establish a connection with that instance. Otherwise, to avoid unnecessary delays, no further attempts are made to establish communication to the "lost" instance.

When a "lost" instance restarts, it registers itself with the membership service and proactively informs all other instances in a grid that it is up. When it is properly synchronized with the rest of a grid, the recovered instance is once again used to process transactions from applications.
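The decision a data instance makes after losing a peer connection can be sketched as follows. This is only an illustrative model of the behavior described above, not TimesTen code; the function name `on_lost_connection` and the action labels are hypothetical.

```python
from __future__ import annotations


def on_lost_connection(lost_instance: str, active_instances: set[str]) -> str:
    """Model what a data instance does after a peer connection breaks.

    active_instances stands in for the active instance list that the
    instance queries on its membership server (hypothetical model).
    """
    if lost_instance in active_instances:
        # The peer is still registered as up: try to re-establish the connection.
        return "reconnect"
    # The peer is not on the active list: avoid delays, make no further attempts.
    return "stop_retrying"


# host1 is down, so it is missing from the active instance list.
active = {"host2", "host3", "host4"}
print(on_lost_connection("host1", active))  # stop_retrying
print(on_lost_connection("host3", active))  # reconnect
```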

In Figure 3-3, the host1 data instance is not up. If the host2 data instance tries to communicate with the host1 data instance, it discovers a broken connection. The host2 data instance queries the active instance list on its membership server, which informs it that the host1 data instance is not on the active instance list. If the host1 data instance comes back up, it registers itself again with the membership service, which then includes it in the list of active instances in this grid.

Figure 3-3 Instance reacts to a dead connection


Recovering from a network partition error

A network partition error splits the instances involved in a single grid into two subsets. With a network partition error, each subset of instances is unable to communicate with the other subset of instances.

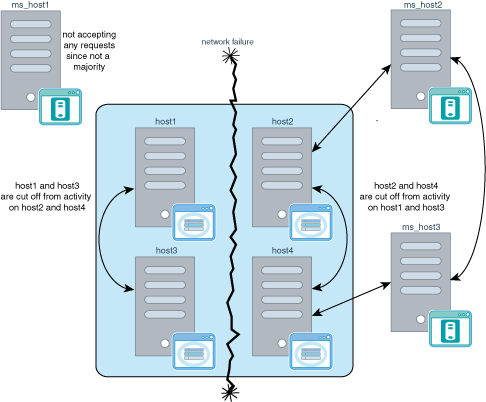

Figure 3-4 shows a network partition that would return inconsistent results to application queries without the membership service, since the application could access one subset of instances without being able to contact the disconnected subset of instances. Any updates made to one subset of instances would not be reflected in the other subset. If an application connects to the host1 data instance, then the query returns results from the host1 and host3 data instances; but any data that resides on the host2 and host4 data instances is not available because there is no connection between the two subsets.

If you encounter a network partition, the membership service provides a resolution. Figure 3-5 shows a grid with four instances and three membership servers. A network communications error has split a grid into two subsets where host1 and host3 no longer know about or communicate with host2 and host4. In addition, the ms_host1 membership server is not in communication with the other two membership servers.

For the membership service to manage the status of a grid properly, a majority of the configured membership servers must be active and able to communicate with each other. If a membership server fails, the others continue to serve requests as long as a majority is available.

For example:

- A membership service that consists of three membership servers can handle one membership server failure.

- A membership service of five membership servers can handle two membership server failures.

- A membership service of six membership servers can handle only two failures since three membership servers are not a majority.
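The arithmetic behind these examples can be sketched in a few lines. The function names are hypothetical; the only assumption is the majority rule stated in this chapter (more than half of the configured membership servers).

```python
def majority(total_servers: int) -> int:
    """Smallest number of servers that is more than half of the total."""
    return total_servers // 2 + 1


def tolerated_failures(total_servers: int) -> int:
    """How many servers can fail while a majority is still available."""
    return total_servers - majority(total_servers)


for n in (3, 5, 6):
    print(f"{n} servers: majority={majority(n)}, tolerated failures={tolerated_failures(n)}")
# 3 servers: majority=2, tolerated failures=1
# 5 servers: majority=3, tolerated failures=2
# 6 servers: majority=4, tolerated failures=2
```

Adding a sixth server raises the majority threshold without raising the number of failures that can be tolerated, which is why an odd number of servers is preferred.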

Note:

When you configure the number of membership servers, you should always create an odd number of membership servers to serve as the membership service. If you have an even number of membership servers and a network partition error occurs, then each subset of a grid might have the same number of membership servers where neither side would have a majority. Thus, both sides of the network partitioned grid would stop working.

If the number of remaining membership servers falls below the number needed for a majority, the remaining membership servers refuse all requests until at least a majority of membership servers are running. In addition, data instances that cannot communicate with the membership service cannot execute any transactions. You must research the failure issue and restart any failed membership servers.

Because of the communications failure, the ms_host1 membership server does not know about the other two membership servers. Since there are not enough membership servers that it does know to constitute a majority, the ms_host1 membership server can no longer accept incoming requests from the host1 and host3 data instances. And the host1 and host3 data instances cannot execute any transactions until the failed membership server is restarted.

Figure 3-5 Network partition with membership service


To discover whether there may be a network partition, check the daemon log for errors about elements losing contact with their membership server.

Once you resolve the connection error that caused your grid to split into two, all of the membership servers reconnect and synchronize the membership information. In our example in Figure 3-5, the ms_host1 membership server rejoins the membership service, after which the host1 and host3 data instances also rejoin this grid as active instances.

Using Apache ZooKeeper as the membership service

Apache ZooKeeper is a third-party, open-source centralized service that maintains information for distributed systems and coordinates services for multiple hosts. TimesTen Scaleout uses Apache ZooKeeper to provide its membership service, which tracks the status of all instances and provides a consistent view of the instances that are active within a grid.

TimesTen Scaleout requires that you install and configure Apache ZooKeeper to work as the membership service for a grid. Each membership server in a grid is an Apache ZooKeeper server.

Note:

Since membership servers are ZooKeeper servers, see the Apache ZooKeeper documentation on how to use and manage ZooKeeper servers at http://zookeeper.apache.org.

If you create a second grid, you can use the same ZooKeeper servers to act as the membership service for the second grid. However, all ZooKeeper servers should act only as a membership service for TimesTen Scaleout.

For ZooKeeper servers in a production environment, it is advisable to:

- Configure an odd number of replicated ZooKeeper servers on separate hosts. Use a minimum of three ZooKeeper servers for your membership service. If you have n ZooKeeper servers, you should have (n/2+1) ZooKeeper servers alive as a majority. A larger number of ZooKeeper servers increases reliability.

- It is recommended (but not required) that you use hosts for your membership servers that are separate from any hosts used for instances. If you do locate your ZooKeeper servers and instances on separate hosts, then this guarantees that if a host fails, you do not lose both the instance and one of the membership servers.

- Avoid having ZooKeeper servers be subject to any single point of failure. For example, use independent physical racks, power sources, and network locations.

- Your ZooKeeper servers could share the same physical infrastructure as your data instances. For example, if your data instances are spread across two physical racks, you could host your ZooKeeper servers in these same two racks.

For example, you configure your grid with an active and standby management instance, two data space groups (each with three data instances), and three ZooKeeper servers. If you have two data racks, the best way to organize your hosts is to:

- Locate one of the management instances on rack 1 and the other management instance on rack 2.

- Locate two of the ZooKeeper servers on rack 1 and the third on rack 2.

- Locate the hosts for data instances for data space group 1 on rack 1 and the hosts for the data instances for data space group 2 on rack 2.

Thus, if rack 2 loses power or its ethernet connection, this grid continues to work since rack 1 has the majority of ZooKeeper servers. If rack 1 fails, you lose the majority of the ZooKeeper servers and need to recover your ZooKeeper servers. A grid does not work without at least a majority of the configured ZooKeeper servers active.
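A placement like this one can be checked with a small sketch before you commit to it. The function name and the `rack1`/`rack2` labels are hypothetical; the check only applies the majority rule described in this chapter.

```python
from __future__ import annotations


def survives_rack_failure(servers_by_rack: dict[str, int], failed_rack: str) -> bool:
    """Return True if the ZooKeeper servers left after a rack failure form a majority."""
    total = sum(servers_by_rack.values())
    remaining = total - servers_by_rack.get(failed_rack, 0)
    return remaining >= total // 2 + 1


# Two ZooKeeper servers on rack 1 and one on rack 2, as in the example above.
layout = {"rack1": 2, "rack2": 1}
print(survives_rack_failure(layout, "rack2"))  # True: 2 of 3 servers remain
print(survives_rack_failure(layout, "rack1"))  # False: only 1 of 3 servers remains
```

With only two racks, one rack always holds the majority, so some single rack failure stops the membership service regardless of how the servers are split.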

Note:

For more best practices for your ZooKeeper servers, go to http://zookeeper.apache.org.

Installing Apache ZooKeeper

On each host on which you intend to provide a membership server, install the TimesTen-specific Apache ZooKeeper distribution, which is a ZooKeeper TAR file located in the installation_dir/tt18.1.4.1.0/3rdparty directory of the TimesTen installation.

Important:

- Using Apache ZooKeeper as a membership service for TimesTen Scaleout requires Java release 1.8 (JDK 8) or greater on each ZooKeeper server.

- All hosts that contain data instances, management instances and membership servers must be connected to the same private network.

- Create a directory for the ZooKeeper installation on each host that you intend to act as one of the membership servers. You may install the ZooKeeper distribution file into any directory with any name you wish.

- From a host where you have already installed TimesTen Scaleout, copy the ZooKeeper apache-zookeeper-3.5.8-bin.tar.gz file from installation_dir/tt18.1.4.1.0/3rdparty to the desired directory on each host.

- Unpack the provided Apache ZooKeeper distribution using the standard operating system tar command into the desired location on each host intended to be a membership server.

The following example on Linux unpacks an Apache ZooKeeper installation into the zkdir directory (a subdirectory of the current directory). A TimesTen Scaleout installation on host1 is located in /swdir/TimesTen/tt18.1.4.1.0.

On the ms1_host membership server, create the zkdir directory.

% mkdir -p zkdir

Copy the apache-zookeeper-3.5.8-bin.tar.gz file from the installation_dir/tt18.1.4.1.0/3rdparty directory on host1 to the zkdir directory you created on ms1_host.

% tar -C zkdir -xzvf /swdir/TimesTen/tt18.1.4.1.0/3rdparty/apache-zookeeper-3.5.8-bin.tar.gz

[...TAR OUTPUT...]

Note:

The version of the ZooKeeper distribution that TimesTen Scaleout provides is shown in the name of the TAR file provided in the installation_dir/tt18.1.4.1.0/3rdparty directory. For example, the apache-zookeeper-3.5.8-bin.tar.gz file in this example shows that the provided Apache ZooKeeper distribution version is 3.5.8.

Configuring Apache ZooKeeper as the membership service

To configure each Apache ZooKeeper server to act as a membership server for your grid, you need to configure the following configuration files on each host that hosts a membership server:

- zoo.cfg configuration file: In replicated mode, each membership server has a zoo.cfg configuration file. The zoo.cfg configuration file identifies all of the membership servers involved in the membership service, where each membership server is identified by its DNS name (or IP address) and port number.

  All configuration parameters in the zoo.cfg file on each membership server must be exactly the same, except for the client port. The client port can be different (but is not required to be different) for each membership server. The client port can be the same if each membership server runs on a different host.

  Place the zoo.cfg file in the Apache ZooKeeper installation /conf directory. For example, if you unpacked the apache-zookeeper-3.5.8-bin.tar.gz file into the /swdir/zkdir directory on each membership server, then you would place the zoo.cfg file as follows: /swdir/zkdir/apache-zookeeper-3.5.8-bin/conf/zoo.cfg

- myid configuration file: Provides the number that identifies this particular membership server. Each membership server is identified by a unique number. For example, if you have 5 servers, they must be identified with unique integers of 1, 2, 3, 4 and 5.

  This number corresponds to the definition of the host in the zoo.cfg file by the x in the server.x parameter. All zoo.cfg files must have a listing for all membership servers. For example, if you have 5 membership servers, they are configured as server.1, server.2, and so on in the zoo.cfg file.

  The myid configuration file on each host contains a single line with the integer number of that server. For example, the second membership server is identified in zoo.cfg as server.2, and its myid configuration file contains a single line with a 2.

  The myid configuration file is a text file located in the Apache ZooKeeper data directory of the membership server. The location of the data directory is configured with the dataDir parameter in the zoo.cfg file. For example, if you configure the data directory to be /swdir/zkdir/apache-zookeeper-3.5.8-bin/data, then you would place the myid text configuration file as follows: /swdir/zkdir/apache-zookeeper-3.5.8-bin/data/myid

Table 3-1 shows the commonly used configuration parameters for the zoo.cfg file.

Table 3-1 zoo.cfg configuration parameters

| Parameter | Description |
|---|---|
| tickTime | The unit of time (in milliseconds) used for each tick for both the initLimit and syncLimit parameters. |
| initLimit | The timeout (in ticks) for how long the membership servers have to connect to the leader. For the best performance, set this to the recommended setting; the example zoo.cfg file in this section uses 40. |
| syncLimit | The limit of how out of date a membership server can be from a leader. This limit (in ticks) specifies how long is allowed between sending a request and receiving an acknowledgement. For best performance, set this to the recommended setting; the example zoo.cfg file in this section uses 12. |
| dataDir | You decide on and create the data directory location to store the ZooKeeper data, snapshots and its transaction logs. When creating the directory where the transaction logs are written, it is important to your performance that the transaction logs are written to non-volatile storage. A dedicated device for your transaction logs is key to consistent good performance. Logging your transactions to a busy device adversely affects performance. |
| clientPort | The port on which to listen for client connections. The default is port 2181. |
| autopurge.snapRetainCount | Defines the number of most recent snapshots and corresponding Apache ZooKeeper transaction logs to keep in the data directory. |
| autopurge.purgeInterval | The time interval in hours for when to trigger the purge of older snapshots and corresponding Apache ZooKeeper transaction logs. Set to a positive integer (1 and above) to enable the auto purge. Defaults to 0. We recommend that you set this to 1. |
| minSessionTimeout | The minimum session timeout in milliseconds that the server will allow the client to negotiate. Defaults to 2 times the tickTime. |
| maxSessionTimeout | The maximum session timeout in milliseconds that the server will allow the client to negotiate. Defaults to 20 times the tickTime. |
| server.x | The configuration for each membership server is identified by the server.x parameter. This parameter is required to run the membership server in replicated mode. The x is the identifying integer number for the membership server, which is also configured in the myid configuration file. Define two port numbers after each server name. For a production environment, each of the membership servers should be configured on different hosts. In this case, the convention is to assign the same port numbers, such as: server.1=system1:2888:3888, server.2=system2:2888:3888, server.3=system3:2888:3888. However, for a testing environment, you may want to place all membership servers on the same host. In this case, you need to configure all membership servers with different ports. |
| 4lw.commands.whitelist | Enables the specified ZooKeeper four-letter-words commands. TimesTen Scaleout utilities like ... |

All membership servers that are installed should be run in replicated mode. To run your membership servers in replicated mode, you need to include the tickTime, initLimit, and syncLimit parameters and provide the host name with two port numbers for each membership server.

Note:

For more details on replicated mode, go to http://zookeeper.apache.org and refer to the Getting Started > Running Replicated ZooKeeper section of the documentation.

The following example demonstrates the zoo.cfg membership server configuration file, where there are three membership servers installed on hosts whose DNS names are ms_host1, ms_host2 and ms_host3. All three membership servers are configured to run in replicated mode.

    # The number of milliseconds of each tick
    tickTime=250
    # The number of ticks that the initial synchronization phase can take
    initLimit=40
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=12
    # The directory where you want the ZooKeeper data stored.
    dataDir=/swdir/zkdir/apache-zookeeper-3.5.8-bin/data
    # The port at which the clients will connect
    clientPort=2181
    # Every hour, keep the latest three Apache ZooKeeper snapshots and
    # transaction logs and purge the rest
    autopurge.snapRetainCount=3
    autopurge.purgeInterval=1
    # The minimum and maximum allowable timeouts for Apache ZooKeeper sessions.
    # Actual timeout is negotiated at connect time.
    minSessionTimeout=2000
    maxSessionTimeout=10000
    # The membership servers
    server.1=ms_host1:2888:3888
    server.2=ms_host2:2888:3888
    server.3=ms_host3:2888:3888
    # Enabled ZooKeeper four-letter-words commands
    4lw.commands.whitelist=stat, ruok, conf, isro
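Before copying a zoo.cfg file to every membership server, it can help to sanity-check it. The following is a minimal sketch, not part of TimesTen Scaleout or ZooKeeper: the helper names are hypothetical, and the parser only understands the simple key=value lines and the host:port:port form of server.x entries shown in this chapter. It also converts the tick-based limits into milliseconds.

```python
from __future__ import annotations

# Trimmed copy of the example zoo.cfg values from this section.
SAMPLE = """\
# The number of milliseconds of each tick
tickTime=250
initLimit=40
syncLimit=12
clientPort=2181
server.1=ms_host1:2888:3888
server.2=ms_host2:2888:3888
server.3=ms_host3:2888:3888
"""


def parse_zoo_cfg(text: str) -> dict[str, str]:
    """Parse key=value lines, skipping blank lines and # comments."""
    settings: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip()
    return settings


def check_replicated(settings: dict[str, str]) -> list[str]:
    """List what is missing for replicated mode as described in this chapter."""
    problems = [f"missing {name}" for name in ("tickTime", "initLimit", "syncLimit")
                if name not in settings]
    servers = [key for key in settings if key.startswith("server.")]
    if not servers:
        problems.append("no server.x entries")
    for key in servers:
        parts = settings[key].split(":")
        if len(parts) != 3 or not (parts[1].isdigit() and parts[2].isdigit()):
            problems.append(f"{key} is not in host:port:port form")
    return problems


def ticks_to_ms(settings: dict[str, str], name: str) -> int:
    """Convert a tick-based limit (initLimit, syncLimit) into milliseconds."""
    return int(settings[name]) * int(settings["tickTime"])


cfg = parse_zoo_cfg(SAMPLE)
print(check_replicated(cfg))          # []
print(ticks_to_ms(cfg, "initLimit"))  # 10000
print(ticks_to_ms(cfg, "syncLimit"))  # 3000
```

With the example values, the initial synchronization phase may take up to 10 seconds and an acknowledgement may lag by up to 3 seconds.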

Note:

There is a sample file that explains some of the parameters for your zoo.cfg file in the Apache ZooKeeper installation /conf directory called zoo_sample.cfg. However, it does not have all of the recommended parameters or settings for TimesTen Scaleout. Use zoo_sample.cfg for reference only.

This example creates a myid text file on three hosts, where each is a membership server. Each myid text file contains a single line with the server id (an integer) corresponding to one of the membership servers configured in the zoo.cfg file. The server id is the number x in the server.x= entry of the configuration file. The myid text file must be located within the data directory on each membership server. The data directory location is /swdir/zkdir/apache-zookeeper-3.5.8-bin/data.

- Create a myid text file in the /swdir/zkdir/apache-zookeeper-3.5.8-bin/data directory on ms_host1 for its membership server. The myid text file contains the value 1.

- Create a myid text file in the /swdir/zkdir/apache-zookeeper-3.5.8-bin/data directory on ms_host2 for its membership server. The myid text file contains the value 2.

- Create a myid text file in the /swdir/zkdir/apache-zookeeper-3.5.8-bin/data directory on ms_host3 for its membership server. The myid text file contains the value 3.

When the membership server starts up, it identifies which server it is by the integer configured in the myid file in the ZooKeeper data directory.
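Creating the myid file can be scripted. The sketch below is one possible approach, assuming you run it on each membership server with that server's own id; the `write_myid` helper is hypothetical, and the demonstration writes into a temporary directory rather than the real data directory.

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def write_myid(data_dir: Path, server_id: int) -> Path:
    """Write a single-line myid file containing server_id into data_dir."""
    if server_id < 1:
        raise ValueError("server id must be a positive integer")
    data_dir.mkdir(parents=True, exist_ok=True)
    myid = data_dir / "myid"
    myid.write_text(f"{server_id}\n")
    return myid


# Demonstration in a throwaway directory. On ms_host2 you would instead pass
# the dataDir value from zoo.cfg, for example
# Path("/swdir/zkdir/apache-zookeeper-3.5.8-bin/data"), and the id 2.
with tempfile.TemporaryDirectory() as tmp:
    path = write_myid(Path(tmp) / "data", 2)
    print(path.name, path.read_text().strip())  # myid 2
```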

Note:

For full details of the configuration parameters that can exist in the Apache ZooKeeper zoo.cfg configuration file, see http://zookeeper.apache.org.

Starting the membership servers

Before you can start the membership server with the zkServer.sh shell script, you need to set the maximum Java heap size, which determines if ZooKeeper swaps to the file system. The Java maximum heap size should not be larger than the amount of available real memory. Edit the zkEnv.sh shell script to add a new line with the JVMFLAGS environment variable setting the maximum Java heap size to 4 GB. Upon startup, the zkServer.sh shell script sources the zkEnv.sh shell script to include this new environment variable.

The ZooKeeper shell scripts are located in the ZooKeeper server /bin directory. For example, if you unpacked the apache-zookeeper-3.5.8-bin.tar.gz file into the /swdir/zkdir directory on each membership server, then the zkEnv.sh and zkServer.sh shell scripts are located in the /swdir/zkdir/apache-zookeeper-3.5.8-bin/bin directory.

The following example edits the zkEnv.sh shell script and adds the JVMFLAGS=-Xmx4g configuration within the zkEnv.sh script after the line for ZOOKEEPER_PREFIX.

ZOOBINDIR="${ZOOBINDIR:-/usr/bin}"

ZOOKEEPER_PREFIX="${ZOOBINDIR}/.."

JVMFLAGS=-Xmx4g

Start each membership server by running the zkServer.sh start shell script on each server.

% setenv ZOOCFGDIR /swdir/zkdir/apache-zookeeper-3.5.8-bin/conf

% /swdir/zkdir/apache-zookeeper-3.5.8-bin/bin/zkServer.sh start

You can verify the status for each membership server by executing the zkServer.sh status command on each membership server:

% /swdir/zkdir/apache-zookeeper-3.5.8-bin/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /swdir/zkdir/apache-zookeeper-3.5.8-bin/conf/zoo.cfg

Mode: { leader | follower }

If the membership server is not running, is not in replicated mode, or there is not a majority executing, these errors are displayed:

ZooKeeper JMX enabled by default

Using config: /swdir/zkdir/apache-zookeeper-3.5.8-bin/conf/zoo.cfg

Error contacting service. It is probably not running.

Additionally, you can verify if a membership server is running in a non-error state with the ruok ZooKeeper command. The command returns imok if the server is running. There is no response otherwise. From a machine within the network, run:

% echo ruok | nc ms_host1 2181

imok

For statistics about performance and connected clients, use the stat ZooKeeper command. From a machine within the network, run:

% echo stat | nc ms_host1 2181
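The same checks can be made from a script when nc is not available. The following is a minimal sketch using only the Python standard library; the function names are hypothetical. It relies on the behavior shown above: the client sends the four-letter command over a TCP connection to the client port, and the server replies and closes the connection. The command must also be enabled in 4lw.commands.whitelist.

```python
import socket


def four_letter_word(host: str, port: int, command: str, timeout: float = 5.0) -> str:
    """Send a ZooKeeper four-letter command and return the full reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(command.encode("ascii"))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def is_healthy(host: str, port: int) -> bool:
    """Return True only if the server answers ruok with imok."""
    try:
        return four_letter_word(host, port, "ruok") == "imok"
    except OSError:  # refused, unreachable, or timed out
        return False
```

For the servers in this chapter, you could call `is_healthy("ms_host1", 2181)` for a quick check, or `four_letter_word("ms_host1", 2181, "stat")` to retrieve the statistics text.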

Once the membership servers are started, you can create your grid. See "Configure a grid as a membership service client" for details on how to create a grid that knows about your membership servers.

Configure a grid as a membership service client

A grid must know how to connect to each of the membership servers. Thus, you must provide a ZooKeeper client configuration file to the ttGridAdmin utility when you create a grid that details all of the membership servers. You can name the ZooKeeper client configuration file with any prefix as long as the suffix is .conf.

The ZooKeeper client configuration file specifies all membership servers that coordinate with each other to provide a membership service. Within the client configuration file is a single line with the Servers parameter that provides the DNS (or IP address) and client port numbers for each membership server. The configuration information for these hosts must:

- Use the same DNS name (or IP address) as what you specified in the server.x parameters in each of the individual zoo.cfg files on each membership server.

- Provide the same client port number as what is specified in the clientPort parameter in each of the individual zoo.cfg files on each membership server.

In our example, we use the membership.conf file as the ZooKeeper client configuration file. For this example, there are three hosts that support three membership servers, where ms_host1 listens on client port 2181, ms_host2 listens on client port 2181, and ms_host3 listens on client port 2181.

Servers ms_host1!2181,ms_host2!2181,ms_host3!2181
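If you maintain the list of membership servers elsewhere, the Servers line can be generated rather than typed by hand. This is a small sketch with a hypothetical helper name; the output format is copied from the membership.conf example above.

```python
from __future__ import annotations


def servers_line(members: list[tuple[str, int]]) -> str:
    """Build the single Servers line from (host, client port) pairs."""
    return "Servers " + ",".join(f"{host}!{port}" for host, port in members)


members = [("ms_host1", 2181), ("ms_host2", 2181), ("ms_host3", 2181)]
print(servers_line(members))
# Servers ms_host1!2181,ms_host2!2181,ms_host3!2181
```

The host names and ports passed in should match the server.x and clientPort values in the zoo.cfg files, as required above.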

A grid knows how to reach these membership servers because the ZooKeeper client configuration file is provided as an input parameter when you create your grid. See "Creating a grid" for more details.

Once you provide the ZooKeeper client configuration file to the ttGridAdmin command when a grid is created, the ZooKeeper client configuration file is no longer needed and can be discarded.

Note:

You can modify the list of provided membership servers for a grid by importing a new list of membership servers. See "Reconfiguring membership servers" for details.